Research on Blind Super-Resolution Technology for Infrared Images of Power Equipment Based on Compressed Sensing Theory

Abstract

1. Introduction

2. CS Blind SR Model Based on the Principle of Image Degradation

3. Blur Kernel Estimation and Construction of Blur Matrix

3.1. Blur Kernel Estimation

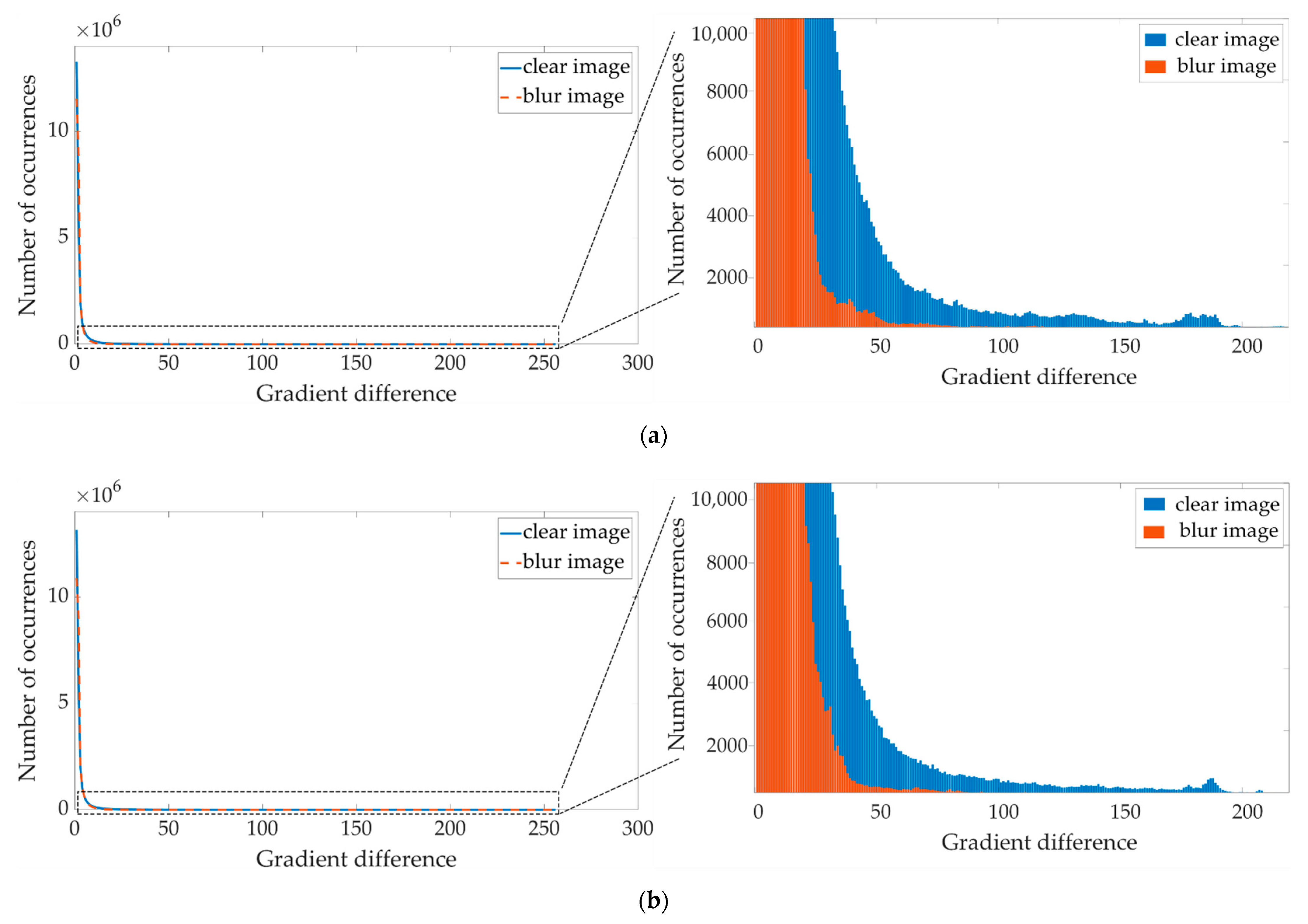

3.1.1. Blur Kernel Estimation Model Based on Image Gradient Prior

3.1.2. Solving Subproblem

3.1.3. Solving Subproblem

| Algorithm 1: Blur Kernel Estimation Algorithm. |
|---|
| Input: Blurred image |
| generate the initial value of each variable |
| for do |
| repeat |
| solve for using the gradient descent method, . |
| repeat |
| solve for using (18), . |
| repeat |
| solve for using (20), . |
| repeat |
| solve for using (22), solve for using (15), . |
| until |
| . |
| until |
| . |
| until |
| . |
| until |
| solve for using (25). |
| . |
| end for |
| Output: blur kernel . |
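Algorithm 1 alternates between updating intermediate variables and, once per outer iteration, solving for the blur kernel. As a rough illustration of that last kind of step, the sketch below fits a kernel by ridge-regularized least squares in the gradient domain using the FFT. It is a generic closed-form update of the kind used in fast deblurring methods, not a transcription of Equation (25). The function name `estimate_kernel`, the parameter `gamma`, the circular boundary handling, and the final clip-and-normalize projection are all assumptions made for this example.

```python
import numpy as np

def estimate_kernel(latent, blurred, ksize, gamma=1e-3):
    """Hypothetical kernel update: argmin_k sum ||grad(latent) * k - grad(blurred)||^2 + gamma ||k||^2.

    `latent` and `blurred` are 2-D arrays of the same shape, `ksize` is an odd
    kernel width. Convolution is treated as circular so the problem is
    diagonal in the Fourier domain (an assumption of this sketch).
    """
    num = np.zeros(latent.shape, dtype=complex)
    den = np.zeros(latent.shape, dtype=float)
    for axis in (0, 1):
        # Circular forward differences of both images along this axis.
        g_latent = np.roll(latent, -1, axis=axis) - latent
        g_blurred = np.roll(blurred, -1, axis=axis) - blurred
        F_latent = np.fft.fft2(g_latent)
        F_blurred = np.fft.fft2(g_blurred)
        num += np.conj(F_latent) * F_blurred
        den += np.abs(F_latent) ** 2

    # Closed-form ridge solution, one frequency at a time.
    k_full = np.real(np.fft.ifft2(num / (den + gamma)))

    # The kernel comes back centred on index (0, 0); move it to the array centre.
    k_full = np.fft.fftshift(k_full)
    cy, cx = latent.shape[0] // 2, latent.shape[1] // 2
    r = ksize // 2
    k = k_full[cy - r:cy + r + 1, cx - r:cx + r + 1]

    # Project onto a plausible kernel set: non-negative entries that sum to one.
    k = np.clip(k, 0.0, None)
    total = k.sum()
    return k / total if total > 0 else k
```

Given a sharp estimate and a blurred observation of the same size, `estimate_kernel(latent, blurred, ksize=5)` returns a non-negative 5 × 5 kernel that sums to one. In a full blind scheme, `latent` would be the current intermediate image estimate rather than a known sharp image.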

3.2. Blur Matrix Construction

4. Image SR Reconstruction Algorithm

4.1. Objective Function Construction

4.2. Optimization of Objective Function

1. Initialize and separately

2. Perform the first iteration of the TV constraint: solve for using gradient descent, .

3. Perform the second iteration of the sparse constraint: according to (40), obtain by the proximal gradient method through .

4. Determination: stop iterating if is less than the error constraint , or is greater than the maximum number of iterations . Otherwise, let and return to step 2.

5. Output: reconstructed HR image .
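The loop described in the steps above (a gradient-descent step for the TV constraint, a proximal-gradient step for the sparse constraint, then a stopping test) can be sketched on a small 1-D problem. This is a minimal illustration under stated assumptions, not the paper's objective or Equation (40): the degradation operator is an explicit blur-plus-downsampling matrix, the TV term is smoothed with a small `eps`, the sparsifying transform is an orthonormal DCT, and every name and default value (`two_step_sr`, `lam_tv`, `lam_s`, `step`, and so on) is hypothetical.

```python
import numpy as np

def blur_downsample_matrix(n, kernel, scale):
    """Explicit degradation matrix for a 1-D signal: circular blur, then keep every `scale`-th row."""
    r = len(kernel) // 2
    H = np.zeros((n, n))
    for i in range(n):
        for j, w in enumerate(kernel):
            H[i, (i + j - r) % n] += w  # row i holds the kernel centred on sample i
    return H[::scale]

def dct_matrix(n):
    """Orthonormal DCT-II matrix C (C @ C.T = I), used here as a stand-in sparsifying transform."""
    k = np.arange(n)[:, None]
    m = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (m + 0.5) * k / n)
    C[0] /= np.sqrt(2.0)
    return C

def tv_grad(x, eps):
    """Gradient of the smoothed TV term sum(sqrt(d^2 + eps^2)) with circular differences d."""
    d = np.roll(x, -1) - x
    g = d / np.sqrt(d * d + eps * eps)
    return np.roll(g, 1) - g

def soft(c, t):
    """Soft-thresholding, the proximal operator of t * ||c||_1."""
    return np.sign(c) * np.maximum(np.abs(c) - t, 0.0)

def two_step_sr(y, A, C, lam_tv=0.01, lam_s=0.01, step=0.5,
                eps=0.1, tol=1e-6, max_iter=500):
    x = A.T @ y  # step 1: simple initial guess (an assumption)
    for it in range(1, max_iter + 1):
        # Step 2: gradient descent on data fidelity plus smoothed TV.
        x_half = x - step * (A.T @ (A @ x - y) + lam_tv * tv_grad(x, eps))
        # Step 3: proximal-gradient step for the sparsity term, started from x_half.
        v = x_half - step * (A.T @ (A @ x_half - y))
        x_new = C.T @ soft(C @ v, step * lam_s)
        # Step 4: stop on a small relative change, otherwise iterate again.
        change = np.linalg.norm(x_new - x) / max(np.linalg.norm(x), 1e-12)
        x = x_new
        if change < tol:
            break
    return x, it  # step 5: reconstructed high-resolution signal

# Usage on a piecewise-constant test signal, downsampled by a factor of 2.
n = 64
x_true = np.concatenate([np.zeros(20), np.ones(24), 0.5 * np.ones(20)])
A = blur_downsample_matrix(n, [0.25, 0.5, 0.25], scale=2)
x_hat, iters = two_step_sr(A @ x_true, A, dct_matrix(n))
```

The step size of 0.5 is chosen for this toy operator, whose spectral norm is at most one because the kernel is non-negative and sums to one; a different operator or a smaller `eps` would call for a smaller step.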

5. Experiment and Result Analysis

5.1. Experimental Data and Evaluation Parameters

5.2. Synthetic Infrared Image Reconstruction Experiment

5.3. Actual Infrared Image Reconstruction Experiment

5.4. Norm Validity Verification

5.5. Validity Verification of Blur Matrix

5.6. Analysis of TwTVSI Algorithm Performance

6. Discussion on Future Application Scenarios

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jalil, B.; Leone, G.R.; Martinelli, M.; Moroni, D.; Pascali, M.A.; Berton, A. Fault Detection in Power Equipment via an Unmanned Aerial System Using Multi Modal Data. Sensors 2019, 19, 3014.

- Zhao, H.; Zhang, Z. Improving Neural Network Detection Accuracy of Electric Power Bushings in Infrared Images by Hough Transform. Sensors 2020, 20, 2931.

- Pan, Z.; Yu, J.; Huang, H.; Hu, S.; Zhang, A.; Ma, H.; Sun, W. Super-resolution based on compressive sensing and structural self-similarity for remote sensing images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4864–4876.

- Wang, L.; Wu, H.; Pan, C. Fast image upsampling via the displacement field. IEEE Trans. Image Process. 2014, 23, 5123–5135.

- Nguyen, N.; Milanfar, P. A wavelet-based interpolation-restoration method for superresolution (wavelet superresolution). Int. J. Circuits Syst. Signal Process. 2000, 19, 321–338.

- Takeda, H.; Farsiu, S.; Milanfar, P. Kernel regression for image processing and reconstruction. IEEE Trans. Image Process. 2007, 16, 349–366.

- Freeman, W.T.; Jones, T.R.; Pasztor, E.C. Example-based super-resolution. IEEE Comput. Graph. Appl. 2002, 22, 56–65.

- Yang, J.; Wright, J.; Huang, T.; Yi, M. Image super-resolution as sparse representation of raw image patches. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008.

- Yang, J.; Wang, Z.; Lin, Z.; Cohen, S.; Thomas, H. Coupled dictionary training for image super-resolution. IEEE Trans. Image Process. 2012, 21, 3467–3478.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the 13th European Conference on Computer Vision (ECCV 2014), Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

- Rasti, P.; Demirel, H.; Anbarjafari, G. Improved iterative back projection for video super-resolution. In Proceedings of the 22nd Signal Processing and Communications Applications Conference (SIU), Trabzon, Turkey, 23–25 April 2014.

- Zhang, X.; Burger, M.; Bresson, X.; Osher, S. Bregmanized nonlocal regularization for deconvolution and sparse reconstruction. SIAM J. Imaging Sci. 2010, 3, 253–276.

- Li, L.; Xie, Y.; Hu, W.; Zhang, W. Single image super-resolution using combined total variation regularization by split Bregman Iteration. Neurocomputing 2014, 142, 551–560.

- Gambardella, A.; Migliaccio, M. On the superresolution of microwave scanning radiometer measurements. IEEE Geosci. Remote Sens. Lett. 2008, 5, 796–800.

- Huo, W.; Tuo, X.; Zhang, Y.; Huang, Y. Balanced Tikhonov and Total Variation Deconvolution Approach for Radar Forward-Looking Super-Resolution Imaging. IEEE Geosci. Remote Sens. Lett. 2021, 1–5.

- Liu, X.; Chen, L.; Wang, W.; Zhao, J. Robust multi-frame super-resolution based on spatially weighted half-quadratic estimation and adaptive BTV regularization. IEEE Trans. Image Process. 2018, 27, 4971–4986.

- Michaeli, T.; Irani, M. Nonparametric Blind Super-resolution. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 945–952.

- Efrat, N.; Glasner, D.; Apartsin, A.; Nadler, B.; Levin, A. Accurate blur models vs. image priors in single image super-resolution. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 2832–2839.

- Shao, W.Z.; Ge, Q.; Wang, L.Q.; Lin, Y.Z.; Deng, H.S.; Li, H.B. Nonparametric Blind Super-Resolution Using Adaptive Heavy-Tailed Priors. J. Math. Imaging Vis. 2019, 61, 885–917.

- Qian, Q.; Gunturk, B.K. Blind super-resolution restoration with frame-by-frame nonparametric blur estimation. Multidimens. Syst. Signal Process. 2016, 27, 255–273.

- Kim, W.H.; Lee, J.S. Blind single image super resolution with low computational complexity. Multimed. Tools Appl. 2017, 76, 7235–7249.

- Almeida, M.S.C.; Almeida, L.B. Blind and semi-blind deblurring of natural images. IEEE Trans. Image Process. 2009, 19, 36–52.

- Zuo, W.; Ren, D.; Zhang, D.; Gu, S.; Zhang, L. Learning iteration-wise generalized shrinkage–thresholding operators for blind deconvolution. IEEE Trans. Image Process. 2016, 25, 1751–1764.

- Pan, J.; Hu, Z.; Su, Z.; Yang, M.H. L0-regularized intensity and gradient prior for deblurring text images and beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 342–355.

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Candes, E.J. The restricted isometry property and its implications for compressed sensing. Comptes Rendus Math. 2008, 346, 589–592.

- Tang, S.; Zheng, W.; Xie, X.; He, T.; Yang, P.; Luo, L.; Zhao, H. Multi-regularization-constrained blur kernel estimation method for blind motion deblurring. IEEE Access 2019, 7, 5296–5311.

- Pan, J.; Sun, D.; Pfister, H.; Yang, M.H. Blind image deblurring using dark channel prior. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1628–1636.

- Yan, Y.; Ren, W.; Guo, Y.; Rui, W.; Xiaochun, C. Image deblurring via extreme channels prior. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4003–4011.

- Cho, S.; Lee, S. Fast Motion Deblurring. ACM Trans. Graph. 2009, 28, 1–8.

- Xu, L.; Lu, C.; Xu, Y.; Jia, J. Image smoothing via L0 gradient minimization. In Proceedings of the 2011 SIGGRAPH Asia Conference, Hong Kong, China, 11–15 December 2011; pp. 1–12.

- Zhang, H.; Hager, W.W. A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 2004, 14, 1043–1056.

- Xu, L.; Zheng, S.; Jia, J. Unnatural l0 sparse representation for natural image deblurring. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 1107–1114.

- Sulam, J.; Ophir, B.; Zibulevsky, M.; Elad, M. Trainlets: Dictionary Learning in High Dimensions. IEEE Trans. Signal Process. 2016, 64, 3180–3193.

- Keys, R.G. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 2003, 29, 1153–1160.

- Krishnan, D.; Fergus, R. Fast image deconvolution using hyper-Laplacian priors. Adv. Neural Inf. Process. Syst. 2009, 22, 1033–1041.

| Image Number | Keys | Shao | Michaeli | Kim | Ours |
|---|---|---|---|---|---|
| 1 | 19.875 | 27.031 | 30.784 | 29.339 | 32.364 |
| 2 | 22.098 | 29.508 | 34.180 | 32.142 | 35.882 |
| 3 | 21.671 | 29.874 | 34.954 | 35.377 | 37.047 |
| 4 | 18.124 | 20.616 | 23.167 | 25.423 | 24.267 |
| 5 | 18.664 | 26.541 | 29.313 | 28.800 | 30.954 |
| 6 | 17.381 | 25.453 | 26.271 | 26.299 | 28.534 |
| 7 | 24.336 | 34.171 | 35.325 | 37.638 | 42.721 |
| 8 | 20.385 | 26.413 | 34.928 | 27.116 | 32.957 |

| Image Number | Keys | Shao | Michaeli | Kim | Ours |
|---|---|---|---|---|---|
| 1 | 5.904 | 6.103 | 6.111 | 6.148 | 6.275 |
| 2 | 6.577 | 6.413 | 6.657 | 6.709 | 6.748 |
| 3 | 6.218 | 6.281 | 6.338 | 6.342 | 6.381 |
| 4 | 5.730 | 5.803 | 5.862 | 5.881 | 5.906 |
| 5 | 6.133 | 6.141 | 6.247 | 6.243 | 6.298 |
| 6 | 5.605 | 5.571 | 5.660 | 5.691 | 5.772 |
| 7 | 6.722 | 6.364 | 6.765 | 6.772 | 6.846 |
| 8 | 5.813 | 5.852 | 5.890 | 5.883 | 5.916 |

| Image Number | Shao | Michaeli | CS-L0 1 | Ours |
|---|---|---|---|---|
| BK1 | 0.0473 | 0.0485 | 0.0481 | 0.0461 |
| BK2 | 0.0472 | 0.0438 | 0.0453 | 0.0420 |
| BK3 | 0.0467 | 0.0444 | 0.0461 | 0.0422 |
| BK4 | 0.0390 | 0.0379 | 0.0368 | 0.0353 |
| BK5 | 0.0431 | 0.0429 | 0.0435 | 0.0426 |
| BK6 | 0.0422 | 0.0406 | 0.0412 | 0.0393 |

| Image Number | TwTVSI (s) | BCS-L1 (s) | BCS-TV (s) |
|---|---|---|---|
| 1 | 15.3293 | 6.5937 | 1214.3894 |
| 2 | 14.2397 | 5.4419 | 1175.4316 |
| 3 | 14.8195 | 6.2824 | 1135.6211 |
| 4 | 15.4857 | 6.6621 | 1308.4803 |
| 5 | 15.0018 | 5.7140 | 1260.2702 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Wang, L.; Liu, B.; Zhao, H. Research on Blind Super-Resolution Technology for Infrared Images of Power Equipment Based on Compressed Sensing Theory. Sensors 2021, 21, 4109. https://doi.org/10.3390/s21124109
