Optimization of Position and Number of Hotspot Detectors Using Artificial Neural Network and Genetic Algorithm to Estimate Material Levels Inside a Silo

Abstract

1. Introduction

2. Dataset Deployment

2.1. Experimental Data Collection

2.2. Experimental Result

2.3. Feature Extraction

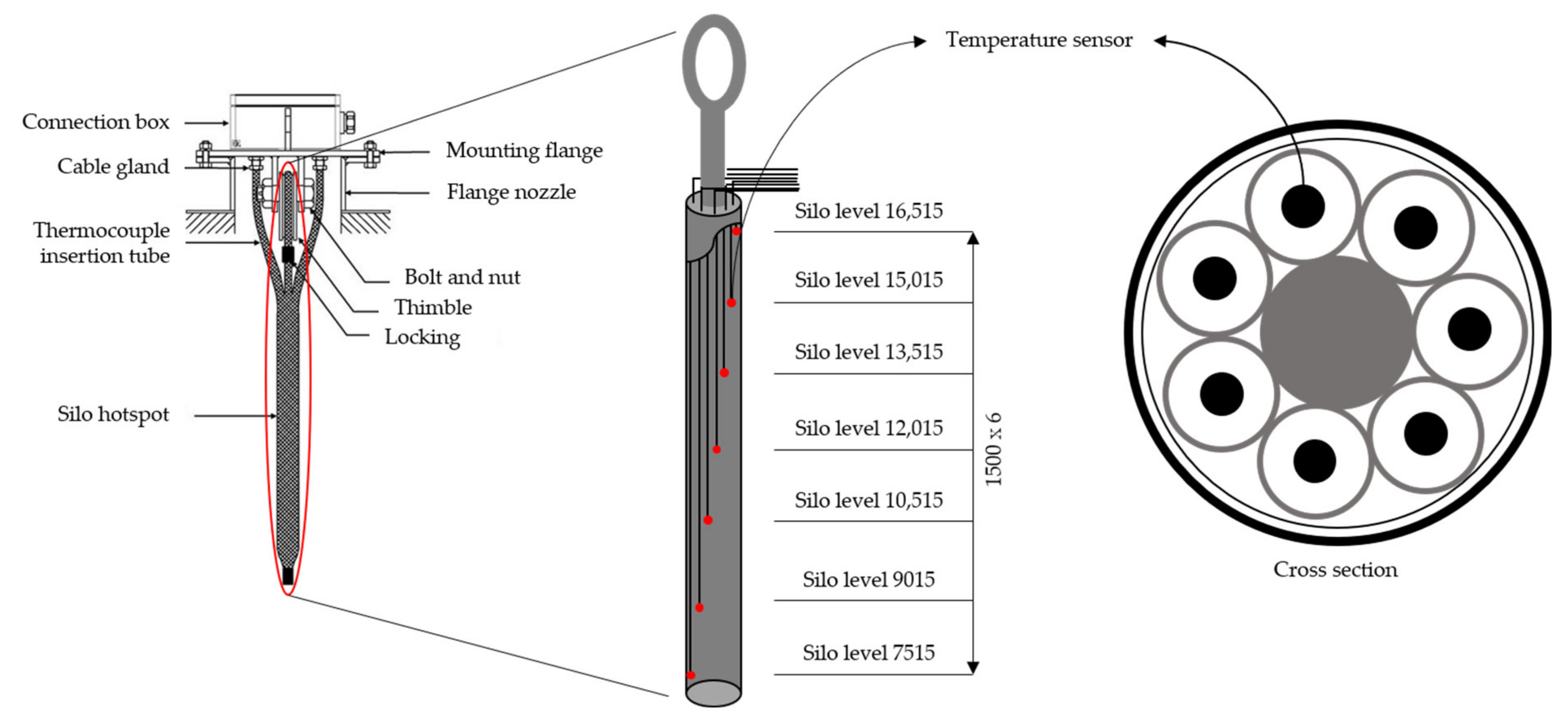

- Temperature data from the sensors (F1): The readings of the seven temperature sensors installed on the silo hotspot detector, each of which could be located above or below the material level, were grouped into one dataset per material level. These readings are the principal data in this study.

- Atmospheric temperature (F2): The ambient air temperature around the silo, which serves as a reference for the air temperature inside the silo.

- Number of sensors below the material level (F3): Because each of the seven temperature sensors could be located above or below the material level, the number of buried sensors must be recorded for each set of acquired data. The number of buried sensors at different material levels is shown in Table 4.
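The three feature groups can be assembled into a single input vector. The following is a minimal sketch, assuming the level bands listed in Table 4 and a nine-element input (seven sensor temperatures, the atmospheric temperature, and the buried-sensor count). The function names and the handling of values that fall exactly on a band boundary are illustrative assumptions, not part of the original study.

```python
from bisect import bisect_right

# Level thresholds (m) taken from Table 4: each threshold crossed buries
# one more sensor. Boundary handling (<= vs <) is an assumption.
BURIED_THRESHOLDS_M = [7.515, 9.015, 10.515, 12.015, 13.515, 15.015, 16.515]


def buried_sensor_count(level_m):
    """Return F3, the number of sensors below the material level."""
    return bisect_right(BURIED_THRESHOLDS_M, level_m)


def build_features(sensor_temps_c, ambient_temp_c, n_buried):
    """Concatenate F1 (7 temperatures), F2 (ambient) and F3 (buried count)."""
    if len(sensor_temps_c) != 7:
        raise ValueError("expected readings from seven temperature sensors")
    return list(sensor_temps_c) + [float(ambient_temp_c), float(n_buried)]


if __name__ == "__main__":
    # Hypothetical readings, chosen only to illustrate the vector layout.
    temps = [30.1, 31.0, 31.4, 33.2, 34.0, 35.5, 36.1]
    features = build_features(temps, 18.5, buried_sensor_count(12.5))
    print(len(features), features[-1])
```

Keeping F3 as a separate helper makes it easy to check the mapping against Table 4 independently of the rest of the pipeline.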

2.4. Dataset Implementation

3. Methodology

3.1. Artificial Neural Network (ANN)

3.2. Setup of ANN Architecture and Parameters

3.2.1. Number of Hidden Layers

3.2.2. Number of Neurons in the Layers

3.2.3. Transfer and Training Function

3.3. Evaluation of the Trained Model Performance

4. Optimization

4.1. Genetic Algorithm (GA)

4.2. Optimizing Position and Number of Temperature Measurement Points

1. Select the number of temperature measurement positions (ts) and configure the ANN architecture; set the training algorithm and termination conditions, and train the network.

2. Select the GA parameters, that is, the population size (Npop) and the maximum number of generations (Gen).

3. Compute the output value of each response (candidate solution) using the trained ANNs, and evaluate its fitness using the objective function.

4. Apply selection, crossover, and mutation according to the fitness values.

5. Generate new responses from the previous step and evaluate their fitness. Rank all responses by objective function value; the best Npop responses form the next generation.

6. Repeat steps 3–5 until one of the following termination conditions is satisfied:

   - The maximum number of generations is exceeded.

   - The fitness function reaches the target value or a specified deviation from it.

   - The fitness value does not improve for a specified number of generations.
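The procedure above can be sketched as a small genetic algorithm that chooses ts of the seven sensor positions. This is an illustrative sketch only: `surrogate_error` is a hypothetical stand-in for the error of the trained ANN, and the tournament selection, union-based crossover, mutation rate, and `patience` value are assumptions rather than the operators used in the study. Only Npop = 10 × ts and Gen = 150 are taken from the parameter table.

```python
import random

N_SENSORS = 7  # temperature sensors on the hotspot detector


def surrogate_error(positions):
    """Hypothetical fitness: favours well-spread positions (lower is better).

    In the study, each candidate is scored with a trained ANN instead.
    """
    ordered = sorted(positions)
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    return 1.0 / min(gaps) + 0.1 * abs(sum(ordered) / len(ordered) - 3.0)


def random_individual(ts, rng):
    return tuple(sorted(rng.sample(range(N_SENSORS), ts)))


def crossover(a, b, ts, rng):
    # Child draws ts distinct positions from the union of both parents.
    pool = sorted(set(a) | set(b))
    return tuple(sorted(rng.sample(pool, ts)))


def mutate(ind, rng, rate=0.2):
    # With probability `rate`, swap one position for an unused one.
    if rng.random() >= rate:
        return ind
    unused = [i for i in range(N_SENSORS) if i not in ind]
    if not unused:
        return ind
    genes = list(ind)
    genes[rng.randrange(len(genes))] = rng.choice(unused)
    return tuple(sorted(genes))


def tournament(pop, fitness, rng, k=2):
    return min(rng.sample(pop, k), key=fitness)


def optimize_positions(ts, fitness=surrogate_error, max_gen=150,
                       target=None, patience=20, seed=0):
    rng = random.Random(seed)
    npop = 10 * ts  # Npop = 10 x ts
    pop = [random_individual(ts, rng) for _ in range(npop)]
    best, stall = min(pop, key=fitness), 0
    for _ in range(max_gen):  # termination 1: generation limit
        children = [
            mutate(crossover(tournament(pop, fitness, rng),
                             tournament(pop, fitness, rng), ts, rng), rng)
            for _ in range(npop)
        ]
        # Rank parents and children together; the best Npop survive.
        pop = sorted(pop + children, key=fitness)[:npop]
        if fitness(pop[0]) < fitness(best):
            best, stall = pop[0], 0
        else:
            stall += 1
        if target is not None and fitness(best) <= target:
            break  # termination 2: target value reached
        if stall >= patience:
            break  # termination 3: no improvement for `patience` generations
    return best, fitness(best)


if __name__ == "__main__":
    positions, error = optimize_positions(ts=4)
    print(positions, round(error, 4))
```

Replacing `surrogate_error` with a function that evaluates a trained network on the selected sensor columns would bring the sketch closer to the workflow described in the steps.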

5. Results and Discussion

5.1. ANN Model Sensitivity Based on Input Value Combination

5.2. Training Results

5.3. Test Results

- The ANN predicted changes in the level of materials inside the silo owing to material discharge and charging.

- An inspection of the results confirmed that the temperature data could be used to predict the level of material inside the silo.

5.4. Optimization Results

6. Conclusions

- The accuracy of the material levels predicted using temperature data was sufficiently high.

- The proposed method enables simultaneous, real-time monitoring of temperature and material levels using a temperature detector, thereby ensuring efficient silo management.

- The method can accurately predict the material levels inside a silo by optimizing the number and position of the temperature-measurement points. Even when the number of temperature measurement sensors was reduced from seven to three, the material level could be predicted accurately provided that the sensors were installed at optimized positions.

- With four or more measurement points, the error is 1.2–1.3%, approximately 50% lower than with three measurement points. Therefore, considering economic feasibility and the detector's existing temperature-detection function, four or five measurement points are considered optimal.

- The prediction error of the proposed method was approximately 50% less than that of the existing methods.

- The proposed method is expected to increase the efficiency of the silo operation, make it more economical, and improve the silo safety management in practical applications.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameters | Basic Values |
|---|---|
| Mounting type | Hanging down |
| Tensile load (kN) | 126 |
| Operating temperature (°C) | 0–150 |
| Temperature sensor | K-type, 1.6 Φ, ungrounded |
| Unit material | Stainless steel |
| Unit weight (kg/m) | 3 |
| Length (mm) | 16,000 |
| Diameter (mm) | ≤35 |

| Parameters | Basic Values |
|---|---|
| Measurement principle | Electromechanical lot-sensor |
| Version | Rope version |
| Process temperature (°C) | −40–80 |
| Accuracy | 1.5% of max. range |
| Min. immersion length (mm) | 245 |
| Min. immersion length (m) | 1265 |

| Case No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Assumed value (m) | 15.765 | 15.765 | 11.265 | 11.265 | 14.265 | 14.265 | 15.765 | 15.765 | 11.265 | 11.265 |
| Measured value (m) | 16.278 | 16.44 | 15.94 | 16.083 | 12.248 | 12.381 | 14.062 | 14.2 | 15.382 | 15.552 |
| Difference value (m) | 0.513 | 0.675 | 4.675 | 4.818 | 2.017 | 1.884 | 1.703 | 1.565 | 4.117 | 4.287 |

| Level (m) | Number of Buried Sensors (ea) | Level (m) | Number of Buried Sensors (ea) |
|---|---|---|---|
| 0–7.515 | 0 | 12.015–13.515 | 4 |
| 7.515–9.015 | 1 | 13.515–15.015 | 5 |
| 9.015–10.515 | 2 | 15.015–16.515 | 6 |
| 10.515–12.015 | 3 | 16.515–23.515 | 7 |

| Data Type | Variable | Training Data (Min.) | Training Data (Max.) | Test Data (Min.) | Test Data (Max.) |
|---|---|---|---|---|---|
| Input | No. 1 (°C) | 25 | 41.5 | 25.3 | 39.6 |
| Input | No. 2 (°C) | 22.9 | 41.8 | 24.7 | 39.8 |
| Input | No. 3 (°C) | 22.5 | 42.1 | 24.2 | 40.4 |
| Input | No. 4 (°C) | 29.5 | 42.4 | 31.9 | 40.2 |
| Input | No. 5 (°C) | 29.5 | 42.7 | 33 | 40.6 |
| Input | No. 6 (°C) | 29.3 | 45 | 31 | 41 |
| Input | No. 7 (°C) | 29 | 44.5 | 31.5 | 43.6 |
| Input | Atmospheric temperature (°C) | 16.5 | 28.1 | 16.5 | 20.1 |
| Input | Number of buried sensors (ea) | 2 | 7 | 3 | 7 |
| Output | Material level (m) | 9.83 | 19.89 | 11.11 | 19.76 |

| Parameters | Basic Values |
|---|---|
| Number of input neurons | 9 |
| Number of hidden layers | 1 or 2 |
| Number of hidden neurons | 5, 9, 18, or 19 |
| Number of output neurons | 1 |
| Number of training dataset | 3080 |
| Number of test dataset | 3080 |
| Training algorithm | Scaled conjugate gradient |
| Transfer function | Logsig (hidden), purelin (output) |
| Learning rate | 0.01 |
| Momentum | 0.9 |
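To make the architecture in the table above concrete, the following is a minimal forward-pass sketch of a 9-19-19-1 network with logsig hidden layers and a purelin (identity) output. The weights are random, untrained placeholders, the helper names are hypothetical, and training with the scaled conjugate gradient algorithm is not shown. Input normalization, which is usually applied before training, is also omitted.

```python
import math
import random


def logsig(x):
    """Log-sigmoid transfer function used in the hidden layers."""
    return 1.0 / (1.0 + math.exp(-x))


def init_layer(n_in, n_out, rng):
    # Small random weights and zero biases (placeholder, untrained values).
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(inputs, layer, activation=None):
    weights, biases = layer
    out = [sum(w * x for w, x in zip(row, inputs)) + b
           for row, b in zip(weights, biases)]
    return [activation(v) for v in out] if activation else out


def build_network(sizes, seed=0):
    """Create one (weights, biases) pair per consecutive pair of layer sizes."""
    rng = random.Random(seed)
    return [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]


def predict(network, features):
    x = features
    for layer in network[:-1]:
        x = dense(x, layer, logsig)      # hidden layers: logsig
    return dense(x, network[-1])[0]      # output layer: purelin (identity)


if __name__ == "__main__":
    net = build_network([9, 19, 19, 1])
    # Hypothetical input: seven temperatures, ambient temperature, buried count.
    sample = [30.0, 31.0, 31.5, 33.0, 34.0, 35.5, 36.0, 18.5, 4.0]
    print(predict(net, sample))
```

Changing the `sizes` list to, for example, `[9, 5, 1]` gives the single-hidden-layer variants listed in the table.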

| Parameters | Basic Values |
|---|---|
| Number of input neurons | ts (= 3–6) |
| Number of hidden layers | 2 |
| Number of hidden neurons | Ts |
| Number of output neurons | 1 |
| Gen | 150 |
| Npop | 10 × ts |

| Case No. | Feature Combination | MAE | MSE | R |
|---|---|---|---|---|
| 1 | F1 | 0.4634 | 0.1156 | 0.96843 |
| 2 | F1 + F2 | 0.4266 | 0.0861 | 0.9789 |
| 3 | F1 + F3 | 0.2602 | 0.0531 | 0.98738 |
| 4 | F1 + F2 + F3 | 0.2528 | 0.0371 | 0.99107 |
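The performance columns can be reproduced from paired measured and predicted levels. The sketch below uses the standard definitions of mean absolute error and mean squared error, and assumes that R denotes the Pearson correlation coefficient between measured and predicted values. The sample values are hypothetical and are not taken from the study's dataset.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def pearson_r(y_true, y_pred):
    """Pearson correlation coefficient (assumed meaning of R)."""
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov / math.sqrt(var_t * var_p)


if __name__ == "__main__":
    measured = [10.0, 12.0, 14.0, 16.0]    # hypothetical levels (m)
    predicted = [10.5, 11.5, 14.5, 15.5]   # hypothetical ANN outputs (m)
    print(mae(measured, predicted),
          mse(measured, predicted),
          pearson_r(measured, predicted))
```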

| Case | Number of Hidden Layers | Structure | Training MAE | Training MSE | Training R | Test MAE | Test MSE | Test R |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 9-5-1 | 0.2768 | 0.164 | 0.98055 | 0.2859 | 0.2258 | 0.9758 |
| 2 | 1 | 9-9-1 | 0.2786 | 0.1179 | 0.98669 | 0.2878 | 0.2545 | 0.96896 |
| 3 | 1 | 9-18-1 | 0.2587 | 0.0942 | 0.9893 | 0.2805 | 0.2771 | 0.96589 |
| 4 | 1 | 9-19-1 | 0.2646 | 0.0786 | 0.9912 | 0.2709 | 0.1808 | 0.97421 |
| 5 | 2 | 9-5-5-1 | 0.2528 | 0.1037 | 0.98875 | 0.2934 | 0.2088 | 0.97274 |
| 6 | 2 | 9-9-9-1 | 0.2501 | 0.0794 | 0.99082 | 0.2735 | 0.1545 | 0.98406 |
| 7 | 2 | 9-18-18-1 | 0.2434 | 0.0615 | 0.99277 | 0.2652 | 0.2463 | 0.97135 |
| 8 | 2 | 9-19-19-1 | 0.2377 | 0.0315 | 0.99612 | 0.2391 | 0.188 | 0.9807 |

| Number of Measurement Points | Structure | Training MAE | Training MSE | Training R | Test MAE | Test MSE | Test R |
|---|---|---|---|---|---|---|---|
| 3 | 5-11-11-1 | 0.3384 | 0.0415 | 0.98939 | 0.408 | 0.2016 | 0.97290 |
| 4 | 6-13-13-1 | 0.1772 | 0.0234 | 0.99463 | 0.2152 | 0.1087 | 0.97399 |
| 5 | 7-15-15-1 | 0.1707 | 0.0189 | 0.99561 | 0.1878 | 0.1071 | 0.97771 |
| 6 | 8-17-17-1 | 0.141 | 0.0078 | 0.99795 | 0.1541 | 0.0919 | 0.98393 |
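As a quick arithmetic check on the table above, the relative drop in test MAE when moving from three measurement points to four, five, or six can be computed directly from the tabulated values. This is only a sketch: the function name is hypothetical, and the percentages it prints are derived from the test MAE column rather than from the percentage errors quoted in the conclusions. For four points the reduction is roughly 47%, which is in line with the approximately 50% decrease reported there.

```python
# Test-set MAE by number of measurement points, copied from the table above.
TEST_MAE = {3: 0.408, 4: 0.2152, 5: 0.1878, 6: 0.1541}


def reduction_vs_three(points):
    """Percentage reduction in test MAE relative to three measurement points."""
    base = TEST_MAE[3]
    return 100.0 * (base - TEST_MAE[points]) / base


if __name__ == "__main__":
    for n in (4, 5, 6):
        print(f"{n} points: {reduction_vs_three(n):.1f}% lower test MAE than 3 points")
```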


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rhee, J.H.; Kim, S.I.; Lee, K.M.; Kim, M.K.; Lim, Y.M. Optimization of Position and Number of Hotspot Detectors Using Artificial Neural Network and Genetic Algorithm to Estimate Material Levels Inside a Silo. Sensors 2021, 21, 4427. https://doi.org/10.3390/s21134427
