Validation of Architecture Effectiveness for the Continuous Monitoring of File Integrity Stored in the Cloud Using Blockchain and Smart Contracts

Abstract

1. Introduction

2. Background

2.1. Distributed Ledger Technology

2.2. Blockchain

2.3. Smart Contracts

2.4. Blockchain Platforms

2.4.1. Ethereum

2.4.2. Hyperledger Fabric

2.5. Solidity

3. Related Works

3.1. Monitoring the Integrity of Files Stored in the Cloud

3.2. Blockchain and Smart Contracts

4. Architecture for Monitoring the Integrity of Files in the Cloud

4.1. Roles

4.1.1. Client

- to encrypt the file to be stored in the cloud;

- to generate the information necessary to verify the integrity of the copies of the file during the storage period;

- to prepare, for each copy to be stored, an instance of CFSMC containing the file information and insert it in the BN;

- to select the CSSs and submit a copy of the file to each of them for storage;

- to generate challenges for audit purposes when requested by the CSS;

- to hire or renew, for the file copy stored in each CSS, the ICS responsible for monitoring its integrity, sending the ICS the information it needs to perform this service during the contracted period.
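The client duties listed above can be illustrated with a minimal Python sketch of file preparation. It assumes SHA-256 for both the fileId and the verification hashes (VHs), and it takes the 4096-fraction split from the description of the chunkSize attribute of the CFSMC. How data blocks are built from fractions is not specified in this section, so the rule used here (each block concatenates a pseudo-random subset of fractions picked with a seeded generator) is a hypothetical stand-in; the function names and the parameters chunks_per_block and seed are likewise illustrative. Encryption is assumed to have happened already and is not shown.

```python
import hashlib
import math
import random

TOTAL_CHUNKS = 4096  # each file is split into 4096 fractions (see the chunkSize attribute)


def chunk_size(file_len: int) -> int:
    """Bytes per fraction so that 4096 fractions cover the whole file."""
    return max(1, math.ceil(file_len / TOTAL_CHUNKS))


def split_chunks(data: bytes) -> list[bytes]:
    """Split the (already encrypted) file into exactly 4096 fractions.

    Every fraction has chunk_size bytes except the trailing ones, which may be
    shorter, or even empty when the file is very small.
    """
    size = chunk_size(len(data))
    return [data[i * size:(i + 1) * size] for i in range(TOTAL_CHUNKS)]


def build_data_blocks(chunks: list[bytes], total_blocks: int,
                      chunks_per_block: int, seed: int) -> list[tuple[list[int], str]]:
    """Hypothetical data-block rule: each block concatenates a pseudo-random
    subset of fractions, and its VH is the SHA-256 of that concatenation.

    Returns (fraction indices, VH) pairs: the VHs would be sent to the CFSMC
    (insertBlock), while the indices stay with the client and the hired ICS.
    """
    rng = random.Random(seed)
    blocks = []
    for _ in range(total_blocks):
        indices = sorted(rng.sample(range(len(chunks)), chunks_per_block))
        vh = hashlib.sha256(b"".join(chunks[i] for i in indices)).hexdigest()
        blocks.append((indices, vh))
    return blocks


# Usage: a synthetic 25,600-byte payload stands in for the encrypted file.
encrypted = bytes(range(256)) * 100
file_id = hashlib.sha256(encrypted).hexdigest()  # candidate value for fileId
chunks = split_chunks(encrypted)
blocks = build_data_blocks(chunks, total_blocks=8, chunks_per_block=4, seed=42)
print(chunk_size(len(encrypted)), len(chunks), len(blocks))  # 7 4096 8
```

In this sketch the fraction indices of a block stay secret until the block is challenged, so a CSS that no longer holds the file cannot produce the matching hash. Tiny payloads such as the one above leave trailing fractions empty; files of tens of megabytes or more, like those used in the validation, do not.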

4.1.2. Cloud Storage Service (CSS)

- to receive the storage request and the contents of the client’s file;

- to check the integrity of the received file;

- to audit whether the contents of the received file are consistent with the integrity verification information generated by the client;

- to record its acceptance of both the file storage request and the corresponding CFSMC instance;

- to reply to challenges generated by the ICS to verify the integrity of the stored files;

- to allow, at any time, the download of a copy of a stored file exclusively to the client who submitted it.
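Under the same assumptions, the CSS duties of checking a received file, auditing it against the client's integrity information, and answering challenges reduce to recomputing hashes. The Python sketch below assumes SHA-256, a challenge that names fraction (chunk) indices, and a response equal to the hash of those fractions concatenated in order; the function names and the response format are hypothetical, not the authors' protocol.

```python
import hashlib


def check_received_file(data: bytes, file_id: str) -> bool:
    """Recompute the hash of the received file and compare it with its fileId."""
    return hashlib.sha256(data).hexdigest() == file_id


def answer_challenge(data: bytes, chunk_size: int, chunk_indices: list[int]) -> str:
    """Hypothetical response format: SHA-256 of the requested fractions, in order."""
    parts = [data[i * chunk_size:(i + 1) * chunk_size] for i in chunk_indices]
    return hashlib.sha256(b"".join(parts)).hexdigest()


def audit_block(data: bytes, chunk_size: int, chunk_indices: list[int],
                expected_vh: str) -> bool:
    """Audit one data block against the VH the client recorded in the CFSMC."""
    return answer_challenge(data, chunk_size, chunk_indices) == expected_vh


# Usage: 32 stored bytes split into 4-byte fractions; the block covers fractions 0 and 2.
stored = b"abcdefgh" * 4
fid = hashlib.sha256(stored).hexdigest()
vh = hashlib.sha256(stored[0:4] + stored[8:12]).hexdigest()
print(check_received_file(stored, fid), audit_block(stored, 4, [0, 2], vh))  # True True
```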

4.1.3. Integrity Check Service (ICS)

- to provide functionality that allows the client to contract its services directly and autonomously;

- after being hired, to receive from the client, and store, the information needed to check the integrity of the file stored in the CSS;

- to generate daily challenges to verify the integrity of files stored in each monitored CSS, according to the trust level assigned to it;

- to register challenges in the BN using the CFSMC instance linked to each checked file;

- to check daily for the existence and validity of pending challenges;

- to check daily the results of validating the responses received to the challenges;

- to immediately inform the client whenever the ICS identifies a breach of integrity or failure in the CSS.
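The daily challenge generation described above can be sketched in Python as per-file bookkeeping on the ICS side. The 256-challenge verification cycle comes from the cyclesFinalized attribute of the CFSMC; the class name ChallengeScheduler, the grouping of data-block ids into consecutive cycles, the rule that each block is challenged at most once, and the random selection are assumptions made for illustration. In the architecture the daily volume depends on the trust level assigned to the CSS; here it is simply the per_day parameter.

```python
import random

CHALLENGES_PER_CYCLE = 256  # a verification cycle comprises 256 challenges


class ChallengeScheduler:
    """Hypothetical ICS-side bookkeeping for one monitored file.

    Data-block ids 0..total_blocks-1 are grouped into consecutive cycles of
    256 ids, and each block is challenged at most once.
    """

    def __init__(self, total_blocks: int, seed: int = 0):
        self.total_blocks = total_blocks
        self.issued: set[int] = set()
        self.rng = random.Random(seed)

    def _remaining_in_current_cycle(self) -> list[int]:
        # The current cycle is the first one that still has unchallenged blocks.
        for start in range(0, self.total_blocks, CHALLENGES_PER_CYCLE):
            end = min(start + CHALLENGES_PER_CYCLE, self.total_blocks)
            remaining = [b for b in range(start, end) if b not in self.issued]
            if remaining:
                return remaining
        return []

    def daily_challenges(self, per_day: int) -> list[int]:
        """Pick up to per_day block ids, finishing one cycle before starting the next."""
        chosen = []
        while len(chosen) < per_day:
            remaining = self._remaining_in_current_cycle()
            if not remaining:
                break  # every data block of the file has already been challenged
            block_id = self.rng.choice(remaining)
            self.issued.add(block_id)
            chosen.append(block_id)
        return chosen


# Usage: 300 blocks, 100 challenges per day.
sched = ChallengeScheduler(total_blocks=300, seed=1)
day1 = sched.daily_challenges(100)
day2 = sched.daily_challenges(100)
day3 = sched.daily_challenges(100)  # closes cycle 0 (56 ids) and covers cycle 1 (44 ids)
print(max(day1 + day2) < 256, len(day3), sched.daily_challenges(100))  # True 100 []
```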

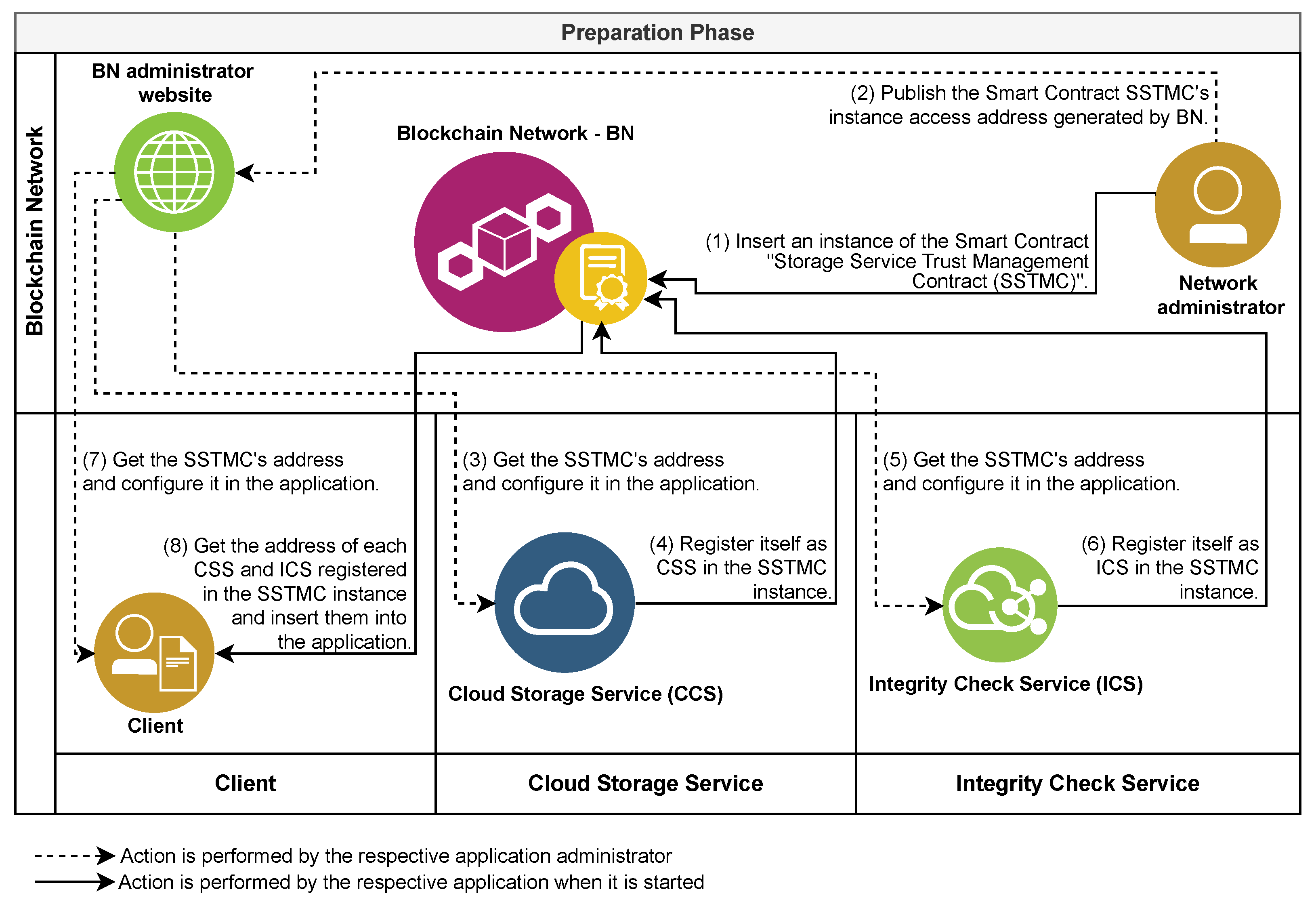

4.1.4. Blockchain Network (BN)

- to store one or more instances of the SSTMC;

- to store an instance of CFSMC for each file stored by a client on a CSS;

- to maintain a public record of CSPs interested in providing services as a CSS or ICS;

- to store, in each CFSMC instance, both the storage contract data and the information needed to validate the answers to the integrity verification challenges;

- to receive, store, and make available to clients the challenge requests for auditing generated by CSSs;

- to receive, store, and make available to CSSs the challenges of verifying the integrity of the files stored therein;

- to receive and validate the responses to the challenges, storing them together with the result of the validation;

- to calculate and store a trust value for each CSS from the results of the challenges generated by all ICS providers, and share the results with all other roles.

4.2. Smart Contracts

4.2.1. Storage Service Trust Management Contract (SSTMC)

4.2.2. Cloud File Storage and Monitoring Contract (CFSMC)

4.3. Architecture Processes

4.4. Preparation Phase

4.5. Storage Phase

4.5.1. Selection, Preparation, and Submission of Files for Storage in the Cloud

4.5.2. File Storage Request Audit and Acceptance

4.5.3. Hiring Service for Monitoring File Integrity

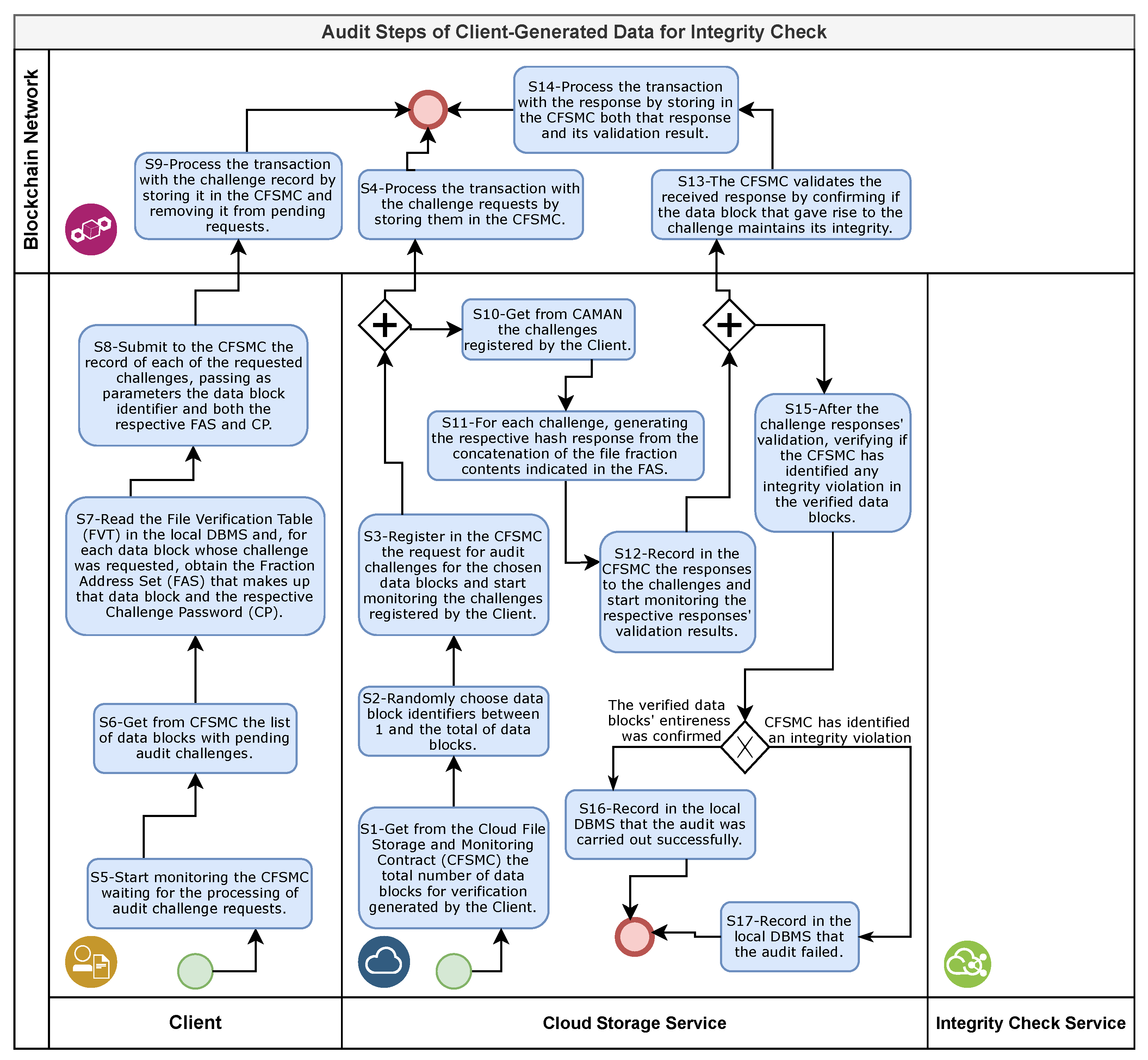

4.6. Integrity Verification Phase

4.6.1. Challenge Generation and Submission

4.6.2. Verification of Previous Challenges

4.6.3. Generation, Submission and Verification of the Responses to the Challenges

4.6.4. Trust Value Calculation

| Algorithm 1. Reduction in the trust value. |
|---|
| if then |
| else |
| if then |
| else |
| if then |
| else |
| end if |
| end if |
| end if |

| Algorithm 2. Increase in the trust value. |
|---|
| if then |
| else |
| if then |
| else |
| if then |
| else |
| end if |
| end if |
| end if |

5. Architecture Validation

5.1. Infrastructure

5.2. File Submission

5.3. Effectiveness and Efficiency Validation

6. Security Analysis

6.1. Research Limitations

6.2. Resistance against Attacks

- Attack 1.

- Attack 2.

- Attack 3.

- Attack 4.

- Attack 5.

- Attack 6.

- Attack 7.

- Attack 8.

7. Limitations

8. Conclusions

Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Method | Group | Function | Execution |
|---|---|---|---|
| authorizeContract | 2nd | Record acceptance of a storage contract (instance of the CFSMC) | Registered CSS |
| decrementTrustValue | 3rd | Run the calculation process to reduce the TV/CSS | CFSMC |
| getStakeholderName | 1st | Provide the name of a CSS/ICS | Public |
| getStakeholders | 1st | Provide a list of registered CSSs or ICSs available for hire | Public |
| getStakeholderUrl | 1st | Provide the URL to access the services of the CSS/ICS | Public |
| getTrustLevel | 3rd | Provide the trust level assigned to the CSS, computed from the updated TV/CSS | Public |
| getTrustValue | 3rd | Provide the updated TV/CSS | Public |
| incrementTrustValue | 3rd | Run the calculation process to increase the TV/CSS | CFSMC |
| isAuthorized | 2nd | Inform whether the requesting CFSMC instance was accepted by the CSS | CFSMC |
| isStakeholderRegistered | 1st | Inform whether a CSS/ICS is registered and available for hire | Public |
| registerStakeholder | 1st | Allow CSPs to register themselves and offer their services as a CSS or ICS | CSSs and ICSs |
| removeStakeholder | 1st | Allow a CSP to block its own registration so that clients can no longer hire it as a CSS/ICS | Registered CSS and ICS |
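The registry and authorization methods of the SSTMC listed above can be modeled in plain Python to show who may call what. This is not the authors' Solidity contract: the class and method names are hypothetical snake_case counterparts of the listed methods, the sender argument stands in for the caller's address (msg.sender in Solidity), and the trust-value methods are omitted because Algorithms 1 and 2 are not reproduced in this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Stakeholder:
    name: str
    url: str
    kind: str  # "CSS" or "ICS"
    active: bool = True


@dataclass
class TrustManagementModel:
    """Plain-Python model of the SSTMC registry and authorization methods."""
    stakeholders: dict[str, Stakeholder] = field(default_factory=dict)
    authorized: dict[str, str] = field(default_factory=dict)  # CFSMC address -> CSS address

    def register_stakeholder(self, sender: str, name: str, url: str, kind: str) -> None:
        if kind not in ("CSS", "ICS"):
            raise ValueError("kind must be 'CSS' or 'ICS'")
        # Registering again re-activates a previously removed provider.
        self.stakeholders[sender] = Stakeholder(name, url, kind)

    def remove_stakeholder(self, sender: str) -> None:
        # A provider can only block its own registration; the record is kept.
        if sender not in self.stakeholders:
            raise PermissionError("caller is not registered")
        self.stakeholders[sender].active = False

    def is_stakeholder_registered(self, address: str) -> bool:
        s = self.stakeholders.get(address)
        return s is not None and s.active

    def get_stakeholders(self, kind: str) -> list[str]:
        return [a for a, s in self.stakeholders.items() if s.kind == kind and s.active]

    def authorize_contract(self, sender: str, cfsmc_address: str) -> None:
        # Only an active, registered CSS may record acceptance of a CFSMC instance.
        s = self.stakeholders.get(sender)
        if s is None or not s.active or s.kind != "CSS":
            raise PermissionError("only a registered CSS can accept a storage contract")
        self.authorized[cfsmc_address] = sender

    def is_authorized(self, cfsmc_address: str) -> bool:
        return cfsmc_address in self.authorized


# Usage with made-up addresses and URLs.
sstmc = TrustManagementModel()
sstmc.register_stakeholder("0xCSS1", "Storage A", "https://css-a.example", "CSS")
sstmc.register_stakeholder("0xICS1", "Checker B", "https://ics-b.example", "ICS")
sstmc.authorize_contract("0xCSS1", "0xFILE1")
print(sstmc.get_stakeholders("CSS"), sstmc.is_authorized("0xFILE1"))  # ['0xCSS1'] True
```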

| Trust Level | Trust Value Range | Checked per Day | | |
|---|---|---|---|---|
| | | % of Files | % of the File | Data Blocks |
| Very high trust | , | 1 | | |
| High trust | , | 2 | | |
| Medium-high trust | , | 3 | | |
| Low-medium trust | , | 4 | | |
| Low trust | , | 5 | | |
| Low distrust | , | 6 | | |
| Low-medium distrust | , | 8 | | |
| Medium-high distrust | , | 10 | | |
| High distrust | , | 12 | | |
| Very high distrust | , | 14 | | |

| Attribute | Group | Description |
|---|---|---|
| blocks | 2nd | Key/value associative array containing the verification hash (VH) of each data block generated by the client to verify the file integrity through its fractions |
| challenges | 2nd | Key/value associative array containing the challenges to verify the integrity of the file |
| chunkSize | 1st | Size in bytes of each of the 4096 file fractions |
| clientAddress | 1st | Address that identifies the file owner (client) in the BN |
| cyclesFinalized | 2nd | Key/value associative array containing the identifiers of the verification cycles already completed, that is, those whose 256 challenges have all been answered |
| fileId | 1st | Hash used to identify the stored file and to verify its integrity when uploading or downloading |
| integrityCheckAgreementDue | 1st | End date of the current integrity verification contract |
| integrityCheckServiceAddress | 1st | Address that identifies, in the BN, the ICS hired to monitor the file integrity |
| requestedChallenges | 2nd | Key/value associative array containing challenge requests for audit purposes |
| storageLimitDate | 1st | End date of the file storage period contracted with the CSS |
| storageServiceAddress | 1st | Address that identifies, in the BN, the CSS hired to store the file |
| totalBlocks | 1st | Number of data blocks, generated by the client before storing the file in the cloud, that will be used to verify the file integrity |
| trustContract | 1st | SSTMC instance responsible for managing trust in the CSS that stores the file |

| Method | Group | Function | Execution |
|---|---|---|---|
| changeIntegrityCheckService | 2nd | Receive the BN address of the ICS hired to monitor the file integrity and store it in the attribute integrityCheckServiceAddress, replacing the previous value | Client (file owner) |
| getChallenge | 3rd | Return the data of a challenge registered by the ICS/client and stored as an element of the attribute challenges | Public |
| getChallengeStatus | 3rd | Return the challenge status according to the receipt of the response and its validation (pending, success, or failure), obtained from the respective element stored in the attribute challenges | Public |
| getPendingChallenges | 3rd | Return a list containing the identifiers of the pending challenges stored in the attribute challenges | Public |
| getTotalFailedChallenges | 3rd | Return the number of challenges registered with the status “failed” in the attribute challenges | Public |
| getTotalStoredBlocks | 1st | Return the number of data-block VHs already inserted by the client in the CFSMC (number of elements stored in the attribute blocks) | Public |
| getTrustManagementContract | 1st | Return the BN access address of the SSTMC instance chosen by the client to manage trust in the CSS that stores the file (attribute trustContract) | Public |
| get{ChunkSize, ClientAddress, FileId, StorageLimitDate, TotalBlocks} | 1st | Return the value stored in the respective attribute (chunkSize, clientAddress, fileId, storageLimitDate, totalBlocks) | Public |
| get{IntegrityCheckAgreementDue, IntegrityCheckServiceAddress, StorageServiceAddress} | 2nd | Return the value stored in the respective attribute (integrityCheckAgreementDue, integrityCheckServiceAddress, storageServiceAddress) | Public |
| insertBlock | 3rd | Receive a set of data-block VHs and store them in the attribute blocks | Client (file owner) |
| isAccepted | 1st | Inform whether this CFSMC instance has already been accepted by the CSS in the chosen SSTMC instance | Public |
| isCycleFinalized | 3rd | Inform whether a particular verification cycle has already been completed, i.e., whether its identifier is among the elements stored in the attribute cyclesFinalized | Public |
| isReady | 1st | Inform whether the CFSMC is ready to be audited, i.e., whether the number of VHs inserted (number of elements in the attribute blocks) equals the number of data blocks generated by the client (attribute totalBlocks) | Public |
| replyChallenge | 3rd | Receive and validate the responses to the challenges, updating the respective element of the attribute challenges with the response and the validation result | Hired CSS |
| requestChallenge | 3rd | Receive and store an audit challenge request in the attribute requestedChallenges | Hired CSS |
| setIntegrityCheckAgreementDue | 2nd | Receive and store the end date of the current integrity verification contract in the attribute integrityCheckAgreementDue | Client (file owner) |
| submitChallenge | 3rd | Receive and store the challenges for verifying the file integrity in the attribute challenges | Client and hired ICS |
| verifyChallenge | 3rd | Check the validity of a pending challenge stored as an element of the attribute challenges, changing its status to failure if it has exceeded the maximum period allowed for a response (expired) | Hired ICS |
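A plain-Python model can illustrate the challenge life cycle described by the CFSMC attributes and methods above: the client inserts VHs until isReady holds, the client or the hired ICS submits challenges, the hired CSS replies, and the ICS marks expired challenges as failed. The class names, the Status enum, the integer clock, and the max_wait deadline are assumptions; in particular, treating a late reply as a failure is this sketch's choice, not something the table states.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    SUCCESS = "success"
    FAILURE = "failure"


@dataclass
class Challenge:
    block_id: int
    issued_at: int  # registration time on a hypothetical integer clock (e.g. days)
    status: Status = Status.PENDING
    response: Optional[str] = None


@dataclass
class CFSMCModel:
    """Plain-Python model of part of the CFSMC; sender stands in for the caller's BN address."""
    client: str
    storage_service: str
    integrity_check_service: str
    total_blocks: int
    max_wait: int  # hypothetical response deadline, same unit as issued_at
    blocks: dict[int, str] = field(default_factory=dict)  # block id -> VH
    challenges: dict[int, Challenge] = field(default_factory=dict)
    next_id: int = field(default=0, init=False)

    def insert_block(self, sender: str, vhs: dict[int, str]) -> None:
        if sender != self.client:
            raise PermissionError("only the file owner can insert VHs")
        self.blocks.update(vhs)

    def is_ready(self) -> bool:
        return len(self.blocks) == self.total_blocks

    def submit_challenge(self, sender: str, block_id: int, now: int) -> int:
        if sender not in (self.client, self.integrity_check_service):
            raise PermissionError("only the client or the hired ICS can submit challenges")
        if block_id not in self.blocks:
            raise KeyError(block_id)
        cid = self.next_id
        self.next_id += 1
        self.challenges[cid] = Challenge(block_id, now)
        return cid

    def reply_challenge(self, sender: str, cid: int, response: str, now: int) -> Status:
        if sender != self.storage_service:
            raise PermissionError("only the hired CSS can reply")
        ch = self.challenges[cid]
        if ch.status is not Status.PENDING:
            raise ValueError("challenge is already closed")
        ch.response = response
        # Assumption: a late or wrong answer counts as a failure.
        on_time = now - ch.issued_at <= self.max_wait
        correct = response == self.blocks[ch.block_id]
        ch.status = Status.SUCCESS if on_time and correct else Status.FAILURE
        return ch.status

    def verify_challenge(self, sender: str, cid: int, now: int) -> Status:
        if sender != self.integrity_check_service:
            raise PermissionError("only the hired ICS can verify challenges")
        ch = self.challenges[cid]
        # A pending challenge that outlived its deadline is marked as failed.
        if ch.status is Status.PENDING and now - ch.issued_at > self.max_wait:
            ch.status = Status.FAILURE
        return ch.status

    def get_pending_challenges(self) -> list[int]:
        return [cid for cid, ch in self.challenges.items() if ch.status is Status.PENDING]

    def get_total_failed_challenges(self) -> int:
        return sum(1 for ch in self.challenges.values() if ch.status is Status.FAILURE)


# Usage: two blocks; one challenge answered in time, one never answered.
c = CFSMCModel("0xClient", "0xCSS", "0xICS", total_blocks=2, max_wait=1)
c.insert_block("0xClient", {0: "vh-0", 1: "vh-1"})
first = c.submit_challenge("0xICS", 0, now=0)
second = c.submit_challenge("0xICS", 1, now=0)
c.reply_challenge("0xCSS", first, "vh-0", now=1)
c.verify_challenge("0xICS", second, now=2)
print(c.is_ready(), c.get_pending_challenges(), c.get_total_failed_challenges())  # True [] 1
```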

| File Size | Storage Time (Years) | Average Time to Encrypt File | Average Time to Hash File | Average Time to Hash Data Blocks | Average Time to Save Database |
|---|---|---|---|---|---|
| 52 MB | 1 | | | | |
| 196 MB | 2 | | | | |
| 243 MB | 3 | | | | |
| 593 MB | 4 | | | | |
| 750 MB | 5 | | | | |
| 1 GB | 10 | | | | |
| 2 GB | 15 | | | | |
| 5 GB | 20 | | | | |
| 10 GB | 25 | | | | |

| File Corrupted | Failure Identification Day (CSS 1) | Failure Identification Day (CSS 2) | Failure Identification Day (CSS 3) |
|---|---|---|---|

| File Size | Storage Time | Days to Identify the Integrity Violation (Minimum) | Days to Identify the Integrity Violation (Maximum) | Days to Identify the Integrity Violation (Average) |
|---|---|---|---|---|
| 50 MB | 1 year | 1 | 74 | |
| 5 GB | 20 years | 15 | 98 | |
| 750 MB | 5 years | 7 | 81 | |
| 200 MB | 2 years | 15 | 92 | |
| 1 GB | 10 years | 24 | 82 | |
| 600 MB | 4 years | 8 | 88 | |
| 250 MB | 3 years | 9 | 88 | |
| 2 GB | 15 years | 24 | 95 | |
| 10 GB | 25 years | 45 | 92 | |

| CSS | Checked Files | Days to Identify the Integrity Violation (Minimum) | Days to Identify the Integrity Violation (Maximum) | Days to Identify the Integrity Violation (Average) |
|---|---|---|---|---|
| 1 | 27 | 7 | 92 | |
| 2 | 27 | 8 | 86 | |
| 3 | 27 | 1 | 98 | |
| General | 81 | 1 | 98 | |
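The General row of the per-CSS results can be cross-checked against the rows above it, and the per-file results should report the same overall extremes. The short Python check below uses only values transcribed from those two tables.

```python
# Transcribed from the per-CSS table: (CSS, checked files, minimum days, maximum days)
per_css = [(1, 27, 7, 92), (2, 27, 8, 86), (3, 27, 1, 98)]
# Transcribed from the per-file table: (minimum days, maximum days) for each size/storage-time row
per_file = [(1, 74), (15, 98), (7, 81), (15, 92), (24, 82), (8, 88), (9, 88), (24, 95), (45, 92)]

general_files = sum(files for _, files, _, _ in per_css)
general_min = min(lo for _, _, lo, _ in per_css)
general_max = max(hi for _, _, _, hi in per_css)
print(general_files, general_min, general_max)  # 81 1 98

# The per-file view must agree on the overall extremes.
print(min(lo for lo, _ in per_file), max(hi for _, hi in per_file))  # 1 98
```

Because each CSS checked the same number of files (27), and assuming each average is an arithmetic mean over the checked files, the General average should equal the plain mean of the three per-CSS averages.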


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pinheiro, A.; Canedo, E.D.; Albuquerque, R.d.O.; de Sousa Júnior, R.T. Validation of Architecture Effectiveness for the Continuous Monitoring of File Integrity Stored in the Cloud Using Blockchain and Smart Contracts. Sensors 2021, 21, 4440. https://doi.org/10.3390/s21134440
