Abstract

The visual design elements and principles (VDEPs) can trigger behavioural changes and emotions in the viewer, but their effects on brain activity are not clearly understood. In this paper, we explore the relationships between brain activity and colour (cold/warm), light (dark/bright), movement (fast/slow), and balance (symmetrical/asymmetrical) VDEPs. We used the public DEAP dataset with the electroencephalogram signals of 32 participants recorded while watching music videos. The characteristic VDEPs for each second of the videos were manually tagged by a team of two visual communication experts. Results show that variations in the light/value, rhythm/movement, and balance in the music video sequences produce a statistically significant effect over the mean absolute power of the Delta, Theta, Alpha, Beta, and Gamma EEG bands (p < 0.05). Furthermore, we trained a Convolutional Neural Network that successfully predicts the VDEP of a video fragment solely from the EEG signal of the viewer with an accuracy ranging from 0.7447 for Colour VDEP to 0.9685 for Movement VDEP. Our work shows evidence that VDEPs affect brain activity in a variety of distinguishable ways and that a deep learning classifier can infer visual VDEP properties of the videos from EEG activity.

1. Introduction

1.1. Overview

Visual communication design is the process of creating visually engaging content for the creative industries, to communicate specific messages and persuade in a way that eases the comprehension of the message and ensures a lasting impression on its recipient. Therefore, the main objective of visual communication design as a discipline is the creation of visually attractive content that impacts the target audience through persuasion, the stimulation of emotions, and the generation of sentiments. This objective is achieved through conscious manipulation of the inherent elements and principles, such as shape, texture, direction, harmony, and colour, that make up the designed product [1].

The elements of visual design describe the building blocks of a product’s aesthetics, and the principles of design tell how these elements should go together for the best results. Although there is no consensus on how many visual design elements and principles (VDEPs) there are, some of these design reference values have been thoroughly studied for centuries and are considered the basis of modern graphic design [2]. One of the most important breakthroughs in graphic design history is the theory of the Gestalt in the 1930s, which studied these VDEPs from a psychological point of view, and discovered that the notion of visual experience is inherently structured by the nature of the stimulus triggering it as it interacts with the visual nervous system [3]. Since then, several studies have explored the psychological principles of different VDEPs and their influence on their observers [4,5,6]. As an example, in colour theory, some colours have been experimentally proven to convey some semantic meanings, and they are often used to elicit particular emotions in the viewers of visual content [7,8,9].

Within this context, diverse research has been carried out in recent years to deepen our understanding of brain activity and visual perception [10]. Research findings on visual perception have been applied to real-world applications in a wide range of fields, including visual arts, branding, product management, and packaging design to increase consumer spending, creating engaging pedagogic content, and survey design, among others [11,12,13,14]. These works mostly focus on the VDEPs' capability of eliciting specific emotions in the viewer and on how to trigger the desired response from the viewer, such as favouring the learning of content or increasing the probability of purchasing some goods. Multiple approaches have recently addressed the study of human visual perception from within the fields of attention and perception psychology and computational intelligence, with studies focused on the neuropsychology of attention and brain imaging techniques and their applications (fMRI or electroencephalogram, EEG) [14,15], pupillometry [16] or eye-tracking [17,18] as scanning techniques of information processing, as well as neuroaesthetics [19], computational neuroscience [20], computational aesthetics [21], or neuromarketing and consumer neuroscience studies [22,23], among others [24].

However, it is not yet known how the VDEPs impact human brain activity. In this regard, we turn our attention to the DEAP dataset [25], a multimodal dataset for the analysis of human affective states with EEG recordings and synchronous excerpts of music videos. Previous research carried out with the DEAP dataset pursued emotion recognition from the EEG records by using 3D convolutional neural networks [26], a fusion of learned multi-modal representations and dense trajectories for emotional analysis in videos [27], an arousal and valence classification model based on long short-term memory [28], DEAP data for mental healthcare management [28], or accurate EEG-based emotion recognition on combined features using deep convolutional neural networks, among others [29].

Beyond the information about levels of valence, arousal, like/dislike, familiarity, and dominance in the DEAP dataset, we sought to analyse the EEGs and videos to extract some of the most important VDEPs—Balance, Colour, Light, and Movement—and search for measurable effects of these on the observable EEG brain activity. The Balance, Colour, Light and Movement principles are fundamental for professional design pipelines and are universally considered to influence the emotions of the viewer, as designers rely on the contrasts, schemes, harmony, and interaction of colours to evoke reactions and moods in those who look at their creations [30,31,32,33].

Balance refers to the visual positioning or distribution of objects in a composition. It gives the visual representation a sense of equality and can be achieved through a symmetrical or asymmetrical composition that creates a relationship of force between the elements represented, either to compensate or, on the contrary, to decompensate the composition [6,34]. We speak of symmetrical balance when the elements are placed evenly on both sides of the axes. Secondly, Colour helps communicate the message by attracting attention, setting the tone of the message, and guiding the eye to where it needs to go, and it has been reported to determine 90% of the choice of a product [35,36]. The warmth or coldness of a colour relates to subjective thermal sensations; a colour can be cold or warm depending on how it is perceived by the human eye and on the interpretation of the thermal sensation it causes [36]. Thirdly, Light or “value” refers to relative lightness and darkness and is perceived in terms of varying levels of contrast; it determines how light or dark an area looks [34,37]. Finally, Movement refers to the suggestion of motion using various elements, and it is the strongest visual inducement to attention. Different studies have dissected the exact nature of our eye movement habits and the patterns our eyes trace when viewing specific things [17,18]. Strongly connected with direction VDEPs, rhythm/movement gives designers the chance to create final pieces with good flow from top to bottom, left to right, corner A to corner B, etc. [37]. By layering simple shapes of varying opacities, or through an abrupt change in the camera, a counterstroke or a tracking shot, or a sudden change in the subject’s action, it is possible to create a strong sense of speed and motion and determine an effect of mobility or immobility.

1.2. Background

1.2.1. EEG Symmetry Perception

Symmetry is known to be an important determinant of aesthetic preference. Sensitivity to symmetry has been studied extensively in adults, children, and infants, with diverse research ranging from behavioural psychology to neuroscience. Adults detect symmetrical visual presentations more quickly and accurately than asymmetrical ones and remember them better [38,39]. The perception and appreciation of visual symmetry have been studied in several EEG/fMRI experiments; some of the most recent studies focus on symmetric patterns with different luminance polarity (anti-symmetry) [40], the role of colour and attention-to-colour in mirror-symmetry perception [41], or contour polarity [42], among others.

1.2.2. EEG Colour Perception

The perception of colour is an important cognitive feature of the human brain and is a powerful descriptor that considerably expands our ability to characterize and distinguish objects by facilitating interactions with a dynamic environment [43]. Some of the most recent studies that have addressed the interactions of colour and human perception through EEG are the response of a human visual system to continuous colour variation [44], human brain perception and reasoning of image complexity for synthetic colour fractal and natural texture images via EEG [45], or neuromarketing studies based on the study of colour perception and the application of EEG power for the prediction and interpretation of consumer decision-making [46], and so forth.

1.2.3. EEG Brightness Perception

Brightness is one of the most important sources of perceptual processing and may have a strong effect on brain activity during visual processing [47]. Brain responses to changes in brightness were explored in different studies centred on the luminance and spatial attention effects on early visual processing [48], the grounding of valence in brightness through shared relational structures [49], or the interaction of brightness and semantic content in the extrastriate visual cortex [50].

1.2.4. EEG Visual Motion Perception

Detecting the displacement of retinal image features has been studied for many years in both psychophysical and neurobiological experiments [51]. Visual motion perception has been explored through EEG technique in several lines of research such as the speed of neural visual motion perception and processing in the visuomotor reaction time of young elite table tennis athletes [52], visual motion perception for prospective control [53], or visual perception of motion to cortical activity, by evaluation of the association of quantified EEG parameters with a video film projection [54], the analysis of neural responses to motion-in-depth using EEG [55], or the examination of the time course of motion-in-depth perception [56].

In addition, recent research has sought to delve deeper into Brain-Computer Interfaces (BCI), an emerging area of research that aims to improve the quality of human-computer applications [57], and their relationship with Steady-State Visually Evoked Potentials (SSVEPs), a stimulus-locked oscillatory response to periodic visual stimulation commonly recorded in electroencephalogram (EEG) studies in humans, through the use of Convolutional Neural Networks (CNN) [58,59]. Some of these research works have focused on reviewing steady-state evoked activity, its properties, and the mechanisms of SSVEP generation, as well as the SSVEP-BCI paradigm and recently developed SSVEP-based BCI systems [60]. Other studies have analysed Deep Learning-based classification for BCI by comparing various traditional classification algorithms with newer deep learning methods, exploring two different types of deep learning methods: CNN and Recurrent Neural Networks (RNN) with long short-term memory (LSTM) architecture [61]. In addition, recent research works proposed a novel CNN for the robust classification of an SSVEP paradigm, where the authors measured EEG-based SSVEPs for a brain-controlled exoskeleton under ambulatory conditions in which numerous artefacts may deteriorate decoding [62]. Another work that analyses SSVEPs by using CNNs is that of S. Stober et al. [63], which analysed and classified EEG data recorded within a rhythm perception study. In this last case, the authors investigated the impact of the data representation and the pre-processing steps for this classification task and compared the different network structures.

1.3. Objective

It is not known whether predictors can be constructed to classify these VDEPs based on brain activity alone. The large body of previous research explored the effects of the VDEPs as isolated features, even though during human visual processing and perception many of them act simultaneously and are not appreciated individually by the viewer in different situations.

In this work, we present a novel methodology for exploring this relationship between VDEPs and brain activity in the form of EEGs available on the DEAP dataset. Our methodology consisted of combining the expert recognition and classification of VDEPs, statistical analysis, and deep learning techniques, which we used to successfully predict VDEPs solely from the EEG of the viewer. We tested whether:

- There is a statistical relationship between the VDEPs of video fragments and the mean EEG frequency bands (δ, θ, α, β, γ) of the viewers.

- A simple Convolutional Neural Network model can accurately predict the VDEPs in video content from the EEG activity of the viewer.

2. Materials and Methods

2.1. DEAP Dataset

The DEAP dataset is composed of the EEG records and peripheral physiological signals of 32 participants, which were recorded as each watched 40 1-min-long excerpts of music videos, together with the levels of valence, arousal, like/dislike, familiarity, and dominance reported by each participant. The ratings come from two sources. The first is an online self-assessment in which 120 1-min extracts of music videos were rated by volunteers on emotion classification variables (namely arousal, valence, and dominance) [25]. The second is an experiment in which 32 volunteers watched a subset of 40 of the abovementioned videos while their ratings on these emotion variables, face video, and physiological signals (including EEG) were recorded. The official dataset also includes the YouTube links of the videos used and the pre-processed physiological data (downsampling, EOG removal, filtering, segmenting, etc.) in MATLAB and NumPy (Python) format.

DEAP dataset pre-processing:

- The data were downsampled to 128 Hz.

- EOG artefacts were removed.

- A bandpass frequency filter from 4 to 45 Hz was applied.

- The data were averaged to the common reference.

- The EEG channels were reordered so that all the samples followed the same order.

- The data were segmented into 60-s trials and a 3-s pre-trial baseline was removed.

- The trials were reordered from presentation order to video (Experiment_id) order.

In our experiments, we use the provided pre-processed EEG data in NumPy format, as recommended by the dataset summary, since it is especially useful for testing classification and regression techniques without first having to process the electrical signal. This pre-processed signal contains 32 arrays, one for each of the 32 participants, each with a shape of 40 × 40 × 8064. Each array contains data for each of the 40 videos/trials of the participant, signal data from 40 different channels (the first 32 being EEG signals and the remaining 8 being peripheral signals such as temperature and respiration), and 8064 samples per channel (63 s × 128 Hz). As we are not working with emotion in this work, the labels provided for the videos in the DEAP dataset were not used in this experiment. Instead, we retrieved the original videos from the URLs provided and performed an exhaustive classification of them on the studied VDEPs. Of the original 40 videos, 14 URLs pointed to videos that had been taken down from YouTube as of 4 June 2019; therefore, only 26 of the videos used in the original DEAP experiment were retrieved and classified. The generated dataset was updated on 5 May 2021.
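The array layout described above can be illustrated with a short NumPy sketch. It is only an illustration: the function name `split_channels` is hypothetical, and a zero-filled array stands in for a real participant array, so no file format or loading step is assumed.

```python
import numpy as np

FS = 128            # sampling rate in Hz, as stated in the text
N_EEG = 32          # the first 32 channels are EEG; the remaining 8 are peripheral

def split_channels(participant: np.ndarray):
    """Split one participant array (trials x channels x samples) into
    its EEG part and its peripheral part (hypothetical helper)."""
    if participant.shape != (40, 40, 63 * FS):
        raise ValueError(f"unexpected shape {participant.shape}")
    eeg = participant[:, :N_EEG, :]         # shape (40, 32, 8064)
    peripheral = participant[:, N_EEG:, :]  # shape (40, 8, 8064)
    return eeg, peripheral

# Placeholder data with the documented shape (not real recordings).
demo_participant = np.zeros((40, 40, 8064), dtype=np.float32)
demo_eeg, demo_peripheral = split_channels(demo_participant)
print(demo_eeg.shape, demo_peripheral.shape)
```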

2.2. VDEP Tagging and Timestamps Pre-Processing

The researchers retrieved the 26 1-min videos from the original DEAP experiment. These video clips accounted for 1560 1-s timestamps. These videos were presented to two experts on visual design, who were tasked to tag each second of video on the studied VDEPs (Figure A1, Figure A2, Figure A3 and Figure A4), considering the following labels:

- Colour: “cold” (class 1), “warm” (class 2) and “unclear”.

- Balance: “asymmetrical” (class 1), “symmetrical” (class 2) and “unclear”.

- Movement: “fast” (class 1), “slow” (class 2) and “unclear”.

- Light: “bright” (class 1), “dark” (class 2) and “unclear”.

The timestamps tagged as “unclear” or where experts disagreed were discarded. The number of timestamps per class was unbalanced, because the music videos exhibited different visual aesthetics and characteristics among different sections within the same videos; for example, most of the timestamps were tagged as asymmetrical. Therefore, we computed a sub-sample for each VDEP, selecting nv random timestamps from each class, where nv represents the number of cases in the smaller class. The resultant number of timestamps belonging to each class is displayed in Table 1. It is important to notice that all the participants provided the same amount of data points; therefore, each second from Table 1 corresponds to 32 epochs, one for each participant. The four VDEPs are considered independent of each other, so each second of video appears classified only once in each of the four VDEPs, either within one of the two classes or as an unclear timestamp. The VDEP tagging of the selected video samples from the DEAP dataset is available in Appendix A (Table A11).
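The class-balancing step described above amounts to random undersampling of the larger class. The sketch below is one possible way to do it, not the authors' code. The label encoding (0 for discarded timestamps, 1 and 2 for the two classes) and the function name `balanced_subsample` are assumptions made for illustration.

```python
import numpy as np

def balanced_subsample(labels, rng):
    """Return sorted indices that keep n_v random timestamps per class,
    where n_v is the size of the smaller class.
    Assumed encoding: 1 and 2 are the two classes, 0 marks timestamps
    discarded as unclear or because the experts disagreed."""
    labels = np.asarray(labels)
    idx1 = np.flatnonzero(labels == 1)
    idx2 = np.flatnonzero(labels == 2)
    n_v = min(idx1.size, idx2.size)
    keep = np.concatenate([
        rng.choice(idx1, size=n_v, replace=False),
        rng.choice(idx2, size=n_v, replace=False),
    ])
    return np.sort(keep)

rng = np.random.default_rng(0)
tags = np.array([1, 1, 1, 0, 2, 1, 2, 0, 1, 1])  # toy tagging, 10 seconds
kept = balanced_subsample(tags, rng)
print(kept, tags[kept])
```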

Table 1.

The number of video seconds for each VDEP label.

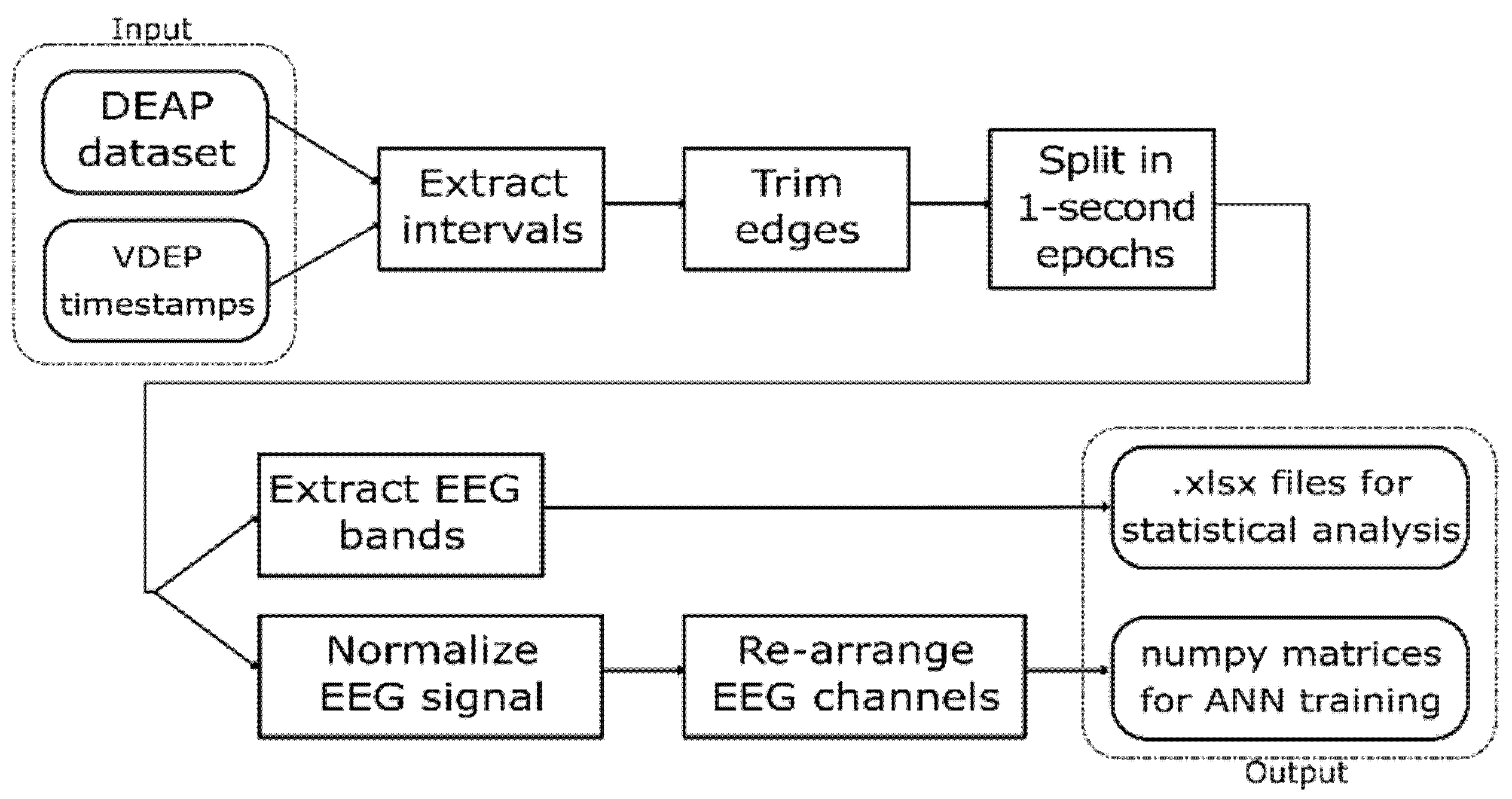

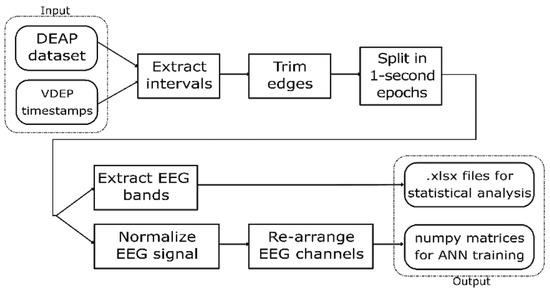

Since the DEAP dataset provides the EEG signal and the experts provided the VDEP timestamps for the videos used in the original experiment, an extraction-transformation process (ETP) was performed. In this process, we obtained a large set of 1-s samples from the DEAP dataset classified according to their VDEPs. The steps of this ETP are shown in Figure 1.

Figure 1.

VDEP extraction-transformation process (ETP) from the DEAP dataset.

This work uses statistical analysis as well as convolutional neural networks to analyse the relationship between VDEPs and the EEG, so we designed the ETP to return the outputs in two different formats, readily available to perform each of these techniques. The first output is a set of spreadsheets containing the EEG bands for the studied samples and their VDEP classes, which are used to find statistical relationships. The second output is a set of NumPy matrices with the shape of 128 × 32, each containing the 32 channels of a second of an EEG signal at 128 Hz and classified by their VDEP class that we used to train and test the artificial neural networks. This ETP was repeated four times, one for each VDEP studied in this work.

The first step of the ETP is the extraction of the intervals from the DEAP dataset according to the VDEP timestamps provided by the experts. In this step, we must take into consideration that the first 3 s of the EEG signal for each video trial in the DEAP dataset corresponds to a pre-trial for calibration purposes and therefore must be ignored when processing the signals. We also discard the channels numbered 33–40 in the DEAP dataset since they do not provide EEG data but peripheral signals, which are out of the scope of this work.
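A minimal sketch of this first step follows. It assumes that timestamps are given as whole video seconds and that an interval is the half-open range from `start_s` to `end_s`. The function name `extract_interval` and the toy trial are illustrative only.

```python
import numpy as np

FS = 128         # Hz
BASELINE_S = 3   # pre-trial seconds at the start of every trial
N_EEG = 32       # channels 1-32 are EEG, channels 33-40 are peripheral

def extract_interval(trial, start_s, end_s):
    """Cut video seconds [start_s, end_s) out of one trial.

    trial: array of shape (40, 8064), channels x samples.
    Returns an array of shape (32, (end_s - start_s) * FS)."""
    first = (BASELINE_S + start_s) * FS   # skip the 3-s pre-trial
    last = (BASELINE_S + end_s) * FS
    return trial[:N_EEG, first:last]      # drop the peripheral channels

# Toy trial whose first row simply counts samples, to make offsets visible.
trial = np.arange(40 * 8064, dtype=np.float64).reshape(40, 8064)
segment = extract_interval(trial, 10, 15)
print(segment.shape)
```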

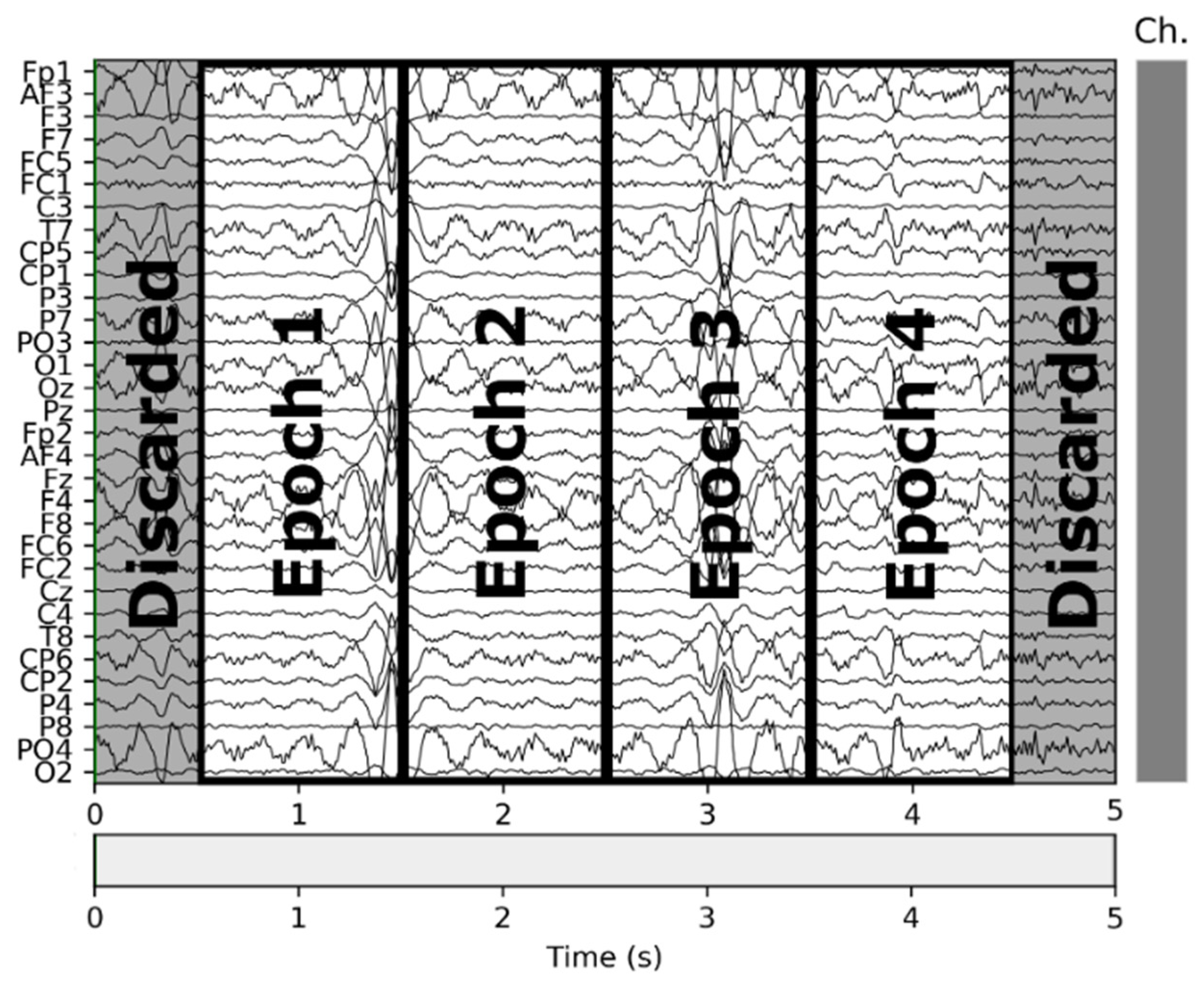

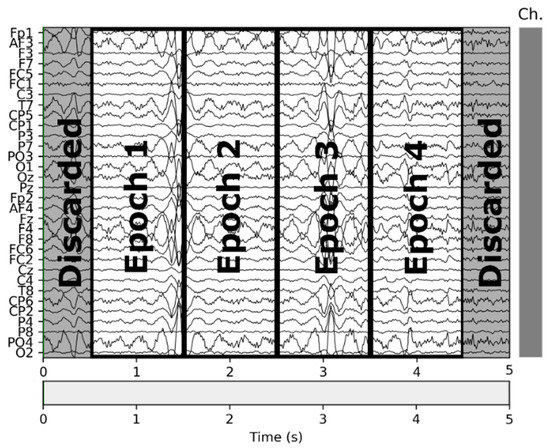

In the second step, we trimmed the first and last 0.5 s of each interval, and in the third step, we split the remainder of the signal into 1-s intervals. Given that the visual design experts tagged the VDEPs in the videos by 1-s segments, removing a full second from each interval (half a second at each end) improves the quality of the remaining samples by reducing the truncation error. An epoch is one second of a subject’s EEG together with the information about the VDEPs being displayed at that time. When extracting epochs, subjects are not considered separately: each subject contributes a series of samples, and the samples of all subjects are pooled into a single set of epochs regardless of the subject they come from. Therefore, from each second of video, we extract 32 epochs (one for each subject). The samples are extracted from the remainder of the interval by taking 1-s samples; this approach mitigates the effects of the perception time, which is the delay between the occurrence of a stimulus and its perception by the brain [64]. In Figure 2 we show the resulting samples obtained from a 5-s interval classified in the same class.
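The trimming and splitting steps can be sketched as follows. Under the description above, a 5-s interval yields four 1-s epochs once half a second is removed from each end. The function name `interval_to_epochs` is hypothetical, and random numbers stand in for EEG data.

```python
import numpy as np

FS = 128
TRIM = FS // 2   # 0.5 s = 64 samples removed at each end

def interval_to_epochs(interval):
    """Trim 0.5 s from both ends and split the rest into 1-s epochs.

    interval: (32, n_seconds * FS), channels x samples.
    Returns (n_seconds - 1, FS, 32), i.e. one 128 x 32 matrix per epoch."""
    core = interval[:, TRIM:interval.shape[1] - TRIM]
    n_epochs = core.shape[1] // FS
    core = core[:, :n_epochs * FS]
    # (32, n_epochs, 128) -> (n_epochs, 128, 32)
    return core.reshape(core.shape[0], n_epochs, FS).transpose(1, 2, 0)

interval = np.random.default_rng(0).normal(size=(32, 5 * FS))
epochs = interval_to_epochs(interval)
print(epochs.shape)
```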

Figure 2.

Epochs obtained from a 5-s segment.

These samples can be represented as (128 × 32) matrices and can be used to obtain EEG characteristics such as the delta, theta, alpha, beta, and gamma bands that we used in our statistical analysis. In our case, we used a basic FFT (Fast Fourier Transform) filtering function to extract the time-domain waveform of each band [65].
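As a rough illustration of band-level features, the sketch below computes the mean spectral power per band directly from the FFT of a 128 × 32 epoch, averaged across channels. This is a simplification that replaces the FFT filtering function mentioned above, not a reproduction of it. The band edges in `BANDS` are common conventions assumed here, since the text does not state them.

```python
import numpy as np

FS = 128
# Assumed band edges in Hz (not given in the text).
BANDS = {
    "delta": (1, 4),
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 30),
    "gamma": (30, 45),
}

def band_powers(epoch):
    """Mean spectral power per band, averaged over bins and channels.

    epoch: (128, 32) array, time x channels."""
    n = epoch.shape[0]
    freqs = np.fft.rfftfreq(n, d=1.0 / FS)              # 0, 1, ..., 64 Hz
    power = np.abs(np.fft.rfft(epoch, axis=0)) ** 2 / n
    result = {}
    for name, (lo, hi) in BANDS.items():
        mask = (freqs >= lo) & (freqs < hi)
        result[name] = float(power[mask].mean())
    return result

# Synthetic check: a pure 10 Hz sine on every channel falls in the alpha band.
t = np.arange(FS) / FS
sine_epoch = np.tile(np.sin(2 * np.pi * 10 * t)[:, None], (1, 32))
powers = band_powers(sine_epoch)
print(max(powers, key=powers.get))
```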

The second output was used to train artificial neural networks (ANN), so in the ETP we normalized the values of the samples from their original values to the [0, 1] interval by using Equation (1), as normalized inputs have been shown to speed up the learning of convolutional neural networks [66].
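Equation (1) is not reproduced in this text. A common way of mapping values to the [0, 1] interval is min-max scaling, which is shown here as an assumption rather than as the confirmed form of Equation (1). The symbols x, x_min, and x_max are introduced for illustration, and whether the minimum and maximum are taken per sample or over the whole dataset is not stated in the text.

```latex
x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}, \qquad x' \in [0, 1]
```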

2.3. Convolutional Neural Network (CNN)

In this work, we used a generic CNN architecture typically used in image analysis, consisting of a sequence of two-dimensional convolutional layers followed by a fully connected layer with sigmoid activation. The same network architecture was used for each of the VDEPs, and the neural network was trained using the previously extracted epochs. Given that the epochs do not record the subject from which they were extracted, all the subjects can be considered to have been fed equally into the model. The model was trained by randomly selecting 90% of the samples for training and 10% for testing in a non-exhaustive 10-fold cross-validation execution. The area under the ROC curve (AUC), accuracy, and area under the precision-recall curve (PR-AUC) were computed on the validation set after each execution.
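One reading of the non-exhaustive cross-validation described above is repeated random sub-sampling: ten independent random 90%/10% splits of the pooled epochs. The sketch below illustrates that reading only. The function name `random_holdout_splits` and the seed are assumptions, and the actual splitting code used in the study is not given in the text.

```python
import numpy as np

def random_holdout_splits(n_samples, n_runs=10, test_fraction=0.1, seed=0):
    """Yield (train_idx, test_idx) pairs from independent random splits."""
    rng = np.random.default_rng(seed)
    n_test = int(round(n_samples * test_fraction))
    for _ in range(n_runs):
        order = rng.permutation(n_samples)
        yield order[n_test:], order[:n_test]

splits = list(random_holdout_splits(1000))
for train_idx, test_idx in splits[:2]:
    print(len(train_idx), len(test_idx))
```

With this scheme a model would be trained once per split and the metrics averaged over the ten runs.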

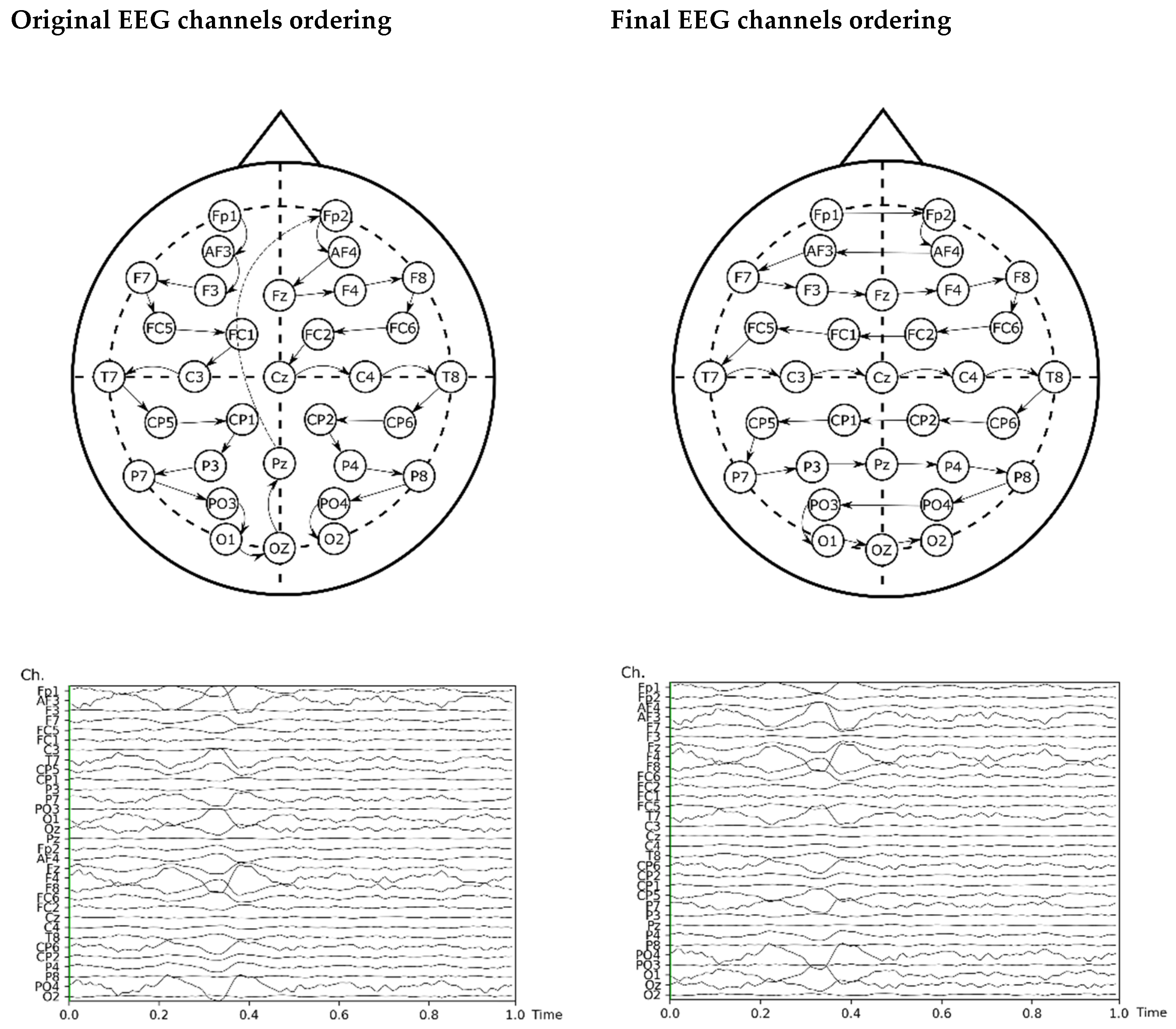

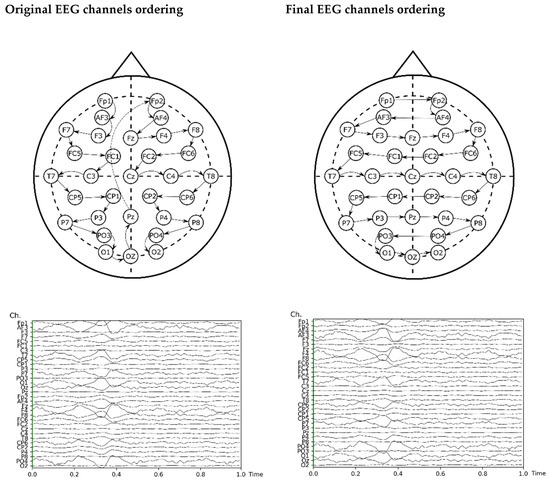

The EEG channels in the model input were re-arranged by proximity so that neighbouring channels in the input matrix carry related information, which helps convolutional neural networks to learn more effectively [67]. The original ordering of the channels and the final order are shown in Figure 3, including a plot of the input before and after this re-ordering.
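In array terms, re-arranging the channels is a column permutation of each 128 × 32 epoch. The sketch below shows the mechanics only: the permutation `demo_order` is a placeholder (a simple reversal), not the ordering shown in Figure 3, and the function name `reorder_channels` is hypothetical.

```python
import numpy as np

def reorder_channels(epoch, order):
    """Permute the channel axis of a (time x channels) epoch."""
    order = np.asarray(order)
    if sorted(order.tolist()) != list(range(epoch.shape[1])):
        raise ValueError("order must be a permutation of the channel indices")
    return epoch[:, order]

# HYPOTHETICAL permutation for demonstration; the real order is in Figure 3.
demo_order = np.arange(32)[::-1]
demo_epoch = np.arange(128 * 32).reshape(128, 32)
reordered = reorder_channels(demo_epoch, demo_order)
print(reordered.shape)
```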

Figure 3.

Re-arrangement of the EEG channels for the ANN analysis.

3. Results

We tested the normality of the distribution of the mean power measurements across all channels (δ, θ, α, β, and γ) using the Kolmogorov-Smirnov test with the Lilliefors Significance Correction. Then, we conducted Mann-Whitney U tests to examine the differences in each of the mean power measurements between the categories of each VDEP, e.g., the differences in the Alpha band between the Symmetrical and Asymmetrical categories for the Balance VDEP. The Mann-Whitney U test (also known as the Wilcoxon rank-sum test) was chosen to test the null hypothesis that there is a probability of 0.5 that a randomly drawn observation from one group is larger than a randomly drawn observation from the other.
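To make the null hypothesis concrete, the sketch below computes the U statistic from average ranks, the estimated probability that an observation from the first group exceeds one from the second, and a two-sided p-value from the normal approximation. It is a teaching sketch in plain NumPy with no tie or continuity correction, so a statistics package would normally be preferred in practice. The function names and the toy samples are illustrative.

```python
import math
import numpy as np

def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    values = np.asarray(values, dtype=float).ravel()
    _, inverse, counts = np.unique(values, return_inverse=True, return_counts=True)
    last = np.cumsum(counts)      # last 1-based position of each distinct value
    first = last - counts + 1     # first 1-based position of each distinct value
    return ((first + last) / 2.0)[inverse]

def mann_whitney_u(a, b):
    """Return (U for group a, estimated P(a > b), two-sided p-value)."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    n1, n2 = a.size, b.size
    ranks = average_ranks(np.concatenate([a, b]))
    u1 = ranks[:n1].sum() - n1 * (n1 + 1) / 2.0
    prob = u1 / (n1 * n2)         # 0.5 under the null hypothesis
    mean_u = n1 * n2 / 2.0
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)   # no tie correction
    z = (u1 - mean_u) / sd_u
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return u1, prob, p_value

u_stat, prob_greater, p_val = mann_whitney_u([5, 6, 7, 8], [1, 2, 3, 4])
print(u_stat, prob_greater, round(p_val, 4))
```

The estimated probability also serves as an effect size: a value close to 0.5 indicates heavily overlapping groups even when a large sample makes the p-value small.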

The Kolmogorov-Smirnov test showed that the mean δ, θ, α, β, and γ band measurements of the EEGs provided in the DEAP dataset were not normally distributed, with p < 0.0001 in all cases, as shown in Table A1.

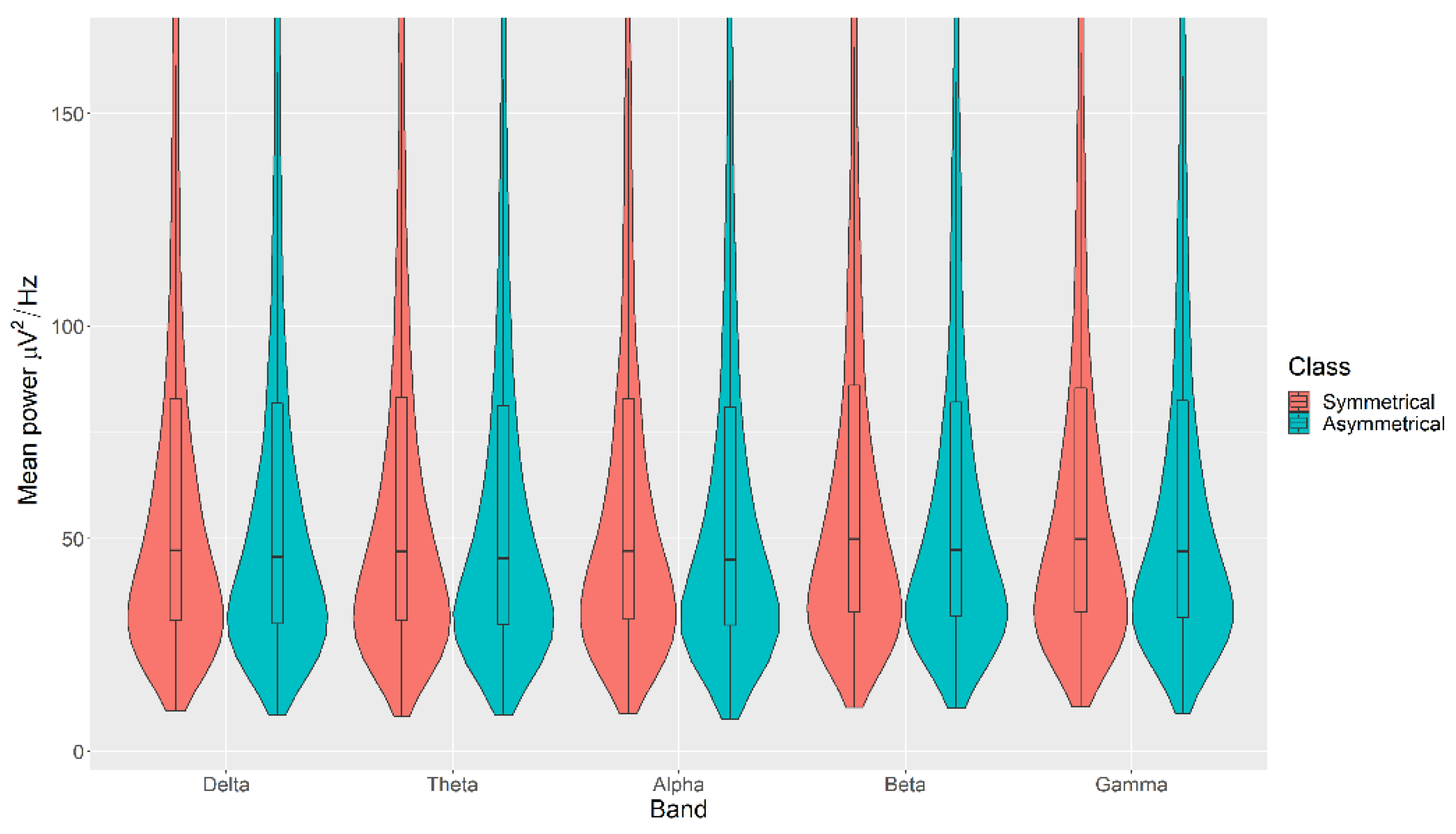

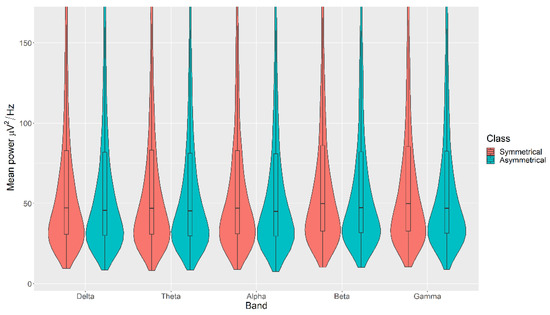

3.1. Balance

The mean power measurements across all channels were statistically significantly higher in the Symmetrical category than the Asymmetrical category for the Balance, with p < 0.05 in all cases (Figure 4). The details of the ranks and statistical average of the mean band measurements for the Balance are listed in Table A2. The full Test Statistics for Mann-Whitney U Tests conducted on the Balance are listed in Table A7.

Figure 4.

Violin plot of the average power in each of the bands for Symmetrical and Asymmetrical categories for the Balance VDEP, ranging from low (δ delta) to high (γ gamma) frequencies.

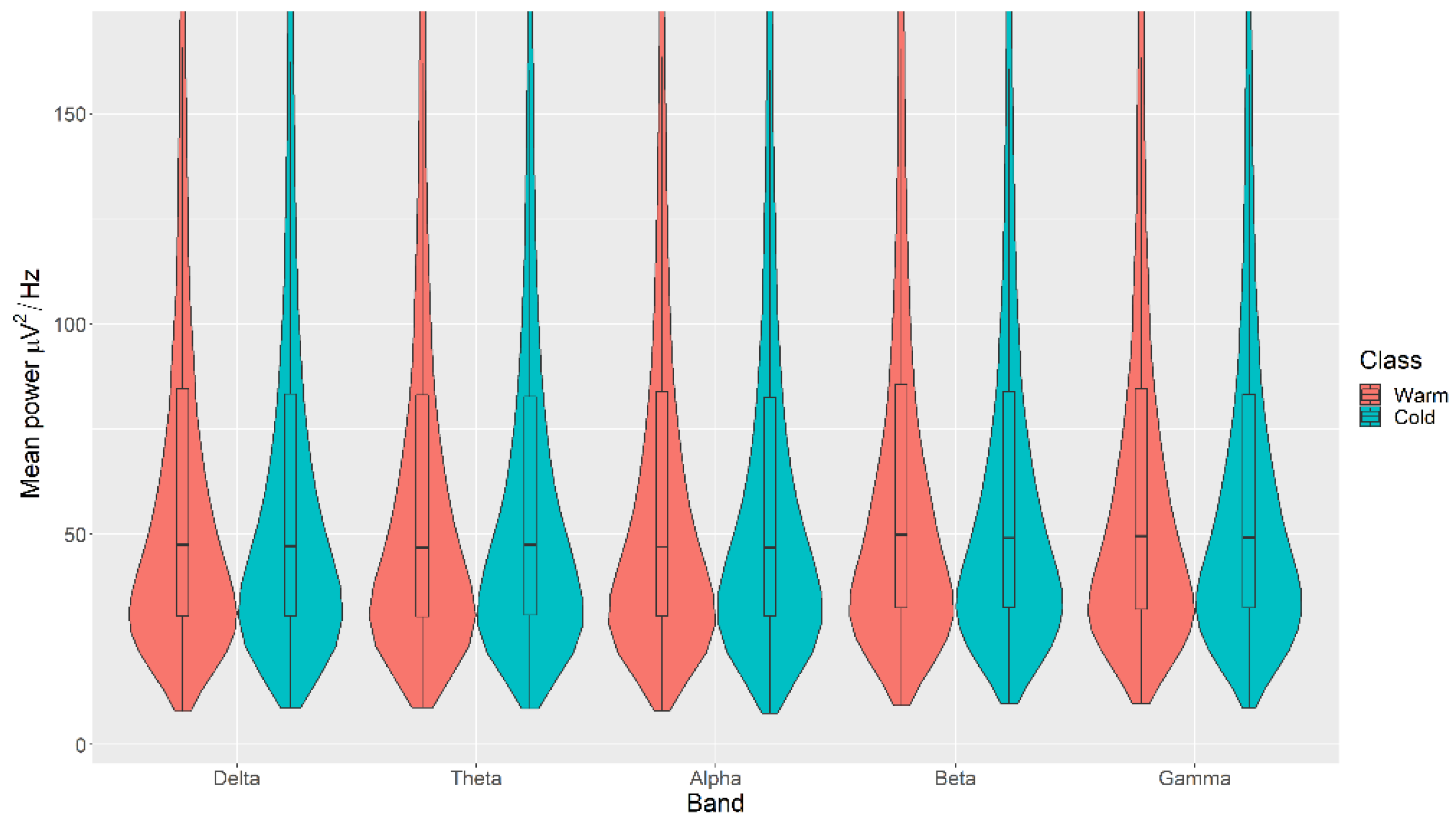

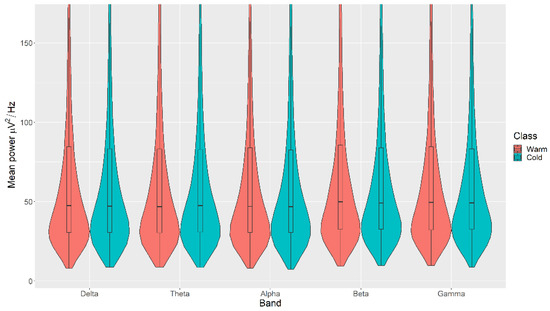

3.2. Colour

There were no statistically significant differences in the mean power measurements across all channels (δ, θ, α, β, and γ) between the Warm and Cold categories for the Colour, with p > 0.05 in all cases (Figure 5). The details of the ranks and statistical average of the mean power measurements for the Colour are listed in Table A3. The full Test Statistics for Mann-Whitney U Tests conducted on the Colour are listed in Table A8.

Figure 5.

Violin plot of the average power in each of the bands for Warm and Cold categories for the Colour VDEP, ranging from low (δ delta) to high (γ gamma) frequencies.

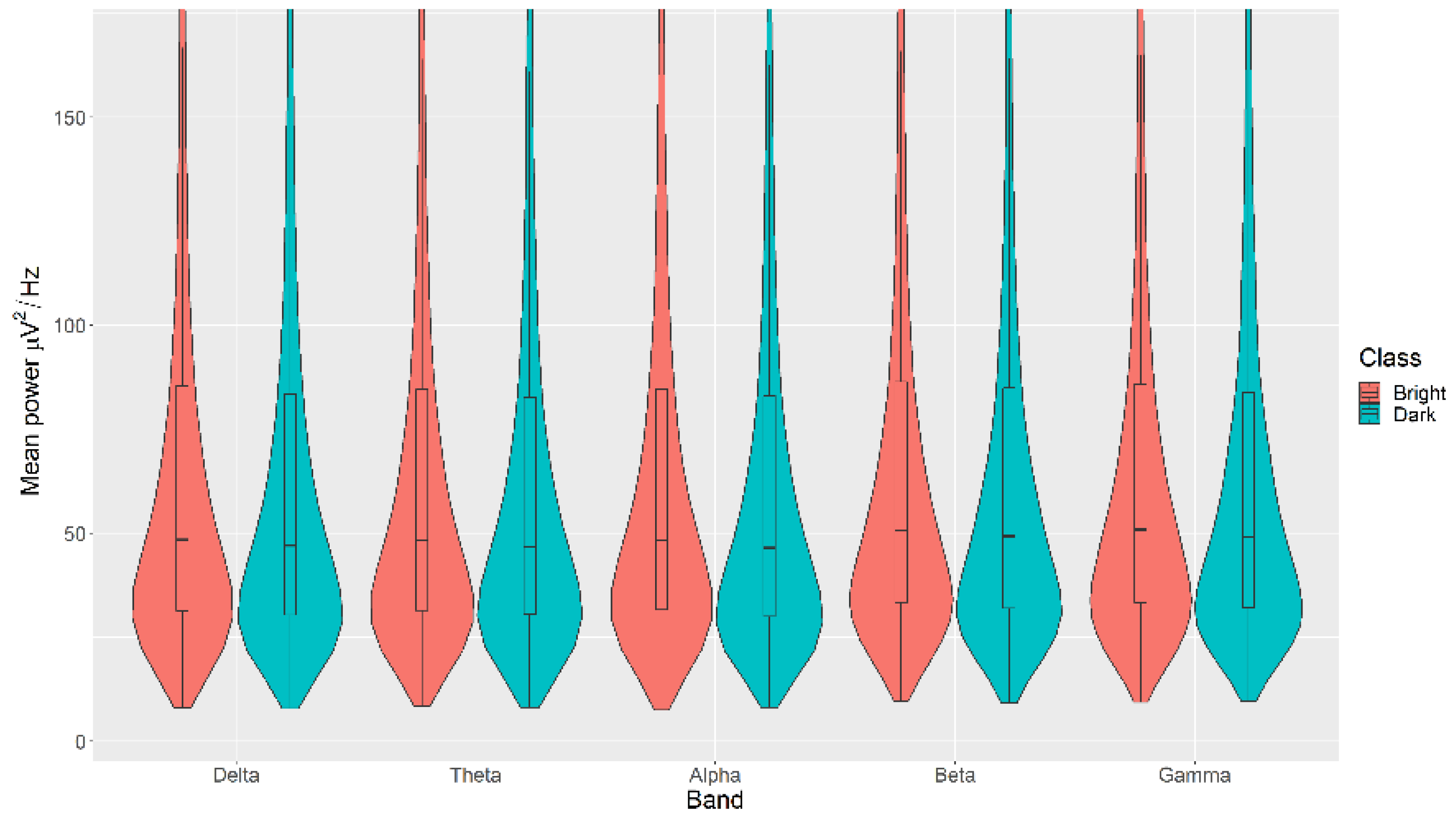

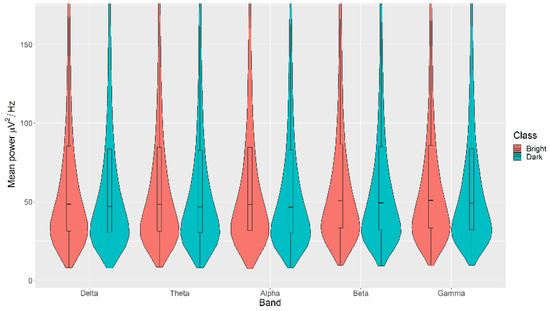

3.3. Light

All the mean power measurements across all channels (δ, θ, α, β, and γ) were statistically significantly higher in the Bright category than the Dark category for the Light, with p < 0.05 in all cases (Figure 6). The details of the ranks and statistical average of the mean power measurements for the Light are listed in Table A4. The full Test Statistics for Mann-Whitney U Tests conducted on the Light are listed in Table A9.

Figure 6.

Violin plot of the average power in each of the bands for Bright and Dark categories for the Light VDEP, ranging from low (δ delta) to high (γ gamma) frequencies.

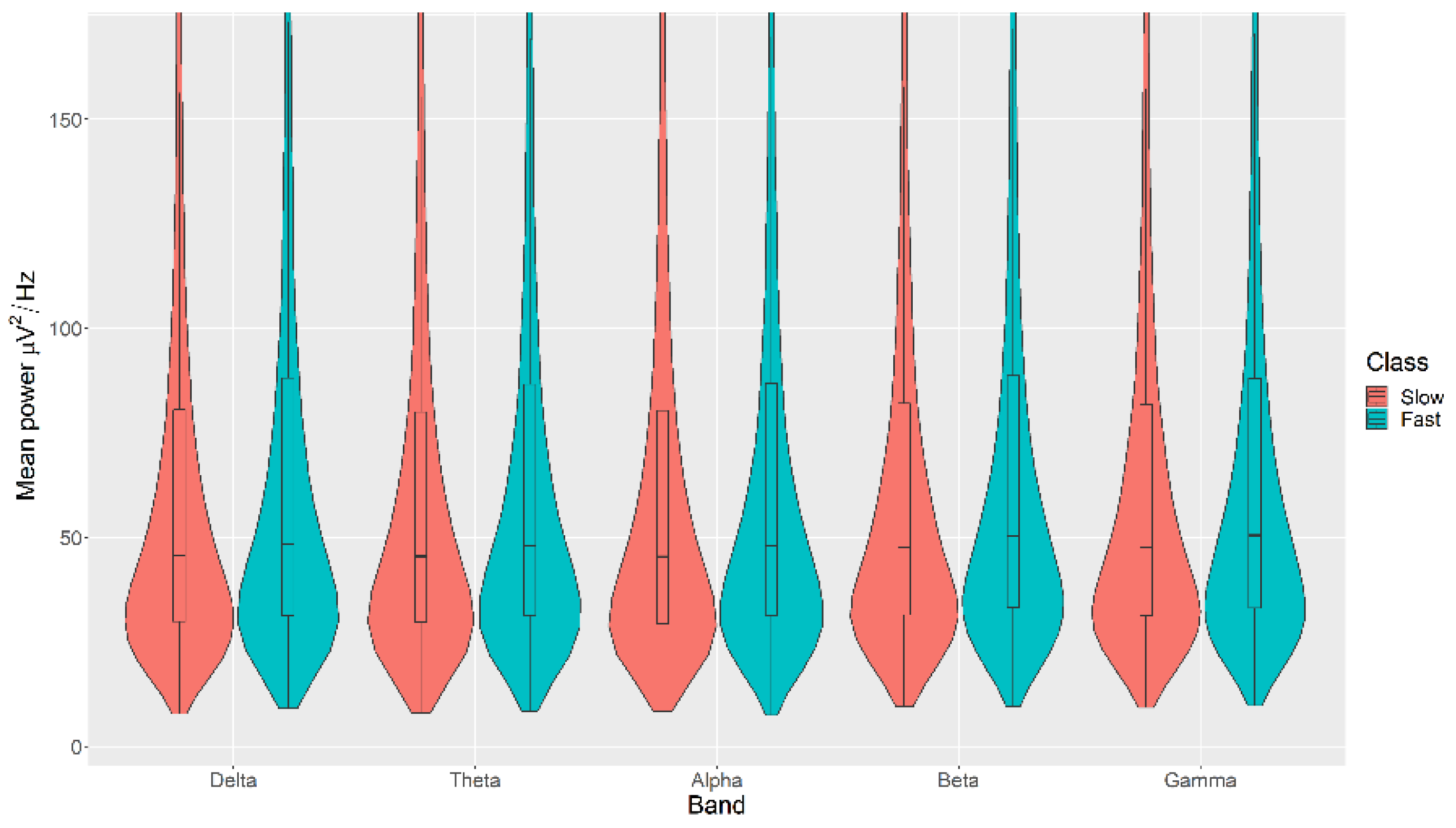

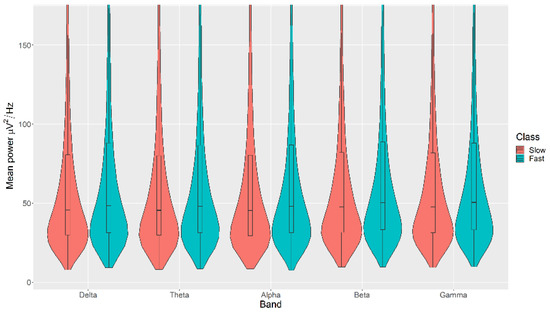

3.4. Movement

All the mean power measurements across all channels (δ, θ, α, β, and γ) were statistically significantly higher in the Fast category than the Slow category for the Movement, with p < 0.05 in all cases (Figure 7). The details of the ranks and statistical average of the mean power measurements for the Movement are listed in Table A5. The full Test Statistics for Mann-Whitney U Tests conducted on the Movement are listed in Table A10.

Figure 7.

Violin plot of the average power in each of the bands for Slow and Fast categories for the Movement VDEP, ranging from low (δ delta) to high (γ gamma) frequencies.

3.5. Convolutional Neural Networks (CNN)

All the VDEP targets were accurately predicted from the EEG signal using a simple CNN classification model trained using a non-exhaustive 10-fold cross-validation approach. The performance metrics were obtained from each training and the averages were computed. It is important to notice that we trained 40 independent models, 10 for each VDEP, which shared the same base structure (see Table A6). The prediction of Movement was the most accurate among the studied VDEPs (AUC = 0.9698, Accuracy = 0.9675, PR-AUC = 0.9569). On the other hand, the corresponding trained model struggled to predict the Colour VDEP from the EEG signal input (AUC = 0.7584, Accuracy = 0.7447, PR-AUC = 0.6940). A summary of the classification performance for each VDEP is displayed in Table 2.

Table 2.

Performance metrics of the CNN classification model for each of the target VDEPs.

4. Discussion

The VDEPs are responsible for capturing our attention, persuading us, informing us, and engaging a link with the visual information represented. The answer to what makes a good design and how you can create visual materials that stand out is in the proper use of VDEPs [9].

Past research has mostly focused on the VDEPs' capability of causing specific emotions and on how to activate the desired response from the spectator [33,68,69]. A severe limitation of such research has been its inability to boost the classification accuracy of various visual stimuli that are inferred to VDEPs and their impact on human brain activity. Another limitation of previous research has been its inability to demonstrate which cues related to the VDEPs and EEG are most correlated with human brain activity, and the difficulty of revealing the VDEPs that are involved in and condition how visual content is perceived.

We sought to address these limitations to understand the relationships between multimedia content itself with users’ physiological responses (EEG) to such content by analysing the VDEPs and their impact on human brain activity.

The results of this study suggest that variations in the light/value (Accuracy 0.90/Loss 0.23), rhythm/movement (Accuracy 0.95/Loss 0.13) and balance (Accuracy 0.81/Loss 0.76) VDEPs in the music video sequences produce a statistically significant effect over the δ, θ, α, β and γ EEG bands, and Colour is the VDEP that has produced the least variation in human brain activity (Accuracy 0.79/Loss 0.50). The CNN model successfully predicts the VDEP of a video fragment solely from the EEG signal of the viewer.

The violin plots confirm that the distributions are practically equal between classes of the same VDEP, and that the significance of the p-values stems from the large number of samples. We find significant differences in some bands for some VDEPs, but these are minor, as the distributions show. This could be expected, since we are simply analysing power in classical a priori defined bands. The CNN results, however, are more remarkable. The fact that we obtain high classification scores with the CNN despite the similarity of the calculated powers in those bands suggests, for future work, that the relationship between VDEPs and EEG is more complex than simple power changes in predetermined frequency bands.

The results show that human brain activity is more susceptible to sudden changes in the visualization of movement, light, or balance in music video sequences than to colour variations. Reddish tones transmit heat, just as blood is hot and fire burns. Bluish brushstrokes are associated with cold and lightness [70]. These are the colours with which the mind paints, effortlessly and from childhood, water, rain, or a bubble. Different studies support the results obtained on these associations, which we can consider universal [68] and which are directly involved in the design of VDEPs. However, divergences appear when it comes to distinguishing between smooth and rough, male or female, soft or rigid, aggressive or calm, for example. Moreover, people disagree more, depending on the results, when judging whether the audio-visual content is interesting, and whether they like it or not.

Brain activity may be slightly altered by changes in the design of different VDEPs such as colour, brightness, or lighting. However, it may be more severely altered when one visualizes sudden changes in movement and course of action. A possible explanation for the results obtained is that most of the basic processes of perception are part of the intrinsic neuronal architecture: human beings are more accustomed to this type of modification in the action being visualized and, within this context, are more susceptible to sudden and abrupt changes in the action observed, which distort and alter brain activity, as has been demonstrated in various studies [69]. Most of the difficulties that arise in studies of this type stem from the particularities of the individual. The same person, depending on their perceptual state, will observe the same scene differently, given the very wide range of experiences and individual variances [71,72,73,74,75].

Several limitations affect this study. It is important to notice that to the best of our knowledge, there is currently no established ontology for VDEPs. Although the identification of four VDEPs has been sufficient to find and determine the physiological relationships (EEG) and the VDEPs, it would be very interesting if we had a system to organize this knowledge and allow us to construct the architecture of neural networks focused and customized to specific VDEPs, which would permit us to improve accuracy and reduce loss, instead of working with generic networks.

Therefore, we consider that further research in this area should pursue the development of ontologies that structure the knowledge related to the design, recognition, labelling, filtering, and classification of VDEPs, to improve and optimize their use in information systems, expert systems, and decision support systems. Moreover, this study should be extended to the effects of other VDEP combinations on brain activity. Future research should also investigate multiple VDEPs in the EEG of each individual to study the relationships and interactions between different VDEPs. Furthermore, we recommend analysing the VDEPs separately in each EEG channel to understand how the synergies between various VDEPs affect visual perception and visual attention. Finally, future investigations could explore VDEPs through other models, such as generative ones, to visualize the topographical distribution of the EEG channels for each VDEP.

5. Conclusions

This study found evidence of a physiological link between VDEPs and human brain activity. We found that the VDEPs expressed in music video sequences are related to statistically significant differences in the average power of the classical EEG bands of the viewers. Furthermore, a CNN classifier tasked with identifying the VDEP class from the EEG signals achieved accuracies of 90.44%, 79.67%, 81.25%, and 95.29% for the Light, Colour, Balance, and Movement VDEPs, respectively. The results suggest that the relationship between the VDEPs and brain activity is more complex than simple changes in the EEG band power.

Author Contributions

Conceptualization, F.E.C., P.S.-N. and G.V.; Data curation, F.E.C. and G.V.; Formal analysis, F.E.C., P.S.-N. and G.V.; Funding acquisition, J.I.P. and J.E.; Investigation, F.E.C., P.S.-N., G.V. and J.E.; Methodology, F.E.C., P.S.-N. and G.V.; Project administration, J.I.P. and J.E.; Resources, F.E.C. and G.V.; Software, F.E.C. and G.V.; Supervision, J.I.P. and J.E.; Validation, F.E.C., P.S.-N., G.V., J.I.P. and J.E.; Visualization, F.E.C., P.S.-N. and G.V.; Writing—original draft, F.E.C., P.S.-N. and G.V.; Writing—review & editing, F.E.C., P.S.-N., G.V., J.I.P. and J.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by On the Move, an international mobility programme organized by the Society of Spanish Researchers in the United Kingdom (SRUK) and CRUE Universidades Españolas. The Article Processing Charge (APC) was funded by the Programa Operativo Fondo Europeo de Desarrollo Regional (FEDER) Andalucía 2014–2020 under Grant UMA 18-FEDERJA-148 and Plan Nacional de I+D+i del Ministerio de Ciencia e Innovación-Gobierno de España (2021-2024) under Grant PID2020-115673RB-100.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank the research groups for collecting and disseminating the DEAP dataset used in this research and for granting us access to the dataset.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A

Table A1.

Test of normality of the measurements of the mean Alpha, Beta, Delta, Gamma, and Theta bands, measured in the EEG records related to the Balance, Colour, Light, and Movement VDEPs.

| VDEP | Band | Category | Kolmogorov-Smirnov Statistic | df | Sig. |
|---|---|---|---|---|---|
| Balance | Alpha | Symmetrical | 0.276 | 6528 | <0.001 |
| | | Asymmetrical | 0.295 | 6528 | <0.001 |
| | Beta | Symmetrical | 0.252 | 6528 | <0.001 |
| | | Asymmetrical | 0.262 | 6528 | <0.001 |
| | Delta | Symmetrical | 0.264 | 6528 | <0.001 |
| | | Asymmetrical | 0.282 | 6528 | <0.001 |
| | Gamma | Symmetrical | 0.237 | 6528 | <0.001 |
| | | Asymmetrical | 0.280 | 6528 | <0.001 |
| | Theta | Symmetrical | 0.274 | 6528 | <0.001 |
| | | Asymmetrical | 0.289 | 6528 | <0.001 |
| Colour | Alpha | Warm | 0.291 | 15,296 | <0.001 |
| | | Cold | 0.280 | 15,296 | <0.001 |
| | Beta | Warm | 0.265 | 15,296 | <0.001 |
| | | Cold | 0.263 | 15,296 | <0.001 |
| | Delta | Warm | 0.278 | 15,296 | <0.001 |
| | | Cold | 0.282 | 15,296 | <0.001 |
| | Gamma | Warm | 0.266 | 15,296 | <0.001 |
| | | Cold | 0.264 | 15,296 | <0.001 |
| | Theta | Warm | 0.292 | 15,296 | <0.001 |
| | | Cold | 0.281 | 15,296 | <0.001 |
| Light | Alpha | Bright | 0.269 | 20,320 | <0.001 |
| | | Dark | 0.286 | 20,320 | <0.001 |
| | Beta | Bright | 0.256 | 20,320 | <0.001 |
| | | Dark | 0.263 | 20,320 | <0.001 |
| | Delta | Bright | 0.276 | 20,320 | <0.001 |
| | | Dark | 0.282 | 20,320 | <0.001 |
| | Gamma | Bright | 0.258 | 20,320 | <0.001 |
| | | Dark | 0.266 | 20,320 | <0.001 |
| | Theta | Bright | 0.279 | 20,320 | <0.001 |
| | | Dark | 0.288 | 20,320 | <0.001 |
| Movement | Alpha | Slow | 0.282 | 16,480 | <0.001 |
| | | Fast | 0.273 | 16,480 | <0.001 |
| | Beta | Slow | 0.265 | 16,480 | <0.001 |
| | | Fast | 0.258 | 16,480 | <0.001 |
| | Delta | Slow | 0.272 | 16,480 | <0.001 |
| | | Fast | 0.277 | 16,480 | <0.001 |
| | Gamma | Slow | 0.265 | 16,480 | <0.001 |
| | | Fast | 0.264 | 16,480 | <0.001 |
| | Theta | Slow | 0.284 | 16,480 | <0.001 |
| | | Fast | 0.285 | 16,480 | <0.001 |
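The statistic reported in Table A1 is the largest gap between the empirical distribution of a sample and a normal distribution fitted to it. The following is a minimal sketch of that computation in plain Python. It uses synthetic samples (an exponential one standing in for right-skewed band power, and a Gaussian one for comparison), not the DEAP measurements, and it does not compute the significance value. The function names are illustrative assumptions.

```python
import math
import random
import statistics


def normal_cdf(x, mu, sigma):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def ks_statistic_vs_normal(sample):
    """Kolmogorov-Smirnov distance between a sample and a normal
    distribution whose mean and standard deviation are estimated
    from that same sample."""
    xs = sorted(sample)
    n = len(xs)
    mu = statistics.fmean(xs)
    sigma = statistics.stdev(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = normal_cdf(x, mu, sigma)
        # The empirical CDF jumps from (i - 1) / n to i / n at x.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


rng = random.Random(0)  # fixed seed so the sketch is repeatable
skewed = [rng.expovariate(1.0) for _ in range(2000)]   # synthetic, right-skewed
gaussian = [rng.gauss(0.0, 1.0) for _ in range(2000)]  # synthetic, normal

d_skewed = ks_statistic_vs_normal(skewed)
d_gaussian = ks_statistic_vs_normal(gaussian)
print(f"skewed sample:   D = {d_skewed:.3f}")
print(f"gaussian sample: D = {d_gaussian:.3f}")
```

A large distance, as for the skewed sample here, indicates a departure from normality, which is the usual reason for preferring a rank-based test such as the Mann-Whitney U test over a parametric comparison of means.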

Table A2.

Ranks of the mean band measurements related to the Symmetrical and Asymmetrical categories for the Balance VDEP.

| Band | Category | N | Mean Rank | Sum of Ranks |
|---|---|---|---|---|
| α (alpha) | Symmetrical | 6528 | 6625.26 | 43,249,723.00 |
| | Asymmetrical | 6528 | 6431.74 | 41,986,373.00 |
| | Total | 13,056 | | |
| β (beta) | Symmetrical | 6528 | 6628.52 | 43,270,953.00 |
| | Asymmetrical | 6528 | 6428.48 | 41,965,143.00 |
| | Total | 13,056 | | |
| δ (delta) | Symmetrical | 6528 | 6599.36 | 43,080,616.00 |
| | Asymmetrical | 6528 | 6457.64 | 42,155,480.00 |
| | Total | 13,056 | | |
| γ (gamma) | Symmetrical | 6528 | 6641.20 | 43,353,771.00 |
| | Asymmetrical | 6528 | 6415.80 | 41,882,325.00 |
| | Total | 13,056 | | |
| θ (theta) | Symmetrical | 6528 | 6596.48 | 43,061,834.00 |
| | Asymmetrical | 6528 | 6460.52 | 42,174,262.00 |
| | Total | 13,056 | | |

Table A3.

Ranks of the mean band measurements related to the Warm and Cold categories for the Colour VDEP.

| Band | Category | N | Mean Rank | Sum of Ranks |
|---|---|---|---|---|
| α (alpha) | Warm | 15,296 | 15,333.69 | 234,544,166.00 |
| | Cold | 15,296 | 15,259.31 | 233,406,362.00 |
| | Total | 30,592 | | |
| β (beta) | Warm | 15,296 | 15,345.02 | 234,717,493.00 |
| | Cold | 15,296 | 15,247.98 | 233,233,035.00 |
| | Total | 30,592 | | |
| δ (delta) | Warm | 15,296 | 15,319.84 | 234,332,287.00 |
| | Cold | 15,296 | 15,273.16 | 233,618,241.00 |
| | Total | 30,592 | | |
| γ (gamma) | Warm | 15,296 | 15,326.88 | 234,439,886.00 |
| | Cold | 15,296 | 15,266.12 | 233,510,642.00 |
| | Total | 30,592 | | |
| θ (theta) | Warm | 15,296 | 15,276.85 | 233,674,686.00 |
| | Cold | 15,296 | 15,316.15 | 234,275,842.00 |
| | Total | 30,592 | | |

Table A4.

Ranks of the mean band measurements related to the Bright and Dark categories for the Light VDEP.

| Band | Category | N | Mean Rank | Sum of Ranks |
|---|---|---|---|---|
| α (alpha) | Bright | 20,320 | 20,597.97 | 418,550,732.00 |
| | Dark | 20,320 | 20,043.03 | 407,274,388.00 |
| | Total | 40,640 | | |
| β (beta) | Bright | 20,320 | 20,567.33 | 417,928,061.50 |
| | Dark | 20,320 | 20,073.67 | 407,897,058.50 |
| | Total | 40,640 | | |
| δ (delta) | Bright | 20,320 | 20,532.43 | 417,218,904.00 |
| | Dark | 20,320 | 20,108.57 | 408,606,216.00 |
| | Total | 40,640 | | |
| γ (gamma) | Bright | 20,320 | 20,570.53 | 417,993,114.00 |
| | Dark | 20,320 | 20,070.47 | 407,832,006.00 |
| | Total | 40,640 | | |
| θ (theta) | Bright | 20,320 | 20,553.28 | 417,642,687.00 |
| | Dark | 20,320 | 20,087.72 | 408,182,433.00 |
| | Total | 40,640 | | |

Table A5.

Ranks of the mean band measurements related to the Slow and Fast categories for the Movement VDEP.

| Band | Category | N | Mean Rank | Sum of Ranks |
|---|---|---|---|---|
| α (alpha) | Slow | 16,480 | 16,084.77 | 265,076,976.00 |
| | Fast | 16,480 | 16,876.23 | 278,120,304.00 |
| | Total | 32,960 | | |
| β (beta) | Slow | 16,480 | 16,066.68 | 264,778,816.00 |
| | Fast | 16,480 | 16,894.32 | 278,418,464.00 |
| | Total | 32,960 | | |
| δ (delta) | Slow | 16,480 | 16,079.20 | 264,985,174.00 |
| | Fast | 16,480 | 16,881.80 | 278,212,106.00 |
| | Total | 32,960 | | |
| γ (gamma) | Slow | 16,480 | 16,081.66 | 265,025,729.00 |
| | Fast | 16,480 | 16,879.34 | 278,171,551.00 |
| | Total | 32,960 | | |
| θ (theta) | Slow | 16,480 | 16,087.10 | 265,115,447.00 |
| | Fast | 16,480 | 16,873.90 | 278,081,833.00 |
| | Total | 32,960 | | |

Table A6.

Convolutional Neural Networks classifier model architecture.

| Layer (type) | Output Shape | Param # |
|---|---|---|
| Convolutional 2D | (None, 126, 30, 32) | 320 |
| Convolutional 2D | (None, 124, 28, 32) | 9248 |
| Batch Normalization | (None, 124, 28, 32) | 128 |
| Dropout | (None, 124, 28, 32) | 0 |
| Convolutional 2D | (None, 122, 26, 64) | 18,496 |
| Convolutional 2D | (None, 120, 24, 64) | 36,928 |
| Batch Normalization | (None, 120, 24, 64) | 256 |
| Dropout | (None, 120, 24, 64) | 0 |
| Max Pooling 2D | (None, 60, 12, 64) | 0 |
| Convolutional 2D | (None, 58, 10, 64) | 36,928 |
| Convolutional 2D | (None, 56, 8, 64) | 36,928 |
| Batch Normalization | (None, 56, 8, 64) | 256 |
| Dropout | (None, 56, 8, 64) | 0 |
| Convolutional 2D | (None, 54, 6, 128) | 73,856 |
| Convolutional 2D | (None, 52, 4, 128) | 147,584 |
| Batch Normalization | (None, 52, 4, 128) | 512 |
| Dropout | (None, 52, 4, 128) | 0 |
| Max Pooling 2D | (None, 26, 2, 128) | 0 |
| Flatten | (None, 6656) | 0 |
| Dense | (None, 16) | 106,512 |
| Dense | (None, 2) | 34 |
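The output shapes and parameter counts in Table A6 can be checked with simple arithmetic. The sketch below recomputes them layer by layer in plain Python. The input size (128 × 32 × 1), the 3 × 3 kernels with stride 1 and no padding, and the 2 × 2 pooling windows are assumptions inferred from the shapes and counts in the table; the table itself does not state them. All function names are illustrative.

```python
# Sketch: recompute the rows of Table A6 from assumed layer settings.
# Assumptions (inferred, not stated in the table): input 128 x 32 x 1,
# 3 x 3 kernels, stride 1, no padding, 2 x 2 max pooling.

def conv2d(shape, filters, kernel=3):
    height, width, channels = shape
    params = (kernel * kernel * channels + 1) * filters  # weights plus biases
    return (height - kernel + 1, width - kernel + 1, filters), params


def batch_norm(shape):
    # Four values per channel: scale, offset, moving mean, moving variance.
    return shape, 4 * shape[2]


def max_pool(shape, pool=2):
    height, width, channels = shape
    return (height // pool, width // pool, channels), 0


def dense(n_inputs, units):
    return units, (n_inputs + 1) * units  # weights plus biases


def build_summary(input_shape=(128, 32, 1)):
    rows = []
    shape = input_shape
    for block_index, filters in enumerate([32, 64, 64, 128]):
        for _ in range(2):
            shape, params = conv2d(shape, filters)
            rows.append(("Convolutional 2D", shape, params))
        shape, params = batch_norm(shape)
        rows.append(("Batch Normalization", shape, params))
        rows.append(("Dropout", shape, 0))
        if block_index % 2 == 1:  # pooling follows the second and fourth blocks
            shape, params = max_pool(shape)
            rows.append(("Max Pooling 2D", shape, params))
    flat = shape[0] * shape[1] * shape[2]
    rows.append(("Flatten", (flat,), 0))
    units, params = dense(flat, 16)
    rows.append(("Dense", (units,), params))
    units, params = dense(units, 2)
    rows.append(("Dense", (units,), params))
    return rows


summary = build_summary()
total_params = sum(params for _, _, params in summary)
for name, shape, params in summary:
    print(f"{name:<20} {str(shape):<16} {params:>8,}")
print(f"Total parameters: {total_params:,}")
```

Under these assumptions, every row matches the table, and the per-layer counts sum to 467,986 parameters, of which the first dense layer alone accounts for 106,512.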

Table A7.

Test Statistics for Mann-Whitney U Tests conducted on the Balance VDEP.

| Statistic | δ (Delta) | θ (Theta) | α (Alpha) | β (Beta) | γ (Gamma) |
|---|---|---|---|---|---|
| Mann-Whitney U | 20,844,824.000 | 20,863,606.000 | 20,675,717.000 | 20,654,487.000 | 20,571,669.000 |
| Wilcoxon W | 42,155,480.000 | 42,174,262.000 | 41,986,373.000 | 41,965,143.000 | 41,882,325.000 |
| Z | −2.148 | −2.061 | −2.933 | −3.032 | −3.417 |
| Asymptotic Significance (2-tailed) | 0.032 | 0.039 | 0.003 | 0.002 | 0.001 |
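The rows of Table A7 are linked by standard identities: Wilcoxon W is the rank sum of the group with the smaller ranks, so W = U + n(n + 1)/2, and Z follows from the normal approximation to U. The sketch below recomputes the δ (Delta) column from its U value and the group size of 6528 given in Table A2. It is a check under the assumption of no tie or continuity correction, not the exact procedure of the statistics package used for the tables. Function names are illustrative.

```python
import math


def wilcoxon_w_from_u(u, n_group):
    """Rank sum of the group of size n_group whose U statistic is u."""
    return u + n_group * (n_group + 1) / 2


def z_from_u(u, n1, n2):
    """Normal approximation to U (no tie or continuity correction)."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean_u) / sd_u


n = 6528              # observations per category (Table A2)
u_delta = 20_844_824  # Mann-Whitney U for the Delta band (Table A7)

w_delta = wilcoxon_w_from_u(u_delta, n)
z_delta = z_from_u(u_delta, n, n)
p_delta = math.erfc(abs(z_delta) / math.sqrt(2))  # two-tailed p-value

print(f"W = {w_delta:,.0f}")
print(f"Z = {z_delta:.3f}")
print(f"p = {p_delta:.3f}")
```

For this column, the recomputed W equals the asymmetrical-category rank sum in Table A2, and Z and the two-tailed significance agree with the tabulated values to three decimals.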

Table A8.

Test Statistics for Mann-Whitney U Tests conducted on the Colour VDEP.

| Statistic | δ (Delta) | θ (Theta) | α (Alpha) | β (Beta) | γ (Gamma) |
|---|---|---|---|---|---|
| Mann-Whitney U | 116,626,785.000 | 116,683,230.000 | 116,414,906.000 | 116,241,579.000 | 116,519,186.000 |
| Wilcoxon W | 233,618,241.000 | 233,674,686.000 | 233,406,362.000 | 233,233,035.000 | 233,510,642.000 |
| Z | −0.462 | −0.389 | −0.737 | −0.961 | −0.602 |
| Asymptotic Significance (2-tailed) | 0.644 | 0.697 | 0.461 | 0.337 | 0.547 |

Table A9.

Test Statistics for Mann-Whitney U Tests conducted on the Light VDEP.

| Statistic | δ (Delta) | θ (Theta) | α (Alpha) | β (Beta) | γ (Gamma) |
|---|---|---|---|---|---|
| Mann-Whitney U | 202,144,856.000 | 201,721,073.000 | 200,813,028.000 | 201,435,698.500 | 201,370,646.000 |
| Wilcoxon W | 408,606,216.000 | 408,182,433.000 | 407,274,388.000 | 407,897,058.500 | 407,832,006.000 |
| Z | −3.642 | −4.000 | −4.768 | −4.241 | −4.296 |
| Asymptotic Significance (2-tailed) | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 |

Table A10.

Test Statistics for Mann-Whitney U Tests conducted on the Movement VDEP.

| Statistic | δ (Delta) | θ (Theta) | α (Alpha) | β (Beta) | γ (Gamma) |
|---|---|---|---|---|---|
| Mann-Whitney U | 129,181,734.000 | 129,312,007.000 | 129,273,536.000 | 128,975,376.000 | 129,222,289.000 |
| Wilcoxon W | 264,985,174.000 | 265,115,447.000 | 265,076,976.000 | 264,778,816.000 | 265,025,729.000 |
| Z | −7.657 | −7.506 | −7.551 | −7.896 | −7.610 |
| Asymptotic Significance (2-tailed) | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 |

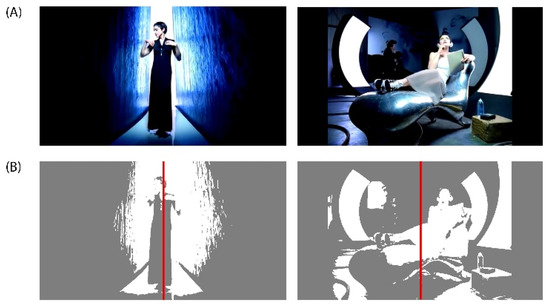

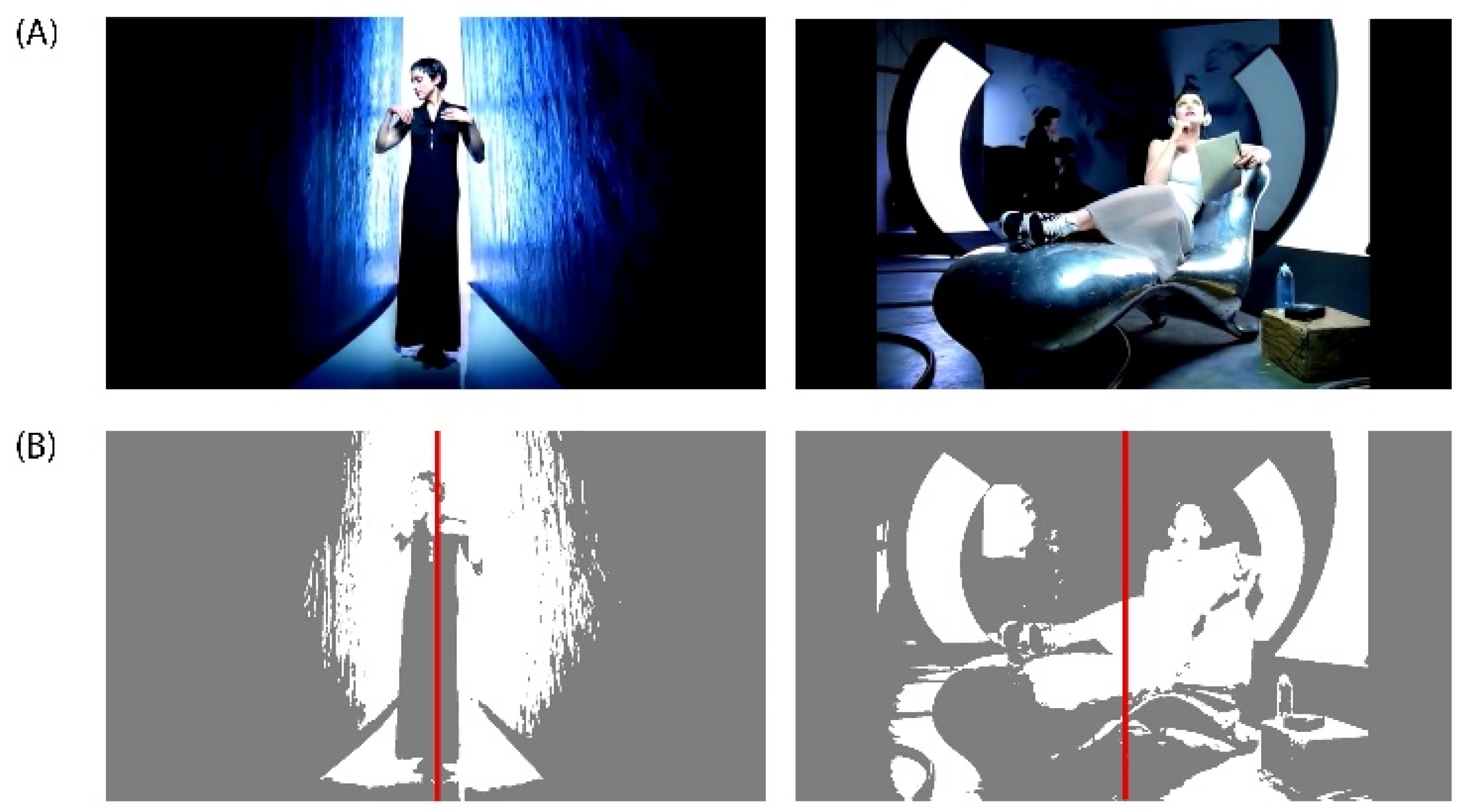

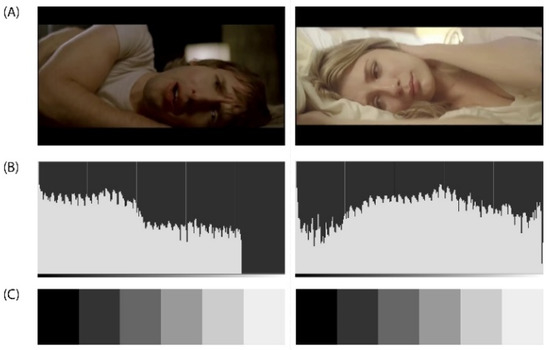

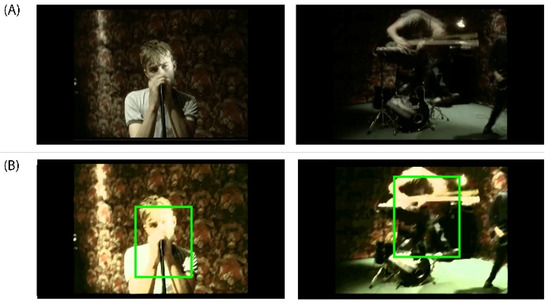

Figure A1.

(A) DEAP Dataset Experiment_id (27) ‘Rain’ Music Video from Madonna’s album ‘Erotica’ released on Sire Records in 1992. DEAP Dataset Source YouTube Link: http://www.youtube.com/watch?v=15kWlTrpt5k. (B) In the left music video frame, a composition with a predominantly symmetrical balance can be observed, while in the right music video frame, a composition with a predominantly asymmetrical balance can be appreciated.

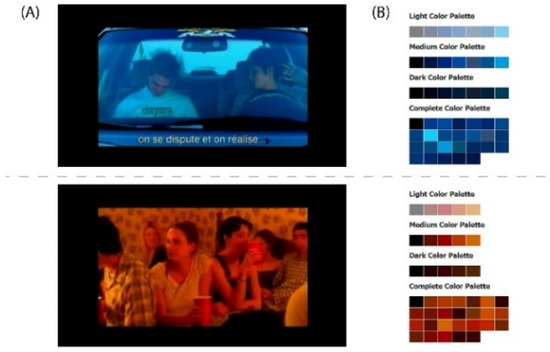

Figure A2.

(A) DEAP Dataset Experiment_id (17) ‘All I Need’ Music Video from AIR’s album ‘Moon Safari’ released on Virgin Records in 1998. DEAP Dataset Source YouTube Link: http://www.youtube.com/watch?v=kxWFyvTg6mc. (B) The upper music video frame shows a composition where a cold colour palette predominates, while the lower music video frame reveals a warm colour palette.

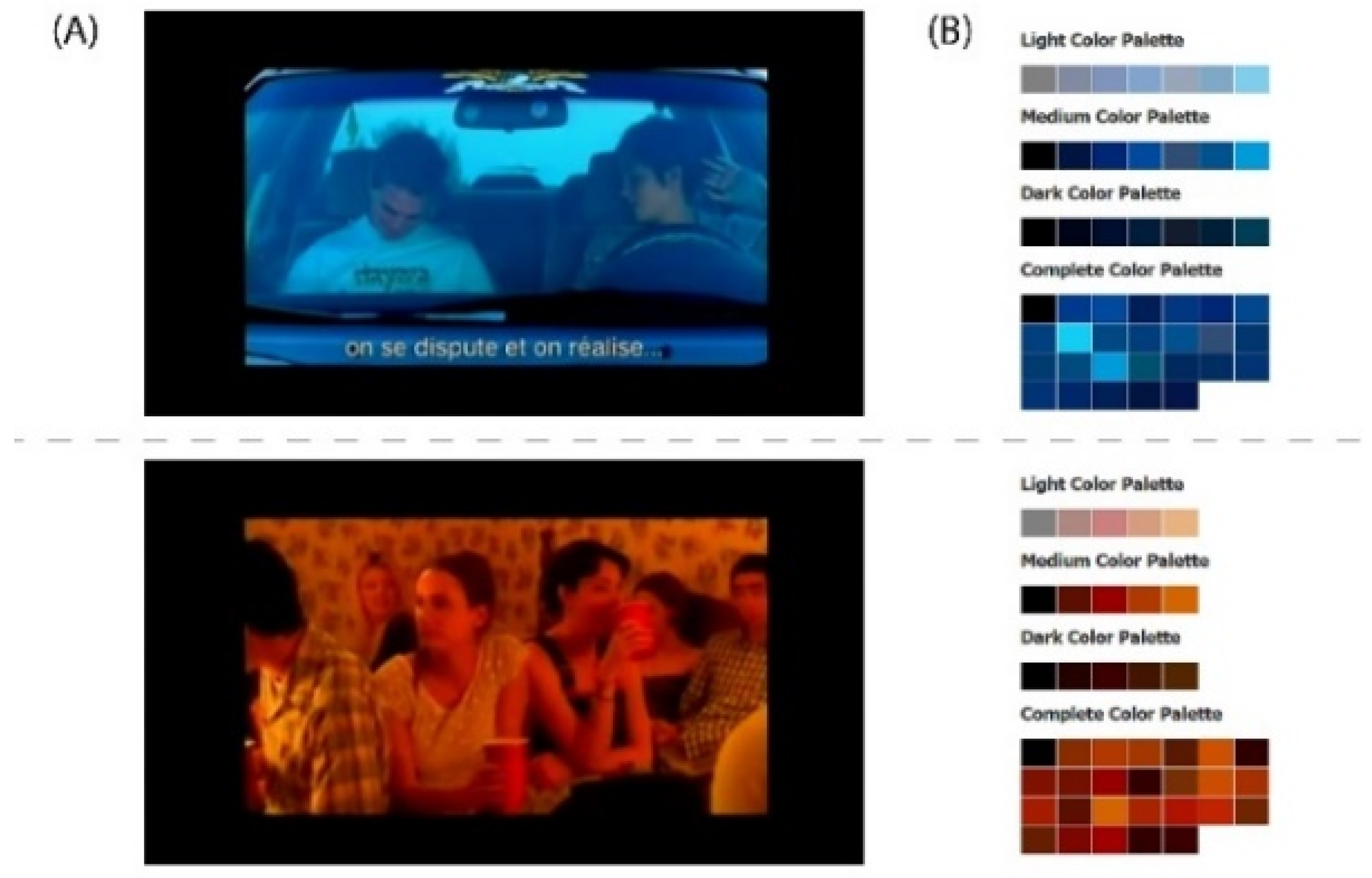

Figure A3.

(A) DEAP Dataset Experiment_id (24) ‘Goodbye My Lover’ Music Video from James Blunt’s album ‘Back to Bedlam’ released on Custard Records in 2002. DEAP Dataset Source YouTube Link: http://www.youtube.com/watch?v=wVyggTKDcOE. (B) The histogram (luminosity channel) is a graphic representation of the frequency of appearance of the different brightness levels of an image or photograph. In this case, the following visual representation settings were selected: values in the perception of space/logarithmic histogram. The histogram shows how the pixels are distributed: the number of pixels (Y-axis) at each of the 256 levels of illumination (X-axis), from 0 at the lower left (absolute black) to 255 at the lower right (absolute white) of the image. Pixels with the same illumination level are stacked in columns on the horizontal axis. (C) The greyscale is used to establish comparatively both the brightness value of pure colours and the degree of clarity of the corresponding gradations of these pure colours.

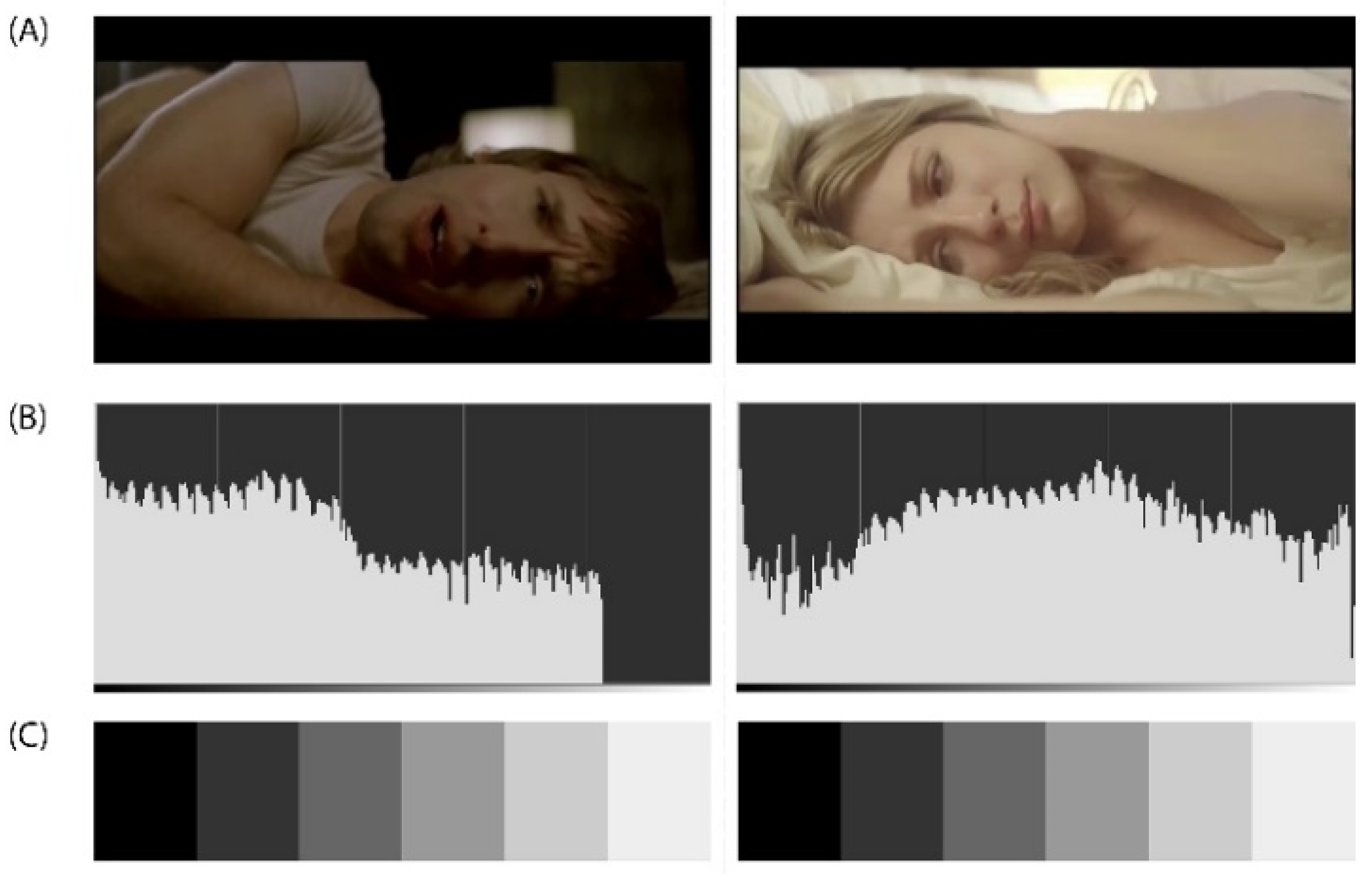

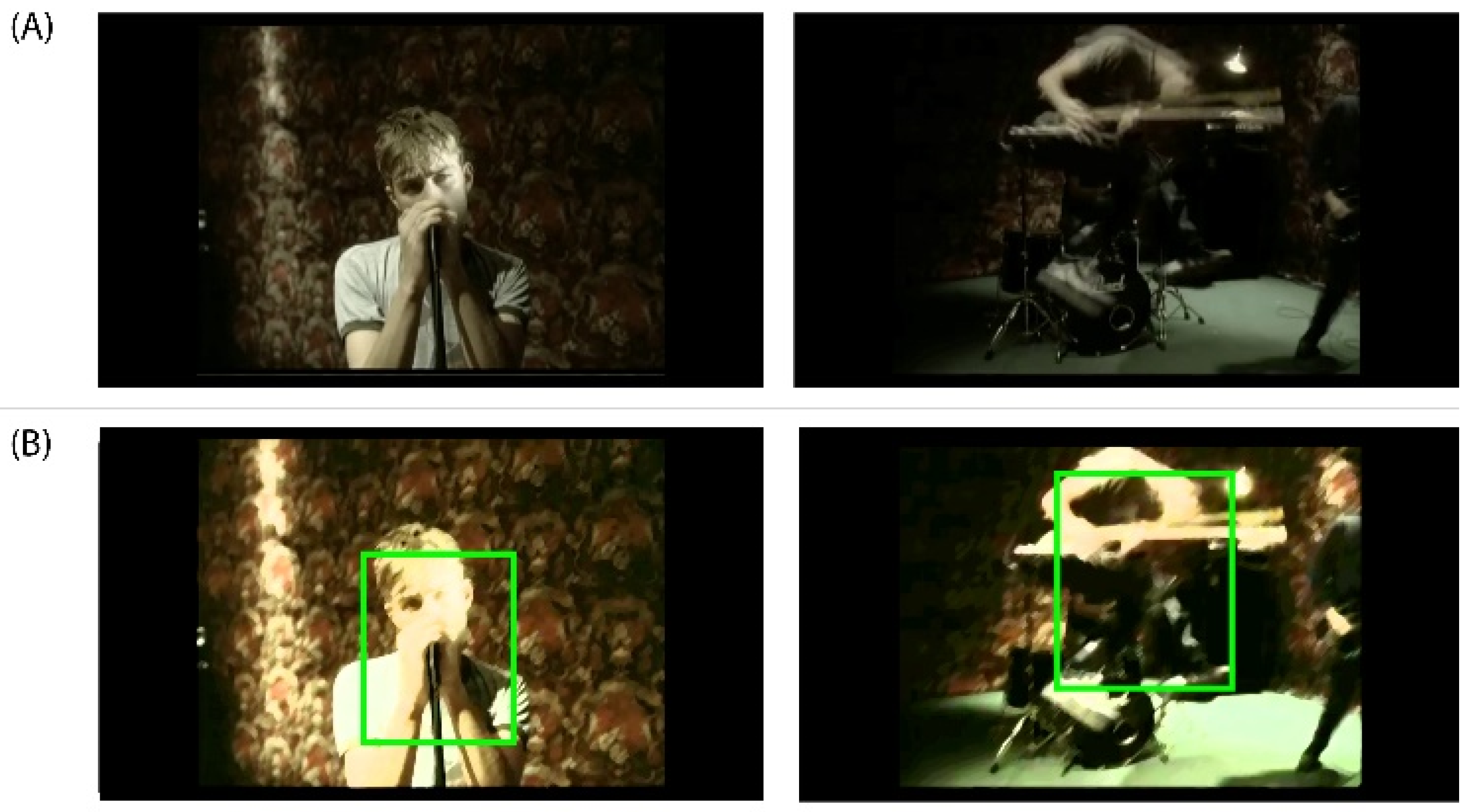

Figure A4.

(A) DEAP Dataset Experiment_id (5) ‘Song 2’ Music Video from Blur’s album ‘Blur’ released on Food Records in 1997. DEAP Dataset Source YouTube Link: http://www.youtube.com/watch?v=WlAHZURxRjY. (B) In the music video frame on the left, we can observe a slow movement of the subject within the action while in the capture on the right we can appreciate a fast movement of the subject within the action.

Table A11.

VDEPs tagging of the selected video samples from the DEAP dataset.

| Colour VDEP | Light VDEP | Balance VDEP | Movement VDEP | |||||

|---|---|---|---|---|---|---|---|---|

| Warm | Cold | Bright | Dark | Symmetrical | Asymmetrical | Slow | Fast | |

| Clip 1 | (1–16), (35–60) | (16–32) | (1–17), (33–60) | (17–33) | (3–5), (9–11), (23–24), (33–40), (45–60) | (1–10), (33–49) | ||

| Clip 4 | (1–4), (9–12), (27–49), (53–60) | (4–9), (12–15), (49–53) | (1–60) | (1–60) | (1–60) | |||

| Clip 5 | (1–60) | (1–60) | (1–60) | (16–32), (47–60) | ||||

| Clip 6 | (52–53) | (1–49), (53–60) | (1–60) | (1–60) | (1–2), (7–11) | |||

| Clip 7 | (8–15), (22–25), (26–30), (36–44), (55–60) | (1–3), (15–22), (24–25), (30–36), (44–55) | (1–60) | (27–29), (30–36), (47–55) | (1–27), (36–47), (55–60) | (1–8) | ||

| Clip 9 | (1–7), (9–60) | (7–9) | (1–6), (9–60) | (6–9) | (7–9) | (1–7), (9–60) | (9–11), (13–15) | |

| Clip 12 | (1–60) | (1–60) | (1–60) | (23–41) | ||||

| Clip 13 | (1–60) | (1–60) | (7–11), (15–23) | (1–7), (23–60) | (1–60) | |||

| Clip 14 | (22–24) | (1–22) | (37–60) | (1–37) | (1–60) | (1–60) | ||

| Clip 15 | (1–25), (29–60) | (25–29) | (1–60) | (25–38) | (1–25), (38–60) | (1–37), (43–60) | (37–43) | |

| Clip 16 | (1–60) | (1–60) | (1–60) | (1–60) | ||||

| Clip 17 | (2–6), (8–18) | (6–8), (18–28), (30–60) | (1–19), (34–60) | (19–34) | (1–60) | (1–60) | ||

| Clip 19 | (1–60) | (1–60) | (1–60) | |||||

| Clip 20 | (5–60) | (2–5) | (1–60) | (1–60) | ||||

| Clip 22 | (18–22), (24–33), (55–60) | (1–18), (22–24), (33–55) | (1–60) | (4–6), (29–32) | (32–60) | (1–60) | ||

| Clip 23 | (1–60) | (1–60) | (1–60) | (1–60) | ||||

| Clip 24 | (10–12), (15–18), (20–22), (25–26), (27–28), (35–36), (38–44) | (1–10), (12–15), (18–20), (22–25), (26–27), (28–35), (37–38), (44–60) | (15–18), (33–60) | (1–10), (19–20), (22–33) | (1–3), (40–44) | (3–40), (44–60) | (1–60) | |

| Clip 25 | (1–60) | (1–60) | (1–60) | |||||

| Clip 26 | (12–13) | (1–12), (13–60) | (1–60) | |||||

| Clip 27 | (1–9), (15–16), (19–22), (29–39), (41–44), (48–51), (52–53), (58–60) | (9–15), (16–19), (22–29), (39–41), (44–48), (51–52), (53–58) | (1–7), (15–39), (41–44), (47–60) | (7–15), (39–41), (44–47) | (43–60) | (1–43) | (1–60) | |

| Clip 31 | (1–60) | (1–60) | (8–9), (11–12), (17–18), (33–34), (36–38), (44–46), (49–50), (54–60) | (1–8), (9–11), (12–17), (18–33), (34–36), (38–44), (46–49), (50–54) | (1–60) | |||

| Clip 32 | (1–3), (7–9), (25–26), (29–32), (42–60) | (3–7), (9–25), (26–29), (32–42) | (1–60) | (35–36), (38–39), (40–41) | (1–35), (36–38), (39–40), (41–60) | (1–60) | ||

| Clip 33 | (8–11), (37–38), (41–42), (48–60) | (1–8), (11–37), (38–41), (43–48) | (1–60) | (1–60) | (1–60) | |||

| Clip 35 | (7–15), (19–23), (43–48), (54–55), (56–58) | (1–7), (15–19), (23–43), (48–54), (58–60) | (1–60) | (1–60) | ||||

| Clip 36 | (1–60) | (1–60) | (1–60) | (1–60) | ||||

| Clip 40 | (1–60) | (1–60) | (1–60) | (1–60) | ||||

References

- Frascara, J. Communication Design: Principles, Methods, and Practice; Allworth Press: New York, NY, USA, 2004; ISBN 1-58115-365-1.

- Ambrose, G.; Harris, P. The Fundamentals of Graphic Design; AVA Publishing SA Bloomsbury Publishing Plc: London, UK, 2009; ISBN 9782940476008.

- Palmer, S.E. Modern Theories of Gestalt Perception. Mind Lang. 1990, 5, 289–323.

- Kepes, G. Language of Vision; Courier Corporation: Chelmsford, MA, USA, 1995; ISBN 0-486-28650-9.

- Dondis, D.A. La Sintaxis de la Imagen: Introducción al Alfabeto Visual; Editorial GG: Barcelona, Spain, 2012.

- Villafañe, J. Introducción a la Teoría de la Imagen; Piramide: Madrid, Spain, 2006.

- Won, S.; Westland, S. Colour meaning and context. Color Res. Appl. 2017, 42, 450–459.

- Machajdik, J.; Hanbury, A. Affective image classification using features inspired by psychology and art theory. In Proceedings of the International Conference on Multimedia (MM ’10), Florence, Italy, 25–29 October 2010; ACM Press: New York, NY, USA, 2010; p. 83.

- O’Connor, Z. Colour, contrast and gestalt theories of perception: The impact in contemporary visual communications design. Color Res. Appl. 2015, 40, 85–92.

- Sanchez-Nunez, P.; Cobo, M.J.; de las Heras-Pedrosa, C.; Pelaez, J.I.; Herrera-Viedma, E. Opinion Mining, Sentiment Analysis and Emotion Understanding in Advertising: A Bibliometric Analysis. IEEE Access 2020, 8, 134563–134576.

- Chen, H.Y.; Huang, K.L. Construction of perfume bottle visual design model based on multiple affective responses. In Proceedings of the IEEE International Conference on Advanced Materials for Science and Engineering (ICAMSE), Tainan, Taiwan, 12–13 November 2016; pp. 169–172.

- Fajardo, T.M.; Zhang, J.; Tsiros, M. The contingent nature of the symbolic associations of visual design elements: The case of brand logo frames. J. Consum. Res. 2016, 43, 549–566.

- Dillman, D.A.; Gertseva, A.; Mahon-haft, T. Achieving Usability in Establishment Surveys Through the Application of Visual Design Principles. J. Off. Stat. 2005, 21, 183–214.

- Plassmann, H.; Ramsøy, T.Z.; Milosavljevic, M. Branding the brain: A critical review and outlook. J. Consum. Psychol. 2012, 22, 18–36.

- Zeki, S.; Stutters, J. Functional specialization and generalization for grouping of stimuli based on colour and motion. Neuroimage 2013, 73, 156–166.

- Laeng, B.; Suegami, T.; Aminihajibashi, S. Wine labels: An eye-tracking and pupillometry study. Int. J. Wine Bus. Res. 2016, 28, 327–348.

- Pieters, R.; Wedel, M. Attention Capture and Transfer in Advertising: Brand, Pictorial, and Text-Size Effects. J. Mark. 2004, 68, 36–50.

- Van der Laan, L.N.; Hooge, I.T.C.; de Ridder, D.T.D.; Viergever, M.A.; Smeets, P.A.M. Do you like what you see? The role of first fixation and total fixation duration in consumer choice. Food Qual. Prefer. 2015, 39, 46–55.

- Ishizu, T.; Zeki, S. Toward a brain-based theory of beauty. PLoS ONE 2011, 6, e21852.

- Qiao, R.; Qing, C.; Zhang, T.; Xing, X.; Xu, X. A novel deep-learning based framework for multi-subject emotion recognition. In Proceedings of the 4th International Conference on Information, Cybernetics and Computational Social Systems (ICCSS), Dalian, China, 24–26 July 2017; pp. 181–185.

- Marchesotti, L.; Murray, N.; Perronnin, F. Discovering Beautiful Attributes for Aesthetic Image Analysis. Int. J. Comput. Vis. 2015, 113, 246–266.

- Siddharth, S.; Jung, T.P.; Sejnowski, T.J. Impact of Affective Multimedia Content on the Electroencephalogram and Facial Expressions. Sci. Rep. 2019, 9, 1–10.

- Yadava, M.; Kumar, P.; Saini, R.; Roy, P.P.; Prosad Dogra, D. Analysis of EEG signals and its application to neuromarketing. Multimed. Tools Appl. 2017, 76, 19087–19111.

- Sánchez-Núñez, P.; Cobo, M.J.; Vaccaro, G.; Peláez, J.I.; Herrera-Viedma, E. Citation Classics in Consumer Neuroscience, Neuromarketing and Neuroaesthetics: Identification and Conceptual Analysis. Brain Sci. 2021, 11, 548.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Salama, E.S.; El-Khoribi, R.A.; Shoman, M.E.; Wahby, M.A. EEG-Based Emotion Recognition using 3D Convolutional Neural Networks. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 329–337.

- Acar, E.; Hopfgartner, F.; Albayrak, S. Fusion of learned multi-modal representations and dense trajectories for emotional analysis in videos. In Proceedings of the 13th International Workshop on Content-Based Multimedia Indexing (CBMI), Prague, Czech Republic, 10–12 June 2015; pp. 1–6.

- Choi, E.J.; Kim, D.K. Arousal and valence classification model based on long short-term memory and DEAP data for mental healthcare management. Healthc. Inform. Res. 2018, 24, 309–316.

- Chen, J.X.; Zhang, P.W.; Mao, Z.J.; Huang, Y.F.; Jiang, D.M.; Zhang, Y.N. Accurate EEG-based Emotion Recognition on Combined Features Using Deep Convolutional Neural Networks. IEEE Access 2019, 7, 1.

- Kimball, M.A. Visual Design Principles: An Empirical Study of Design Lore. J. Tech. Writ. Commun. 2013, 43, 3–41.

- Locher, P.J.; Jan Stappers, P.; Overbeeke, K. The role of balance as an organizing design principle underlying adults’ compositional strategies for creating visual displays. Acta Psychol. 1998, 99, 141–161.

- Cyr, D.; Head, M.; Larios, H. Colour appeal in website design within and across cultures: A multi-method evaluation. Int. J. Hum. Comput. Stud. 2010, 68, 1–21.

- Kahn, B.E. Using Visual Design to Improve Customer Perceptions of Online Assortments. J. Retail. 2017, 93, 29–42.

- Dondis, D.A. A Primer of Visual Literacy; The MIT Press: Cambridge, MA, USA, 1973; ISBN 9780262040402.

- Albers, J. The Interaction of Color; Yale University Press: London, UK, 1963; ISBN 9780300179354.

- Karp, A.; Itten, J. The Elements of Color. Leonardo 1972, 5, 180.

- Arnheim, R. Art and Visual Perception: A Psychology of the Creative Eye; University of California Press: Berkeley, CA, USA, 2004; ISBN 9780520243835.

- Makin, A.D.J.; Wilton, M.M.; Pecchinenda, A.; Bertamini, M. Symmetry perception and affective responses: A combined EEG/EMG study. Neuropsychologia 2012, 50, 3250–3261.

- Huang, Y.; Xue, X.; Spelke, E.; Huang, L.; Zheng, W.; Peng, K. The aesthetic preference for symmetry dissociates from early-emerging attention to symmetry. Sci. Rep. 2018, 8, 6263.

- Makin, A.D.J.; Rampone, G.; Bertamini, M. Symmetric patterns with different luminance polarity (anti-symmetry) generate an automatic response in extrastriate cortex. Eur. J. Neurosci. 2020, 51, 922–936.

- Gheorghiu, E.; Kingdom, F.A.A.; Remkes, A.; Li, H.-C.O.; Rainville, S. The role of color and attention-to-color in mirror-symmetry perception. Sci. Rep. 2016, 6, 29287.

- Bertamini, M.; Wagemans, J. Processing convexity and concavity along a 2-D contour: Figure–ground, structural shape, and attention. Psychon. Bull. Rev. 2013, 20, 191–207.

- Chai, M.T.; Amin, H.U.; Izhar, L.I.; Saad, M.N.M.; Abdul Rahman, M.; Malik, A.S.; Tang, T.B. Exploring EEG Effective Connectivity Network in Estimating Influence of Color on Emotion and Memory. Front. Neuroinform. 2019, 13.

- Tcheslavski, G.V.; Vasefi, M.; Gonen, F.F. Response of a human visual system to continuous color variation: An EEG-based approach. Biomed. Signal Process. Control 2018, 43, 130–137.

- Nicolae, I.E.; Ivanovici, M. Preparatory Experiments Regarding Human Brain Perception and Reasoning of Image Complexity for Synthetic Color Fractal and Natural Texture Images via EEG. Appl. Sci. 2020, 11, 164. [Google Scholar] [CrossRef]

- Golnar-Nik, P.; Farashi, S.; Safari, M.-S. The application of EEG power for the prediction and interpretation of consumer decision-making: A neuromarketing study. Physiol. Behav. 2019, 207, 90–98. [Google Scholar] [CrossRef]

- Eroğlu, K.; Kayıkçıoğlu, T.; Osman, O. Effect of brightness of visual stimuli on EEG signals. Behav. Brain Res. 2020, 382, 112486. [Google Scholar] [CrossRef]

- Johannes, S.; Münte, T.F.; Heinze, H.J.; Mangun, G.R. Luminance and spatial attention effects on early visual processing. Cogn. Brain Res. 1995, 2, 189–205. [Google Scholar] [CrossRef]

- Lakens, D.; Semin, G.R.; Foroni, F. But for the bad, there would not be good: Grounding valence in brightness through shared relational structures. J. Exp. Psychol. Gen. 2012, 141, 584–594. [Google Scholar] [CrossRef] [Green Version]

- Schettino, A.; Keil, A.; Porcu, E.; Müller, M.M. Shedding light on emotional perception: Interaction of brightness and semantic content in extrastriate visual cortex. Neuroimage 2016, 133, 341–353. [Google Scholar] [CrossRef]

- Albright, T.D.; Stoner, G.R. Visual motion perception. Proc. Natl. Acad. Sci. USA 1995, 92, 2433–2440. [Google Scholar] [CrossRef] [Green Version]

- Hülsdünker, T.; Ostermann, M.; Mierau, A. The Speed of Neural Visual Motion Perception and Processing Determines the Visuomotor Reaction Time of Young Elite Table Tennis Athletes. Front. Behav. Neurosci. 2019, 13. [Google Scholar] [CrossRef] [Green Version]

- Agyei, S.B.; van der Weel, F.R.; van der Meer, A.L.H. Development of Visual Motion Perception for Prospective Control: Brain and Behavioral Studies in Infants. Front. Psychol. 2016, 7. [Google Scholar] [CrossRef] [Green Version]

- Cochin, S.; Barthelemy, C.; Lejeune, B.; Roux, S.; Martineau, J. Perception of motion and qEEG activity in human adults. Electroencephalogr. Clin. Neurophysiol. 1998, 107, 287–295. [Google Scholar] [CrossRef]

- Himmelberg, M.M.; Segala, F.G.; Maloney, R.T.; Harris, J.M.; Wade, A.R. Decoding Neural Responses to Motion-in-Depth Using EEG. Front. Neurosci. 2020, 14. [Google Scholar] [CrossRef]

- Wade, A.; Segala, F.; Yu, M. Using EEG to examine the timecourse of motion-in-depth perception. J. Vis. 2019, 19, 104. [Google Scholar] [CrossRef]

- He, B.; Yuan, H.; Meng, J.; Gao, S. Brain–Computer Interfaces. In Neural Engineering; Springer International Publishing: Cham, Switzerland, 2020; pp. 131–183.

- Salelkar, S.; Ray, S. Interaction between steady-state visually evoked potentials at nearby flicker frequencies. Sci. Rep. 2020, 10, 1–16.

- Du, Y.; Yin, M.; Jiao, B. InceptionSSVEP: A Multi-Scale Convolutional Neural Network for Steady-State Visual Evoked Potential Classification. In Proceedings of the IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020; pp. 2080–2085.

- Vialatte, F.-B.; Maurice, M.; Dauwels, J.; Cichocki, A. Steady-state visually evoked potentials: Focus on essential paradigms and future perspectives. Prog. Neurobiol. 2010, 90, 418–438.

- Thomas, J.; Maszczyk, T.; Sinha, N.; Kluge, T.; Dauwels, J. Deep learning-based classification for brain-computer interfaces. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 234–239.

- Kwak, N.-S.; Müller, K.-R.; Lee, S.-W. A convolutional neural network for steady state visual evoked potential classification under ambulatory environment. PLoS ONE 2017, 12, e0172578.

- Stober, S.; Cameron, D.J.; Grahn, J.A. Using convolutional neural networks to recognize rhythm stimuli from electroencephalography recordings. Adv. Neural Inf. Process. Syst. 2014, 2, 1449–1457.

- Schlag, J.; Schlag-Rey, M. Through the eye, slowly: Delays and localization errors in the visual system. Nat. Rev. Neurosci. 2002, 3, 191.

- Jaswal, R. Brain Wave Classification and Feature Extraction of EEG Signal by Using FFT on Lab View. Int. Res. J. Eng. Technol. 2016, 3, 1208–1212.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; Volume 1, pp. 448–456.

- Wen, Z.; Xu, R.; Du, J. A novel convolutional neural networks for emotion recognition based on EEG signal. In Proceedings of the International Conference on Security, Pattern Analysis, and Cybernetics (SPAC), Shenzhen, China, 15–17 December 2017; pp. 672–677.

- Yoto, A.; Katsuura, T.; Iwanaga, K.; Shimomura, Y. Effects of object color stimuli on human brain activities in perception and attention referred to EEG alpha band response. J. Physiol. Anthropol. 2007, 26, 373–379.

- Specker, E.; Forster, M.; Brinkmann, H.; Boddy, J.; Immelmann, B.; Goller, J.; Pelowski, M.; Rosenberg, R.; Leder, H. Warm, lively, rough? Assessing agreement on aesthetic effects of artworks. PLoS ONE 2020, 15, e0232083.

- Boerman, S.C.; van Reijmersdal, E.A.; Neijens, P.C. Using Eye Tracking to Understand the Effects of Brand Placement Disclosure Types in Television Programs. J. Advert. 2015, 44, 196–207.

- Gonçalves, S.I.; de Munck, J.C.; Pouwels, P.J.W.; Schoonhoven, R.; Kuijer, J.P.A.; Maurits, N.M.; Hoogduin, J.M.; van Someren, E.J.W.; Heethaar, R.M.; Lopes da Silva, F.H. Correlating the alpha rhythm to BOLD using simultaneous EEG/fMRI: Inter-subject variability. Neuroimage 2006, 30, 203–213.

- Klistorner, A.I.; Graham, S.L. Electroencephalogram-based scaling of multifocal visual evoked potentials: Effect on intersubject amplitude variability. Investig. Ophthalmol. Vis. Sci. 2001, 42, 2145–2152.

- Busch, N.A.; Dubois, J.; VanRullen, R. The phase of ongoing EEG oscillations predicts visual perception. J. Neurosci. 2009, 29, 7869–7876.

- Romei, V.; Brodbeck, V.; Michel, C.; Amedi, A.; Pascual-Leone, A.; Thut, G. Spontaneous Fluctuations in Posterior Band EEG Activity Reflect Variability in Excitability of Human Visual Areas. Cereb. Cortex 2008, 18, 2010–2018.

- Ergenoglu, T.; Demiralp, T.; Bayraktaroglu, Z.; Ergen, M.; Beydagi, H.; Uresin, Y. Alpha rhythm of the EEG modulates visual detection performance in humans. Cogn. Brain Res. 2004, 20, 376–383.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).