Self-Localization of Mobile Robots Using a Single Catadioptric Camera with Line Feature Extraction

Abstract

:1. Introduction

2. Related Work

3. Unified Central Catadioptric Model

4. Ground Plane Features Detection and Robot Self-Localization

4.1. Ground Region Extraction and Vertical Line Identification

4.2. Localization and Position Estimation

5. Experiments

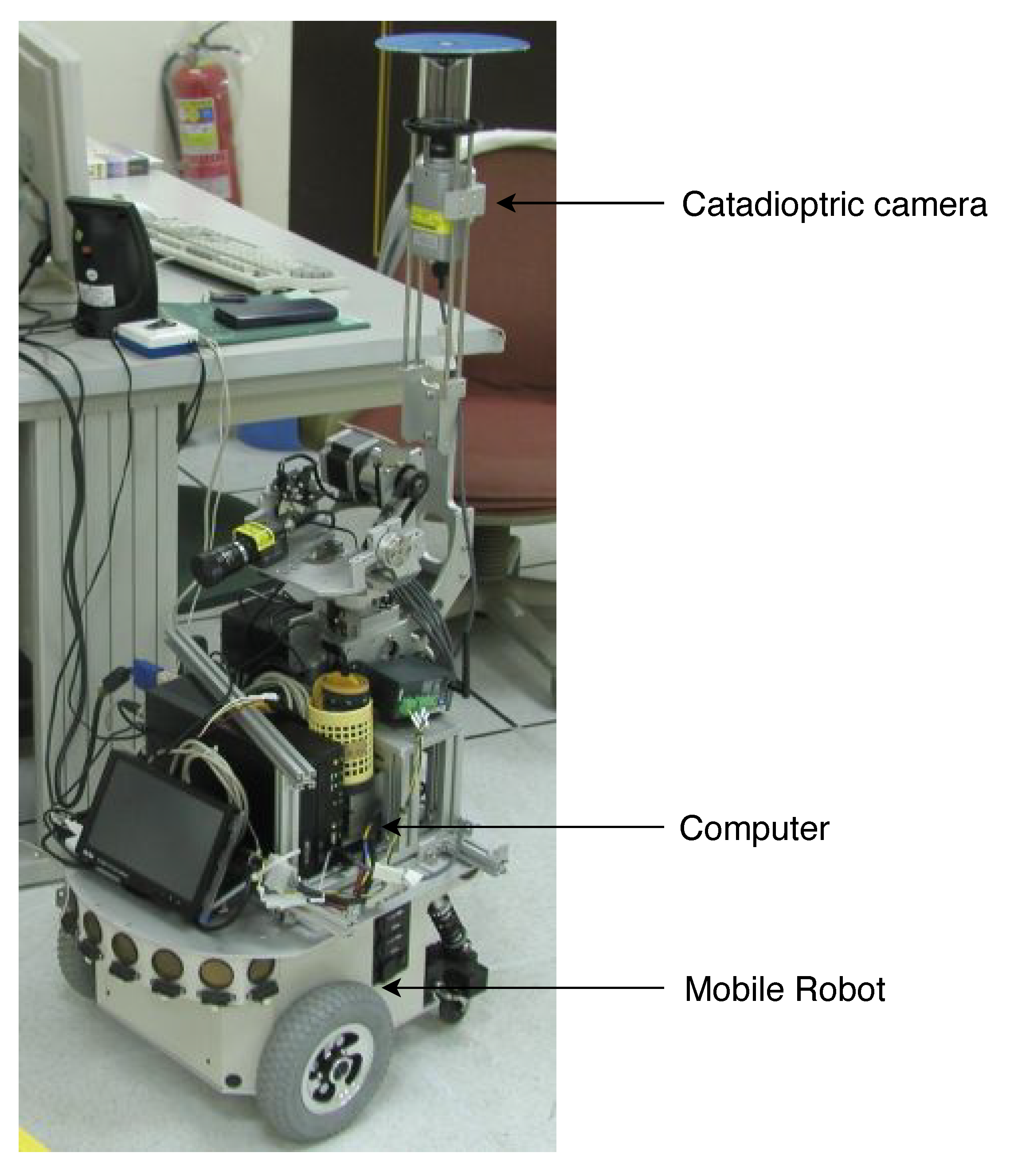

5.1. Experimental Setup

5.2. Experimental Results

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Haverinen, J.; Kemppainen, A. Global indoor self-localization based on the ambient magnetic field. Robot. Auton. Syst. 2009, 57, 1028–1035. [Google Scholar] [CrossRef]

- Khomutenko, B.; Garcia, G.; Martinet, P. An Enhanced Unified Camera Model. IEEE Robot. Autom. Lett. 2016, 1, 137–144. [Google Scholar] [CrossRef] [Green Version]

- Lin, H.Y.; He, C.H. Mobile Robot Self-Localization using Omnidirectional Vision with Feature Matching from Real and Virtual Spaces. Appl. Sci. 2021, 11, 3360. [Google Scholar] [CrossRef]

- Grimson, W.E.L.; Lozano-Perez, T. Recognition and localization of overlapping parts from sparse data in two and three dimensions. In Proceedings of the International Conference on Robotics and Automation, St. Louis, MO, USA, 25–28 March 1985; pp. 61–66. [Google Scholar]

- Drumheller, M. Mobile robot localization using sonar. IEEE Trans. Pattern Anal. Mach. Intell. 1987, 9, 325–332. [Google Scholar] [CrossRef] [Green Version]

- Kallasi, F.; Rizzini, D.L.; Caselli, S. Fast Keypoint Features From Laser Scanner for Robot Localization and Mapping. IEEE Robot. Autom. Lett. 2016, 1, 176–183. [Google Scholar] [CrossRef]

- Bonarini, A.; Matteucci, M.; Restelli, M. A kinematic-independent dead-reckoning sensor for indoor mobile robotics. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No.04CH37566), Sendai, Japan, 28 September–2 October 2004; Volume 4, pp. 3750–3755. [Google Scholar] [CrossRef]

- Joo, C.K.; Kim, Y.C.; Choi, M.H.; Ryoo, Y.J. Self localization for intelligent mobile robot using multiple infrared range scanning system. In Proceedings of the 2007 International Conference on Control, Automation and Systems, Seoul, Korea, 17–20 October 2007; pp. 606–609. [Google Scholar]

- Ledergerber, A.; Hamer, M.; D’Andrea, R. A robot self-localization system using one-way ultra-wideband communication. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 3131–3137. [Google Scholar] [CrossRef]

- Liu, X.; Zhang, J.; Jiang, S.; Yang, Y.; Li, K.; Cao, J.; Liu, J. Accurate Localization of Tagged Objects Using Mobile RFID-Augmented Robots. IEEE Trans. Mob. Comput. 2021, 20, 1273–1284. [Google Scholar] [CrossRef]

- Cai, G.S.; Lin, H.Y.; Kao, S.F. Mobile Robot Localization using GPS, IMU and Visual Odometry. In Proceedings of the 2019 International Automatic Control Conference (CACS), Keelung, Taiwan, 13–16 November 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous Localization and Mapping: A Survey of Current Trends in Autonomous Driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220. [Google Scholar] [CrossRef] [Green Version]

- Nister, D.; Naroditsky, O.; Bergen, J. Visual odometry. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), Washington, DC, USA, 27 June–2 July 2004; Volume 1. [Google Scholar] [CrossRef]

- Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect Visual Odometry for Monocular and Multicamera Systems. IEEE Trans. Robot. 2017, 33, 249–265. [Google Scholar] [CrossRef] [Green Version]

- Silveira, G.; Malis, E.; Rives, P. An Efficient Direct Approach to Visual SLAM. IEEE Trans. Robot. 2008, 24, 969–979. [Google Scholar] [CrossRef]

- Kerl, C.; Sturm, J.; Cremers, D. Dense visual SLAM for RGB-D cameras. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2100–2106. [Google Scholar] [CrossRef] [Green Version]

- Lin, H.Y.; Hsu, J.L. A Sparse Visual Odometry Technique Based on Pose Adjustment with Keyframe Matching. IEEE Sens. J. 2021, 21, 11810–11821. [Google Scholar] [CrossRef]

- Simond, N.; Rives, P. Homography from a vanishing point in urban scenes. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27–31 October 2003; pp. 1005–1010. [Google Scholar]

- Lobo, J.; Dias, J. Ground plane detection using visual and inertial data fusion. In Proceedings of the 1998 IEEE/RSJ International Conference on Intelligent Robots and Systems. Innovations in Theory, Practice and Applications (Cat. No.98CH36190), Victoria, BC, Canada, 17 October 1998; p. 479. [Google Scholar]

- Pears, N.; Liang, B. Ground plane segmentation for mobile robot visual navigation. In Proceedings of the 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the the Next Millennium (Cat. No.01CH37180), Maui, HI, USA, 29 October–3 November 2001; pp. 1513–1518. [Google Scholar]

- Renno, J.; Orwell, J.; Jones, G. Learning Surveillance Tracking Models for the Self-Calibrated Ground Plane. In Proceedings of the British Machine Vision Conference (BMVC 2002), Cardiff, UK, 2–5 September 2002; pp. 607–616. [Google Scholar]

- Bose, B.; Grimson, E. Ground plane rectification by tracking moving objects. In Proceedings of the Joint IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, Beijing, China, 17–20 October 2003. [Google Scholar]

- Lu, X.; Manduchi, R. Detection and Localization of Curbs and Stairways Using Stereo Vision. In Proceedings of the IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 4648–4654. [Google Scholar]

- Marques, C.F.; Lima, P.U. Learning Surveillance Tracking Models for the Self-Calibrated Ground Plane. In Proceedings of the 2000 IEEE/RSJ International Conference on intelligent Robots and Systems, Takamatsu, Japan, 31 October–5 November 2000. [Google Scholar]

- Liu, J.G.; Shi, D.M.; Leung, M.K. Indoor Navigation System Based on Omni-directional Corridorguidelines. In Proceedings of the 2008 International Conference on Machine Learning and Cybernetics, Kunming, China, 12–15 July 2008; pp. 1271–1276. [Google Scholar]

- Calabrese, F.; Indiveri, G. An Omni-Vision Triangulation-Like Approach to Mobile Robot Localization. In Proceedings of the 2005 IEEE International Symposium on, Mediterrean Conference on Control and Automation Intelligent Control, Limassol, Cyprus, 27–29 June 2005; pp. 604–609. [Google Scholar]

- Jogan, M.; Leonardis, A.; Wildenauer, H.; Bischof, H. Mobile Robot Localization Under Varying Illumination. In Proceedings of the 16th International Conference on Pattern Recognition, Quebec, QC, Canada, 11–15 August 2002; pp. 741–744. [Google Scholar]

- Goedeme, T.; Tuytelaars, T.; Gool, L.V.; Vanacker, G.; Nuttin, M. Feature based omnidirectional sparse visual path following. In Proceedings of the 005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 1806–1811. [Google Scholar]

- Mei, C.; Rives, P. Single View Point Omnidirectional Camera Calibration from Planar Grids. In Proceedings of the IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007. [Google Scholar]

- Scaramuzza, D.; Siegwart, R. A Practical Toolbox for Calibrating Omnidirectional Cameras. Vis. Syst. Appl. 2007, 17, 297–310. [Google Scholar]

- Mei, C.; Benhimane, S.; Malis, E.; Rives, P. Efficient Homography-Based Tracking and 3-D Reconstruction for Single-Viewpoint Sensors. IEEE Trans. Robot. 2008, 24, 1352–1364. [Google Scholar] [CrossRef]

- Scaramuzza, D.; Siegwart, R.; Martinelli, A. A Robust Descriptor for Tracking Vertical Lines in Omnidirectional Images and its Use in Mobile Robotics. Int. J. Robot. Res. 2009, 28, 149–171. [Google Scholar] [CrossRef] [Green Version]

- Bresenham, J. Algorithm for computer control of a digital plotter. IBM Syst. J. 1965, 4, 25–30. [Google Scholar] [CrossRef]

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Lewis, J.P. Fast normalized cross-correlation. In Vision Interface; Technical Report; Canadian Image Processing and Pattern Recognition Society: Quebec City, QC, Canada, 1995. [Google Scholar]

- Lin, H.Y.; Lin, J.H. A Visual Positioning System for Vehicle or Mobile Robot Navigation. IEICE Trans. Inf. Syst. 2006, 89, 2109–2116. [Google Scholar] [CrossRef]

| Location | Ground Truth | Estimation | Error |

|---|---|---|---|

| 1 | 1000 mm | 991.61 mm | −8.39 mm |

| 2 | 1500 mm | 1514.92 mm | 14.92 mm |

| 3 | 2000 mm | 2011.18 mm | 11.18 mm |

| 4 | 2500 mm | 2440.14 mm | −59.86 mm |

| 5 | 3000 mm | 2844.51 mm | −155.49 mm |

| 6 | 3500 mm | 3340.40 mm | −159.60 mm |

| 7 | 4000 mm | 4240.71 mm | 240.71 mm |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, H.-Y.; Chung, Y.-C.; Wang, M.-L. Self-Localization of Mobile Robots Using a Single Catadioptric Camera with Line Feature Extraction. Sensors 2021, 21, 4719. https://doi.org/10.3390/s21144719

Lin H-Y, Chung Y-C, Wang M-L. Self-Localization of Mobile Robots Using a Single Catadioptric Camera with Line Feature Extraction. Sensors. 2021; 21(14):4719. https://doi.org/10.3390/s21144719

Chicago/Turabian StyleLin, Huei-Yung, Yuan-Chi Chung, and Ming-Liang Wang. 2021. "Self-Localization of Mobile Robots Using a Single Catadioptric Camera with Line Feature Extraction" Sensors 21, no. 14: 4719. https://doi.org/10.3390/s21144719