Abstract

Infrared sensing technology is increasingly used in building the power Internet of Things. However, cost constraints make it difficult to deploy high-precision infrared sensors at scale. We therefore propose a blind super-resolution method for infrared images of power equipment that improves the imaging quality of low-cost infrared sensors. Because estimating the blur kernel and performing non-blind super-resolution jointly tends to produce sub-optimal results, we divide blind super-resolution into two stages. First, we propose a blur kernel estimation method based on compressed sensing theory that accurately estimates the blur kernel from low-resolution images. Second, using the estimated kernel, we propose an adaptive-regularization non-blind super-resolution method that reconstructs high-quality, high-resolution infrared images. Experiments show that the proposed blind super-resolution method effectively reconstructs low-resolution infrared images of power equipment. The reconstructed images have richer details and better visual quality, providing a sounder basis for the infrared diagnosis of power systems.

1. Introduction

With the emergence of the Internet of Things, the era of the Internet of Everything is arriving. A key requirement for building the power Internet of Things is online monitoring, analysis, and evaluation of the operating status of power equipment. Among the available monitoring technologies, infrared monitoring is long-range, non-contact, accurate, and fast [1,2]. Installing infrared sensors widely and effectively is therefore one of the key issues in constructing the power Internet of Things. However, owing to limits on installation cost, data transmission, and storage capacity, online infrared sensors that can be deployed on a large scale cannot currently match the accuracy of mainstream infrared imagers. It is therefore necessary to process the infrared images collected by low-precision sensors with back-end algorithms that enhance their visual quality and enrich their information content. Super-resolution technology, which has developed rapidly in recent years, offers a new way to solve this problem.

Super-resolution (SR) aims to reconstruct a high-quality image from its degraded measurement [3]. SR is a typical ill-posed inverse problem and can generally be modelled as

$$y = (x \otimes k)\downarrow_s \tag{1}$$

where $y$ is the observed low-resolution image, $x$ is the high-resolution image, $k$ is a blur kernel, $\otimes$ denotes the convolution operator, and $\downarrow_s$ denotes the down-sampling operator with factor $s$. According to the number of input images, SR technology is divided into single-image super-resolution (SISR) and multi-frame image super-resolution (MISR). Because of data storage, transmission load, and other constraints, the power industry is not currently in a position to adopt MISR, so we focus on SISR.
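The degradation model in Equation (1) can be simulated in a few lines, which is also how synthetic test images are usually produced. The sketch below is a minimal illustration, not the authors' code: it assumes periodic (circular) boundaries so the convolution can be done with the FFT, a kernel centred on the origin, and decimation that keeps every s-th pixel. The function name `degrade` is hypothetical.

```python
import numpy as np


def degrade(x: np.ndarray, k: np.ndarray, s: int) -> np.ndarray:
    """Hypothetical helper: y = (x conv k) down-sampled by s, with circular boundaries."""
    kh, kw = k.shape
    # Embed the kernel in an image-sized array and move its centre to pixel (0, 0)
    k_full = np.zeros(x.shape, dtype=float)
    k_full[:kh, :kw] = k
    k_full = np.roll(k_full, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    # Circular convolution via the convolution theorem
    blurred = np.real(np.fft.ifft2(np.fft.fft2(x) * np.fft.fft2(k_full)))
    # Down-sampling operator: keep every s-th row and column
    return blurred[::s, ::s]


rng = np.random.default_rng(0)
hr = rng.random((64, 64))              # stand-in for a clear HR image
kernel = np.ones((5, 5)) / 25.0        # normalised 5x5 box blur (illustrative)
lr = degrade(hr, kernel, 2)
print(lr.shape)  # (32, 32)
```

Because the kernel sums to one, a constant image passes through unchanged except for its size, which is a quick sanity check for any implementation of Equation (1).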

SISR methods can be divided into three categories according to their principle. The first category is interpolation methods, which are simple and easy to implement but usually over-smooth the high-frequency details of the image, giving reconstructed images poor visual quality [4,5,6]. The second category is learning-based methods, which learn the correspondence between low-resolution (LR) and high-resolution (HR) image patches from a given training set [7,8,9,10]. Their effectiveness therefore depends heavily on the choice of training samples. Moreover, when the application conditions change, for example the magnification factor or the degradation, the model must be retrained, which usually incurs a high computational cost [8]. We therefore focus on the third category, reconstruction-based methods. These methods build a model from the image degradation process and achieve SR reconstruction either by combining prior information within a Bayesian framework or by introducing regularization into the inverse problem [11,12,13,14,15,16,17]. They do not depend on training samples, are flexible to apply, and reconstruct well, which makes them easy to deploy widely in the power grid.

SISR methods can also be divided into three categories according to the problem they address. The first category ignores the blurring that generally occurs when an LR image is formed: these methods treat the LR image as perfectly sharp and only increase its resolution [4,8,11,12]. However, Efrat et al. [18] showed that the blur kernel influences the SISR problem even more than the choice of SR model. The second category is non-blind SR, which does not estimate the blur kernel but focuses on reconstructing the HR image from the LR image when the blur kernel is known [13,14,15,16,17]. For example, Glasner et al. [13] exploited image self-similarity to reconstruct HR images. Šroubek et al. [14] showed that the degradation operator can be implemented in the frequency domain and designed a fast solver on this basis. Dong et al. [15] proposed the concept of sparse coding noise and restored images by suppressing it. The third category is blind SR, which solves blur kernel estimation and HR image reconstruction simultaneously. However, jointly recovering the blur kernel and the HR image is usually difficult and easily produces sub-optimal results [19], so there are relatively few studies on blind SR [20,21,22,23]. Shao et al. [20] proposed a non-parametric blind SR method based on an adaptive heavy-tailed prior. Qian et al. [21] proposed a blind SR method based on frame-by-frame non-parametric blur estimation. Kim et al. [22] proposed a single-image blind SR method with low computational complexity, and Michaeli et al. [23] proposed a blind SR method based on the self-similarity of image patches.

To improve the quality of SR reconstruction and meet the practical needs of the power industry, we propose a blind SR method. Because jointly recovering the blur kernel and the HR image may produce sub-optimal results, we first estimate the blur kernel and then reconstruct the LR infrared image with a non-blind SR method. For blur kernel estimation, we improve the basic compressed sensing SR model by introducing the image Extreme Channels Prior, yielding an LR image blur kernel estimation method based on compressed sensing theory. For the subsequent non-blind reconstruction, we propose an adaptive non-blind SR algorithm that adaptively controls the strength coefficient of the regularization term during reconstruction, suppressing ringing artifacts and improving the quality of the reconstructed image. Experimental results show that, through successive blur kernel estimation and non-blind reconstruction, the proposed blind SR method effectively reconstructs LR infrared images of power equipment. The reconstructed images have richer details and better visual quality, providing a sounder basis for the infrared diagnosis of power systems.

2. Blur Kernel Estimation Method

2.1. Basic SR Model of Compressed Sensing

Our blur kernel estimation model is an improvement on the basic compressed sensing SR model, which we briefly introduce first:

$$y = DH\Psi\alpha \tag{2}$$

where $y$ is the vectorized LR image; $D$ is the down-sampling matrix, generally generated according to the principle of cubic interpolation; $H$ is the image degradation matrix, the circulant (Toeplitz-structured) matrix generated from the blur kernel $k$; $\Psi$ is a sparse basis, which can be generated from the Fourier transform, the discrete cosine transform, or a wavelet transform, or can be an over-complete dictionary; and $\alpha$ is the sparse coefficient vector. The vectorized HR image is $x = \Psi\alpha$. SR reconstruction can be performed by solving the following objective function:

$$\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad y = DH\Psi\alpha \tag{3}$$

where $\|\alpha\|_0$ is the number of non-zero elements in $\alpha$. However, Equation (3) is an NP-hard problem. Donoho [24] showed that, under suitable sparsity conditions, an equivalent solution can be obtained by solving the corresponding L1 optimization problem:

$$\min_{\alpha} \|\alpha\|_1 \quad \text{s.t.} \quad y = DH\Psi\alpha \tag{4}$$

The sparsest $\alpha$ is obtained from Equation (4), and the SR result follows as $x = \Psi\alpha$. When the blur kernel $k$, and hence $H$, is also unknown, the optimization problem of Equation (4) becomes a blur kernel estimation problem:

$$\min_{\alpha,\,k} \|\alpha\|_1 \quad \text{s.t.} \quad y = DH(k)\Psi\alpha \tag{5}$$

Because the problem is underdetermined, $k$ cannot be estimated directly from Equation (5); prior information must be introduced to constrain the solution. Infrared images produced with different pseudo-color conversion methods differ markedly in color and brightness. We therefore adopt the image Extreme Channels Prior proposed in [25] as a constraint and introduce it into Equation (5) to improve the accuracy of blur kernel estimation.
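Equation (4) is often solved in its Lagrangian form, $\min_\alpha \tfrac{1}{2}\|y - A\alpha\|_2^2 + \lambda\|\alpha\|_1$ with $A = DH\Psi$. As a minimal sketch, assuming that relaxation and a small random matrix standing in for $DH\Psi$, the iterative shrinkage-thresholding algorithm (ISTA) below alternates a gradient step on the data term with soft thresholding. ISTA is a standard solver chosen here for illustration; it is not stated to be the solver used in this paper. The names `ista` and `objective` and the value of λ are hypothetical.

```python
import numpy as np


def ista(A: np.ndarray, y: np.ndarray, lam: float, n_iter: int = 500) -> np.ndarray:
    """ISTA for min_a 0.5 * ||y - A a||_2^2 + lam * ||a||_1."""
    # Lipschitz constant of the gradient: largest singular value of A, squared
    L = np.linalg.norm(A, 2) ** 2
    a = np.zeros(A.shape[1])
    for _ in range(n_iter):
        z = a - A.T @ (A @ a - y) / L                            # gradient step on the data term
        a = np.sign(z) * np.maximum(np.abs(z) - lam / L, 0.0)    # soft thresholding (L1 prox)
    return a


def objective(A: np.ndarray, y: np.ndarray, a: np.ndarray, lam: float) -> float:
    """Value of the Lagrangian objective being minimised."""
    return float(0.5 * np.sum((y - A @ a) ** 2) + lam * np.sum(np.abs(a)))


rng = np.random.default_rng(0)
A = rng.standard_normal((40, 100)) / np.sqrt(40)   # stands in for D H Psi
a_true = np.zeros(100)
a_true[[5, 30, 77]] = [1.0, -2.0, 1.5]             # sparse ground truth
y = A @ a_true
a_hat = ista(A, y, lam=0.01)
print(objective(A, y, a_hat, 0.01), np.count_nonzero(a_hat))
```

With a step of 1/L, each ISTA iteration cannot increase the objective, so the result always improves on the all-zero starting point.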

2.2. Extreme Channels Prior of Infrared Images of Power Equipment

In this section, we introduce the image Extreme Channels Prior. Several priors can be used to estimate an image blur kernel, such as the L0-regularized gradient prior, the dark channel prior, and the Extreme Channels Prior. We chose the Extreme Channels Prior for the following reasons. In infrared images of power equipment, the faulty heating parts and the edge textures of the equipment carry the most important information. Faulty heating parts are the focus of infrared diagnosis and usually appear as the brightest regions of the image, whereas edge textures, which are the main basis for image segmentation and target recognition, are often the darkest. The Extreme Channels Prior used in this article is computed from the local brightest and darkest pixel values of the infrared image before and after blurring. It therefore concentrates on local brightness extremes and uses them to drive blur kernel estimation, which improves the visual quality of the faulty heating parts and edge textures in the final reconstructed image. This makes the reconstructed infrared images of power equipment better suited to practical applications.

Note that this paper does not process the infrared image directly in RGB mode but converts it to YCbCr mode, where Y is the luminance component, Cb the blue-difference chrominance component, and Cr the red-difference chrominance component. Because the human eye is most sensitive to the Y component, subtle changes in the other two components cause very small visual differences; the following statistical analysis of the Extreme Channels Prior therefore uses the Y component. Specifically, the extreme channels comprise a bright channel and a dark channel, formed from the local maxima and local minima of the Y component, respectively. For an image $x$, the bright channel and dark channel are:

$$\mathcal{B}(x)(p) = \max_{q \in N(p)} x(q)$$

$$\mathcal{D}(x)(p) = \min_{q \in N(p)} x(q)$$

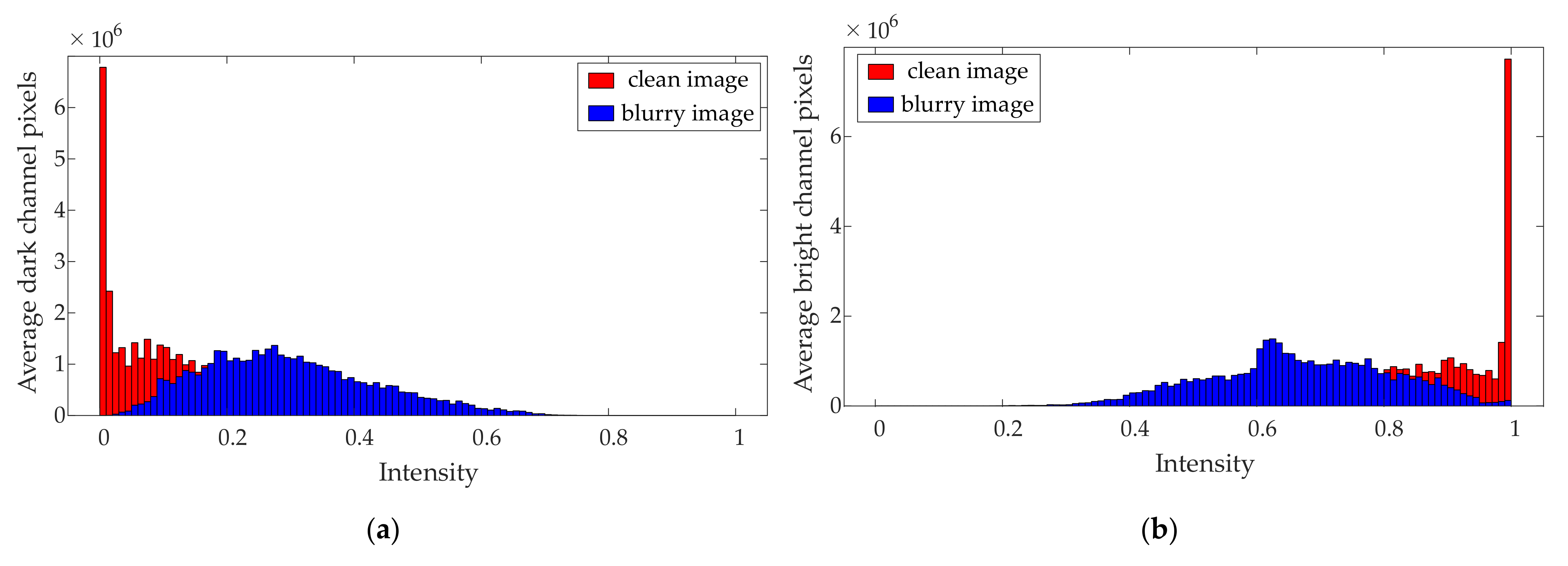

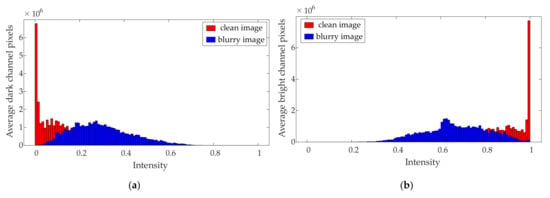

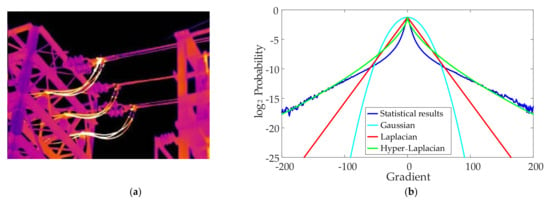

where $p$ and $q$ denote pixel locations, $N(p)$ is an image patch centered at $p$, and max and min find the local maximum and local minimum of the image, respectively. For a typical image with pixel brightness normalized to $[0, 1]$, most dark channel values lie near 0 and most bright channel values lie near 1. However, convolving a clear image with a blur kernel changes this extreme-value distribution: because convolution is a weighted sum of pixel values in a local neighborhood, it generally raises local minima and lowers local maxima. The mathematical basis for this has been proved in [25,26]. Figure 1 shows statistics of the local maximum and local minimum distributions of 100 infrared images of power equipment under clear and blurred conditions, confirming that the same rule holds for infrared images of power equipment. Introducing the Extreme Channels Prior therefore effectively distinguishes clear infrared images from blurred ones, drives the intermediate latent image toward the clear image, and thereby ensures accurate blur kernel estimation.
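The bright and dark channels can be computed with a sliding-window maximum and minimum on the luminance channel. The sketch below is illustrative only: it assumes ITU-R BT.601 luma weights for the RGB-to-Y conversion, replicate padding at the borders, and an odd patch size (35 × 35 is the dark channel window reported in Section 5; a smaller window is used in the usage lines for speed). All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def rgb_to_y(rgb: np.ndarray) -> np.ndarray:
    """Luminance with ITU-R BT.601 weights (an assumed choice of YCbCr variant)."""
    return rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114


def _local_extreme(lum: np.ndarray, patch: int, reducer) -> np.ndarray:
    r = patch // 2
    padded = np.pad(lum, r, mode="edge")                  # replicate borders
    windows = sliding_window_view(padded, (patch, patch))  # shape (H, W, patch, patch)
    return reducer(windows, axis=(-2, -1))


def dark_channel(lum: np.ndarray, patch: int = 35) -> np.ndarray:
    """Minimum of the luminance over the patch N(p) centred at each pixel p."""
    return _local_extreme(lum, patch, np.min)


def bright_channel(lum: np.ndarray, patch: int = 35) -> np.ndarray:
    """Maximum of the luminance over the patch N(p) centred at each pixel p."""
    return _local_extreme(lum, patch, np.max)


rng = np.random.default_rng(1)
y_lum = rgb_to_y(rng.random((64, 64, 3)))   # normalised luminance in [0, 1]
d, b = dark_channel(y_lum, 5), bright_channel(y_lum, 5)
print(d.mean(), b.mean())
```

Because max and min are related by $1 - \max(x) = \min(1 - x)$, the bright channel of an image equals one minus the dark channel of its complement, so a single minimum-filter routine is enough in practice.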

Figure 1.

Extreme channels of infrared images before and after blurring: (a) dark channel of clear and blurred images; (b) bright channel of clear and blurred images.

2.3. Blur Kernel Estimation Model

In this section, we propose a blur kernel estimation model based on the maximum a posteriori (MAP) framework and the basic compressed sensing SR method. The MAP framework is:

$$(\hat{x}, \hat{k}) = \arg\max_{x,\,k}\, p(x, k \mid y) = \arg\max_{x,\,k}\, p(y \mid x, k)\, p(x)\, p(k)$$

where $p(x)$ and $p(k)$ are the priors of the image $x$ and the blur kernel $k$, respectively, and $p(y \mid x, k)$ is the likelihood of the observed LR image $y$. According to the statistics and analysis in Section 2.2, we use an L0-norm regularization term based on the Extreme Channels Prior as the prior of the image to be reconstructed:

Combined with the SR model of compressed sensing, the objective function of blur kernel estimation can be constructed:

where the weight coefficients balance the contributions of the individual terms. The first term is the data fidelity term, which uses the L2 norm of the difference between the observed LR image and its reconstruction from the sparse coefficients to ensure that the two correspond. The second term preserves significant image gradients and removes small ones, which improves the accuracy of blur kernel estimation. The third term constrains the sparseness of the blur kernel. The fourth term preserves the sparsity of the local minima of the luminance component of the infrared image. The last term ensures that the coefficients obtained from the sparse transformation of the image are sufficiently sparse and, together with the first term, achieves SR. For computational efficiency, and unlike the original compressed sensing model, we do not stretch the image into a column vector before the computation: if the image is not divided into blocks but vectorized directly, the down-sampling matrix becomes very large and the computation slows down considerably. We therefore modify the original model slightly by constructing a row sampling matrix and a column sampling matrix according to the principle of cubic interpolation, so that down-sampling is performed twice, once along the rows and once along the columns. The size of each down-sampling matrix and the positions of its non-zero elements are determined by the down-sampling rate, and the element values are determined by the cubic interpolation down-sampling function. The method of solving Equation (10) is introduced in Section 4.
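The separable down-sampling described above can be sketched with two small matrices, so that an image $X$ is down-sampled as $D_r X D_c^{T}$ without vectorizing it. The sketch assumes the Keys cubic kernel with $a = -0.5$, stretched by the down-sampling factor for anti-aliasing (similar in spirit to MATLAB's `imresize`, but not guaranteed to match it or the authors' matrices), and replicate boundaries. The function names are hypothetical.

```python
import numpy as np


def keys_cubic(t: np.ndarray, a: float = -0.5) -> np.ndarray:
    """Keys cubic convolution kernel evaluated at offsets t."""
    t = np.abs(t)
    out = np.zeros_like(t)
    m1 = t <= 1
    m2 = (t > 1) & (t < 2)
    out[m1] = (a + 2) * t[m1] ** 3 - (a + 3) * t[m1] ** 2 + 1
    out[m2] = a * t[m2] ** 3 - 5 * a * t[m2] ** 2 + 8 * a * t[m2] - 4 * a
    return out


def cubic_downsampling_matrix(n: int, s: int) -> np.ndarray:
    """(n // s) x n matrix that down-samples a length-n signal by factor s."""
    m = n // s
    D = np.zeros((m, n))
    for i in range(m):
        c = (i + 0.5) * s - 0.5                          # centre of output sample i in input coordinates
        taps = np.arange(int(np.floor(c - 2 * s)), int(np.ceil(c + 2 * s)) + 1)
        w = keys_cubic((c - taps) / s)                   # kernel stretched by s (anti-aliasing)
        np.add.at(D[i], np.clip(taps, 0, n - 1), w)      # replicate boundary samples
        D[i] /= D[i].sum()                               # each row sums to one
    return D


X = np.random.default_rng(2).random((128, 128))
Dr = cubic_downsampling_matrix(128, 2)   # acts on rows
Dc = cubic_downsampling_matrix(128, 2)   # acts on columns
Y = Dr @ X @ Dc.T
print(Y.shape)  # (64, 64)
```

For a 128 × 128 image, a single matrix acting on the vectorized image would be 4096 × 16384, whereas the two separable factors are only 64 × 128 each, which is the efficiency argument made above.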

3. Non-Blind SR Reconstruction Model

3.1. Non-Blind SR Objective Function

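After $k$ has been estimated, the basic non-blind SR model is:

$$\min_{\alpha} \|\alpha\|_1 \quad \text{s.t.} \quad y = DH\Psi\alpha \tag{11}$$

where, as in Section 2.1, $D$ is the down-sampling matrix, $H$ is the degradation matrix, now constructed from the estimated kernel $k$, $\Psi$ is the sparse basis, and $\alpha$ is the sparse coefficient vector. Apart from the sparsity constraint, Equation (11) contains no prior information. Because the problem is ill-posed, an image reconstructed directly from Equation (11) can hardly achieve effective deconvolution and deblurring. Other prior information must therefore be introduced as constraints, based on the statistics of natural images.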

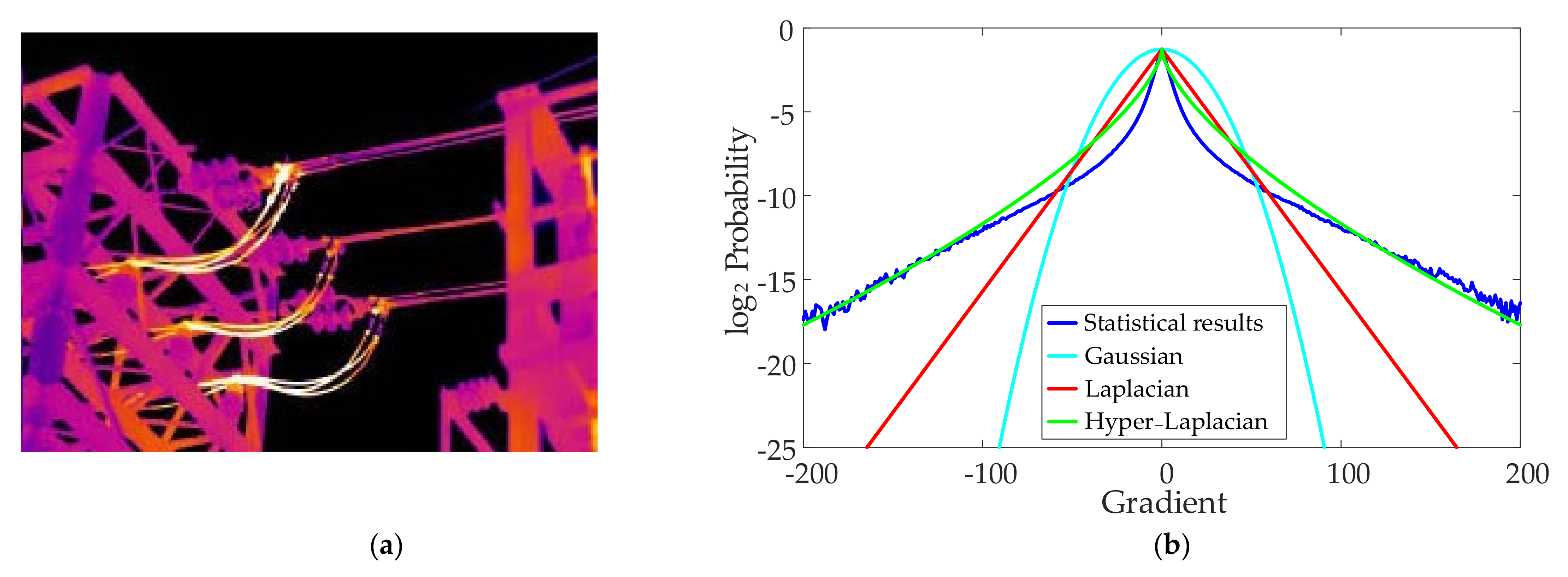

The most effective deblurring methods currently impose, as a prior constraint, that the statistics of a set of filter (edge) responses match the edge statistics of clear images. The Gaussian, Laplacian, and Hyper-Laplacian distributions are commonly used to fit the image gradient distribution. If the edge distribution is assumed to be Gaussian, the deblurring problem has an analytical solution in the frequency domain, and the image can be restored efficiently with the fast Fourier transform. However, clear infrared images usually have non-Gaussian edge statistics, as shown in Figure 2, so fitting the image gradient with a Gaussian distribution leads to poor visual quality in the reconstruction. Another common approach assumes that image edges follow a Laplacian distribution; however, because the edge distribution of images is heavy-tailed, the Laplacian fit is also poor. Most current methods therefore adopt the Hyper-Laplacian distribution, which better fits the heavy tail of the image edge distribution, as the prior for deconvolution and deblurring. Figure 2 shows the probability density curve of the gradients of an infrared image and the fits of the different distributions.

Figure 2.

Fitting results of infrared image gradient probability density curve and different distributions: (a) typical infrared image; (b) fitting effects of different distributions.
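The comparison in Figure 2 can be made quantitative by fitting the exponent of a generalized Gaussian density $p(z) = \frac{\rho}{2\sigma\Gamma(1/\rho)}\exp\left(-(|z|/\sigma)^{\rho}\right)$ to gradient samples by maximum likelihood, where $\rho = 2$ is Gaussian, $\rho = 1$ Laplacian, and $\rho < 1$ Hyper-Laplacian. This is an illustrative sketch, not the procedure used to produce Figure 2: the scale $\sigma$ is profiled out in closed form and the exponent is chosen by a grid search. The function names and the grid are hypothetical.

```python
import math

import numpy as np


def profile_loglik(z: np.ndarray, rho: float) -> float:
    """Generalised-Gaussian log-likelihood with the scale set to its MLE for this rho."""
    z = np.abs(np.asarray(z, dtype=float))
    n = z.size
    # MLE of the scale for fixed rho: sigma**rho = (rho / n) * sum(|z|**rho)
    sigma = (rho / n * np.sum(z ** rho)) ** (1.0 / rho)
    # At the MLE the exponential term sums to n / rho
    return n * (math.log(rho) - math.log(2.0) - math.log(sigma) - math.lgamma(1.0 / rho)) - n / rho


def fit_exponent(z: np.ndarray, grid=None) -> float:
    """Grid-search the exponent rho that maximises the profile likelihood."""
    if grid is None:
        grid = np.linspace(0.3, 2.5, 221)
    scores = [profile_loglik(z, r) for r in grid]
    return float(grid[int(np.argmax(scores))])


rng = np.random.default_rng(3)
print(fit_exponent(rng.standard_normal(50_000)))   # Gaussian samples: close to 2
print(fit_exponent(rng.laplace(size=50_000)))      # Laplacian samples: close to 1
```

Applied to the horizontal or vertical differences of an infrared luminance image (for example `np.diff(lum, axis=1).ravel()`), a fitted exponent below 1 would indicate the heavy-tailed behaviour that motivates the Hyper-Laplacian prior.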

It follows from the above discussion that a prior constraint on the image edge distribution must be introduced into Equation (11). Whichever distribution is used to fit the image edges, it can be written in the form $p(z) \propto e^{-|z|^{\rho}}$ with exponent $\rho > 0$: $0 < \rho < 1$ gives the Hyper-Laplacian distribution, $\rho = 1$ the Laplacian distribution, and $\rho = 2$ the Gaussian distribution. According to Bayes' theorem, the maximum a posteriori solution for the image is:

where $\lambda$ is the strength coefficient of the edge-distribution prior and $f_j$ is the derivative filter of order $j$. The non-blind SR objective function becomes:

3.2. Adaptive Regularization Intensity Adjustment Method

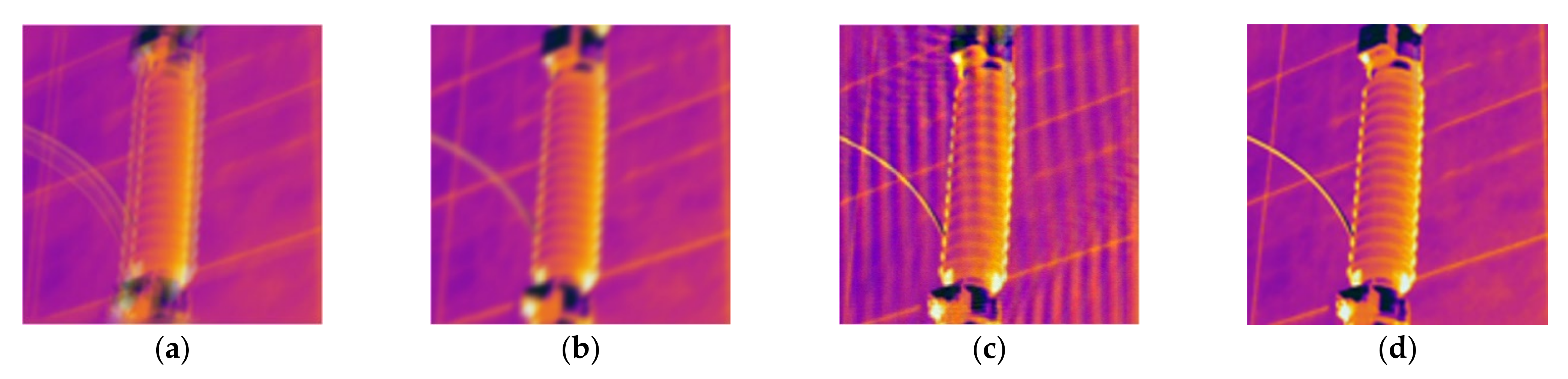

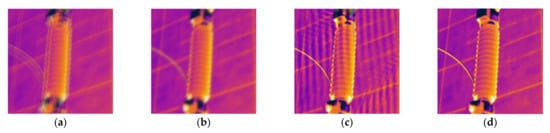

The non-blind SR objective function is given in Section 3.1 as Equation (13). However, the model needs further improvement: the strength coefficient of the edge prior should account for differences in the semantic content of pixels within the image, rather than a single value constraining the entire image. With a single value, strong regularization blurs the edge textures of the reconstructed image, whereas weak regularization produces ringing artifacts. Figure 3 shows the reconstruction results of infrared images processed with different regularization strengths and with our proposed adaptive method.

Figure 3.

Infrared image reconstruction results under different regularization intensity: (a) LR image; (b) strongly regularized reconstruction results; (c) weak regularization reconstruction results; (d) results of the proposed method.

As Figure 3b shows, when strong regularization is used to constrain the image edge prior, that is, when the strength coefficient is large, the reconstruction contains no ringing artifacts, but the edge textures of the device are blurred, the contrast is low, and the visual quality is poor. As Figure 3c shows, with weak regularization, that is, a small strength coefficient, the reconstructed image is sharper and the device edge textures have high contrast, but the smooth regions show obvious ringing. In contrast, our method extracts the significant edge regions of the reconstructed image with a double-prior, two-pass estimation, distinguishes edge regions from smooth regions using the resulting label image, and applies a different regularization strength to each. This yields a better reconstruction: as Figure 3d shows, the edge textures are sharp and free of ringing.

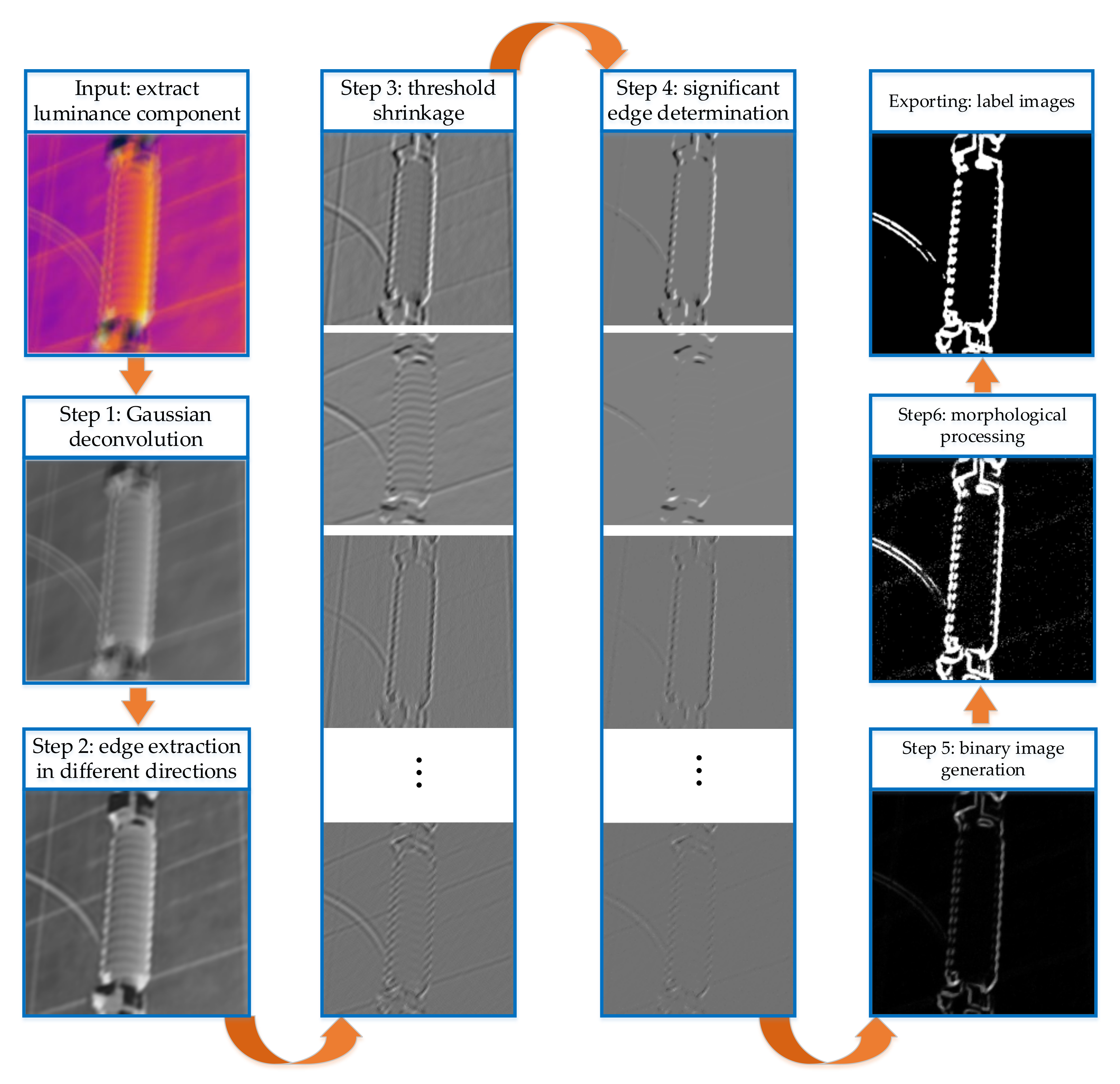

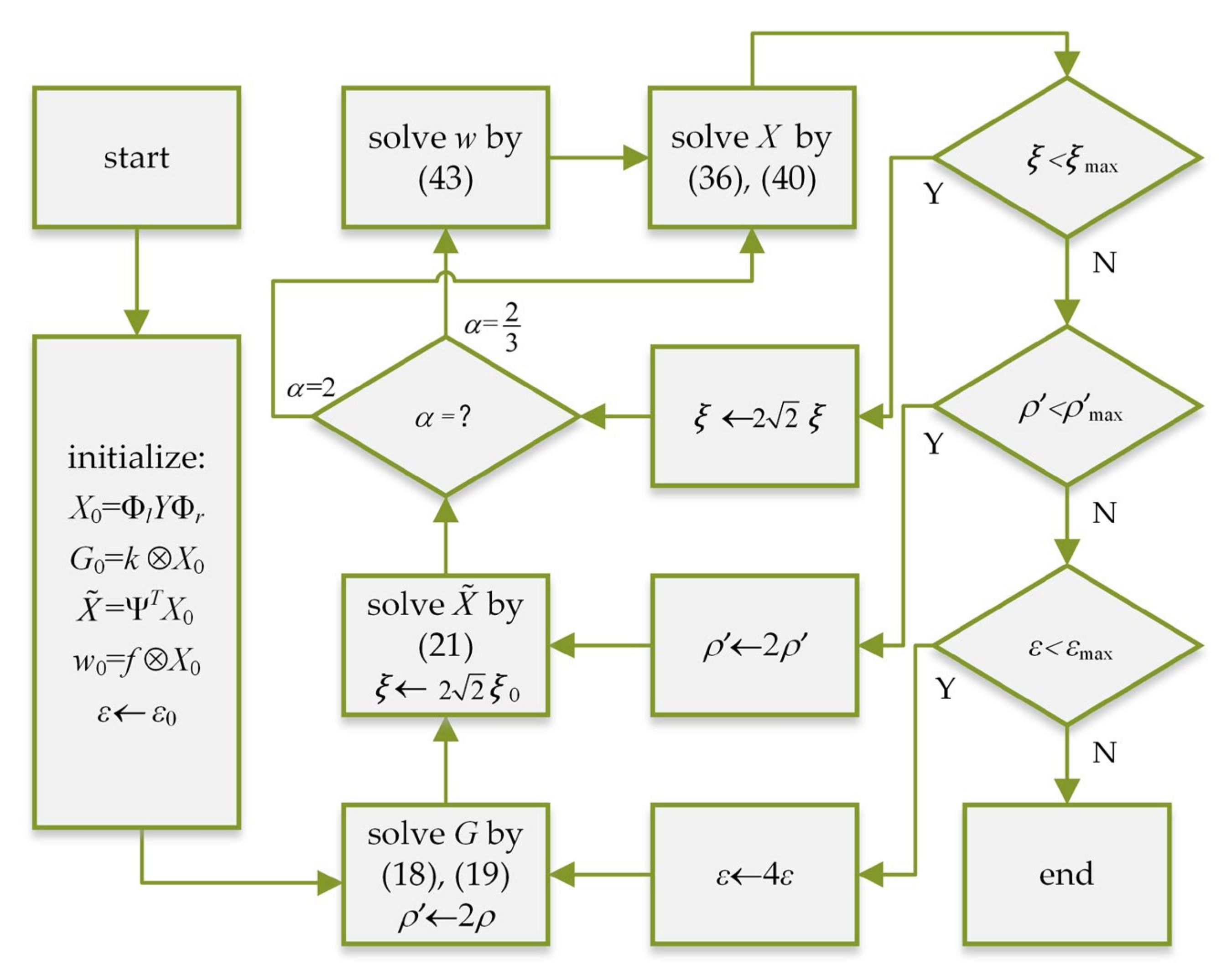

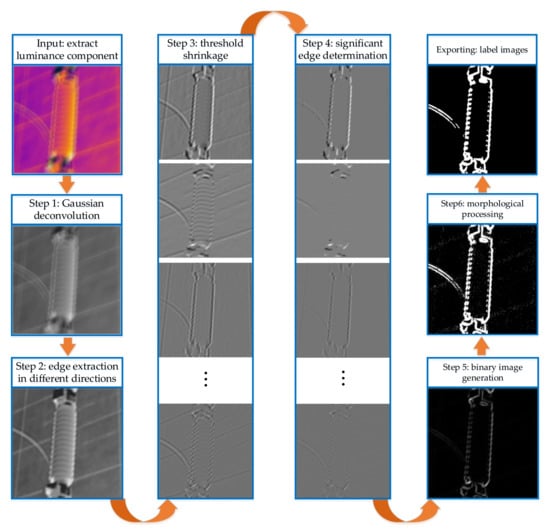

Figure 3 demonstrates the need to control the regularization strength adaptively during reconstruction. The regularization-strength adjustment method based on double-prior, two-pass estimation that we adopt is described below; its flowchart is shown in Figure 4.

Figure 4.

Flow chart of adaptive regularization intensity adjustment method.

Our adaptive method reconstructs the image twice with the model of Equation (13), using a different prior each time. The first reconstruction uses a Gaussian prior, which is easy to solve; the significant edges of this reconstruction are extracted to generate a label image. The second reconstruction uses the Hyper-Laplacian prior and adjusts the regularization strength of each pixel adaptively according to the label image. The steps in Figure 4 are as follows:

Step 1: Set the prior exponent in Equation (13) to 2 (Gaussian prior) and solve it to obtain a preliminary reconstructed image.

Step 2: Filter the preliminary image with the derivative filter bank to obtain edge images in all directions.

Step 3: Apply threshold shrinkage to each edge image as follows:

where the shrinkage threshold is set through a proportional coefficient; the result is a shrunk edge image for each direction.

Step 4: Combine the shrunk edge images of all directions into the final significant-edge image.

Step 5: Set the elements of the significant-edge image that are smaller than a binarization threshold to 0 and the remaining elements to 1, producing a binary image.

Step 6: Apply mathematical morphology to the binary image: perform one opening and one closing operation to remove noise, which gives the final label image.

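Once the label image is obtained, the strength coefficient of the regularization term can be controlled adaptively, pixel by pixel, by:

The Hyper-Laplacian exponent is then set in Equation (13), and the adaptive strength coefficients are substituted into Equation (13) to complete the SR reconstruction. The method of solving Equation (13) for the different exponent values is introduced in Section 4.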
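Steps 2 to 6 and the pixel-wise weighting can be sketched as follows. The shrinkage rule, the way directions are merged, the thresholds, and the two weight values are illustrative assumptions, not the paper's Equations (14) and (15): the sketch uses first-order horizontal and vertical differences as the filter bank, soft shrinkage with a threshold proportional to the largest response, the gradient magnitude to merge directions, and a 3 × 3 square structuring element. Edge pixels receive the weaker regularization and smooth pixels the stronger one, following the discussion of Figure 3. All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _morph(b: np.ndarray, size: int, op) -> np.ndarray:
    """Binary erosion (op=np.min) or dilation (op=np.max) with a square element."""
    r = size // 2
    return op(sliding_window_view(np.pad(b, r, mode="edge"), (size, size)), axis=(-2, -1))


def label_image(x0: np.ndarray, c: float = 0.2, t: float = 0.05, size: int = 3) -> np.ndarray:
    # Step 2: assumed filter bank of horizontal and vertical first differences
    gh = np.diff(x0, axis=1, append=x0[:, -1:])
    gv = np.diff(x0, axis=0, append=x0[-1:, :])
    shrunk = []
    for e in (gh, gv):
        tau = c * np.abs(e).max()                                      # Step 3: threshold proportional to c
        shrunk.append(np.sign(e) * np.maximum(np.abs(e) - tau, 0.0))
    edges = np.sqrt(shrunk[0] ** 2 + shrunk[1] ** 2)                   # Step 4: merge directions
    b = edges >= t                                                     # Step 5: binarise
    b = _morph(_morph(b, size, np.min), size, np.max)                  # Step 6: opening
    b = _morph(_morph(b, size, np.max), size, np.min)                  #         then closing
    return b.astype(np.uint8)


def regularization_map(label: np.ndarray, lam_edge: float = 1e-3, lam_smooth: float = 1e-2) -> np.ndarray:
    """Weaker regularization on edges, stronger in smooth regions (assumed values)."""
    return np.where(label == 1, lam_edge, lam_smooth)


cols = np.arange(32)
x0 = np.tile(np.clip((cols - 12) / 6.0, 0.0, 1.0), (32, 1))   # soft vertical edge
label = label_image(x0)
lam_map = regularization_map(label)
```

Note that an opening with a 3 × 3 element removes edge structures narrower than three pixels, so the structuring-element size interacts with how sharp the preliminary Gaussian-prior reconstruction is.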

4. Model Solution

In Sections 2 and 3, we established the blur kernel estimation model and the non-blind SR reconstruction model, Equations (10) and (13), respectively. This section introduces how to solve them. To facilitate the solution, we use the half-quadratic splitting method to introduce auxiliary variables into each model and then solve for the unknown variables by alternating minimization. After introducing auxiliary variables, the blur kernel estimation model becomes:

where the two penalty parameters weight the coupling between each auxiliary variable and the quantity it replaces, and $\nabla_h$ and $\nabla_v$ are the row and column difference operators, respectively. After introducing auxiliary variables, Equation (13) becomes:

Equations (16) and (17) share two variables whose sub-problems have the same objective function, so they are solved in the same way in both models. The first shared variable is obtained from:

Equation (18) is a typical least squares problem and can be solved by gradient descent. Its derivative with respect to the unknown variable is:

The number of iterations and the step size are determined by the one-step steepest descent scheme introduced in [27]. The second shared variable is obtained from:

which can be solved by soft-threshold shrinkage:

Besides the shared variables, Equations (16) and (17) each contain their own variables, whose solutions are described separately below. The two auxiliary variables in Equation (16) are both constrained by the L0 norm and can be solved by hard-threshold shrinkage. We obtain them from:

Their solutions are:

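The two shrinkage operators used above have simple element-wise closed forms. The sketch below is a minimal illustration assuming the sub-problems take the standard forms $\min_a \tau|a| + \tfrac{1}{2}(a - v)^2$ (soft threshold, L1) and $\min_u \lambda\|u\|_0 + \beta\|u - v\|_2^2$ (hard threshold, L0); the paper's weights may be scaled differently, and the function names are hypothetical.

```python
import numpy as np


def soft_threshold(v: np.ndarray, tau: float) -> np.ndarray:
    """Element-wise argmin_a tau*|a| + 0.5*(a - v)**2 (L1 sub-problem)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def hard_threshold(v: np.ndarray, lam: float, beta: float) -> np.ndarray:
    """Element-wise argmin_u lam*[u != 0] + beta*(u - v)**2 (L0 sub-problem).

    Keeping u = v costs lam and setting u = 0 costs beta*v**2,
    so v is kept exactly when v**2 >= lam / beta.
    """
    return np.where(v ** 2 >= lam / beta, v, 0.0)


print(soft_threshold(np.array([3.0, -0.5, -2.0]), 1.0))        # shrinks toward zero by 1
print(hard_threshold(np.array([0.5, -2.0, 1.0]), 1.0, 1.0))     # keeps entries with v**2 >= 1
```

The soft threshold shrinks every surviving value, whereas the hard threshold leaves surviving values untouched, which is why the L0 terms preserve strong gradients and dark-channel values without attenuating them.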
The remaining two variables in Equation (16) are both constrained by the L2 norm and can be solved with the fast Fourier transform. We first solve for the intermediate latent image by:

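To keep the bright channel term consistent with the dark channel term and so simplify the solution, we use the property of the max and min functions that $1 - \max_{q \in N(p)} x(q) = \min_{q \in N(p)} \bigl(1 - x(q)\bigr)$, so that the bright channel term can be rewritten as a dark channel term of $1 - x$. In addition, because the dark channel (local minimum) operation is non-linear, we introduce an equivalent linear operator $M$ for it. $M$ is essentially a mapping matrix, constructed as follows: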

The matrix $M$ transfers the minimum value of the image patch centered on pixel $p$ (i.e., the value of the pixel $q$ at which that minimum occurs) to pixel $p$. Its transpose $M^{T}$ performs the reverse rearrangement: the value at position $p$ is written back to position $q$. Equation (28) can therefore be expressed as:

The solution of (30) can be obtained by FFT:

In Equation (31), $\mathcal{F}(\cdot)$ and $\mathcal{F}^{-1}(\cdot)$ denote the fast Fourier transform and its inverse, $\overline{\,\cdot\,}$ denotes the complex conjugate, $\circ$ denotes element-wise multiplication, and the division is element-wise. Note that $M$ and $M^{T}$, although linear operators, are never formed as matrices or applied by matrix multiplication; instead, a lookup table is built according to their meaning. For example, the product of $M$ and an image is not computed explicitly: because this product equals the dark channel of the image, each pixel simply takes the value at the minimum position recorded in the lookup table. This avoids generating and multiplying large matrices and significantly speeds up the algorithm.
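The lookup-table implementation of $M$ and $M^{T}$ can be sketched as follows. For each pixel $p$ we record the flat index of the pixel $q$ holding the minimum of its patch; applying $M$ is then a gather and applying $M^{T}$ a scatter-add, so no large matrix is ever formed. This is an illustrative sketch assuming replicate padding and ties broken by the first minimum. Because several pixels $p$ can share the same $q$, the transpose accumulates their values, which is what makes it the exact adjoint of $M$. The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel_index(img: np.ndarray, patch: int) -> np.ndarray:
    """For every pixel p, the flat index of the minimum pixel q in the patch N(p)."""
    h, w = img.shape
    r = patch // 2
    idx = np.arange(h * w).reshape(h, w)
    vals = sliding_window_view(np.pad(img, r, mode="edge"), (patch, patch)).reshape(h, w, -1)
    inds = sliding_window_view(np.pad(idx, r, mode="edge"), (patch, patch)).reshape(h, w, -1)
    arg = np.argmin(vals, axis=-1)                       # position of the minimum inside each window
    return np.take_along_axis(inds, arg[..., None], axis=-1)[..., 0].ravel()


def apply_M(x: np.ndarray, lut: np.ndarray) -> np.ndarray:
    """M x: gather the recorded minimum pixel for every p (equals the dark channel)."""
    return x.ravel()[lut]


def apply_MT(y: np.ndarray, lut: np.ndarray, size: int) -> np.ndarray:
    """M^T y: scatter each value back to its source pixel q, summing collisions."""
    return np.bincount(lut, weights=y.ravel(), minlength=size)


rng = np.random.default_rng(4)
img = rng.random((20, 24))
lut = dark_channel_index(img, 5)     # built once from the current latent image
mx = apply_M(img, lut)
```

In an alternating scheme, the table would be rebuilt from the current latent image at each outer iteration, so $M$ is linear only within one iteration.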

We estimate the blur kernel by:

For the blur kernel sub-problem, estimating the kernel directly from the intermediate latent image is not accurate [28]; the gradient images are therefore used instead. The kernel is obtained by solving:

The solution of (33) can be obtained by FFT:

Because a blur kernel must satisfy $k \ge 0$ and $\sum k = 1$, after each iteration of the kernel sub-problem we set the negative elements of $k$ to zero, and we normalize $k$ at the end. This completes the solution of all variables in blur kernel estimation; Algorithm 1 summarizes the main steps. As suggested by [25,26,29], we gradually decrease the regularization weight so that more information becomes available for kernel estimation.
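A closed-form kernel update of this kind can be sketched in the gradient domain. We assume here the common quadratic form $\min_k \sum_{d \in \{h,v\}} \|\nabla_d x \otimes k - \nabla_d y\|_2^2 + \gamma\|k\|_2^2$ with circular boundaries and a latent image and observation of the same size, which ignores the down-sampling in the paper's model; the FFT solution is followed by the projection onto non-negative, unit-sum kernels described above. The kernel is stored at the top-left corner of the image grid (no centring) to keep indexing simple. Function names and the value of γ are illustrative.

```python
import numpy as np


def grad_h(img: np.ndarray) -> np.ndarray:
    return img - np.roll(img, 1, axis=1)   # circular row-direction difference


def grad_v(img: np.ndarray) -> np.ndarray:
    return img - np.roll(img, 1, axis=0)   # circular column-direction difference


def estimate_kernel(latent: np.ndarray, blurred: np.ndarray, ksize: int, gamma: float = 1e-6) -> np.ndarray:
    F = np.fft.fft2
    Lh, Lv = F(grad_h(latent)), F(grad_v(latent))
    Bh, Bv = F(grad_h(blurred)), F(grad_v(blurred))
    # Normal equations of the quadratic problem, diagonalised by the FFT
    num = np.conj(Lh) * Bh + np.conj(Lv) * Bv
    den = np.abs(Lh) ** 2 + np.abs(Lv) ** 2 + gamma
    k_full = np.real(np.fft.ifft2(num / den))
    k = np.maximum(k_full[:ksize, :ksize], 0.0)   # crop support and enforce k >= 0
    return k / k.sum()                            # enforce sum(k) == 1


rng = np.random.default_rng(5)
latent = rng.random((64, 64))
k_true = np.array([[0.05, 0.1, 0.05], [0.1, 0.4, 0.1], [0.05, 0.1, 0.05]])
k_pad = np.zeros((64, 64))
k_pad[:3, :3] = k_true
blurred = np.real(np.fft.ifft2(np.fft.fft2(latent) * np.fft.fft2(k_pad)))
k_est = estimate_kernel(latent, blurred, 3)
```

Gradients carry no DC information, so the raw FFT estimate has zero mean; clipping and renormalizing over the kernel support restores a valid kernel, which is one practical reason for the projection step.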

| Algorithm 1: Blur Kernel Estimation Algorithm |

| Input: Blurred image generate the initial value of each variable do repeat solve for using the gradient descent method, . repeat solve for using (26), solve for using (27), . repeat solve for using (21), . repeat solve for using (25),solve for using (31), . until . until . and . until solve for using (34). . end for Output: blur kernel. |

Finally, only the solution for the image in Equation (17) remains; it depends on the exponent of the edge prior. For the Gaussian prior (exponent 2), we solve for the image by:

The initial reconstruction with the Gaussian prior serves only to extract the significant edges of the image, so its regularization weight should be set to a large value to suppress ringing artifacts. The solution of Equation (35) can be obtained by FFT:

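For the Gaussian prior, the image sub-problem is quadratic and has a closed-form FFT solution. The sketch below assumes pure deconvolution on the HR grid (down-sampling is omitted), circular boundaries, first-order horizontal and vertical difference filters, and the objective $\|k \otimes x - y\|_2^2 + \lambda(\|\nabla_h x\|_2^2 + \|\nabla_v x\|_2^2)$; it illustrates the structure of such a solution rather than reproducing the paper's Equation (36). The function names are hypothetical.

```python
import numpy as np


def psf2otf(k: np.ndarray, shape) -> np.ndarray:
    """Zero-pad k to `shape`, roll its centre to the origin, and take the 2-D FFT."""
    pad = np.zeros(shape)
    pad[:k.shape[0], :k.shape[1]] = k
    pad = np.roll(pad, (-(k.shape[0] // 2), -(k.shape[1] // 2)), axis=(0, 1))
    return np.fft.fft2(pad)


def deconv_gaussian_prior(y: np.ndarray, k: np.ndarray, lam: float) -> np.ndarray:
    K = psf2otf(k, y.shape)
    Gh = psf2otf(np.array([[1.0, -1.0]]), y.shape)     # row-direction difference filter
    Gv = psf2otf(np.array([[1.0], [-1.0]]), y.shape)   # column-direction difference filter
    num = np.conj(K) * np.fft.fft2(y)
    den = np.abs(K) ** 2 + lam * (np.abs(Gh) ** 2 + np.abs(Gv) ** 2)
    return np.real(np.fft.ifft2(num / den))


def grad_energy(img: np.ndarray) -> float:
    """Circular gradient energy, used to compare smoothness of solutions."""
    return float(np.sum((img - np.roll(img, 1, 0)) ** 2) + np.sum((img - np.roll(img, 1, 1)) ** 2))


rng = np.random.default_rng(6)
x_true = rng.random((64, 64))
k = np.array([[0.0, 0.1, 0.0], [0.1, 0.6, 0.1], [0.0, 0.1, 0.0]])
y_blur = np.real(np.fft.ifft2(np.fft.fft2(x_true) * psf2otf(k, x_true.shape)))
x_strong = deconv_gaussian_prior(y_blur, k, 1.0)    # heavily regularised
x_weak = deconv_gaussian_prior(y_blur, k, 1e-3)     # lightly regularised
```

A larger weight always yields a smoother solution in this quadratic model, which is consistent with the choice of a large weight for the edge-extraction pass.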
For the Hyper-Laplacian exponent, the non-blind SR model uses adaptive regularization, and we solve for the image by:

where the summations run over all pixels in the row and column directions of the image, and the pixel-wise strength coefficients are obtained from Equation (15). To facilitate the solution, an auxiliary variable is introduced by half-quadratic splitting, so that Equation (37) can be expressed as:

Equation (38) splits into an image sub-problem and an auxiliary-variable sub-problem, which are solved separately. The image sub-problem is:

It is solved in the same way as Equation (35); the image is obtained by FFT:

After updating the image, we solve the auxiliary-variable sub-problem. Using a shorthand for the known quantity it depends on, the objective function can be abbreviated as:

Setting the derivative of Equation (41) to zero gives:

We further transform Equation (42) into:

Let $r$ denote the root of Equation (43). According to [30], the solution is either $r$ or 0, depending on whether the input value lies within a certain range. Because this solution depends only on the ratio of the regularization and penalty parameters and on the value of the input variable, it can be computed with a lookup table (LUT). The penalty parameter takes integer powers of a fixed base between 1 and 256, the exponent takes the Hyper-Laplacian values used, and the input variable takes a grid of values over its range; solving Equation (43) for every combination yields an offline lookup table. The LUT gives solutions with accuracy close to that of the analytical method at a higher speed [30].
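The offline lookup table can be sketched by solving the scalar problem $\min_w |w|^{a} + \tfrac{\beta}{2}(w - v)^2$ numerically on a grid of $v$ values for fixed $\beta$ and $a$, and then interpolating. This brute-force version is only an illustration under that assumed normalization (the paper's objective may scale the terms differently); [30] instead uses analytic roots for specific exponents. For $a = 1$ the exact answer is soft thresholding with threshold $1/\beta$, which gives a convenient check. Names, grid sizes, and ranges are hypothetical.

```python
import numpy as np


def solve_w(v: float, beta: float, a: float, n_grid: int = 2001) -> float:
    """Brute-force argmin_w |w|**a + beta/2 * (w - v)**2; the minimiser lies between 0 and v."""
    w = np.linspace(0.0, v, n_grid)
    cost = np.abs(w) ** a + 0.5 * beta * (w - v) ** 2
    return float(w[np.argmin(cost)])


def build_lut(beta: float, a: float, v_max: float = 1.0, n_v: int = 401):
    """Tabulate the solution over a grid of input values (done once, offline)."""
    v = np.linspace(-v_max, v_max, n_v)
    w = np.array([solve_w(vi, beta, a) for vi in v])
    return v, w


def lookup(values: np.ndarray, lut) -> np.ndarray:
    """Linear interpolation in the table replaces solving the equation per pixel."""
    v, w = lut
    return np.interp(values, v, w)


lut_l1 = build_lut(beta=4.0, a=1.0)
print(lookup(np.array([0.8, -0.1, -0.6]), lut_l1))   # approximately [0.55, 0.0, -0.35]
lut_hl = build_lut(beta=4.0, a=2.0 / 3.0)            # a Hyper-Laplacian exponent
```

Building one table per (β, a) pair turns the per-pixel non-convex sub-problem into a single vectorized interpolation.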

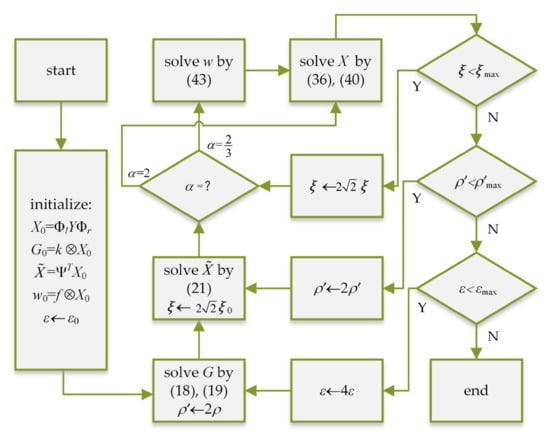

We have now given the solution of every unknown variable in the objective functions for blur kernel estimation and non-blind SR. The non-blind SR reconstruction process for the two prior exponents is shown in Figure 5.

Figure 5.

Non-blind SR flow chart.

5. Experiment and Result Analysis

Our test environment parameters were as follows: Intel(R) Core(TM)i5-9300H CPU @2.40 GHz; memory: 16.00 GB; operating system: Windows 10; MATLAB R2019a. We obtained the following fixed parameters through repeated experiments and adjustments: ; ; ; . The image block size used for the dark channel search was 35 × 35. The sparse base used the Daubechies 8 wavelet base.

To make the experimental results more convincing, in addition to comparing our method with classical blind SR methods, we also designed separate comparative experiments for the blur kernel estimation and non-blind SR reconstruction components. In the blind SR comparison, we compared our method with the methods proposed by Keys [31], Shao [20], Michaeli [23], and Kim [22]. Because no original, clear HR image exists for the real infrared images of power equipment, we used two no-reference objective indicators: average gradient (AG) and information entropy (IE). AG is calculated as follows:

$$AG = \frac{1}{(M-1)(N-1)} \sum_{i=1}^{M-1} \sum_{j=1}^{N-1} \sqrt{\frac{G_h^2(i,j) + G_v^2(i,j)}{2}}$$

where $M$ and $N$ are the numbers of image rows and columns, and $G_h$ and $G_v$ are the results of convolving the image with the difference operator in the row and column directions, respectively. The larger the AG value, the more sharply the grayscale varies across the image and the richer its gradation, that is, the clearer the image.
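A direct implementation of the AG formula is shown below as a minimal sketch, assuming forward differences for the row- and column-direction difference operators and a single-channel (grayscale) image; the function name is hypothetical.

```python
import numpy as np


def average_gradient(img: np.ndarray) -> float:
    """Mean of sqrt((Gh^2 + Gv^2) / 2) over the (M-1) x (N-1) interior grid."""
    img = np.asarray(img, dtype=float)
    gh = img[:-1, 1:] - img[:-1, :-1]   # row-direction forward difference
    gv = img[1:, :-1] - img[:-1, :-1]   # column-direction forward difference
    return float(np.mean(np.sqrt((gh ** 2 + gv ** 2) / 2.0)))


ramp = np.tile(np.arange(8, dtype=float), (8, 1))   # grey level rises by 1 per column
print(average_gradient(ramp))   # sqrt(0.5), about 0.7071
```

Because AG grows with any intensity variation, it also rewards ringing and noise, which should be kept in mind when comparing methods that produce artifacts.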

In physics, entropy characterizes how uniformly a system is distributed: the more uniform the distribution, the greater the entropy. Image information entropy is derived from this concept and is defined as:

$$IE = -\sum_{i=0}^{L-1} p_i \log_2 p_i$$

where $p_i$ is the frequency of pixels with gray value $i$ in the image and $L$ is the number of gray levels (256 for an 8-bit image). The larger the IE value, the richer the information contained in the image.
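The IE definition can be computed from the gray-level histogram. This is a minimal sketch assuming an 8-bit grayscale image and the base-2 logarithm (entropy in bits); empty histogram bins are skipped because $0 \log 0$ is taken as 0. The function name is hypothetical.

```python
import numpy as np


def information_entropy(img_u8: np.ndarray) -> float:
    """Shannon entropy (bits) of the 256-level gray histogram."""
    hist = np.bincount(np.asarray(img_u8, dtype=np.uint8).ravel(), minlength=256)
    p = hist / hist.sum()
    p = p[p > 0]                      # 0 * log(0) is treated as 0
    return float(-np.sum(p * np.log2(p)))


half = np.zeros((4, 4), dtype=np.uint8)
half[:, 2:] = 255                     # two equally frequent gray levels
print(information_entropy(half))      # 1.0
```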

In addition, to demonstrate the effectiveness of our blur kernel estimation method, we compared the blur kernels estimated by our method with those of the algorithms proposed in [20,23,32], using the sum of squared differences error (SSDE) to evaluate the accuracy of the estimated blur kernel:

$$SSDE = \sum \bigl(\hat{k} - k\bigr)^2$$

where $\hat{k}$ is the estimated blur kernel, $k$ is the true blur kernel of the image, and the sum runs over all kernel elements.

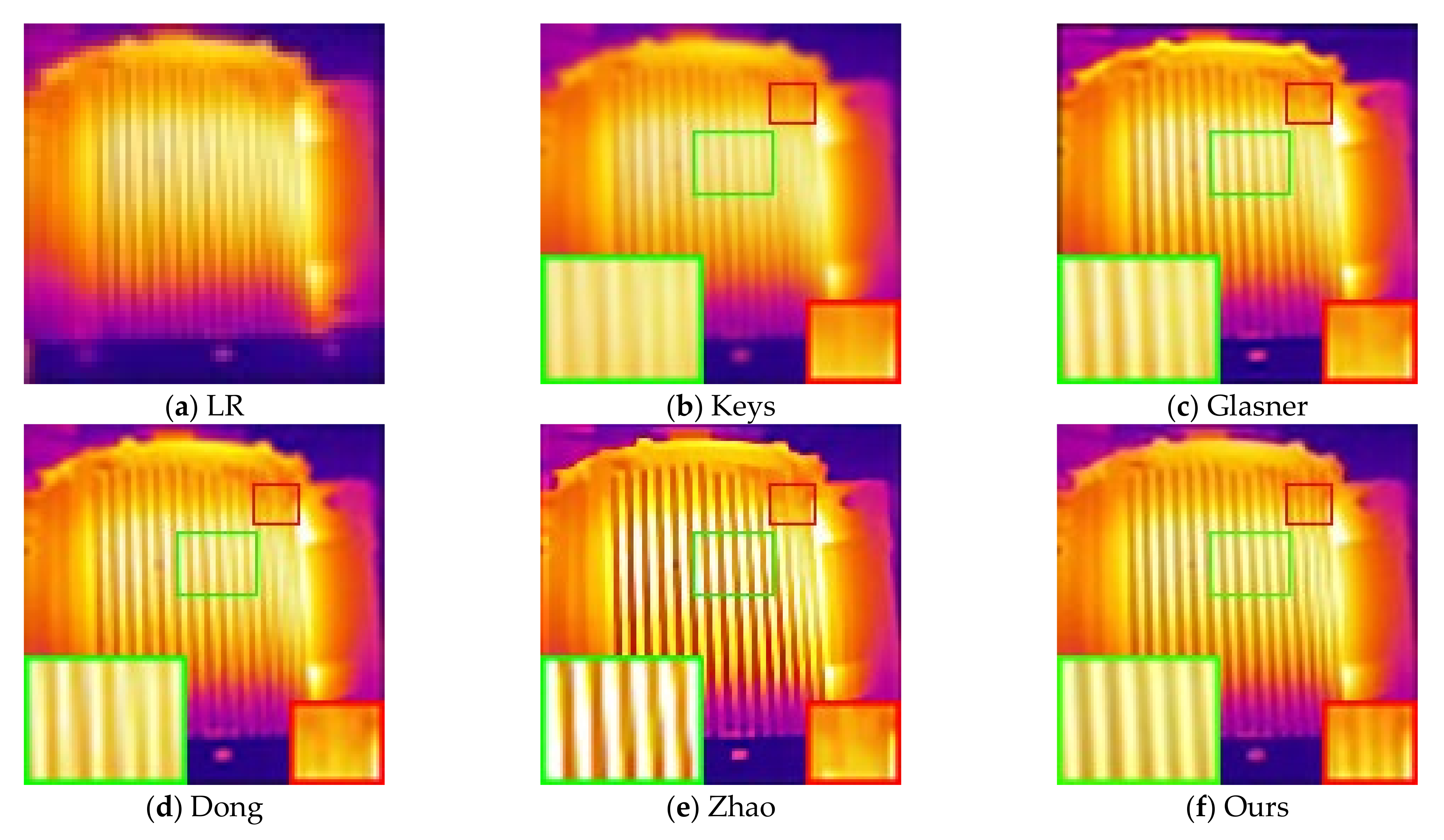

Finally, to verify the performance of our non-blind SR reconstruction method, we degraded clear HR infrared images with known blur kernels according to Equation (1). Taking these synthetic infrared images and the known blur kernels as input, we compared our method with existing non-blind SR methods, namely those proposed by Keys [31], Glasner [13], Dong [15], and Zhao [17]. Because the synthetic infrared images have original, clear HR references, the PSNR and SSIM indicators can be used to evaluate the reconstruction results.

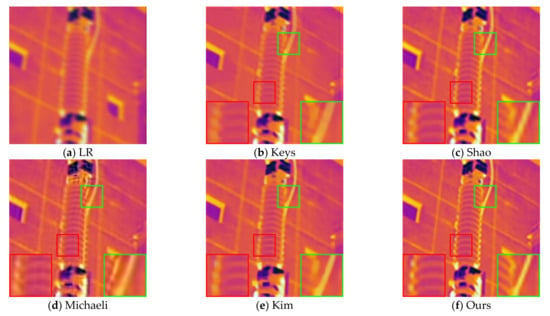

5.1. Blind SR Comparison Experiment

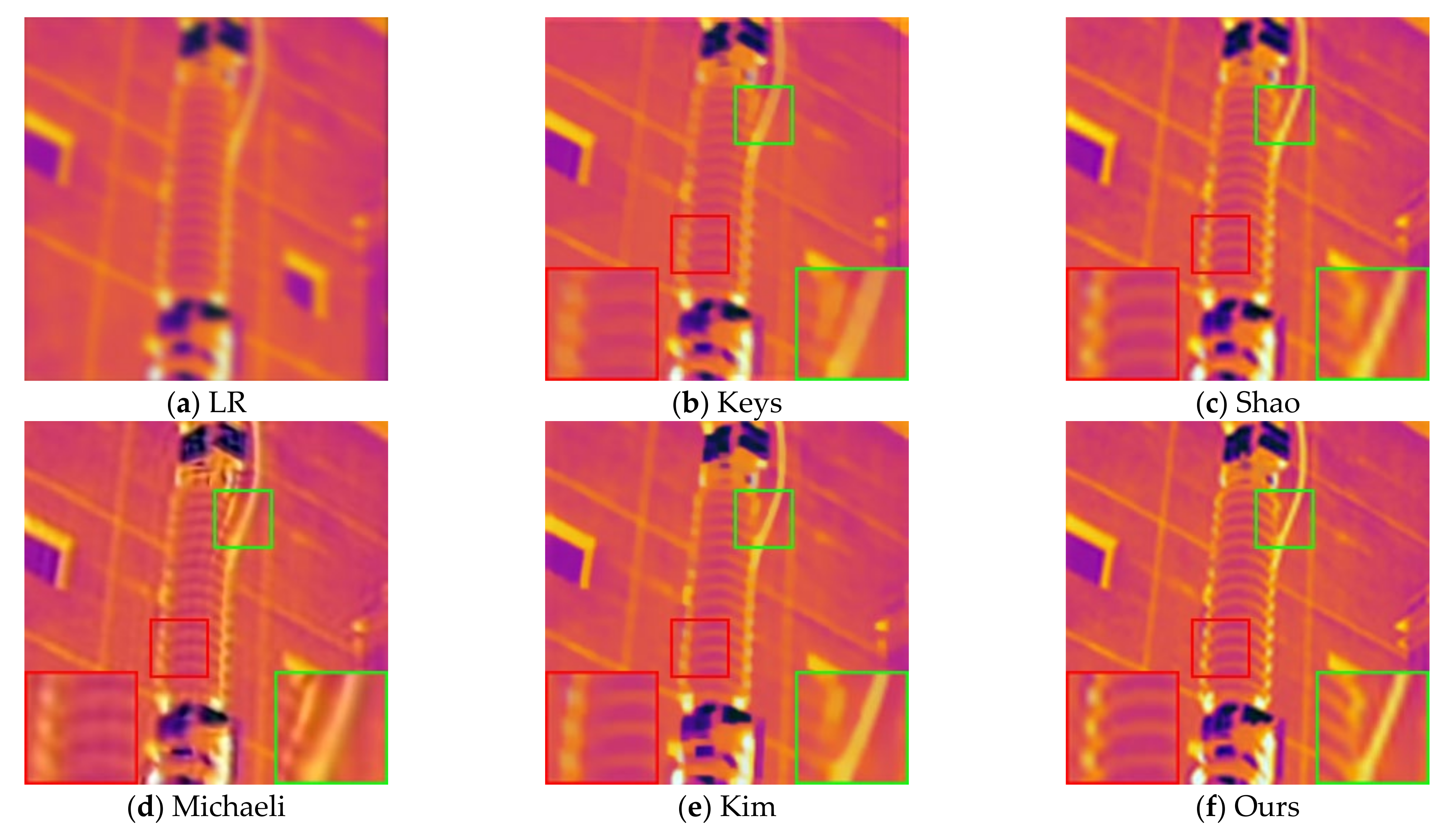

First, we reconstruct the LR infrared images of the power equipment that are actually collected to verify the effectiveness of the blind SR method in the practical application. For the experiment, we used eleven infrared images taken on site with a resolution of 128 × 128 for SR reconstruction. Figure 6 shows the images reconstructed using different methods for the 11th LR infrared image.

Figure 6.

Reconstruction results of different methods: (a) LR infrared image; (b) reconstruction results using the method in [31] (AG = 32.169; IE = 5.932); (c) reconstruction results using the method in [20] (AG = 35.921; IE = 5.991); (d) reconstruction results using the method in [23] (AG = 40.862; IE = 6.073); (e) reconstruction results using the method in [22] (AG = 38.910; IE = 6.040); (f) reconstruction results using our method (AG = 43.280; IE = 6.161).

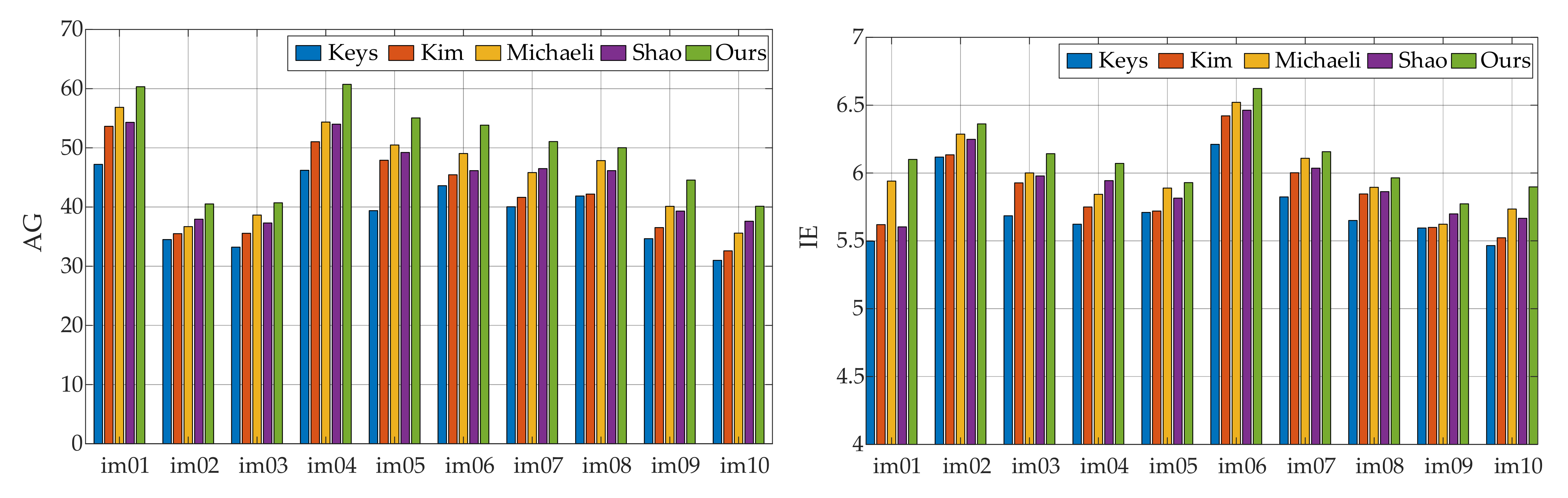

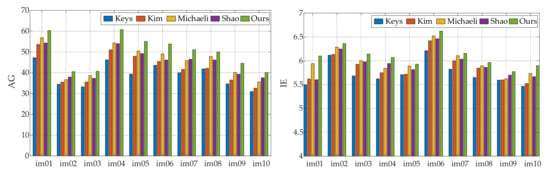

As shown in Figure 6, the method proposed by Keys does not consider the influence of the blur kernel when reconstructing the image and suffers from the inherent smoothing effect of interpolation algorithms. Its reconstruction result has the worst visual effect and shows no obvious difference from the LR image. Compared with the original LR image, the visual quality of the image reconstructed by Shao's method is significantly improved, but the detailed texture is still not clear enough. Obvious artifacts and ringing appear in the reconstruction results of Michaeli's method. This is a common problem caused by improper regularization intensity in the SR reconstruction process, and it is also the problem that this paper focuses on solving. Although the image reconstructed by Kim's method has higher contrast and brighter colors, the enlarged region in the green box shows that its edges are over-smoothed. Overall, the edge texture of the image reconstructed by our method is the clearest, with no artifacts or ringing, which shows that our method has performance advantages over the comparison methods.

The AG and IE values of the remaining 10 images reconstructed using different methods are given in Figure 7. Kim's and Shao's methods perform similarly. Michaeli's method yields markedly higher index values than those two methods, which is due to improper control of the regularization intensity, the same cause as the artifacts and ringing noted above. Overall, the infrared images reconstructed by our method show clear advantages over the comparison methods in these evaluation indices.

Figure 7.

AG and IE parameter values of reconstruction results of different methods.

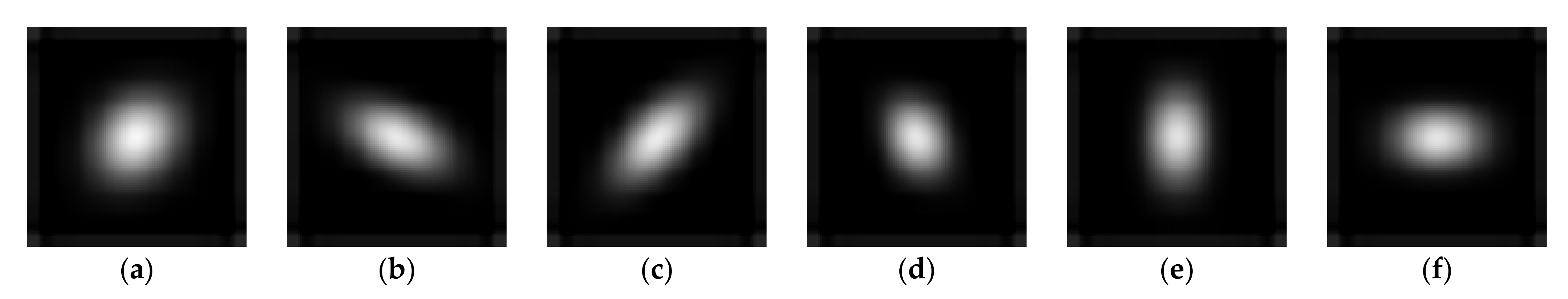

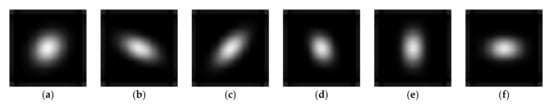

5.2. Experiment of Blur Kernel Estimation

To demonstrate the effectiveness of the blur kernel estimation method in this paper, we used each of the six blur kernels shown in Figure 8 to blur 100 infrared images and then downsampled the blurred images by a factor of two. We then used our method and the comparison methods to estimate the blur kernel from each LR blurred image. Table 1 lists, for each blur kernel, the mean SSDE of the kernels estimated by each method over the 100 synthetic blurred infrared images. The data in Table 1 show that our blur kernel estimation method is more accurate than the comparison methods.

Figure 8.

Six different blur kernels: (a) BK1; (b) BK2; (c) BK3; (d) BK4; (e) BK5; (f) BK6.

Table 1.

The mean SSDE of each method for each BK on all 100 synthetic blurred images.

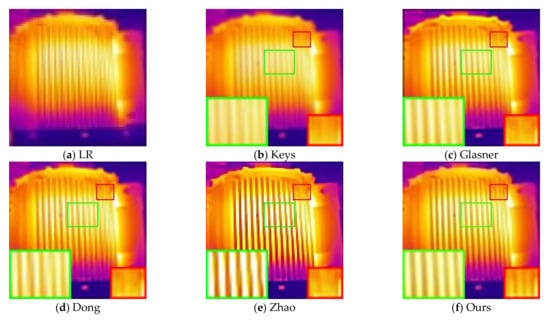

5.3. Non-Blind SR Comparison Experiment

In this section, we used the six blur kernels from Section 5.2 to degrade 10 clear HR infrared images according to Equation (1), obtaining 60 synthetic LR images. Figure 9 shows the LR infrared image synthesized from the 10th HR image with BK6, together with the reconstruction results of different non-blind SR methods.

Figure 9.

Reconstruction results of different methods when using BK6: (a) HR infrared image; (b) reconstruction results using the method in [31] (PSNR = 28.338 dB; SSIM = 0.911); (c) reconstruction results using the method in [13] (PSNR = 30.542 dB; SSIM = 0.927); (d) reconstruction results using the method in [15] (PSNR = 31.213 dB; SSIM = 0.952); (e) reconstruction results using the method in [17] (PSNR = 29.372 dB; SSIM = 0.940); (f) reconstruction results using our method (PSNR = 32.503 dB; SSIM = 0.963).

As Figure 9 shows, the method proposed by Keys has the worst visual effect owing to the inherent smoothing effect of interpolation algorithms, and the transformer texture is almost invisible. The visual quality of Glasner's and Dong's methods is similar, but their textures are not as clear as those of our method, especially in small local details. The reconstruction result of Zhao's method is somewhat distorted: the image is over-sharpened, resulting in excessive brightness. This is very harmful in infrared diagnosis, since it can easily cause operation and maintenance personnel to misjudge the operating temperature of the equipment. Additionally, the region marked by the red box shows that its ability to reconstruct small textures clearly falls short of our method. Because of the large number of images used in the experiment, the reconstruction results of the remaining images are reported as evaluation parameters: the PSNR and SSIM values of the images reconstructed by the different algorithms are shown in Tables 2 and 3, where, for each HR image, the objective evaluation parameters are averaged over the results obtained with the different blur kernels. Tables 2 and 3 show that our method performs significantly better than the comparison methods.

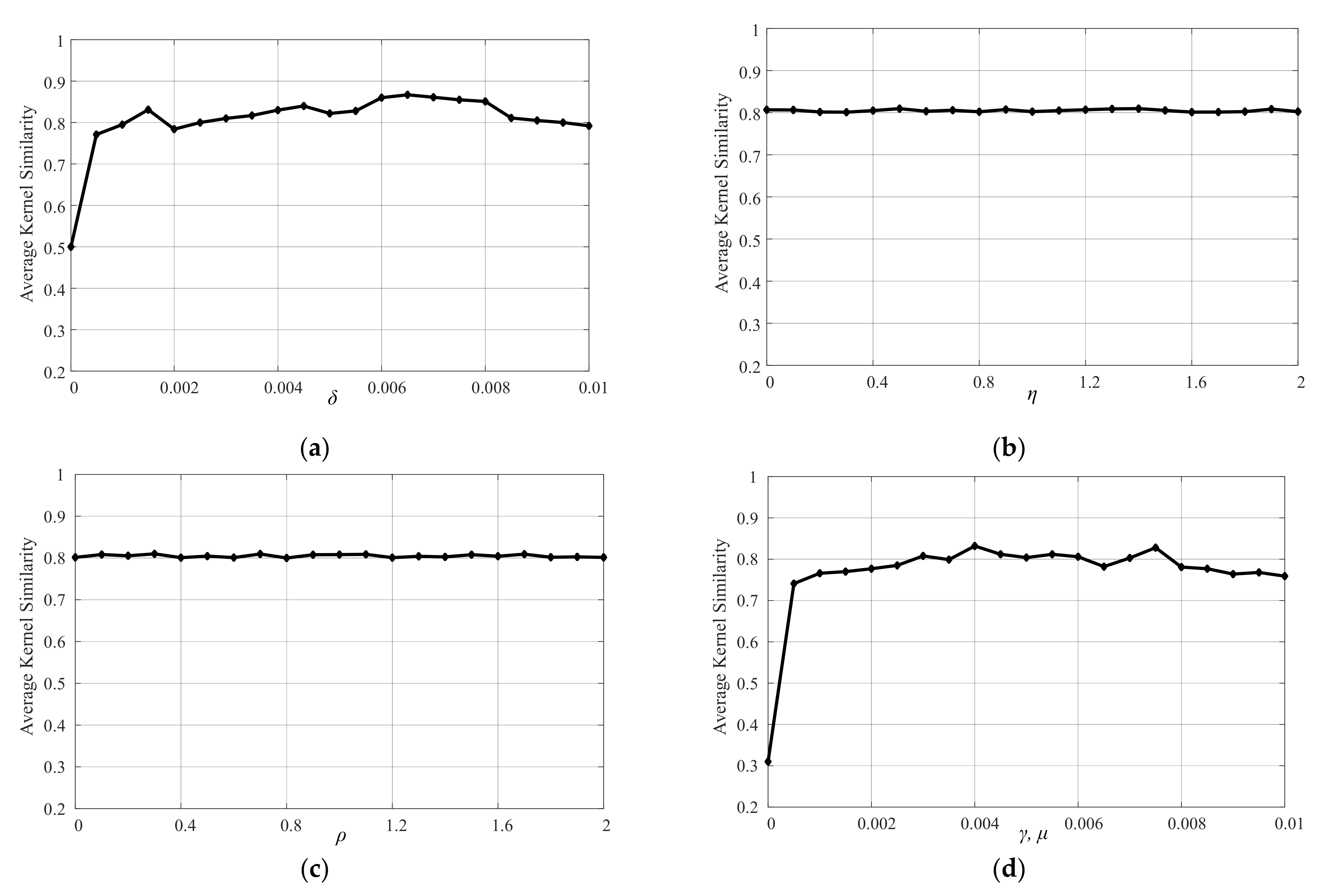

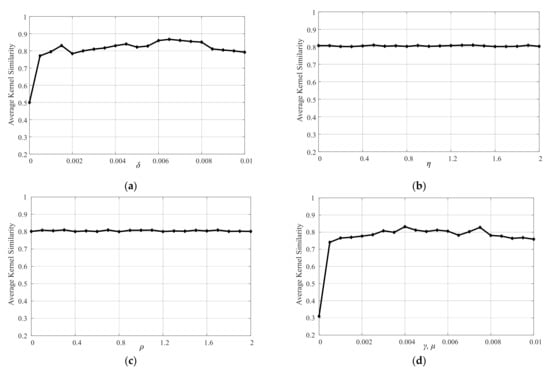

Figure 10.

Sensitivity analysis of and for the blur kernel estimation model: (a) parameter; (b) parameter; (c) parameter; (d) and parameters.

Table 2.

PSNR parameter values of reconstruction results of different methods.

Table 3.

SSIM parameter values of reconstruction results of different methods.

5.4. Sensitivity Analysis

The blur kernel estimation model in this paper involves many parameters; in this section, we analyze the influence of their values. The model involves five main parameters and . To analyze the influence of these parameters on blur kernel estimation, we used 10 blurred images as test data. For each parameter, we carried out experiments by varying that parameter while fixing the others, using the kernel similarity metric to measure the accuracy of the estimated kernels. For parameter , we set its values from to 0.01 with a step size of . Figure 10a demonstrates that blur kernels can be well estimated over a wide range of , i.e., within . Similarly, we set the values of and from 0 to 2 with a step size of 0.1, and the values of and from 0 to 0.01 with a step size of . The experimental results for the and parameters are shown in Figure 10b,c. Since in the actual calculation process, the results are displayed as a single curve, as shown in Figure 10d. The experimental results show that the proposed blur kernel estimation algorithm performs well over a wide range of parameter settings. In addition, Figure 10d shows that when , the blur kernel estimation effect is extremely poor, which also demonstrates the necessity of introducing the Extreme Channels Prior into the blur kernel estimation model in this paper.
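The one-at-a-time sensitivity protocol can be sketched as follows. The section does not define its kernel similarity metric, so the sketch assumes the widely used definition: the maximum normalized cross-correlation between two kernels over all relative shifts (1.0 means identical up to a shift). The `evaluate` callback is hypothetical; it stands in for running the paper's kernel estimator on the test images with a given parameter setting and returning a mean similarity score. All function and parameter names are illustrative.

```python
import numpy as np
from scipy.signal import correlate2d

def kernel_similarity(k_est, k_true):
    """Assumed metric: max normalized cross-correlation over all shifts."""
    a = np.asarray(k_est, dtype=np.float64)
    b = np.asarray(k_true, dtype=np.float64)
    xcorr = correlate2d(a, b, mode="full")  # inner product at every relative shift
    return float(xcorr.max() / (np.linalg.norm(a) * np.linalg.norm(b)))

def one_at_a_time_sweep(evaluate, defaults, grids):
    """Vary one parameter over its grid while holding the others at defaults.

    evaluate(params) -> float is a hypothetical callback returning a score.
    Returns {name: (grid values, scores)}, one curve per parameter.
    """
    curves = {}
    for name, values in grids.items():
        scores = []
        for v in values:
            params = dict(defaults)  # copy so other parameters stay fixed
            params[name] = v
            scores.append(evaluate(params))
        curves[name] = (list(values), scores)
    return curves
```

Each returned curve corresponds to one panel of a plot like Figure 10: a flat, high curve over a wide range of values indicates that the estimator is insensitive to that parameter.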

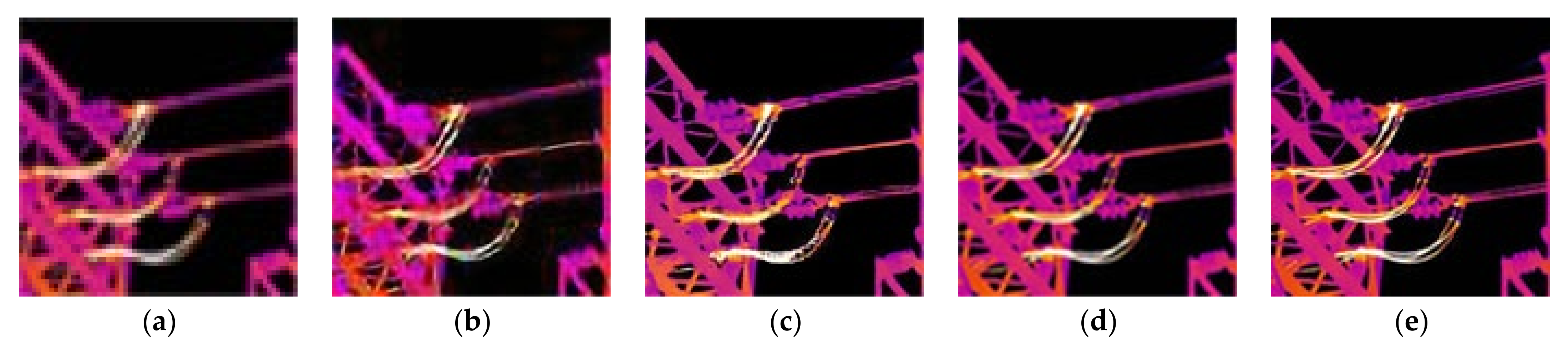

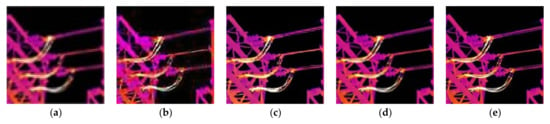

5.5. Comparison with the Deep Learning Method

To demonstrate the superiority of our algorithm in practical applications, this section compares our SR reconstruction method with an advanced deep learning method [33]. At present, deep learning-based SR algorithms require a large number of high-definition images as training samples, and their performance decreases significantly when training resources are insufficient. In contrast, the algorithm in this paper achieves high-quality image reconstruction without any training samples. Figure 11 compares our results with those of the deep learning method trained on 500 and 2000 infrared images, respectively. As Figure 11 shows, the deep learning algorithm produces obvious grid artifacts and color distortion when the training data are insufficient. When the training data are sufficient, the contrast of some edge detail textures is slightly higher than that of our method; however, the result of the learning method contains a certain amount of false texture, which is harmful to infrared diagnosis. Because our method requires no training samples and produces more accurate reconstructions, it has greater practical application value in the electric power field.

Figure 11.

Comparison between the deep learning method and our method: (a) composite LR image; (b) inadequate training; (c) adequate training; (d) our method; (e) original image.

6. Conclusions

To improve the quality of SR-reconstructed images and thereby facilitate the status monitoring and fault analysis of power equipment, we propose a blind SR method based on compressed sensing. Our method is divided into two parts: blur kernel estimation and non-blind SR reconstruction. For blur kernel estimation, we improved the basic compressed sensing SR model to obtain a basic blur kernel estimation model and, to improve the estimation accuracy of the blur kernel, introduced the Extreme Channels Prior based on the color characteristics of infrared images. For non-blind SR reconstruction, we propose an adaptive algorithm that adaptively controls the intensity coefficient of the regularization term during reconstruction to suppress artifacts and ringing and improve the quality of the reconstructed image. Together, the blur kernel estimation method and the non-blind SR method form our blind SR method. In the experiments, we compared each of the two parts with the corresponding existing classical methods to demonstrate the superior performance of our method. The experimental results show that our method estimates the blur kernel more accurately and thereby completes the non-blind SR reconstruction of LR infrared images with higher quality. The HR infrared images reconstructed by our method have more detailed textures and better visual effects, which can provide better conditions for infrared diagnosis in power systems. As the power industry actively builds the IoT, our method provides a feasible way to reduce its hardware cost. We believe that a mode in which low-cost front-end sensors collect information and back-end algorithms recover high-quality images has broad application prospects.

This mode can effectively reduce the construction cost of the IoT as well as the cost of data transmission and storage. Because the construction of the power IoT is still in its infancy, we did not choose a data-driven method for infrared image SR reconstruction. When data acquisition and storage systems become more standardized and mature, it will also be interesting to train dictionaries for different types of power equipment and update them online to achieve sparse representations of different images, which may yield better reconstruction while preserving the accuracy of image information.

Author Contributions

The conceptualization, data curation, formal analysis, and methodology were performed by H.Z. The software, supervision, formal analysis, validation, and writing—original draft preparation, review, and editing were performed by L.W. and B.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jalil, B.; Leone, G.R.; Martinelli, M.; Moroni, D.; Pascali, M.A.; Berton, A. Fault Detection in Power Equipment via an Unmanned Aerial System Using Multi Modal Data. Sensors 2019, 19, 3014.

- Zhao, H.; Zhang, Z. Improving Neural Network Detection Accuracy of Electric Power Bushings in Infrared Images by Hough Transform. Sensors 2020, 20, 2931.

- Pan, Z.; Yu, J.; Huang, H.; Hu, S.; Zhang, A.; Ma, H.; Sun, W. Super-resolution based on compressive sensing and structural self-similarity for remote sensing images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4864–4876.

- Dong, W.; Zhang, L.; Lukac, R.; Shi, G. Sparse representation-based image interpolation with nonlocal autoregressive modeling. IEEE Trans. Image Process. 2013, 22, 1382–1394.

- Wang, L.; Wu, H.; Pan, C. Fast image upsampling via the displacement field. IEEE Trans. Image Process. 2014, 23, 5123–5135.

- Zhang, Y.; Fan, Q.; Bao, F.; Liu, Y.; Zhang, C. Single-image super-resolution based on rational fractal interpolation. IEEE Trans. Image Process. 2018, 27, 3782–3797.

- Yang, J.; Wang, Z.; Lin, Z.; Cohen, S.; Huang, T. Coupled dictionary training for image super-resolution. IEEE Trans. Image Process. 2012, 21, 3467–3478.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the 13th European Conference on Computer Vision (ECCV 2014), Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

- Yang, W.; Feng, J.; Yang, J.; Zhao, F.; Liu, J.; Guo, Z.; Yan, S. Deep edge guided recurrent residual learning for image super-resolution. IEEE Trans. Image Process. 2017, 26, 5895–5907.

- Schulter, S.; Leistner, C.; Bischof, H. Fast and accurate image upscaling with super-resolution forests. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2015), Boston, MA, USA, 7–12 June 2015; pp. 3791–3799.

- Li, L.; Xie, Y.; Hu, W.; Zhang, W. Single image super-resolution using combined total variation regularization by split Bregman iteration. Neurocomputing 2014, 142, 551–560.

- Rasti, P.; Demirel, H.; Anbarjafari, G. Improved iterative back projection for video super-resolution. In Proceedings of the 22nd Signal Processing and Communications Applications Conference (SIU 2014), Trabzon, Turkey, 23–25 April 2014.

- Glasner, D.; Bagon, S.; Irani, M. Super-resolution from a single image. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2009), Kyoto, Japan, 29 September–2 October 2009; pp. 349–356.

- Šroubek, F.; Kamenický, J.; Milanfar, P. Superfast superresolution. In Proceedings of the IEEE International Conference on Image Processing (ICIP 2011), Brussels, Belgium, 11–14 September 2011; pp. 1153–1156.

- Dong, W.; Zhang, L.; Shi, G.; Li, X. Nonlocally centralized sparse representation for image restoration. IEEE Trans. Image Process. 2013, 22, 1620–1630.

- Yanovsky, I.; Lambrigtsen, B.H.; Tanner, A.B.; Vese, L.A. Efficient deconvolution and super-resolution methods in microwave imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4273–4283.

- Zhao, N.; Wei, Q.; Basarab, A.; Dobigeon, N.; Kouamé, D.; Tourneret, J.Y. Fast Single Image Super-Resolution Using a New Analytical Solution for ℓ2–ℓ2 Problems. IEEE Trans. Image Process. 2016, 25, 3683–3697.

- Efrat, N.; Glasner, D.; Apartsin, A.; Nadler, B.; Levin, A. Accurate blur models vs. image priors in single image super-resolution. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV 2013), Sydney, NSW, Australia, 1–8 December 2013; pp. 2832–2839.

- Riegler, G.; Schulter, S.; Rüther, M.; Bischof, H. Conditioned regression models for non-blind single image super-resolution. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2015), Santiago, Chile, 7–13 December 2015; pp. 522–530.

- Shao, W.Z.; Ge, Q.; Wang, L.Q.; Lin, Y.Z.; Deng, H.S.; Li, H.B. Nonparametric Blind Super-Resolution Using Adaptive Heavy-Tailed Priors. J. Math. Imaging Vis. 2019, 61, 885–917.

- Qian, Q.; Gunturk, B.K. Blind super-resolution restoration with frame-by-frame nonparametric blur estimation. Multidimens. Syst. Signal Process. 2016, 27, 255–273.

- Kim, W.H.; Lee, J.S. Blind single image super resolution with low computational complexity. Multimed. Tools Appl. 2017, 76, 7235–7249.

- Michaeli, T.; Irani, M. Nonparametric Blind Super-resolution. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV 2013), Sydney, NSW, Australia, 1–8 December 2013; pp. 945–952.

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Yan, Y.; Ren, W.; Guo, Y.; Wang, R.; Cao, X. Image deblurring via extreme channels prior. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4003–4011.

- Pan, J.; Sun, D.; Pfister, H.; Yang, M.H. Blind image deblurring using dark channel prior. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1628–1636.

- Li, C. An Efficient Algorithm for Total Variation Regularization with Applications to the Single Pixel Camera and Compressive Sensing. Master’s Thesis, Rice University, Houston, TX, USA, 2010.

- Tang, S.; Zheng, W.; Xie, X.; He, T.; Yang, P.; Luo, L.; Zhao, H. Multi-regularization-constrained blur kernel estimation method for blind motion deblurring. IEEE Access 2019, 7, 5296–5311.

- Cho, S.; Lee, S. Fast Motion Deblurring. ACM Trans. Graph. 2009, 28, 1–8.

- Krishnan, D.; Fergus, R. Fast image deconvolution using hyper-Laplacian priors. Adv. Neural Inf. Process. Syst. 2009, 22, 1033–1041.

- Keys, R.G. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

- Liang, J.; Zhang, K.; Gu, S.; Van Gool, L.; Timofte, R. Flow-based Kernel Prior with Application to Blind Super-Resolution. arXiv 2021, arXiv:2103.15977.

- Zhang, K.; Van Gool, L.; Timofte, R. Deep unfolding network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), Seattle, WA, USA, 13–19 June 2020; pp. 3217–3226.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).