Identification of Autism Subtypes Based on Wavelet Coherence of BOLD FMRI Signals Using Convolutional Neural Network

Abstract

1. Introduction

Classification of ASD Using Functional Connectivity (FC)—Related Works

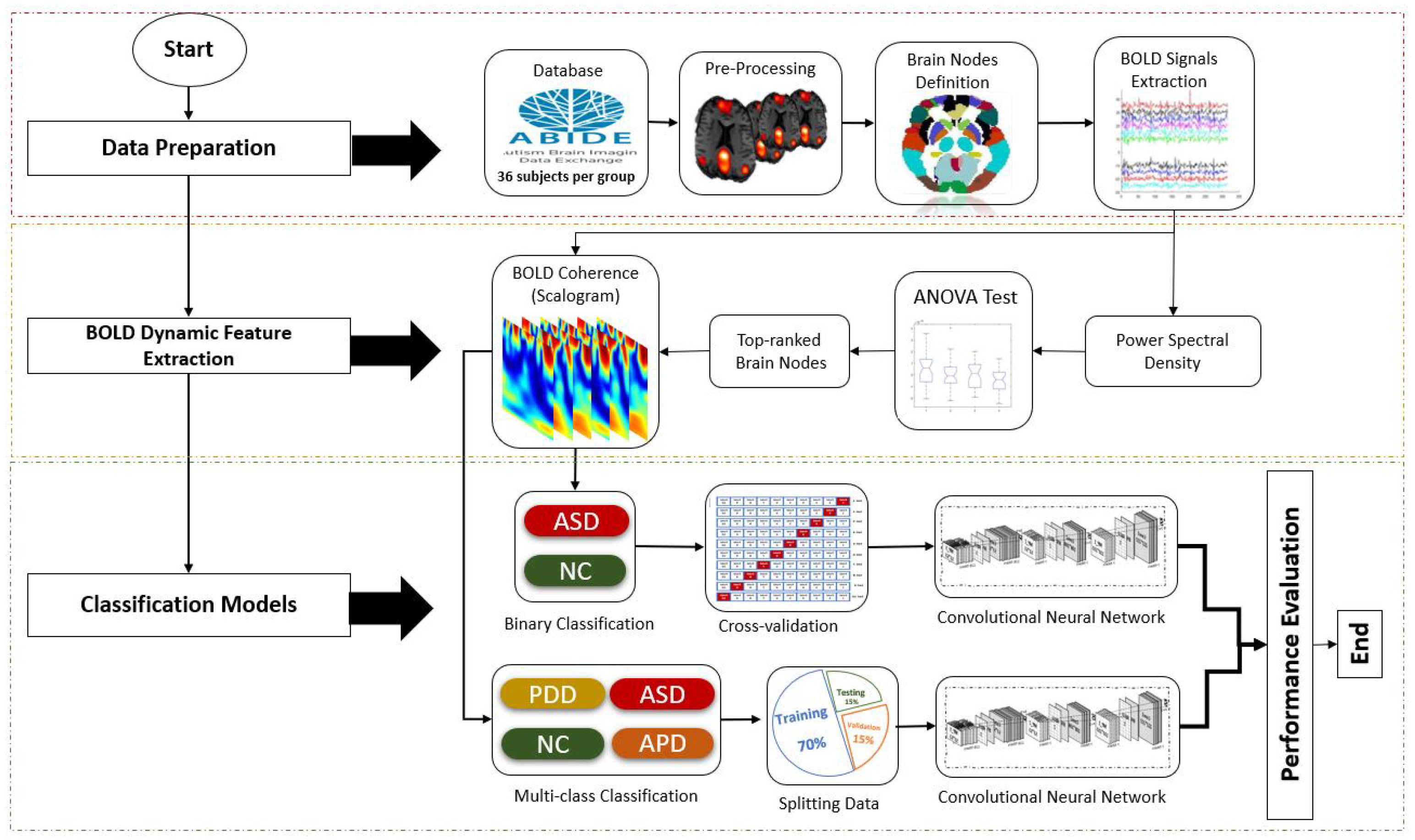

2. Materials and Methods

2.1. Data Preparation

2.2. Statistical Analysis Using Power Spectral Density (PSD)

Algorithm 1: Method of finding the top-ranked node in discriminating 3-level ASD subtypes and NC using mean value of PSD.
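The caption of Algorithm 1 names only the ranking criterion, the mean PSD of each node. One way such a ranking could be computed is sketched here under stated assumptions: a plain periodogram stands in for the PSD estimator, and a one-way ANOVA F-statistic across the four groups serves as the ranking score. Neither choice is confirmed by the text available here, and the function names `mean_psd`, `f_statistic`, and `rank_nodes` are hypothetical.

```python
import cmath
import math
import random
from statistics import mean


def mean_psd(signal, fs):
    """Mean periodogram power of one time series, DC bin excluded (assumed estimator)."""
    n = len(signal)
    offset = mean(signal)
    centered = [x - offset for x in signal]
    powers = []
    for k in range(1, n // 2 + 1):
        # Discrete Fourier coefficient at frequency bin k.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        powers.append(abs(coeff) ** 2 / (fs * n))
    return mean(powers)


def f_statistic(groups):
    """One-way ANOVA F-statistic for a list of groups of scalar values (assumed test)."""
    values = [v for g in groups for v in g]
    grand = mean(values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


def rank_nodes(data, fs):
    """Rank nodes by the F-statistic of their mean PSD, most discriminative first.

    data maps group label -> list of subjects; each subject maps node -> time series.
    """
    nodes = list(next(iter(data.values()))[0].keys())
    scores = {}
    for node in nodes:
        groups = [[mean_psd(subject[node], fs) for subject in subjects]
                  for subjects in data.values()]
        scores[node] = f_statistic(groups)
    return sorted(scores, key=scores.get, reverse=True)


# Synthetic demonstration: node "B" changes power across groups, node "A" does not.
rng = random.Random(0)
demo = {
    label: [{"A": [rng.gauss(0, 1) for _ in range(32)],
             "B": [rng.gauss(0, scale) for _ in range(32)]}
            for _ in range(8)]
    for label, scale in [("ASD", 1), ("APD", 2), ("PDD-NOS", 3), ("NC", 4)]
}
ranking = rank_nodes(demo, fs=0.5)  # fs = 1 / TR, assuming TR = 2 s
```

With equal group sizes, ordering nodes by descending F-statistic is equivalent to ordering them by ascending p-value, which is the form in which the per-region results are tabulated in Appendix A.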

2.3. Wavelet Coherence of BOLD Time-Series Signals

2.4. Convolutional Neural Network (CNN)

2.5. Performance Evaluation Metric

3. Results and Discussion

3.1. Selection of Top-Ranked Brain Node for Classification of ASD Subtypes via Statistical Analysis

3.2. Binary Classification Using Wavelet Coherence of Top Three Significant Nodes

3.3. Binary Classification Using Wavelet Coherence of Putamen_R Node

3.4. Multi-Class Classification

4. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| No. | Region Label | p-Value | No. | Region Label | p-Value | No. | Region Label | p-Value | No. | Region Label | p-Value |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Precentral_L | 0.191 | 31 | Cingulum_Ant_L | 0.485 | 61 | Parietal_Inf_L | 0.971 | 91 | Cerebelum_Crus1_L | 0.570 |
| 2 | Precentral_R | 0.115 | 32 | Cingulum_Ant_R | 0.911 | 62 | Parietal_Inf_R | 0.862 | 92 | Cerebelum_Crus1_R | 0.862 |
| 3 | Frontal_Sup_L | 0.061 | 33 | Cingulum_Mid_L | 0.653 | 63 | SupraMarginal_L | 0.912 | 93 | Cerebelum_Crus2_L | 0.352 |
| 4 | Frontal_Sup_R | 0.138 | 34 | Cingulum_Mid_R | 0.820 | 64 | SupraMarginal_R | 0.162 | 94 | Cerebelum_Crus2_R | 0.662 |
| 5 | Frontal_Sup_Orb_L | 0.052 | 35 | Cingulum_Post_L | 0.998 | 65 | Angular_L | 0.452 | 95 | Cerebelum_3_L | 0.010 |
| 6 | Frontal_Sup_Orb_R | 0.294 | 36 | Cingulum_Post_R | 0.146 | 66 | Angular_R | 0.414 | 96 | Cerebelum_3_R | 0.539 |
| 7 | Frontal_Mid_L | 0.365 | 37 | Hippocampus_L | 0.847 | 67 | Precuneus_L | 0.890 | 97 | Cerebelum_4_5_L | 0.653 |
| 8 | Frontal_Mid_R | 0.333 | 38 | Hippocampus_R | 0.389 | 68 | Precuneus_R | 0.396 | 98 | Cerebelum_4_5_R | 0.412 |
| 9 | Frontal_Mid_Orb_L | 0.733 | 39 | ParaHippocampal_L | 0.052 | 69 | Paracentral_Lobule_L | 0.771 | 99 | Cerebelum_6_L | 0.425 |
| 10 | Frontal_Mid_Orb_R | 0.779 | 40 | ParaHippocampal_R | 0.455 | 70 | Paracentral_Lobule_R | 0.910 | 100 | Cerebelum_6_R | 0.868 |
| 11 | Frontal_Inf_Oper_L | 0.800 | 41 | Amygdala_L | 0.176 | 71 | Caudate_L | 0.012 | 101 | Cerebelum_7b_L | 0.044 |
| 12 | Frontal_Inf_Oper_R | 0.470 | 42 | Amygdala_R | 0.386 | 72 | Caudate_R | 0.279 | 102 | Cerebelum_7b_R | 0.423 |
| 13 | Frontal_Inf_Tri_L | 0.300 | 43 | Calcarine_L | 0.490 | 73 | Putamen_L | 0.143 | 103 | Cerebelum_8_L | 0.951 |
| 14 | Frontal_Inf_Tri_R | 0.417 | 44 | Calcarine_R | 0.714 | 74 | Putamen_R | 0.008 | 104 | Cerebelum_8_R | 0.900 |
| 15 | Frontal_Inf_Orb_L | 0.283 | 45 | Cuneus_L | 0.732 | 75 | Pallidum_L | 0.646 | 105 | Cerebelum_9_L | 0.836 |
| 16 | Frontal_Inf_Orb_R | 0.973 | 46 | Cuneus_R | 0.750 | 76 | Pallidum_R | 0.561 | 106 | Cerebelum_9_R | 0.096 |
| 17 | Rolandic_Oper_L | 0.075 | 47 | Lingual_L | 0.685 | 77 | Thalamus_L | 0.990 | 107 | Cerebelum_10_L | 0.903 |
| 18 | Rolandic_Oper_R | 0.131 | 48 | Lingual_R | 0.256 | 78 | Thalamus_R | 0.594 | 108 | Cerebelum_10_R | 0.836 |
| 19 | Supp_Motor_Area_L | 0.698 | 49 | Occipital_Sup_L | 0.615 | 79 | Heschl_L | 0.095 | 109 | Vermis_1_2 | 0.649 |
| 20 | Supp_Motor_Area_R | 0.473 | 50 | Occipital_Sup_R | 0.608 | 80 | Heschl_R | 0.160 | 110 | Vermis_3 | 0.329 |
| 21 | Olfactory_L | 0.982 | 51 | Occipital_Mid_L | 0.514 | 81 | Temporal_Sup_L | 0.045 | 111 | Vermis_4_5 | 0.762 |
| 22 | Olfactory_R | 0.913 | 52 | Occipital_Mid_R | 0.090 | 82 | Temporal_Sup_R | 0.830 | 112 | Vermis_6 | 0.772 |
| 23 | Frontal_Sup_Medial_L | 0.340 | 53 | Occipital_Inf_L | 0.487 | 83 | Temporal_Pole_Sup_L | 0.070 | 113 | Vermis_7 | 0.738 |
| 24 | Frontal_Sup_Medial_R | 0.183 | 54 | Occipital_Inf_R | 0.282 | 84 | Temporal_Pole_Sup_R | 0.917 | 114 | Vermis_8 | 0.867 |
| 25 | Frontal_Med_Orb_L | 0.928 | 55 | Fusiform_L | 0.749 | 85 | Temporal_Mid_L | 0.900 | 115 | Vermis_9 | 0.592 |
| 26 | Frontal_Med_Orb_R | 0.769 | 56 | Fusiform_R | 0.938 | 86 | Temporal_Mid_R | 0.113 | 116 | Vermis_10 | 0.272 |
| 27 | Rectus_L | 0.096 | 57 | Postcentral_L | 0.878 | 87 | Temporal_Pole_Mid_L | 0.364 | | | |
| 28 | Rectus_R | 0.871 | 58 | Postcentral_R | 0.108 | 88 | Temporal_Pole_Mid_R | 0.860 | | | |
| 29 | Insula_L | 0.075 | 59 | Parietal_Sup_L | 0.984 | 89 | Temporal_Inf_L | 0.566 | | | |
| 30 | Insula_R | 0.744 | 60 | Parietal_Sup_R | 0.144 | 90 | Temporal_Inf_R | 0.343 | | | |
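As a small worked example, the regions with the lowest p-values can be read off the Appendix A table programmatically. The sketch below copies only the table entries with p < 0.1; the 0.05 significance threshold and the helper name `top_ranked` are assumptions made for illustration and are not stated in the table itself.

```python
# Subset of p-values copied from the Appendix A table (regions with p < 0.1).
P_VALUES = {
    "Frontal_Sup_L": 0.061,
    "Frontal_Sup_Orb_L": 0.052,
    "Rolandic_Oper_L": 0.075,
    "Rectus_L": 0.096,
    "Insula_L": 0.075,
    "ParaHippocampal_L": 0.052,
    "Occipital_Mid_R": 0.090,
    "Caudate_L": 0.012,
    "Putamen_R": 0.008,
    "Heschl_L": 0.095,
    "Temporal_Sup_L": 0.045,
    "Temporal_Pole_Sup_L": 0.070,
    "Cerebelum_3_L": 0.010,
    "Cerebelum_7b_L": 0.044,
    "Cerebelum_9_R": 0.096,
}


def top_ranked(p_values, k=3, alpha=0.05):
    """Return up to k (region, p) pairs with p < alpha, smallest p first."""
    significant = [(region, p) for region, p in p_values.items() if p < alpha]
    return sorted(significant, key=lambda item: item[1])[:k]


top_three = top_ranked(P_VALUES)
all_significant = top_ranked(P_VALUES, k=len(P_VALUES))
```

With the values listed, five regions fall below the assumed 0.05 threshold, and the three smallest p-values belong to Putamen_R, Cerebelum_3_L, and Caudate_L, in that order.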

References

1. Pagnozzi, A.M.; Conti, E.; Calderoni, S.; Fripp, J.; Rose, S.E. A systematic review of structural MRI biomarkers in autism spectrum disorder: A machine learning perspective. Int. J. Dev. Neurosci. 2018, 71, 68–82.

2. Autism Spectrum Disorders. Available online: https://www.who.int/news-room/fact-sheets/detail/autism-spectrum-disorders (accessed on 7 February 2021).

3. Hansen, S.N.; Schendel, D.E.; Parner, E.T. Explaining the increase in the prevalence of autism spectrum disorders: The proportion attributable to changes in reporting practices. JAMA Pediatr. 2015, 169, 56–62.

4. Witwer, A.N.; Lecavalier, L. Examining the validity of autism spectrum disorder subtypes. J. Autism Dev. Disord. 2008, 38, 1611–1624.

5. Mazurek, M.O.; Lu, F.; Symecko, H.; Butter, E.; Bing, N.M.; Hundley, R.J.; Poulsen, M.; Kanne, S.M.; Macklin, E.A.; Handen, B.L. A prospective study of the concordance of DSM-IV and DSM-5 diagnostic criteria for autism spectrum disorder. J. Autism Dev. Disord. 2017, 47, 2783–2794.

6. Conti, E.; Mitra, J.; Calderoni, S.; Pannek, K.; Shen, K.; Pagnozzi, A.; Rose, S.; Mazzotti, S.; Scelfo, D.; Tosetti, M.; et al. Network over-connectivity differentiates autism spectrum disorder from other developmental disorders in toddlers: A diffusion MRI study. Hum. Brain Mapp. 2017, 38, 2333–2344.

7. Thabtah, F. Machine learning in autistic spectrum disorder behavioral research: A review and ways forward. Inform. Health Soc. Care 2019, 44, 278–297.

8. Yin, W.; Li, L.; Wu, F.X. Deep learning for brain disorder diagnosis based on fMRI images. Neurocomputing 2020.

9. Kassraian-Fard, P.; Matthis, C.; Balsters, J.H.; Maathuis, M.H.; Wenderoth, N. Promises, pitfalls, and basic guidelines for applying machine learning classifiers to psychiatric imaging data, with autism as an example. Front. Psychiatry 2016, 7, 177.

10. Sherkatghanad, Z.; Akhondzadeh, M.; Salari, S.; Zomorodi-Moghadam, M.; Abdar, M.; Acharya, U.R.; Khosrowabadi, R.; Salari, V. Automated detection of autism spectrum disorder using a convolutional neural network. Front. Neurosci. 2020, 13, 1325.

11. Iidaka, T. Resting state functional magnetic resonance imaging and neural network classified autism and control. Cortex 2015, 63, 55–67.

12. Heinsfeld, A.S.; Franco, A.R.; Craddock, R.C.; Buchweitz, A.; Meneguzzi, F. Identification of autism spectrum disorder using deep learning and the ABIDE dataset. NeuroImage Clin. 2018, 17, 16–23.

13. Hutchison, R.M.; Womelsdorf, T.; Allen, E.A.; Bandettini, P.A.; Calhoun, V.D.; Corbetta, M.; Della Penna, S.; Duyn, J.H.; Glover, G.H.; Gonzalez-Castillo, J.; et al. Dynamic functional connectivity: Promise, issues, and interpretations. Neuroimage 2013, 80, 360–378.

14. Menon, S.S.; Krishnamurthy, K. A comparison of static and dynamic functional connectivities for identifying subjects and biological sex using intrinsic individual brain connectivity. Sci. Rep. 2019, 9, 1–11.

15. Yahata, N.; Kasai, K.; Kawato, M. Computational neuroscience approach to biomarkers and treatments for mental disorders. Psychiatry Clin. Neurosci. 2017, 71, 215–237.

16. Chen, H.; Duan, X.; Liu, F.; Lu, F.; Ma, X.; Zhang, Y.; Uddin, L.Q.; Chen, H. Multivariate classification of autism spectrum disorder using frequency-specific resting-state functional connectivity—A multi-center study. Prog. Neuro-Psychopharmacol. Biol. Psychiatry 2016, 64, 1–9.

17. Abraham, A.; Milham, M.P.; Di Martino, A.; Craddock, R.C.; Samaras, D.; Thirion, B.; Varoquaux, G. Deriving reproducible biomarkers from multi-site resting-state data: An Autism-based example. NeuroImage 2017, 147, 736–745.

18. Chaitra, N.; Vijaya, P.; Deshpande, G. Diagnostic prediction of autism spectrum disorder using complex network measures in a machine learning framework. Biomed. Signal Process. Control 2020, 62, 102099.

19. Bernas, A.; Aldenkamp, A.P.; Zinger, S. Wavelet coherence-based classifier: A resting-state functional MRI study on neurodynamics in adolescents with high-functioning autism. Comput. Methods Programs Biomed. 2018, 154, 143–151.

20. Yu, K.; Fu, Q.; Ma, H.; Lin, T.R.; Li, X. Simulation data driven weakly supervised adversarial domain adaptation approach for intelligent cross-machine fault diagnosis. Struct. Health Monit. 2021, 20.

21. Al-Hiyali, M.I.; Yahya, N.; Faye, I.; Khan, Z.; Alsaih, K. Classification of BOLD FMRI signals using wavelet transform and transfer learning for detection of autism spectrum disorder. In Proceedings of the 2020 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES), Langkawi Island, Malaysia, 1–3 March 2021; pp. 94–98.

22. Zhai, T.; Koriche, F.; Wang, H.; Gao, Y. Tracking sparse linear classifiers. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 2079–2092.

23. Billings, J.M.; Eder, M.; Flood, W.C.; Dhami, D.S.; Natarajan, S.; Whitlow, C.T. Machine learning applications to resting-state functional MR imaging analysis. Neuroimaging Clin. 2017, 27, 609–620.

24. Craddock, C.; Benhajali, Y.; Chu, C.; Chouinard, F.; Evans, A.; Jakab, A.; Khundrakpam, B.S.; Lewis, J.D.; Li, Q.; Milham, M.; et al. The neuro bureau preprocessing initiative: Open sharing of preprocessed neuroimaging data and derivatives. Front. Neuroinform. 2013. Available online: https://www.frontiersin.org/10.3389/conf.fninf.2013.09.00041/event_abstract (accessed on 28 July 2021).

25. Yan, C.; Zang, Y. DPARSF: A MATLAB toolbox for pipeline data analysis of resting-state fMRI. Front. Syst. Neurosci. 2010, 4, 13.

26. Zhang, J.; Yuan, Z.; Huang, J.; Yang, Q.; Chen, H. Quantification of the power changes in BOLD signals using Welch spectrum method during different single-hand motor imageries. Magn. Reson. Imaging 2014, 32, 1307–1313.

27. Cribben, I.; Haraldsdottir, R.; Atlas, L.Y.; Wager, T.D.; Lindquist, M.A. Dynamic connectivity regression: Determining state-related changes in brain connectivity. Neuroimage 2012, 61, 907–920.

28. Torrence, C.; Compo, G.P. A practical guide to wavelet analysis. Bull. Am. Meteorol. Soc. 1998, 79, 61–78.

29. Morabito, F.C.; Campolo, M.; Mammone, N.; Versaci, M.; Franceschetti, S.; Tagliavini, F.; Sofia, V.; Fatuzzo, D.; Gambardella, A.; Labate, A.; et al. Deep learning representation from electroencephalography of early-stage Creutzfeldt-Jakob disease and features for differentiation from rapidly progressive dementia. Int. J. Neural Syst. 2017, 27, 1650039.

30. Yahya, N.; Musa, H.; Ong, Z.Y.; Elamvazuthi, I. Classification of Motor Functions from Electroencephalogram (EEG) Signals Based on an Integrated Method Comprised of Common Spatial Pattern and Wavelet Transform Framework. Sensors 2019, 19, 4878.

31. Rodríguez-Murillo, J.C.; Filella, M. Significance and Causality in Continuous Wavelet and Wavelet Coherence Spectra Applied to Hydrological Time Series. Hydrology 2020, 7, 82.

32. Grinsted, A.; Moore, J.C.; Jevrejeva, S. Application of the cross wavelet transform and wavelet coherence to geophysical time series. Nonlinear Process. Geophys. 2004, 11, 561–566.

33. Choe, J.; Lee, S.M.; Do, K.H.; Lee, G.; Lee, J.G.; Lee, S.M.; Seo, J.B. Deep Learning–based Image Conversion of CT Reconstruction Kernels Improves Radiomics Reproducibility for Pulmonary Nodules or Masses. Radiology 2019, 292, 365–373.

34. Albahri, O.; Zaidan, A.; Albahri, A.; Zaidan, B.; Abdulkareem, K.H.; Al-Qaysi, Z.; Alamoodi, A.; Aleesa, A.; Chyad, M.; Alesa, R.; et al. Systematic review of artificial intelligence techniques in the detection and classification of COVID-19 medical images in terms of evaluation and benchmarking: Taxonomy analysis, challenges, future solutions and methodological aspects. J. Infect. Public Health 2020, 13, 1381–1396.

35. Sato, W.; Kubota, Y.; Kochiyama, T.; Uono, S.; Yoshimura, S.; Sawada, R.; Sakihama, M.; Toichi, M. Increased putamen volume in adults with autism spectrum disorder. Front. Hum. Neurosci. 2014, 8, 957.

36. Luo, X.; Mao, Q.; Shi, J.; Wang, X.; Li, C.S.R. Putamen gray matter volumes in neuropsychiatric and neurodegenerative disorders. World J. Psychiatry Ment. Health Res. 2019, 3, 1020.

| Site | Country | Vendor | Voxel Size (mm) | Flip Angle (deg) | TR (sec) | Time Points (sec) | ASD Subjects | APD Subjects | PDD-NOS Subjects | NC Subjects | Total per Site |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NYU | USA | Siemens | 1.3 | 7 | 2 | 175 | 9 | 8 | 5 | 9 | 31 |
| SBL | Netherlands | Philips | 1 | 8 | 2.2 | 195 | 9 | 5 | 6 | 9 | 29 |
| SDSU | USA | GE | 1 | 4.5 | 2 | 175 | 9 | 6 | 2 | 9 | 26 |
| Trinity | Ireland | Philips | 1 | 8 | 2 | 145 | - | 4 | 7 | - | 11 |
| Yale | USA | Siemens | 1 | 9 | 2 | 195 | 9 | 5 | 14 | 9 | 37 |
| USM | USA | Siemens | 1 | 9 | 2 | 235 | - | - | 1 | - | 1 |
| KKI | USA | Philips | 1 | 8 | 2.5 | 151 | - | 8 | - | - | 8 |
| UM1 | USA | GE | 1.2 | 15 | 2 | 295 | - | - | 1 | - | 1 |
| Total | | | | | | | 36 | 36 | 36 | 36 | 144 |

| Node for WCT | Number of WCT Images per Class | Accuracy (%) |
|---|---|---|
| 1st-node | 4140 | 89.2 |
| 2nd-node | 4140 | 84.9 |
| 3rd-node | 4140 | 83.1 |
| 1st + 2nd-nodes | 8280 | 85.5 |
| 1st + 3rd-nodes | 8280 | 84.7 |
| 1st + 2nd + 3rd-nodes | 12,420 | 81.7 |

| Optimizer | Accuracy | Sensitivity | Specificity | Precision | F-Score |
|---|---|---|---|---|---|
| RMSPROP | 84.5 ± 1.8 | 85.1 ± 2.5 | 84.3 ± 2.2 | 84.2 ± 2.8 | 84.6 ± 1.9 |
| SGDM | 87.2 ± 0.9 | 87.1 ± 1.4 | 87.4 ± 1.5 | 87.4 ± 1.5 | 87.2 ± 0.9 |
| ADAM | 89.2 ± 0.7 | 89.1 ± 2.5 | 89.5 ± 1.9 | 89.5 ± 2.5 | 89.2 ± 0.5 |

| k-Folds | Accuracy | Sensitivity | Specificity | Precision | F-Score |
|---|---|---|---|---|---|
| 5-fold | 88.6 ± 1.5 | 88.7 ± 2.3 | 88.7 ± 2.3 | 88.6 ± 2.6 | 88.6 ± 1.5 |
| 10-fold | 89.2 ± 0.7 | 89.1 ± 2.5 | 89.5 ± 1.9 | 89.5 ± 2.5 | 89.2 ± 0.5 |
| 15-fold | 89.6 ± 1.6 | 88.9 ± 2.4 | 90.5 ± 1.8 | 90.6 ± 2.1 | 89.7 ± 1.5 |
| 20-fold | 89.8 ± 1.7 | 90.1 ± 2.6 | 89.7 ± 2.2 | 89.6 ± 2.5 | 89.8 ± 1.7 |
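The five columns reported in these results tables (accuracy, sensitivity, specificity, precision, F-score) are assumed here to follow the usual confusion-matrix definitions for a binary classifier. The sketch below shows those standard formulas; the function name `binary_metrics` and the example counts are hypothetical and are not taken from the study.

```python
import math


def binary_metrics(tp, fn, fp, tn):
    """Standard binary classification metrics from confusion-matrix counts.

    tp/fn count positive-class samples predicted correctly/incorrectly;
    tn/fp count negative-class samples predicted correctly/incorrectly.
    """
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)   # recall on the positive class
    specificity = tn / (tn + fp)   # recall on the negative class
    precision = tp / (tp + fp)
    f_score = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f_score": f_score,
    }


# Hypothetical counts for illustration only.
example = binary_metrics(tp=90, fn=10, fp=20, tn=80)
```

In a k-fold setting, a function like this would be applied to each fold's confusion matrix, and the per-fold values then summarized as mean ± standard deviation.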

| Site | Accuracy | Sensitivity | Specificity | Precision | F-Score |
|---|---|---|---|---|---|
| NYU | 87.5 | 88.3 | 86.8 | 86.5 | 87.4 |
| SBL | 86.9 | 87.6 | 86.2 | 85.9 | 86.7 |
| SDSU | 86.9 | 88.4 | 85.4 | 84.8 | 86.5 |
| Yale | 85.8 | 85.4 | 86.2 | 86.3 | 85.8 |
| Mean | 86.8 | 87.4 | 86.1 | 85.9 | 86.6 |

| Paper | Classifier | FC Modelling | Method | Subjects | Accuracy (%) |
|---|---|---|---|---|---|
| Chen et al. 2016 [16] | SVM | Static FC | Pearson correlation | 240 | 79.2 |
| Abraham et al. 2017 [17] | SVM | Static FC | Covariance matrix | 871 | 67 |
| Heinsfeld et al. 2018 [12] | DNN | Static FC | Pearson correlation | 1035 | 70 |
| Bernas et al. 2018 [19] | SVM | Dynamic FC | Wavelet coherence | 54 | 80 |
| Sherkatghanad et al. 2020 [10] | DNN | Static FC | Pearson correlation | 871 | 70.2 |
| Our proposed method | CNN | Dynamic FC | Wavelet coherence | 72 | 89.8 |

| Optimizer | F1-Score (%): ASD | F1-Score (%): APD | F1-Score (%): PDD-NOS | Overall Accuracy (%) |
|---|---|---|---|---|
| RMSPROP | 79.6 | 80.7 | 81.7 | 80.2 |
| SGDM | 80.9 | 79.8 | 80.6 | 80.3 |
| ADAM | 81.7 | 82.3 | 83.6 | 82.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-Hiyali, M.I.; Yahya, N.; Faye, I.; Hussein, A.F. Identification of Autism Subtypes Based on Wavelet Coherence of BOLD FMRI Signals Using Convolutional Neural Network. Sensors 2021, 21, 5256. https://doi.org/10.3390/s21165256
