1. Introduction

Fractures of the wrist and forearm are common injuries, especially among older and frail persons who may slip and extend their arm to protect themselves [1]. In some cases, the person involved may think that they have not injured themselves seriously, and the fractures are ignored and left untreated [2]. These fractures can impair wrist movement [3]. In more serious cases, fractures can lead to complications such as ruptured tendons or long-lasting stiffness of the fingers [4] and can impact the quality of life [5].

Treatment of fractures through immobilisation and casting is an old, tried-and-tested technique. There are Egyptian records describing the re-positioning of bones, fixing with wood and covering with linen [6], and there are also records of fracture treatment in the Iron Age and Roman Britain where “skilled practitioners” treated fractures and even “minimised the patient’s risk of impairment” [7]. The process of immobilisation is now routinely performed in the Accident and Emergency (A&E) departments of hospitals under local anaesthesia and is known as Manipulation under Anaesthesia (MUA) [8], or closed reduction and casting. In many cases, MUA interventions represent a significant proportion of the Emergency Department workload. In many hospitals, patients are initially treated with a temporary plaster cast and return later for the manipulation as a planned procedure. MUA, although simple, is not entirely free of risks. Problems include bruising, tears of the skin, complications related to the local anaesthetic, and discomfort for the patients. A large proportion of MUA procedures also fail: it has been reported that 41% of Colles’ fractures treated with MUA required alternative treatment [9]. The alternative to MUA is open surgery, also known as Open Reduction and Internal Fixation (ORIF) [10], which can be performed with local or general anaesthesia [11,12] to manipulate the fractured bones and fixate them with metallic pins, plates or screws. The surgical procedure is more complicated and expensive than MUA. In some cases, it can also lead to serious complications, especially with metallic elements that can interfere with the tendons and cut through subchondral bones [13,14]. However, ORIF is more reliable as a long-term treatment.

Despite the considerable research in the area [8,10,13,15,16,17,18], there is no certainty as to which procedure to follow for wrist fractures [19,20,21]. The main tool to examine wrist fractures is diagnostic imaging, e.g., X-ray or Computed Tomography (CT). The images produced are examined by highly skilled radiologists and radiographers in search of anomalies, who then determine, based on experience, the most appropriate procedure for each case. The volume of diagnostic images has increased significantly [22], and work overload is further exacerbated by a shortage of qualified radiologists and radiographers, as reported by The Royal College of Radiologists [23]. Thus, the possibility of providing computational tools to assess radiographs of wrist fractures is attractive. Traditional analysis of wrist fractures has focused on geometric measurements that are extracted either manually [24,25,26,27] or through what is now considered traditional image processing [28]. The geometric measurements that have been of interest are, amongst others: radial shortening [29], radial length [25], volar and dorsal displacements [30], palmar tilt and radial inclination [31], ulnar variance [24], articular stepoff [26], and metaphyseal collapse ratio [27]. Non-geometric measurements such as bone density [32,33], as well as other osteoporosis-related measurements, e.g., cortical thickness, internal diameter, and cortical area [34], have also been considered to evaluate bone fragility.

However, in recent years, computational analysis has been revolutionised by the use of machine learning and artificial intelligence (AI), especially with deep learning architectures [35]. Deep learning is a branch of machine learning in which input data is provided to a model that discovers, or learns, the representations required to perform a classification [36]. These models have a large number of levels, far more than the input/hidden/output layers of the early configurations, and thus are considered deep. At each level, nonlinear modules transform the representation of the data into a more abstract representation [37].

Deep learning has had a significant impact in many areas of image processing and computer vision. For instance, it provides outstanding results in difficult tasks like the classification of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [38], and it has been reported that deep learning architectures have in some cases outperformed expert dermatologists in the classification of skin cancer [39]. Deep learning has been widely applied for segmentation and classification [40,41,42,43,44,45,46,47,48].

A comparison of a deep learning system against radiologists’ interpretation for the detection and localisation of distal radius fractures has been reported by [49]. Diagnostic improvements have been studied by [50], where deep learning supports the medical specialist towards a better outcome in patient care. Automated fracture detection and localisation for wrist radiographs have also been reported as feasible and worth further investigation [51].

Notwithstanding their merits, deep learning architectures have several well-known limitations: significant computational power is required, together with large amounts of training data. There is a large number of architectures, and each of them requires a large number of parameters to be fine-tuned. Many publications use one or two of these architectures and compare against a baseline, like human observers or a traditional image processing methodology. However, a novice user may struggle to select one particular architecture, which in turn may not necessarily be the most adequate for a certain purpose. In addition, one recurrent criticism is their black box nature [52,53,54,55], which implies that it is not always easy to understand why the networks perform in the way they do. One method to address this opacity is through explainable techniques, such as activation maps [56,57], as a tool to explain visually the localisation of class-specific image regions.

In this work, the classification of radiographs into two classes, normal and abnormal, with eleven convolutional neural network (CNN) architectures was investigated. The architectures compared were the following: GoogleNet, VGG-19, AlexNet, SqueezeNet, ResNet-18, Inception-v3, ResNet-50, VGG-16, ResNet-101, DenseNet-201 and Inception-ResNet-v2. This paper extends a preliminary version of this work [58]. Here, we extended the work by applying data augmentation to the two models that provided the best results, that is, ResNet-50 and Inception-ResNet-v2. Furthermore, class activation maps were generated and analysed.

The dataset used to compare the architectures was the Stanford MURA (musculoskeletal radiographs) dataset [59]. This database contains a large number of radiographs: 40,561 images from 14,863 studies, where each study is manually labelled by radiologists as either normal or abnormal. The radiographs cover seven anatomical regions, namely Elbow, Finger, Forearm, Hand, Humerus, Shoulder and Wrist. This paper focused mainly on the wrist images. The main contributions of this work are the following: (1) an objective comparison of the classification results of 11 architectures, which can help the selection of a particular architecture in future studies, (2) a comparison of the classification with and without data augmentation, in which augmentation gave significantly better results, and (3) the use of class activation mapping to analyse the regions of interest of the radiographs.

The rest of the manuscript is organised as follows. Section 2 describes the materials, that is, the database of radiographs, and the methods, that is, the deep learning models that were compared and the class activation mapping (CAM) used to visualise the activated regions. The performance metrics, accuracy and Cohen’s kappa coefficient, are described at the end of that section. Section 3 presents the results of all the experiments and the effect of the different hyper-parameters. Predicted abnormalities in the radiographic images are also visualised using class activation mapping. The manuscript finishes with a discussion of the results in Section 4.
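For readers unfamiliar with the two performance metrics named above, the following minimal sketch computes accuracy and Cohen’s kappa for a binary normal/abnormal classification using the standard definitions (observed agreement corrected for the agreement expected by chance). The function name and the small label vectors are hypothetical and serve only as an illustration; they are not data from this study.

```python
def accuracy_and_kappa(y_true, y_pred):
    """Return (accuracy, Cohen's kappa) for binary labels 0 = normal, 1 = abnormal."""
    n = len(y_true)
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    # Observed agreement is the accuracy.
    p_o = (tp + tn) / n
    # Agreement expected by chance, from the marginal totals of each class.
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / (n * n)
    # Kappa is undefined when chance agreement is 1; report 0 in that case.
    kappa = (p_o - p_e) / (1 - p_e) if p_e != 1 else 0.0
    return p_o, kappa


# Hypothetical labels for ten radiographs.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
acc, kappa = accuracy_and_kappa(y_true, y_pred)
# Here accuracy is 0.7 and kappa is approximately 0.4.
```

Kappa is lower than accuracy in this example because half of the observed agreement would be expected by chance alone given the class proportions.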

3. Results

The effect of the number of epochs, mini-batch size and data augmentation was evaluated on the classification of wrist radiographs with eleven CNN architectures. Table 6 and Table 7 present the aggregated best results for each architecture in prediction accuracy and Cohen’s kappa score, respectively.

For the 11 architectures with no data augmentation, Inception-ResNet-v2 performs the best in both accuracy and Cohen’s kappa. DenseNet-201 fares slightly lower on both metrics, and the lowest results were obtained with GoogleNet (see Table 6 and Table 7 for the values). This potentially indicates better feature extraction with deeper network architectures.

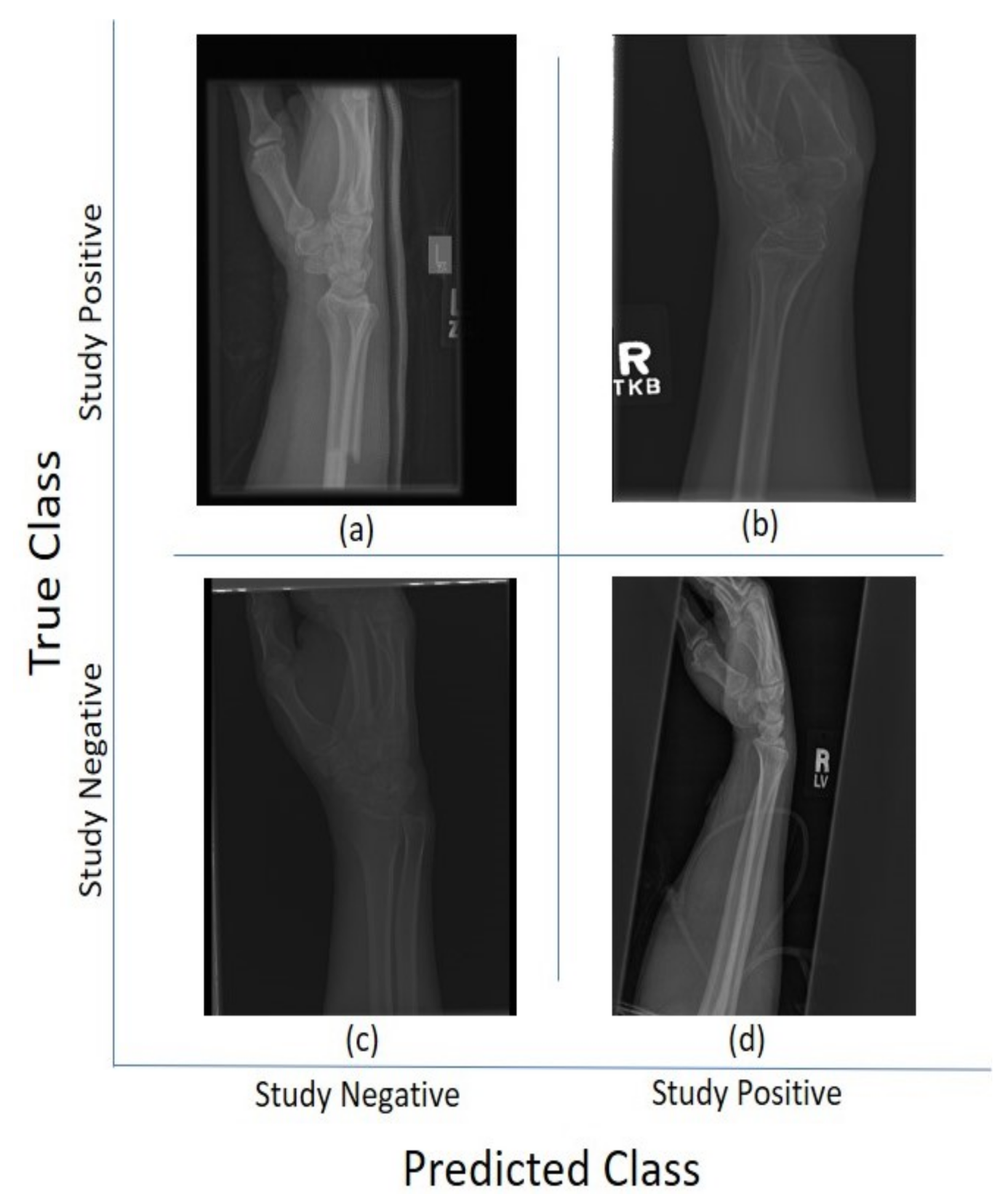

Figure 5 and Figure 6 illustrate some cases of the classification for Lateral and Postero-anterior views of wrist radiographs.

The comparison between ADAM, SGDM, and RMSprop shows no clear superiority of any optimiser, implying that each of them was capable of reaching the optimal solution. Increasing the number of epochs beyond 30 yields no improvement in accuracy, indicating that the models have converged. The mini-batch sizes attempted show no difference in results. With data augmentation, the results show significant improvement, e.g., accuracy increases by approximately 19% (up by 0.134) and Cohen’s kappa by 39% (up by 0.197) for the Inception-ResNet-v2 architecture.
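To make the notion of data augmentation concrete, the sketch below applies a random reflection and a random rotation by a multiple of 90 degrees to an image array. These two transforms are assumptions chosen for simplicity (rotation and reflection are mentioned only as examples in the Discussion); the augmentation settings actually used in the experiments are not given in this section, and the function name is hypothetical.

```python
import numpy as np


def augment(image, rng):
    """Return a randomly reflected and rotated copy of a 2-D image array."""
    out = image
    # Horizontal reflection with probability 0.5 (assumed setting).
    if rng.random() < 0.5:
        out = np.fliplr(out)
    # Rotation by 0, 90, 180 or 270 degrees (assumed setting).
    k = int(rng.integers(0, 4))
    out = np.rot90(out, k)
    return out.copy()


# Hypothetical 4 x 4 "radiograph" used only to exercise the function.
rng = np.random.default_rng(42)
image = np.arange(16).reshape(4, 4)
augmented = augment(image, rng)
```

Each call produces a differently transformed copy, so the network sees more varied examples of the same radiograph during training while the class label stays unchanged.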

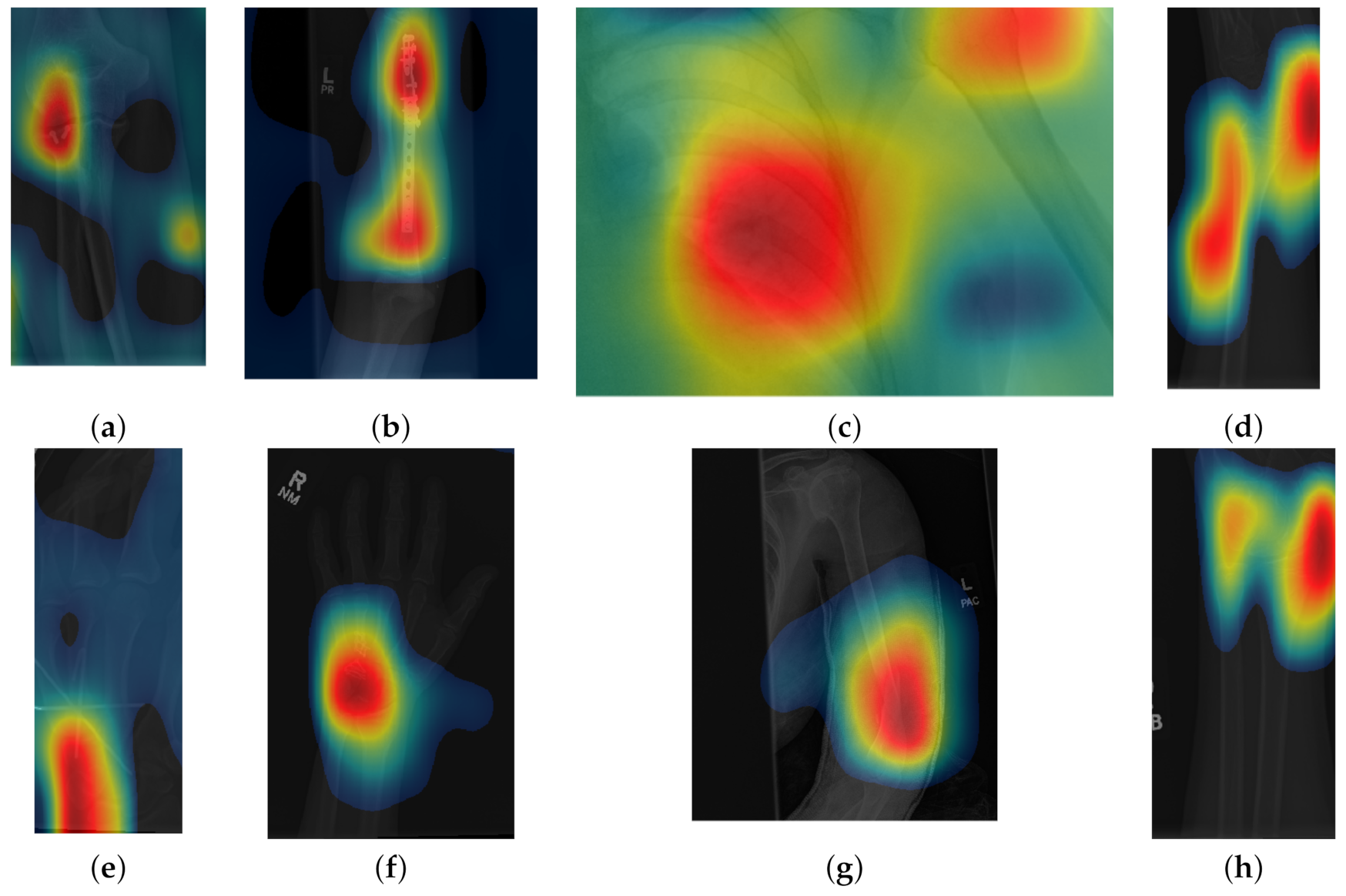

Class activation maps were obtained and overlaid on top of the representative images in Figure 1 and Figure 2. The CAMs obtained for ResNet-50 are shown in Figure 7 and Figure 8, while those for Inception-ResNet-v2 are shown in Figure 9 and Figure 10. In all cases, the CAMs were capable of indicating the region of attention used in the two architectures applied. This is especially valuable for identifying where the abnormalities are in Figure 8 and Figure 10. While both models indicate similar regions of attention, Inception-ResNet-v2 appears to have smaller (i.e., more focused) attention regions than ResNet-50. This may indicate a better extraction of features in Inception-ResNet-v2, leading to better prediction results. Finally, the activation maps corresponding to Figure 5 and Figure 6 are presented in Figure 11.

4. Discussion

In this paper, eleven CNN architectures for the classification of wrist X-rays were compared. Various hyper-parameters were tested during the experiments. Inception-ResNet-v2 provided the best results, which were compared with the MURA challenge leaderboard, which reports 70 entries. The reference points on the leaderboard were the top three places, the lowest score and the best performance for a radiologist. Without data augmentation, the results of all the networks were close to the bottom of the table. Data augmentation significantly improved the results, which reached the 25th place of the leaderboard. This result was above the average of the table and confirmed the positive effect of data augmentation, which brought the performance close to human level.

The CAM provides a channel to interpret how a CNN architecture is trained for feature extraction, and the visualisation of the CAMs in the representative images was interesting in several aspects. First, the activated regions in ResNet-50 appeared more broad-brushed than those of Inception-ResNet-v2. This applied both to the cases without abnormalities (Figure 7 and Figure 9) and those with abnormalities (Figure 8 and Figure 10). Second, the localisation of regions of attention by Inception-ResNet-v2 also appeared more precise than that of ResNet-50. This can be appreciated in several cases, for instance the forearm that contains a metallic implant (b) and the humerus with a fracture (g). Third, the activation on the cases without abnormalities provides a consistent focus on areas where abnormalities are expected to appear. This suggests that the network has appropriately learned the regions essential to the correct class prediction.

One important point to notice is that all the architectures provided lower results than those at the top of the MURA leaderboard table, even those tested with data augmentation. The top 3 entries in the MURA leaderboard are: (1) base-comb2-xuan-v3 (ensemble) by jzhang Availink, (2) base-comb2-xuan (ensemble), also by jzhang Availink and (3) muti_type (ensemble model) by SCU_ MILAB. These reported Cohen’s kappa values of (1) 0.843, (2) 0.834 and (3) 0.833, respectively. Ensemble models are reported for the top 11 entries, and the highest single model is located in position 12 with a value of 0.773. Whilst in this paper only the wrist subset of the MURA dataset was analysed, wrist radiographs are not considered to be more difficult to classify than those of the other anatomical parts. When data augmentation was applied to the input of the architectures, the results were significantly better, but still lower than the leaderboard winners. We speculate that further steps could improve the performance of CNN-based classification. Specifically:

1. Data Pre-Processing: In addition to a grid search of the hyper-parameters, image pre-processing to remove irrelevant features (e.g., text labels) may help the network to target its attention. Appropriate data augmentations (e.g., rotation, reflection, etc.) will allow better pattern recognition to be trained and, in turn, provide higher prediction accuracy.

2. Post Training Evaluation: The class activation map provides an interpretable visualisation for clinicians and radiologists to understand how a prediction was made. It allows the model to be re-trained with additional data to mitigate any model bias and discrepancy. Having a clear association of the key features with the prediction classes [70] will aid in developing a more trustworthy CNN-based classification, especially in a clinical setting.

3. Model Ensemble: Model ensembles [71,72], or combinations of the results of different architectures, have also shown better results than an individual configuration. This is also observed in the leaderboard for the original MURA competition.

4. Domain Knowledge: The knowledge of anatomy (e.g., bone structure in elbow or hands [73]) or the location/orientation of bones [28] can be supplemented in a CNN-based classification to provide further fine-tuning in anomaly detection as well as guiding the attention of the network for better results [74].
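As a hedged illustration of the model ensemble idea listed above, the sketch below averages the abnormality probabilities predicted by several models and thresholds the mean. Simple averaging is only one possible combination rule and is an assumption here; the function name, the threshold of 0.5 and the probability values are hypothetical and do not come from the experiments or from the leaderboard entries.

```python
import numpy as np


def ensemble_predict(prob_by_model, threshold=0.5):
    """Average per-model abnormality probabilities and threshold the mean.

    prob_by_model : array-like of shape (n_models, n_images), where each entry
                    is one model's predicted probability of "abnormal".
    Returns the mean probability per image and the resulting 0/1 labels.
    """
    probs = np.asarray(prob_by_model, dtype=float)
    mean_prob = probs.mean(axis=0)
    labels = (mean_prob >= threshold).astype(int)
    return mean_prob, labels


# Hypothetical outputs of three models on three radiographs.
prob_by_model = [
    [0.9, 0.4, 0.2],
    [0.7, 0.6, 0.1],
    [0.8, 0.3, 0.4],
]
mean_prob, labels = ensemble_predict(prob_by_model)
# The second radiograph is labelled normal even though one model voted abnormal.
```

Averaging lets a confident majority outweigh a single dissenting model, which is one plausible reason why ensembles can outperform an individual configuration.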