1. Introduction

In the field of surface defect detection, traditional manual inspection has the disadvantages of low accuracy, poor real-time defect detection, low efficiency, and high labor intensity. Machine-vision-based methods have the advantages of non-contact, high real-time, and no manual participation [

1], these methods are increasingly being applied in modern industries [

2,

3,

4,

5,

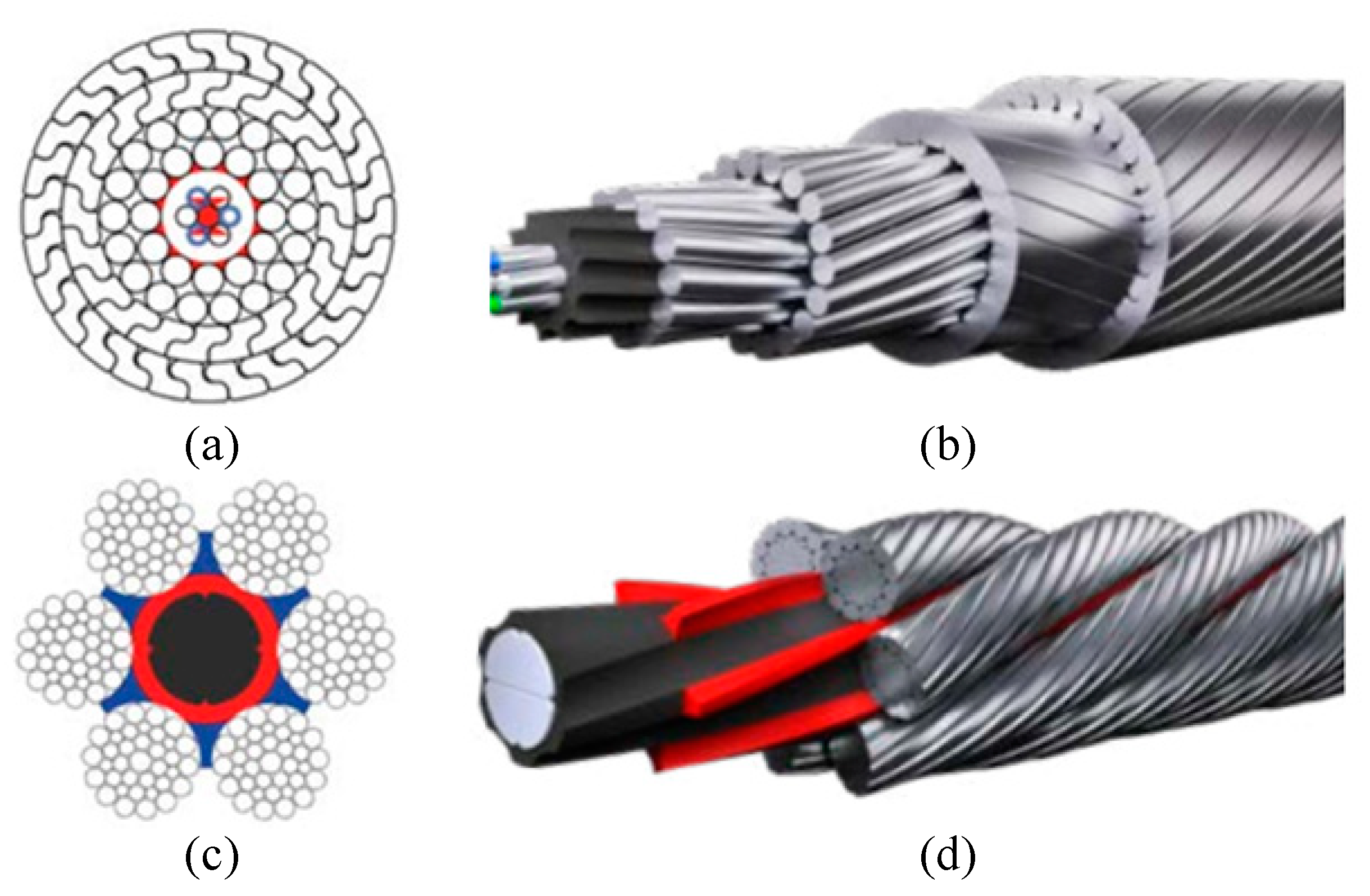

6]. In this study, we investigated machine-vision-based methods for detecting defects on the surface of steel wire ropes, using sealed wire ropes as the research object. Sealed wire ropes are widely used in aerial ropeway systems in mines or at scenic spots for transporting goods or carrying people. This type of wire rope is usually twisted from one to three layers of shaped steel wire wrapped with multiple strands of round heart steel wire, with a tight structure, a smooth surface, no rotation, and good sealing characteristics, with the section structure and appearance shown in

Figure 1a,b. The sealed wire rope surface defects occur mainly because the wire rope is pulled apart by the pressure of the surface rope strand fracture to form fracture defects. As a result of the force exerted by the weight of goods and people, local broken wire develops into a concentrated broken strand in a short period. When the concentrated broken strand exceeds the scrap standard, the whole rope needs to be replaced, leading to a short life cycle. At the same time, in this short life cycle, the quantity of fracture defect data is small, which makes it difficult to use supervised learning methods to detect defects [

7].

There is little literature on machine-vision-based defect detection for sealed wire ropes, but researchers have carried out extensive work on surface defect detection for ordinary non-sealed wire ropes, which play an important role in lifting and traction systems. Sealed and non-sealed wire ropes differ in the composition and twisting method of their wires. As a result, the surface of a non-sealed wire rope has a finer texture than that of a sealed wire rope, and this texture is more prone to wear, broken wires, corrosion, and other defects. The cross-section structure and appearance of a non-sealed wire rope are shown in Figure 1c,d.

Zhou et al. [8,9,10,11] studied non-sealed wire ropes in depth. They proposed a wear detection method based on a deep convolutional neural network, trained on a large number of labeled wear sample images, to detect the degree of wear of wire ropes. To address the influence of light noise, they proposed detecting wire rope defect areas using texture features from the uniform local binary pattern operator (u-LBP) together with principal component analysis. In addition, to solve the wire rope surface defect classification problem, they proposed an optimized support vector machine method based on uniform local binary features and gray-level co-occurrence matrix features. Xh et al. [12] proposed an intelligent convolutional-neural-network-based method for detecting wire rope damage, trained on a large number of damage sample images. Shi et al. [13] measured wire rope wear with an infrared method, applying Canny edge detection to images from an infrared camera. From the perspective of structural modeling, Wacker et al. [14] combined structure- and appearance-enhanced anomaly detection for wire ropes, designing probabilistic appearance models to detect defects. To achieve better supervised learning of defect models, Platzer et al. [15] proposed a new strategy for wire rope defect localization using hidden Markov models. To measure the wire rope twist distance, Vallan et al. [16] established a mathematical model of the wire rope profile and a vision-based twist distance measurement technique for metal wire ropes. Dong et al. [17] detected wire rope defects by extracting texture features such as the smoothness and entropy of the wire rope surface. Ho et al. [18] combined image enhancement techniques with principal component analysis (PCA) to detect wire rope surface defects. These methods have achieved good results in surface defect detection for non-sealed steel wire ropes, but they depend on a large amount of defect data and are therefore not suitable for defect detection on sealed steel wire ropes.

When an outdoor camera is fixed, dynamic background modeling is usually used to extract the changing areas of the fixed scene. The background modeling process is shown in Figure 2: a background model is dynamically constructed from the real-time image sequence, and the scene change area, that is, the foreground area, is obtained from the difference between the dynamic background and the real-time image frame. For continuously collected outdoor images of a sealed steel wire rope, the variation of pixel gray levels in the rope surface area resembles this application scenario. In the time domain, a pixel at a fixed position in the image sequence of the rope surface area may fall on a rope strand edge, a rope strand surface, oil sludge, or a fracture, but pixels of the same type have similar gray levels over time. Moreover, rope strand edges and fractures have similar gray levels, the wire rope surface and oil sludge have similar gray levels, and the gray level of the gaps between strands differs significantly from that of the wire rope surface. In the spatial domain, pixels within the rope strand area, or within the rope strand edge area, of a wire rope surface image have similar gray levels. Based on these image characteristics, a change model can be established for each single pixel, and defect areas on the wire rope surface can be extracted with a methodology similar to dynamic background modeling.

Classical dynamic background modeling methods include the visual background extractor (VIBE) proposed by O. Barnich et al. [19,20], which builds a background model for each pixel with random sample replacement; the KNN (k-nearest neighbor)-based adaptive background mixture model proposed by Stauffer C et al. [21], which models each pixel and performs foreground segmentation using nonparametric probability density estimation and KNN classification; and the Gaussian-mixture-model-based MOG2 method proposed by Zivkovic Z [21,22,23], which performs dynamic background modeling using parametric probability density estimation, Gaussian mixture distributions, and shadow detection [24].
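The background-difference principle of Figure 2 can be illustrated with a minimal sketch. It uses a generic running-average background (not VIBE, KNN, MOG2, or the method of this paper), stores grayscale frames as nested Python lists, and its learning rate `alpha` and difference threshold `diff_thresh` are hypothetical values chosen only for illustration.

```python
from typing import List

Image = List[List[float]]  # grayscale frame as rows of gray values
Mask = List[List[int]]     # binary foreground mask (1 = foreground)


def update_background(background: Image, frame: Image, alpha: float = 0.05) -> Image:
    """Running average: blend the new frame into the background with rate alpha."""
    return [
        [(1.0 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def foreground_mask(background: Image, frame: Image, diff_thresh: float = 30.0) -> Mask:
    """Foreground = pixels whose absolute difference from the background exceeds diff_thresh."""
    return [
        [1 if abs(f - b) > diff_thresh else 0 for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


if __name__ == "__main__":
    background = [[100.0] * 4 for _ in range(3)]
    frame = [row[:] for row in background]
    frame[1][2] = 20.0  # one pixel changes in an otherwise static scene
    print(foreground_mask(background, frame))  # only the changed pixel is marked 1
    background = update_background(background, frame)
```

This simple scheme relies on a static scene behind each pixel; when the camera travels along the rope, the texture under each pixel changes from frame to frame, which is the difficulty this paper addresses.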

The sealed steel wire rope of the freight ropeway studied in this paper is used outdoors, and defects are difficult to distinguish in the collected surface images for the following reasons: (1) the edges of the rope strands are blurred by strong light, weak light, reflection, uneven illumination, and shadow, as shown in Figure 3a–c; (2) the edges of the rope strands are difficult to distinguish because of oil stains on the surface, as shown in Figure 3d; and (3) the surface texture of the steel wire rope changes irregularly along the direction of motion between adjacent image frames. These characteristics make it difficult for existing dynamic background modeling methods to effectively segment the strands on the surface of the steel wire rope and to detect fracture defects.

To solve the above problems, this paper proposes a steel wire rope surface defect detection method based on a segmentation template and a spatiotemporal gray sample set. The technical route of the method is shown in Figure 4. A steel wire rope segmentation template is constructed in advance and used to segment and correct the rope strands in each real-time image. Pixel information is extracted from consecutive frames to build a dynamic pixel queue, which is combined with the rope strand information to construct the spatiotemporal gray sample set. The real-time image is then compared with the spatiotemporal gray sample set, and the defect areas in the rope surface image are extracted and marked. The main contributions of this paper are as follows:

- (1) Based on the geometric and texture features of the sealed wire rope, a segmentation template for sealed wire ropes is created for the first time;

- (2) A strand segmentation and correction method based on the steel wire rope segmentation template is proposed, which effectively solves the strand segmentation problem caused by the variable-speed movement and vibration of the ore hopper car;

- (3) A spatiotemporal gray sample set of pixels is constructed for the first time for the case of relative motion between the camera and the scene and is used for wire rope defect detection, which effectively solves the defect detection problems caused by illumination and oil contamination.

The remainder of this paper is organized as follows. Section 2 proposes a steel wire rope surface defect detection method based on the steel wire rope segmentation template and the spatiotemporal gray sample set. Section 3 describes the implementation of the algorithm. Section 4 verifies the validity of the method experimentally, and Section 5 presents the conclusions of this study.

2. Steel Wire Rope Surface Defect Detection Method Based on Segmentation Template and Spatiotemporal Gray Sample Set

2.1. Creation of Wire Rope Segmentation Template

Rope strand information is one of the bases for identifying surface defects on steel wire ropes: a fracture defect connects at least one pair of adjacent rope strand edges. When the camera is fixed on the bucket car and moves along the rope, the texture pattern of the strand edges in the wire rope surface image can be summarized from the structural characteristics of the sealed wire rope, and the sealed wire rope segmentation template is constructed accordingly. The template serves as prior knowledge for strand segmentation and defect detection. A segmentation template is created for each new steel wire rope before use, and it needs to be created only once during the service life of that rope.

In the preparation stage, the segmentation template is created as follows. The oil stain on the surface of the steel wire rope is wiped off until the texture is clear, a white background plate is added, and an image of the steel wire rope is collected. Straight lines are detected with the Hough transform to obtain the left and right boundaries of the steel wire rope, each expressed as a linear equation in the image coordinates (Formula (1)); the coordinates range over the image width and height, and the coefficients of each linear equation define the corresponding boundary. Otsu's adaptive threshold segmentation method (OTSU) [25] is then applied to the collected image: the gray value of darker pixels is set to 1 and that of brighter pixels is set to 0. Connected-component analysis is performed on the resulting binary image, and components with small areas are removed. Finally, the labeled steel wire rope segmentation template is obtained.

Figure 5a shows the steel wire rope image, and Figure 5b shows the segmentation template.
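The thresholding and clean-up steps of template creation can be sketched as follows. This is a minimal sketch assuming an 8-bit grayscale image stored as nested lists, 4-connectivity for connected components, and a hypothetical minimum area `min_area`; the Hough boundary detection is omitted, and the function names are illustrative. With OpenCV, `cv2.threshold` with `cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU` and `cv2.connectedComponentsWithStats` cover the same steps.

```python
from collections import deque
from typing import List

Gray = List[List[int]]  # 8-bit grayscale image: rows of ints in [0, 255]
Mask = List[List[int]]  # binary image: rows of 0/1


def otsu_threshold(img: Gray) -> int:
    """Return the gray level t that maximizes Otsu's between-class variance.

    Pixels <= t form the dark class, pixels > t the bright class.
    """
    hist = [0] * 256
    for row in img:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_score = 0, -1.0
    w0, sum0 = 0, 0  # count and gray-level sum of the dark class
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        score = w0 * w1 * (mu0 - mu1) ** 2  # between-class variance up to a constant
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def remove_small_components(mask: Mask, min_area: int) -> Mask:
    """Zero out 4-connected components of 1-pixels smaller than min_area."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    seen = [[False] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if out[sy][sx] != 1 or seen[sy][sx]:
                continue
            component, queue = [], deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:  # breadth-first flood fill of one component
                y, x = queue.popleft()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and out[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) < min_area:
                for y, x in component:
                    out[y][x] = 0
    return out


def make_template(img: Gray, min_area: int) -> Mask:
    """Otsu threshold, dark pixels -> 1, bright pixels -> 0, then drop small blobs."""
    t = otsu_threshold(img)
    dark = [[1 if v <= t else 0 for v in row] for row in img]
    return remove_small_components(dark, min_area)
```

For example, on a bright image crossed by one dark vertical stripe plus an isolated dark speck, `make_template(img, min_area=3)` keeps the stripe and removes the speck.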

2.2. Strand Segmentation and Correction Based on Wire Rope Segmentation Template

In practical application, the image acquisition equipment is mounted on the axle of the ore bucket car. As shown in Figure 6, the equipment moves relative to the steel wire rope, and images of the upper surface of the rope are collected by an MV-CA030-10GC industrial camera (Hikvision, Hangzhou, China). The left and right positions of the rope in the collected real-time images are basically consistent. Although the surface texture of the steel wire rope changes rapidly along the rope direction, the segmentation template can be translated a certain distance along the rope direction so that the rope strand edges in the template coincide with those in the real-time image. Based on these characteristics, and to solve the problem of rope strand segmentation under oil sludge coverage, reflection, and uneven illumination, this paper proposes a rope strand segmentation and correction method based on the steel wire rope segmentation template.

The method is based on edge information. The rope strand edge texture in the image is seriously affected by oil sludge, uneven illumination, and reflection, so the rope strand edge information is incomplete. To extract edges from noise-affected wire rope surface images, the FoGDbED method proposed by Zhang G et al. [26] is used to extract edge pixels, as it can effectively extract edges from images affected by noise. The edge image of the current image is obtained by applying the FoGDbED edge detection function to the current image.

The proposed method translates the steel wire rope segmentation template along the rope extension direction, matches it with the edge information, calculates a coincidence response value at each position, and selects the position with the maximum coincidence response value as the best matching position. Each translation operation can be seen as shifting the wire rope segmentation template by a translation vector, which produces a translated template image. The translation is carried out along the extension direction of the rope, so the translation angle can be calculated from the slope of the boundary lines in Formula (1): each single translation vector is constrained to follow the slope of the left or right boundary line. Each translation step moves the template a distance of 1 downward along the rope direction, so the j-th translation shifts it by a total distance of j. The farthest translation distance, determined by the number of translations, must be less than the gap distance between adjacent strands along the extension direction of the steel wire rope, as shown in Figure 7, where the angle of the steel wire rope is determined by the boundary slope. This limit is sufficient because, within this translation distance along the rope, the segmentation template must coincide with the gaps between the steel wire rope strands.
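A minimal sketch of the translation step follows. It assumes, hypothetically, that each rope boundary in Formula (1) is written as x = k*y + b with the rope running from the top to the bottom of the image, so a unit step downward along the rope is the vector (k, 1)/√(1 + k²); it also assumes integer-pixel shifts obtained by rounding. The helper names `unit_step`, `max_translations`, and `shift_mask` are illustrative, not from the paper.

```python
import math
from typing import List, Tuple

Mask = List[List[int]]


def unit_step(k: float) -> Tuple[float, float]:
    """Unit translation vector (dx, dy) along an assumed boundary x = k*y + b,
    pointing downward in the image (dy > 0)."""
    norm = math.hypot(k, 1.0)
    return k / norm, 1.0 / norm


def max_translations(gap: float) -> int:
    """Largest number of unit steps whose total length stays strictly below the strand gap."""
    return max(0, math.ceil(gap) - 1)


def shift_mask(mask: Mask, dx: float, dy: float) -> Mask:
    """Translate a binary mask by (dx, dy) pixels, rounded with Python's round();
    pixels uncovered by the shift become 0."""
    h, w = len(mask), len(mask[0])
    ox, oy = round(dx), round(dy)
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - oy, x - ox  # source pixel that lands on (y, x)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = mask[sy][sx]
    return out
```

For the j-th translation, the shift is `j` times the unit step, for j up to `max_translations(gap)`.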

After the j-th translation of the wire rope segmentation template, the coincidence response value between the translated template image and the edge extraction result of the real-time image is calculated from the matching values between the translated segmentation template and the edge image of the real-time image at every pixel coordinate after the j-th displacement.
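Because the coincidence formulas were lost in extraction, the following sketch assumes one plausible rule: the matching value at a pixel is 1 when both the translated template and the edge image are 1 there (0 otherwise), and the coincidence response is the sum of these matching values over all pixels. The function names are hypothetical.

```python
from typing import List

Mask = List[List[int]]


def matching_values(shifted_template: Mask, edges: Mask) -> Mask:
    """Assumed per-pixel matching value: 1 where template and edge image are both 1."""
    return [
        [1 if t == 1 and e == 1 else 0 for t, e in zip(t_row, e_row)]
        for t_row, e_row in zip(shifted_template, edges)
    ]


def coincidence_response(shifted_template: Mask, edges: Mask) -> int:
    """Assumed coincidence response: sum of matching values over all pixel coordinates."""
    return sum(sum(row) for row in matching_values(shifted_template, edges))
```

A raw overlap count favors positions where many template edge pixels land on detected edges; normalizing by the number of template pixels would be an equally reasonable variant.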

Given the steel wire rope segmentation template, the rope strand segmentation and correction method (RSCM) based on the template is implemented as shown in Algorithm 1.

Algorithm 1. Rope-strand segmentation and correction method based on steel wire rope segmentation template.

Inputs: wire rope image I, steel wire rope segmentation template T

Output: I with strand edge markers

1: detect the edges of I with the FoGDbED detector and get the edge image E

2: j ← 1

3: detect edge image with FoGDbED detector

4: shift T by distance 1 along the translation vector to get T_1

5: get the matching values with Formula (7) and the coincidence response value R_1 with Formula (8)

6: R_max ← R_1, j* ← 1

7: while j < N (N: number of translations)

8: j++

9: shift T by distance j along the translation vector to get T_j

10: get the matching values with Formula (7) and R_j with Formula (8)

11: if R_j > R_max

12: R_max ← R_j, j* ← j

13: end if

14: end while

15: mark the strand edges in I using T_j*

16: return I
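The search loop of Algorithm 1 can be sketched as follows. The sketch assumes the FoGDbED edge image has already been computed (FoGDbED itself is not reproduced here), an assumed overlap-count response (the number of pixels that are 1 in both the shifted template and the edge image), and a hypothetical boundary form x = k*y + b. The names `best_match`, `response`, and `n_steps` (playing the role of the number of translations) are illustrative.

```python
import math
from typing import List, Tuple

Mask = List[List[int]]


def shift_mask(mask: Mask, dx: float, dy: float) -> Mask:
    """Translate a binary mask by (dx, dy) pixels (rounded); uncovered pixels become 0."""
    h, w = len(mask), len(mask[0])
    ox, oy = round(dx), round(dy)
    return [
        [mask[y - oy][x - ox] if 0 <= y - oy < h and 0 <= x - ox < w else 0 for x in range(w)]
        for y in range(h)
    ]


def response(template: Mask, edges: Mask) -> int:
    """Assumed coincidence response: count of pixels that are 1 in both masks."""
    return sum(
        1
        for t_row, e_row in zip(template, edges)
        for t, e in zip(t_row, e_row)
        if t == 1 and e == 1
    )


def best_match(template: Mask, edges: Mask, k: float, n_steps: int) -> Tuple[int, int, Mask]:
    """Shift the template j = 1..n_steps unit steps along the rope and keep the best j.

    Returns (best_j, best_response, best_shifted_template).
    """
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    norm = math.hypot(k, 1.0)
    ux, uy = k / norm, 1.0 / norm            # unit step along x = k*y + b (assumed)
    best_tpl = shift_mask(template, ux, uy)  # steps 4-6: first shift, distance 1
    best_j, best_r = 1, response(best_tpl, edges)
    for j in range(2, n_steps + 1):          # steps 7-14: remaining shifts
        shifted = shift_mask(template, j * ux, j * uy)
        r = response(shifted, edges)
        if r > best_r:                       # steps 11-12: keep the maximum response
            best_j, best_r, best_tpl = j, r, shifted
    return best_j, best_r, best_tpl
```

The returned shifted template plays the role of the best-matching template used in step 15 to mark the strand edges.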

The effect of strand segmentation and correction is shown in Figure 8. Figure 8a shows the steel wire rope strand segmentation template; for easier viewing, pixels with value 1 in the template are displayed with gray value 255. Figure 8b shows the edge information extracted by FoGDbED from the surface image of a steel wire rope with defects. Figure 8c shows the strand segmentation result of the proposed method; to better show the segmentation effect, the best-matching template area is marked in red. The results show that the proposed method segments the steel wire rope strands simply and directly.

2.3. Creation of Spatiotemporal Gray Sample Set

Owing to the structural particularity of steel wire ropes, images of different positions on a normal steel wire rope look similar. This similarity is mainly reflected in images of the upper surface of the rope collected in real time at different positions under outdoor natural light: if the same position in the image falls on a rope strand edge, a rope strand surface, or an oil sludge surface, its gray values are similar. Differences occur mainly where the gray level changes abruptly, and such abrupt-change areas usually correspond to fracture defects.

Classical background modeling methods such as VIBE, KNN, and MOG2 produce large numbers of spurious foreground regions when processing images with rapidly changing texture, so they cannot be used directly for wire rope surface defect segmentation. In this paper, a new spatiotemporal gray sample set is constructed to solve the problem of defect detection under rapid texture change. Inspired by dynamic background modeling and combined with the gray-level variation characteristics of wire rope surface images, this paper constructs, for the first time, a gray sample set in the spatiotemporal domain for the case where the camera moves relative to the scene. The wire rope defect detection process is divided into dynamic wire rope background model construction and foreground defect detection. The dynamic wire rope background model is realized by building the spatiotemporal gray sample set, which is constructed as follows.

The sequence of gray values of the same pixel over time is regarded as a process of gray-level change. For a given point, the set of its historical gray values up to the current time consists of the gray values of the corresponding images at that point from moment 1 to the current moment. In practical application, the pixels in this set may correspond to different positions on the rope at different times and may belong to either a rope strand edge or a rope strand surface. The average gray level of strand-edge pixels is lower than that of strand-surface pixels; if the two are not distinguished, the gray-level estimate becomes unstable, which reduces the accuracy of defective pixel segmentation. Therefore, the temporal gray sample set of the wire rope image sequence is composed as shown in Figure 9. The gray values of the pixel at the same position are taken continuously from a fixed number of consecutive frames. When the point lies on a rope strand, the values are recorded in the strand subset; when it lies in the edge area of a rope strand, they are recorded in the edge subset. The temporal gray sample set consists of these two subsets.
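A minimal sketch of the temporal sample set follows. It assumes that the strand mask of each frame (0 = strand, 1 = strand edge, as in the segmentation template) is already available and that each subset is a first-in, first-out queue capped at a hypothetical length `max_len` standing in for the number of frames used. Capping the two subsets separately is a design choice of this sketch, not something the text specifies; the class name is illustrative.

```python
from collections import deque
from typing import Deque, List, Tuple

STRAND, EDGE = 0, 1  # mask labels, as in the segmentation template


class TemporalSampleSet:
    """Per-pixel FIFO history of gray values, split into strand and edge subsets."""

    def __init__(self, height: int, width: int, max_len: int) -> None:
        # samples[y][x] = (strand_queue, edge_queue); each keeps at most max_len values
        self.samples: List[List[Tuple[Deque[int], Deque[int]]]] = [
            [(deque(maxlen=max_len), deque(maxlen=max_len)) for _ in range(width)]
            for _ in range(height)
        ]

    def add_frame(self, frame: List[List[int]], mask: List[List[int]]) -> None:
        """Append each pixel's gray value to the subset chosen by its mask label in this frame."""
        for y, (f_row, m_row) in enumerate(zip(frame, mask)):
            for x, (gray, label) in enumerate(zip(f_row, m_row)):
                self.samples[y][x][label].append(gray)

    def subset(self, y: int, x: int, label: int) -> List[int]:
        """Return the temporal samples of one subset, oldest first."""
        return list(self.samples[y][x][label])
```

Because each value is routed by the label the pixel has in that particular frame, darker edge samples never dilute the strand statistics, which is the instability the text warns about.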

In the spatial domain of the current wire rope image, given the structural characteristics of the sealed wire rope, if a pixel and its adjacent pixels in the wire rope surface image belong to the same rope strand or to the same rope strand edge, their gray values are similar. The spatial gray sample set of a pixel is shown in Figure 10. A 5 × 5 area centered on the point is taken, together with the same area in the mask image obtained by rope strand segmentation. If the pixel is located in the rope strand area (i.e., its value in the mask image is 0), the gray values of all pixels with mask value 0 in the 5 × 5 area form the strand subset; if the pixel is located in the rope strand edge area (i.e., its value in the mask image is 1), the gray values of all pixels with mask value 1 in the 5 × 5 area form the edge subset. The spatial gray sample set consists of these two subsets.
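The 5 × 5 spatial sample set can be sketched as below. The `radius=2` default gives the 5 × 5 window; clipping the window at the image border is an assumption, since the text does not say how border pixels are handled, and the function name is hypothetical.

```python
from typing import List, Tuple


def spatial_subset(
    frame: List[List[int]], mask: List[List[int]], y: int, x: int, radius: int = 2
) -> Tuple[int, List[int]]:
    """Return (label, values): gray values in the (2r+1) x (2r+1) window centered at (y, x)
    whose mask label equals the center pixel's label (0 = strand, 1 = edge).

    The window is clipped at the image border; values are listed in row-major order.
    """
    label = mask[y][x]
    h, w = len(frame), len(frame[0])
    values = []
    for yy in range(max(0, y - radius), min(h, y + radius + 1)):
        for xx in range(max(0, x - radius), min(w, x + radius + 1)):
            if mask[yy][xx] == label:
                values.append(frame[yy][xx])
    return label, values
```

Selecting neighbors by mask label keeps strand and edge gray levels in separate subsets, mirroring the temporal split.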

The spatiotemporal gray sample set constructed in this paper can be expressed as two subsets: the spatiotemporal gray sample set of the point on a rope strand, and the spatiotemporal gray sample set of the point on a rope strand edge, each combining the corresponding temporal and spatial samples. Because each subset is drawn only from pixels of the same class and is refreshed from recent frames, the spatiotemporal gray sample set adapts well to gradual illumination changes, uneven illumination, reflective shadows, oil sludge, and similar conditions.

2.4. Wire Rope Defect Detection

In practical application, the starting point of wire rope detection can be selected manually to ensure that no fracture is present while the spatiotemporal sample set is initialized. During initialization, a set number of frames is used for spatiotemporal gray modeling; when this number is chosen appropriately, a stable dynamic spatiotemporal gray sample set can be obtained.

Gray values in the spatiotemporal gray sample set are replaced according to the gradient between the current pixel value and the mean pixel value of the sample set. If the gradient is less than the threshold (an empirical threshold), the current pixel is added to the sample set; otherwise it is not, because a large gradient indicates that the pixel may be defective and should not be absorbed into the background. Since the sample set is updated on a first-in, first-out basis, this rule does not introduce defective pixels under natural conditions such as gradual illumination changes between frames, uneven illumination within an image, reflective shadows, and oil sludge, so the method adapts well to complex environments.
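The update rule can be sketched for one subset of one pixel as follows. It assumes that the "gradient" is the absolute difference between the new gray value and the mean of the subset, that the subset is a `collections.deque` whose `maxlen` enforces first-in, first-out replacement, and that `grad_thresh` is the empirical threshold; the function name is hypothetical.

```python
from collections import deque
from typing import Deque


def maybe_update(samples: Deque[float], value: float, grad_thresh: float) -> bool:
    """Add value to a FIFO sample subset unless it jumps too far from the subset mean.

    Returns True if the value was added. When the deque is full, append()
    discards the oldest sample (first in, first out).
    """
    if samples:
        mean = sum(samples) / len(samples)
        if abs(value - mean) >= grad_thresh:
            return False  # large jump: possible defect, keep it out of the background
    samples.append(value)
    return True
```

A slow drift in brightness passes the test frame by frame and gradually shifts the subset, while an abrupt dark fracture pixel is rejected and cannot contaminate the background.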

During defect detection, each pixel of the current image is traversed and compared with its spatiotemporal gray sample set. For a given pixel, the distance between its gray value and each sample in the corresponding sample set is calculated. The strand subset or the strand edge subset is selected according to the pixel's location, each distance is compared with the corresponding threshold (the rope strand pixel threshold or the rope strand edge pixel threshold), and the number of samples whose distance is below the threshold is counted.

The proportion of similar samples is then the ratio of the number of samples satisfying the distance constraint (with the strand or strand edge threshold) to the total number of samples in the sample set. When this proportion reaches an empirical threshold, the current pixel is a background pixel; when it falls below the threshold, the current pixel may be a defect pixel. If, in addition, the gray value of the current pixel exceeds the average gray value of its sample set by more than an empirical threshold, the pixel is classified as an anomalous pixel that does not belong to the defect area: defective pixels are darker than their surrounding pixels, so such a pixel more likely belongs to a strong reflection region.
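The per-pixel decision can be sketched as follows. The comparison operators (`<` for the distance test, `>=` for the proportion test, `>` for the brightness test) and all threshold names are assumptions, because the corresponding formulas were lost in extraction; labels 0 and 1 select the strand and edge subsets as in the segmentation mask.

```python
from typing import Sequence


def classify_pixel(
    value: float,
    label: int,
    strand_samples: Sequence[float],
    edge_samples: Sequence[float],
    strand_thresh: float,
    edge_thresh: float,
    ratio_thresh: float,
    bright_thresh: float,
) -> str:
    """Return "background", "defect", or "bright_anomaly" for one pixel.

    label 0 selects the strand subset and threshold, label 1 the edge subset.
    """
    samples = strand_samples if label == 0 else edge_samples
    dist_thresh = strand_thresh if label == 0 else edge_thresh
    if not samples:
        raise ValueError("sample subset is empty")
    similar = sum(1 for s in samples if abs(value - s) < dist_thresh)
    ratio = similar / len(samples)          # proportion of similar samples
    if ratio >= ratio_thresh:
        return "background"
    mean = sum(samples) / len(samples)
    if value - mean > bright_thresh:        # much brighter than usual: likely reflection
        return "bright_anomaly"
    return "defect"                         # candidate fracture pixel
```

Checking brightness only after the similarity test means that bright reflections are filtered out of the candidate defects without affecting ordinary background pixels.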

5. Conclusions

In this study, we proposed a wire rope surface defect detection method for complex environments based on a segmentation template and a spatiotemporal gray sample set. Unlike previous work, the proposed method adapts well to surface defect detection on sealed steel wire ropes, and its principles can be extended to defect detection on other types of sealed steel wire rope. In addition, because of its good adaptability, the method does not require a large number of defect samples collected under complex conditions such as lubricating oil, adhering dust, natural light, and metal or oil reflection. It can be used directly for wire rope strand segmentation and fracture detection. We constructed a steel wire rope segmentation template, with which the positions of the rope strands in a real-time image can be marked and segmented by finding the best coincidence response value after shifting the template along the rope direction. For the case of relative motion between the camera and the scene, a gray sample set in the spatiotemporal domain was constructed for the first time to update the dynamic background of the wire rope surface in real time, enabling robust detection of wire rope surface defects in complex environments with uneven light, strong light, weak light, and reflection. We built a real-world experimental environment for wire rope defect detection, collected real data to verify the effectiveness of the proposed method, and compared the results with those of the classical background modeling methods VIBE, KNN, and MOG2. The proposed method performed better in sealed wire rope defect detection, being more accurate and more adaptable to complex environments.