Sensing and Rendering Method of 2-Dimensional Haptic Texture

Abstract

1. Introduction

2. Presentation of Tactile Texture Information Using Independent Vibration

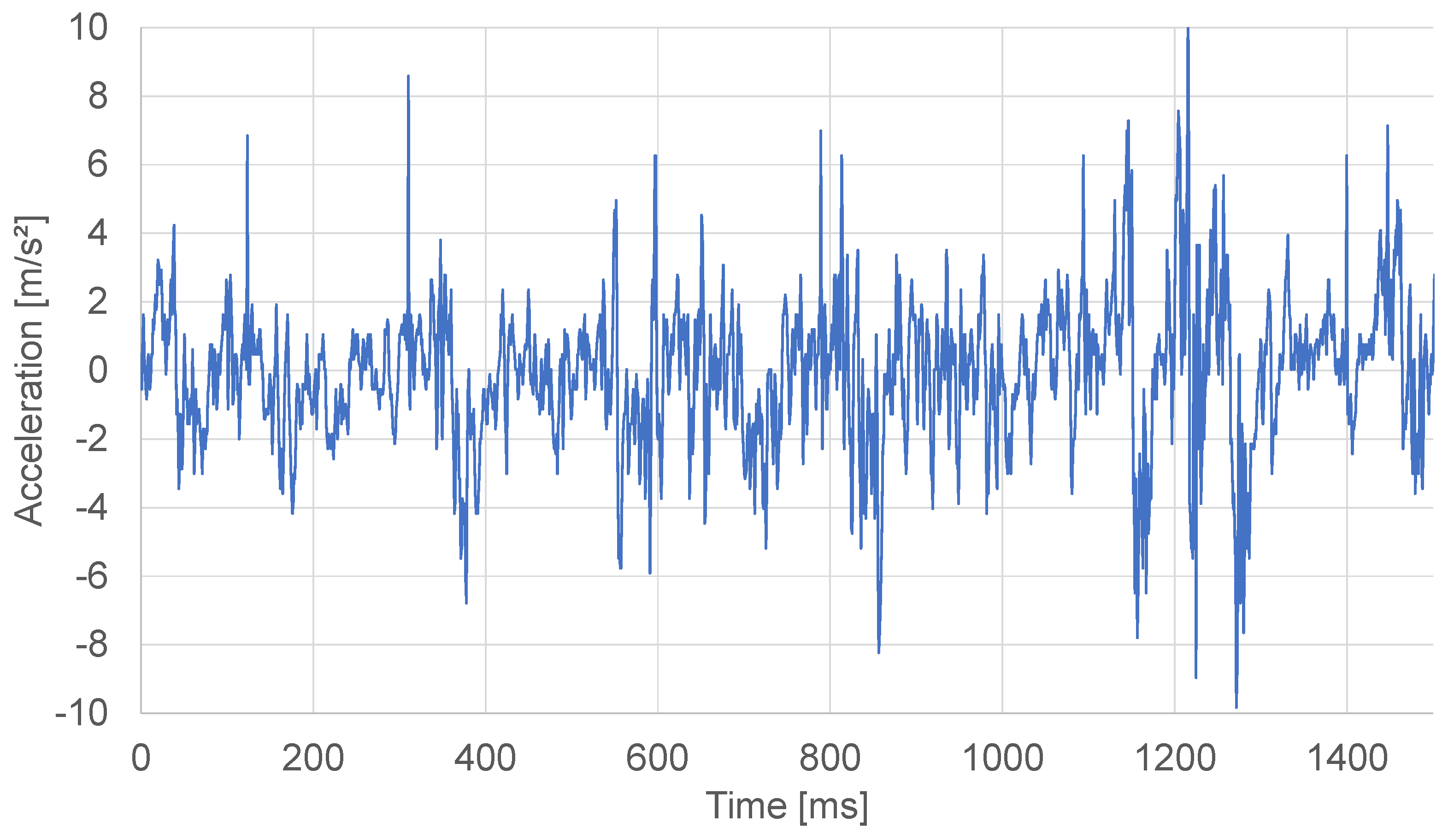

2.1. Recording State

2.2. Playing State and Its Compensation Method

3. Evaluation of the Proposed Method

3.1. Comparison of Reproducibility between Presentation Stimulus Methods

3.2. Discussion

4. Augmenting Information of Image Features

4.1. Recording of Image Features

- Acquire features from texture images using AKAZE

- Extract the size information representing the diameter of the important region around the feature

- Obtain one-dimensional information by averaging the size information along each of the X-axis and Y-axis directions, and then normalize it

- Augment the vibration information with the size information corresponding to the display position, and present the result
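The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: keypoints are passed in as plain `(x, y, size)` tuples (with OpenCV they could be taken from the `.pt` and `.size` attributes of keypoints returned by `cv2.AKAZE_create().detect(image, None)`), and the function names `size_profiles`, `normalize`, and `augment`, the multiplicative modulation, and the `gain` parameter are all hypothetical choices made for this example.

```python
def normalize(values):
    """Scale a list so that its largest value becomes 1 (no-op if all zero)."""
    peak = max(values, default=0.0)
    return [v / peak for v in values] if peak > 0 else list(values)


def size_profiles(keypoints, width, height):
    """Average keypoint sizes along each image axis and normalize.

    keypoints: iterable of (x, y, size) tuples (assumed input format).
    Returns (profile_x, profile_y), two 1-D lists with values in [0, 1].
    """
    col_sum = [0.0] * width    # total size of features in each column
    row_sum = [0.0] * height   # total size of features in each row
    for x, y, size in keypoints:
        xi, yi = int(x), int(y)
        if 0 <= xi < width and 0 <= yi < height:
            col_sum[xi] += size
            row_sum[yi] += size
    # Averaging a sparse size map over the other axis divides by its length.
    profile_x = [s / height for s in col_sum]
    profile_y = [s / width for s in row_sum]
    return normalize(profile_x), normalize(profile_y)


def augment(vibration_sample, profile, position, gain=0.5):
    """Modulate one vibration sample by the profile value at the touch position.

    The form sample * (1 + gain * profile) is an illustrative assumption.
    """
    idx = min(max(int(position), 0), len(profile) - 1)
    return vibration_sample * (1.0 + gain * profile[idx])


if __name__ == "__main__":
    # Three hypothetical features on a 4 x 3 pixel image.
    kps = [(1, 0, 4.0), (1, 2, 4.0), (3, 1, 2.0)]
    px, py = size_profiles(kps, width=4, height=3)
    print(px, py)
    print(augment(2.0, px, position=1))
```

Keeping the profiles one-dimensional means that, at playback time, only the current finger coordinate along each axis is needed to look up the modulation value.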

4.2. Presentation of Vibration Information Using Image Feature

5. Experiment

5.1. Experiment Procedure

1. Ask the subject to touch the sample texture placed on the weighing scale, and train them for 5 min to keep the pressing force at about 50 gf.

2. Have them touch the real texture for 10 s to learn the tactile sensation.

3. Ask them to touch the texture presented on the display for 10 s and rate, on a five-point scale, how faithfully it reproduces the real texture.

4. Change the presentation method and have it evaluated in the same way as in steps 2 and 3.

5. Repeat steps 2 to 4 for all ten types of textures, then end the experiment.

5.2. Result

5.3. Discussion

5.4. Evaluation of Image Feature Superposition Method

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Saga, S.; Kurogi, J. Sensing and Rendering Method of 2-Dimensional Haptic Texture. Sensors 2021, 21, 5523. https://doi.org/10.3390/s21165523