Using Privacy Respecting Sound Analysis to Improve Bluetooth Based Proximity Detection for COVID-19 Exposure Tracing and Social Distancing

Abstract

1. Introduction

- In terms of infection risk, proximity becomes irrelevant if there is a physical barrier in between. Such barriers are often used in supermarkets, restaurants and offices. People may also often sit next to each other distance-wise but be separated by a thin wall. Often such barriers are more or less transparent to 2.4 GHz signals so that BLE RSSI-based contact tracing systems mistakenly assume an infection risk.

- While under ideal conditions (i.e., in empty space) distance can be estimated exactly using signal attenuation, in the real world, RF signal strength is determined by a combination of factors such as absorption, reflection and diffraction. In particular, the human body is highly absorbent at 2.4 GHz, which means that for the same distance between two people facing each other, the RSSI value will be very different depending on whether phones are in front or back pockets. In general, the phone orientation, placement and other factors make RSSI alone deficient for estimating the distance between people across scenarios [2]. In cases where people are close in metal enclosures such as public transportation, research has also shown that there is little or weak correlation between phone distance and measured RSSI [3] and thus close contact cannot be correctly detected by the current Google/Apple Bluetooth contact tracing systems.

1.1. Paper Contributions

- Our proposed concept uses privacy-preserving ambient sound intensity fingerprints for social distancing monitoring. The idea is to leverage the fact that ambient sound received by a mobile device is a superposition of sounds from sources at many different locations in the environment. Such a superposition is determined by the relative position of those sources with respect to the receiver. Thus two receivers at the same location are likely to see a very similar superposition and receivers further away will see more different superpositions. To preserve privacy, we work with temporal patterns of intensity profiles at a single fixed frequency which does not allow the reconstruction of sensitive information such as spoken words or speaker identity.

- We have designed and implemented a signal processing chain for translating differences in the fingerprint received into proximity indication.

- We demonstrate how this proximity information can be used to classify distance between users carrying a mobile device with respect to the 1.5 m social distancing threshold. This includes sound-only classification as well as fusion with a classical BLE RSSI approach.

- We validate our method on a real-life data set from different locations in a city (supermarket, hardware store, train station, office building, mall, etc.), showing an accuracy of up to 80% for sound and up to 86% combined (Bluetooth alone 77%).

- We present different evaluation modes to demonstrate the strengths and limitations of each of the modalities and to help users understand where and how sound can help. This includes showing in an additional small lab experiment that sound can detect physical barriers that prevent infections even when two users are physically close (e.g., glass or office walls).

1.2. Related Work

- (A) Social Distancing

- (B) Bluetooth-Based Position Estimation

- (C) Sound Based Proximity Sensing

2. Approach

2.1. General Idea

2.2. Sound Processing

- From the 200 Hz amplitude feature vector recorded on the device, calculate the root mean square (RMS) on non-overlapping (jumping) 1 s windows. Note: stored as a float value, this needs 4 bytes per second, for a total of 240 bytes per minute. This matters because the following steps assume that people share the values computed in this step with fellow tracing app users.

- Assuming we call our own signal $S$ and the signals of the $n$ other people who are at least in Bluetooth visible range $O_1$ to $O_n$: for every pair $(S, O_i)$, divide the signals into windows centered on the current data point and extending 5 s into the past and future. Note: this leaves the temporal granularity unchanged at 1 s. For each of those windows, create a histogram distribution of the sound amplitudes (empirically, 20 bins has proven to be both robust and detailed enough). For each pair of histograms between $S$ and each other device, calculate the Kullback–Leibler divergence as a measure of how dissimilar the two distributions are. The Kullback–Leibler divergence of two discrete distributions $P$ and $Q$ is defined as $$D_{KL}(P \parallel Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}.$$ Since this is not symmetric in $P$ and $Q$, we calculate the average of both orders of inputs, i.e., $$D(P, Q) = \frac{1}{2}\left(D_{KL}(P \parallel Q) + D_{KL}(Q \parallel P)\right).$$

- Finally, calculate a moving average of 1 min.

- Devices may not record any sound whatsoever. This may happen due to technical reasons, incoming calls, etc.

- Devices may not record a significant level of ambient sound. One may argue that periods of silence interrupted by interesting intervals of sound are actually the norm in many environments.
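The processing steps listed in this section can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the implementation used in the study: the function names, the `rate` parameter (amplitude values per second), the shared bin range for both devices, the small smoothing constant `eps` that avoids empty histogram bins, and the trailing form of the moving average are all choices made for the example.

```python
import math

def rms_per_second(samples, rate):
    """RMS over non-overlapping ("jumping") 1 s windows; rate = values per second (assumed)."""
    out = []
    for start in range(0, len(samples) - rate + 1, rate):
        window = samples[start:start + rate]
        out.append(math.sqrt(sum(x * x for x in window) / rate))
    return out

def histogram(values, bins, lo, hi, eps=1e-6):
    """Normalised histogram on [lo, hi]; eps (an assumption) keeps every bin non-zero."""
    counts = [eps] * bins
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)
        counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts]

def kl(p, q):
    """Kullback-Leibler divergence D_KL(P || Q) of two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

def symmetric_kl(p, q):
    """Average of both input orders, as described in the text."""
    return 0.5 * (kl(p, q) + kl(q, p))

def sound_distance(rms_a, rms_b, half_width=5, bins=20):
    """Per-second symmetric KL between histograms of windows reaching 5 s back and forward."""
    n = min(len(rms_a), len(rms_b))
    out = []
    for t in range(half_width, n - half_width):
        wa = rms_a[t - half_width:t + half_width + 1]
        wb = rms_b[t - half_width:t + half_width + 1]
        # Assumption: both histograms share one bin range taken from the pair.
        lo, hi = min(wa + wb), max(wa + wb)
        out.append(symmetric_kl(histogram(wa, bins, lo, hi),
                                histogram(wb, bins, lo, hi)))
    return out

def moving_average(values, width=60):
    """Trailing moving average over `width` values (60 values = 1 min at 1 s steps)."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - width + 1):i + 1]
        out.append(sum(chunk) / len(chunk))
    return out
```

Two devices that hear the same intensity pattern yield a distance close to zero, while devices whose amplitude distributions differ yield larger values. Windows in which a device recorded no sound, or no significant ambient sound, would have to be filtered out before this computation.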

2.3. Bluetooth Processing

- Transmission power compensation. A smartphone’s Bluetooth transmission power setting plays a considerable role in RSSI-based distance estimation [41]. Al Qathrady and Helmy [42] showed that taking the transmission power into account when estimating the distance reduced the mean errors by over 35% across various distance estimation models and increased the proximity classification accuracy by over 70%. RSSI data essentially describes the relationship between the transmitted power and the received power of an RF signal. This relationship is stated in Equation (1) [43]: $$P_R = P_T \left(\frac{1}{d}\right)^{n} \quad (1)$$ where $P_R$ is the receiving power, $P_T$ is the transmission power, $d$ is the distance between them and $n$ is the propagation exponent of the environment. Taking ten times the logarithm of both sides, we get the expression: $$10 \log P_R = 10 \log P_T - 10\, n \log d \quad (2)$$ Equation (2) shows a linear relationship between the transmission power and the received power for a certain distance. In our app, the initial transmission power was included in each received data package and was recorded together with the signal strength data. Thus the first step we applied to the recorded Bluetooth RSSI signal was the compensation. By simply subtracting the transmission power of each phone from its RSSI, we made the readings consistent across smartphones, as if every phone had the same transmission power value of zero.

- Moving-average smoothing. Because the RSSI data are unstable owing to the antenna’s surroundings and the complexity of the environment, we then applied moving-average smoothing to the compensated RSSI data. The smoothing used a ten second sliding window that moves one step forward with every new RSSI value, so that the delay caused by the smoothing can be neglected. Figure 3 depicts the RSSI values recorded by P4’s iPhone after the transmission power compensation and smoothing steps, which show a more stable RSSI trace for the tested phones.
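The two Bluetooth preprocessing steps can be illustrated with a short Python sketch. It is a hedged example rather than the app's code: the function names, the `(timestamp, value)` sample layout and the assumption that both RSSI and transmission power are expressed on the same logarithmic scale (e.g., dBm) are introduced here for illustration only.

```python
from collections import deque

def compensate(rssi, tx_power):
    """Subtract the advertised transmission power from the RSSI so that all
    phones look as if they had transmitted with a power value of zero.
    Assumption: both values are on the same logarithmic scale (e.g., dBm)."""
    return rssi - tx_power

def smooth(samples, window_s=10.0):
    """Trailing moving average over (timestamp_s, value) pairs.

    One smoothed value is emitted per incoming sample, so every new RSSI
    reading advances the ten second window by one step."""
    buf = deque()
    total = 0.0
    out = []
    for t, v in samples:
        buf.append((t, v))
        total += v
        # Drop readings that have fallen out of the ten second window.
        while buf[0][0] <= t - window_s:
            _, old = buf.popleft()
            total -= old
        out.append((t, total / len(buf)))
    return out

# Hypothetical packets: (timestamp in s, RSSI, advertised transmission power).
packets = [(0.0, -60.0, -4.0), (1.0, -70.0, 4.0), (12.0, -50.0, 0.0)]
compensated = [(t, compensate(rssi, tx)) for t, rssi, tx in packets]
smoothed = smooth(compensated)
```

Because the average is computed over past readings only, a smoothed value is available as soon as each packet arrives, which matches the statement that the delay introduced by smoothing is negligible.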

2.4. Signal Fusion and Mapping onto Proximity Classes

- Our baseline, i.e., classification using only the Bluetooth data.

- Classification using only the sound data. This can only be done when both phones have good sound, so this restricts the number of windows that can be classified. When comparing this approach with the Bluetooth one, we will compare predictions on only those windows.

- The combination of both, which is our proposed approach. We train two classifiers: one that uses only Bluetooth features and is applied whenever a window has no sound feature, and one that is trained on both sound and Bluetooth features and predicts labels for windows that also have sound distances.
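The fallback logic of the combined approach can be sketched as follows. This is a minimal illustration, assuming that each classifier is available as a callable mapping a feature list to a class label; the two threshold rules at the bottom are hypothetical stand-ins with arbitrary thresholds, not the trained classifiers of the study.

```python
from typing import Callable, Optional, Sequence

Classifier = Callable[[Sequence[float]], int]

def make_fused_predictor(bt_only: Classifier, bt_and_sound: Classifier):
    """Return a predictor that picks the classifier according to sound availability."""
    def predict(bt_features: Sequence[float], sound_distance: Optional[float]) -> int:
        if sound_distance is None:
            # No valid sound in this window: fall back to the Bluetooth-only model.
            return bt_only(bt_features)
        # Valid sound: use the model trained on Bluetooth plus sound features.
        return bt_and_sound(list(bt_features) + [sound_distance])
    return predict

# Hypothetical stand-ins (label 1 = below 1.5 m, label 0 = above).
def demo_bt_only(features):
    return 1 if features[0] > -65.0 else 0

def demo_bt_and_sound(features):
    rssi, sound = features[0], features[1]
    return 1 if rssi > -65.0 and sound < 0.5 else 0

predict = make_fused_predictor(demo_bt_only, demo_bt_and_sound)
```

With these stand-ins, a strong RSSI combined with a large sound distance is labelled as "above 1.5 m", which mirrors the physical barrier case in which Bluetooth alone would report a close contact.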

3. Experiments

3.1. Controlled Lab Scenario

3.2. Real-Life “City Scale” Data Recording

4. Results

4.1. Effect of Physical Barrier in Lab Setup

4.2. Social Distance Detection in Real Life Environments

4.2.1. Evaluation Methodology

- Sensing modality.

- We considered the classification using Bluetooth alone, sound alone and the fusion of both modalities.

- Window vs. Event-based evaluation.

- The most basic test for classification performance is to look at each individual window and compare the results achieved with the three possible sensing modes above. When doing this we must, however, consider that sound is not present in all windows (as at times the environment may be silent). We thus do the comparison only on windows that have valid sound. For the purpose of social distancing monitoring and contact tracing, a 5 s temporal resolution is clearly not needed. It thus makes sense to consider aggregated predictions in larger windows. We found 4 min (=48 windows) to be a good trade-off between temporal resolution and accuracy. Again, the fact that not all windows had sound needed to be taken into account (see below). Finally, since 15 min periods are typically used in most contact tracing approaches, we will also investigate the performance of the system with respect to “events” of two people being close to each other for a period of at least 15 min. In summary, we will present the following evaluation modes:

- Evaluating both modalities and their fusion, using only windows where there is a valid sound signal. This is the “baseline” for a comparison between all modalities on specific data points (Section 4.2.2).

- Aggregation over groups of 48 of the 5 s windows that have valid sound. Windows that do not have valid sound are ignored. This is the most effective way to use sound. However, it overestimates the usefulness of sound information by ignoring the fact that, as opposed to Bluetooth, sound does not provide a prediction for all windows (Section 4.2.3).

- Aggregation over 4 min intervals, always taking into account all consecutive windows, no matter whether they have valid sound data or not. Thus we include sound information wherever it is available and make a pure Bluetooth-based decision wherever there is no sound. This accounts for the fact that, no matter how useful the sound information is, it only helps with a certain fraction of windows (Section 4.2.4).

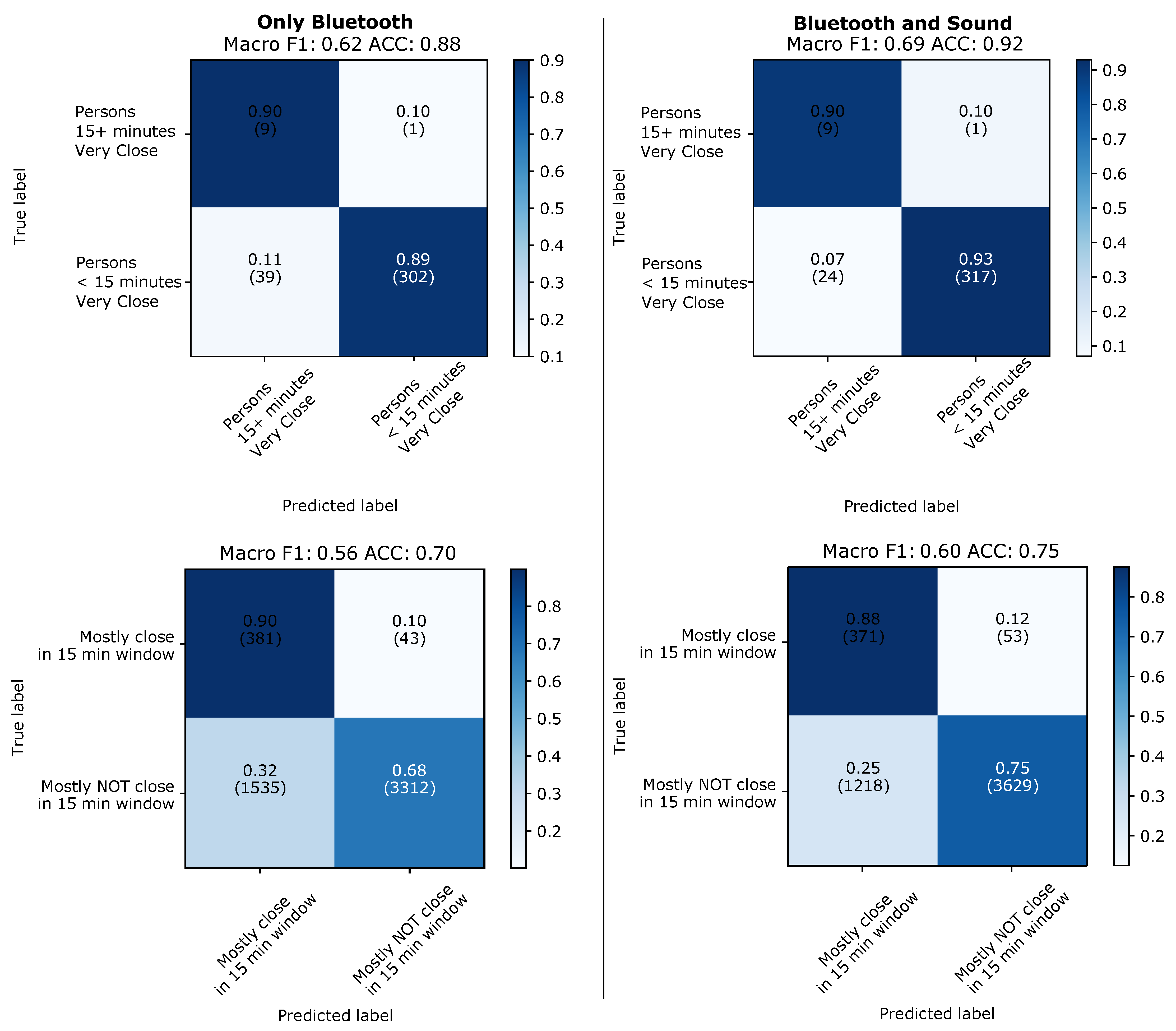

- Performance on aggregated decisions in 15 min windows to account for typical time scales in most existing contact tracing apps. This includes plain aggregation on 15 min and an analysis of all pairs of subjects to determine if those two subjects spent at least one 15 min time interval together below the 1.5 m social distancing limit (Section 4.2.5).

- Number of classes.

- The key performance metric is the ability to distinguish between being below and above the social distancing boundary of 1.5 m. This is our main evaluation metric. However, in order to understand the sensitivity of the sound information better we will also present results for a three class problem as described in Section 2.4.

- Choice of training/testing days.

- As shown in Figure 8, of the four days on which we recorded data, two each were spent in different types of environments (first: inside shops, offices, street; second: train station, mall, university canteen). Because signal attenuation is highly environment dependent, especially for Bluetooth, we use the different types of data to investigate how sensitive the performance of each modality is to the similarity between the training and the testing environments.
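The difference between the two 4 min aggregation modes described above can be made concrete with a small Python sketch. The text does not state how the per-window predictions are combined, so the majority vote used here is an assumption, as are the function names and the per-window dictionary layout (`has_sound`, `bt`, `fused`).

```python
from collections import Counter

def majority(labels):
    """Most frequent label in a group (assumed aggregation rule)."""
    return Counter(labels).most_common(1)[0][0]

def vote_in_groups(preds, group):
    """Vote over consecutive, complete groups of `group` predictions."""
    return [majority(preds[i:i + group])
            for i in range(0, len(preds) - group + 1, group)]

def aggregate_valid_sound(windows, group=48):
    """Mode of Section 4.2.3: ignore windows without valid sound, then
    aggregate groups of 48 remaining 5 s windows."""
    preds = [w["fused"] for w in windows if w["has_sound"]]
    return vote_in_groups(preds, group)

def aggregate_all(windows, group=48):
    """Mode of Section 4.2.4: aggregate every 48 consecutive windows (4 min);
    windows without sound contribute their Bluetooth-only prediction."""
    preds = [w["fused"] if w["has_sound"] else w["bt"] for w in windows]
    return vote_in_groups(preds, group)

# Hypothetical recording: 4 min with sound followed by 4 min of silence.
windows = (
    [{"has_sound": True, "bt": 0, "fused": 1} for _ in range(48)]
    + [{"has_sound": False, "bt": 0, "fused": None} for _ in range(48)]
)
```

In this toy recording the first mode produces a single aggregated decision, whereas the second produces one decision per 4 min interval, including the silent interval that is decided by Bluetooth alone.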

4.2.2. Evaluation on 5 s Windows with Valid Sound Signal

4.2.3. Aggregation over 48 Windows of 5 s with Valid Sound Signal

4.2.4. Aggregation over 4 min on all Windows

4.2.5. “Event” Based Evaluation on 15 min Windows

5. Conclusions

- As shown in Section 4.1 and Figure 6, sound fingerprints can reliably detect physical barriers that negate proximity as a potential infection risk. By contrast, using Bluetooth alone, two people sitting on opposite sides of an office wall could be detected as a potentially infectious contact.

- As outlined in Section 4.2.3 and shown in Figure 13 and Figure 14, focusing on windows that have a good sound signal and aggregating over 48 such windows allows the system to recognize being below the social distancing range with an accuracy of over 80%, improving on the purely Bluetooth based recognition.

- Bluetooth and sound complement each other in many ways beyond the ability of sound to detect physical barriers. Most importantly, the majority of factors that lead to errors are very different due to the different physical nature of the signals. Bluetooth tends to have problems with environments with a lot of metallic structures and many people moving, which are both not an issue for sound. On the other hand, fairly empty environments (except for a few people who are potentially infectious contacts) tend to be quiet and thus challenging for sound analysis, but are very well suited for Bluetooth. Finally, while there are some conditions in which sound does not work at all, it is much less sensitive than Bluetooth to the specific conditions in which the system is trained being identical to the conditions where it is deployed.

- While we have conducted an initial small experiment to get an indication of the influence of different phone storage locations, further more detailed studies are clearly needed. This includes the problem of noises that are generated very close to the microphone, which can be expected to occur, e.g., when the phone’s microphone rubs against a tight pocket.

- Our sound analysis approach performs poorly for the middle class in the scenario with three classes (around 1.5 m as opposed to very close and very far). The physical principle that we use does not in any way imply such a limitation, although it is clear that the three class problem is harder. For practical reasons we have optimized our system to distinguish very far and very close. In future work, a more fine-grained resolution needs to be specifically addressed.

- The current method of all or nothing distinction between valid and invalid sound signal in each window clearly has limitations. So does the notion of a static weight for the sound signal. It is likely a key constraint on the performance of the current system. In the long term an adaptive dynamic approach is needed, which reflects the level of uncertainty in both the Bluetooth and sound signal and weights the inputs accordingly.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bluetooth Market Update 2020. Available online: https://www.bluetooth.com/wp-content/uploads/2020/03/2020_Market_Update-EN.pdf (accessed on 24 May 2021).

- Leith, D.J.; Farrell, S. Coronavirus contact tracing: Evaluating the potential of using bluetooth received signal strength for proximity detection. ACM SIGCOMM Comput. Commun. Rev. 2020, 50, 66–74.

- Leith, D.J.; Farrell, S. Measurement-based evaluation of Google/Apple Exposure Notification API for proximity detection in a light-rail tram. PLoS ONE 2020, 15, e0239943.

- Feng, S.; Shen, C.; Xia, N.; Song, W.; Fan, M.; Cowling, B.J. Rational use of face masks in the COVID-19 pandemic. Lancet Respir. Med. 2020, 8, 434–436.

- Lau, H.; Khosrawipour, V.; Kocbach, P.; Mikolajczyk, A.; Schubert, J.; Bania, J.; Khosrawipour, T. The positive impact of lockdown in Wuhan on containing the COVID-19 outbreak in China. J. Travel Med. 2020, 27, taaa037.

- Pearce, K. What is social distancing and how can it slow the spread of covid-19. March 2020, 13, 2020.

- Amft, O.; Lopera, L.; Lukowicz, P.; Bian, S.; Burggraf, P. Wearables to Fight COVID-19: From Symptom Tracking to Contact Tracing. IEEE Ann. Hist. Comput. 2020, 19, 53–60.

- Qi, J.; Liu, G.P. A robust high-accuracy ultrasound indoor positioning system based on a wireless sensor network. Sensors 2017, 17, 2554.

- Malik, N. Social Distancing Sensor: Devices that Use Ultrasound and Radio Frequency Communication to Facilitate Social Distancing. 2020. Available online: https://digitalcommons.dartmouth.edu/cgi/viewcontent.cgi?article=1012&context=engs86 (accessed on 2 May 2021).

- Akbari, M.; Carriere, J.; Meyer, T.; Sloboda, R.; Husain, S.; Usmani, N.; Tavakoli, M. Robotic Ultrasound Scanning With Real-Time Image-Based Force Adjustment: Quick Response for Enabling Physical Distancing during the COVID-19 Pandemic. Front. Robot. AI 2021, 8, 62.

- Liu, Y.; Yang, L.; Li, J. Robust UWB indoor position tracking using TDOA measurements. In Proceedings of the 2018 IEEE 4th International Conference on Computer and Communications (ICCC), Chengdu, China, 7–10 December 2018; IEEE: New York, NY, USA, 2018; pp. 736–743.

- Reddy, T.N.A.; Ch, N.D.; Padmaja, V. Social Distance Alert System to Control Virus Spread using UWB RTLS in Corporate Environments. In Proceedings of the 2020 IEEE International Conference on Advent Trends in Multidisciplinary Research and Innovation (ICATMRI), Buldhana, India, 30 December 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

- Istomin, T.; Leoni, E.; Molteni, D.; Murphy, A.L.; Picco, G.P. Dual-radio discovery and ranging for infrastructure-less social distancing with Janus. In Proceedings of the 18th Conference on Embedded Networked Sensor Systems, Yokohama, Japan, 16–19 November 2020; pp. 760–761.

- Bian, S.; Zhou, B.; Bello, H.; Lukowicz, P. A wearable magnetic field based proximity sensing system for monitoring COVID-19 social distancing. In Proceedings of the 2020 International Symposium on Wearable Computers, New York, NY, USA, 12–17 September 2020; pp. 22–26.

- Bian, S.; Zhou, B.; Lukowicz, P. Social distance monitor with a wearable magnetic field proximity sensor. Sensors 2020, 20, 5101.

- Bian, S.; Rey, V.F.; Younas, J.; Lukowicz, P. Wrist-Worn Capacitive Sensor for Activity and Physical Collaboration Recognition. In Proceedings of the 2019 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kyoto, Japan, 11–15 March 2019; IEEE: New York, NY, USA, 2019; pp. 261–266.

- Sathyamoorthy, A.J.; Patel, U.; Savle, Y.A.; Paul, M.; Manocha, D. COVID-robot: Monitoring social distancing constraints in crowded scenarios. arXiv 2020, arXiv:2008.06585.

- Chawathe, S.S. Beacon placement for indoor localization using bluetooth. In Proceedings of the 2008 11th International IEEE Conference on Intelligent Transportation Systems, Beijing, China, 12–15 October 2008; IEEE: New York, NY, USA, 2008; pp. 980–985.

- Fischer, G.; Dietrich, B.; Winkler, F. Bluetooth indoor localization system. In Proceedings of the 1st Workshop on Positioning, Navigation and Communication, Hannover, Germany, 26 March 2004; pp. 147–156.

- Ghose, A.; Bhaumik, C.; Chakravarty, T. Blueeye: A system for proximity detection using bluetooth on mobile phones. In Proceedings of the 2013 ACM Conference on Pervasive and Ubiquitous Computing Adjunct Publication, Zurich, Switzerland, 8–12 September 2013; pp. 1135–1142.

- Liu, S.; Jiang, Y.; Striegel, A. Face-to-face proximity estimation using bluetooth on smartphones. IEEE Trans. Mob. Comput. 2013, 13, 811–823.

- Chandel, V.; Banerjee, S.; Ghose, A. ProxiTrak: A robust solution to enforce real-time social distancing & contact tracing in enterprise scenario. In Proceedings of the 2020 ACM International Joint Conference on Pervasive and Ubiquitous Computing and the 2020 ACM International Symposium on Wearable Computers, Virtual, 12–17 September 2020; pp. 503–511.

- Arun, A.; Gupta, A.; Bhatka, S.; Komatineni, S.; Bharadia, D. BluBLE, space-time social distancing to monitor the spread of COVID-19. In Proceedings of the 18th Conference on Embedded Networked Sensor Systems, Yokohama, Japan, 16–19 November 2020; pp. 750–751.

- Vaughan, A. The Problems with Contact-Tracing Apps. 2020. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7194927/ (accessed on 2 May 2021).

- Cebrian, M. The past, present and future of digital contact tracing. Nat. Electron. 2021, 4, 2–4.

- Google/Apple. Privacy-Preserving Contact Tracing. Available online: https://covid19.apple.com/contacttracing (accessed on 2 May 2021).

- Leith, D.J.; Farrell, S. Measurement-based evaluation of google/apple exposure notification api for proximity detection in a commuter bus. arXiv 2020, arXiv:2006.08543.

- Shankar, S.; Kanaparti, R.; Chopra, A.; Sukumaran, R.; Patwa, P.; Kang, M.; Singh, A.; McPherson, K.P.; Raskar, R. Proximity Sensing: Modeling and Understanding Noisy RSSI-BLE Signals and Other Mobile Sensor Data for Digital Contact Tracing. arXiv 2020, arXiv:2009.04991.

- Hatke, G.F.; Montanari, M.; Appadwedula, S.; Wentz, M.; Meklenburg, J.; Ivers, L.; Watson, J.; Fiore, P. Using Bluetooth Low Energy (BLE) signal strength estimation to facilitate contact tracing for COVID-19. arXiv 2020, arXiv:2006.15711.

- Scott, J.; Dragovic, B. Audio Location: Accurate Low-Cost Location Sensing. In Pervasive Computing; Gellersen, H.W., Want, R., Schmidt, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1–18.

- Girod, L.; Lukac, M.; Trifa, V.; Estrin, D. The Design and Implementation of a Self-Calibrating Distributed Acoustic Sensing Platform. In Proceedings of the 4th International Conference on Embedded Networked Sensor Systems (SenSys ’06), Boulder, CO, USA, 31 October–3 November 2006; Association for Computing Machinery: New York, NY, USA, 2006; pp. 71–84.

- Murata, S.; Yara, C.; Kaneta, K.; Ioroi, S.; Tanaka, H. Accurate indoor positioning system using near-ultrasonic sound from a smartphone. In Proceedings of the 2014 Eighth International Conference on Next Generation Mobile Apps, Services and Technologies, Oxford, UK, 10–12 September 2014; IEEE: New York, NY, USA, 2014; pp. 13–18.

- Rossi, M.; Seiter, J.; Amft, O.; Buchmeier, S.; Tröster, G. RoomSense: An indoor positioning system for smartphones using active sound probing. In Proceedings of the 4th Augmented Human International Conference, Stuttgart, Germany, 7–8 March 2013; pp. 89–95.

- Thiel, B.; Kloch, K.; Lukowicz, P. Sound-based proximity detection with mobile phones. In Proceedings of the Third International Workshop on Sensing Applications on Mobile Phones, Toronto, ON, Canada, 6–9 November 2012; pp. 1–4.

- Ward, J.A.; Lukowicz, P.; Troster, G.; Starner, T.E. Activity recognition of assembly tasks using body-worn microphones and accelerometers. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1553–1567.

- Rossi, M.; Feese, S.; Amft, O.; Braune, N.; Martis, S.; Tröster, G. AmbientSense: A real-time ambient sound recognition system for smartphones. In Proceedings of the 2013 IEEE International Conference on Pervasive Computing and Communications Workshops (PERCOM Workshops), San Diego, CA, USA, 18–22 March 2013; IEEE: New York, NY, USA, 2013; pp. 230–235.

- Garg, S.; Lim, K.M.; Lee, H.P. An averaging method for accurately calibrating smartphone microphones for environmental noise measurement. Appl. Acoust. 2019, 143, 222–228.

- Peng, C.; Shen, G.; Zhang, Y.; Li, Y.; Tan, K. BeepBeep: A High Accuracy Acoustic Ranging System Using COTS Mobile Devices. In Proceedings of the 5th International Conference on Embedded Networked Sensor Systems (SenSys ’07), Sydney, NSW, Australia, 6–9 November 2007; Association for Computing Machinery: New York, NY, USA, 2007; pp. 1–14.

- Qiu, J.; Chu, D.; Meng, X.; Moscibroda, T. On the Feasibility of Real-Time Phone-to-Phone 3D Localization. In Proceedings of the 9th ACM Conference on Embedded Networked Sensor Systems; SenSys ’11; Association for Computing Machinery: New York, NY, USA, 2011; pp. 190–203.

- Wirz, M.; Roggen, D.; Tröster, G. A wearable, ambient sound-based approach for infrastructureless fuzzy proximity estimation. In Proceedings of the International Symposium on Wearable Computers (ISWC) 2010, Seoul, Korea, 10–13 October 2010; pp. 1–4.

- Castillo-Cara, M.; Lovón-Melgarejo, J.; Bravo-Rocca, G.; Orozco-Barbosa, L.; García-Varea, I. An empirical study of the transmission power setting for bluetooth-based indoor localization mechanisms. Sensors 2017, 17, 1318.

- Al Qathrady, M.; Helmy, A. Improving BLE distance estimation and classification using TX power and machine learning: A comparative analysis. In Proceedings of the 20th ACM International Conference on Modelling, Analysis and Simulation of Wireless and Mobile Systems, Miami, FL, USA, 21–25 November 2017; pp. 79–83.

- Jianwu, Z.; Lu, Z. Research on distance measurement based on RSSI of ZigBee. In Proceedings of the 2009 ISECS International Colloquium on Computing, Communication, Control, and Management, Sanya, China, 8–9 August 2009; IEEE: New York, NY, USA, 2009; Volume 3, pp. 210–212.

- Exposure Notification Bluetooth Specification. Available online: https://covid19-static.cdn-apple.com/applications/covid19/current/static/contact-tracing/pdf/ExposureNotification-BluetoothSpecificationv1.2.pdf?1 (accessed on 30 April 2021).

- Loh, W.Y. Classification and regression trees. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 14–23.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Celosia, G.; Cunche, M. Saving private addresses: An analysis of privacy issues in the bluetooth-low-energy advertising mechanism. In Proceedings of the 16th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Houston, TX, USA, 12–14 November 2019; pp. 444–453.

| Day | Type | Places Visited | Windows (Bluetooth) | Windows (Good Sound) | Duration (Minutes) |
|---|---|---|---|---|---|
| 1 | A | train station, university | 92.7% | 48.2% | 61 |
| 2 | A | train station, university | 92.4% | 47.1% | 86 |
| 3 | B | research center, media store, hardware store, supermarket | 79.67% | 18.9% | 30 |
| 4 | B | hardware store, shopping center | 94.2% | 37.2% | 16 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bahle, G.; Fortes Rey, V.; Bian, S.; Bello, H.; Lukowicz, P. Using Privacy Respecting Sound Analysis to Improve Bluetooth Based Proximity Detection for COVID-19 Exposure Tracing and Social Distancing. Sensors 2021, 21, 5604. https://doi.org/10.3390/s21165604
