Assessing the Bowing Technique in Violin Beginners Using MIMU and Optical Proximity Sensors: A Feasibility Study

Abstract

1. Introduction

2. Materials and Methods

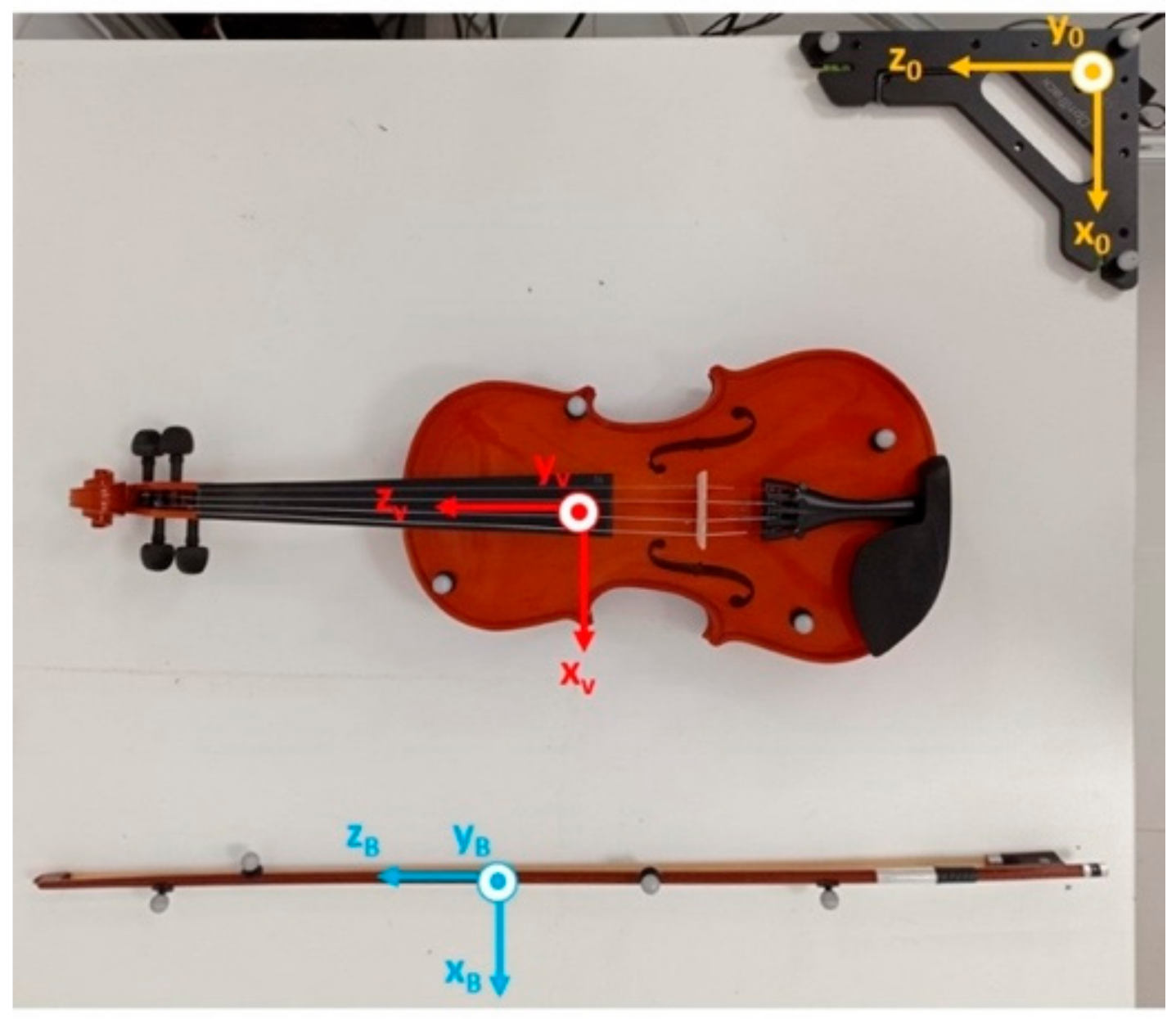

2.1. Measuring Bow–Violin Orientation

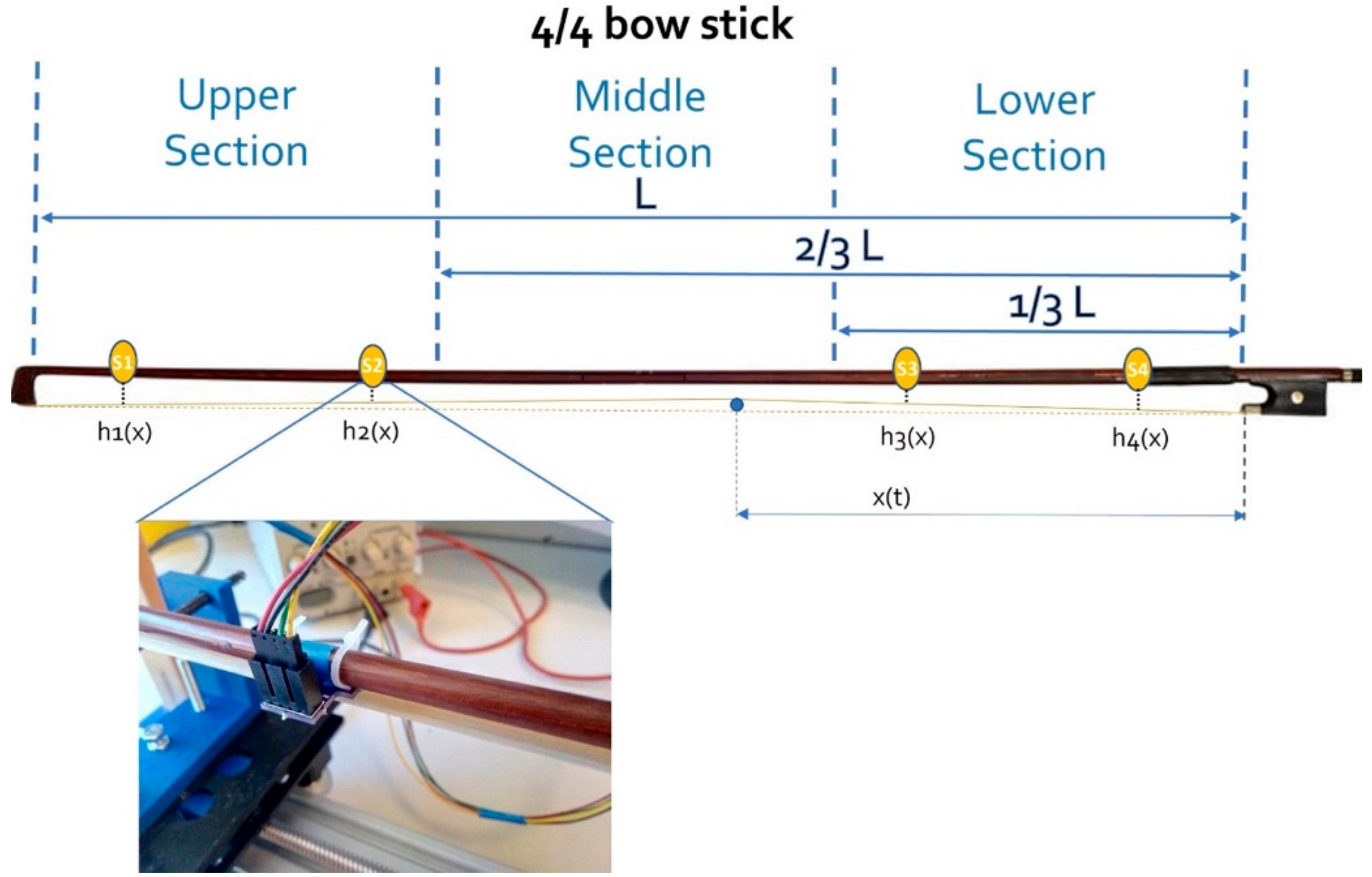

2.2. Estimating the Bow Section

3. Results

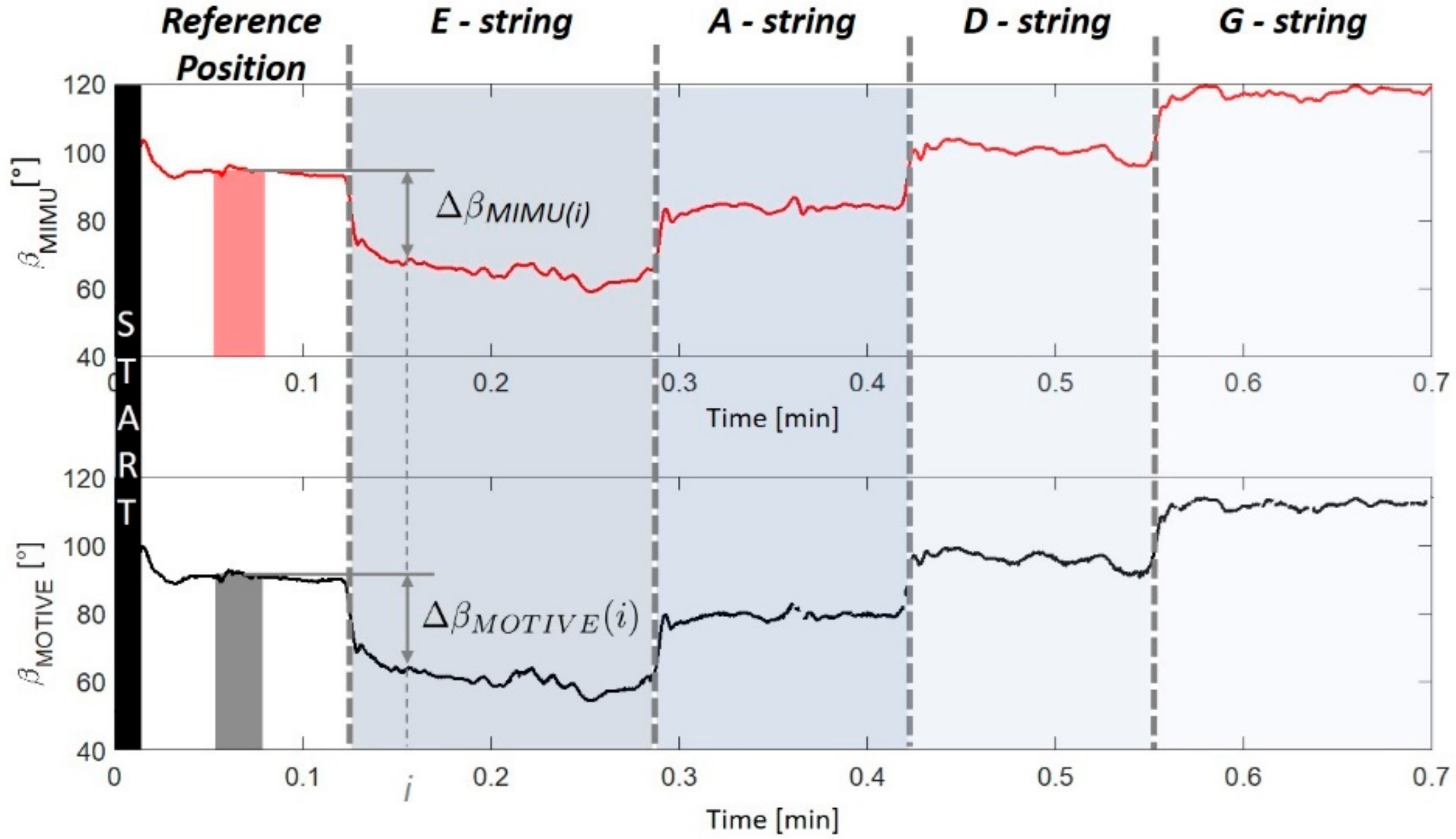

3.1. Measuring Bow–Violin Orientation

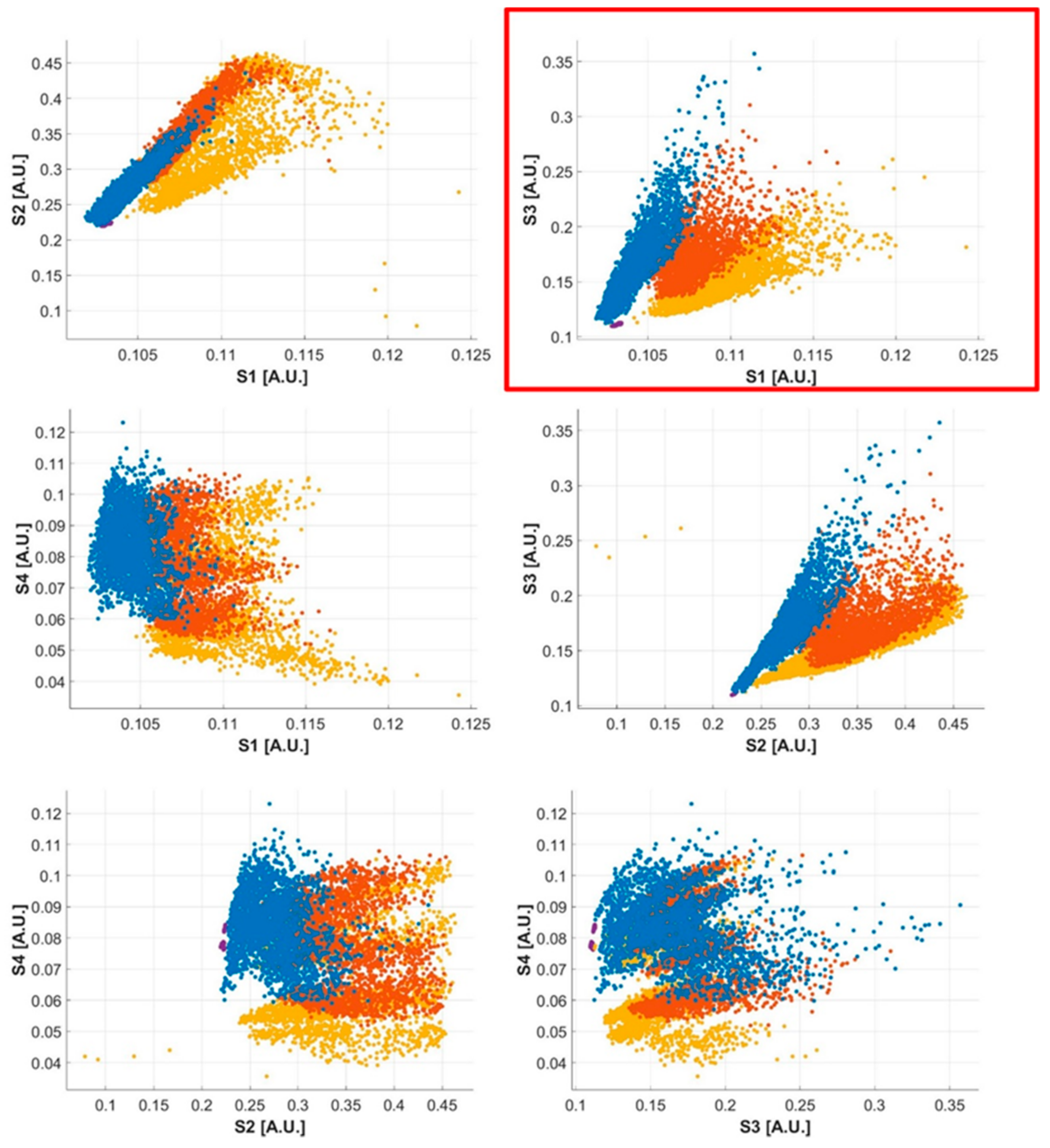

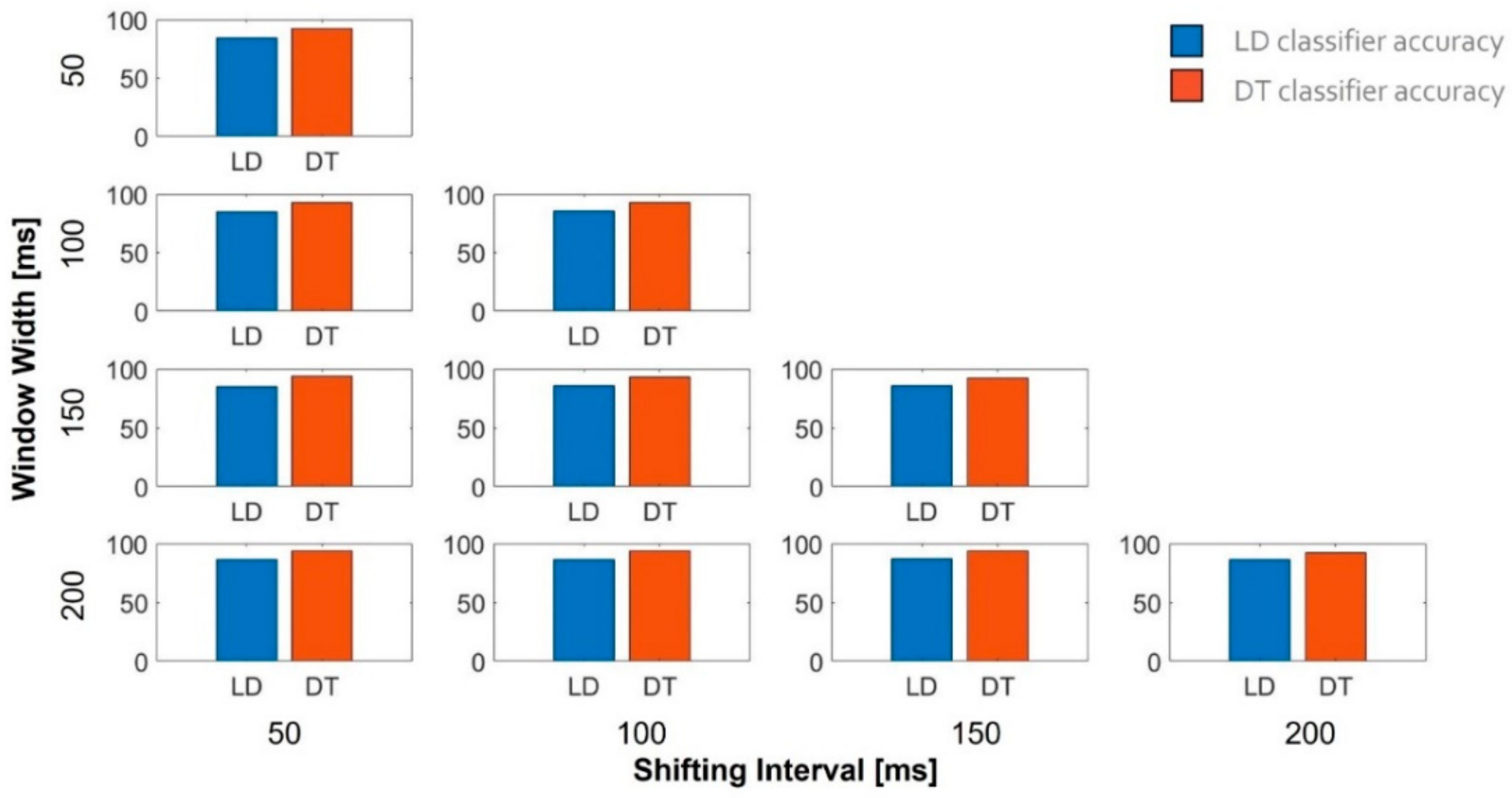

3.2. Estimating the Bow Section

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Variables to Be Monitored (Accuracy: High, Medium, Low): Bow Section through Bow Orientation. Usability Parameters: Invasiveness through Cost.

| Technology | Bow Section | Bow–Bridge Distance | Bow Velocity | Bow Acceleration | Bow Force | Bow Orientation | Invasiveness | Weight | Integration Difficulties | Cost |
|---|---|---|---|---|---|---|---|---|---|---|
| Resistive strip and capacitive coupling | + (High) | + (High) | d (Medium) | d (Medium) | − (N.A.) | − (N.A.) | Low | Low | High | Low |
| Optical sensors | + (High) | − (N.A.) | − (N.A.) | − (N.A.) | d (Medium) | − (N.A.) | Low | Low | Medium | Low |
| Strain gauges | − (N.A.) | − (N.A.) | − (N.A.) | − (N.A.) | + (High) | − (N.A.) | Low | Low | Low | Low |
| MEMS accelerometers | − (N.A.) | − (N.A.) | − (N.A.) | + (High) | − (N.A.) | − (N.A.) | Low | Low | Low | Low |
| Optical marker systems | + (High) | + (Low) | d (Medium) | d (Medium) | − (N.A.) | + (High) | High | Low | High | High |
| MIMU | − (N.A.) | − (N.A.) | − (N.A.) | + (High) | − (N.A.) | + (High) | Low | Low | Low | Low |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Provenzale, C.; Di Stefano, N.; Noccaro, A.; Taffoni, F. Assessing the Bowing Technique in Violin Beginners Using MIMU and Optical Proximity Sensors: A Feasibility Study. Sensors 2021, 21, 5817. https://doi.org/10.3390/s21175817
