1. Introduction

In the past decade, image processing and computer vision techniques have gained recognition in the engineering community as an important element of inspection and monitoring systems for mechanical and civil engineering structures [1,2,3]. For the assessment of an object’s surface state, images capture the same information as that usually found by human inspectors. Additionally, image and video files encode full-field displacements or deformation courses. A series of images captured from distinct viewpoints provides information on the 3D structure of the object [4]. Videos, as time series of images, additionally contain temporal information that can be used to find changes in the observed object or to obtain dynamic response data if a measurement camera with a sufficiently high frame rate is used [5]. Much of the research reported in the literature concerns image-based remote inspection of civil engineering structures, using high-resolution camera systems mounted on a tripod [3] or on unmanned aerial vehicles (UAVs) [6] to record the necessary image data. Additionally, one can observe significant development in artificial intelligence (AI) approaches in computer vision systems [7].

There are two major groups of computer vision applications for structure state assessment: image-based inspection of the surface and vision-based monitoring to obtain the current static state and/or dynamic behavior of the structure. Researchers have developed methods for the detection of various types of damage: concrete cracks, delamination and spalling of concrete, asphalt cracks, steel fatigue cracks and corrosion [8]. In a recent study [9], the authors proposed a tracking measurement of the full-field surface deformation of large-field recycled concrete-filled steel tube columns via a mark-free, four-ocular, stereoscopic visual system. The reported results demonstrated the high accuracy of this method.

Earlier methods of surface damage detection are heuristic and were designed for specific tasks. In these methods, all parameters must be chosen manually based on the image data and the user’s practical knowledge. The introduction of deep learning expanded the capability and robustness of damage detection compared to classical vision methods [10]. Many studies have been conducted to increase the automation level of image-based damage detection. An interesting application is the structural element recognition algorithm. First, the structural elements (e.g., columns, beams) are localized in the image [11]. Next, damage detection is carried out on each of them, and its severity is evaluated according to specific technical standards. The entire inspection process is performed with the use of UAV robots [12]. Object detection methods are also used in other vision system applications, e.g., fruit detection systems, such as that presented by Li et al. [13].

Another major area of image processing application in structure state evaluation is static [14,15] and dynamic vision-based measurement [16,17]. Structural deformation is often computed by means of the digital image correlation (DIC) method, a well-known approach in the field of laboratory testing of mechanical properties [18]. In this approach, a high-contrast optical noise pattern has to be placed on the object’s surface before an experiment is carried out to increase the performance of the method. The DIC method has also been applied in the displacement measurement of large-scale civil engineering structures. In such cases, a camera equipped with a telephoto lens is used. Usually, it observes a single point, a marker or a natural feature; however, a larger field of view may also be observed by means of a synchronized camera network [19,20]. Stereovision systems are applied to recover the 3D structure of observed objects [21]. Such systems may also be augmented by RGB-D sensors [22]. Multicamera reconstruction can also be performed using even more cameras. For example, Chen et al. [23] and Tang et al. [24] used four-camera vision systems for the reconstruction of tube-like objects.

Most often, the development of image processing algorithms requires access to a large amount of test data. These are video sequences or single images showing objects whose displacement or change in shape is the subject of detection. The structure is usually equipped with visual markers or has an optical noise pattern applied to its surface. This requirement is related to how the algorithms determine changes in the shape of the structure in the image: they measure the position of individual markers on the system under study. The design and development of damage detection methods require vision data (such as images or video sequences) of the analyzed structure. Usually, it is necessary to collect data for loaded and unloaded states, as well as for various damage scenarios. This approach requires careful preparation of laboratory setups and time-consuming experiments, as damaged structures are not common in practice. It requires the preparation of the test stand, i.e., sample manufacturing and configuration of the test rig and the vision system. Dynamic experiment observations recorded with high-speed cameras are particularly time-consuming due to the large amount of data recorded.

Considerable progress has been made in the field of computer graphics and augmented reality, which has allowed the generation of photorealistic images and videos. In the literature, such data have been used to train deep neural networks for image segmentation and object classification problems [25]. This significantly increased the available sizes of training data sets for neural networks and allowed the introduction of more variable imaging conditions for synthetic cameras. Two approaches to the generation of synthetic images are available. The first approach uses game engines to render images in a shorter time but with limited capabilities for realism. The second approach renders scenes using a physics-based ray tracing algorithm to produce high-quality, photorealistic images; however, it incurs a higher computational cost. Synthetic images generated with either of these approaches provide a structural model with controllable and repeatable loading and damage conditions. Additionally, the effects of external lighting and vision system optical parameters can be easily simulated. A practical implementation of this idea was presented in the work by Spencer et al. [1]. The authors presented a method of using physics-based models of structures and synthetic image generation to obtain images of structures with different damage conditions. They used synthetic data of a miter gate and a deep neural network to identify changes occurring on the gate. The generated training set of images included models of various damage conditions such as cracks and corrosion. Synthetic images in the data set were generated under different environmental factors such as variable lighting conditions and vegetation growth.

The results of the review presented in [25] indicate that the currently used methods of synthetic image generation are most often based on existing graphics engines dedicated to games or on algorithms embedded in rendering programs, e.g., Blender or Autodesk Maya. It should be emphasized that the main goal of these solutions is visual effect. When it is more important to reflect the actual deformation of the structure (e.g., under loads), the available engines may not be sufficient or reliable. To the best of our knowledge, there are no published validation results that definitively prove that the mechanical deformations in synthetic images are realistic.

This article addresses this challenge. The authors propose a solution for synthetic image generation based on finite element analysis results, which constitutes the novelty of this work. In general, the proposed solution uses a model formulated with the finite element method (FEM), a widely recognized simulation method that accurately simulates the deformation of the studied model. Then, the graphics program Blender is used to render synthetic images.

The limitations of the proposed method are mainly related to the detailed representation of the simulated scene. A complicated shape of the observed structure requires a dense FEM mesh, which significantly extends the computation time. Further limitations are complex scene illumination, a detailed representation of texture reflectance and the size of the generated image. All these factors increase the rendering time of the scene. In extreme cases, their combination can make producing images synthetically more time-consuming than conducting real experiments. However, in most practical applications, these limitations are not severe, and the computational efficiency of the numerical approach is satisfactory.

FEM simulations are widely used in the analysis of engineering problems, including multidomain simulations, in which coupling between various physical domains, such as thermal and mechanical, plays an important role [26]. The method is also used to analyze other problems, such as metal forming [27] or components made of composite materials [28]. A joint use of the Blender graphics program and the FEM has been presented recently [29]. However, in that work, Blender was only used to define a finite element mesh. A second example of Blender integration, this time in a computer-aided design (CAD) environment, was presented by Vasilev et al. [30]. The images were rendered in Blender, but the process did not involve FEM calculations to obtain the deformation of objects.

The novelty of the simulation approach introduced in this paper is the combination of the FEM method and the ability to render synthetic images in the Blender program. Owing to the use of FEM simulation models, high accuracy of simulated displacements is ensured. Moreover, it is possible to simulate any complex mechanical structure. The authors of this work have developed their own numerical environment. It allows customizing the simulation and automatically renders images of statically or dynamically loaded mechanical structures. In the proposed solution, FEM models are computed in the MSC.Marc solver, which is dedicated to nonlinear analyses (e.g., with contacts) and multidomain couplings. Moreover, owing to the developed simulation setup, the FEM analysis can be individually customized to a specific problem (e.g., modeling of vision markers). The proposed algorithm is discussed in detail in

Section 2. Subsequently,

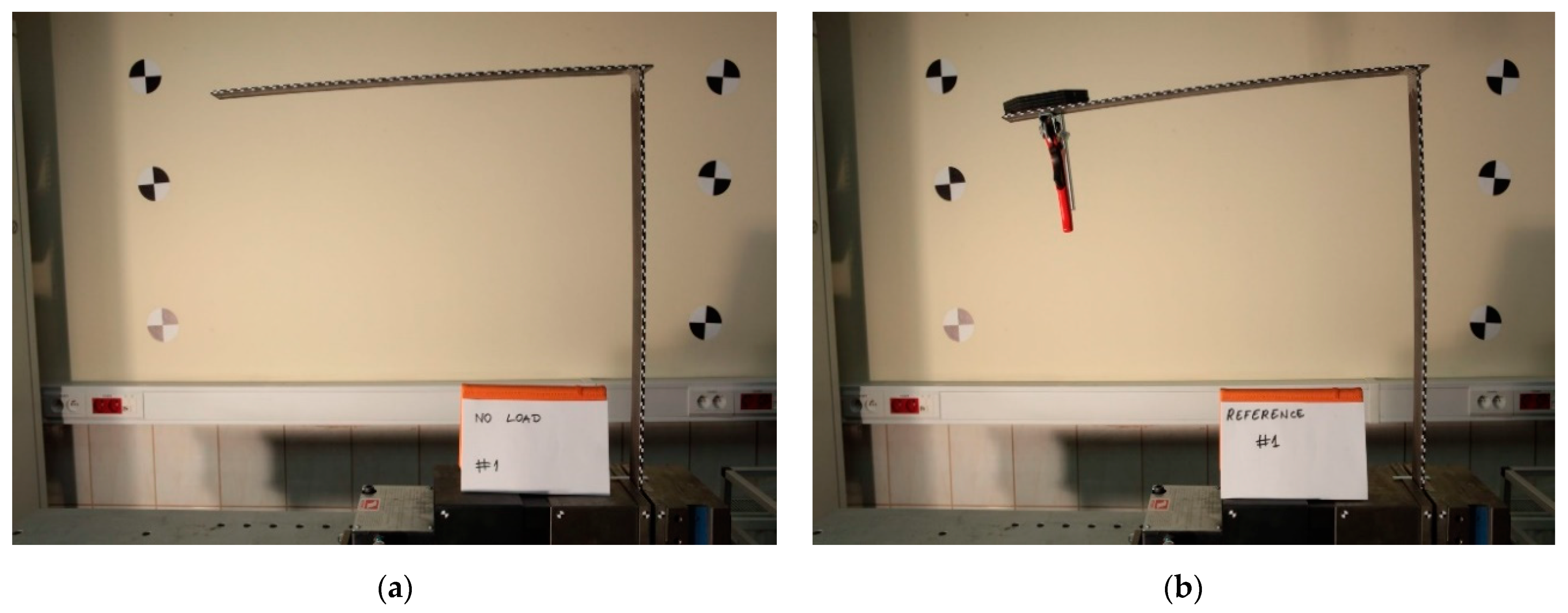

Section 3 presents two examples of applications for generating synthetic images. The first example includes experimental validation of the proposed simulation approach, while the second example presents the simulation results for a multicamera system.

Section 4 summarizes the presented work.

2. Materials and Methods

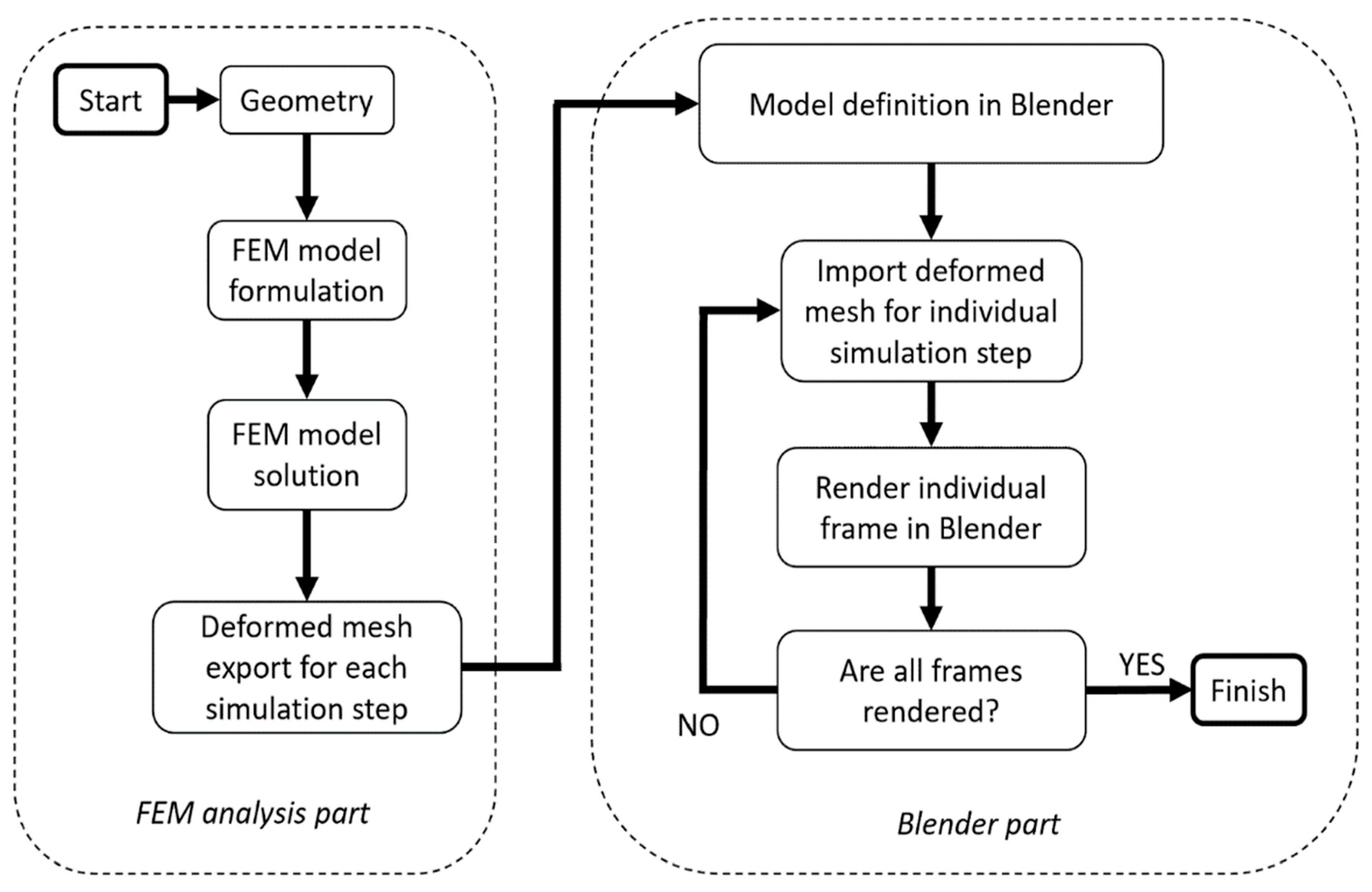

The proposed computing environment uses FEM simulation tools and the Blender graphics program. The diagram of the proposed algorithm is shown in Figure 1. Synthetic images of the structure under study are obtained as a result of the algorithm’s operation. The aim of the proposed method is to synthetically produce images of mechanical structures subjected to loads. It is also necessary to ensure realistic deformations and realistic vision system parameters (lens, camera resolution, lighting conditions). The images produced can be used in various ways, for example for line deflection calculation or defect detection based on image processing. The simulation methodology begins with the definition of the model geometry using a CAD program or directly in the FEM preprocessor. More complex components are usually more conveniently modeled in a dedicated CAD program.

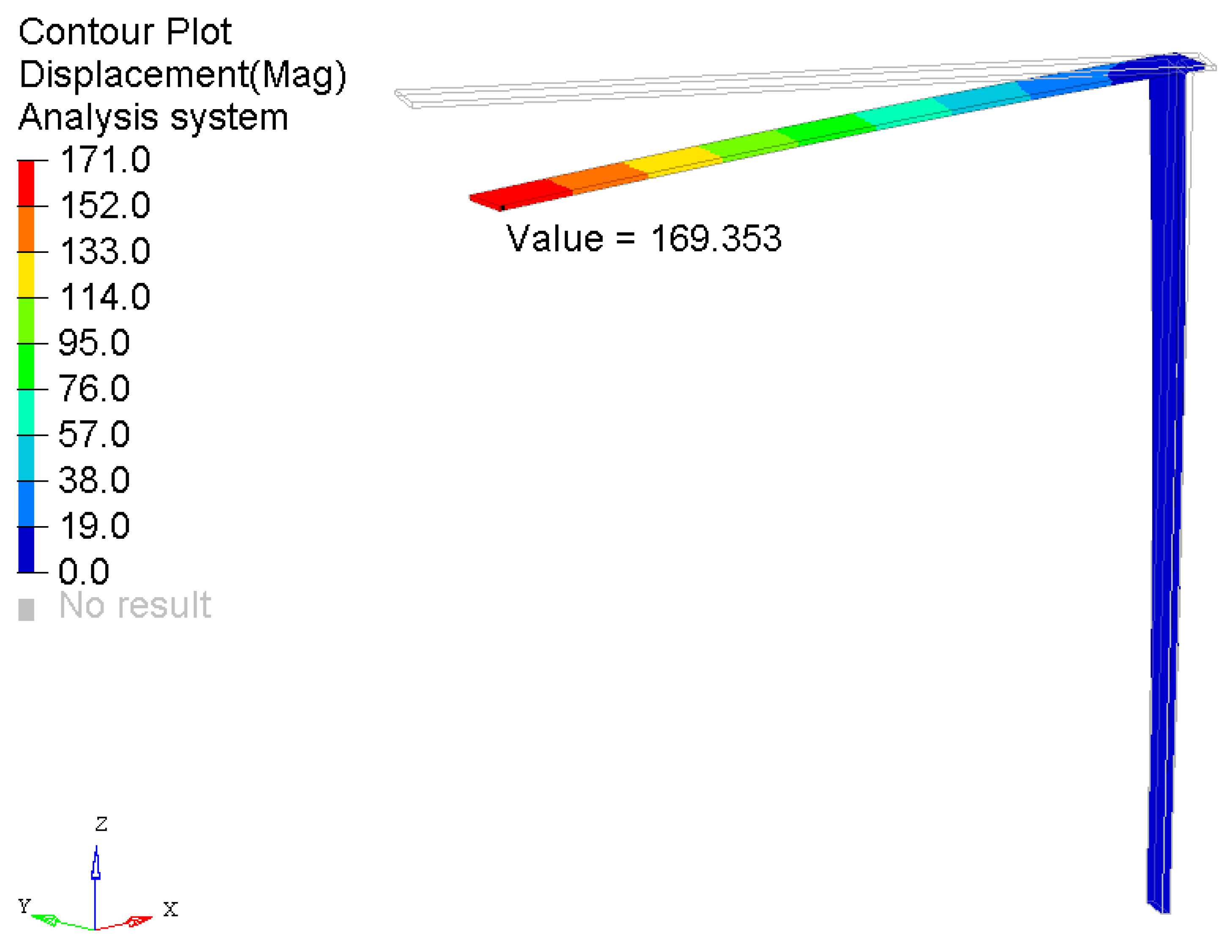

Next, the FEM model is formulated in the FEM preprocessor. The authors used the Altair HyperMesh program. The essential steps in this stage are defining a finite element mesh, assigning material parameters and defining the boundary conditions and the load step. The finite elements should be of a solid type (3D elements, e.g., hexahedral, tetrahedral or pentahedral). This is dictated by the need to render a closed volume in the next stage of the algorithm. Moreover, to enable the export of the FEM mesh into the Blender graphics program, it is necessary to cover the solid elements with a membrane of 2D elements in the model. Its thickness and stiffness should be selected so as not to affect the simulation results. In the calculations, a membrane thickness of 0.001 mm and a Young’s modulus 10 times lower than that of the native material of the 3D elements were assumed. The membrane mesh shares nodes with the 3D mesh and therefore deforms together with the native material. Owing to this, the displacements are unambiguous, and the deformed mesh can be correctly projected in Blender at a later stage of the algorithm. The degrees of freedom of the elements should correspond to the type of analysis being carried out, e.g., displacements and rotations at nodes for the mechanical domain. In this analysis domain, in the elastic deformation regime, the most important mechanical properties of the material to be modeled are Young’s modulus, Poisson’s ratio and density. The boundary conditions should reflect the actual loads as closely as possible. In most cases of mechanical domain analysis, these are pressure loads and constraints on appropriate degrees of freedom. The equation of the formulated FEM model in the general form is given by Equation (1).

where:

The load step definition describes the sequence in which the boundary conditions are applied to the structure. It also defines the type of analysis to be performed (e.g., static, quasi-static or dynamic transient). Then, the formulated FEM model is solved using a dedicated solver. In this step, the global displacement vector is determined. The solution proposed by the authors of this work is based on the MSC.Marc solver. The convergence condition of the analysis dictates the step size in the FEM simulation. A second criterion, the minimum number of steps in the FEM load step, is imposed by the number of required rendered frames (in Blender). In quasi-static problems, two frames are usually required (before and after load application).
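For orientation, a mechanical FEM model is commonly written in the following textbook form. The notation below is an assumption made for illustration and is not necessarily the notation used in Equation (1):

$$
\mathbf{M}\,\ddot{\mathbf{u}}(t) + \mathbf{C}\,\dot{\mathbf{u}}(t) + \mathbf{K}\,\mathbf{u}(t) = \mathbf{F}(t)
$$

Here $\mathbf{M}$, $\mathbf{C}$ and $\mathbf{K}$ denote the global mass, damping and stiffness matrices, $\mathbf{u}$ is the global displacement vector and $\mathbf{F}$ is the global load vector. In a static or quasi-static load step, the inertial and damping terms are neglected, and the solver determines $\mathbf{u}$ from $\mathbf{K}\,\mathbf{u} = \mathbf{F}$. For a nonlinear model (e.g., with contacts), $\mathbf{K}$ depends on $\mathbf{u}$, and this system is solved iteratively within each step.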

The next step of the algorithm is to export the deformed finite element mesh to a *.STL file for individual time steps of the simulation. The authors developed scripts that automate exporting the current state of the structure’s deformation at the required time intervals, which is especially important for simulating dynamic phenomena. The mesh of the system under study must be divided into parts: the number of exported meshes depends on the number of textures used later in Blender. In other words, if the rendered image is to present, for example, three textures or colors on the object, then the deformed mesh must be exported separately for each of these areas.
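The export step can be sketched as follows. This is a minimal illustration using only the Python standard library, not the authors’ export scripts: the function names, the node and triangle data layout and the region names are assumptions. The sketch adds nodal displacements to the reference coordinates and writes one ASCII STL solid per texture region.

```python
import io


def deformed_nodes(nodes, displacements):
    """Add nodal displacement vectors to the reference coordinates."""
    zero = (0.0, 0.0, 0.0)
    return {
        nid: tuple(c + d for c, d in zip(xyz, displacements.get(nid, zero)))
        for nid, xyz in nodes.items()
    }


def facet_normal(a, b, c):
    """Unit normal of triangle (a, b, c); zero vector for a degenerate facet."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = sum(x * x for x in n) ** 0.5
    return tuple(x / length for x in n) if length > 0.0 else (0.0, 0.0, 0.0)


def write_ascii_stl(stream, name, nodes, triangles):
    """Write triangles (triples of node ids) as one ASCII STL solid."""
    stream.write(f"solid {name}\n")
    for tri in triangles:
        a, b, c = (nodes[n] for n in tri)
        nx, ny, nz = facet_normal(a, b, c)
        stream.write(f"  facet normal {nx:e} {ny:e} {nz:e}\n    outer loop\n")
        for x, y, z in (a, b, c):
            stream.write(f"      vertex {x:e} {y:e} {z:e}\n")
        stream.write("    endloop\n  endfacet\n")
    stream.write(f"endsolid {name}\n")


# Hypothetical data: one surface triangle per texture region, one time step.
nodes = {1: (0.0, 0.0, 0.0), 2: (1.0, 0.0, 0.0),
         3: (0.0, 1.0, 0.0), 4: (1.0, 1.0, 0.0)}
displacements = {4: (0.0, 0.0, -0.1)}  # node 4 deflects downwards
regions = {"marker": [(1, 2, 3)], "background": [(2, 4, 3)]}

moved = deformed_nodes(nodes, displacements)
stl_files = {}
for region, triangles in regions.items():
    buffer = io.StringIO()  # stands in for a file opened for writing
    write_ascii_stl(buffer, region, moved, triangles)
    stl_files[region] = buffer.getvalue()
```

In a full workflow, the loop over regions would be nested inside a loop over the exported time steps, so that each frame has one STL file per texture.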

Next, the Blender program is used. The essential Blender model components and parameters are defined first: cameras (position, orientation, sensor size, lens focal length), light sources (light shade, intensity) and textures that are later applied to the imported mesh. These parameters should correspond to real-life experimental conditions. In the next step, the deformed mesh is imported. A single frame/image is then rendered, and the import and rendering are repeated for all required frames. The task becomes much more time-consuming when a large number of images has to be generated, as can be the case in synthetic image generation for dynamic problems. A Python script was developed to automate this task.
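Because the virtual camera is meant to match a real one, it can help to check what a given sensor size and focal length imply before rendering. The sketch below uses the ideal pinhole model (no lens distortion, object plane perpendicular to the optical axis). The function names and the numerical values are assumptions for illustration and are not taken from the experiments.

```python
import math


def field_of_view_deg(sensor_size_mm, focal_length_mm):
    """Angular field of view of an ideal pinhole camera along one sensor axis."""
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))


def object_scale_mm_per_px(sensor_width_mm, image_width_px,
                           focal_length_mm, distance_mm):
    """Size of one pixel projected onto a plane at the given distance."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return pixel_pitch_mm * distance_mm / focal_length_mm


# Hypothetical setup: 36 mm wide sensor, 50 mm lens,
# 1920 px wide image, object plane 2 m from the camera.
fov = field_of_view_deg(36.0, 50.0)
scale = object_scale_mm_per_px(36.0, 1920, 50.0, 2000.0)
print(f"horizontal FOV: {fov:.1f} deg, scale: {scale:.3f} mm/px")
```

With these assumed values, one pixel covers 0.75 mm on the object plane, so resolving smaller deflections would call for subpixel image processing, a longer focal length or a shorter camera distance.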

As discussed before, the purpose of the proposed method is to produce synthetic images. Nevertheless, it seems necessary to report on potential application areas of the method. Therefore, in Section 3, three case studies of the use of the FEM+Blender simulation are presented. These are not the only possible fields of application of the proposed approach but only representative examples. They are limited to relatively simple mechanical structures but take into account various load conditions and the occurrence of damage. The application of this approach for arbitrarily complex objects is possible but requires the formulation of complex FEM and Blender models. In the presented case studies, the proposed FEM+Blender simulation is limited to quasi-static cases.

4. Conclusions

This paper presents a new computer simulation methodology for synthetic image creation. The solution is dedicated to the presentation of mechanical structures under the influence of external forces. This approach uses the FEM to determine the deformation of the tested system under the influence of loads acting on it. The resulting deformed finite element mesh is imported into the Blender environment, and synthetic images are rendered using a GPU. Camera and lens parameters, light source, reflections, material textures and shadows are considered during the rendering process. The proposed approach can produce synthetic data that can be used as the input data to test image processing algorithms, and this is the main area of application of this approach. In some cases, the proposed methodology can introduce a significant reduction in the time required to obtain the data compared to the actual experiments. The authors are aware that the application of the proposed approach does not allow for the omission of experiments but may, for example, help in choosing the appropriate camera positioning during the actual experiment and introduce significant time savings.

The aim of the proposed method, the same as for other simulation methods, is to obtain certain results in a numerical manner with the use of computer resources. The obtained video data can be further analyzed, depending on the specific need. The proposed simulation approach can be used to generate synthetic data, e.g., to increase training sets for neural networks to interpret data in images. It may also help in selecting components of the target configuration of the vision measurement system in engineering operation conditions. In such an application, it is necessary to predict the expected displacements of the structure’s component, which is provided by our solution. It is also necessary to adjust vision system settings to make the structure’s displacements observable by the vision measurement system, which can also be simulated by the developed numerical environment.

The presented application examples of the proposed algorithm are currently limited to the simulation of static scenes, such as structural deflection under load. Sample analyses were presented using the generated synthetic vision data. The study focused on determining the deflection of the tested structure with introduced damage (limited to material discontinuity) under simple and complex load conditions. The last case study generated images of the structure deflected in 3D. The obtained images allowed for the 3D reconstruction of the sample in the simulated multicamera system. Selected results in the paper have been successfully validated experimentally.

As part of ongoing work, the authors are developing a simulation environment for synthetic video sequence generation for dynamic phenomena. Such data are needed to test, for example, motion magnification algorithms or normal mode identification algorithms based on video sequences. The simulation setup will also be improved to include depth-of-field effects in renderings. Initial work in this area has been undertaken, but it requires a thorough quantitative analysis, which is part of further research.