Assessment of the Effect of Cleanliness on the Visual Inspection of Aircraft Engine Blades: An Eye Tracking Study

Abstract

1. Introduction

1.1. Industrial Context

1.2. Engine Blade Inspection

2. Literature Review

2.1. Research on Visual Inspection

2.1.1. Parameters Affecting Visual Inspection

2.1.2. Inspection Performance

2.2. Eye Tracking

2.3. Gaps in the Body of Knowledge

3. Materials and Methods

3.1. Research Objective and Methodology

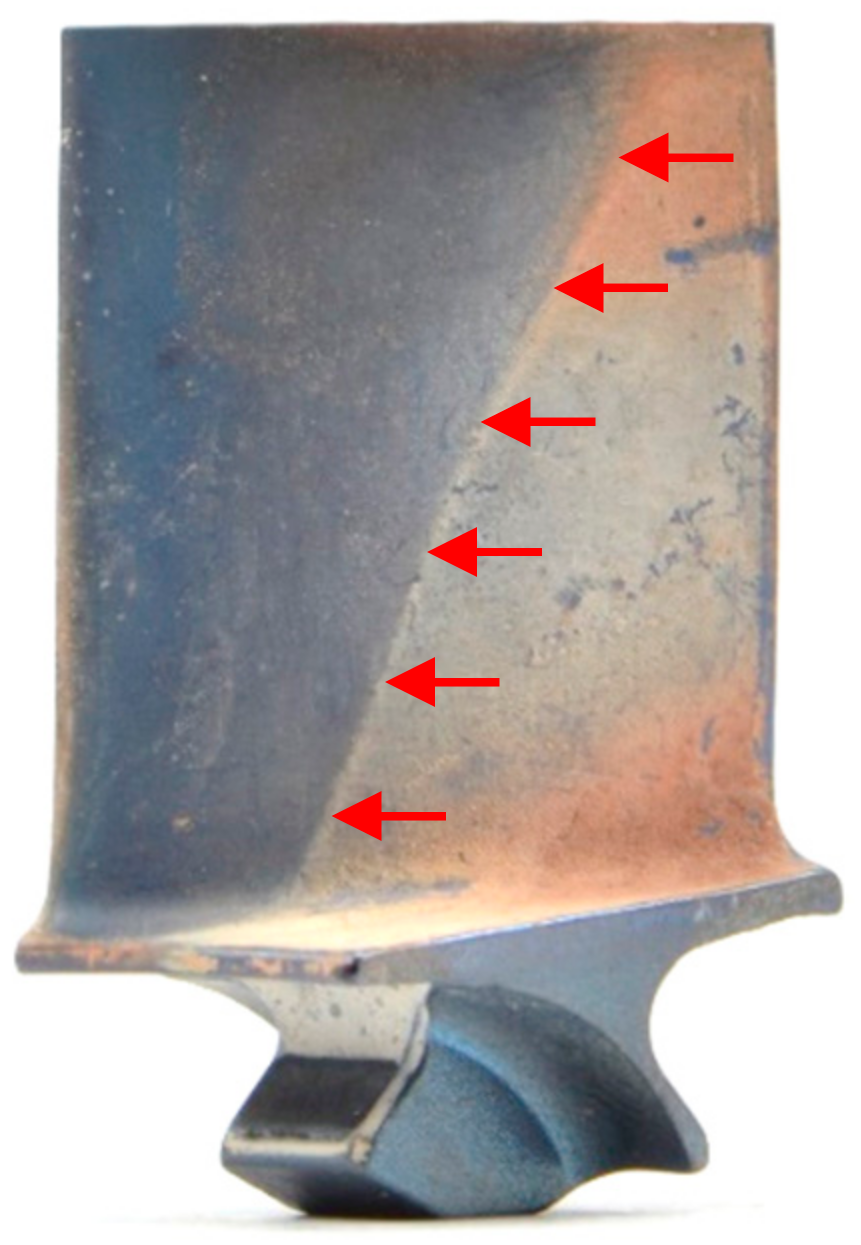

3.2. Research Sample (Stimuli)

3.3. Research Population

3.4. Eye Tracking Approach

3.4.1. Technology Setup

3.4.2. Stimuli Presentation

3.5. Data Collection

3.6. Determination of Ground Truth

3.7. Data Analysis

4. Statistical Results

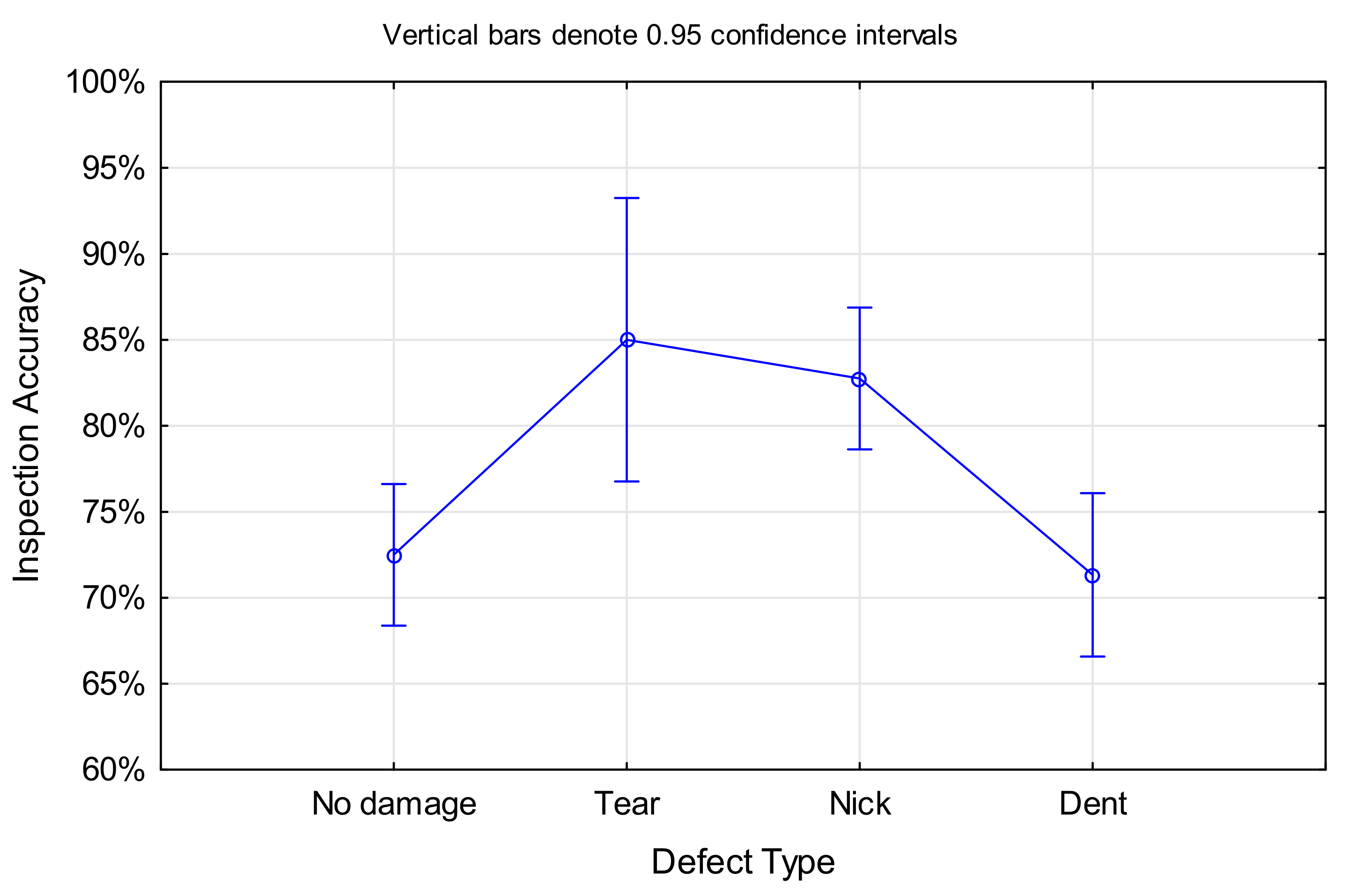

4.1. Inspection Accuracy

4.1.1. Hypothesis Testing

4.1.2. Statistical Model for Inspection Accuracy

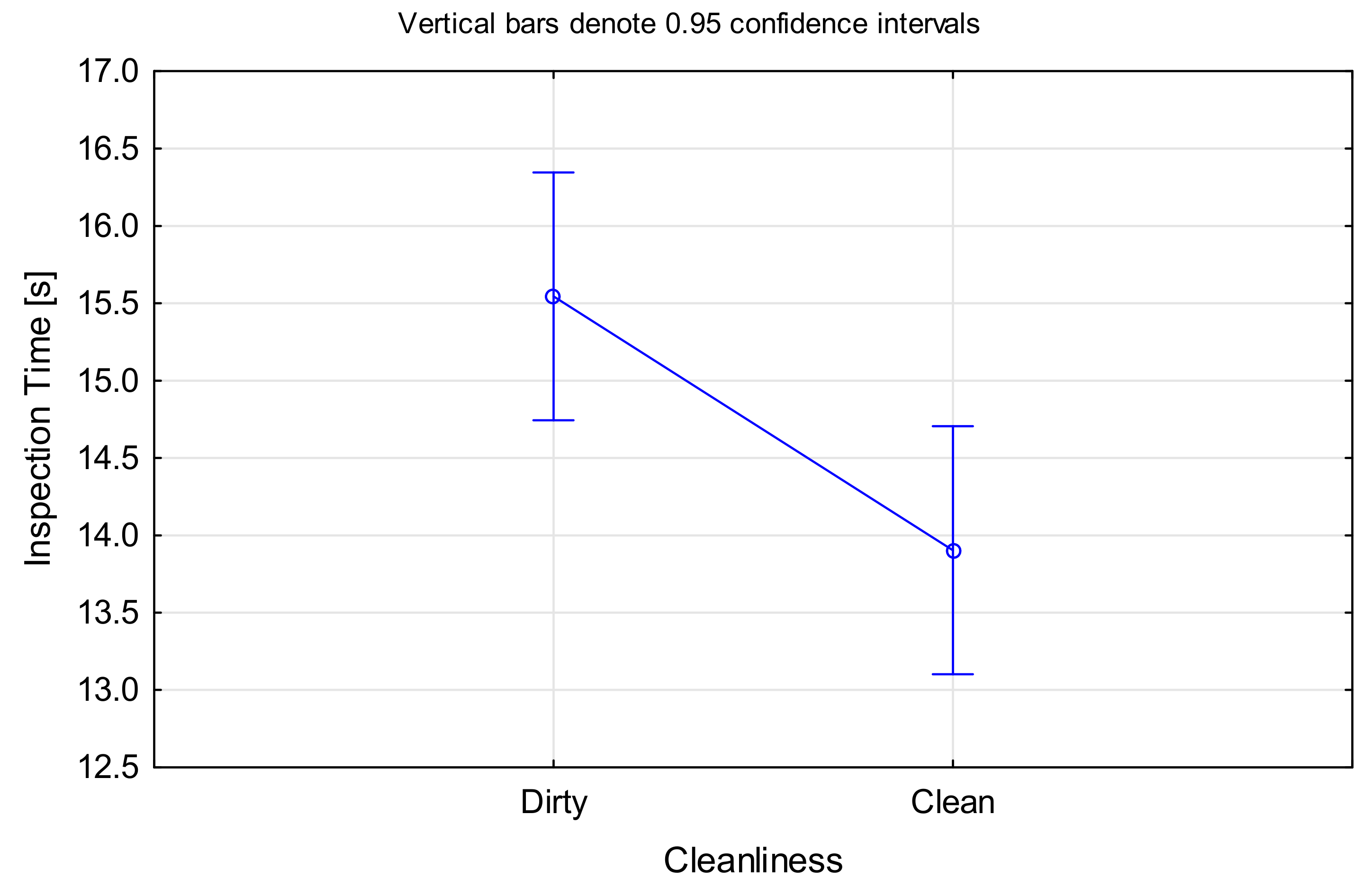

4.2. Inspection Time

4.2.1. Hypothesis Testing

4.2.2. Statistical Model for Inspection Time

5. Evaluation of the Eye Tracking Data

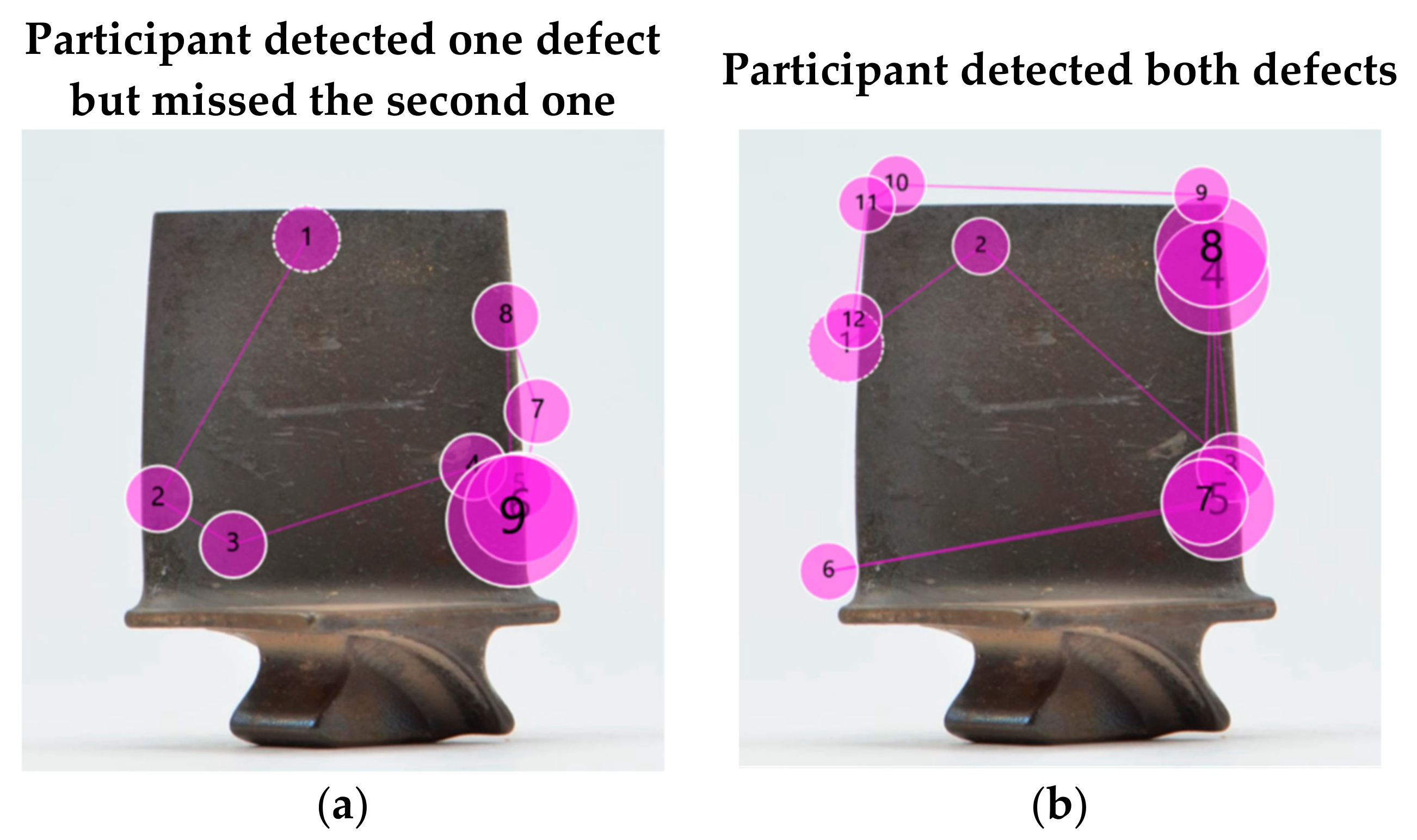

5.1. Observations about the Experimental Arrangements

5.2. Visual Search Strategies

5.3. Example of a Structured Search

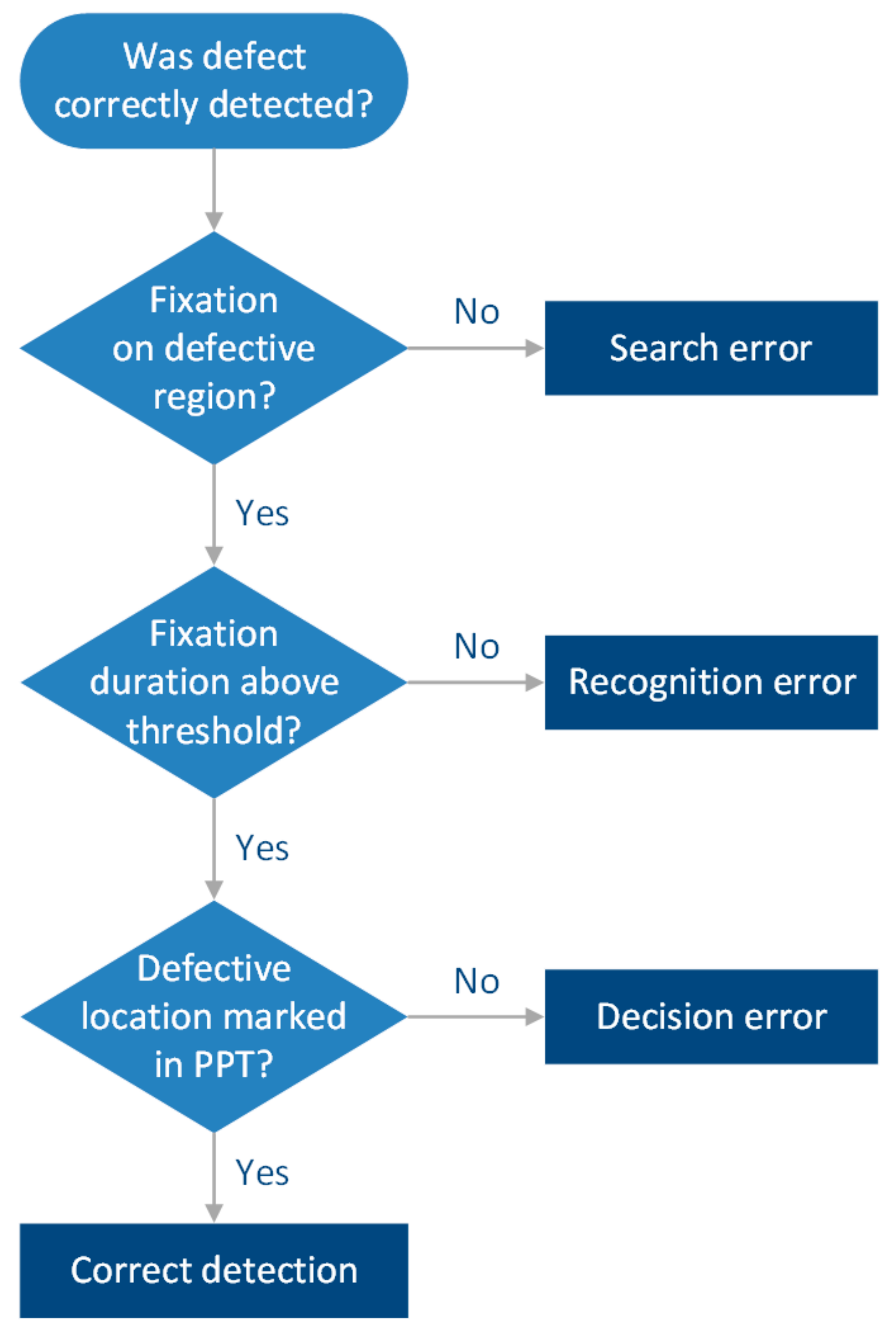

5.4. Types of Inspection Errors Leading to Missed Defects

5.5. Improved Inspection Accuracy for Clean Blades

5.6. Decreased Inspection Accuracy for Clean Blades

5.7. Comparison of Search Strategies between Expertise Groups

5.8. Towards a Mental Model of Visual Inspection

6. Discussion

6.1. Summary of Work and Comparison with Other Studies

6.2. Implications for Practitioners

6.3. Limitations

6.4. Challenges in Eye Tracking Technology

6.5. Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


- Voisin, S.; Pinto, F.; Morin-Ducote, G.; Hudson, K.B.; Tourassi, G.D. Predicting diagnostic error in radiology via eye-tracking and image analytics: Preliminary investigation in mammography. Med. Phys. 2013, 40, 101906. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.; Zou, L.; Fan, C.; Liu, Y. Feature weighting network for aircraft engine defect detection. Int. J. Wavelets Multiresolution Inf. Process. 2020, 18, 2050012. [Google Scholar] [CrossRef]

- Wong, C.Y.; Seshadri, P.; Parks, G.T. Automatic Borescope Damage Assessments for Gas Turbine Blades via Deep Learning. In Proceedings of the AIAA Scitech 2021 Forum, Virtual, 11–15 January 2021. [Google Scholar]

| | Inspectors (N = 18) | Engineers (N = 16) | Assembly Operator (N = 16) |
|---|---|---|---|
| Gender | | | |
| Male | 17 | 15 | 15 |
| Female | 1 | 1 | 1 |
| Corrected vision | 10 | 10 | 5 |
| Currently assigned to inspection | 17 | 0 | 0 |
| Previously been assigned to inspection (how many years ago) | | | |
| Never | - | 9 | 12 |
| 1 to 4 | Currently | 0 | 1 |
| 5 to 10 | Currently | 1 | 2 |
| 10 to 20 | Currently | 6 | 0 |
| 20 and more | Currently | 0 | 1 |
| Work experience in the field in years | | | |
| 1 to 4 | 2 | 0 | 3 |
| 5 to 9 | 2 | 0 | 3 |
| 10 to 19 | 6 | 3 | 8 |
| 20 and more | 8 | 13 | 2 |
| Highest qualification | | | |
| Trade certificate | 10 | 3 | 13 |
| Diploma | 3 | 6 | 1 |
| University degree | 5 | 7 | 2 |

| Hypothesis | Statement |
|---|---|
| H1 | Inspectors perform better in terms of (a) inspection accuracy and (b) inspection time than non-inspecting staff. |
| H2 | Cleaned blades lead to improved inspection performance measured in (a) inspection accuracy and (b) inspection time, compared to blades in dirty condition. |

| Metric | Description |
|---|---|
| Decision | Determination of whether a part is defective or non-defective. Takes the value one (1) for a correct decision and zero (0) for an incorrect decision. |
| True Positive (TP) | A defective blade was correctly identified as defective (hit). |
| False Negative (FN) | A defective blade was incorrectly identified as non-defective, so the defect was missed (miss). |
| False Positive (FP) | A non-defective blade was incorrectly classified as defective (false alarm). |
| True Negative (TN) | A non-defective blade was correctly classified as non-defective (correct acceptance). |
| Inspection Accuracy (IA) | Measure of inspection performance that takes into account the correct decisions (TP and TN) and the total population; also referred to as Decision Accuracy. |
| Improvement Rate (IR) | Improvement in inspection accuracy between the dirty and the clean condition. |
| Inspection Time (IT) | Time needed to inspect a blade. |
| Confidence Rating (CR) | Self-rated confidence level of the participant on a scale from one to five. |
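
As a minimal sketch of how the metrics in the table above relate to one another, the following Python snippet computes inspection accuracy from the four decision outcomes and an improvement rate between the two conditions. The function names are hypothetical, the counts are made-up illustration values rather than study data, and the relative form of the improvement rate is an assumption: the table only describes it as the accuracy improvement between dirty and clean condition.

```python
def inspection_accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Share of correct decisions (TP + TN) among all decisions."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("no decisions recorded")
    return (tp + tn) / total


def improvement_rate(ia_dirty: float, ia_clean: float) -> float:
    """Relative accuracy change from dirty to clean (assumed definition)."""
    if ia_dirty == 0:
        raise ValueError("dirty-condition accuracy must be non-zero")
    return (ia_clean - ia_dirty) / ia_dirty


# Illustrative counts only, not data from the study.
ia_dirty = inspection_accuracy(tp=10, tn=4, fp=2, fn=4)  # 14 of 20 correct
ia_clean = inspection_accuracy(tp=13, tn=5, fp=1, fn=1)  # 18 of 20 correct
ir = improvement_rate(ia_dirty, ia_clean)
```

If the improvement rate is computed per participant and then averaged, its mean will generally differ from the rate computed on the group mean accuracies.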

| Expertise | Dirty Blades M (SD) | Clean Blades M (SD) | Improvement M (SD) |
|---|---|---|---|
| Inspectors (N = 18) | 68.1 (13.8) | 87.0 (10.4) | 33.6 (33.1) |
| Engineers (N = 16) | 63.0 (10.5) | 87.0 (8.03) | 42.5 (32.8) |
| Assembly Ops. (N = 16) | 69.3 (10.4) | 86.5 (11.3) | 29.0 (32.8) |
| All participants (N = 50) | 66.8 (11.8) | 86.8 (9.83) | 35.0 (32.7) |

| Effect | Reference Level | Level of Effect | Estimate | Wald Stat. | Odds Ratio | Lower CL 95% | Upper CL 95% | p |
|---|---|---|---|---|---|---|---|---|
| Expertise | Assembly Operator | Engineer | −0.104208 | 1.1711 | 0.853871 | 0.612345 | 1.190663 | 0.279170 |
| Expertise | Assembly Operator | Inspector | 0.050441 | 0.2794 | 0.996679 | 0.716831 | 1.385778 | 0.597094 |

| Effect | Reference Level | Level of Effect | Estimate | Wald Stat. | Odds Ratio | Lower CL 95% | Upper CL 95% | p |
|---|---|---|---|---|---|---|---|---|
| Cleanliness | Dirty | Clean | 0.578399 | 61.4089 | 3.179736 | 2.380880 | 4.246631 | 0.000000 |
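
The estimates and odds ratios in the two tables above are not linked by a plain `exp(estimate)`. The reported values are consistent with sum-to-zero (effect) coding of the categorical factors, in which the reference level carries the negative sum of the other level effects. That coding is an inference from the numbers, not something the tables state, and the function names below are hypothetical. Under that assumption, the following sketch recomputes the odds ratios for expertise and the odds ratio with 95% confidence limits for cleanliness.

```python
import math

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal


def odds_ratio_vs_reference(estimates, level):
    """Odds ratio of `level` against the omitted reference level,
    assuming sum-to-zero (effect) coding of the factor."""
    reference_effect = -sum(estimates.values())
    return math.exp(estimates[level] - reference_effect)


def two_level_or_with_ci(estimate, wald):
    """Odds ratio and 95% limits for a two-level factor under the same
    coding, where the contrast between the levels is 2 * estimate."""
    se = abs(estimate) / math.sqrt(wald)  # Wald = (estimate / SE)^2
    point = math.exp(2 * estimate)
    lower = math.exp(2 * (estimate - Z_95 * se))
    upper = math.exp(2 * (estimate + Z_95 * se))
    return point, lower, upper


# Estimates and Wald statistic copied from the tables above.
expertise = {"Engineer": -0.104208, "Inspector": 0.050441}
or_engineer = odds_ratio_vs_reference(expertise, "Engineer")
or_inspector = odds_ratio_vs_reference(expertise, "Inspector")
or_clean, lower_clean, upper_clean = two_level_or_with_ci(0.578399, 61.4089)
```

Under this assumption the recomputed values agree with the tabulated odds ratios and confidence limits to within rounding.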

| Effect | Reference Level | Level of Effect | Wald Stat. | Odds Ratio | Lower CL 95% | Upper CL 95% | p |
|---|---|---|---|---|---|---|---|
| Work Experience | | | 0.23356 | 0.994938 | 0.974668 | 1.015629 | 0.628900 |
| Inspection Time | | | 0.10953 | 0.997451 | 0.982491 | 1.012640 | 0.740683 |
| Confidence Rating | | | 0.62841 | 1.087622 | 0.883665 | 1.338654 | 0.427940 |
| Expertise | Assembly Operator | Engineer | 0.53783 | 1.167187 | 0.758731 | 1.795531 | 0.477357 |
| Expertise | Assembly Operator | Inspector | | 1.036288 | 0.667100 | 1.609792 | 0.832337 |
| Visual Acuity | No glasses | Glasses | 1.40159 | 0.821929 | 0.594074 | 1.137178 | 0.236457 |
| Education | Trade Cert. | Diploma | 1.22683 | 1.214756 | 0.802685 | 1.838372 | 0.615918 |
| Education | Trade Cert. | Bachelor | | 1.210193 | 0.804527 | 1.820406 | 0.631881 |
| Prev. Inspection Experience | No | Yes | 0.23881 | 0.901183 | 0.593719 | 1.367870 | 0.625066 |
| Cleanliness * | Dirty | Clean | 62.72209 | 0.305089 | 0.227422 | 0.409279 | 0.000000 |
| Defect Type * | No damage | Tear | 20.75906 | 0.451933 | 0.245067 | 0.833419 | 0.041402 |
| Defect Type * | No damage | Nick | | 0.533789 | 0.375063 | 0.759687 | 0.028675 |
| Defect Type * | No damage | Dent | | 1.068135 | 0.755904 | 1.509335 | 0.001932 |

| Expertise | Dirty Blades M (SD) | Clean Blades M (SD) | Time Savings M (SD) |
|---|---|---|---|
| Inspectors (N = 18) | 11.914 (4.302) | 10.390 (3.827) | 1.524 (2.263) |
| Engineers (N = 16) | 17.773 (7.315) | 16.757 (6.124) | 1.016 (3.632) |
| Assembly Ops (N = 16) | 17.400 (8.341) | 15.001 (7.944) | 2.399 (2.723) |
| All participants (N = 50) | 15.545 (7.189) | 13.903 (6.593) | 1.641 (2.899) |
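
The rows of the inspection time table above can be cross-checked with simple arithmetic. The sketch below assumes that each group mean is an average over that group's participants, so that the "All participants" mean is the group means weighted by group size, and that time savings is the dirty-condition mean minus the clean-condition mean. Both assumptions are inferred from the tabulated values, and the variable names are hypothetical.

```python
# (group size, mean time for dirty blades, mean time for clean blades),
# copied from the table above.
groups = {
    "Inspectors": (18, 11.914, 10.390),
    "Engineers": (16, 17.773, 16.757),
    "Assembly Ops": (16, 17.400, 15.001),
}

n_total = sum(n for n, _, _ in groups.values())

# Size-weighted means across the three expertise groups.
pooled_dirty = sum(n * dirty for n, dirty, _ in groups.values()) / n_total
pooled_clean = sum(n * clean for n, _, clean in groups.values()) / n_total

# Mean time saved per blade when inspecting clean instead of dirty blades.
savings = {name: dirty - clean for name, (_, dirty, clean) in groups.items()}
```

The pooled means and per-group savings obtained this way agree with the table to within rounding of the published figures.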

| Effect | Reference Level | Level of Effect | Wald Stat. | Estimate | Lower CL 95% | Upper CL 95% | p |
|---|---|---|---|---|---|---|---|
| Work Experience | | | 0.2720 | −0.001457 | −0.006932 | 0.004018 | 0.601996 |
| Confidence Rating | | | 0.0786 | −0.007156 | −0.057186 | 0.042874 | 0.779215 |
| Expertise * | Assembly Operator | Engineer | 11.1835 | 0.104217 | 0.043137 | 0.165298 | 0.000825 |
| Expertise * | Assembly Operator | Inspector | 69.1614 | −0.286814 | −0.354409 | −0.219218 | 0.000000 |
| Visual Acuity * | No glasses | Glasses | 56.4609 | 0.154085 | 0.113893 | 0.194276 | 0.000000 |
| Education | Trade Cert. | Diploma | 2.3705 | 0.051595 | −0.014086 | 0.117275 | 0.123651 |
| Education | Trade Cert. | Bachelor | 0.0172 | 0.004301 | −0.059895 | 0.068497 | 0.895535 |
| Prev. Inspection Experience | No | Yes | 0.0072 | −0.002031 | −0.049062 | 0.045001 | 0.932561 |
| Cleanliness * | Dirty | Clean | 11.5048 | −0.061458 | −0.096971 | −0.025945 | 0.000694 |
| Defect Type * | No damage | Tear | 13.0585 | 0.148160 | 0.067801 | 0.228518 | 0.000302 |
| Defect Type * | No damage | Nick | 4.2438 | 0.056915 | 0.002765 | 0.111064 | 0.039393 |
| Defect Type | No damage | Dent | 3.3302 | −0.058704 | −0.121753 | 0.004345 | 0.068017 |
| Decision | 0 | 1 | 1.1045 | 0.023340 | −0.020188 | 0.066868 | 0.293284 |
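
The Wald statistics, confidence limits, and p-values in the inspection time table above are mutually consistent under a standard reading: the standard error is recoverable from the half-width of the symmetric 95% interval, the Wald statistic is the squared ratio of estimate to standard error, and the p-value is the upper tail of a chi-square distribution with one degree of freedom. This reading is an assumption checked against the cleanliness and expertise rows, not a description of the authors' software, and the function names are hypothetical.

```python
import math

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal


def wald_from_ci(estimate, lower, upper):
    """Wald chi-square recovered from an estimate and its symmetric 95% CI."""
    se = (upper - lower) / (2 * Z_95)
    return (estimate / se) ** 2


def p_from_wald(wald):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(wald / 2))


# Cleanliness row (Dirty vs. Clean) copied from the table above.
wald_clean = wald_from_ci(-0.061458, -0.096971, -0.025945)
p_clean = p_from_wald(11.5048)

# Expertise row (Assembly Operator vs. Engineer) copied from the table above.
p_engineer = p_from_wald(11.1835)
```

The identity `erfc(sqrt(w / 2))` is used so that the example needs only the standard library; it equals the two-sided normal tail probability for the corresponding z-value.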

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aust, J.; Mitrovic, A.; Pons, D. Assessment of the Effect of Cleanliness on the Visual Inspection of Aircraft Engine Blades: An Eye Tracking Study. Sensors 2021, 21, 6135. https://doi.org/10.3390/s21186135