Towards Fully Autonomous UAVs: A Survey

Abstract

1. Introduction

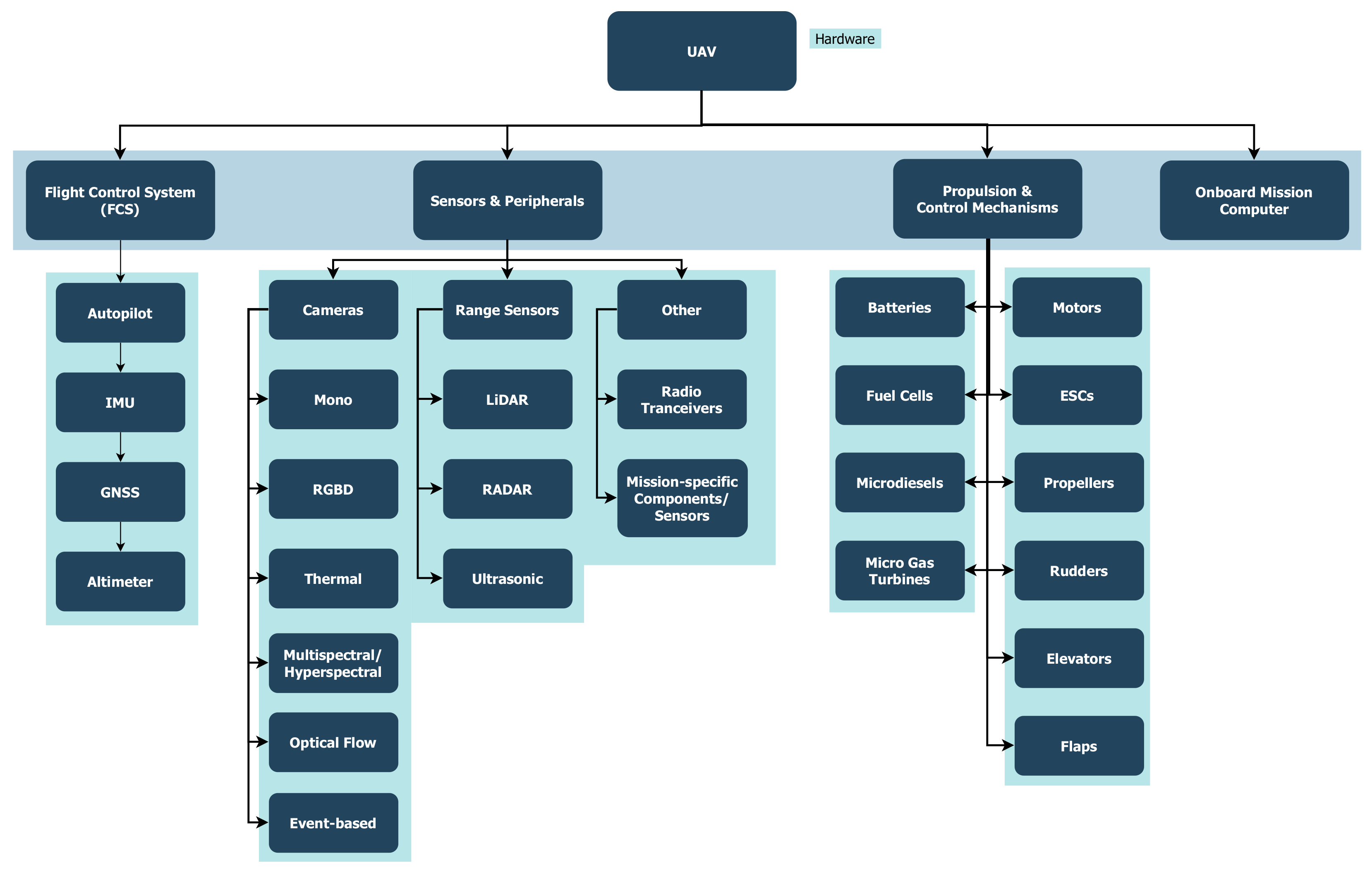

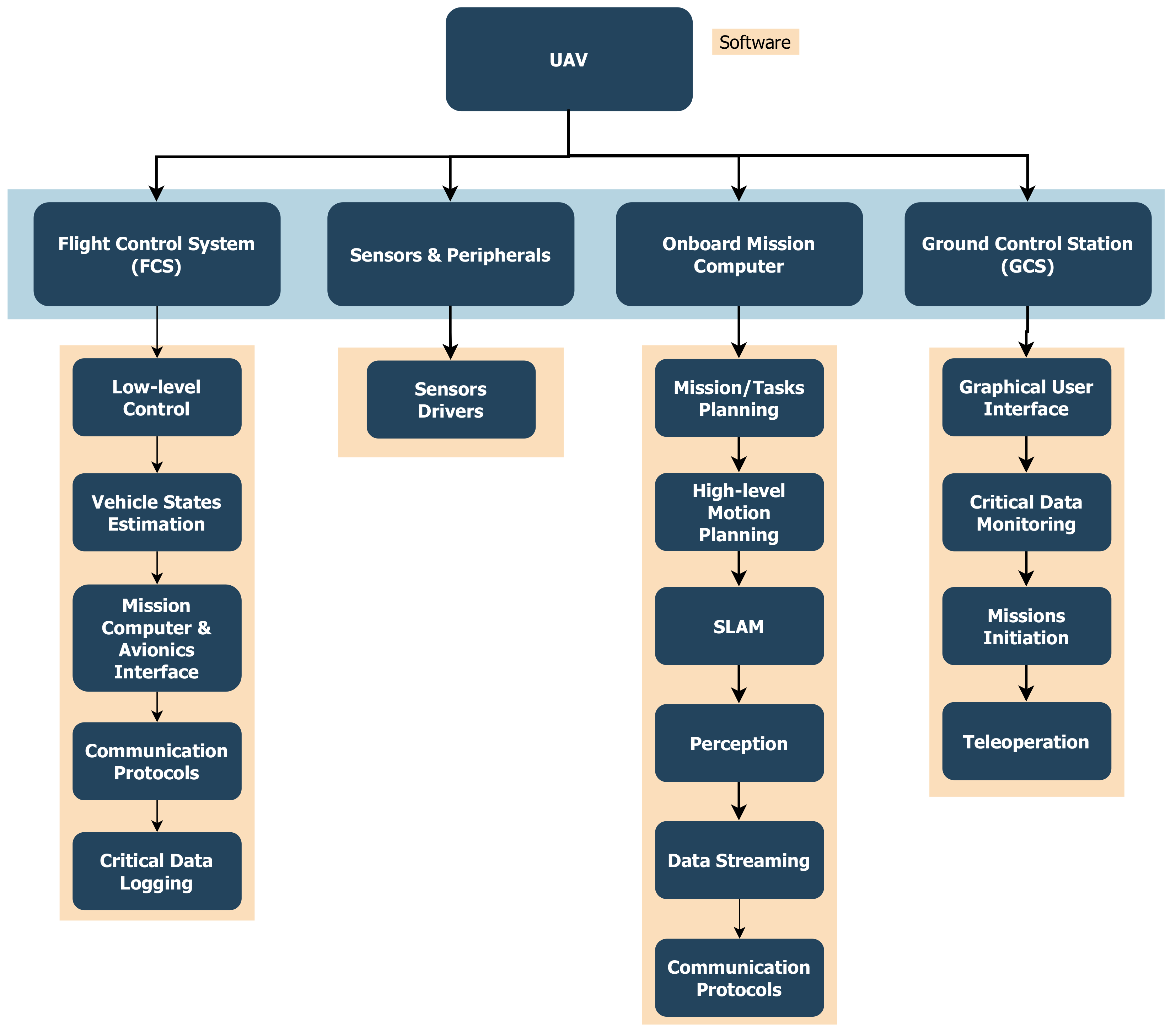

2. UAV Types, Autonomy and System Architectures

2.1. UAV Types

- Micro: less than 250 g;

- Very Small: 0.25–2 kg;

- Small: 2–25 kg;

- Medium: 25–150 kg;

- Large: more than 150 kg.
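
The weight classes above can be expressed as a simple lookup. The following is a minimal sketch, not a normative definition: the function name `classify_uav_by_mass` is hypothetical, and the handling of the 2 kg and 25 kg boundaries (assigned here to the heavier class) is an assumption, since the list does not say which class a boundary value belongs to.

```python
def classify_uav_by_mass(mass_kg: float) -> str:
    """Return the weight class for a UAV mass given in kilograms."""
    if mass_kg <= 0:
        raise ValueError("mass must be positive")
    if mass_kg < 0.25:   # "less than 250 g"
        return "Micro"
    if mass_kg < 2:      # assumption: exactly 2 kg counts as Small
        return "Very Small"
    if mass_kg < 25:     # assumption: exactly 25 kg counts as Medium
        return "Small"
    if mass_kg <= 150:   # "more than 150 kg" is Large, so 150 kg is Medium
        return "Medium"
    return "Large"
```

For example, `classify_uav_by_mass(1.5)` falls in the "Very Small" class under these assumptions.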

2.2. Autonomy Levels

- Fully autonomous: The UAV carries out a delegated task or mission without human interaction; all decisions are made onboard from sensor observations, adapting to operational and environmental changes.

- Semi-autonomous: A human operator is needed for high-level mission planning and for intervention during flight when the UAV cannot make a required decision. The vehicle operates autonomously between these interactions. For example, an operator can provide a list of waypoints, and the vehicle moves safely towards them using its obstacle avoidance capability.

- Teleoperated: A remote operator relies on feedback from onboard sensors to move the vehicle, either by sending control commands directly or by sending intermediate goals; the vehicle has no obstacle avoidance capability. This mode can be used in Beyond-Line-of-Sight (BLOS) applications.

- Remotely controlled: A remote pilot manually controls the UAV without sensor feedback; this mode can be used in Line-of-Sight (LOS) applications.
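
As a rough illustration, the four levels can be encoded as an ordered enumeration with two derived properties. This is only a sketch: the names `AutonomyLevel`, `needs_operator`, and `avoids_obstacles_onboard` are hypothetical, the numeric ordering is an assumption, and treating the remotely controlled level as having no onboard obstacle avoidance is inferred rather than stated in the list.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    # Assumption: levels are ordered from least to most autonomous.
    REMOTELY_CONTROLLED = 0
    TELEOPERATED = 1
    SEMI_AUTONOMOUS = 2
    FULLY_AUTONOMOUS = 3


def needs_operator(level: AutonomyLevel) -> bool:
    """Every level except fully autonomous involves a human operator."""
    return level < AutonomyLevel.FULLY_AUTONOMOUS


def avoids_obstacles_onboard(level: AutonomyLevel) -> bool:
    """Obstacle avoidance is described only for the two highest levels.

    Assumption: the remotely controlled level has none either.
    """
    return level >= AutonomyLevel.SEMI_AUTONOMOUS
```

Under this encoding, a teleoperated vehicle needs an operator and does not avoid obstacles by itself, whereas a semi-autonomous one needs an operator but handles obstacle avoidance onboard.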

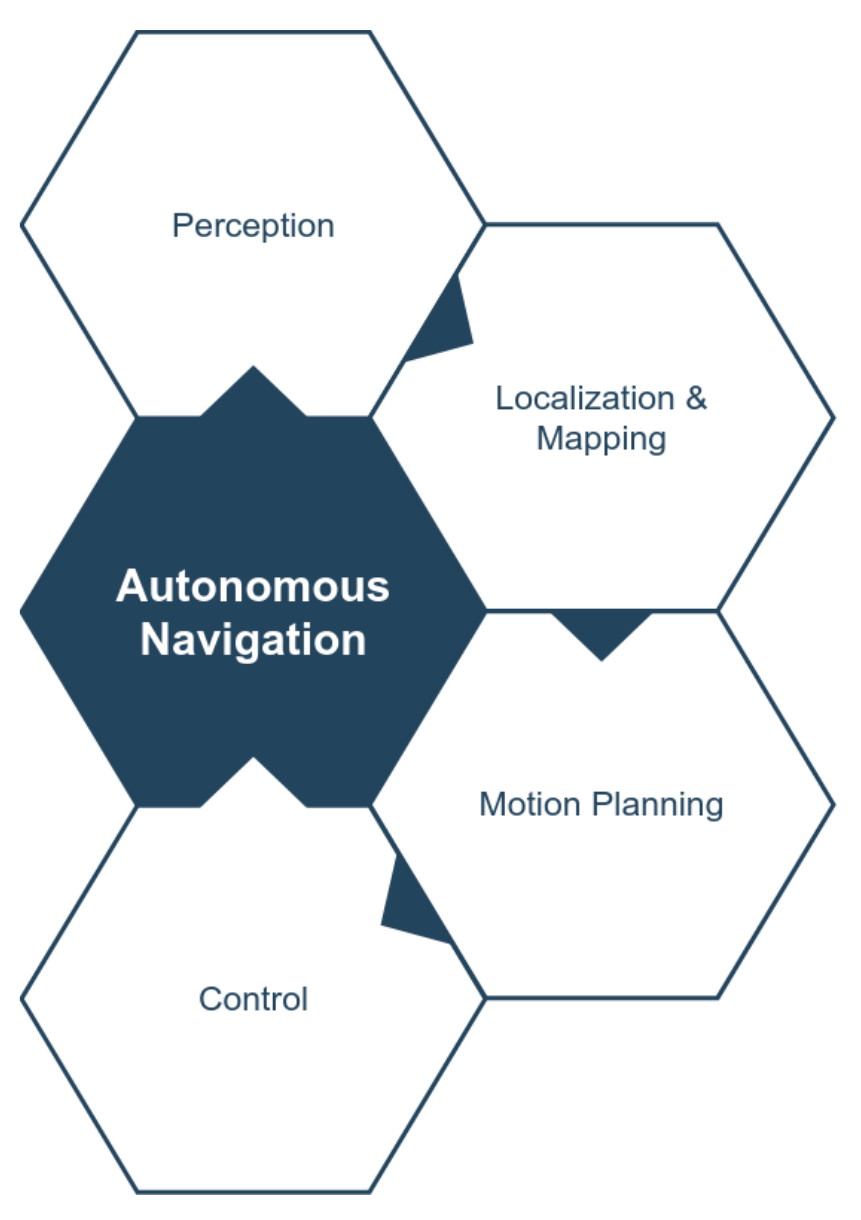

2.3. Towards Fully Autonomous Operations

- Perception;

- Localization and Mapping;

- Motion Planning and Obstacle Avoidance;

- Control.

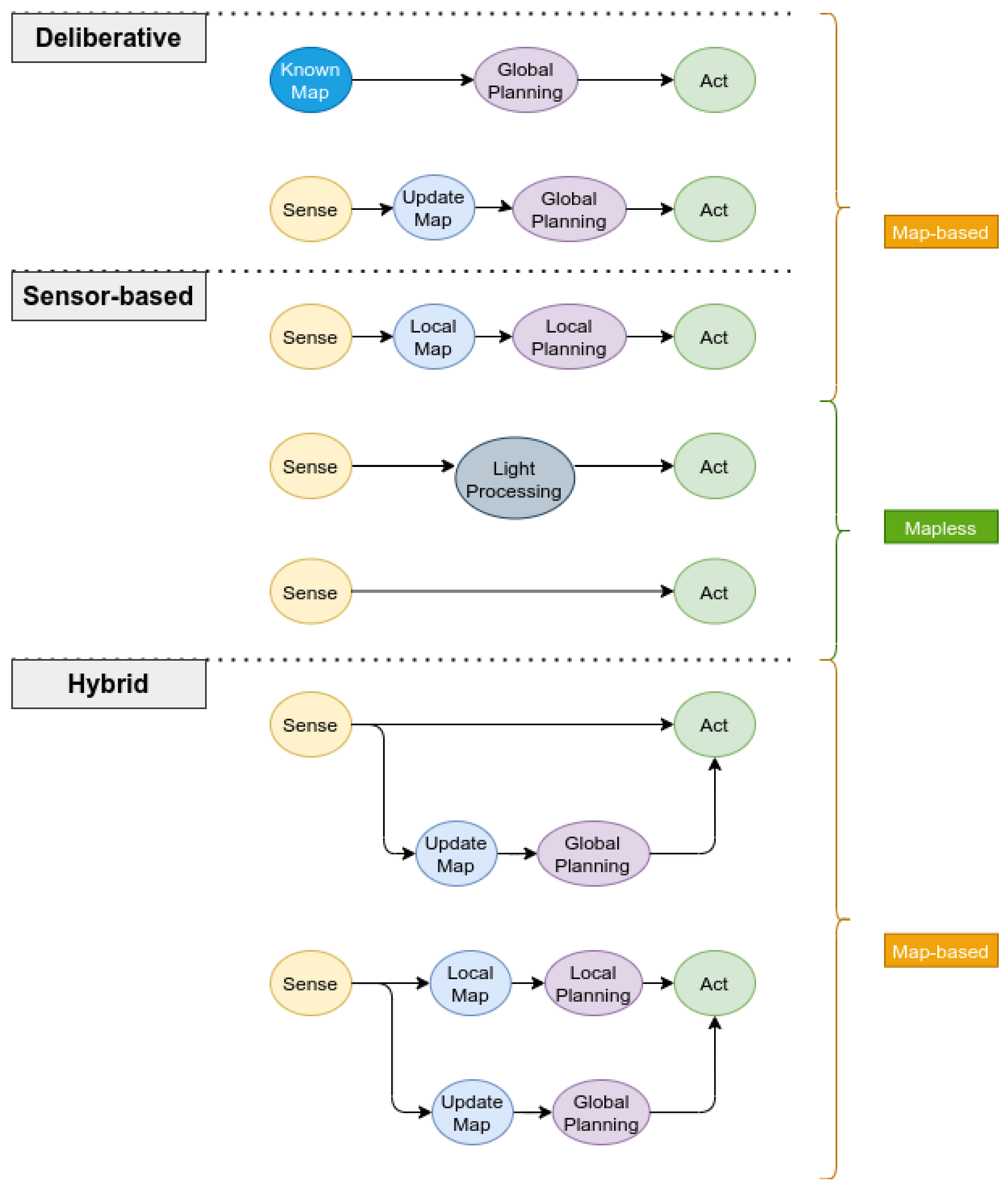

3. Navigation Techniques

3.1. Navigation Paradigms

- Search-based methods (e.g., Dijkstra, etc.);

- Potential field methods (e.g., navigation function, wavefront planner, etc.);

- Geometric methods (e.g., cell decomposition, generalized Voronoi diagrams, visibility graphs, etc.);

- Sampling-based methods (e.g., PRM, RRT, RRT*, FMT, BIT, etc.);

- Optimization-based methods (e.g., PSO, genetic algorithms, etc.).
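
To make the search-based family concrete, the sketch below runs Dijkstra's algorithm on a small 2D occupancy grid. It is an illustrative toy, not a method taken from the surveyed works: the function name `dijkstra_grid`, the 4-connected neighbourhood, the unit step cost, and the grid encoding (0 = free, 1 = obstacle) are all assumptions chosen for brevity. A UAV planner would typically work in 3D and account for vehicle size and dynamics.

```python
import heapq


def dijkstra_grid(grid, start, goal):
    """Return the shortest 4-connected path from start to goal, or None.

    grid is a list of rows; 0 marks a free cell and 1 marks an obstacle.
    start and goal are (row, col) tuples.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}          # best known cost to each visited cell
    parent = {}                # back-pointers for path reconstruction
    frontier = [(0, start)]    # min-heap ordered by cost
    while frontier:
        d, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if d > dist[cell]:
            continue           # stale heap entry, a cheaper one was processed
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nd = d + 1     # unit cost per step (assumption)
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = cell
                    heapq.heappush(frontier, (nd, (nr, nc)))
    if goal not in dist:
        return None            # goal is unreachable
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path


grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
path = dijkstra_grid(grid, (0, 0), (2, 0))
```

In this example the wall in the middle row leaves a single corridor on the right, so the returned path goes around it. Replacing the plain cost `d` in the heap with cost plus a heuristic estimate of the remaining distance would turn the same loop into a heuristic search.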

3.2. Map-Based vs. Mapless Methods

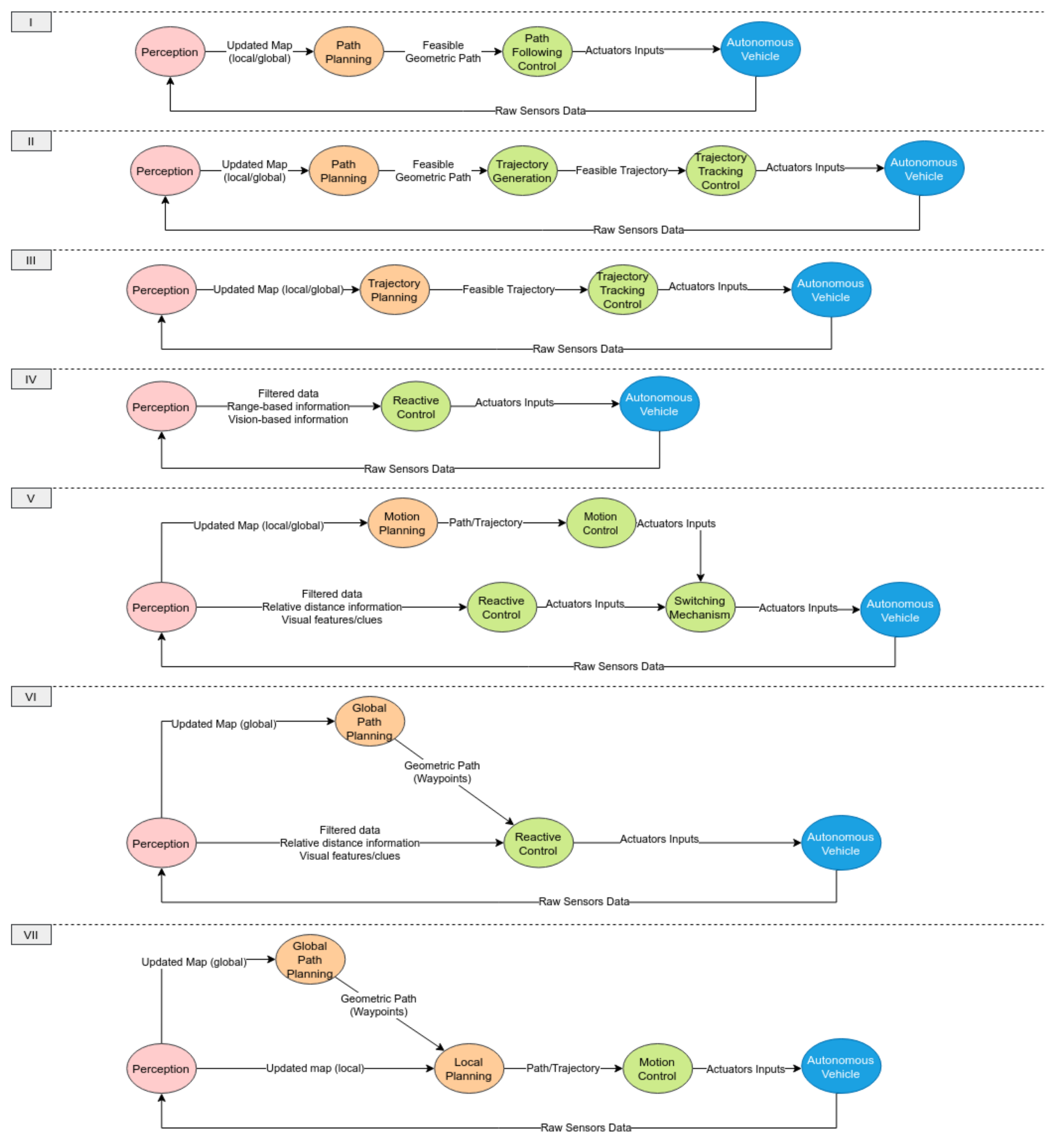

3.3. Overall Navigation Control Structure

3.4. Local Path Planning

3.5. Local Trajectory Planning

3.6. Reactive Methods

4. UAV Modeling and Control

4.1. Modeling

4.2. Low-Level Control

5. Simultaneous Localization and Mapping (SLAM)

6. Summary of Recent Developments

7. Open-Source Projects

8. Research Challenges

8.1. UAV Applications

8.1.1. Precision Agriculture

8.1.2. Search and Rescue

8.1.3. Animal Control and Wildlife Monitoring

8.1.4. Weather Forecast

8.1.5. Construction

8.1.6. Oil and Gas

8.1.7. Other

8.2. Multi-UAV and Networked Systems

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


- Padhy, R.P.; Verma, S.; Ahmad, S.; Choudhury, S.K.; Sa, P.K. Deep neural network for autonomous UAV navigation in indoor corridor environments. Procedia Comput. Sci. 2018, 133, 643–650. [Google Scholar] [CrossRef]

- Dionisio-Ortega, S.; Rojas-Perez, L.O.; Martinez-Carranza, J.; Cruz-Vega, I. A deep learning approach towards autonomous flight in forest environments. In Proceedings of the 2018 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 1–23 February 2018; pp. 139–144. [Google Scholar]

- Dai, X.; Mao, Y.; Huang, T.; Qin, N.; Huang, D.; Li, Y. Automatic obstacle avoidance of quadrotor UAV via CNN-based learning. Neurocomputing 2020, 402, 346–358. [Google Scholar] [CrossRef]

- Back, S.; Cho, G.; Oh, J.; Tran, X.T.; Oh, H. Autonomous UAV trail navigation with obstacle avoidance using deep neural networks. J. Intell. Robot. Syst. 2020, 100, 1195–1211. [Google Scholar] [CrossRef]

- Lee, H.; Ho, H.; Zhou, Y. Deep Learning-based Monocular Obstacle Avoidance for Unmanned Aerial Vehicle Navigation in Tree Plantations. J. Intell. Robot. Syst. 2021, 101, 1–18. [Google Scholar] [CrossRef]

- Yang, X.; Chen, J.; Dang, Y.; Luo, H.; Tang, Y.; Liao, C.; Chen, P.; Cheng, K.T. Fast Depth Prediction and Obstacle Avoidance on a Monocular Drone Using Probabilistic Convolutional Neural Network. IEEE Trans. Intell. Transp. Syst. 2019, 22, 156–167. [Google Scholar] [CrossRef]

- Wang, D.; Li, W.; Liu, X.; Li, N.; Zhang, C. UAV environmental perception and autonomous obstacle avoidance: A deep learning and depth camera combined solution. Comput. Electron. Agric. 2020, 175, 105523. [Google Scholar] [CrossRef]

- Sanket, N.J.; Parameshwara, C.M.; Singh, C.D.; Kuruttukulam, A.V.; Fermüller, C.; Scaramuzza, D.; Aloimonos, Y. EVDodgeNet: Deep dynamic obstacle dodging with event cameras. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Virtual, 31 May–15 June 2020; pp. 10651–10657. [Google Scholar]

- Hamel, T.; Mahony, R.; Lozano, R.; Ostrowski, J. Dynamic modelling and configuration stabilization for an X4-flyer. IFAC Proc. Vol. 2002, 35, 217–222. [Google Scholar] [CrossRef]

- Faessler, M.; Franchi, A.; Scaramuzza, D. Differential flatness of quadrotor dynamics subject to rotor drag for accurate tracking of high-speed trajectories. IEEE Robot. Autom. Lett. 2017, 3, 620–626. [Google Scholar] [CrossRef] [Green Version]

- Lesprier, J.; Biannic, J.M.; Roos, C. Modeling and robust nonlinear control of a fixed-wing UAV. In Proceedings of the 2015 IEEE Conference on Control Applications (CCA), Sydney, NSW, Australia, 21–23 September 2015; pp. 1334–1339. [Google Scholar]

- Foehn, P.; Falanga, D.; Kuppuswamy, N.; Tedrake, R.; Scaramuzza, D. Fast Trajectory Optimization for Agile Quadrotor Maneuvers with a Cable-Suspended Payload; Robotics: Science and Systems Foundation: Cambridge, MA, USA, 2017. [Google Scholar]

- Tang, S.; Wüest, V.; Kumar, V. Aggressive flight with suspended payloads using vision-based control. IEEE Robot. Autom. Lett. 2018, 3, 1152–1159. [Google Scholar] [CrossRef]

- Bangura, M.; Mahony, R. Real-time model predictive control for quadrotors. IFAC Proc. Vol. 2014, 47, 11773–11780. [Google Scholar] [CrossRef]

- Yamasaki, T.; Takano, H.; Bab, Y. Robust Path-Following for UAV Using Pure Pursuit Guidance; IntechOpen: London, UK, 2009; pp. 671–690. [Google Scholar] [CrossRef] [Green Version]

- Zheng, E.H.; Xiong, J.J.; Luo, J.L. Second order sliding mode control for a quadrotor UAV. ISA Trans. 2014, 53, 1350–1356. [Google Scholar] [CrossRef] [PubMed]

- Ambrosino, G.; Ariola, M.; Ciniglio, U.; Corraro, F.; De Lellis, E.; Pironti, A. Path generation and tracking in 3-D for UAVs. IEEE Trans. Control Syst. Technol. 2009, 17, 980–988. [Google Scholar] [CrossRef]

- Yang, K.; Kang, Y.; Sukkarieh, S. Adaptive nonlinear model predictive path-following control for a fixed-wing unmanned aerial vehicle. Int. J. Control. Autom. Syst. 2013, 11, 65–74. [Google Scholar] [CrossRef]

- Bicego, D.; Mazzetto, J.; Carli, R.; Farina, M.; Franchi, A. Nonlinear model predictive control with enhanced actuator model for multi-rotor aerial vehicles with generic designs. J. Intell. Robot. Syst. 2020, 100, 1213–1247. [Google Scholar] [CrossRef]

- Li, B.; Zhou, W.; Sun, J.; Wen, C.Y.; Chen, C.K. Development of model predictive controller for a Tail-Sitter VTOL UAV in hover flight. Sensors 2018, 18, 2859. [Google Scholar] [CrossRef] [Green Version]

- Kamel, M.; Burri, M.; Siegwart, R. Linear vs nonlinear MPC for trajectory tracking applied to rotary wing micro aerial vehicles. IFAC-PapersOnLine 2017, 50, 3463–3469. [Google Scholar] [CrossRef]

- Mellinger, D.; Michael, N.; Kumar, V. Trajectory generation and control for precise aggressive maneuvers with quadrotors. Int. J. Robot. Res. 2012, 31, 664–674. [Google Scholar] [CrossRef]

- Bry, A.; Richter, C.; Bachrach, A.; Roy, N. Aggressive flight of fixed-wing and quadrotor aircraft in dense indoor environments. Int. J. Robot. Res. 2015, 34, 969–1002. [Google Scholar] [CrossRef]

- Loianno, G.; Brunner, C.; McGrath, G.; Kumar, V. Estimation, control, and planning for aggressive flight with a small quadrotor with a single camera and IMU. IEEE Robot. Autom. Lett. 2016, 2, 404–411. [Google Scholar] [CrossRef]

- Michael, N.; Fink, J.; Kumar, V. Cooperative manipulation and transportation with aerial robots. Auton. Robot. 2011, 30, 73–86. [Google Scholar] [CrossRef] [Green Version]

- Fink, J.; Michael, N.; Kim, S.; Kumar, V. Planning and control for cooperative manipulation and transportation with aerial robots. Int. J. Robot. Res. 2011, 30, 324–334. [Google Scholar] [CrossRef]

- Sreenath, K.; Kumar, V. Dynamics, control and planning for cooperative manipulation of payloads suspended by cables from multiple quadrotor robots. rn 2013, 1, r3. [Google Scholar]

- Lee, T.; Leok, M.; McClamroch, N.H. Nonlinear robust tracking control of a quadrotor UAV on SE (3). Asian J. Control 2013, 15, 391–408. [Google Scholar] [CrossRef] [Green Version]

- Omari, S.; Hua, M.D.; Ducard, G.; Hamel, T. Nonlinear control of VTOL UAVs incorporating flapping dynamics. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2419–2425. [Google Scholar]

- Manjunath, A.; Mehrok, P.; Sharma, R.; Ratnoo, A. Application of virtual target based guidance laws to path following of a quadrotor UAV. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 252–260. [Google Scholar]

- Lee, H.; Kim, H.J. Trajectory tracking control of multirotors from modelling to experiments: A survey. Int. J. Control Autom. Syst. 2017, 15, 281–292. [Google Scholar] [CrossRef]

- Nascimento, T.P.; Saska, M. Position and attitude control of multi-rotor aerial vehicles: A survey. Annu. Rev. Control 2019, 48, 129–146. [Google Scholar] [CrossRef]

- Sujit, P.B.; Saripalli, S.; Sousa, J.B. An evaluation of UAV path following algorithms. In Proceedings of the 2013 European Control Conference (ECC), Zurich, Switzerland, 17–19 July 2013; pp. 3332–3337. [Google Scholar] [CrossRef]

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332. [Google Scholar] [CrossRef] [Green Version]

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 1–11. [Google Scholar] [CrossRef]

- Lu, Y.; Xue, Z.; Xia, G.S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32. [Google Scholar] [CrossRef] [Green Version]

- Zhang, J.; Singh, S. LOAM: Lidar Odometry and Mapping in Real-time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 13–15 July 2015; Volume 2. [Google Scholar]

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278. [Google Scholar]

- Koide, K.; Miura, J.; Menegatti, E. A portable 3D lidar-based system for long-term and wide-area people behavior measurement. IEEE Trans. Hum. Mach. Syst. 2018, 16, 1729881419841532. [Google Scholar]

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765. [Google Scholar]

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Daniela, R. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, Nevada, USA, 25–29 October 2020; pp. 5135–5142. [Google Scholar]

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234. [Google Scholar]

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef] [Green Version]

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef] [Green Version]

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In The 13th European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 834–849. [Google Scholar]

- Engel, J.; Koltun, V.; Cremers, D. Direct Sparse Odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625. [Google Scholar] [CrossRef] [PubMed]

- Whelan, T.; Kaess, M.; Johannsson, H.; Fallon, M.; Leonard, J.J.; McDonald, J. Real-time large-scale dense RGB-D SLAM with volumetric fusion. Int. J. Robot. Res. 2015, 34, 598–626. [Google Scholar] [CrossRef] [Green Version]

- Naudet-Collette, S.; Melbouci, K.; Gay-Bellile, V.; Ait-Aider, O.; Dhome, M. Constrained RGBD-SLAM. Robotica 2021, 39, 277–290. [Google Scholar] [CrossRef]

- Holz, D.; Nieuwenhuisen, M.; Droeschel, D.; Schreiber, M.; Behnke, S. Towards Multimodal Omnidirectional Obstacle Detection for Autonomous Unmanned Aerial Vehicles. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-1/W2, 201–206. [Google Scholar] [CrossRef] [Green Version]

- Hoy, M.; Matveev, A.S.; Garratt, M.; Savkin, A.V. Collision-free navigation of an autonomous unmanned helicopter in unknown urban environments: Sliding mode and MPC approaches. Robotica 2012, 30, 537–550. [Google Scholar] [CrossRef]

- Yang, K.; Keat Gan, S.; Sukkarieh, S. A Gaussian process-based RRT planner for the exploration of an unknown and cluttered environment with a UAV. Adv. Robot. 2013, 27, 431–443. [Google Scholar] [CrossRef]

- Oleynikova, H.; Taylor, Z.; Siegwart, R.; Nieto, J. Safe Local Exploration for Replanning in Cluttered Unknown Environments for Microaerial Vehicles. IEEE Robot. Autom. Lett. 2018, 3, 1474–1481. [Google Scholar] [CrossRef] [Green Version]

- Meera, A.A.; Popović, M.; Millane, A.; Siegwart, R. Obstacle-aware adaptive informative path planning for UAV-based target search. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 718–724. [Google Scholar]

- Blösch, M.; Weiss, S.; Scaramuzza, D.; Siegwart, R. Vision based MAV navigation in unknown and unstructured environments. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 21–28. [Google Scholar] [CrossRef] [Green Version]

- Shen, S.; Michael, N.; Kumar, V. Autonomous multi-floor indoor navigation with a computationally constrained MAV. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 20–25. [Google Scholar]

- Perez-Grau, F.J.; Ragel, R.; Caballero, F.; Viguria, A.; Ollero, A. An architecture for robust UAV navigation in GPS-denied areas. J. Field Robot. 2018, 35, 121–145. [Google Scholar] [CrossRef]

- Bachrach, A.; Winter, A.D.; He, R.; Hemann, G.; Prentice, S.; Roy, N. RANGE—Robust autonomous navigation in GPS-denied environments. J. Field Robot. 2011, 28, 644–666. [Google Scholar] [CrossRef]

- Oleynikova, H.; Lanegger, C.; Taylor, Z.; Pantic, M.; Millane, A.; Siegwart, R.; Nieto, J. An open-source system for vision-based micro-aerial vehicle mapping, planning, and flight in cluttered environments. J. Field Robot. 2020, 37, 642–666. [Google Scholar] [CrossRef]

- Palossi, D.; Conti, F.; Benini, L. An open source and open hardware deep learning-powered visual navigation engine for autonomous nano-UAVs. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini Island, Greece, 29–31 May 2019; pp. 604–611. [Google Scholar]

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast Semi-Direct Monocular Visual Odometry. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014. [Google Scholar]

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2019, 36, 416–446. [Google Scholar] [CrossRef]

- Labbé, M.; Michaud, F. Long-term online multi-session graph-based SPLAM with memory management. Auton. Robot. 2018, 42, 1133–1150. [Google Scholar] [CrossRef]

- Whelan, T.; Salas-Moreno, R.F.; Glocker, B.; Davison, A.J.; Leutenegger, S. ElasticFusion: Real-time dense SLAM and light source estimation. Int. J. Robot. Res. 2016, 35, 1697–1716. [Google Scholar] [CrossRef] [Green Version]

- Zhou, B.; Zhang, Y.; Chen, X.; Shen, S. FUEL: Fast UAV Exploration Using Incremental Frontier Structure and Hierarchical Planning. IEEE Robot. Autom. Lett. 2021, 6, 779–786. [Google Scholar] [CrossRef]

- Zhou, B.; Gao, F.; Pan, J.; Shen, S. Robust real-time UAV replanning using guided gradient-based optimization and topological paths. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1208–1214. [Google Scholar]

- Pham, H.; Pham, Q. A new approach to time-optimal path parameterization based on reachability analysis. IEEE Trans. Robot. 2018, 34, 645–659. [Google Scholar] [CrossRef] [Green Version]

- Sundermeyer, M.; Marton, Z.C.; Durner, M.; Brucker, M.; Triebel, R. Implicit 3D Orientation Learning for 6D Object Detection from RGB Images. In Proceedings of the The European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Wada, K.; Sucar, E.; James, S.; Lenton, D.; Davison, A.J. Morefusion: Multi-object reasoning for 6D pose estimation from volumetric fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14540–14549. [Google Scholar]

- Zampogiannis, K.; Fermuller, C.; Aloimonos, Y. cilantro: A Lean, Versatile, and Efficient Library for Point Cloud Data Processing. In Proceedings of the 26th ACM International Conference on Multimedia, MM’18, Seoul, Republic of Korea, 22–26 October 2018; ACM: New York, NY, USA, 2018; pp. 1364–1367. [Google Scholar] [CrossRef] [Green Version]

- Furrer, F.; Burri, M.; Achtelik, M.; Siegwart, R. RotorS—A Modular Gazebo MAV Simulator Framework. In Robot Operating System (ROS): The Complete Reference; Springer International Publishing: Cham, Switzerland, 2016; Volume 1, pp. 595–625. [Google Scholar] [CrossRef]

- Wächter, A.; Biegler, L.T. On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Program. 2006, 106, 25–57. [Google Scholar] [CrossRef]

- Winkler, A.W. Ifopt–A Modern, Light-Weight, Eigen-Based C++ Interface to Nonlinear Programming Solvers Ipopt and Snopt. Available online: https://zenodo.org/record/1135085 (accessed on 15 September 2021).

- Seelan, S.K.; Laguette, S.; Casady, G.M.; Seielstad, G.A. Remote sensing applications for precision agriculture: A learning community approach. Remote Sens. Environ. 2003, 88, 157–169. [Google Scholar] [CrossRef]

- Xiang, H.; Tian, L. Development of a low-cost agricultural remote sensing system based on an autonomous unmanned aerial vehicle (UAV). Biosyst. Eng. 2011, 108, 174–190. [Google Scholar] [CrossRef]

- Alsalam, B.H.Y.; Morton, K.; Campbell, D.; Gonzalez, F. Autonomous UAV with vision based on-board decision making for remote sensing and precision agriculture. In Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017; pp. 1–12. [Google Scholar]

- Albani, D.; Nardi, D.; Trianni, V. Field coverage and weed mapping by UAV swarms. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4319–4325. [Google Scholar]

- Albani, D.; IJsselmuiden, J.; Haken, R.; Trianni, V. Monitoring and mapping with robot swarms for agricultural applications. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6. [Google Scholar]

- Gonzalez-de Santos, P.; Ribeiro, A.; Fernandez-Quintanilla, C.; Lopez-Granados, F.; Brandstoetter, M.; Tomic, S.; Pedrazzi, S.; Peruzzi, A.; Pajares, G.; Kaplanis, G.; et al. Fleets of robots for environmentally-safe pest control in agriculture. Precis. Agric. 2017, 18, 574–614. [Google Scholar] [CrossRef]

- Xue, X.; Lan, Y.; Sun, Z.; Chang, C.; Hoffmann, W.C. Develop an unmanned aerial vehicle based automatic aerial spraying system. Comput. Electron. Agric. 2016, 128, 58–66. [Google Scholar] [CrossRef]

- Slaughter, D.C.; Giles, D.K.; Downey, D. Autonomous robotic weed control systems: A review. Comput. Electron. Agric. 2008, 61, 63–78. [Google Scholar] [CrossRef]

- Lottes, P.; Khanna, R.; Pfeifer, J.; Siegwart, R.; Stachniss, C. UAV-based crop and weed classification for smart farming. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3024–3031. [Google Scholar]

- de Castro, A.; Torres-Sánchez, J.; Peña, J.; Jiménez-Brenes, F.; Csillik, O.; López-Granados, F. An automatic random forest-OBIA algorithm for early weed mapping between and within crop rows using UAV imagery. Remote Sens. 2018, 10, 285. [Google Scholar] [CrossRef] [Green Version]

- Van Henten, E.J.; Hemming, J.; Van Tuijl, B.A.J.; Kornet, J.G.; Meuleman, J.; Bontsema, J.; Van Os, E.A. An autonomous robot for harvesting cucumbers in greenhouses. Auton. Robot. 2002, 13, 241–258. [Google Scholar] [CrossRef]

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712. [Google Scholar] [CrossRef]

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148. [Google Scholar] [CrossRef]

- De Cubber, G.; Doroftei, D.; Serrano, D.; Chintamani, K.; Sabino, R.; Ourevitch, S. The EU-ICARUS project: Developing assistive robotic tools for search and rescue operations. In Proceedings of the 2013 IEEE international symposium on safety, security, and rescue robotics (SSRR), Linkoping, Sweden, 21–26 October 2013; pp. 1–4. [Google Scholar]

- Scherer, J.; Yahyanejad, S.; Hayat, S.; Yanmaz, E.; Andre, T.; Khan, A.; Vukadinovic, V.; Bettstetter, C.; Hellwagner, H.; Rinner, B. An autonomous multi-UAV system for search and rescue. In Proceedings of the First Workshop on Micro Aerial Vehicle Networks, Systems, and Applications for Civilian Use, Florence, Italy, 18 May 2015; pp. 33–38. [Google Scholar]

- Qi, J.; Song, D.; Shang, H.; Wang, N.; Hua, C.; Wu, C.; Qi, X.; Han, J. Search and rescue rotary-wing UAV and its application to the lushan ms 7.0 earthquake. J. Field Robot. 2016, 33, 290–321. [Google Scholar] [CrossRef]

- Silvagni, M.; Tonoli, A.; Zenerino, E.; Chiaberge, M. Multipurpose UAV for search and rescue operations in mountain avalanche events. Geomat. Nat. Hazards Risk 2017, 8, 18–33. [Google Scholar] [CrossRef] [Green Version]

- Tomic, T.; Schmid, K.; Lutz, P.; Domel, A.; Kassecker, M.; Mair, E.; Grixa, I.L.; Ruess, F.; Suppa, M.; Burschka, D. Toward a fully autonomous UAV: Research platform for indoor and outdoor urban search and rescue. IEEE Robot. Autom. Mag. 2012, 19, 46–56. [Google Scholar] [CrossRef] [Green Version]

- Verykokou, S.; Ioannidis, C.; Athanasiou, G.; Doulamis, N.; Amditis, A. 3D reconstruction of disaster scenes for urban search and rescue. Multimed. Tools Appl. 2018, 77, 9691–9717. [Google Scholar] [CrossRef]

- Mittal, M.; Mohan, R.; Burgard, W.; Valada, A. Vision-based autonomous UAV navigation and landing for urban search and rescue. arXiv 2019, arXiv:1906.01304. [Google Scholar]

- Dang, T.; Mascarich, F.; Khattak, S.; Nguyen, H.; Nguyen, H.; Hirsh, S.; Reinhart, R.; Papachristos, C.; Alexis, K. Autonomous search for underground mine rescue using aerial robots. In Proceedings of the 2020 IEEE Aerospace Conference, Big Sky, MT, USA, 7–14 March 2020; pp. 1–8. [Google Scholar]

- Zimroz, P.; Trybała, P.; Wróblewski, A.; Góralczyk, M.; Szrek, J.; Wójcik, A.; Zimroz, R. Application of UAV in Search and Rescue Actions in Underground Mine—A Specific Sound Detection in Noisy Acoustic Signal. Energies 2021, 14, 3725. [Google Scholar] [CrossRef]

- Yeong, S.; King, L.; Dol, S. A review on marine search and rescue operations using unmanned aerial vehicles. Int. J. Mar. Environ. Sci. 2015, 9, 396–399. [Google Scholar]

- Xiao, X.; Dufek, J.; Woodbury, T.; Murphy, R. UAV assisted USV visual navigation for marine mass casualty incident response. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 6105–6110. [Google Scholar]

- Lygouras, E.; Santavas, N.; Taitzoglou, A.; Tarchanidis, K.; Mitropoulos, A.; Gasteratos, A. Unsupervised human detection with an embedded vision system on a fully autonomous UAV for search and rescue operations. Sensors 2019, 19, 3542. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Qingqing, L.; Taipalmaa, J.; Queralta, J.P.; Gia, T.N.; Gabbouj, M.; Tenhunen, H.; Raitoharju, J.; Westerlund, T. Towards active vision with UAVs in marine search and rescue: Analyzing human detection at variable altitudes. In Proceedings of the 2020 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Abu Dhabi, UAE, 4–6 November 2020; pp. 65–70. [Google Scholar]

- Chamoso, P.; Raveane, W.; Parra, V.; González, A. UAVs applied to the counting and monitoring of animals. In Ambient Intelligence-Software and Applications; Springer: Cham, Switzerland, 2014; pp. 71–80. [Google Scholar]

- Xu, B.; Wang, W.; Falzon, G.; Kwan, P.; Guo, L.; Sun, Z.; Li, C. Livestock classification and counting in quadcopter aerial images using Mask R-CNN. Int. J. Remote Sens. 2020, 41, 8121–8142. [Google Scholar] [CrossRef] [Green Version]

- Gonzalez, L.F.; Montes, G.A.; Puig, E.; Johnson, S.; Mengersen, K.; Gaston, K.J. Unmanned aerial vehicles (UAVs) and artificial intelligence revolutionizing wildlife monitoring and conservation. Sensors 2016, 16, 97. [Google Scholar] [CrossRef] [Green Version]

- Hodgson, J.C.; Baylis, S.M.; Mott, R.; Herrod, A.; Clarke, R.H. Precision wildlife monitoring using unmanned aerial vehicles. Sci. Rep. 2016, 6, 331. [Google Scholar] [CrossRef] [Green Version]

- Su, X.; Dong, S.; Liu, S.; Cracknell, A.P.; Zhang, Y.; Wang, X.; Liu, G. Using an unmanned aerial vehicle (UAV) to study wild yak in the highest desert in the world. Int. J. Remote Sens. 2018, 39, 5490–5503. [Google Scholar] [CrossRef]

- Fortuna, J.; Ferreira, F.; Gomes, R.; Ferreira, S.; Sousa, J. Using low cost open source UAVs for marine wild life monitoring-Field Report. IFAC Proc. Vol. 2013, 46, 291–295. [Google Scholar] [CrossRef]

- Paranjape, A.A.; Chung, S.J.; Kim, K.; Shim, D.H. Robotic herding of a flock of birds using an unmanned aerial vehicle. IEEE Trans. Robot. 2018, 34, 901–915. [Google Scholar] [CrossRef] [Green Version]

- Yaxley, K.J.; McIntyre, N.; Park, J.; Healey, J. Sky shepherds: A tale of a UAV and sheep. In Shepherding UxVs for Human-Swarm Teaming: An Artificial Intelligence Approach to Unmanned X Vehicles; Springer: Cham, Switzerland, 2021; pp. 189–206. [Google Scholar]

- Scobie, C.A.; Hugenholtz, C.H. Wildlife monitoring with unmanned aerial vehicles: Quantifying distance to auditory detection. Wildl. Soc. Bull. 2016, 40, 781–785. [Google Scholar] [CrossRef]

- Hodgson, J.C.; Koh, L.P. Best practice for minimising unmanned aerial vehicle disturbance to wildlife in biological field research. Curr. Biol. 2016, 26, R404–R405. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Eheim, C.; Dixon, C.; Argrow, B.; Palo, S. Tornadochaser: A remotely-piloted UAV for in situ meteorological measurements. In Proceedings of the 1st UAV Conference, Portsmouth, VA, USA, 20–23 May 2002; p. 3479. [Google Scholar]

- DeBusk, W. Unmanned aerial vehicle systems for disaster relief: Tornado alley. In Proceedings of the AIAA Infotech@ Aerospace 2010, Atlanta, GA, USA, 20–22 April 2010; p. 3506. [Google Scholar]

- Witte, B.M.; Schlagenhauf, C.; Mullen, J.; Helvey, J.P.; Thamann, M.A.; Bailey, S. Fundamental Turbulence Measurement with Unmanned Aerial Vehicles. In Proceedings of the 8th AIAA Atmospheric and Space Environments Conference, Washington, DC, USA, 13–17 June 2016; p. 3584. [Google Scholar]

- Avery, A.S.; Hathaway, J.D.; Jacob, J.D. Sensor Suite Development for a Weather UAV. In Proceedings of the 7th AIAA Atmospheric and Space Environments Conference, Dallas, TX, USA, 22–26 June 2015; p. 3022. [Google Scholar]

- Zhou, S.; Gheisari, M. Unmanned aerial system applications in construction: A systematic review. In Construction Innovation; Emerald Publishing Limited: Bingley, UK, 2018. [Google Scholar]

- Ham, Y.; Han, K.K.; Lin, J.J.; Golparvar-Fard, M. Visual monitoring of civil infrastructure systems via camera-equipped Unmanned Aerial Vehicles (UAVs): A review of related works. Vis. Eng. 2016, 4, 1–8. [Google Scholar] [CrossRef] [Green Version]

- De Melo, R.R.S.; Costa, D.B.; Álvares, J.S.; Irizarry, J. Applicability of unmanned aerial system (UAS) for safety inspection on construction sites. Saf. Sci. 2017, 98, 174–185. [Google Scholar] [CrossRef]

- Li, Y.; Liu, C. Applications of multirotor drone technologies in construction management. Int. J. Constr. Manag. 2019, 19, 401–412. [Google Scholar] [CrossRef]

- Jacob-Loyola, N.; Rivera, M.L.; Herrera, R.F.; Atencio, E. Unmanned Aerial Vehicles (UAVs) for Physical Progress Monitoring of Construction. Sensors 2021, 21, 4227. [Google Scholar] [CrossRef] [PubMed]

- Nikulin, A.; de Smet, T.S. A UAV-based magnetic survey method to detect and identify orphaned oil and gas wells. Lead. Edge 2019, 38, 447–452. [Google Scholar] [CrossRef]

- Gómez, C.; Green, D.R. Small unmanned airborne systems to support oil and gas pipeline monitoring and mapping. Arab. J. Geosci. 2017, 10, 202. [Google Scholar] [CrossRef]
