Efficient Single-Shot Multi-Object Tracking for Vehicles in Traffic Scenarios

Abstract

1. Introduction

2. Related Works

3. Proposed Method

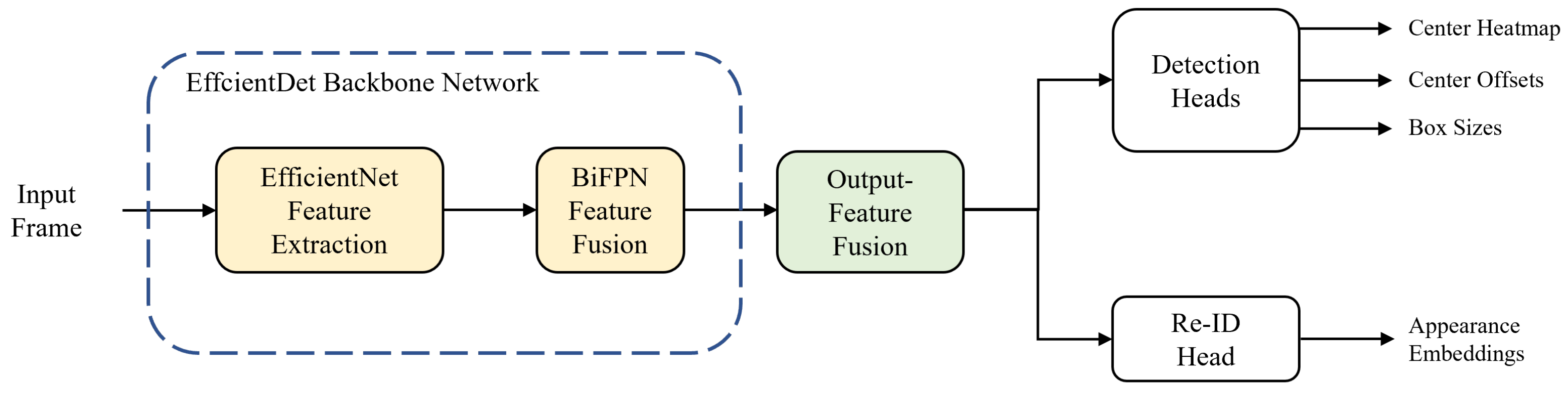

3.1. Backbone Network Architecture

3.2. Output-Feature Fusion

3.3. Prediction Heads

3.4. Online Association

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics

4.3. Implementation Details

5. Results

5.1. Object Detection

5.2. Multi-Object Tracking

5.2.1. CLEAR MOT Metrics

5.2.2. UA-DETRAC Metrics

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

2. Yu, F.; Li, W.; Li, Q.; Liu, Y.; Shi, X.; Yan, J. POI: Multiple object tracking with high performance detection and appearance feature. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 36–42.

3. Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the IEEE International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 3645–3649.

4. Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. arXiv 2019, arXiv:1909.12605.

5. Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. FairMOT: On the fairness of detection and re-identification in multiple object tracking. arXiv 2020, arXiv:2004.01888.

6. Voigtlaender, P.; Krause, M.; Osep, A.; Luiten, J.; Sekar, B.B.G.; Geiger, A.; Leibe, B. MOTS: Multi-object tracking and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7942–7951.

7. Sun, S.; Akhtar, N.; Song, X.; Song, H.; Mian, A.; Shah, M. Simultaneous detection and tracking with motion modelling for multiple object tracking. arXiv 2020, arXiv:2008.08826.

8. Peri, N.; Khorramshahi, P.; Rambhatla, S.S.; Shenoy, V.; Rawat, S.; Chen, J.C.; Chellappa, R. Towards real-time systems for vehicle re-identification, multi-camera tracking, and anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 622–623.

9. Chen, L.; Ai, H.; Zhuang, Z.; Shang, C. Real-time multiple people tracking with deeply learned candidate selection and person re-identification. In Proceedings of the IEEE International Conference on Multimedia and Expo, San Diego, CA, USA, 23–27 July 2018; pp. 1–6.

10. Zhao, D.; Fu, H.; Xiao, L.; Wu, T.; Dai, B. Multi-object tracking with correlation filter for autonomous vehicle. Sensors 2018, 18, 2004.

11. Wen, L.; Du, D.; Cai, Z.; Lei, Z.; Chang, M.C.; Qi, H.; Lim, J.; Yang, M.H.; Lyu, S. UA-DETRAC: A new benchmark and protocol for multi-object detection and tracking. arXiv 2015, arXiv:1511.04136.

12. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

13. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012; pp. 1097–1105.

14. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

15. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

16. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

17. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. arXiv 2014, arXiv:1311.2524.

18. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

19. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

20. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

21. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

22. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

23. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

24. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

25. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

26. Welch, G.; Bishop, G. An Introduction to the Kalman Filter; Department of Computer Science University of North Carolina: Chapel Hill, NC, USA, 1995; pp. 41–95.

27. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

28. Yu, F.; Wang, D.; Shelhamer, E.; Darrell, T. Deep layer aggregation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2403–2412.

29. Felzenszwalb, P.F.; Girshick, R.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

30. Dollár, P.; Appel, R.; Belongie, S.; Perona, P. Fast feature pyramids for object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545.

31. Cai, Z.; Saberian, M.; Vasconcelos, N. Learning complexity-aware cascades for deep pedestrian detection. In Proceedings of the IEEE International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; pp. 3361–3369.

32. Pirsiavash, H.; Ramanan, D.; Fowlkes, C.C. Globally-optimal greedy algorithms for tracking a variable number of objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1201–1208.

33. Andriyenko, A.; Schindler, K. Multi-target tracking by continuous energy minimization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1265–1272.

34. Andriyenko, A.; Schindler, K.; Roth, S. Discrete-continuous optimization for multi-target tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1926–1933.

35. Dicle, C.; Sznaier, M.; Camps, O. The way they move: Tracking multiple targets with similar appearance. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2304–2311.

36. Wen, L.; Li, W.; Yan, J.; Lei, Z.; Yi, D.; Li, S.Z. Multiple target tracking based on undirected hierarchical relation hypergraph. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1282–1289.

37. Bae, S.; Yoon, K. Robust online multi-object tracking based on tracklet confidence and online discriminative appearance learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1218–1225.

38. Bochinski, E.; Senst, T.; Sikora, T. Extending IOU based multi-object tracking by visual information. In Proceedings of the IEEE International Conference on Advanced Video and Signal Based Surveillance, Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

39. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

40. Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A benchmark for multi-object tracking. arXiv 2016, arXiv:1603.00831.

41. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

42. Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. Proc. Mach. Learn. Res. 2019, 97, 6105–6114.

43. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

44. Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045.

45. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

46. Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. MnasNet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2820–2828.

47. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

48. Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 483–499.

49. Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 734–750.

50. Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.

51. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

52. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

53. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

54. Kendall, A.; Gal, Y.; Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7482–7491.

55. Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The CLEAR MOT metrics. EURASIP J. Image Video Process. 2008, 2008, 246309.

56. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 740–755.

57. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

58. Everingham, M.; van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

59. Chu, P.; Ling, H. FAMNet: Joint learning of feature, affinity and multi-dimensional assignment for online multiple object tracking. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6172–6181.

60. Wang, L.; Lu, Y.; Wang, H.; Zheng, Y.; Ye, H.; Xue, X. Evolving boxes for fast vehicle detection. In Proceedings of the IEEE International Conference on Multimedia and Expo, Hong Kong, 10–14 July 2017; pp. 1135–1140.

61. Bochinski, E.; Eiselein, V.; Sikora, T. High-speed tracking-by-detection without using image information. In Proceedings of the IEEE International Conference on Advanced Video and Signal Based Surveillance, Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

62. Sun, S.; Akhtar, N.; Song, H.; Mian, A.; Shah, M. Deep affinity network for multiple object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 104–119.

| Operation | Input Resolution (Width × Height) | # Output Channels | # Layers |
|---|---|---|---|
| Conv, k3×3 | 1024 × 512 | 32 | 1 |
| MBConv1, k3×3 | 512 × 256 | 16 | 1 |
| MBConv6, k3×3 | 512 × 256 | 24 | 2 |
| MBConv6, k5×5 | 256 × 128 | 40 | 2 |
| MBConv6, k3×3 | 128 × 64 | 80 | 3 |
| MBConv6, k5×5 | 64 × 32 | 112 | 3 |
| MBConv6, k5×5 | 64 × 32 | 192 | 4 |
| MBConv6, k3×3 | 32 × 16 | 320 | 1 |
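
The input-resolution column can be reproduced by propagating a 1024 × 512 frame through the stage strides. The table does not list the strides. The sketch below assumes the EfficientNet-B0 pattern from [42]: a stride-2 stem followed by stage strides 1, 2, 2, 2, 1, 2, 1, with only the first layer of each stage strided. `STAGES` and `stage_input_resolutions` are illustrative names. The code tracks tensor sizes only and does not build the network.

```python
from typing import List, Tuple

# (operation, kernel, out_channels, layers, stride). The first four fields come from
# the backbone table; the stride is an ASSUMPTION taken from the EfficientNet-B0 design.
STAGES = [
    ("Conv",    3,  32, 1, 2),
    ("MBConv1", 3,  16, 1, 1),
    ("MBConv6", 3,  24, 2, 2),
    ("MBConv6", 5,  40, 2, 2),
    ("MBConv6", 3,  80, 3, 2),
    ("MBConv6", 5, 112, 3, 1),
    ("MBConv6", 5, 192, 4, 2),
    ("MBConv6", 3, 320, 1, 1),
]


def stage_input_resolutions(width: int, height: int) -> List[Tuple[int, int]]:
    """Return the (width, height) entering each stage; only a stage's first layer is strided."""
    sizes = []
    for _op, _kernel, _channels, _layers, stride in STAGES:
        sizes.append((width, height))
        # Integer division mirrors 'same' padding on even-sized feature maps.
        width, height = width // stride, height // stride
    return sizes


if __name__ == "__main__":
    for (op, k, c, n, s), (w, h) in zip(STAGES, stage_input_resolutions(1024, 512)):
        print(f"{op:8s} k{k}x{k}  in {w:4d} x {h:<4d} -> {c:3d} ch, {n} layer(s), stride {s}")
```

Under these assumptions the last stage produces a 32 × 16 map. This corresponds to an overall output stride of 32 relative to the input frame.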

| Method | Type | Overall | Easy | Medium | Hard | Cloudy | Rainy | Sunny | Night |
|---|---|---|---|---|---|---|---|---|---|
| DPM * [29] | D | 25.74 | 34.55 | 30.33 | 17.68 | 24.82 | 25.59 | 31.84 | 30.95 |
| ACF * [30] | D | 46.44 | 54.37 | 51.69 | 38.14 | 58.44 | 37.19 | 66.69 | 35.35 |
| R-CNN * [17] | D | 49.23 | 59.88 | 54.33 | 39.63 | 60.12 | 39.27 | 67.92 | 39.56 |
| CompACT * [31] | D | 53.31 | 64.94 | 58.80 | 43.22 | 63.30 | 44.28 | 71.25 | 46.47 |
| DMM-Net [7] | S | 20.69 | 31.21 | 23.60 | 14.03 | 26.10 | 14.56 | 36.89 | 15.01 |
| JDE [4] | S | 63.57 | 79.84 | 70.46 | 49.88 | 76.02 | 50.42 | 73.00 | 58.92 |
| FairMOT [5] | S | 67.74 | 81.33 | 73.32 | 56.78 | 77.21 | 55.46 | 75.44 | 69.05 |
| EMOT (Ours) | S | 69.93 | 86.19 | 74.26 | 59.00 | 79.20 | 59.00 | 77.55 | 69.32 |
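
The detection table does not name its metric. The UA-DETRAC protocol [11] ranks detectors by average precision (AP) computed from a precision-recall curve, so these scores are presumably AP in percent. The sketch below illustrates that calculation with all-point interpolated AP in the PASCAL VOC style [58]. Its input is a set of detections already labelled as true or false positives; the IoU matching step and its threshold are omitted. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def precision_recall(dets: Sequence[Tuple[float, bool]], num_gt: int) -> Tuple[List[float], List[float]]:
    """dets: (confidence, is_true_positive) pairs, ASSUMED already matched to ground truth."""
    tp = fp = 0
    recalls, precisions = [], []
    # Walk detections from most to least confident, accumulating TP/FP counts.
    for _score, is_tp in sorted(dets, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """All-point interpolated AP: area under the monotone precision envelope."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing when read from high recall back to low recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero.
    return sum((r[i] - r[i - 1]) * p[i] for i in range(1, len(r)))


if __name__ == "__main__":
    dets = [(0.9, True), (0.8, False), (0.7, True)]  # toy example with 2 ground-truth boxes
    rec, prec = precision_recall(dets, num_gt=2)
    print(f"AP = {100 * average_precision(rec, prec):.2f}%")  # prints "AP = 83.33%"
```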

| Method | MOTA ↑ | MT ↑ | ML ↓ | IDS ↓ | FM ↓ | FP ↓ | FN ↓ | FPS ↑ |
|---|---|---|---|---|---|---|---|---|
| DMM-Net [7] | 20.3 | 19.9 | 30.3 | 498 | 1428 | 104,142 | 399,586 | 101.2 |
| JDE [4] | 55.1 | 68.4 | 6.5 | 2169 | 4224 | 128,069 | 153,609 | 17.6 |
| FairMOT [5] | 63.4 | 64.0 | 7.8 | 784 | 4443 | 71,231 | 159,523 | 18.9 |
| EMOT (Ours) | 68.5 | 68.4 | 7.3 | 836 | 3681 | 50,754 | 147,383 | 17.3 |
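
Following the CLEAR MOT definition [55], MOTA = 1 − (FN + FP + IDS) / GT. The error counts are summed over all frames, and GT is the total number of ground-truth boxes. The table does not report GT, but each row determines it. The sketch below back-solves GT from the reported MOTA, FP, FN and IDS. All four rows imply roughly 632,000 ground-truth boxes (about 631,700 to 632,700), which suggests the reported counts are mutually consistent. The helper names are illustrative.

```python
# Rows copied from the CLEAR MOT table: (MOTA in %, IDS, FP, FN).
ROWS = {
    "DMM-Net": (20.3, 498, 104_142, 399_586),
    "JDE": (55.1, 2169, 128_069, 153_609),
    "FairMOT": (63.4, 784, 71_231, 159_523),
    "EMOT": (68.5, 836, 50_754, 147_383),
}


def mota(fn: int, fp: int, ids: int, num_gt: int) -> float:
    """CLEAR MOT accuracy: 1 - (misses + false positives + ID switches) / ground-truth boxes."""
    return 1.0 - (fn + fp + ids) / num_gt


def implied_num_gt(mota_percent: float, fn: int, fp: int, ids: int) -> float:
    """Invert the MOTA formula to recover the ground-truth count that a table row implies."""
    return (fn + fp + ids) / (1.0 - mota_percent / 100.0)


if __name__ == "__main__":
    for name, (m, ids, fp, fn) in ROWS.items():
        gt = implied_num_gt(m, fn, fp, ids)
        # Recomputing MOTA from the (rounded) implied GT should reproduce the table value.
        print(f"{name:8s} implied GT ~ {gt:,.0f}  MOTA check {100 * mota(fn, fp, ids, round(gt)):.1f}")
```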

| Subset | MOTA ↑ | MT ↑ | ML ↓ | IDS ↓ | FM ↓ | FP ↓ | FN ↓ |
|---|---|---|---|---|---|---|---|
| Easy | 82.8/84.9 | 81.8/86.0 | 1.2/1.4 | 62/121 | 498/379 | 10,159/9276 | 11,304/9468 |
| Medium | 65.3/69.6 | 61.0/66.8 | 8.7/8.0 | 445/421 | 2590/2056 | 34,081/27,169 | 89,466/80,934 |
| Hard | 42.5/52.2 | 46.8/47.9 | 14.5/13.5 | 277/294 | 1355/1246 | 26,991/14,309 | 58,753/56,981 |
| Cloudy | 77.0/79.5 | 71.1/80.0 | 3.5/3.5 | 147/141 | 1216/738 | 12,749/12,272 | 32,191/27,800 |
| Rainy | 50.9/57.4 | 46.8/54.2 | 16.4/14.5 | 264/270 | 1553/1413 | 24,689/17,300 | 76,002/70,055 |
| Sunny | 68.7/73.1 | 77.0/78.1 | 3.7/2.4 | 65/95 | 488/343 | 8913/8253 | 14,217/11,605 |
| Night | 60.3/67.4 | 64.2/63.9 | 6.6/7.5 | 308/330 | 1186/1187 | 24,880/12,929 | 37,113/37,923 |

| Detection | Association | PR-MOTA ↑ | PR-MT ↑ | PR-ML ↓ | PR-IDS ↓ | PR-FM ↓ | PR-FP ↓ | PR-FN ↓ |
|---|---|---|---|---|---|---|---|---|
| DPM [29] | GOG * [32] | 5.5 | 4.1 | 27.7 | 1873.9 | 1988.5 | 38,957.6 | 230,126.6 |
| DPM [29] | CEM * [33] | 3.3 | 1.3 | 37.8 | 265.0 | 317.1 | 13,888.7 | 270,718.5 |
| DPM [29] | DCT * [34] | 2.7 | 0.5 | 42.7 | 72.2 | 68.8 | 7785.8 | 280,762.2 |
| ACF [30] | GOG * [32] | 10.8 | 12.2 | 22.3 | 3950.8 | 3987.3 | 45,201.5 | 197,094.2 |
| ACF [30] | DCT * [34] | 7.9 | 4.8 | 34.4 | 108.1 | 101.4 | 13,059.7 | 251,166.4 |
| ACF [30] | HT * [36] | 8.2 | 13.1 | 21.3 | 1122.8 | 1445.8 | 71,567.4 | 189,649.1 |
| R-CNN [17] | DCT * [34] | 11.7 | 10.1 | 22.8 | 758.7 | 742.9 | 36,561.2 | 210,855.6 |
| R-CNN [17] | HT * [36] | 11.1 | 14.6 | 19.8 | 1481.9 | 1820.8 | 66,137.2 | 184,358.2 |
| R-CNN [17] | CMOT * [37] | 11.0 | 15.7 | 19.0 | 506.2 | 2551.1 | 74,253.6 | 177,532.6 |
| CompACT [31] | GOG * [32] | 14.2 | 13.9 | 19.9 | 3334.6 | 3172.4 | 32,092.9 | 180,183.8 |
| CompACT [31] | CEM * [33] | 5.1 | 3.0 | 35.3 | 267.9 | 352.3 | 12,341.2 | 260,390.4 |
| CompACT [31] | DCT * [34] | 10.8 | 6.7 | 29.3 | 141.4 | 132.4 | 13,226.1 | 223,578.8 |
| CompACT [31] | IHTLS * [35] | 11.1 | 13.8 | 19.9 | 953.6 | 3556.9 | 53,922.3 | 180,422.3 |
| CompACT [31] | HT * [36] | 12.4 | 14.8 | 19.4 | 852.2 | 1117.2 | 51,765.7 | 173,899.8 |
| CompACT [31] | CMOT * [37] | 12.6 | 16.1 | 18.6 | 285.3 | 1516.8 | 57,885.9 | 167,110.8 |
| CompACT [31] | FAMNet [59] | 19.8 | 17.1 | 18.2 | 617.4 | 970.2 | 14,988.6 | 164,432.6 |
| EB [60] | IOU [61] | 19.4 | 17.7 | 18.4 | 2311.3 | 2445.9 | 14,796.5 | 171,806.8 |
| EB [60] | DAN [62] | 20.2 | 14.5 | 18.1 | 518.2 | - | 9747.8 | 135,978.1 |
| Mask R-CNN [20] | IOU [61] | 30.7 | 30.3 | 21.5 | 668.0 | 733.6 | 17,370.3 | 179,505.9 |
| Mask R-CNN [20] | V-IOU [38] | 30.7 | 32.0 | 22.6 | 162.6 | 286.2 | 18,046.2 | 179,191.2 |
| FairMOT [5] | FairMOT [5] | 22.7 | 23.7 | 10.0 | 347.1 | 2993.6 | 49,385.4 | 123,124.5 |
| EMOT (Ours) | EMOT (Ours) | 24.5 | 25.2 | 9.3 | 379.0 | 2957.3 | 43,940.6 | 116,860.7 |
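
The PR-* metrics are not measured at a single detection threshold. As we understand the UA-DETRAC protocol [11], the procedure has three steps:

- The detector's confidence threshold is swept to trace a precision-recall curve C.
- A tracking metric Ψ (MOTA, MT, IDS and so on) is computed at each point on C.
- The PR score is a line integral of Ψ along C, which we take to be Ω* = ½ ∫_C Ψ(p, r) ds.

The sketch below approximates this integral with the trapezoidal rule over sampled thresholds. The ½ normalization, the threshold sampling and the name `pr_metric` are assumptions to verify against the official evaluation toolkit. The sample points are toy values, not results from this paper.

```python
import math
from typing import Sequence, Tuple


def pr_metric(samples: Sequence[Tuple[float, float, float]], scale: float = 0.5) -> float:
    """Approximate scale * (line integral of a tracking metric along the PR curve).

    samples: (precision, recall, metric) measured at successive detection thresholds,
    ordered along the curve. scale=0.5 is an ASSUMED normalization constant.
    """
    total = 0.0
    for (p0, r0, m0), (p1, r1, m1) in zip(samples, samples[1:]):
        ds = math.hypot(p1 - p0, r1 - r0)  # arc length of this segment of the PR curve
        total += 0.5 * (m0 + m1) * ds      # trapezoidal rule for the metric along the segment
    return scale * total


if __name__ == "__main__":
    # Toy (precision, recall, MOTA %) samples, NOT values from the paper.
    toy = [(0.95, 0.30, 20.0), (0.90, 0.50, 35.0), (0.80, 0.65, 40.0), (0.60, 0.75, 25.0)]
    print(f"PR-MOTA ~ {pr_metric(toy):.2f}")
```

Each segment is weighted by its arc length. A tracker therefore scores well only if Ψ stays high across the detector's whole operating range, not just at one tuned threshold.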

| Method | Tasks | CPU | GPU | FPS |
|---|---|---|---|---|
| DPM * [29] | D | 4 Intel Core i7-6600U (2.60 GHz) | - | 0.17 |
| ACF * [30] | D | 2 Intel Xeon E5-2470v2 (2.40 GHz) | - | 0.67 |
| R-CNN * [17] | D | 2 Intel Xeon E5-2470v2 (2.40 GHz) | Tesla K40 | 0.10 |
| CompACT * [31] | D | 2 Intel Xeon E5-2470v2 (2.40 GHz) | Tesla K40 | 0.22 |
| EB * [60] | D | 4 Intel Core i7-4770 (3.40 GHz) | Titan X | 11.0 |
| Mask R-CNN [20] | D | Intel Core i7-10700K (3.80 GHz) | Nvidia GeForce RTX 2080 SUPER | 3.4 |
| FairMOT [5] | D + E | Intel Core i7-10700K (3.80 GHz) | Nvidia GeForce RTX 2080 SUPER | 18.9 |
| EMOT | D + E | Intel Core i7-10700K (3.80 GHz) | Nvidia GeForce RTX 2080 SUPER | 17.3 |

| Subset | PR-MOTA ↑ | PR-MT ↑ | PR-ML ↓ | PR-IDS ↓ | PR-FM ↓ | PR-FP ↓ | PR-FN ↓ |
|---|---|---|---|---|---|---|---|
| Easy | 29.7/30.8 | 30.8/32.3 | 5.2/5.2 | 42.7/56.8 | 644.5/648.3 | 10,371.5/10,022.7 | 15,027.4/14,017.6 |
| Medium | 24.3/25.8 | 22.4/24.3 | 10.7/9.9 | 189.0/199.0 | 1652.4/1613.1 | 23,031.3/21,497.6 | 68,472.9/64,824.3 |
| Hard | 12.8/16.2 | 17.1/17.6 | 15.1/13.4 | 115.4/123.1 | 696.7/695.8 | 15,982.6/12,420.3 | 39,624.1/38,018.9 |
| Cloudy | 29.4/30.9 | 25.4/28.7 | 7.8/7.6 | 70.3/76.0 | 973.0/925.5 | 8485.1/8481.9 | 31,822.1/28,943.4 |
| Rainy | 18.5/20.9 | 17.4/19.6 | 16.0/14.0 | 111.0/122.3 | 866.0/849.2 | 13,710.9/11,493.5 | 50,931.3/48,182.2 |
| Sunny | 20.5/22.8 | 28.5/29.5 | 5.8/5.4 | 30.6/41.5 | 370.6/390.4 | 9823.6/9354.7 | 11,964.8/10,777.2 |
| Night | 20.7/22.1 | 24.5/23.9 | 9.6/9.3 | 135.2/139.2 | 783.9/792.2 | 17,365.8/14,610.5 | 28,406.3/28,958.0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, Y.; Lee, S.-h.; Yoo, J.; Kwon, S. Efficient Single-Shot Multi-Object Tracking for Vehicles in Traffic Scenarios. Sensors 2021, 21, 6358. https://doi.org/10.3390/s21196358
