Abstract

The radar geometry defined by a spatially separated transmitter and receiver with a moving object crossing the baseline is considered as a Bistatic Forward Inverse Synthetic Aperture Radar (BFISAR). A Digital Video Broadcast-Terrestrial (DVB-T) television station emitting DVB-T waveforms was used as a transmitter of opportunity. A system of vector equations describing the kinematics of the object was derived. A mathematical model of the BFISAR signal received after the reflection of DVB-T waveforms from the moving object was described. An algorithm for extraction of the object’s image was created; it includes phase correction and two Fourier transformations applied to the received BFISAR signal, one in the range direction and one in the azimuth direction. To demonstrate the correctness of the mathematical models of the object geometry, waveforms, and signals, and of the image extraction procedure, graphical results of numerical simulation experiments were provided.

1. Introduction

Synthetic Aperture Radar (SAR) and Inverse Synthetic Aperture Radar (ISAR) are microwave imaging systems with military and civil applications that operate in all weather conditions, day and night [1,2]. To realize cross-range (azimuth) resolution, SAR uses the displacement of the SAR carrier relative to the observed object, whereas ISAR uses the object’s movement relative to a stationary observation system. To achieve range resolution, both systems use wideband waveforms. Bistatic Synthetic Aperture Radar and Multistatic Synthetic Aperture Radar (BSAR/MSAR), with spatially separated transmitters, receivers, and objects, are a subclass of SAR/ISAR systems. BSAR/MSAR technologies and the corresponding signal processing methods and algorithms are currently experiencing a renaissance [2,3,4,5,6]. This is due to their capabilities for stealth target detection, covert surveillance, imaging, recognition, and tracking, as well as their ability to use radio emitters of opportunity such as satellite communication transmitters, GPS and SAR transmitters, and other space systems and bodies such as the sun and pulsars.

BSAR/MSAR systems have stimulated the development of new signal and image processing techniques. Original theoretical concepts and their experimental verification were presented in [3,4], with a focus on system model construction, imaging algorithms, mission design, and interferometric SAR considered as a bistatic system. The spatial separation of the transmitter and receiver in the BSAR topology makes it a promising and useful supplement to classical monostatic SAR/ISAR systems. An integrated time and phase synchronization strategy for a multichannel spaceborne-stationary BSAR system was proposed in [5].

The problem of migration through resolution cells induced by the rotational motion of a large space target, and sparse high-resolution imaging based on compressed sensing in high-resolution BSAR systems were discussed in [6].

Signal processing algorithms as remote sensing instruments in passive bistatic SAR with navigation satellites (e.g., GPS, GLONASS, or Galileo) as transmitters of opportunity were discussed in [7]. Signal synchronization and image formation algorithms were described. Image products such as two-color multi-view, image quality, image characteristics, and coherent change detection formed from BSAR signals during the validation stage were presented in [8]. A ground moving target detection (GMTD) method based on joint clutter cancellation in the echo-image domain for forward-looking BSAR to achieve effective GMTD in heterogeneous clutter was suggested in [9].

For human positioning and activity recognition, millimeter-wave radar, as an emerging technique, is exceptionally appropriate. In contrast to traditional sensors and radars, millimeter-wave radars give detailed information on objects from the range domain to the Doppler domain. The short wavelength allows millimeter-wave radars to achieve a high resolution and a small antenna size, but also makes them prone to noise. In [10], a system framework for human detection and tracking using millimeter-wave radars was designed.

A k-nearest neighbors approach to the design of radar detectors was considered in [11]. The idea is to start with either raw data or well-known radar statistics as a feature vector used in the definition of the decision rule. Simulation experimental results obtained using real clutter recordings were provided to illustrate the behavior of detectors derived using the proposed approach.

Constant false alarm rate (CFAR) detection algorithms have been the focus of the BSAR/MSAR systems’ implementation. A generalized likelihood ratio test-based adaptive detection algorithm defined in the CFAR feature space, where observed data are mapped to clusters, was analytically described in [12]. Linear and nonlinear detection algorithms with robust selective properties were proposed.

An along-track multistatic SAR system with a high azimuth resolution maintaining a large swath width was discussed in [13]. A SIMO (Single-Input Multiple-Output) construction with one transmitting sensor and multiple receiving sensors providing low azimuth ambiguity and a recombination gain close to the theoretical one was analyzed.

Automotive radar with its multiple sensors is a multistatic radar with specific requirements such as high resolution, low hardware cost, and small size satisfied by a multiple-input, multiple-output (MIMO) radar configuration. It provides a high angular resolution with relatively small numbers of antennas. This technology is embedded in the current-generation automotive radar for advanced driver-assistance systems, as well as in next-generation high-resolution imaging radar for autonomous driving. A review of MIMO radar basics that highlights the features of the automotive radar and provides important theoretical results for increasing the angular resolution was suggested in [14].

Hardware architecture, video signal processing, and field experimental results of a multirotor Video SAR imaging system with ultrahigh resolution were provided in [15]. A Field-Programmable Gate Array (FPGA)-based unified signal processing architecture was used to accelerate the generation of massive Video SAR sequences in terms of both circular spotlight and strip map modes.

An analytical model based on the Kirchhoff and geometrical optics approximation for evaluation of the electromagnetic field, single- and multiple-bounce scattering from a composite target in a generic bistatic radar configuration, was derived in [16]. Machine learning and deep learning with sensors data fusion are widely used in computer vision, natural language understanding, and data analytics. Hence, machine-learning algorithms are appropriate to solve identification problems that inherently include multi-modal data [17].

Modern radar systems, especially SAR systems, pose high requirements in respect of accuracy, robustness, and real-time operability in increasingly complex electromagnetic environments. Classical radar signal and image processing methods experience limitations when meeting such requirements, particularly in the area of target classification. Machine-learning, especially deep learning, can be applied to solve problems such as signal and image processing. A comprehensive, structured, and reasoned literature overview of machine learning, signal, and image processing techniques was provided in [18]. The survey disclosed the essence and application of machine learning techniques in SAR signal and image processing.

Contemporary radar surveillance systems consist of highly complex tracking, sensor data fusion, and identification algorithms tracking the trajectories of moving objects. These algorithms are embedded in a real-time middleware with a straightforward processing chain according to the fusion model. New technologies such as distributed data processing and machine learning disclose new possibilities for surveillance systems. An overview of how these technologies, in combination with the big data of trajectories, can be integrated into existing surveillance systems and how machine learning can help to improve situational awareness was suggested in [19].

Artificial intelligence due to recent breakthroughs in deep learning changes applications and services in all scientific domains, including radar technology. Deep learning relies on large amounts of training data that are mostly generated at the network edge by Internet of Things devices and sensors. Bringing the sensed data from the edge of a distributed network to a centralized cloud is often infeasible because of the massive data volume, limited network bandwidth, and real-time application constraints. A framework for data fusion and artificial intelligence processing at the edge was proposed in [20]. A comparative discussion of different data fusion and artificial intelligence models and architectures was provided. Multiple levels of fusion and different types of artificial intelligence were also discussed.

The application of artificial intelligence in target surveillance based on radar sensors is enabled through today’s computational capacities. A survey of past approaches and recent hot topics in the area of artificial intelligence applicable for target surveillance with radar sensors that reveals new potential for the development of novel approaches in radar research and practice was presented in [21]. The focus is on clutter identification, target classification, and target tracking, which are not only of great importance for an adequate operation of radar applications, but are also well suited for the use of artificial intelligence.

Analysis of the signal classification performances of various classifiers for deterministic signals under the additive white Gaussian noise in a wide range of signal-to-noise ratio (SNR) levels was performed in [22]. The matched filter bank classifier, convolutional neural networks, and the minimum distance classifier using spectral-domain features for the radar signal classification were derived. An unsupervised generative convolutional neural network approach for interferometric phase filtering and coherence estimation was suggested in [23].

An original approach in SAR/BSAR signal and image processing known as Compressed Sensing (CS) based on sparse decomposition was applied for improving target detection, parameter estimation, and identification [24,25]. A simulation of inverse synthetic aperture radar (ISAR) imaging of a space target and reconstruction under sparse sampling via CS was developed in [26]. Based on the linear dependence between the LFM rate of an ISAR signal in cross-range and the slant range, a parametric sparse representation method for ISAR imaging of rotating targets was proposed in [27]. CS-based models for inverse synthetic aperture radar (ISAR) imaging, which can sustain strong clutter noise and provide high-quality images with extremely limited measurements, were proposed in [28,29].

Based on sparsity in the ISAR azimuth frequency domain on the cross-range direction, a Fourier basis was applied as a sparse basis to achieve high cross-range resolution imaging by using a compressed sensing method. An improved Fourier basis for ISAR signal’s sparse representation to achieve robust recovery performance via CS was presented in [30]. Bistatic forward inverse synthetic aperture radar systems are a subclass of bistatic inverse synthetic aperture radars and characterized by a bistatic angle of the transmitter-object-receiver close or equal to 180° [31,32]. BFISAR geometry and kinematics can be considered as scenarios with a spatially separated stationary transmitter, stationary receiver, and an object crossing the baseline transmitter-receiver. The electromagnetic field emitted by the transmitter antenna is reemitted by the object and scatters forward to the receiver. Received signals are registered in two orthogonal coordinates, with the range measured along the line of sight and the cross-range or azimuth measured along the length of the inverse synthetic aperture [31,32,33].

BFISAR models, imaging methods, and algorithms have attracted considerable attention in the field of radar research in the last twenty years. A time–frequency approach for target imaging applied in forward scattering radar (FSR) using DVB-T transmitters of opportunity operating in a Very High Frequency (VHF) band was presented in [34].

A compressive sensing algorithm for BISAR imaging with DVB-T waveforms was discussed in [35]. BFISARs are exploited for air traffic monitoring and airport surveillance. As an example, a passive radar system for airport surveillance using a signal of opportunity from DVB-S (Digital Video Broadcasting-Satellite) was presented in [36]. Bistatic forward scattering radar detection and imaging were analyzed in [37]. An electromagnetic model of the scattering response measured by FSR in far and near fields for air-traffic surveillance was suggested in [38]. Moving target shadows using passive forward scatter radar systems were studied in [39,40,41].

From the authors’ point of view, the available literature lacks a detailed description of the 3-D geometry and kinematics of the BFISAR scenario, the signal model based on the DVB-T waveform, and the 3-D geometry of the observed object. There is no analytical structure of the object’s image extraction process or its interpretation, based on which the algorithm for signal processing and image reconstruction is built.

The aim of the present work was to define the geometrical and kinematical features of the BFISAR system with a transmitter of opportunity emitting DVB-T waveforms, to synthesize the BFISAR signal model based on the DVB-T waveform, and, based on the vector description of the scenario and the signal model, to build an algorithm for extraction of the BFISAR image.

The paper is structured in the following manner. Section 2 presents BFISAR geometric and kinematic vector equations describing the positions of the transmitter, receiver, and object. Section 3 describes stages of the algorithm for extraction of the object image. Section 4 presents the source data and graphical results of simulation experiments. In Section 5, concluding remarks are drawn.

2. BFISAR Geometrical and Kinematical Equations

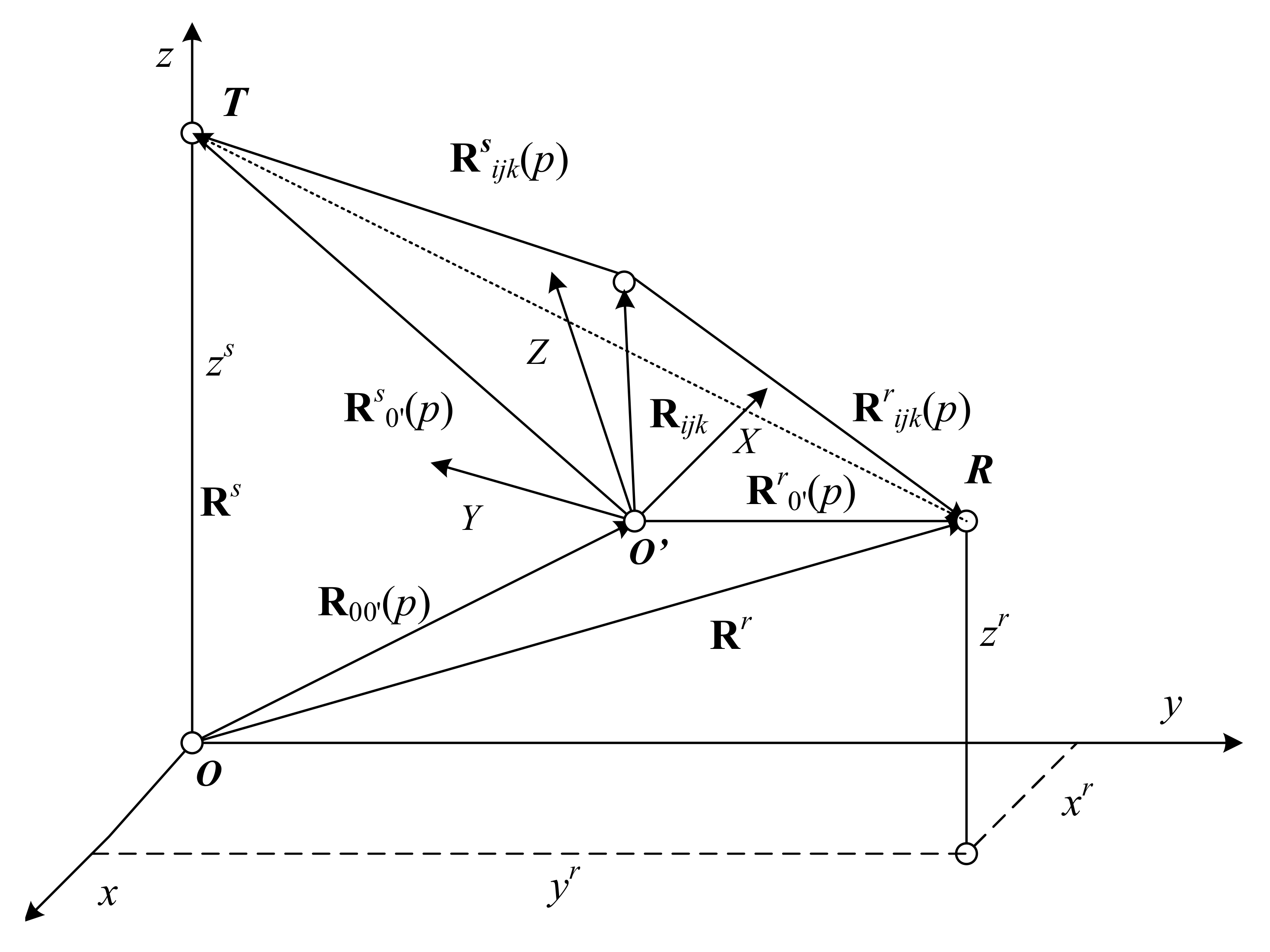

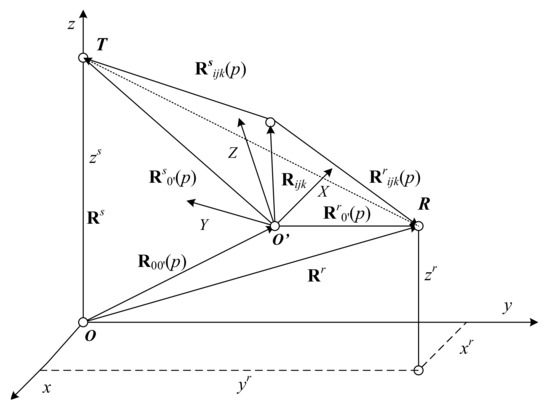

Consider a BFISAR scenario with a stationary DVB-T transmitter T, a receiver R, and a moving object presented in a Cartesian coordinate system, as shown in Figure 1. The object’s geometry, depicted in its own coordinate system, is presented as an assembly of reference points placed at the nodes of a regular grid. The reference points whose intensities describe the object’s geometry are scattering points. The intensities of the remaining reference points are zero or noise-defined. The object moves near the baseline, while the transmitter and receiver can be placed on hills, mountain heights, or roofs of high buildings near the area of surveillance.

Figure 1. BFISAR geometry.

Vectors and define the positions of the transmitter and receiver, respectively, in a coordinate system . The line TR is the baseline. The distance vector defines the position of the -th scattering point in the coordinate system . The vector defines the position of the object’s mass-center at the -th-emitted DVB-T segment. The geometrical and kinematical ratios in Figure 1 can be described by the vector equations as follows.

The vector distance of the transmitter–object’s mass center:

where is the object’s vector velocity, is the index of the emitted segment, is the total number of DVB-T segments emitted during bistatic inverse aperture synthesis, and is the segments’ time repetition period for aperture synthesis.

The vector distance of an object’s mass center–DVB-T receiver:

The vector distance of a DVB-T transmitter–-th object’s scattering point:

where A is the Euler transformation matrix.

The vector distance of an -th object’s scattering point–DVB-T receiver:

The total distance of a DVB-T transmitter--th object’s scattering point–DVB-T receiver is defined by:

Expression (5) is applied in the determination of the signal time delays from the object’s scattering points and synthesis of the BFISAR signal.
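The geometry above can be illustrated with a minimal sketch that computes the transmitter to scattering point to receiver path length for one emitted segment. All names (`bistatic_path_length`, `rotate_z`, `centre0`, `yaw_rad`) are hypothetical. The sketch assumes linear motion of the mass-center referenced to the moment of imaging at p = N/2, and it replaces the Euler transformation matrix A with a single rotation about the z axis, so the sign and indexing conventions may differ from those of the paper.

```python
import math


def rotate_z(point, angle_rad):
    """Rotate a 3-D point about the z axis (a simple stand-in for the Euler matrix A)."""
    x, y, z = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x - s * y, s * x + c * y, z)


def norm(v):
    """Euclidean length of a 3-D vector."""
    return math.sqrt(sum(a * a for a in v))


def bistatic_path_length(tx, rx, centre0, velocity, p, n_segments, t_p,
                         local_point, yaw_rad=0.0):
    """Transmitter -> scattering point -> receiver distance for segment index p."""
    # Assumed kinematics: the mass-center moves linearly and is at centre0 when p = N/2.
    dt = (p - n_segments / 2) * t_p
    centre = tuple(c0 + v * dt for c0, v in zip(centre0, velocity))
    # Scattering point in the scene frame: mass-center plus rotated local offset.
    offset = rotate_z(local_point, yaw_rad)
    scatterer = tuple(c + o for c, o in zip(centre, offset))
    r_tx = norm(tuple(s - t for s, t in zip(scatterer, tx)))
    r_rx = norm(tuple(r - s for s, r in zip(scatterer, rx)))
    return r_tx + r_rx
```

When the scattering point lies exactly on the baseline, the returned value equals the baseline length, which is the forward-scatter condition; any displacement off the baseline makes the path longer.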

3. DVB-T Waveform and BFISAR Signal Synthesis

3.1. DVB-T Waveform Synthesis

DVB-T is a terrestrial-based digital video broadcasting system that transmits compressed digital audio, digital video, and other data in a Moving Picture Experts Group (MPEG) transport stream, using coded orthogonal frequency-division multiplexing (OFDM) modulation. The DVB-T television station emits waveforms, whose analytical description is based on the multiplication of the following complex exponential functions [42]:

where is the central carrier frequency, is the central wavelength, and c is the speed of light:

stands for the DVB-T frame index;

stands for the Orthogonal Frequency Division Multiplexing (OFDM) symbol index;

stands for the index of the carrier frequency, denotes the total number of DVB-T carrier frequencies, and denotes a Quadrature Phase Shift Keying (QPSK) or Quadrature Amplitude Modulation (QAM) amplitude defined for the -th carrier, -th data symbol, and -th frame.

The values of the exponential function depend on the time intervals in which it is defined. If the current time accepts values in the interval , the exponential function can be written as:

where is the general symbol duration, ms is the symbol’s part duration, ms is the guard interval in the general symbol, and ms.

Otherwise, the exponential function is equal to zero, i.e., .

A DVB-T waveform-synthesizing algorithm includes the following steps.

- For , , compute . If , then .

- For , , compute , then .

- When , then , go to step 2.

Denote as a slow time parameter measured on the azimuth direction. The complex DVB-T waveform can be written as

which can be rewritten as:

where is the carriers’ spacing.
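As a rough illustration of the multicarrier structure described above, the following sketch builds one baseband OFDM symbol as a sum of complex exponentials weighted by per-carrier coefficients, and then recovers a coefficient by projecting onto its carrier. The function names are hypothetical, the carrier index is assumed to start at zero, and the guard interval, frame indexing, and carrier-frequency term of the DVB-T waveform are omitted.

```python
import cmath


def useful_symbol_times(n_samples, carrier_spacing_hz):
    """Sample instants covering one useful symbol duration 1 / carrier_spacing_hz."""
    return [m / (n_samples * carrier_spacing_hz) for m in range(n_samples)]


def ofdm_symbol(coeffs, carrier_spacing_hz, sample_times_s):
    """Baseband OFDM symbol: carriers k * spacing weighted by complex coefficients."""
    samples = []
    for t in sample_times_s:
        acc = 0j
        for k, c_k in enumerate(coeffs):
            acc += c_k * cmath.exp(2j * cmath.pi * k * carrier_spacing_hz * t)
        samples.append(acc)
    return samples


def recover_coefficient(samples, k):
    """Project the sampled symbol onto carrier k (the carriers are orthogonal)."""
    n = len(samples)
    return sum(s * cmath.exp(-2j * cmath.pi * k * m / n)
               for m, s in enumerate(samples)) / n


# Four illustrative QPSK-like coefficients, one carrier left empty.
coeffs = [1 + 1j, -1 + 1j, 0j, 1 - 1j]
spacing = 1000.0  # illustrative carrier spacing in Hz, not the DVB-T value
symbol = ofdm_symbol(coeffs, spacing, useful_symbol_times(len(coeffs), spacing))
```

Sampling exactly one useful symbol duration is what makes the carriers orthogonal, so `recover_coefficient(symbol, k)` returns the k-th coefficient up to rounding error.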

3.2. BFISAR Signal Synthesis

Assume the object depicted in a 3-D regular grid with scattering points placed in its nodes is illuminated by DVB-T waveforms. Partial signals reflected from the target’s scattering points are superposed according to time delays defined by distances and the light speed. The segments’ repetition period during the aperture synthesis can be defined by , where is the interval between segments’ indices used. The total time of the aperture synthesis can be calculated by , where the parameters’ values, and , depend on the synthetic aperture length, object’s velocity, and realized azimuth resolution. For example, to realize an appropriate segments’ repetition period, symbols indexed by for frames can be registered and used for aperture synthesis.

The complex BFISAR signal reflected from the -th scattering point can be expressed as:

for , and , where is the intensity of the -th scattering point, and is the signal time delay from the -th scattering point.

The rectangular function determines the DVB-T symbol size in the synthesized BFISAR signal, and can be defined as:

The complex BFISAR signal reflected from the 3-D object can be written as:

for and .

During BFISAR signal modeling, the current time t measured in the range direction is defined by the expression:

where is the reference time defined by the signal time delay from the nearest scattering point, is the fast time, μs is the time width of the DVB-T waveform’s sample, kHz is the carrier spacing, and MHz is the DVB-T sample’s frequency bandwidth.

Equation (12) describes the process of the BFISAR signal formation and can be interpreted as a spatial transformation of the 3-D image function into a 2-D BFISAR signal , defined in discrete range k and cross-range p coordinates.
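The superposition of partial echoes described in this section can be sketched as follows. This is a simplified illustration, not the authors’ simulation code: the names `synthesize_signal`, `delay_from_path`, and `rect` are hypothetical, the waveform is passed in as an arbitrary function of time, and the rectangular gate is assumed to start at the echo arrival time.

```python
C = 299_792_458.0  # speed of light in m/s


def delay_from_path(path_length_m):
    """Signal time delay for a given transmitter-scatterer-receiver path length."""
    return path_length_m / C


def rect(t, width):
    """Rectangular gate: 1 for 0 <= t < width, 0 elsewhere (assumed gate position)."""
    return 1.0 if 0.0 <= t < width else 0.0


def synthesize_signal(intensities, delays, waveform, symbol_width, sample_times):
    """2-D signal S[p][k] as a sum of delayed, weighted copies of the waveform.

    delays[p][i] is the delay of scattering point i at emitted segment p.
    """
    signal = []
    for delays_p in delays:
        row = []
        for t in sample_times:
            acc = 0j
            for a, tau in zip(intensities, delays_p):
                acc += a * rect(t - tau, symbol_width) * waveform(t - tau)
            row.append(acc)
        signal.append(row)
    return signal
```

Each row corresponds to one emitted segment (slow time) and each column to one fast-time sample, which matches the 2-D range and cross-range layout of the BFISAR signal.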

4. BFISAR Image Reconstruction Algorithm

The image extraction is the inverse operation to Equation (12). From the 2-D BFISAR signal , a 2-D image can be extracted, i.e.,

where are the new unknown coordinate indices of the -th scattering point.

To describe this process, the transformation of the 3-D image into a 2-D image, whose plane coincides with the signal plane, has to be considered. The 3-D coordinates of each scattering point are transformed into 2-D coordinates. The problem can be regarded as a 3-D to 2-D projection, a technique used to display a 3-D object on a 2-D surface. The exponential term in Equation (12) is a 3-D to 2-D projective operator. Consider the argument of the exponential term and denote as:

Based on the 2-D Taylor expansion of in the vicinity of the imaging point defined by signal coordinates , the following expression can be written:

where is the constant phase term that does not influence the imaging process; is the -th derivative of , where , on the slow time ; is the -th derivative of , where , on the fast time calculated for .

In the case , the first derivative of for is equal to , where defines the radial velocity of the scattering point. The linear term can be modified as follows.

Multiply and divide the expression by the period of the aperture synthesis and by 2, i.e.,

Denote as the number of emitted segments for aperture synthesis, as the Doppler bandwidth, as the Doppler frequency of the scattering point, and as the Doppler index, the dimensionless coordinate of the -th scattering point in the projection signal plane. In the case of , the first linear term can be presented as:

In the case of , the second linear term is modified as follows. For the fast time, expression (15) is rewritten as:

Multiply and divide the right-hand side of Equation (20) by , and denote , i.e.,

The first derivative of the phase in respect of the fast time is written as:

For , the second linear term is expressed by:

Denoting the number of range samples as , the range resolution as , and the dimensionless range coordinate of the scattering point as , the second linear term can be written as:

Based on Equations (19) and (24), the phase term in Equation (15) can be written as:

where is the sequence of higher-order terms.

Then, the image reconstruction Equation (14) can be expressed as:

for each .

Based on Equation (26), it can be concluded that the image extraction is a procedure of total phase compensation applied to the BFISAR signal. Only the phases defined by distances to the scattering points at the moment of imaging remain in the image. Expression (25) can be interpreted as a 2-D spatial transformation of the signal to the image . The operations of linear phase compensation and higher-order phase compensation can be separated. Then, Equation (26) can be rewritten as:

Based on Equation (27), stages of image extraction from the BFISAR signal can be derived.

Basic Operations

First, based on the object’s geometry and kinematics, and the DVB-T waveform, a BFISAR signal reflected from the 3-D object space is created using expression (12). Second, the demodulation is executed by the multiplication of with the complex-conjugated waveform , i.e.,

After demodulation of the BFISAR signal, a range compression is performed by applying an inverse Fourier transform:

The range-compressed BFISAR signal is azimuth-compressed by applying an inverse Fourier transform:

In case the obtained image is blurred, a higher-order phase correction is applied. An image contrast function or an entropy function is used to evaluate the quality of the image. The higher-order phase correction is performed by multiplication of the BFISAR signal with a phase correction function , i.e.,

The image reconstruction procedure is repeated with the phase-corrected signal. The coefficients of the higher-order terms in the Taylor expansion of are calculated iteratively by minimizing the image cost function that evaluates the image quality. The procedure continues until the global minimum of the image cost function is reached. The main stages of the image reconstruction algorithm are illustrated by the simulation experimental results.
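The two compression steps and the entropy-based quality measure can be sketched on a toy example. The code below is an assumption-laden illustration rather than the authors’ implementation: it uses a direct inverse DFT instead of an FFT, models the demodulated echo of a single scattering point as a pure linear phase in both directions, and adds an optional quadratic azimuth phase to mimic uncompensated higher-order motion. All function names are hypothetical.

```python
import cmath
import math


def idft(x):
    """Inverse discrete Fourier transform of a 1-D sequence (direct summation)."""
    n = len(x)
    return [sum(x[m] * cmath.exp(2j * cmath.pi * m * q / n) for m in range(n)) / n
            for q in range(n)]


def reconstruct(signal):
    """Range compression (IDFT along rows), then azimuth compression (IDFT along columns)."""
    range_compressed = [idft(row) for row in signal]
    n_p, n_k = len(range_compressed), len(range_compressed[0])
    columns = [idft([range_compressed[p][k] for p in range(n_p)]) for k in range(n_k)]
    return [[columns[k][p] for k in range(n_k)] for p in range(n_p)]


def image_entropy(image):
    """Entropy of the normalized image power; lower values indicate a sharper image."""
    power = [abs(v) ** 2 for row in image for v in row]
    total = sum(power)
    return -sum((w / total) * math.log(w / total) for w in power if w > 0)


def point_signal(n_p, n_k, p_hat, k_hat, quad=0.0):
    """Toy demodulated echo of one scatterer with an optional quadratic azimuth phase."""
    return [[cmath.exp(-2j * cmath.pi * (p * p_hat / n_p + k * k_hat / n_k)
                       + 1j * quad * (p - n_p / 2) ** 2)
             for k in range(n_k)]
            for p in range(n_p)]


focused = reconstruct(point_signal(8, 8, p_hat=3, k_hat=5))
blurred = reconstruct(point_signal(8, 8, p_hat=3, k_hat=5, quad=0.3))
```

In this toy case the focused image concentrates its energy in the cell with azimuth index 3 and range index 5, while the quadratic phase spreads the energy along azimuth and raises the entropy. An iterative autofocus would search for the higher-order phase coefficients that bring the entropy back down.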

5. Numerical Simulation Experiment

To illustrate the adequacy of the BFISAR geometrical and kinematical analysis, synthesis of the DVB-T waveform and BFISAR signal, and the algorithms for BFISAR signal formation and image reconstruction, a simulation experiment was carried out. Consider the following scenario depicted in the Cartesian coordinate system (Figure 1). The stationary DVB-T transmitter and receiver were placed at heights near an airport or other zones under defense.

The coordinates of the DVB-T transmitter: m; m; m.

The coordinates of the DVB-T receiver: km; m; m.

Object’s trajectory parameters:

Vector velocity modulus: m/s;

Vector velocity’s guiding angles: ; ; .

The coordinates of the mass-center at the moment of imaging :

m; m; m.

A flying object, helicopter, was illuminated by DVB-T waveform segments with the following parameters:

Segment repetition period ms;

DVB-T symbol’s width ms;

DVB-T segment’s width ms;

DVB-T segment’s samples K;

Central carrier frequency GHz;

DVB-T sample’s time width μs;

Frequency bandwidth MHz;

Number of segments for synthesis of the aperture .

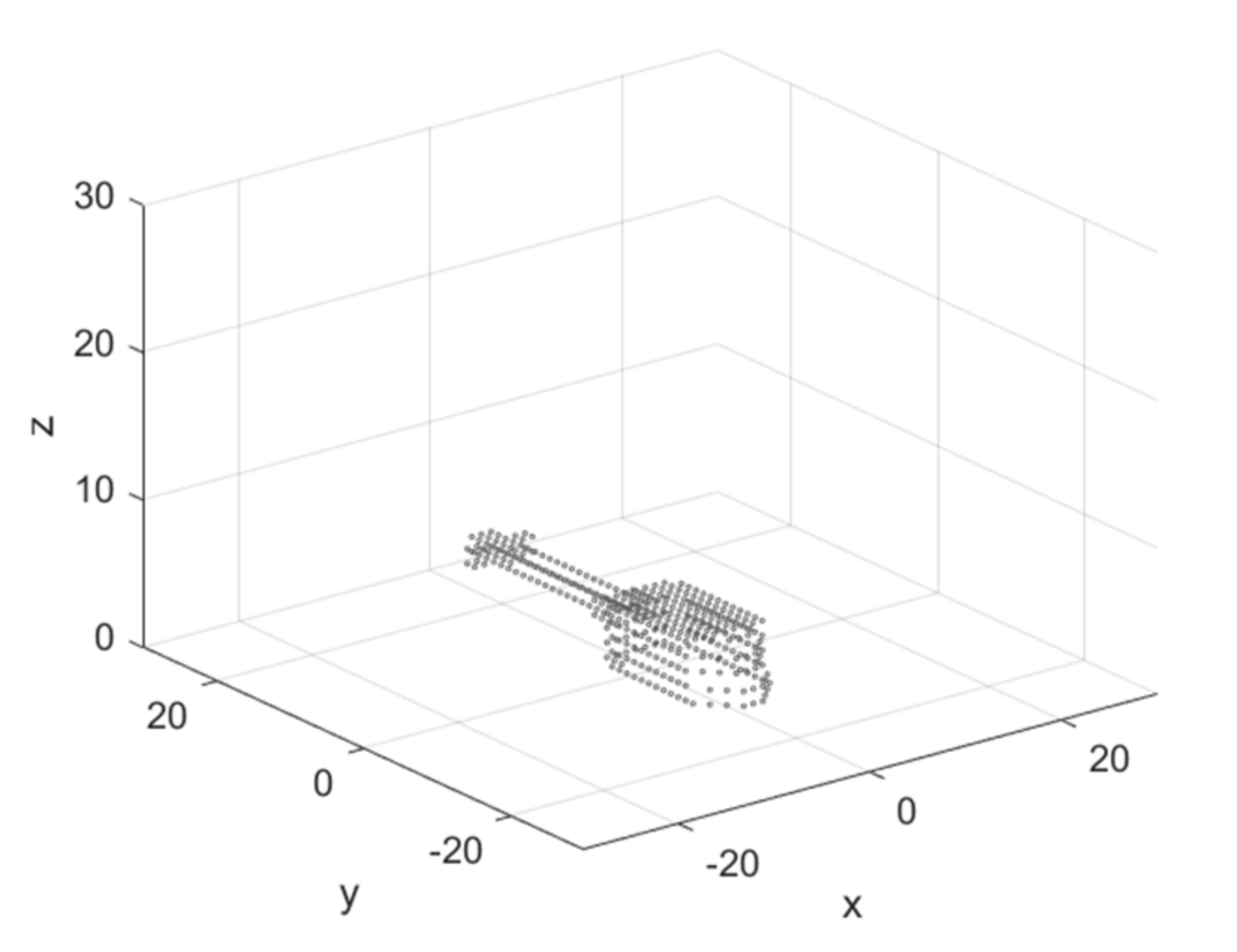

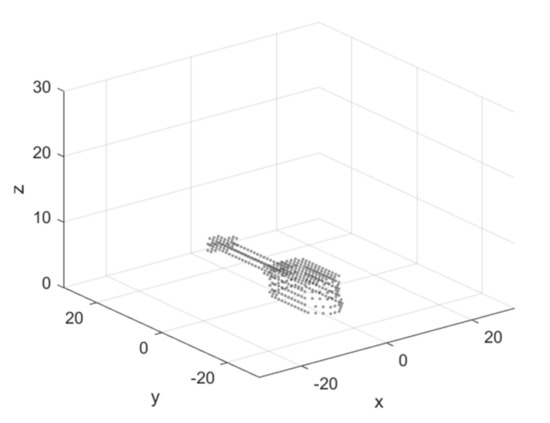

The object’s geometry is described in a 3-D coordinate system with a normalized intensity of scattering points in nodes (Figure 2). The image of the helicopter is without propellers. During flight, the propellers rotate at high speed and are treated as invisible. However, this assumption is not strictly valid for microwaves at a frequency of 0.9 GHz. The propellers induce additional correlation noise that was ignored in the present work.

Figure 2. Geometry of the flying object (helicopter).

The simulation experiment was carried out in the following order. First, the DVB-T waveform’s coefficients were calculated. Second, geometrical and kinematical parameters and distances to the object’s scattering points were calculated and sorted. Third, the BFISAR signal was created. Fourth, the image reconstruction based on the 2-D Fourier transformation realized by inverse fast Fourier transforms was implemented. The pseudo code of these stages is presented in Appendix A.

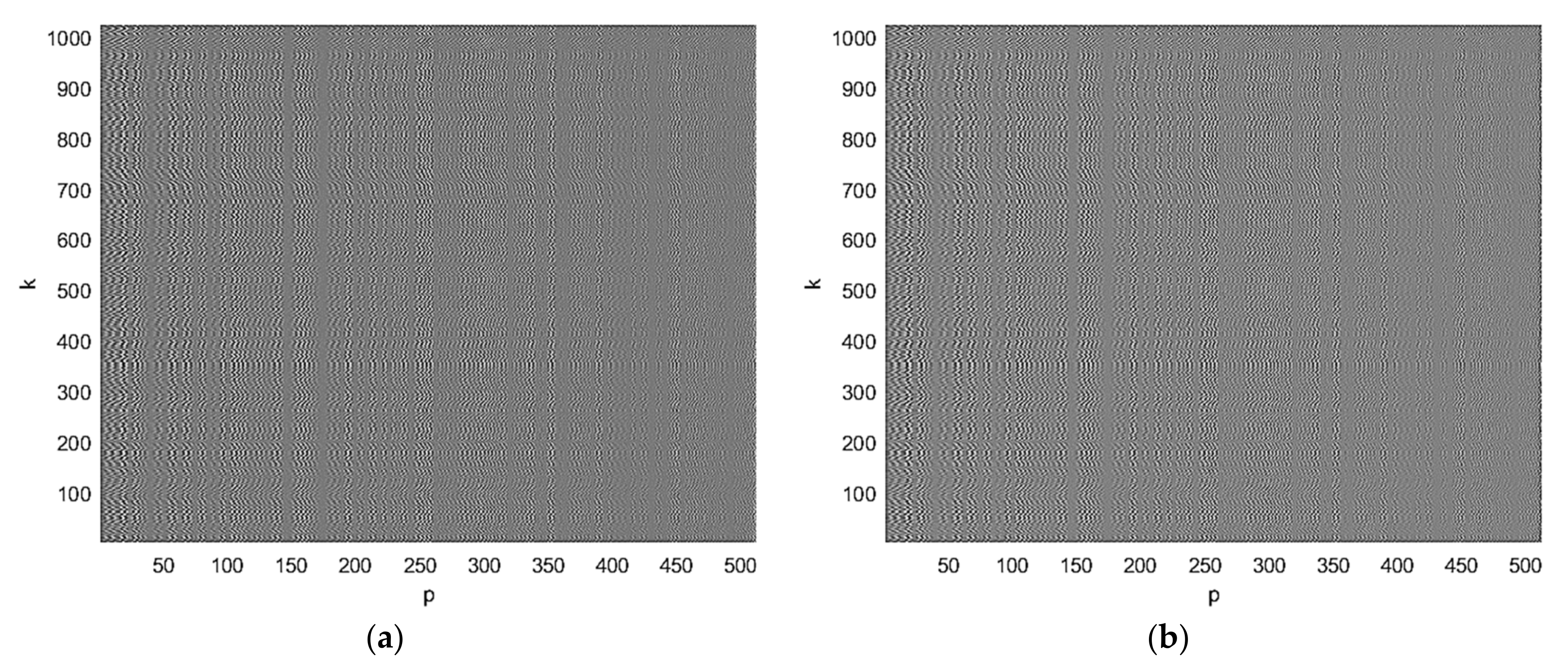

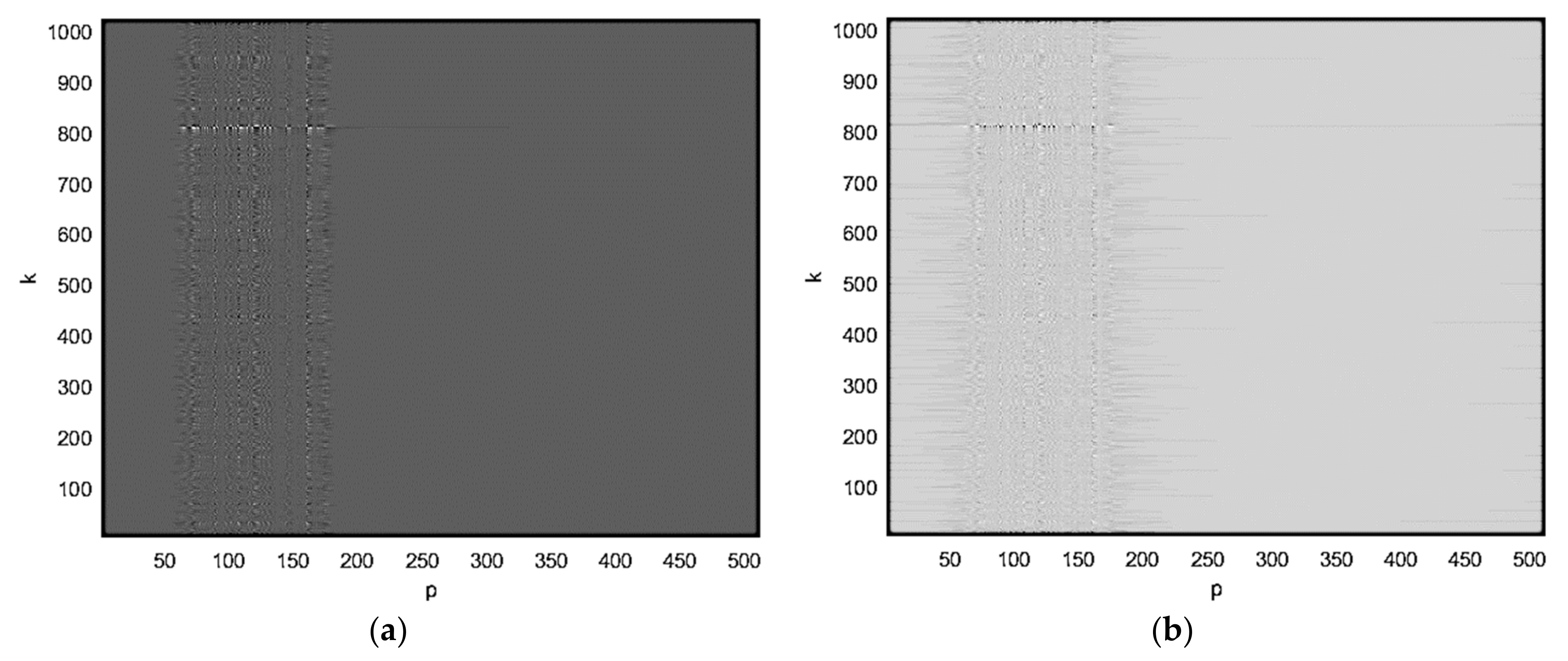

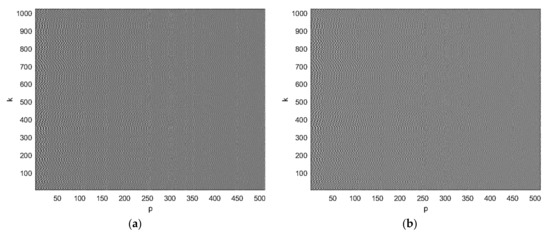

The real (a) and imaginary (b) parts of the complex BFISAR signal are presented in Figure 3. The BFISAR signal structure has properties of a complex microwave hologram.

Figure 3. Complex BFISAR signal presented by (a) real and (b) imaginary parts.

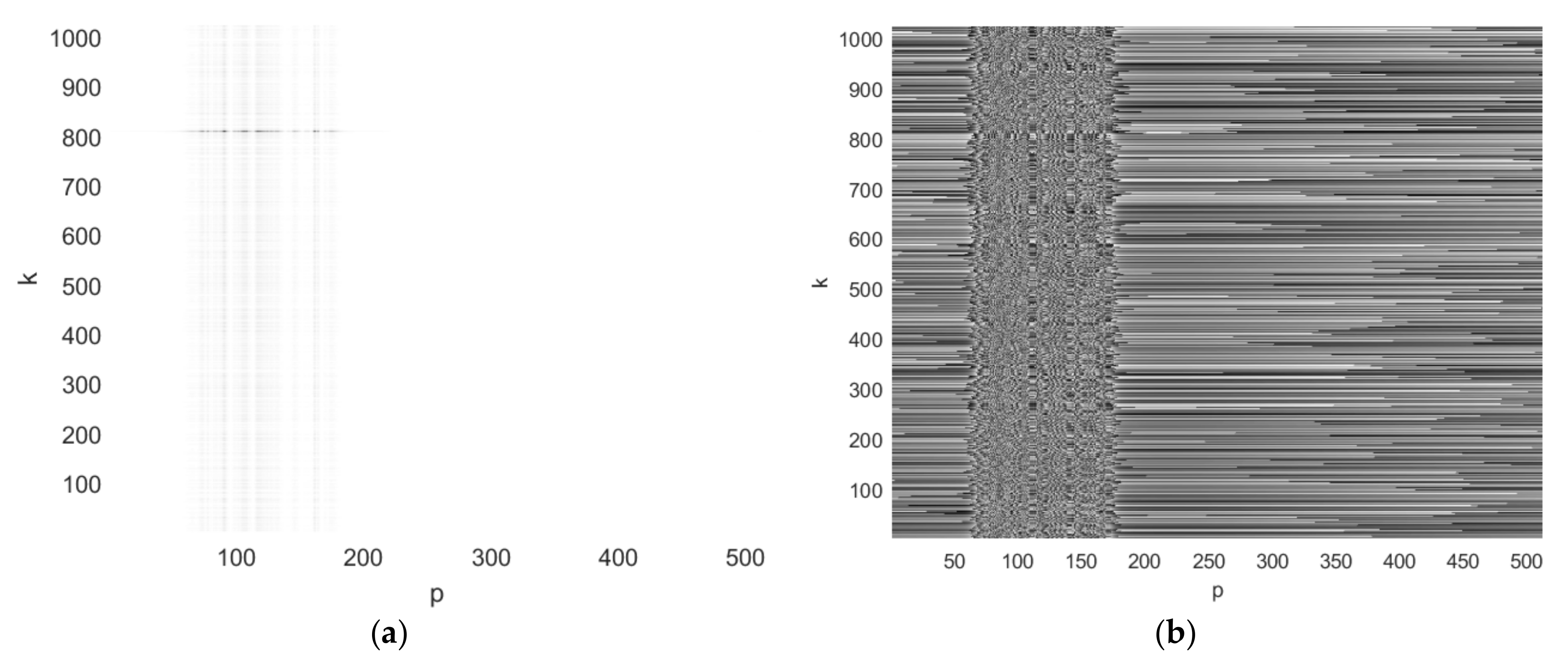

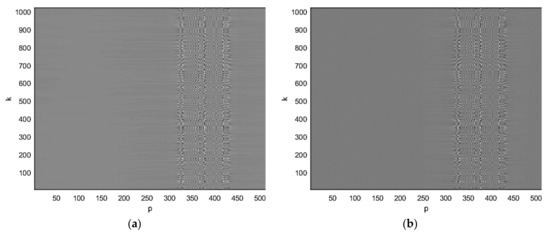

The first step of the image reconstruction is the range compression of the BFISAR signal. The pseudo code of the range compression realized by the first inverse fast Fourier transform is presented in Appendix A. The real (a) and imaginary (b) parts of the complex BFISAR signal compressed in the range direction are presented in Figure 4. It can be seen that the interfering structure of the BFISAR signal occupies a particular area in the range-compressed signal.

Figure 4. BFISAR signal after range compression: (a) real and (b) imaginary parts.

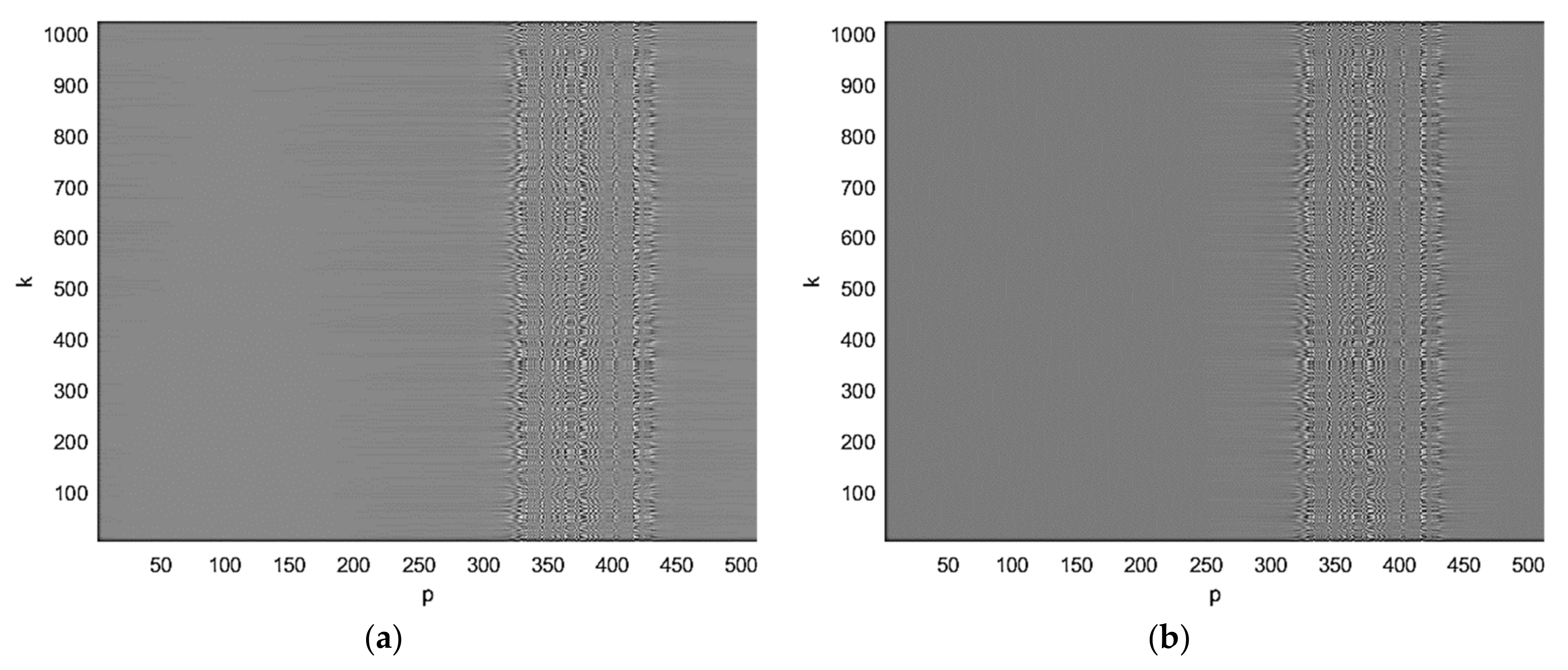

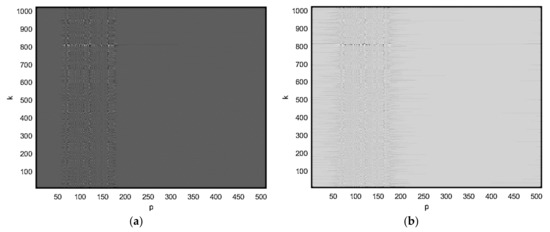

The second step of the image reconstruction is the azimuth compression of the range-compressed BFISAR signal. The pseudo code of the azimuth compression realized by the second inverse fast Fourier transform is presented in Appendix A. The quadrature components of the final BFISAR complex image, i.e., the real (a) and imaginary (b) parts of the azimuth-compressed BFISAR signal obtained after range compression, are presented in Figure 5.

Figure 5. BFISAR complex image after azimuth compression and shifting: (a) real and (b) imaginary parts.

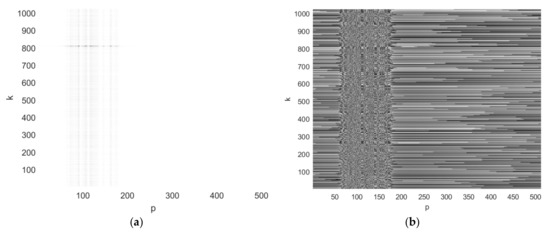

In Figure 6, the amplitude and phase of the final BFISAR complex image extracted from the DVB-T BFISAR signal by applying all steps of the imaging algorithm are depicted.

Figure 6. DVB-T BFISAR complex image: (a) amplitude image and (b) phase image.

The final amplitude image of the object has poor range resolution and only moderate azimuth resolution. The low range resolution is due to the narrow frequency bandwidth of the DVB-T waveform, MHz, corresponding to a range resolution of 20 m. In addition, the DVB-T waveform, like a stepped-frequency modulation waveform, is characterized by a comparatively high level of side lobes and a narrow main lobe, as can be seen in Figure 5 and Figure 6b. The low intensity of the scattering points is due to the high side-lobe level and the sparse structure of the DVB-T coefficients, whose absolute values randomly take zero values. This is also why the azimuth resolution is not fully satisfactory. The impact of noise on the object’s image was not considered [43]. This important issue is the subject of future study.

6. Discussion

An analytical and geometrical approach was applied to describe the BFISAR scenario. Dynamic position vectors of the object’s mass-center and scattering point from the object space were derived. Based on the mathematical description of the DVB-T waveform, a model of the BFISAR signal reflected from a 3-D object was created and analyzed. BFISAR signal synthesis was interpreted as a spatial transformation of the 3-D object’s image function into a 2-D signal function with a projective phase operator. Based on the Taylor expansion of the BFISAR signal phase, linear and higher-order phase terms were defined and physically interpreted. The linear phase terms have a Fourier structure and define an object motion of first order, i.e., radial displacement. The higher-order terms define an object’s motion of higher order, i.e., tangential and radial complex maneuvering. In other words, the BFISAR signal formation is a 2-D Fourier transformation of the 3-D image function with a higher-order phase correction.

The image reconstruction is an inverse operation, i.e., an inverse spatial transformation of the 2-D signal function into a 2-D image function with an inverse projective phase operator with the same structure. Thus, the image reconstruction can be interpreted as an inverse 2-D Fourier transformation of the higher-order phase-compensated BFISAR signal.

The analytical description of the BFISAR image allows the derivation of the image reconstruction algorithm, including higher-order phase compensation of the BFISAR signal, range compression by the inverse Fourier transform in the range direction, and azimuth compression by the inverse Fourier transform in the azimuth direction of the range-compressed signal. The complex image preserves only phases defined by distances to scattering points at the moment of imaging. The higher-order terms’ coefficients are calculated iteratively using an image quality-evaluating cost function.

7. Conclusions

BFISAR geometrical and kinematical features, models of the waveform and signal structure, and an algorithm for extraction of the image were the focus of the present study. A BFISAR scenario with a DVB-T transmitting station of opportunity, a receiver, and an object crossing the baseline was analytically described by kinematical and position vector equations. The DVB-T waveform and BFISAR signal were defined by mathematical expressions. Based on the model of the BFISAR signal, an algorithm for image extraction was derived. Transformation of the 3-D coordinates of the scattering points into their 2-D coordinates on the imaging plane was described in detail. The imaging algorithm includes the following steps: signal compression in the range direction by inverse Fourier transformation, and compression in the azimuth direction by inverse Fourier transformation. The image is then improved by compensation of the phases induced by object motion of higher order. To justify the correctness of the analytical and geometrical models describing the BFISAR scenario, waveform, signals, and the algorithm for extraction of the object image, graphical results of the simulation experiment were provided.

Author Contributions

Conceptualization, methodology, formal analysis, investigation, writing—original draft preparation, writing—review and editing final manuscript, A.D.L.; data curation and processing, software implementation, T.P.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Pseudo code for calculation of the DVB-T coefficients c(m, l, k):

fqam = 20*10^8;

quadrature = nchoosek([-4,-2,2,4],2);

qam_coeff = [2 2 4 4 -2 -2 -4 -4 -2 -2 -4 -4 2 2 4 4; 2 4 2 4 2 4 2 4 -2 -4 -2 -4 -2 -4 -2 -4];

am = sqrt(qam_coeff(1,:).^2 + qam_coeff(2,:).^2);

ph = exp(1j.*(2.*pi.*fqam + atan2(qam_coeff(1,:), qam_coeff(2,:)))); % phase in radians; atan2 keeps the quadrant

qam = am.*ph;

c_mlk = zeros(M,68,6817);

pilot = [0 48 54 87 141 156 192 201 255 279 282 333 432 450 483 525 531 618 636 714 759 765 780 804 873 888 918 939 942 969 984 1050 1101 1107 1110 1137 1140 1146 1206 1269 1323 1377 1491 1683 1704 1752 1758 1791 1845 1860 1896 1905 1959 1983 1986 2037 2136 2154 2187 2229 2235 2322 2340 2418 2463 2469 2484 2508 2577 2592 2622 2643 2646 2673 2688 2754 2805 2811 2814 2841 2844 2850 2910 2973 3027 3081 3195 3387 3408 3456 3462 3495 3549 3564 3600 3609 3663 3687 3690 3741 3840 3858 3891 3933 3939 4026 4044 4122 4167 4173 4188 4212 4281 4296 4326 4347 4350 4377 4392 4458 4509 4515 4518 4545 4548 4554 4614 4677 4731 4785 4899 5091 5112 5160 5166 5199 5253 5268 5304 5313 5367 5391 5394 5445 5544 5562 5595 5637 5643 5730 5748 5826 5871 5877 5892 5916 5985 6000 6030 6051 6054 6081 6096 6162 6213 6219 6222 6249 6252 6258 6318 6381 6435 6489 6603 6795 6816] + 1;

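c_mlk(1:M,:,pilot) = 4/3; % pilot already holds 1-based carrier indices

data = find(c_mlk == 0); % linear indices of the non-pilot (data) carriers

for f = 1:length(data)

c_mlk(data(f)) = ph(randi(16)); % random symbol, uniform over the 16 phase values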

end

Pseudo code for calculation of coordinates and distances of scattering points:

p_v = (0:N-1); % N is the number of emitted segments.

Xs = -kron(x00,ones(num,N)) + kron(xrs,ones(num,N)) - kron(Vx*(N/2-p_v)*Tp,ones(num,1)) + kron(transf_xyz(1,:),ones(N,1)).';

Ys = -kron(y00,ones(num,N)) + kron(yrs,ones(num,N)) - kron(Vy*(N/2-p_v)*Tp,ones(num,1)) + kron(transf_xyz(2,:),ones(N,1)).';

Zs = -kron(z00,ones(num,N)) + kron(zrs,ones(num,N)) - kron(Vz*(N/2-p_v)*Tp,ones(num,1)) + kron(transf_xyz(3,:),ones(N,1)).';

Xr = kron(xrr,ones(num,N)) - kron(x00,ones(num,N)) - kron(Vx*(N/2-p_v)*Tp,ones(num,1)) - kron(transf_xyz(1,:),ones(N,1)).';

Yr = kron(yrr,ones(num,N)) - kron(y00,ones(num,N)) - kron(Vy*(N/2-p_v)*Tp,ones(num,1)) - kron(transf_xyz(2,:),ones(N,1)).';

Zr = kron(zrr,ones(num,N)) - kron(z00,ones(num,N)) - kron(Vz*(N/2-p_v)*Tp,ones(num,1)) - kron(transf_xyz(3,:),ones(N,1)).';

Rs = abs(sqrt((Xs).^2 + (Ys).^2 + (Zs).^2));

Rr = abs(sqrt((Xr).^2 + (Yr).^2 + (Zr).^2));

t = (Rs + Rr)./c; % Calculation of signals time delays

t = sort(t); % Sort the delays in ascending order (along each column)

Pseudo code for calculation of the complex BFISAR signal:

S = zeros(N,Kp); % Preallocate the signal matrix (azimuth x range)

for q = 1:num

for p = 1: N

for kp = 1: Kp % Kp is the number of range samples.

S(p,kp,1) = S(p,kp,1) + a*c_mlk(m,l,kp)*exp(1j*2*pi*((Wc + (kp-1)/Tu)*(-t(q,p))-((kp-1)/Tu)*(delta + l*Ts + 68*m*Ts))); % Demodulated BFISAR signal

end

end

end

Pseudo code for extraction of the complex BFISAR image:

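A1 = fft(S); % Fourier transform along the first dimension of S (index p)

A2 = fft(A1'); % Conjugate transpose, then Fourier transform along the other dimension (index kp)

A3 = fftshift(A2); % Move the zero-frequency component to the image centre

absolute = abs(A3); % Image amplitude

phase = angle(A3); % Image phase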

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).