1. Introduction

Improving the immersion and presence of users is a principal issue in virtual reality (VR), and several studies have explored this subject. Most of these studies have focused on improving the immersion and presence of head-mounted display (HMD) users. Recently, there have been proposals [1] to enhance the immersion and presence of non-HMD users so that both types of users can share a co-located, although asymmetric, VR experience. Such proposals are driven by the different engagement levels required by various virtual environment (VE) participants. Numerous VR applications and scenarios have been employed in diverse fields such as education, health care, physical training, and home entertainment. In these applications, in addition to the participating HMD users, the proportion of non-HMD users who experience the VR environment via a desktop PC or projector display [2] is also large and increasing. In particular, given the popularity of social VR applications such as VRChat [3], many users who do not wear an HMD device for personal reasons (such as VR sickness, unaffordable cost, inconvenient locations, or preference for a brief VR experience) may still want to participate. Enabling non-HMD users to engage in a VE and improving their immersion and presence have therefore become critical issues.

Non-HMD and HMD users have asymmetric experiences, devices, and roles in immersive VR. In a previous study [4], asymmetric collaboration was divided into low, medium, and high levels of asymmetry. Among these, high-level asymmetry refers to a substantial difference in the VR experience of HMD and non-HMD users, such as in the verbal conveyance of information. In contrast, the difference between HMD and non-HMD users in low-level asymmetry can be small, such as the non-HMD users being able to change the perspective or influence the environment. Setups with different levels of asymmetry influence the capability of users to complete tasks in a VE in different ways, thereby affecting presence and immersion. The authors of [5] proposed an asymmetric user interface to enhance the interaction between non-HMD and HMD users and to provide non-HMD users with first- and third-person perspectives to enhance user immersion. The ShareVR study [1] explored the effectiveness of co-located asymmetric interaction between HMD and non-HMD users and used a tracked display and floor projection to visualize the virtual space for the non-HMD users, thereby increasing their immersion and enjoyment. In these studies, because both types of users could change the perspective and influence the environment, the related setups correspond to the low-asymmetry realm. However, because the non-HMD visual display is generally a fixed desktop PC [5] or a wired tracked display [1] instead of a portable wireless device, non-HMD users in these studies cannot easily engage with the VE from an arbitrary location. In addition, realistic locomotion contributes substantially to immersion and presence in VR [6]. In existing methods, a non-HMD user navigating a VE usually employs keyboards and controller devices, or their position is tracked via an external projection sensor [2,7]. These methods are more likely to cause simulation sickness and general dissatisfaction, as the body presence of non-HMD users is low and the tracking equipment can be burdensome and/or expensive. Moreover, nearly all these studies may apply well to one-to-one environments involving one HMD and one non-HMD user; however, they have limited applicability in one-to-many environments (one HMD user and multiple non-HMD users) because of the size of the real activity space or the restricted projection range of the projectors used.

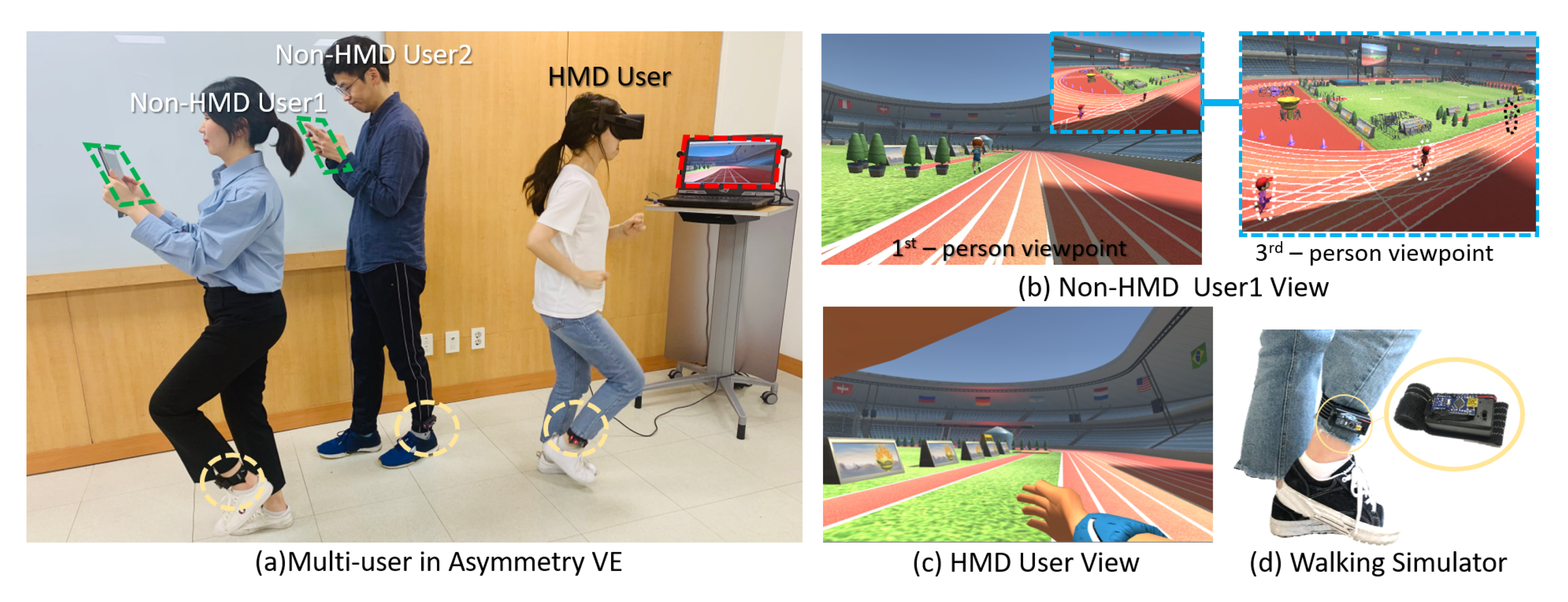

In this paper, we propose a low-asymmetry interface to enable an increased presence of non-HMD users in a locomotion VE, so that both types of users can experience similar presence and enjoyment, and to enable multiple non-HMD users to engage in the VE in any location. In the proposed system, to ensure the freedom of a non-HMD user’s viewing perspective, we provide a portable mobile device as a visual display and track the non-HMD user’s head direction via the gyro sensor of the mobile device. Inspired by [8] (which presented a walking simulator device for HMD users to reduce VR sickness and enable walking realism), we provide a walking simulator for HMD users and a mobile device for non-HMD users, enabling physical movement to be translated to movement in the VE for all users. We simplified the walking simulator and algorithm proposed by [8] to reduce the burden of wearing the simulator and to measure the user’s walking motion more effectively. In addition, while using the proposed low-asymmetry interface, both HMD and non-HMD users can experience the same level of involvement in the locomotion VE because the interface can provide the same first-person viewpoint and natural walking interaction even to non-HMD users. Such users can then become the main actors, similar to HMD users, instead of playing secondary roles or acting as observers; thus, a comparable presence of the two types of users is achieved in the virtual world.

To demonstrate that our low-asymmetry interface can enable an increased presence of non-HMD users, on par with that of HMD users, and enhance the enjoyment of the non-HMD users by providing physical navigation ability to the VE participants, we performed a case study to evaluate the user experience. Our experiments involved a simple locomotion scenario with both cooperation and competition modes to evaluate the effectiveness of the proposed interface. Moreover, the scenario supports open participation, the hardware equipment is easy to manufacture and is wireless for non-HMD users, and the non-HMD users have no positional limitations with regard to engagement. Therefore, the proposed setup can be employed in one-to-many HMD to non-HMD user environments (as shown in Figure 1).

In summary, our contributions are as follows:

- We propose a low-asymmetry interface for increasing the presence of non-HMD users for a shared VR experience with HMD users. By using a portable mobile device and a convenient walking simulator, non-HMD users can have their own viewpoints and realistic physical movement experiences in the VE.
- We conducted a user study in which HMD and non-HMD users engaged in relationships at the same level. The experiments confirmed that non-HMD users feel a presence similar to that of HMD users when performing equivalent tasks in a VE.
- Our system offers a one-to-many participation experience with one HMD user and multiple non-HMD users. Our experiments show that this can satisfy non-HMD users in terms of increased enjoyment.

3. Low-Asymmetry Interface

Our key idea is to increase the presence of non-HMD users by building a low-asymmetry interface that will lead to non-HMD users having a similar presence to that of HMD users. For visualization, we provided a portable mobile device to each non-HMD user to enable them to have an independent perspective and direction of movement. To incorporate movement, we provided both HMD and non-HMD users with a convenient single-leg walking simulator that ensured that the actual physical movement remained consistent with the movement in the VE. In addition, because they do not wear immersive headsets, non-HMD users may misjudge walking distances in the virtual space relative to those in reality while interacting with locomotion VR content. Therefore, we provided non-HMD users with a third-person viewpoint (an overhead view) to enable them to estimate distances in the VE, thereby improving their immersion (Figure 1b). To investigate the effectiveness of the proposed low-asymmetry system, we conducted experiments with a simple application and simple rules. In this application, both HMD and non-HMD users are the main actors, enabling their virtual experiences to be compared fairly.

In the remainder of this section, we first describe immersive locomotion interactions in the low-asymmetry system (see Section 3.1). Next, we describe the viewpoint provided to non-HMD users (see Section 3.2). Finally, the implementation of the system is discussed in Section 3.3.

3.1. Immersive Locomotion Interaction

In a VE, users’ movements constitute fundamental VR interactions. Tasks in which user movement is controlled with VR controllers can cause “VR sickness”, thereby decreasing immersion. This is caused by differences among the movement frequencies of the eyes, body, and controller. Several studies [8,43] have demonstrated that immersion improves when movement is detected through a walking simulator or when a user’s movement is tracked with a projection sensor. Of the two approaches, directly wearing a walking simulator can allow for a wider range of movements than projector tracking, particularly when using a treadmill-style walking simulator. Because the user’s movement can be detected in situ, there is no limitation on the size of the real physical space, enabling free movement within the VE. This has been verified experimentally [8].

In this work, we employed an easy-to-wear treadmill-style walking simulator similar to that proposed by [8]. However, we simplified the walking decision algorithm from the original two-leg method to a single-leg method. This means that we only detect the movement of one leg (leg one), and the movement of the other leg (leg two) is derived from the detected result of leg one and the corresponding movement (as shown in Figure 2). This is because users’ legs are usually in continuous alternating movement while walking, excluding slow-motion cases. The time difference between each leg’s stride when walking or running normally is very short (we observed the average time to be approximately 1 s), which means that single-leg-based walking estimation involves only a small error. The approach thus reduces the user’s equipment burden while still detecting the walking movement effectively. We evaluate the usability and effectiveness of the proposed single-leg-based walking decision approach in Section 4.2.

Movement detection is performed with an MPU-6050 gyro sensor, which calculates the gradient value along the x (roll), y (pitch), and z (yaw) axes, and the value along the x-axis is used to judge whether walking has occurred [8]. From these measurements, we detected the axis gradient for each frame, and if the difference between the current and previous frames was greater than a specified threshold value (we used 0.5), we evaluated the movement state as true. Conversely, if the difference was less than the threshold value, the movement state was considered false. The full working of our single-leg-based in-place walking detection algorithm is shown in Algorithm 1.

In addition, while the movement state was true (while walking or running), we used the user’s calculated difference value (scaled by a weight; we used 0.01) as the speed of movement to calculate the change in distance in the VE. The user’s displacement value $D_i$ corresponding to each frame $i$ is given as

$$D_i = \vec{f}_i \, v_i \, \Delta t$$

Here, $\vec{f}_i$ is the forward direction of the user camera, $v_i$ is the speed of movement, and $\Delta t$ is the time step. The new position of the user in the VE is calculated as $P_i = P_{i-1} + D_i$.
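The per-frame position update described above (displacement equals the camera's forward direction multiplied by the movement speed and the time step) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the `Vec3` type, the function names, and the normalization of the forward vector are assumptions; only the weight of 0.01 comes from the text.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """Tiny 3D vector type (hypothetical helper, not from the paper)."""
    x: float
    y: float
    z: float

    def scaled(self, k: float) -> "Vec3":
        return Vec3(self.x * k, self.y * k, self.z * k)

    def plus(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def normalized(self) -> "Vec3":
        length = math.sqrt(self.x ** 2 + self.y ** 2 + self.z ** 2)
        if length == 0.0:
            return Vec3(0.0, 0.0, 0.0)  # no direction, so no movement
        return self.scaled(1.0 / length)


SPEED_WEIGHT = 0.01  # weight applied to the motion value (value reported in the text)


def displacement(forward: Vec3, motion_value: float, dt: float,
                 weight: float = SPEED_WEIGHT) -> Vec3:
    """Displacement for one frame: forward direction * speed * time step."""
    speed = weight * motion_value  # weighted roll difference used as speed
    return forward.normalized().scaled(speed * dt)


def step_position(position: Vec3, forward: Vec3, motion_value: float,
                  dt: float) -> Vec3:
    """New position = previous position + displacement of this frame."""
    return position.plus(displacement(forward, motion_value, dt))
```

When the movement state is false the motion value is zero, so the same update leaves the position unchanged without a separate branch.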

| Algorithm 1. Single-leg-based in-place walking detection algorithm. |
| --- |
| Value ← user’s motion value (leg with walking simulator) |
| State ← user’s motion state (leg with walking simulator) |
| Roll ← roll gradient value of gyro sensor (leg with walking simulator) |
| T ← threshold for identifying movement (leg with walking simulator) |
| Value_L2 ← user’s motion value (leg without walking simulator) |
| State_L2 ← user’s motion state (leg without walking simulator) |
| procedure MOTION DECISION(Value, State, Roll, T) |
|     curr_Roll ← current roll gradient value |
|     pre_Roll ← previous roll gradient value |
|     curr_Roll = Roll |
|     if abs(pre_Roll − curr_Roll) > T then |
|         Value = abs(pre_Roll − curr_Roll) |
|         State = True (Walking) |
|     else |
|         Value = 0 |
|         State = False (Stop) |
|     end if |
|     pre_Value ← previous user’s motion value (leg with walking simulator) |
|     pre_State ← previous user’s motion state (leg with walking simulator) |
|     Value_L2 = pre_Value |
|     State_L2 = pre_State |
|     pre_Value = Value |
|     pre_State = State |
|     pre_Roll = curr_Roll |
| end procedure |
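The single-leg decision procedure can be sketched in Python as below. This is an illustrative sketch under stated assumptions, not the authors' code: the class and field names are hypothetical, the current roll reading is passed in by the caller once per frame, and the first frame (where no previous reading exists) is treated as "no movement", a case the listing does not specify. The threshold of 0.5 is the value reported in the text.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

THRESHOLD = 0.5  # roll-difference threshold reported in the text


@dataclass(frozen=True)
class LegState:
    """Motion value and walking flag for one leg (hypothetical container)."""
    value: float = 0.0
    walking: bool = False


class SingleLegWalkDetector:
    """Detects walking from one instrumented leg and infers the other leg."""

    def __init__(self, threshold: float = THRESHOLD) -> None:
        self.threshold = threshold
        self.prev_roll: Optional[float] = None  # roll reading of previous frame
        self.prev_leg1 = LegState()             # leg-one result of previous frame

    def update(self, roll: float) -> Tuple[LegState, LegState]:
        """Process one frame; returns (leg with simulator, leg without)."""
        # Assumption: on the very first frame there is nothing to compare with.
        diff = 0.0 if self.prev_roll is None else abs(self.prev_roll - roll)

        if diff > self.threshold:
            leg1 = LegState(value=diff, walking=True)
        else:
            leg1 = LegState(value=0.0, walking=False)

        # The uninstrumented leg repeats what leg one did one frame earlier.
        leg2 = self.prev_leg1

        # Remember this frame for the next call.
        self.prev_leg1 = leg1
        self.prev_roll = roll
        return leg1, leg2
```

Keeping the previous-frame state inside the detector object means the caller only has to feed in one roll reading per frame, which mirrors how the listing carries `pre_Roll`, `pre_Value`, and `pre_State` between invocations.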

We used a Bluetooth module, HC-06, to transfer the data (gradient value of axis) captured by the MPU-6050 gyro sensor. Because the HMD and non-HMD users used different devices, the HMD data were received by the master device connected via a USB cable [8], whereas non-HMD users directly used Bluetooth in their portable mobile devices to receive data, as shown in Figure 3. Therefore, by employing mobile devices and walking simulators, our system enables a non-HMD user to navigate physically in the VE with freedom of movement, thereby increasing the immersion and presence experience.

3.2. Viewpoint for Non-HMD Users

Because non-HMD users do not wear headset devices, when walking with HMD users in a co-located environment, their field of vision will include the HMD user in the actual physical space. When moving in the VE, being presented with both the actual physical distance and the virtual distance may cause feelings of uncertainty for non-HMD users, which distracts from an immersive experience.

To decrease this influence for non-HMD users, we provide not only a first-person viewpoint but also a third-person viewpoint for reference. This third-person viewpoint of the scene is provided via a small window in which non-HMD users can observe their relative distance from the HMD users in the virtual space, which improves their assessment of distance (as shown in Figure 4). In addition, when non-HMD users remotely engage in the VE with HMD users, confirming their position in the VE through a third-person viewpoint helps improve their awareness and understanding of the activities of other users in the VE, thereby increasing immersion and co-presence. Furthermore, we added a text-based UI to alert the user if any other user is close by (as shown on the left side of Figure 4). The distance between users is calculated from their position coordinates in the VE (x- and z-axes). When this distance approaches a threshold value, the text provides a hint to the users.
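The proximity hint can be illustrated with a short sketch that measures the distance between two users on the ground plane (x and z coordinates only) and returns a message when they are close. The function names, the message wording, the default threshold of 2.0, and the "at or below the threshold" rule are all assumptions made for illustration; the text does not state the threshold value or the exact trigger condition.

```python
import math
from typing import Optional, Tuple

Point2D = Tuple[float, float]  # (x, z) position in the VE


def planar_distance(a: Point2D, b: Point2D) -> float:
    """Euclidean distance on the x-z plane; height (y) is ignored."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def proximity_hint(self_pos: Point2D, other_pos: Point2D,
                   threshold: float = 2.0) -> Optional[str]:
    """Return a hint string when another user is within the threshold.

    The threshold default is a placeholder, not a value from the paper.
    """
    distance = planar_distance(self_pos, other_pos)
    if distance <= threshold:
        return f"Another user is nearby ({distance:.1f} m)"
    return None  # nothing to display
```

For example, `proximity_hint((0.0, 0.0), (1.0, 0.0))` yields a hint, while two users 5 m apart produce no text.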

3.3. Implemented Experiences

The main goal of the proposed low-asymmetry interface was to enable non-HMD and HMD users to feel a similar level of presence and enjoyment. To verify the effectiveness of the proposed system, we implemented two applications (maze, racing game), and based on the two applications, three experiments were conducted to investigate our system.

For the first experiment, we designed a maze game based on a walking task to test the main functions. It compares the non-HMD users’ immersion and the system usability when moving with the single-leg walking simulator and with touch input in a low-asymmetry environment. For the second and third experiments, we designed a running race game for a comparative study with all users. The second experiment analyzed the HMD and non-HMD users’ experience of presence in a shared environment with the proposed low-asymmetry interface and a basic interface (the details are described in Section 4.3). The third experiment compared the enjoyment of the HMD user with that of the non-HMD users, and also compared all users’ enjoyment between the one-to-one and one-to-N environments. A racing game was chosen because in most multiuser collaborative applications, user relationships at the same experience level are either competitive or cooperative. We designed the racing game scenario in two forms, i.e., “general running” and “relay running”, representing the competitive and cooperative cases, respectively, for HMD and non-HMD users, and both types of users played equally influential roles (the details of the experiments are presented in Section 4.3 and Section 4.4).

4. Experiments and Analysis of Results

4.1. Environments and Participants

The application and environment developed for the HMD and non-HMD users based on the low-asymmetry system were implemented using Unity 3D 2019.2.17f1, the Oculus SDK, and the walking simulator for determining users’ walking motion presented in [8]. The functionality of the walking simulator was implemented as an Arduino sketch (Arduino IDE 1.8.12). The network that allows the HMD and non-HMD users to join the same virtual world was based on Photon Unity Networking. The mobile-device system for non-HMD users was based on Android 4.3. The PC used for the system design and experiments had an Intel Core i7-6700 CPU, 16 GB of RAM, and a GeForce GTX 1080 graphics card.

We conducted the experiments in a 7 m × 7 m room. Because the non-HMD user devices in our system are wireless and can be moved freely, we did not restrict the location of the non-HMD users. Because the VR application of our system involved movement determination, the users stood during the experiments (as shown in Figure 5).

To analyze the proposed system systematically, we recruited 12 participants (5 female and 7 male) aged 19 to 35 years (average age 24.3), all with previous VR experience. Each participant took part in all the experiments. In the first experiment, the participants were tested independently. In the second experiment, we divided the participants into six pairs. In the third experiment, we divided the participants into four groups of three.

4.2. Immersion of Non-HMD Users

Experiment: The first experiment aimed to evaluate the immersive properties and usability of the system for non-HMD users while they were moving with the walking simulator and performing touch inputs in a mobile environment. The authors of [8] analyzed the walking realism for HMD users when using a walking simulator and a keyboard, concluding that moving via a walking simulator in a maze environment is more realistic and immersive than moving via keyboard actions. We also conducted our experiment in a maze environment, but we focused on non-HMD users performing the same task of moving in a maze to reach all coins (as shown in Figure 6).

User Study: For this study, we recruited 12 participants to compare movement using the walking simulator with that via the touch input. To evaluate the experience provided by the walking simulator, in the first survey we asked three questions taken from [8,44] to analyze the immersive quality for non-HMD users. Responses were given on a 5-point Likert scale ranging from 1 (not at all) to 5 (extremely). We treated the scale as reflecting an underlying continuous measure and assigned equally spaced scores to the response categories.

- Q1. How easy was it to control movement in the VE?
- Q2. Was the movement accurately controlled in the VE?
- Q3. Did you feel that the VE provided an immersive experience?

Result Analysis: The first question checked the convenience and intuitiveness experienced by the user during movement when using the provided mobile device. According to the results shown in Figure 7, the mean values for walking simulator-based and touch input-based movements were 4.316 (SD = 0.42) and 3.208 (SD = 0.33), respectively. The scores were analyzed using the independent t-test, showing that the score for walking simulator-based movement was significantly higher than that for touch input-based movement. This shows that controlling movement with a walking action is more convenient and intuitive than moving by using manual touch operations on a mobile device. The second question concerned the accurate control of movement in the VE, and the results showed that the mean values for both movement control methods were similar, that is, both methods controlled the movement accurately (see Figure 7). The third question concerned the immersive experience of the users, and the results showed that the mean value for walking simulator-based movement was higher than that for touch input-based movement. The scores were 4.208 (SD = 0.33) and 3.167 (SD = 0.39), respectively.
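For readers who want to see how an independent t statistic follows from reported means and standard deviations, a minimal sketch is given below. It uses the standard pooled-variance (Student) form. The function name is hypothetical, and the sample size of 12 per condition in the usage lines is an assumption based on the number of participants; the sketch does not reproduce the exact statistics of the study.

```python
import math
from typing import Tuple


def independent_t(mean_a: float, sd_a: float, n_a: int,
                  mean_b: float, sd_b: float, n_b: int) -> Tuple[float, int]:
    """Student's two-sample t statistic and degrees of freedom
    computed from summary statistics (pooled variance)."""
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # Pooled variance: weighted average of the two sample variances.
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    std_err = math.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    if std_err == 0.0:
        raise ValueError("standard error is zero; t is undefined")
    return (mean_a - mean_b) / std_err, df


# Q1 summary values from the text; n = 12 per condition is an assumption.
t_q1, df_q1 = independent_t(4.316, 0.42, 12, 3.208, 0.33, 12)
# Q3 summary values from the text, same assumption.
t_q3, df_q3 = independent_t(4.208, 0.33, 12, 3.167, 0.39, 12)
```

The resulting statistic would then be compared against a t distribution with the returned degrees of freedom to obtain a p-value.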

In addition to the above analysis, we conducted a user study using the system usability scale (SUS) [45] to verify the effectiveness of the single-leg-based walking decision method and to compare the two movement-control methods. The SUS questionnaire consists of 10 items rated on a 5-point Likert scale (1 to 5 points), and an SUS score of 68 is regarded as average. According to the results shown in Figure 8, the scores for walking simulator-based movement and touch input-based movement were 85.8 and 79.5, respectively. Both movement types thus achieved above-average scores, which indicates that the single-leg-based walking decision method is usable and reasonable, and the usability of the walking simulator was higher than that of the touch input. The survey results indicate that walking simulator-based movement provides higher satisfaction for non-HMD users, although the experience with touch input-based movement is comparable.
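As a reminder of how a SUS score is derived from the ten item responses, the standard scoring rule can be sketched as follows: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a value from 0 to 100. The function name is hypothetical and the example responses are made up for illustration; they are not data from the study.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute the SUS score (0 to 100) from ten 1-to-5 responses."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must be between 1 and 5")
        if item_number % 2 == 1:
            total += response - 1   # positively worded (odd) items
        else:
            total += 5 - response   # negatively worded (even) items
    return total * 2.5


# Illustrative (made-up) response sheet of one participant.
example = sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1])
```

Per-participant scores computed this way are averaged to obtain the score of each movement method.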

4.3. Presence

Experiment: This experiment analyzed the presence experienced by HMD and non-HMD users in the proposed low-asymmetry and the basic interface systems. We applied the two systems to a VR environment representing a simple running scenario (as shown in Figure 9), where both HMD and non-HMD users play equally influential roles. In these experiments, the relay running mode requires the users to be involved in a cooperative relationship. The HMD and non-HMD users stand at different starting positions in the same lane to enable relay running. We set the total distance for the relay run as 100 m (measured along the x-axis); the initial position of the non-HMD user is at the start position of the race, whereas that of the HMD user is 50 m away from the non-HMD user’s initial position. In the relay running mode, we used a text-based UI to alert the user to start running (as shown in Figure 10a).

As mentioned before, we investigated the presence experienced by both HMD and non-HMD users with equally significant roles in the low-asymmetry interface system and the basic interface system. For movement control, the basic interface provides a controller to the HMD user and a mobile tablet to non-HMD users, which they control according to touch input.

User Study: We used the presence questionnaire [44], which includes questions regarding realism, possibility of action, quality of interface, possibility of examination, and self-evaluation of performance, to analyze the HMD and non-HMD users’ presence in the shared environment. For this study, we recruited 12 participants in pairs (one HMD and one non-HMD user), with each of them having experience with both wearing and not wearing the HMD.

Result Analysis: Figure 11 shows the results of the presence questionnaire that examined the experiences of HMD and non-HMD users in the two interface systems. According to the results, the difference in the total presence experienced by the non-HMD and HMD users was not statistically significant for the low-asymmetry interface; however, in the basic interface, the experienced presence was significantly different.

Notably, the realism experienced by the non-HMD users in the low-asymmetry interface is significantly higher than in the basic interface. The term realism signifies whether it is possible to control the movement naturally and whether the VE experience is comparable with the real-world experience. Realism in controlling movement is especially important because the walking simulator detects real actions to ensure that the user’s movement in the VE is controlled by actual leg movements. Therefore, it can reduce the sense of inconsistency between the VE and the real world. Visual and perspective information is also an important factor affecting realism. Previous studies [5,46] showed that using both first- and third-person viewpoints can significantly improve the realism and presence experienced by non-HMD users. In our system, we ensured that in addition to a first-person viewpoint, non-HMD users were also provided with a third-person viewpoint, enabling them to grasp their own position in the VE and the distance from HMD users. Thus, the interference of the physical environment with the VR vision can be reduced for non-HMD users, and the experienced realism and presence can be enhanced.

Finally, for the question “How much delay did you experience between your actions and expected outcomes” in the questionnaire, we found that the low-asymmetry interface suffers from a latency problem compared with the basic interface because of the time taken to transfer the walking simulator data over a network, and the corresponding difference is significant for the non-HMD users.

4.4. Enjoyment

Experiment: In the final experiment, we considered not only the one-to-one relationship between an HMD user and a non-HMD user but also the one-to-many case of one HMD user and multiple non-HMD users in the shared VE. We analyzed the enjoyment of the users of our system via the running application, in a mode different from the relay running mode described in Section 4.3. In this experiment, we analyzed the user experiences in the general 100 m race mode. Here, the users’ relationship is competitive, and the users run from the same starting position but in different lanes.

User Study: For this study, we analyzed the users’ enjoyment by evaluating their positive feelings according to their responses to the post-game module (the questions related to the positive experience) of the game experience questionnaire (GEQ) [47]. We divided the 12 participants into four teams, each with one HMD user and two non-HMD users (see Figure 10b). Each team participated in the race game three times, with each user in a team playing the non-HMD role twice and the HMD role once.

Result Analysis: Figure 12 illustrates the results for enjoyment when HMD and non-HMD participants played in the one-to-one and one-to-two environments. As shown in Figure 12, the difference in the positive experience of non-HMD users between the one-to-one and one-to-N environments is significant; however, the difference for the HMD user between the one-to-one and one-to-N environments is not statistically significant. Furthermore, in both the one-to-one and one-to-N environments, the difference between HMD and non-HMD users is not significant.

We can conclude that in both the one-to-one and one-to-N environments, the enjoyment of non-HMD users is similar to that of the HMD user, and that the non-HMD users’ enjoyment increased in the one-to-N environment. When multiple non-HMD users engaged in the VE, the scores for both “I felt satisfied” and “I felt proud” in the questionnaire were significantly higher than when a single non-HMD user engaged. In this competitive running game, the participation of multiple users increased competitiveness. Moreover, the non-HMD users can confirm the other participants’ positions in the VE at any time through the third-person viewpoint and can observe real physical reactions when users are co-located. For these reasons, the one-to-N cases enhance the non-HMD users’ experience more than the one-to-one cases.

However, the enjoyment of the HMD user did not increase significantly. Previous work [48] has shown that designing optimized roles for HMD and non-HMD users can increase their enjoyment. Following that work [48], we consider one reason for this result to be that the experiment content and the user roles were designed to be relatively simple. In this experiment, all users were assigned the same role. As mentioned before, the third-person viewpoint enables the non-HMD users to confirm the number and positions of the participants in the VE at any time, whereas the HMD user has difficulty perceiving the number and positions of other participants who are behind them. The HMD user is thus affected to a degree by the content and the single role, so the difference between the one-to-one and one-to-many cases was not significant in this experiment.

4.5. Discussion

In this study, the main goal was to examine the effect of a low-asymmetry interface on the presence and experience of all users in a shared environment. First, we analyzed the proposed single-leg-based walking decision method and found that its usability in the walking-detection task, as well as the users’ immersion, was higher with physical navigation than with virtual navigation (touch-input-based movement). In addition, we compared the proposed interface with the basic interface to analyze the experience of presence. The results show that non-HMD users experience a presence similar to that of the HMD users in our low-asymmetry interface. In terms of realism, there is a significant difference between the proposed interface and the basic interface. We also compared the one-to-one and one-to-two engagement cases to analyze the experience of enjoyment. According to the results, the experiences of HMD and non-HMD users were similar in both environments. In the one-to-many environment, as more participants enriched the content, the enjoyment of non-HMD users was significantly more positive than that in the one-to-one environment.

These findings confirm that a low-asymmetry interface can increase the presence of non-HMD users such that it is comparable to that of HMD users. In addition, these findings demonstrate that in the proposed system, the enjoyment of non-HMD users increases as more non-HMD users participate. Furthermore, because the non-HMD users’ enjoyment is similar to that of the HMD user, the findings indicate that the low-asymmetry interface can provide immersion to non-HMD users in the VE.

When compared with existing approaches [1], our system enables open participation. Because the device used by non-HMD users in our system has no space restrictions, they can either be co-located or remote, enabling a shared VR experience with HMD users in one-to-many environments. A non-HMD device impacts the field of vision of the non-HMD users and decreases their immersion; however, by using a portable mobile device, non-HMD users can actively perform physical interactions (e.g., physical touch) with HMD users in a co-located shared VR experience, which can improve the feeling of presence and increase the non-HMD users’ enjoyment. Although only simple scenarios were examined in our experiment, the locomotion-based shared experience achieved in our system can be subsequently applied to a wide variety of applications, such as a competitive game of PacMan or a running game, or to cooperative tasks such as a VR maze and relay running. Here, non-HMD users can contribute independently or collaboratively with HMD users in the shared environment.

5. Conclusions

In this paper, we propose a low-asymmetry system that can improve the immersion and presence experienced by non-HMD users in a VR environment shared with HMD users. Our proposal includes providing a portable mobile device for non-HMD users to ensure that they have their own first-person viewpoint and a walking simulator for all users to ensure movement realism. Because the proposed walking module supports in-place movement, even a small physical experimental space can allow wide-ranging movement in the VE. Further, as we are able to utilize single-leg-based walking measurements, the walking simulator itself is more convenient and simpler than those used in most existing systems. Using a portable mobile device and a walking simulator can offer non-HMD users similar perspectives and movement capabilities as those available to HMD users, enabling non-HMD users to play the same roles as HMD users in an equally rewarding shared environment. Because the devices used in our system for non-HMD users are low-cost and portable, our system supports simultaneous participation by multiple non-HMD users. To investigate the HMD and non-HMD user experiences of the proposed system with respect to presence and immersion, we created a simple running application in a low-asymmetry VR environment in which the main interaction is the users’ movement. According to the experimental results, the non-HMD users reported similar levels of immersion and presence to those experienced by the HMD users.

One of the limitations of our current system is that it is restricted to a single HMD user to avoid the chance of a physical collision between users in a co-located environment. In addition, immersive interaction via hands was not considered. We aim to address the hand interaction issue in future work by incorporating a hand-tracking sensor such as the Leap Motion controller and a system for hand gripping [49]. Moreover, the third-person viewpoint with a fixed position, which was used in this study, cannot be applied to large-scale scenes. In future work, we will improve the third-person viewpoint to a movable view suitable for a large-scale scene. We also aim to improve the walking simulator so that it can determine more varied motions. Finally, because the proposed low-asymmetry interface can be adopted in a wide variety of basic movement interactions, we will apply it to various popular VR applications to improve the quality of our experimental results.