A Fast and Robust Lane Detection Method Based on Semantic Segmentation and Optical Flow Estimation

Abstract

:1. Introduction

2. Materials and Methods

2.1. Preprocessing

2.2. Lane Discrimination

2.2.1. Segmentation Network

2.2.2. Optical Flow Estimation Network and Training Method

2.3. Wrapping Unit Based on Bilinear Interpolation

2.4. Optimizing Convolutional Layer

2.5. Adaptive Scheduling Network

2.6. Mapping

3. Experiments

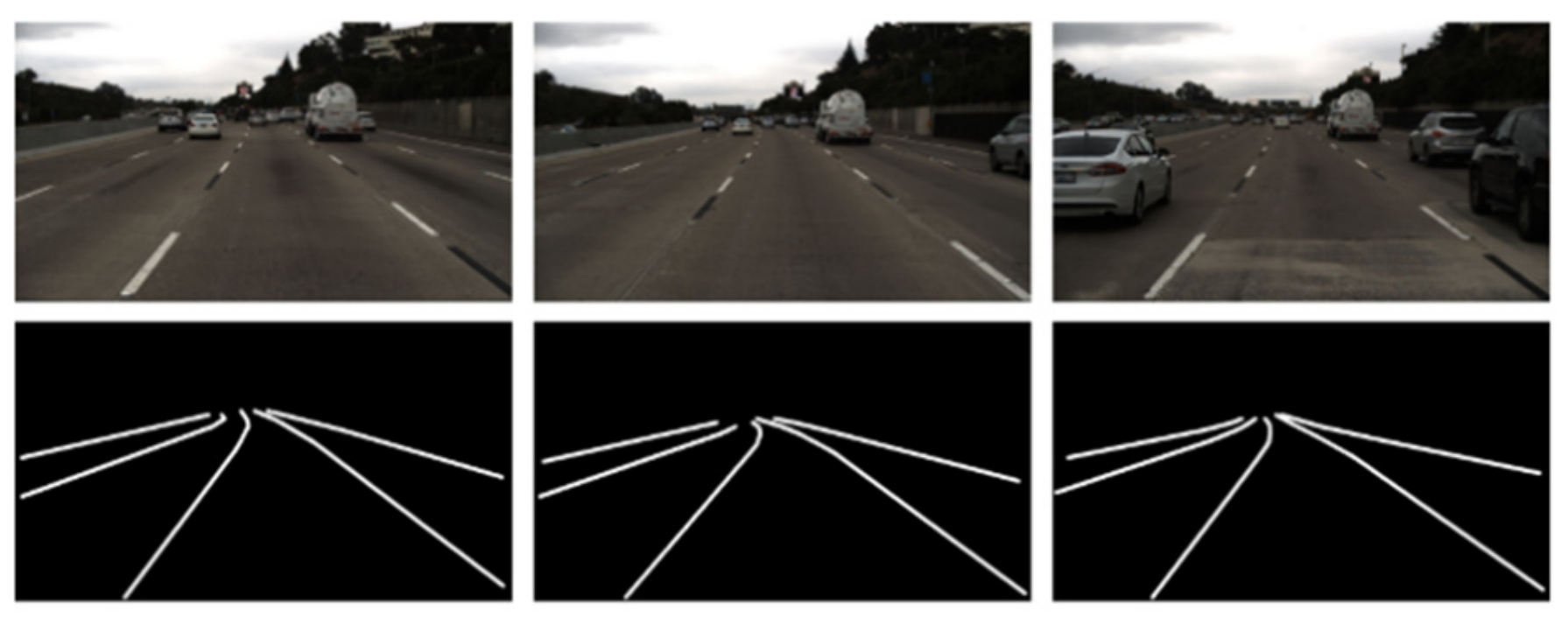

3.1. Experiment of Lane Segmentation Model

3.2. Experiment of Lane Discrimination

3.3. Experiment of Mapping

- Lane segmentation;

- Lane discrimination;

- Mapping pixels from the pixel coordinate system to the camera coordinate system;

- Curving fitting based on least square method; and

- Calculating the distance and error;

4. Conclusions and Discussions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12. [Google Scholar]

- Zhou, S.; Jiang, Y.; Xi, J.; Gong, J.; Xiong, G.; Chen, H. A novel lane detection based on geometrical model and Gabor filter. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 59–64. [Google Scholar]

- Mccall, J.; Trivedi, M. Video-based lane estimation and tracking for driver assistance: Survey, system, and evaluation. In Proceedings of the IEEE Transactions on Intelligent Transportation Systems, New York, NY, USA, 6 March 2006; pp. 20–27. [Google Scholar]

- Loose, H.; Franke, U.; Stiller, C. Kalman particle filter for lane recognition on rural roads. In Proceedings of the 2009 IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 60–65. [Google Scholar]

- Chiu, K.; Lin, S. Lane detection using color-based segmentation. In Proceedings of the 2005 IEEE Intelligent Vehicles Symposium, Las Vegas, NV, USA, 6–8 June 2005; pp. 706–711. [Google Scholar]

- López, A.; Serrat, J.; Canero, C.; Lumbreras, F.; Graf, T. Robust lane markings detection and road geometry computation. Int. J. Automot. Technol. 2010, 11, 395–407. [Google Scholar] [CrossRef]

- Teng, Z.; Kim, J.H.; Kang, D.J. Real-time Lane detection by using multiple cues. In Proceedings of the 2010 International Conference on Control, Automation and Systems, Gyeonggi-do, Korea, 27–30 October 2010; pp. 2334–2337. [Google Scholar]

- Borkar, A.; Hayes, M.; Smith, M. Polar randomized Hough Transform for lane detection using loose constraints of parallel lines. In Proceedings of the 2011 International Conference on Acoustics, Speech and Signal Processing, Prague, Czech Republic, 22–27 May 2011; pp. 1037–1040. [Google Scholar]

- Hur, J.; Kang, S.N.; Seo, S.W. Multi-lane detection in urban driving environments using conditional random fields. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium, Gold Coast, QLD, Australia, 23–26 June 2013; pp. 1297–1302. [Google Scholar]

- Neven, D.; Brabandere, B.D.; Georgoulis, S. Towards end-to-end lane detection: An instance segmentation approach. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium, Changshu, China, 26–30 June 2018; pp. 268–291. [Google Scholar]

- Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A deep neural network architecture for real-time semantic segmentation. arXiv, 2016; arXiv:1606.02147. [Google Scholar]

- Brabandere, B.D.; Neven, D.; Gool, L.V. Semantic instance segmentation for autonomous driving. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Eindhoven, The Netherlands, 21–26 July 2017; pp. 478–480. [Google Scholar]

- Ghafoorian, M.; Nugteren, C.; Baka, N.; Booij, O.; Hofmann, M. EL-GAN: Embedding loss driven generative adversarial networks for lane detection. In Proceedings of the European Conference on Computer Vision Workshops, Munich, Germany, 8–14 September 2018; pp. 256–272. [Google Scholar]

- Chen, L.; Zhu, Y.; Papandreou, G.; Schrof, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision Workshops, Munich, Germany, 8–14 September 2018; pp. 801–818. [Google Scholar]

- Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazırbas, C.; Golkov, V. FlowNet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2758–2766. [Google Scholar]

- Liu, P.; King, I.; Lyu, M.R.; Xu, J. DDFlow: Learning optical flow with unlabeled data distillation. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8770–8777. [Google Scholar]

- Yu, H.; Zhang, W. Method of vehicle distance measurement for following car based on monocular vision. J. Southeast Univ. Nat. Sci. Ed. 2012, 3, 542–546. [Google Scholar]

- Aswini, N.; Uma S, V. Obstacle avoidance and distance measurement for unmanned aerial vehicles using monocular vision. Int. J. Electr. Comput. Eng. 2019, 9, 3504. [Google Scholar] [CrossRef]

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 334–349. [Google Scholar]

- Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. ICNet for real-time semantic segmentation on high-resolution images. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 418–434. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Eindhoven, The Netherlands, 27 April 2017. [Google Scholar]

| Method | Acc% | Precision/% | Recall/% | MIoU/% | Fps |

|---|---|---|---|---|---|

| ENet [11] | 96.2 | 88.2 | 95.2 | 81.1 | 135 |

| Bisenet [19] | 96.1 | 88.5 | 95.8 | 81.3 | 106 |

| ICNet [20] | 96.3 | 88.4 | 96.1 | 81.8 | 39 |

| Deeplab [21] | 96.2 | 88.9 | 96.0 | 82.6 | 30 |

| PSP Net [22] | 96.4 | 90.2 | 96.4 | 85.6 | 21 |

| Deeplabv3plus [14] | 96.5 | 90.6 | 96.5 | 87.7 | 17 |

| Proposed method-threshold (0.978) | 96.4 | 90.0 | 96.1 | 85.9 | 47 |

| Proposed method-threshold (0.970) | 96.3 | 89.5 | 95.6 | 85.2 | 52 |

| Method | Acc/% | Precision/% | Recall/% | MIoU/% | Fps |

|---|---|---|---|---|---|

| ENet [11] | 87.8 | 75.4 | 75.9 | 72.3 | 135 |

| Bisenet [19] | 88.1 | 76.4 | 75.4 | 73.1 | 107 |

| ICNet [20] | 88.5 | 77.1 | 77.8 | 74.0 | 39 |

| Deeplab [21] | 88.8 | 78.8 | 78.4 | 74.9 | 30 |

| PSP Net [22] | 90.2 | 84.5 | 85.2 | 76.1 | 21 |

| Deeplabv3plus [14] | 94.5 | 88.3 | 87.6 | 80.1 | 17 |

| Proposed method-threshold (0.978) | 93.9 | 86.6 | 85.4 | 76.5 | 47 |

| Proposed method-threshold (0.970) | 93.1 | 85.9 | 85.1 | 76.2 | 52 |

| Dataset | Method | Accuracy/% | FDR/% |

|---|---|---|---|

| Tusimple | Proposed method | 94.2 | 5.8 |

| Aslarry | 95.6 | 4.4 | |

| Dpantoja | 95.2 | 4.8 | |

| LaneNet | 95.5 | 4.5 | |

| Self-collected | Proposed method | 90.0 | 10.0 |

| Aslarry | 84.1 | 15.9 | |

| Dpantoja | 83.8 | 16.2 | |

| LaneNet | 84.3 | 15.7 |

| Longitudinal Distance/m | Transverse Error/% |

|---|---|

| 0 | 7.6 |

| 10 | 3.0 |

| 20 | 3.2 |

| 30 | 3.4 |

| 40 | 4.0 |

| 50 | 4.3 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, S.; Luo, Z.; Gao, F.; Liu, M.; Chang, K.; Piao, C. A Fast and Robust Lane Detection Method Based on Semantic Segmentation and Optical Flow Estimation. Sensors 2021, 21, 400. https://doi.org/10.3390/s21020400

Lu S, Luo Z, Gao F, Liu M, Chang K, Piao C. A Fast and Robust Lane Detection Method Based on Semantic Segmentation and Optical Flow Estimation. Sensors. 2021; 21(2):400. https://doi.org/10.3390/s21020400

Chicago/Turabian StyleLu, Sheng, Zhaojie Luo, Feng Gao, Mingjie Liu, KyungHi Chang, and Changhao Piao. 2021. "A Fast and Robust Lane Detection Method Based on Semantic Segmentation and Optical Flow Estimation" Sensors 21, no. 2: 400. https://doi.org/10.3390/s21020400

APA StyleLu, S., Luo, Z., Gao, F., Liu, M., Chang, K., & Piao, C. (2021). A Fast and Robust Lane Detection Method Based on Semantic Segmentation and Optical Flow Estimation. Sensors, 21(2), 400. https://doi.org/10.3390/s21020400