1. Introduction

With the increasing demand for accelerated plant breeding and more efficient crop management, various phenotypic traits of plants must be measured accurately and at high throughput [1]. The rapid development of advanced sensors, automation and computation tools has further increased the capability and throughput of plant-phenotyping techniques, allowing the nondestructive measurement of complex plant parameters or traits [2]. Plant three-dimensional (3D) morphological structure is an important descriptive trait of plant growth and development, as well as of biotic/abiotic stress responses [3]. 3D plant phenotyping has great potential for multiscale analyses of the 3D morphological structures of plant organs, individuals and canopies; for building functional-structural plant models (FSPM) [4]; for evaluating the performance of different genotypes in adaptation to the environment; for predicting yield potential [5]; and for providing key technical support for the accurate management of breeding and crop production.

Different 3D sensors and imaging techniques have been developed to quantify plants’ 3D morphological structural parameters at different scales. These sensors can be classified into passive and active sensors [6]. Generally, passive sensors build a 3D model from images taken from different views. Systems developed for this purpose include an RGB camera combined with a structure-from-motion (SFM) algorithm and multiview stereo vision systems [7,8]. Rose et al. [9] found that the SFM-based photogrammetric method yielded high correlations with reference measurements and was suitable for organ-level plant phenotyping. Xiang et al. [10] developed the PhenoStereo system for field-based plant phenotyping and used a set of customized strobe lights to mitigate the influence of lighting. Rossi et al. [11] provided references for optimizing the SFM reconstruction process in terms of input and time requirements. They identified a proper balance between the number of images and their quality for efficient and accurate measurement of individual structural parameters in species with different canopy structures. However, passive-sensor methods require images with rich surface-texture features for image matching [6], and they are limited by lighting conditions and algorithmic complexity.

Active sensors acquire distance information by actively emitting signals [12]. Laser scanning is considered a universal, high-precision and wide-scale detection method for plant-growth status [5]. Paulus et al. [13] conducted a growth-analysis experiment on eight pots of spring barley plants under different drought conditions in an industrial environment. Single leaf area, single stem height, plant height and plant width were determined with a laser-scanning system combined with an articulated measuring arm. These measurements correlated highly (R², 0.85–0.97) with manual measurements. With such accuracy, they were also able to effectively monitor and quantify the growth processes of barley plants. However, the small scanning field and small arm size necessitated scans from multiple locations for whole plants, which made the system expensive and inefficient. Sun et al. [14] developed a system consisting of a 2D light detection and ranging (LiDAR) sensor and a real-time kinematic global positioning system (RTK-GPS) for high-throughput phenotyping. They built a model to obtain the height of cotton plants that accounted for factors such as angular resolution, sensor mounting height and tractor speed. This system performed well in estimating the heights of cotton plants. However, many factors such as the angular resolution and uneven ground affected the measurements, and the data were noisy, which made it impossible to accurately measure other parameters such as leaf area. Su et al. [15] proposed a difference-of-normals (DoN) method to separate corn leaves and stalks based on laser point clouds in a greenhouse. However, each scan at each position took 20 min. Ana et al. [16] proposed a vine-shaped artificial object (VSAO) calibration method, based on which they used a static terrestrial laser scanner (TLS) and a mobile scanning system (MMS) with six algorithms to determine the trunk volumes of vines in a real vineyard. The results showed that the relative errors of the different sensors, combined with different algorithms, were 2.46%–6.40%. The limitations of these two systems included long scanning time, tedious processing and sensitivity to environmental factors. Laser scanners have high detection accuracy for individual plants and groups in industrial or field environments, but many factors such as topography still affect the measurements [17]. Moreover, cost and efficiency are the main bottlenecks that restrict the application of this technology in actual production.

Some other detection methods have been proposed, and laser scanning has become a common reference for evaluating these methods [18,19,20]. Compared to laser scanning, the time-of-flight (TOF) camera has the advantages of speed, simplicity and low cost, and it has potential for use in 3D phenotyping research [20,21,22,23,24,25,26]. For example, the Microsoft Kinect is widely used as a typical TOF camera. Paulus et al. [19] showed that a low-cost system based on the Microsoft Kinect device can effectively estimate the phenotypes of sugar beets. They used the David laser scanner system as a reference method. The results showed that the Kinect performed as well as the laser scanner for sugar-beet taproots in terms of height, width, volume and surface area estimation. However, the Kinect performed poorly in estimating wheat-ear parameters because of its low resolution, while the laser scanner still performed well. The R² values of the maximum length and alpha shape volume were 0.02 and 0.40, respectively, when using the Kinect, whereas the R² values of these two parameters were greater than 0.84 for the laser scanner. Sugar beet is simple in morphology and structure; the potential of the Kinect for other plants remains to be seen. Xia et al. [27] used a mean-shift clustering algorithm to segment the leaves in depth images obtained from the Kinect, and removed the background in both RGB and depth images. Based on the adjacent-pixel gradient vector field of the depth image, they achieved segmentation of shade leaves. This approach can be effectively applied to automatic fruit harvesting and other agricultural automation tasks. However, their work focused only on a single-frame point cloud, which led to incomplete plant data. Moreover, complete plant point clouds are more complex, with more noise and a layered-points phenomenon, which their algorithm could not handle. Anduja et al. [28] proposed reconstructing maize in the field with the Kinect Fusion algorithm. They segmented maize, weeds and soil using height and RGB information and studied the correlation between volume and biomass. The correlation coefficient between maize biomass and volume was 0.77, while that between weed volume and biomass was 0.83. These correlation coefficients were lower than those in the study by Paulus et al. [19], because the complex field environment and the complexity of the plants produced rough, poor-quality point clouds and no point-cloud optimization was performed. Wang et al. [29] measured the height of sorghum in the field using five different sensors and established digital elevation models. All the correlation coefficients between the model-derived values and the manual measurements were above 0.9. They proposed that the Kinect could provide color and morphology information about plants for identification and counting. However, the data acquired by the Kinect were, again, rough and noisy, and they were not suitable for the extraction of other parameters.
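
The R² and correlation-coefficient values quoted above compare sensor-derived traits with reference measurements. As a minimal sketch of how such a value can be computed, the snippet below takes R² as the squared Pearson correlation coefficient, which is an assumption, since the cited studies may define it differently. The function name `r_squared` and the height values are hypothetical and serve only as an illustration; they are not data from any cited study.

```python
import numpy as np


def r_squared(estimated, reference):
    """Squared Pearson correlation between two equally long 1-D sequences.

    Assumption: R^2 is taken as the squared correlation coefficient of a
    simple linear relationship; the cited studies may use another definition.
    """
    x = np.asarray(estimated, dtype=float)
    y = np.asarray(reference, dtype=float)
    if x.ndim != 1 or x.shape != y.shape or x.size < 2:
        raise ValueError("inputs must be 1-D sequences of equal length (>= 2)")
    r = np.corrcoef(x, y)[0, 1]  # off-diagonal entry is Pearson's r
    return float(r ** 2)


# Hypothetical plant heights in cm (made up for illustration only).
sensor_height = [10.0, 20.0, 30.0, 40.0]
manual_height = [12.0, 22.0, 32.0, 42.0]  # constant 2 cm offset from the sensor
print(r_squared(sensor_height, manual_height))
```

In this example the sensor values differ from the manual values by a constant offset, yet the two series are perfectly linearly related. A high R² therefore indicates strong linear association, not absence of bias, so it is usually read together with an error measure.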

According to the above studies, multiple complex parameters were effectively extracted using laser scans because of the high-quality point clouds. The Kinect, by contrast, performed well in height estimation and object segmentation because these two tasks do not require high-quality data. To extract more parameters efficiently on a low-cost platform, it is necessary to obtain complete and high-quality plant 3D data using a TOF camera. However, a layered-points phenomenon commonly occurs in plant point clouds registered from multiple frames [30] because of errors from the TOF camera and the registration algorithm.

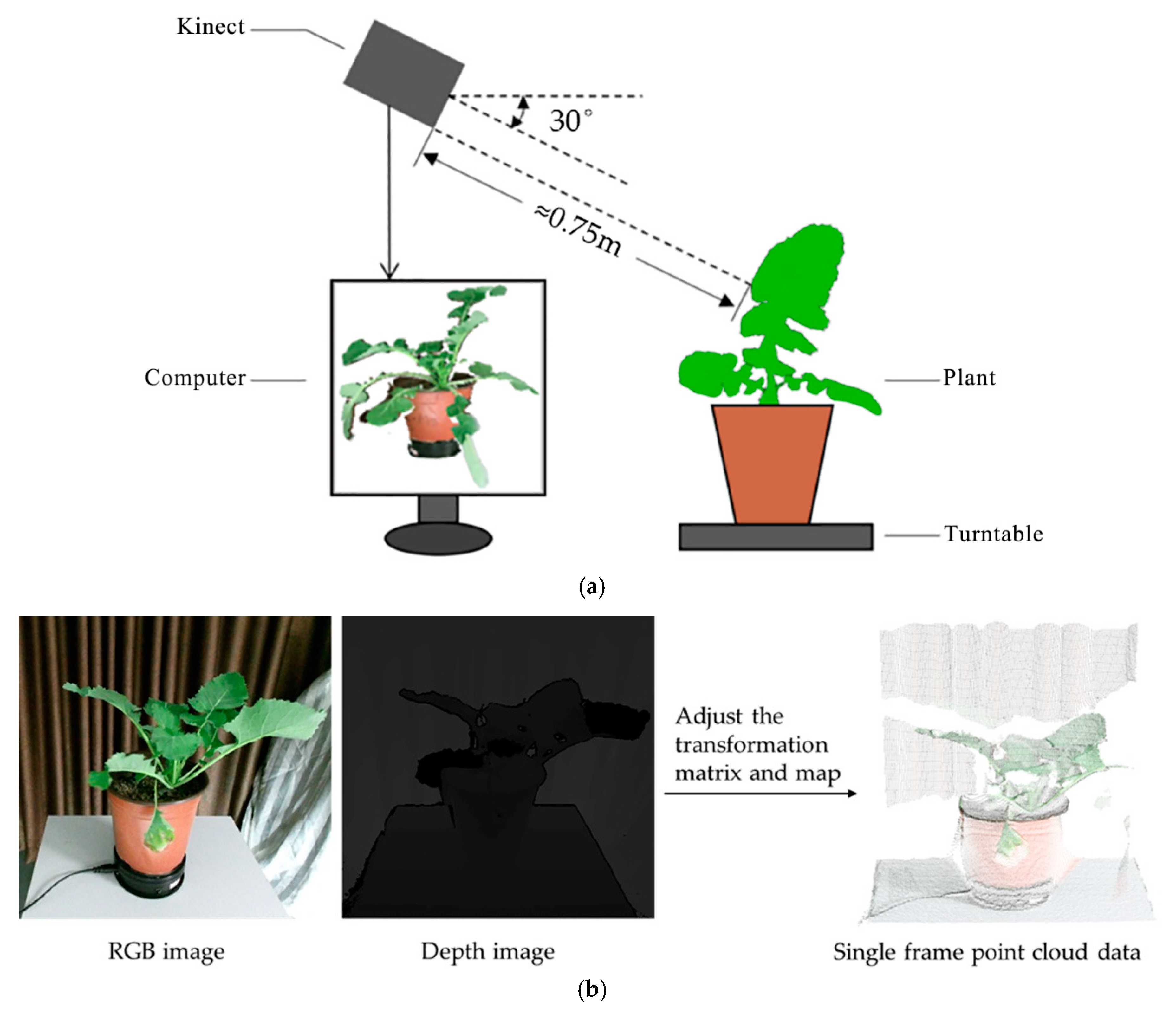

To improve the quality of the plant point cloud, we propose an optimization method to reduce the impact of noise and layered points. A simple and low-cost platform based on the Kinect was used for data acquisition, which makes the proposed method more widely applicable. In this study, we optimized the quality of single-frame point clouds by removing all types of noise while preserving the integrity of the plant data. We also eliminated the local layered-points phenomenon to improve the quality of plant point clouds registered from multiple frames.
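
To make the notion of noise removal in a single-frame point cloud concrete, the following is a minimal sketch of one generic technique, statistical outlier removal based on k-nearest-neighbor distances. It is not the optimization method proposed in this study, whose details are not given in this section. The function name `remove_statistical_outliers` and the parameters `k` and `std_ratio` are hypothetical, and the brute-force distance matrix is only practical for small clouds.

```python
import numpy as np


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbors is
    unusually large compared with the rest of the cloud.

    Generic illustration only (assumed parameters); not the method of
    this paper. Returns the kept points and a boolean keep-mask.
    """
    pts = np.asarray(points, dtype=float)
    if pts.ndim != 2 or pts.shape[1] != 3:
        raise ValueError("points must have shape (n, 3)")
    if pts.shape[0] <= k:
        raise ValueError("need more than k points")

    # Brute-force pairwise Euclidean distances: O(n^2) time and memory.
    diff = pts[:, None, :] - pts[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))

    # After sorting each row, column 0 is the zero distance to the point itself.
    knn = np.sort(dist, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)

    # Global threshold: mean plus std_ratio standard deviations.
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    keep = mean_knn <= threshold
    return pts[keep], keep


# Synthetic example: a regular 5 x 5 x 5 grid plus one distant stray point.
axis = np.arange(5, dtype=float)
grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1).reshape(-1, 3)
cloud = np.vstack([grid, [[100.0, 100.0, 100.0]]])
filtered, mask = remove_statistical_outliers(cloud)
print(cloud.shape[0], "->", filtered.shape[0])
```

A filter of this kind targets isolated stray points. It does not address the layered-points phenomenon, which arises from sensor and registration errors across frames and needs separate treatment.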