On the Development of Autonomous Vehicle Safety Distance by an RSS Model Based on a Variable Focus Function Camera

Abstract

1. Introduction

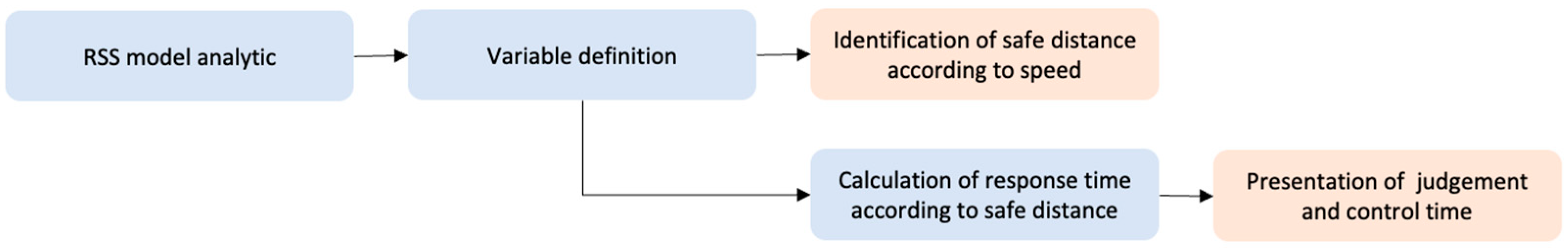

1.1. Related Work

1.2. Problem Definition

2. The Necessity of Variable Focus Function Camera Based on RSS Model

2.1. Limitations of the ACC System as an ADAS

2.2. Limitations of Distance Measurement Using Sensors

2.3. Importance of Applying Variable Focus Function Camera RSS Model

3. Build RSS Model for Variable Angle Application

3.1. Features of RSS Model and Variable Focus Function Camera

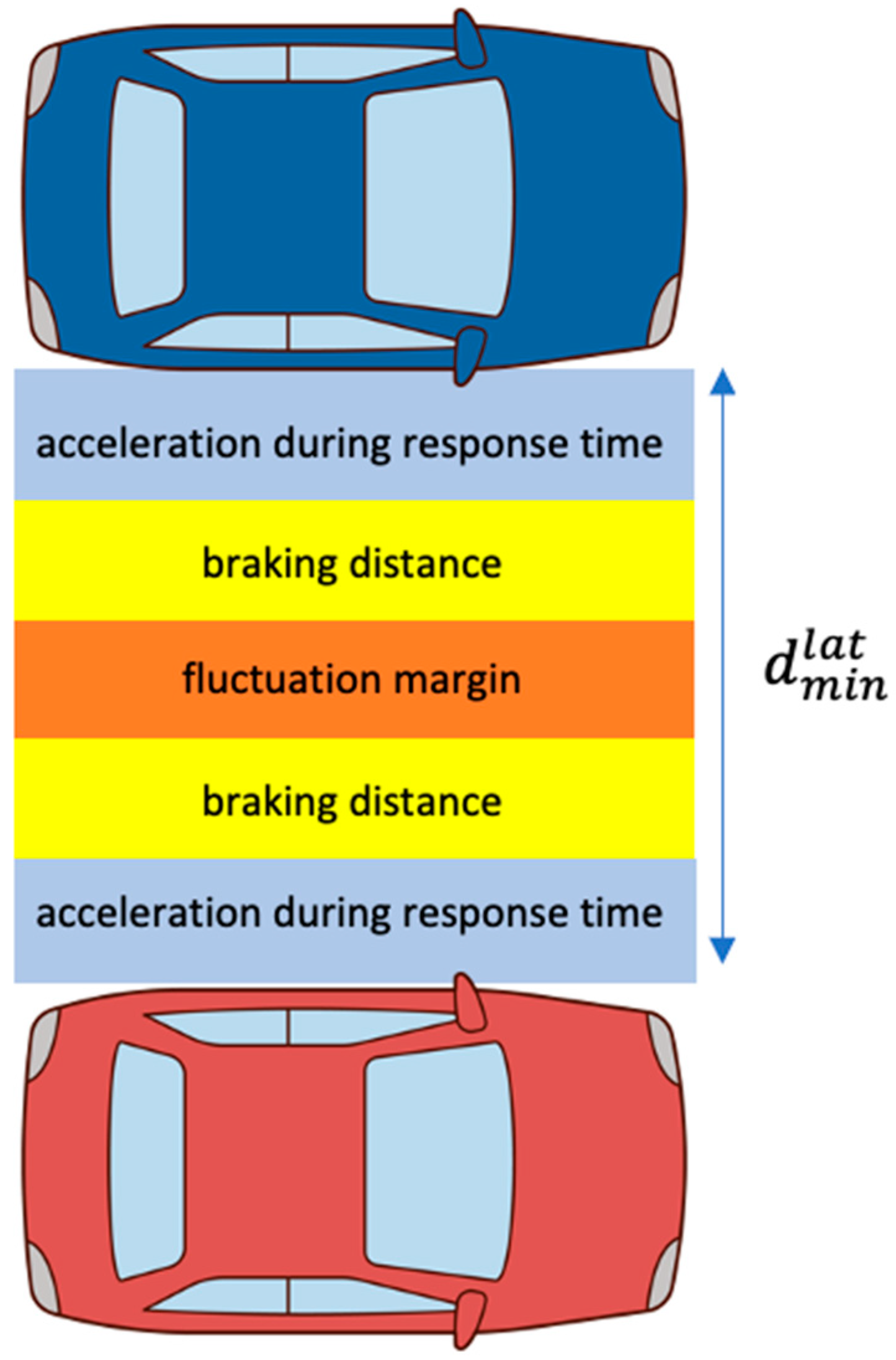

3.2. Identification of RSS Model Criteria for Variable Focus Function Application

3.3. Derive RSS Models and Identify Safe Distances by Speed

4. Verification of Suitability of RSS Model Application

4.1. Scenario Setup for RSS Model Validation

4.2. Identification of Response Time Using RSS Safety Distance

4.3. Validation of Response Time Using Safety Distance of Variable Focus Function Fitted RSS Model

5. Results

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Rules | Common Sense |
|---|---|
| Rule 1 | Safe Distance |
| Rule 2 | Cutting In |
| Rule 3 | Right of Way |
| Rule 4 | Limited Visibility |
| Rule 5 | Avoid Crashes |
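Rule 1 (Safe Distance) is formalized in the original RSS paper by Shalev-Shwartz et al. (2017) as a minimum longitudinal gap between a rear vehicle travelling at $v_r$ and a front vehicle travelling at $v_f$ in the same direction. The standard statement is reproduced here for reference; the symbol names follow that paper and are not defined elsewhere in this section:

$$
d_{\min} = \left[ v_r \rho + \tfrac{1}{2} a_{\max,\mathrm{accel}} \rho^2 + \frac{\left(v_r + \rho\, a_{\max,\mathrm{accel}}\right)^2}{2\, a_{\min,\mathrm{brake}}} - \frac{v_f^2}{2\, a_{\max,\mathrm{brake}}} \right]_+, \qquad [x]_+ = \max(x, 0)
$$

Here $\rho$ is the response time of the rear vehicle, $a_{\max,\mathrm{accel}}$ the worst-case acceleration it may apply during $\rho$, $a_{\min,\mathrm{brake}}$ the minimum braking it applies afterwards, and $a_{\max,\mathrm{brake}}$ the maximum braking of the front vehicle.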

| GV80 | 2.5 T Gasoline | 3.5 T Gasoline | 3.0 Diesel |
|---|---|---|---|
| 0–100 km/h | 6.9 s | 5.5 s | 6.8 s |
| (max) acceleration [m/s²] | 4.03 | 5.05 | 4.08 |
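The acceleration row matches the average acceleration over the 0–100 km/h run, a = (100/3.6 m/s) / t, which suggests the values are means over the run rather than instantaneous peaks. A minimal check, assuming that is how the row was derived (the function and dictionary names are illustrative):

```python
# Average 0-100 km/h acceleration for the three GV80 powertrains in the table.
KMH_TO_MS = 1.0 / 3.6  # 1 km/h = 1/3.6 m/s


def mean_acceleration(v_kmh: float, t_s: float) -> float:
    """Average acceleration [m/s^2] needed to reach v_kmh from standstill in t_s seconds."""
    return v_kmh * KMH_TO_MS / t_s


# 0-100 km/h times taken from the table above.
ZERO_TO_100_S = {
    "2.5 T Gasoline": 6.9,
    "3.5 T Gasoline": 5.5,
    "3.0 Diesel": 6.8,
}

if __name__ == "__main__":
    for engine, t in ZERO_TO_100_S.items():
        print(f"{engine}: {mean_acceleration(100.0, t):.2f} m/s^2")
```

Rounded to two decimals this gives 4.03, 5.05 and 4.08 m/s², the same values as the table.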

| Rear \ Front [km/h] | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 | 110 | 120 | 130 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 30 | 6.07 | 2.69 | - | - | - | - | - | - | - | - | - |
| 40 | 12.53 | 9.15 | 4.81 | - | - | - | - | - | - | - | - |
| 50 | 20.52 | 17.14 | 12.80 | 7.50 | 1.23 | - | - | - | - | - | - |
| 60 | 30.03 | 26.66 | 22.32 | 17.01 | 10.74 | 3.54 | - | - | - | - | - |
| 70 | 41.07 | 37.70 | 33.36 | 28.05 | 21.78 | 14.55 | 6.35 | - | - | - | - |
| 80 | 53.64 | 50.27 | 45.93 | 40.62 | 34.35 | 27.12 | 18.92 | 9.76 | - | - | - |
| 90 | 67.74 | 64.37 | 60.03 | 54.72 | 48.45 | 41.22 | 33.02 | 23.86 | 13.73 | 2.64 | - |
| 100 | 83.37 | 79.99 | 75.65 | 70.35 | 64.08 | 56.82 | 48.65 | 39.48 | 29.36 | 18.27 | 6.21 |
| 110 | 100.52 | 97.15 | 92.81 | 87.50 | 81.23 | 74.00 | 65.80 | 56.64 | 46.51 | 35.42 | 23.36 |
| 120 | 119.21 | 115.83 | 111.49 | 106.19 | 99.92 | 92.68 | 84.48 | 75.32 | 65.19 | 54.10 | 42.05 |
| 130 | 139.42 | 136.04 | 131.70 | 126.40 | 120.13 | 112.89 | 104.69 | 95.53 | 85.40 | 74.31 | 65.26 |
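The entries in the grid above are consistent with the RSS longitudinal safe distance of Shalev-Shwartz et al. (2017), evaluated for a rear vehicle at the row speed and a front vehicle at the column speed, with "-" where the bracketed term is negative. The sketch below regenerates the grid. Its parameter values (ρ = 0.2 s, a_max,accel = a_min,brake = 5.05 m/s², a_max,brake = 8.0 m/s²) are assumptions back-fitted to the tabulated numbers, not values stated in this section; with them it reproduces, for example, 6.07 m at 30/30 km/h, 27.12 m at 80/80 km/h and 139.42 m at 130/30 km/h.

```python
"""Sketch: RSS longitudinal safe distance grid (rear speed x front speed)."""

KMH_TO_MS = 1.0 / 3.6  # convert km/h to m/s

# Hypothetical parameter values, back-fitted to the table (not given in the text).
RHO = 0.2            # response time of the rear vehicle [s]
A_MAX_ACCEL = 5.05   # worst-case rear acceleration during RHO [m/s^2]
A_MIN_BRAKE = 5.05   # minimum rear braking after RHO [m/s^2]
A_MAX_BRAKE = 8.0    # maximum front braking [m/s^2]


def rss_safe_distance(v_rear_kmh: float, v_front_kmh: float,
                      rho: float = RHO,
                      a_max_accel: float = A_MAX_ACCEL,
                      a_min_brake: float = A_MIN_BRAKE,
                      a_max_brake: float = A_MAX_BRAKE) -> float:
    """RSS minimum longitudinal gap [m]; returns 0.0 when the bracketed term is negative."""
    v_r = v_rear_kmh * KMH_TO_MS
    v_f = v_front_kmh * KMH_TO_MS
    v_after_response = v_r + rho * a_max_accel  # rear speed at the end of rho
    d = (v_r * rho
         + 0.5 * a_max_accel * rho ** 2
         + v_after_response ** 2 / (2.0 * a_min_brake)
         - v_f ** 2 / (2.0 * a_max_brake))
    return max(d, 0.0)


SPEEDS_KMH = range(30, 131, 10)  # 30, 40, ..., 130 km/h


def format_cell(d: float) -> str:
    # The table prints "-" where no positive gap is required.
    return f"{d:.2f}" if d > 0.0 else "-"


if __name__ == "__main__":
    print("rear\\front " + " ".join(f"{v:>7}" for v in SPEEDS_KMH))
    for v_r in SPEEDS_KMH:
        cells = (format_cell(rss_safe_distance(v_r, v_f)) for v_f in SPEEDS_KMH)
        print(f"{v_r:>10} " + " ".join(f"{c:>7}" for c in cells))
```

Keeping the accelerations as keyword arguments makes it easy to test how sensitive each cell is to a different response time or braking assumption.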

| Velocity of Vehicle [km/h] | Longitudinal Safety Distance [m] |
|---|---|
| 30 | 24.25 |
| 40 | 31.78 |
| 50 | 39.87 |
| 60 | 48.52 |
| 70 | 57.74 |
| 80 | 67.52 |
| 90 | 77.87 |
| 100 | 88.78 |
| 110 | 100.25 |
| 120 | 112.28 |
| 130 | 124.88 |
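The equal-speed table above fits the same RSS longitudinal safe-distance expression with both vehicles at the same velocity and a longer response time. The self-contained sketch below assumes ρ = 1.0 s, a rear acceleration and minimum braking of 5.05 m/s², and a front maximum braking of 8.0 m/s²; these are hypothetical values back-fitted to the table, and with them it reproduces, for example, 24.25 m at 30 km/h, 88.78 m at 100 km/h and 124.88 m at 130 km/h.

```python
KMH_TO_MS = 1.0 / 3.6  # convert km/h to m/s

# Hypothetical parameters back-fitted to the table, not stated in the text.
RHO = 1.0            # response time [s]
A_MAX_ACCEL = 5.05   # rear vehicle worst-case acceleration during RHO [m/s^2]
A_MIN_BRAKE = 5.05   # rear vehicle minimum braking afterwards [m/s^2]
A_MAX_BRAKE = 8.0    # front vehicle maximum braking [m/s^2]


def equal_speed_safe_distance(v_kmh: float, rho: float = RHO) -> float:
    """RSS longitudinal safe distance [m] when both vehicles drive at v_kmh."""
    v = v_kmh * KMH_TO_MS
    v_after_response = v + rho * A_MAX_ACCEL  # rear speed at the end of rho
    d = (v * rho
         + 0.5 * A_MAX_ACCEL * rho ** 2
         + v_after_response ** 2 / (2.0 * A_MIN_BRAKE)
         - v ** 2 / (2.0 * A_MAX_BRAKE))
    return max(d, 0.0)


if __name__ == "__main__":
    print("Velocity [km/h] | Longitudinal Safety Distance [m]")
    for v_kmh in range(30, 131, 10):
        print(f"{v_kmh:>15} | {equal_speed_safe_distance(v_kmh):.2f}")
```

Passing `rho=0.2` instead yields the diagonal of the rear/front speed grid (for example 6.07 m at 30 km/h), which illustrates how strongly the required gap depends on the assumed response time.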

| Driving Status | Velocity | Safety Distance |
|---|---|---|
| High speed | | |
| Fast speed | | |
| Medium speed | | |
| Low speed | | |
| Slow speed | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, M.-J.; Yu, S.-H.; Kim, T.-H.; Kim, J.-U.; Kim, Y.-M. On the Development of Autonomous Vehicle Safety Distance by an RSS Model Based on a Variable Focus Function Camera. Sensors 2021, 21, 6733. https://doi.org/10.3390/s21206733
