Modeling of a Generic Edge Computing Application Design

Abstract

1. Introduction

- Outline of convolutional neural networks;

- Overview of fog computing;

- Overview of edge computing;

- Modeling of generic edge computing in ACP;

- Modeling of generic edge computing in Promela.

2. Convolutional Neural Networks

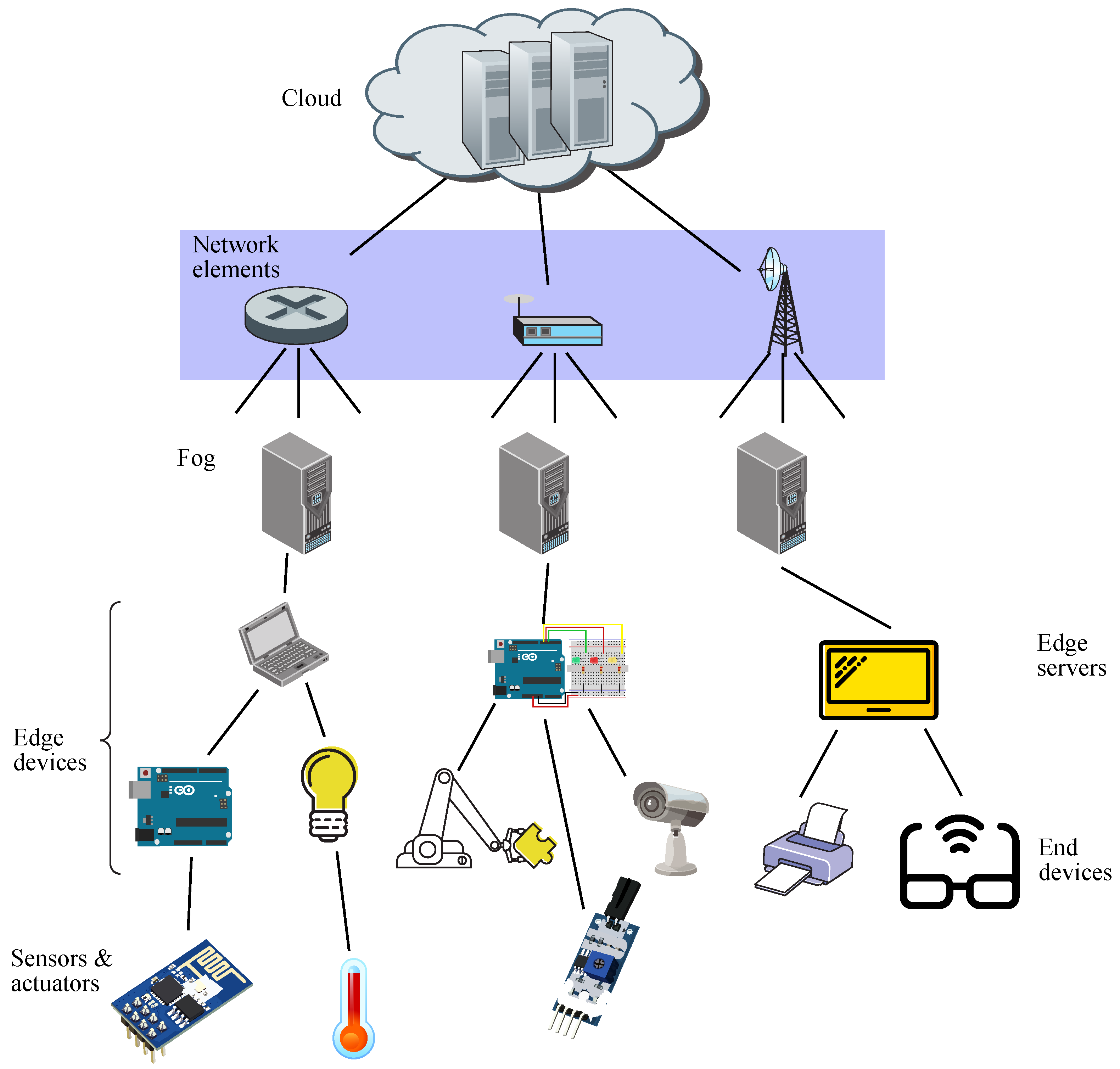

3. Fog Computing and IoT

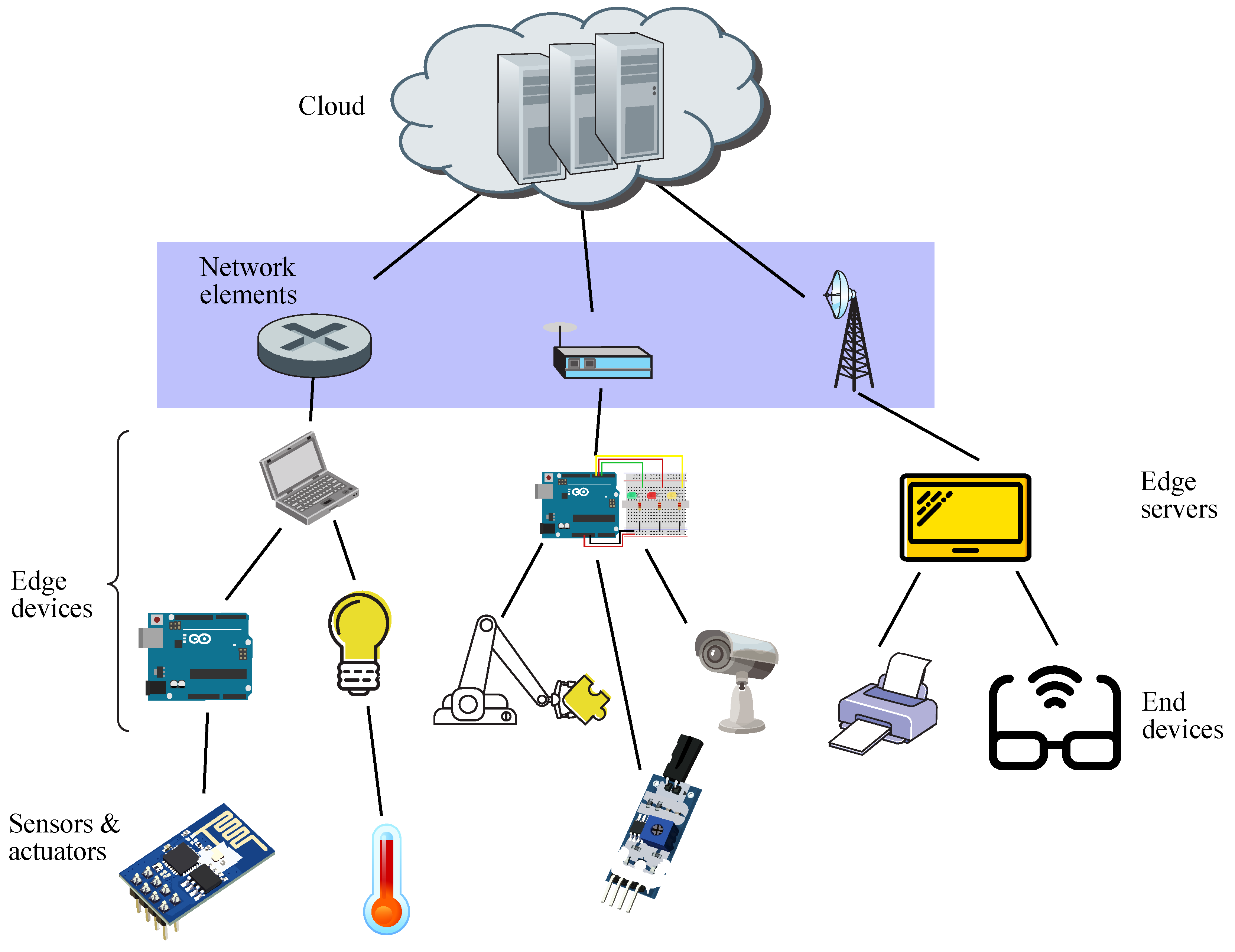

4. Edge Computing and IoT

4.1. Edge AI

4.2. Edge Computing Applications

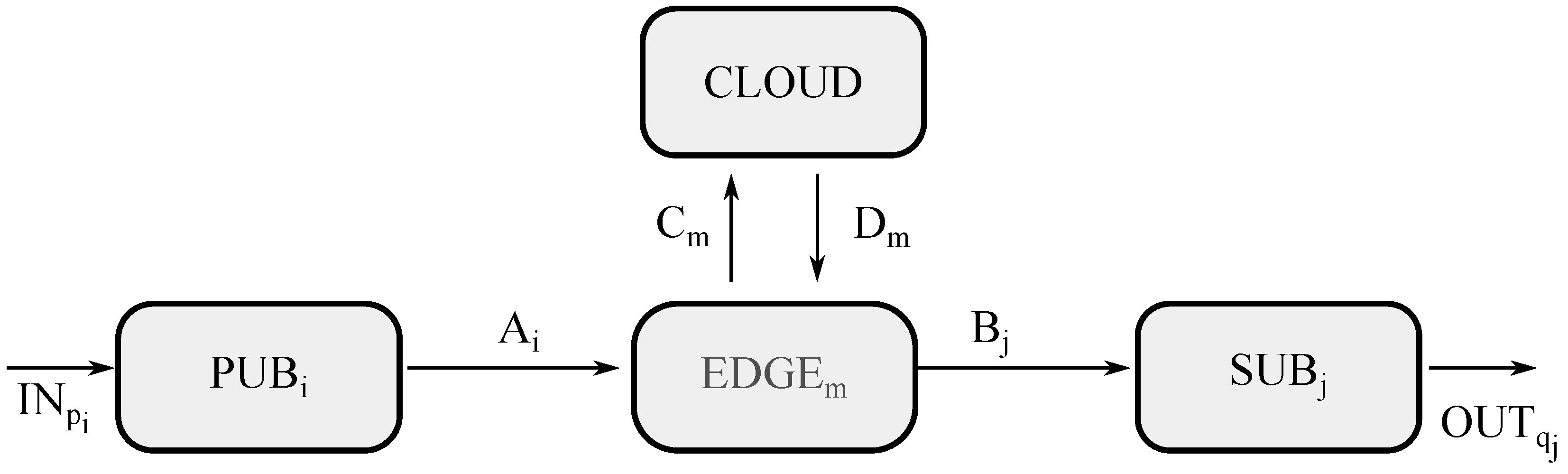

5. ACP Model

5.1. Edge Scenario

5.2. Fog Scenario

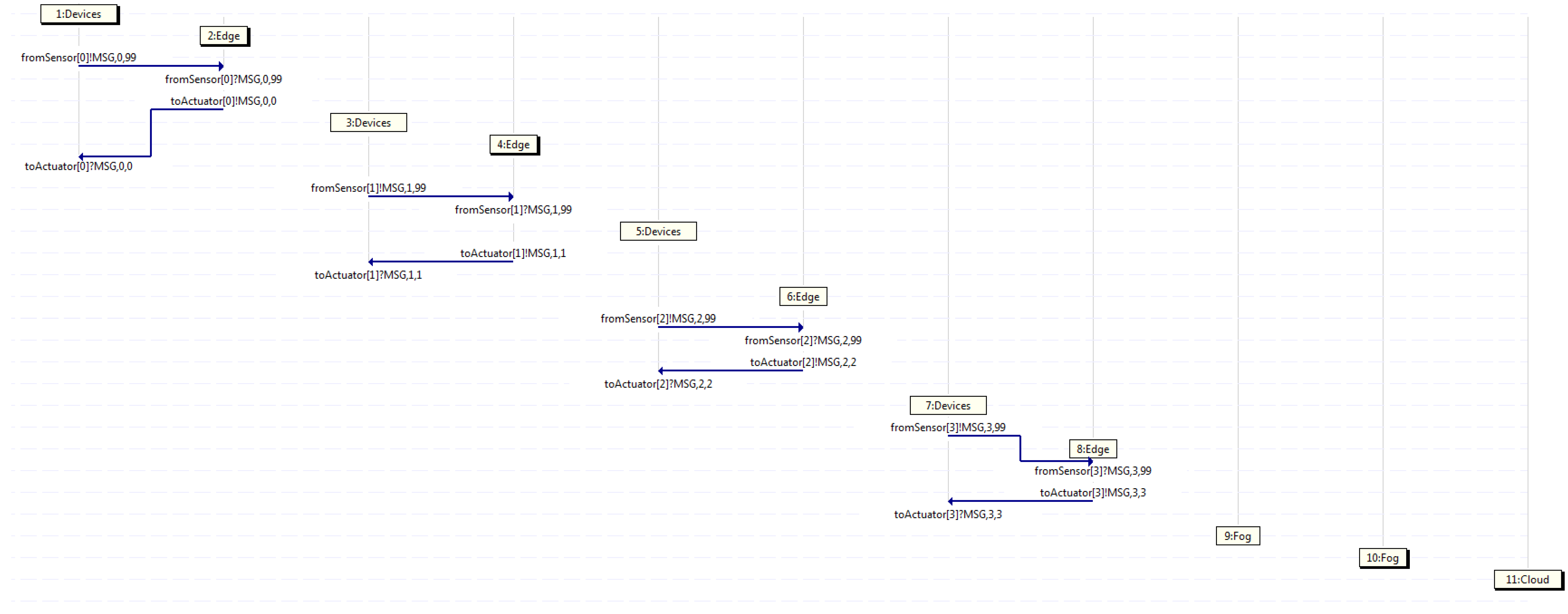

6. Spin/Promela Scenario

- Devices;

- Edge;

- Fog;

- Cloud.

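Algorithm 1. Fog model coded in Promela.

```promela
#define N   2    /* N fog nodes; N*N edge nodes and N*N devices */
#define INF 99   /* destination not yet assigned */

mtype = { MSG };

/* every message is MSG(x, y): x = originating device, y = destination index */
chan fromSensor[N*N] = [1] of { mtype, byte, byte };  /* device -> edge   */
chan toActuator[N*N] = [1] of { mtype, byte, byte };  /* edge   -> device */
chan Fog2Edge[N*N]   = [1] of { mtype, byte, byte };  /* fog    -> edge   */
chan Edge2Fog[N*N]   = [1] of { mtype, byte, byte };  /* edge   -> fog    */
chan Fog2Cloud[N]    = [1] of { mtype, byte, byte };  /* fog    -> cloud  */
chan Cloud2Fog[N]    = [1] of { mtype, byte, byte };  /* cloud  -> fog    */

proctype Devices (byte id) {
  byte x, y, n = 0;
  do
  :: n < 1 -> fromSensor[id] ! MSG(id, INF); n++   /* emit one sensor reading */
  :: toActuator[id] ? MSG(x, y)                    /* accept an actuator command */
  od
}

proctype Edge (byte id) {
  byte x, y;
  do
  :: if
     :: fromSensor[id] ? MSG(x, y) ->
        if
        :: toActuator[id] ! MSG(x, id)   /* nondeterministically: answer locally */
        :: Edge2Fog[id] ! MSG(x, y)      /* or offload to the parent fog node */
        fi
     :: Fog2Edge[id] ? MSG(x, y) -> toActuator[id] ! MSG(x, y)  /* relay replies */
     fi
  od
}

/* fog node id serves edge nodes 2*id and 2*id+1 (consistent with N = 2) */
proctype Fog (byte id) {
  byte x, y;
  do
  :: Edge2Fog[id*2] ? MSG(x, y) ->
     if
     :: Fog2Edge[id*2+1] ! MSG(x, id*2+1)   /* forward to the sibling edge */
     :: Fog2Cloud[id] ! MSG(x, y)           /* or escalate to the cloud */
     fi
  :: Edge2Fog[id*2+1] ? MSG(x, y) ->
     if
     :: Fog2Edge[id*2] ! MSG(x, id*2)
     :: Fog2Cloud[id] ! MSG(x, y)
     fi
  :: Cloud2Fog[id] ? MSG(x, y) -> Fog2Edge[y] ! MSG(x, y)  /* deliver cloud traffic */
  od
}

/* the cloud forwards traffic to the other fog node; ranges are hard-coded for N = 2 */
proctype Cloud (byte id) {
  byte x, y;
  do
  :: Fog2Cloud[0] ? MSG(x, y) -> select (y : N .. N+1) -> Cloud2Fog[1] ! MSG(x, y)
  :: Fog2Cloud[1] ? MSG(x, y) -> select (y : 0 .. 1)   -> Cloud2Fog[0] ! MSG(x, y)
  od
}

init {
  byte i;
  for (i : 0 .. (N*N - 1)) {
    run Devices(i);
    run Edge(i)
  }
  for (i : 0 .. (N - 1)) {
    run Fog(i)
  }
  run Cloud(0)
}
```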

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACP | Algebra of Communicating Processes |
| AI | Artificial Intelligence |
| AIoT | Artificial Intelligence of Things |
| ANN | Artificial Neural Networks |
| AR | Augmented Reality |
| BaaS | Backend as a Service |
| CL | Centralized Learning |
| CNN | Convolutional Neural Networks |
| CPS | Cyber-Physical Systems |
| DCE | Digital Circular Economy |
| DL | Deep Learning |
| DNN | Deep Neural Networks |
| DNS | Domain Name System |
| DT | Digital Twins |
| FaaS | Function as a Service |
| FDT | Formal Description Techniques |
| FL | Federated Learning |
| G-IoT | Green Internet of Things |
| GRU | Gated Recurrent Units |
| HFCL | Hybrid Federated Centralized Learning |
| IIoT | Industrial Internet of Things |
| IoMT | Internet of Medical Things |
| IoT | Internet of Things |
| LAN | Local Area Network |
| LSTM | Long Short-Term Memory |
| MEC | Multi-Access Edge Computing |
| ML | Machine Learning |
| MR | Mixed Reality |
| MSC | Message Sequence Chart |
| MSG | Message |
| PROMELA | PROtocol/PROcess MEta LAnguage |
| PS | Parameter Server |
| Pub/Sub | Publisher/Subscriber |
| QoS | Quality of Service |
| RAN | Radio Access Network |
| RNN | Recurrent Neural Networks |
| SPIN | Simple Promela INterpreter |
| V2R | Vehicle to Roadside Unit |
| V2V | Vehicle to Vehicle |
| VANET | Vehicular Ad-hoc Network |
| VEC | Vehicular Edge Computing |
| VR | Virtual Reality |
| WAN | Wide Area Network |



© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Roig, P.J.; Alcaraz, S.; Gilly, K.; Bernad, C.; Juiz, C. Modeling of a Generic Edge Computing Application Design. Sensors 2021, 21, 7276. https://doi.org/10.3390/s21217276
