Coastal Image Classification and Pattern Recognition: Tairua Beach, New Zealand

Abstract

1. Introduction

- (1) We collected a long-term dataset of video images of a beach in New Zealand spanning more than 20 years.

- (2) We propose a deep-learning method for automated beach classification that dynamically extracts the location and shape of the offshore sandbar, the coastline, and the waves in the bar trough to inform the classification decision. A self-training mechanism makes full use of unlabeled images and improves the generalization ability of the CNN model. With this strategy, our proposed ResNext achieves state-of-the-art results (F1-score = 0.9014).

- (3) We propose a motif discovery algorithm to recognize beach patterns. Once the beach states of the past 20 years, as identified by the proposed CNN model, have been grouped and serialized, frequent beach patterns and beach state transitions can be recognized for each year.
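The grouping and serializing step in contribution (3) can be illustrated with a minimal Python sketch. It assumes each observation is a `(date, state)` pair with an integer state code. The names `records`, `serialize_by_year`, and `count_transitions` are hypothetical, and the toy data is invented for illustration. This only counts state transitions per year; it is not the motif discovery algorithm itself.

```python
from collections import Counter, defaultdict
from datetime import date


def serialize_by_year(records):
    """Group (date, state) pairs by calendar year, keeping time order."""
    by_year = defaultdict(list)
    for day, state in sorted(records):
        by_year[day.year].append(state)
    return dict(by_year)


def count_transitions(states):
    """Count moves between consecutive states that differ."""
    return Counter((a, b) for a, b in zip(states, states[1:]) if a != b)


# Hypothetical toy records: one integer state code per observation day.
records = [
    (date(2001, 1, 1), 0), (date(2001, 1, 2), 0), (date(2001, 1, 3), 2),
    (date(2001, 1, 4), 2), (date(2001, 1, 5), 0), (date(2002, 1, 1), 4),
    (date(2002, 1, 2), 4), (date(2002, 1, 3), 0),
]
sequences = serialize_by_year(records)
transitions = {year: count_transitions(seq) for year, seq in sequences.items()}
```

Each yearly sequence can then be passed to any pattern-mining routine, while the transition counts give a quick view of how often the beach moves from one state to another.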

2. Previous Work

3. Methodology

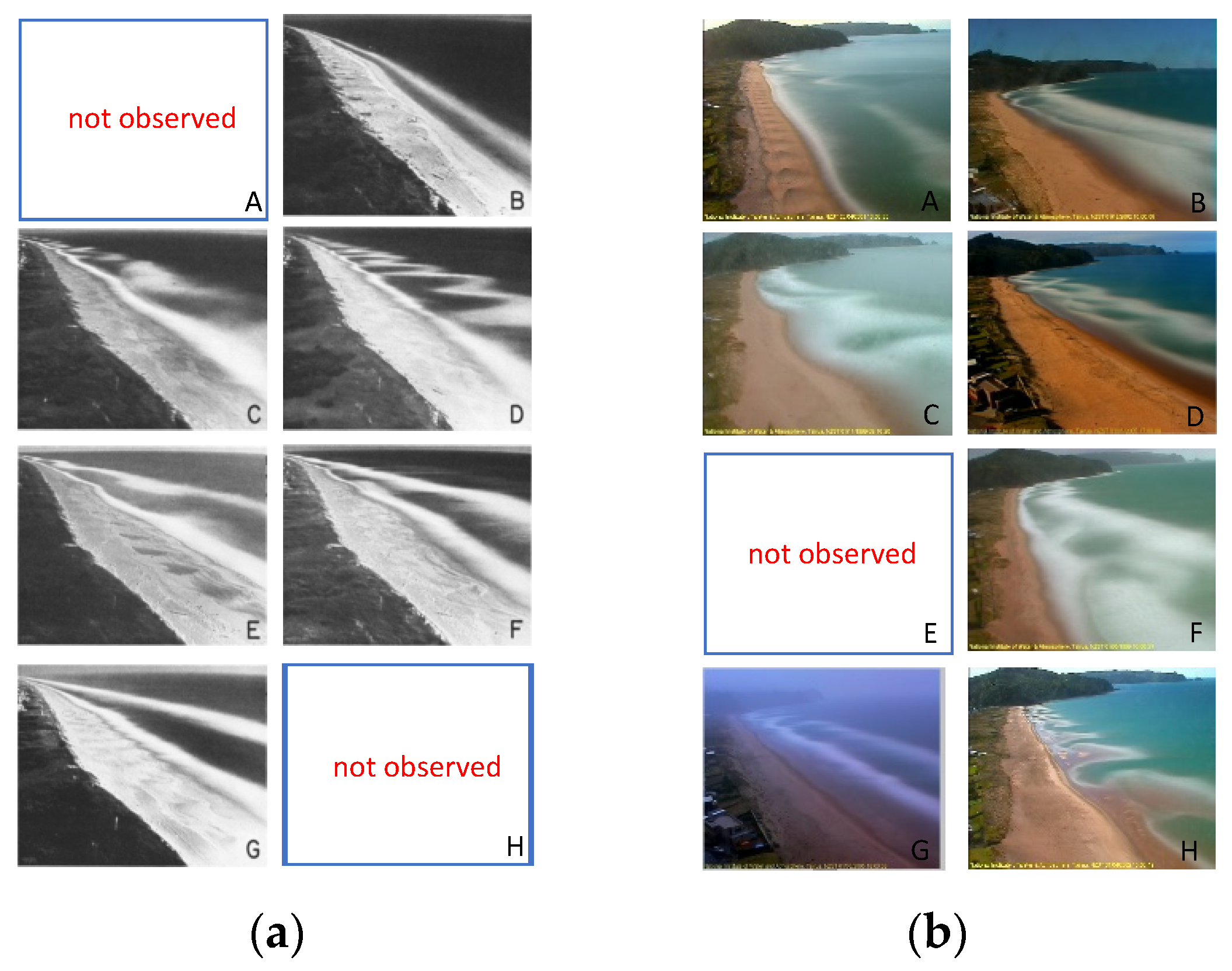

3.1. Coastal Image Classification

3.1.1. Dataset Pre-Processing

3.1.2. Model Design

3.1.3. Self-Training Strategy

| Algorithm 1. Self-training. |
|---|
| 1: Input: labeled images and unlabeled images |
| 2: Output: joint model |
| 3: Train a standard teacher classifier on the labeled images |
| 4: While stopping criteria not met do |
| 5: Use the teacher to predict class labels of the unlabeled images |
| 6: Select confident samples |
| 7: Remove the selected samples from the unlabeled data |
| 8: Combine the newly labeled data with the labeled data |
| 9: Train a student model with data augmentation (noise) on the combined data |
| 10: Generate a new teacher model from the student |
| 11: end while |
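The loop in Algorithm 1 can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: a nearest-centroid classifier stands in for the CNN, Gaussian jitter stands in for image augmentation, and the confidence threshold, round limit, and all function names (`fit_centroids`, `predict`, `self_train`) are hypothetical.

```python
import math
import random


def fit_centroids(samples, labels):
    """Toy stand-in for CNN training: one mean vector per class."""
    groups = {}
    for x, y in zip(samples, labels):
        groups.setdefault(y, []).append(x)
    return {y: [sum(col) / len(xs) for col in zip(*xs)] for y, xs in groups.items()}


def predict(centroids, x):
    """Return (label, confidence) from a softmax over negative distances."""
    scores = {y: math.exp(-math.dist(x, c)) for y, c in centroids.items()}
    label = max(scores, key=scores.get)
    return label, scores[label] / sum(scores.values())


def self_train(labeled_x, labeled_y, unlabeled_x, threshold=0.8,
               max_rounds=5, noise=0.05, seed=0):
    rng = random.Random(seed)
    labeled_x, labeled_y = list(labeled_x), list(labeled_y)
    unlabeled_x = list(unlabeled_x)
    teacher = fit_centroids(labeled_x, labeled_y)                  # step 3
    for _ in range(max_rounds):                                    # step 4
        predictions = [predict(teacher, x) for x in unlabeled_x]   # step 5
        keep = [conf >= threshold for _, conf in predictions]      # step 6
        if not any(keep):
            break  # assumed stopping criterion: nothing confident is left
        for x, (label, _), k in zip(unlabeled_x, predictions, keep):
            if k:
                labeled_x.append(x)                                # step 8
                labeled_y.append(label)
        unlabeled_x = [x for x, k in zip(unlabeled_x, keep) if not k]  # step 7
        # Step 9: the student is trained on a noisy copy of the combined data.
        noisy = [[v + rng.gauss(0.0, noise) for v in x] for x in labeled_x]
        teacher = fit_centroids(noisy, labeled_y)                  # step 10
    return teacher, unlabeled_x


# Hypothetical toy data: two well-separated clusters with labels "A" and "B".
data_rng = random.Random(1)
labeled_x = [[0.0, 0.0], [0.2, 0.1], [5.0, 5.0], [5.1, 4.9]]
labeled_y = ["A", "A", "B", "B"]
unlabeled_x = (
    [[data_rng.gauss(0, 0.3), data_rng.gauss(0, 0.3)] for _ in range(20)]
    + [[data_rng.gauss(5, 0.3), data_rng.gauss(5, 0.3)] for _ in range(20)]
)
model, remaining = self_train(labeled_x, labeled_y, unlabeled_x)
```

Only predictions above the threshold are promoted to pseudo-labels, which limits how much teacher error is fed back into the student. The right threshold and stopping rule depend on the data and are left open here.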

3.2. Motif Discovery

4. Results

4.1. Experiments on Coastal Image Classification

4.1.1. Experiment Setting

4.1.2. Classification Results

4.1.3. Self-Training

4.1.4. Comparison with Other Methods

4.1.5. Saliency Maps

4.2. Experiments on Motif Discovery

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data Processing | Algorithm | Top-1 Accuracy |
|---|---|---|
| No image enhancement | ResNet50 | 0.9028 |
| No image enhancement | ResNext50 | 0.9146 |
| Image enhancement | ResNet50 | 0.9278 |
| Image enhancement | ResNext50 | 0.9365 |

| Method | A | B | C | D | F | G | H | Avg |
|---|---|---|---|---|---|---|---|---|
| ResNext50 | 0.9470 | 0.7960 | 0.9462 | 0.9343 | 0.8881 | 0.9550 | 0.8435 | 0.9014 |
| ResNext50 + ST | 0.9547 | 0.8479 | 0.9572 | 0.9149 | 0.9085 | 0.9883 | 0.8518 | 0.9176 |
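The Avg column in the table above is consistent with an unweighted (macro) mean of the seven per-class scores. The short Python check below reproduces it from the table values; the variable names are illustrative.

```python
from statistics import mean

# Per-class scores copied from the table above (classes A, B, C, D, F, G, H).
per_class = {
    "ResNext50": [0.9470, 0.7960, 0.9462, 0.9343, 0.8881, 0.9550, 0.8435],
    "ResNext50 + ST": [0.9547, 0.8479, 0.9572, 0.9149, 0.9085, 0.9883, 0.8518],
}

# Unweighted mean over classes, rounded to the table's four decimals.
macro = {name: round(mean(scores), 4) for name, scores in per_class.items()}

# Absolute gain of the self-training (ST) variant in the averaged score.
gain = round(macro["ResNext50 + ST"] - macro["ResNext50"], 4)
```

Because every class contributes equally to a macro mean, the gain of about 0.016 reflects changes across all classes, including the drop in class D, rather than only the most frequent ones.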

| Method | A | B | C | D | F | G | H | Avg |
|---|---|---|---|---|---|---|---|---|
| ResNet50 + ST | 0.9487 | 0.7891 | 0.9475 | 0.9182 | 0.8790 | 0.9550 | 0.8095 | 0.8924 |
| ResNext50 + ST | 0.9547 | 0.8479 | 0.9572 | 0.9149 | 0.9085 | 0.9883 | 0.8518 | 0.9176 |

| Method | Precision | Recall | F1 Score | Inference Time (ms) |
|---|---|---|---|---|
| VGG16 | 0.8754 | 0.8875 | 0.8858 | 50.0 |
| DenseNet121 | 0.8960 | 0.8870 | 0.8890 | 35.6 |
| ResNet50 | 0.8870 | 0.9014 | 0.8924 | 17.3 |
| ResNext50 | 0.8714 | 0.9308 | 0.9014 | 18.4 |
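Precision, recall, and F1 for a multi-class classifier are usually computed per class from a confusion matrix and then averaged. The sketch below assumes macro averaging, which is an assumption because the averaging scheme is not stated here. The confusion matrix is hypothetical and not taken from the paper. It also shows that a macro-averaged F1 generally differs from the harmonic mean of the macro precision and macro recall, which may be why the F1 column cannot be recomputed from the other two columns.

```python
def per_class_metrics(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n = len(confusion)
    metrics = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp
        fn = sum(confusion[k]) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1))
    return metrics


def macro_average(metrics):
    """Unweighted mean of precision, recall, and F1 over all classes."""
    n = len(metrics)
    return tuple(sum(m[i] for m in metrics) / n for i in range(3))


# Hypothetical 3-class confusion matrix, invented for illustration.
confusion = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 4, 6],
]
macro_p, macro_r, macro_f1 = macro_average(per_class_metrics(confusion))

# Harmonic mean of the two macro scores, computed only for comparison.
harmonic = 2 * macro_p * macro_r / (macro_p + macro_r)
```

In this toy case the macro F1 is lower than the harmonic mean of macro precision and macro recall, so the two quantities should not be used interchangeably when comparing methods.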

| FP-Growth | PrefixSpan | MDLats |
|---|---|---|
| {0} | [0, 0, 0, 0, 0, 0, 0, 0] | [0, 0, 0, 0, 0, 0] |
| {2} | [0, 0, 0, 0, 0, 0, 0, 0, 0] | [0, 0, 0, 0, 0, 0, 0] |
| {2, 0} | [2, 2, 2, 2, 2] | [0, 0, 0, 0, 0, 0, 0, 0] |
| {4} | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] | [0, 0, 0, 0, 0, 0, 0, 0, 0] |
| {2, 4} | [2, 2, 2, 2, 2, 2, 2, 2, 2, 2] | [2, 2, 2, 2, 2, 2, 2, 2, 2] |
| {4, 0} | [2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2] | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] |
| {6} | [0, 0, 0, 0, 0, 0, 2] | [2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2] |
| {1} | [0, 0, 0, 0, 0, 2, 2] | [4, 4, 4, 4, 4, 4, 4, 4] |
| {0, 6} | [0, 0, 0, 6] | [2, 0, 0, 0, 0, 0] |
| {2, 4, 0} | [2, 2, 2, 2, 2, 2, 2, 2, 0] | [0, 0, 0, 0, 0, 2] |
| {1, 2} | [4, 4, 4, 4, 4, 4, 4] | [4, 0, 0, 0, 0, 0, 0, 0] |
| {1, 0} | [4, 0, 0, 0, 0] | [0, 0, 0, 0, 0, 0, 6] |
| {2, 6} | [0, 0, 0, 0, 0, 0, 2, 0] | [4, 4, 4, 4, 4, 4, 4, 4, 4, 4] |
| {2, 0, 6} | [6, 0, 0, 0, 0, 0, 0] | [6, 0, 0, 0, 0, 0, 0] |
| {1, 2, 0} | [0, 6, 0, 0, 0, 0, 0] | [0, 0, 0, 0, 0, 2, 2] |
| {1, 4} | [0, 0, 0, 0, 0, 6] | [5, 5, 5, 5, 5, 5] |
| {5} | [0, 0, 0, 2, 2, 2, 0] | [0, 0, 0, 2, 2, 2] |
| {3} | [4, 2, 2, 2, 2, 2] | [0, 0, 0, 6, 6, 6] |
| {4, 6} | [2, 2, 2, 2, 2, 2, 2, 2, 2, 0] | [2, 2, 2, 2, 2, 0] |
| {1, 2, 4} | [0, 0, 2, 2, 2, 2, 2, 2, 2] | [0, 0, 0, 0, 0, 6] |
| {1, 4, 0} | [2, 2, 2, 4, 2, 2] | [6, 0, 0, 0, 0, 0, 0, 0, 0] |
| {4, 6, 0} | [6, 0, 0, 0, 0, 0, 0, 0] | [3, 3, 3, 3, 3, 3] |
| {5, 0} | [0, 0, 0, 0, 0, 0, 4] | [1, 1, 1, 1, 1, 1, 1, 1, 1] |
| {2, 3} | [2, 2, 2, 6] | [2, 2, 2, 0, 0, 0, 0] |
| {0, 3} | [0, 6, 6, 0, 0, 0] | [6, 6, 6, 6, 6, 6] |
| {2, 4, 6} | [6, 6, 6, 6, 6] | [5, 5, 5, 5, 5, 5, 5] |
| {5, 2} | [0, 1, 2, 2] | [2, 2, 2, 2, 2, 3] |
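The three columns report different kinds of patterns: FP-Growth returns unordered itemsets, while PrefixSpan returns ordered sequential patterns. The Python sketch below contrasts how support is counted in each view on hypothetical toy sequences. The contiguous-run counter is only a simple stand-in for a motif-style match and is not the MDLats algorithm. All function names and data are illustrative.

```python
def itemset_support(sequences, items):
    """Fraction of sequences containing every item, in any order."""
    wanted = set(items)
    return sum(wanted <= set(seq) for seq in sequences) / len(sequences)


def is_subsequence(pattern, seq):
    """True if pattern occurs in seq in order, with gaps allowed."""
    it = iter(seq)
    # Each search resumes where the previous match stopped, preserving order.
    return all(any(x == p for x in it) for p in pattern)


def subsequence_support(sequences, pattern):
    """Fraction of sequences that contain pattern as an ordered subsequence."""
    return sum(is_subsequence(pattern, seq) for seq in sequences) / len(sequences)


def contiguous_count(seq, pattern):
    """Number of positions where pattern occurs as an unbroken run."""
    m = len(pattern)
    return sum(
        list(seq[i:i + m]) == list(pattern) for i in range(len(seq) - m + 1)
    )


# Hypothetical state sequences; the integer codes are placeholders.
sequences = [
    [0, 0, 2, 2, 0],
    [2, 0, 0, 4, 0],
    [4, 4, 4, 0, 0],
]
set_support = itemset_support(sequences, {2, 0})
seq_support = subsequence_support(sequences, [0, 2])
runs = contiguous_count(sequences[0], [0, 0])
```

Here the itemset {2, 0} is supported by two of the three sequences, but the ordered pattern [0, 2] by only one, which illustrates why itemsets alone cannot describe the direction of a beach state transition.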

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, B.; Yang, B.; Masoud-Ansari, S.; Wang, H.; Gahegan, M. Coastal Image Classification and Pattern Recognition: Tairua Beach, New Zealand. Sensors 2021, 21, 7352. https://doi.org/10.3390/s21217352
