Digital Integration and Automated Assessment of Eye-Tracking and Emotional Response Data Using the BioSensory App to Maximize Packaging Label Analysis

Abstract

1. Introduction

2. Materials and Methods

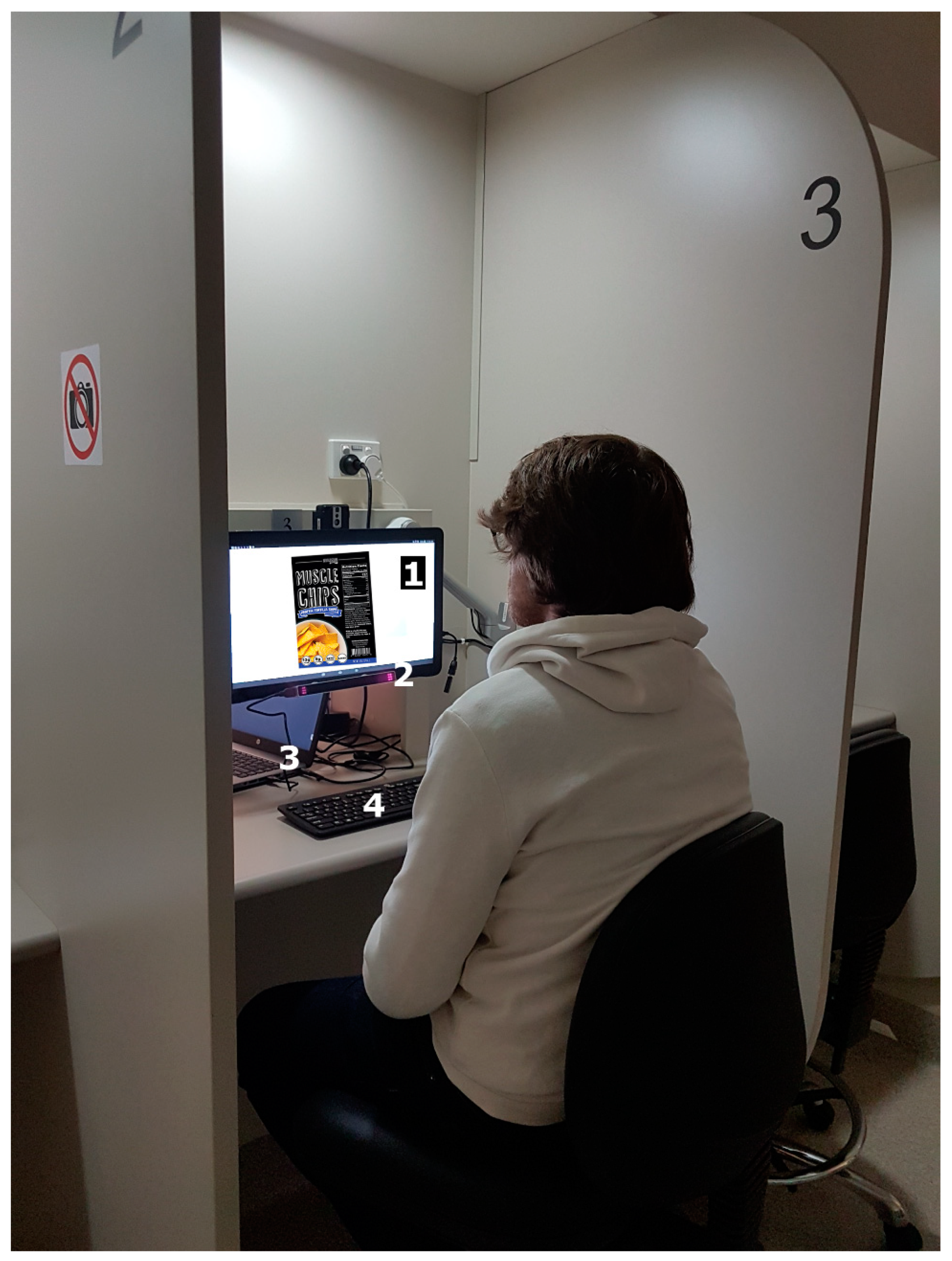

2.1. Sensory Session Description

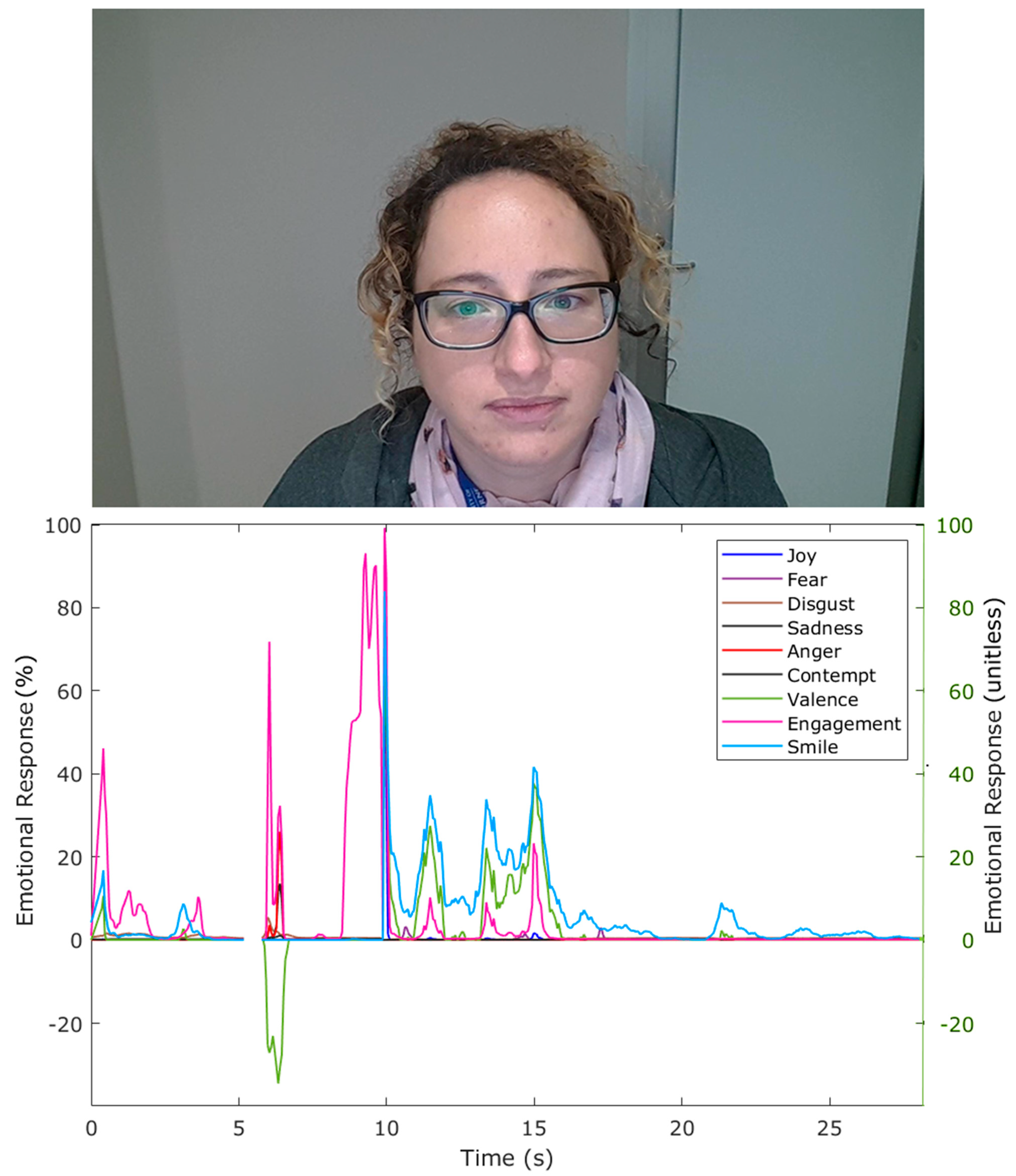

2.2. Biometrics

2.3. Statistical Analysis

3. Results and Discussion

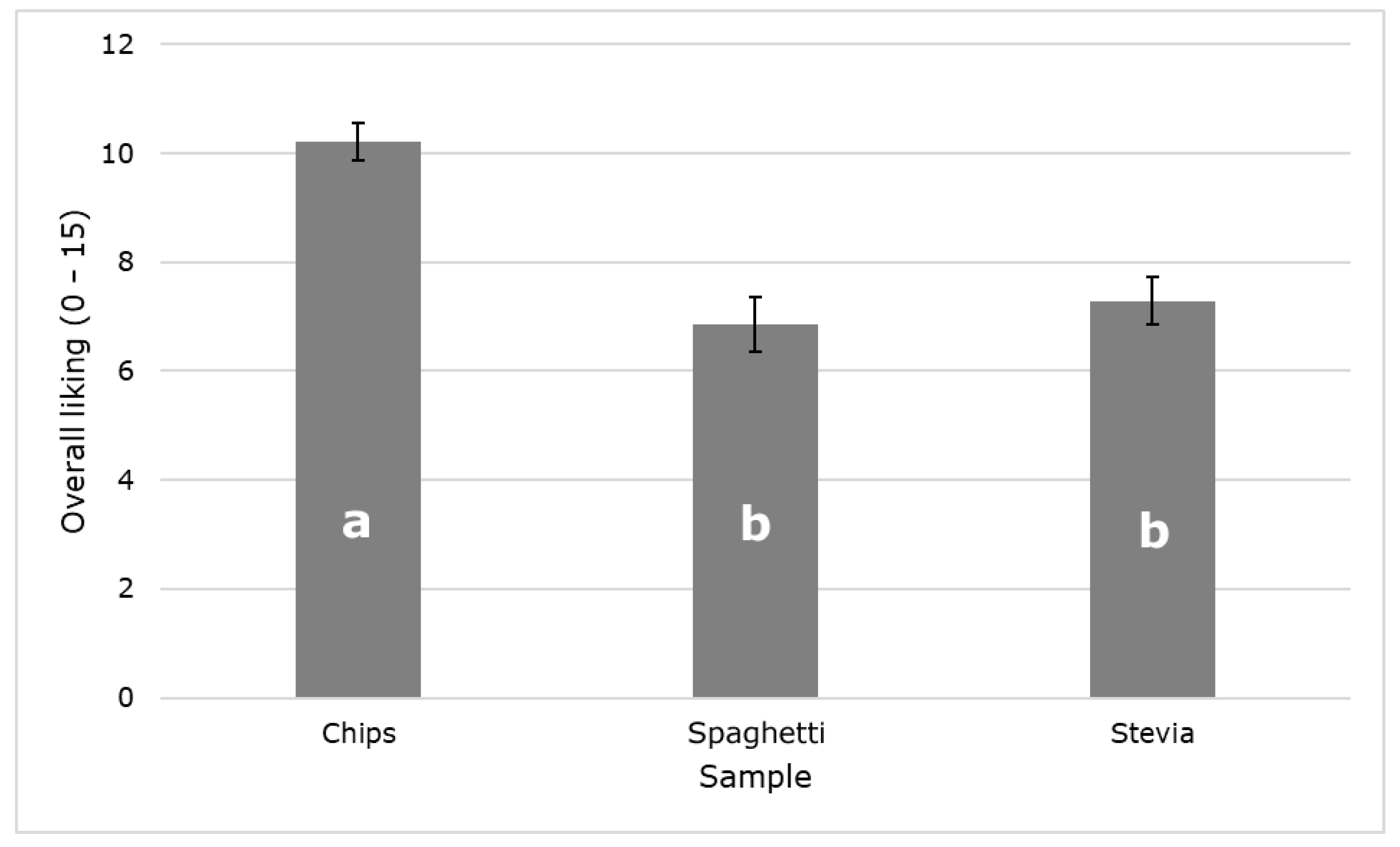

3.1. Overall Label Liking and Emotional Response from Label Components

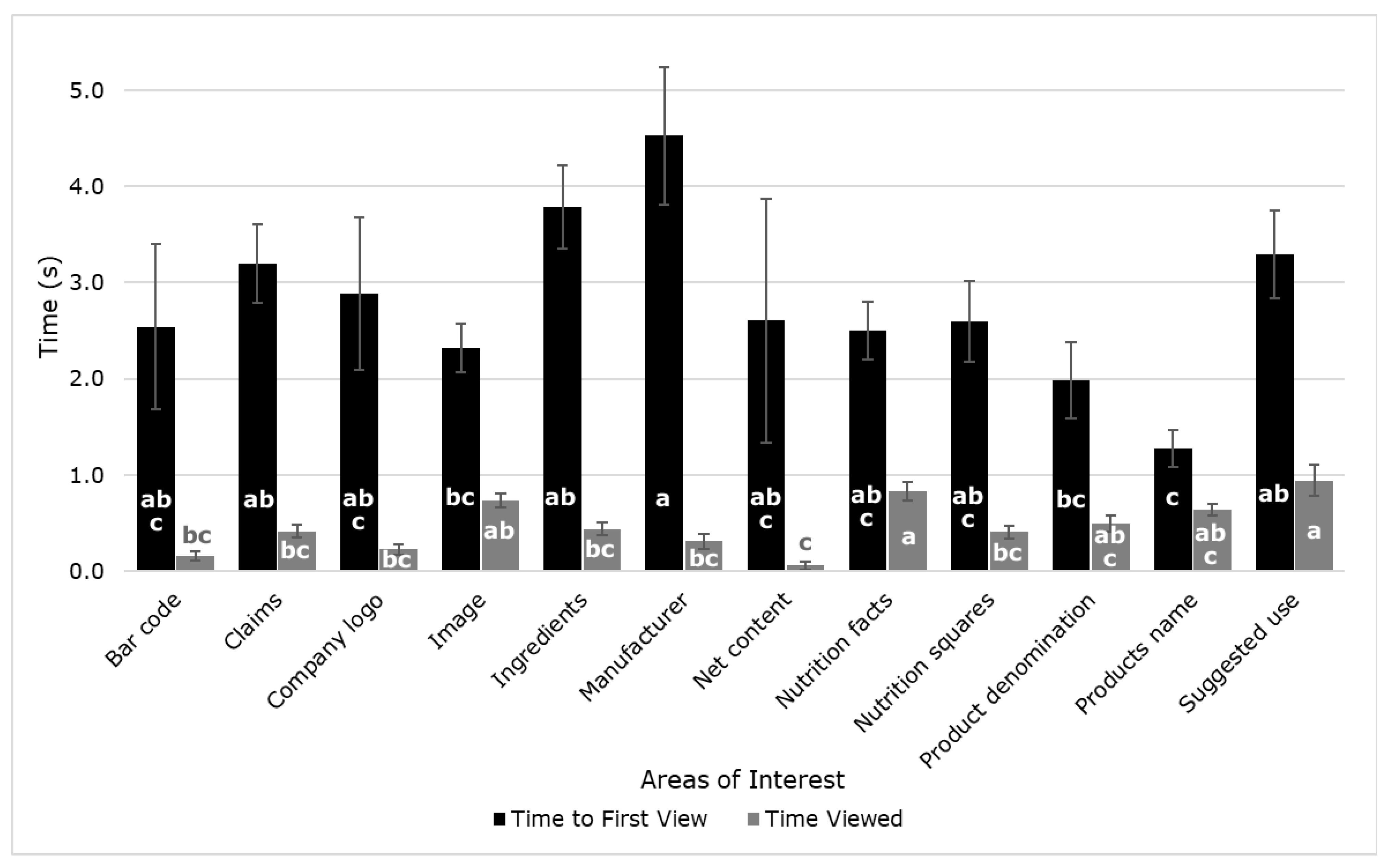

3.2. Differences in Eye-Tracking Data for Label Components

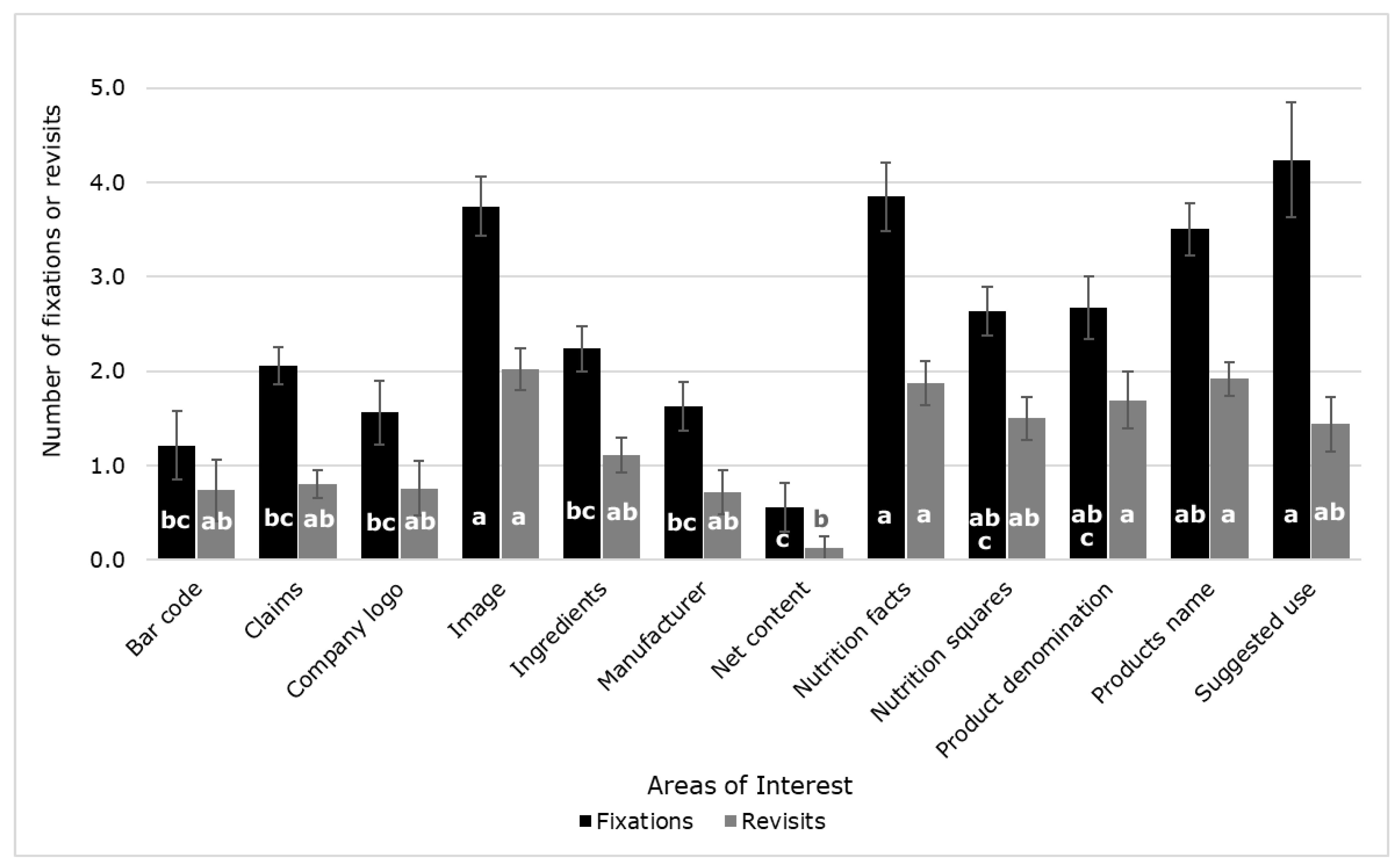

3.3. Integrating Eye Tracking and Emotional Response Data

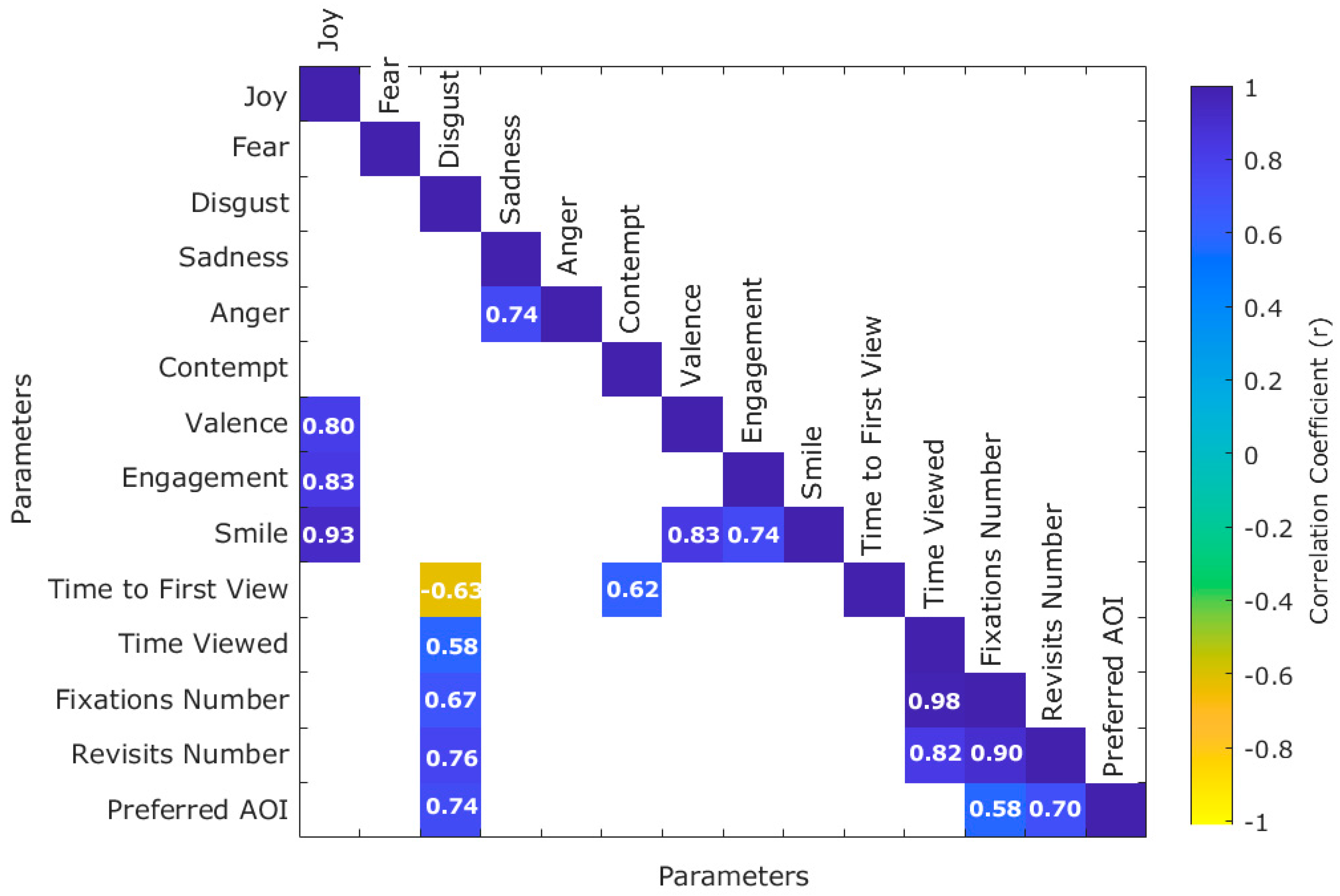

3.4. Integration and Analysis of Eye-Tracking and Emotional Response

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| AOI/Emotion | Bar Code | Claims | Company Logo | Image | Ingredients | Manufacturer | Net Content | Nutrition Facts | Nutrition Squares | Product Denomination | Products Name | Suggested Use |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Joy | 0.02 ± 0.02 | 2.24 ± 2.24 | 1.82 ± 1.81 | 1.39 ± 0.83 | 4.78 ± 2.02 | 1.51 ± 1.51 | 11.82 ± 8.08 | 2.49 ± 1.12 | 2.88 ± 2.14 | 1.44 ± 0.88 | 2.88 ± 1.21 | 3.54 ± 2.44 |
| Fear | 1.82 ± 1.82 | 0.53 ± 0.37 | 0.42 ± 0.41 | 1.18 ± 0.49 | 2.83 ± 1.16 | 0.01 ± 0.01 | 0.07 ± 0.07 | 1.35 ± 0.59 | 2.15 ± 1.26 | 1.52 ± 1.05 | 3.04 ± 0.91 | 1.10 ± 0.90 |
| Disgust | 0.47 ± 0.10 | 0.65 ± 0.11 | 0.56 ± 0.10 | 0.99 ± 0.22 | 0.44 ± 0.04 | 0.44 ± 0.07 | 0.31 ± 0.04 | 1.21 ± 0.37 | 0.92 ± 0.43 | 0.52 ± 0.07 | 1.61 ± 0.78 | 0.60 ± 0.11 |
| Sadness | 0.09 ± 0.07 | 0.80 ± 0.74 | 0.91 ± 0.86 | 0.08 ± 0.02 | 0.11 ± 0.05 | 0.02 ± 0.00 | 0.02 ± 0.01 | 0.15 ± 0.11 | 0.03 ± 0.01 | 0.43 ± 0.29 | 0.17 ± 0.11 | 0.65 ± 0.37 |
| Anger | 0.17 ± 0.11 | 1.56 ± 1.52 | 0.57 ± 0.53 | 0.16 ± 0.11 | 0.03 ± 0.01 | 0.01 ± 0.00 | 0.00 ± 0.00 | 0.04 ± 0.03 | 0.01 ± 0.00 | 0.87 ± 0.83 | 0.08 ± 0.04 | 0.20 ± 0.12 |
| Contempt | 3.79 ± 3.45 | 0.22 ± 0.03 | 0.53 ± 0.30 | 1.07 ± 0.66 | 2.46 ± 1.51 | 3.76 ± 3.10 | 0.15 ± 0.02 | 0.24 ± 0.06 | 0.18 ± 0.01 | 0.21 ± 0.02 | 0.21 ± 0.03 | 3.26 ± 2.00 |
| Valence | −5.11 ± 3.52 | −0.94 ± 1.95 | −2.13 ± 3.31 | −0.52 ± 1.00 | 2.11 ± 2.76 | 2.03 ± 1.99 | 11.67 ± 7.13 | 0.79 ± 1.68 | 1.46 ± 2.39 | 2.70 ± 2.06 | 0.51 ± 1.57 | −4.89 ± 3.03 |
| Engagement | 9.14 ± 3.79 | 10.79 ± 3.34 | 10.55 ± 4.15 | 8.70 ± 1.87 | 11.73 ± 2.67 | 4.34 ± 2.94 | 18.88 ± 9.91 | 8.17 ± 1.89 | 11.59 ± 3.45 | 9.28 ± 2.85 | 7.99 ± 1.62 | 13.39 ± 3.67 |
| Smile | 3.06 ± 1.72 | 2.25 ± 2.01 | 2.44 ± 1.82 | 3.03 ± 0.94 | 7.19 ± 2.22 | 2.82 ± 2.15 | 12.58 ± 7.40 | 4.80 ± 1.35 | 4.01 ± 2.26 | 5.39 ± 2.05 | 4.59 ± 1.34 | 4.15 ± 2.39 |
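To make the emotion-by-AOI table easier to query, the short Python sketch below transcribes only the mean values (the ± spreads are omitted) and ranks the label components for a chosen emotion. The names `AOIS`, `MEANS`, and `rank_aois` are hypothetical helpers introduced here for illustration; they are not part of the BioSensory App or the authors' analysis pipeline.

```python
# Mean values transcribed from the emotion-by-AOI table (± spreads omitted).
# AOIS, MEANS and rank_aois are illustrative helpers, not the BioSensory App API.
AOIS = ["Bar Code", "Claims", "Company Logo", "Image", "Ingredients",
        "Manufacturer", "Net Content", "Nutrition Facts", "Nutrition Squares",
        "Product Denomination", "Products Name", "Suggested Use"]

MEANS = {
    "Joy":        [0.02, 2.24, 1.82, 1.39, 4.78, 1.51, 11.82, 2.49, 2.88, 1.44, 2.88, 3.54],
    "Fear":       [1.82, 0.53, 0.42, 1.18, 2.83, 0.01, 0.07, 1.35, 2.15, 1.52, 3.04, 1.10],
    "Disgust":    [0.47, 0.65, 0.56, 0.99, 0.44, 0.44, 0.31, 1.21, 0.92, 0.52, 1.61, 0.60],
    "Sadness":    [0.09, 0.80, 0.91, 0.08, 0.11, 0.02, 0.02, 0.15, 0.03, 0.43, 0.17, 0.65],
    "Anger":      [0.17, 1.56, 0.57, 0.16, 0.03, 0.01, 0.00, 0.04, 0.01, 0.87, 0.08, 0.20],
    "Contempt":   [3.79, 0.22, 0.53, 1.07, 2.46, 3.76, 0.15, 0.24, 0.18, 0.21, 0.21, 3.26],
    "Valence":    [-5.11, -0.94, -2.13, -0.52, 2.11, 2.03, 11.67, 0.79, 1.46, 2.70, 0.51, -4.89],
    "Engagement": [9.14, 10.79, 10.55, 8.70, 11.73, 4.34, 18.88, 8.17, 11.59, 9.28, 7.99, 13.39],
    "Smile":      [3.06, 2.25, 2.44, 3.03, 7.19, 2.82, 12.58, 4.80, 4.01, 5.39, 4.59, 4.15],
}


def rank_aois(emotion: str) -> list[tuple[str, float]]:
    """Return (AOI, mean) pairs sorted from highest to lowest mean for one emotion."""
    values = MEANS[emotion]
    # Guard against transcription errors: every emotion needs one value per AOI.
    if len(values) != len(AOIS):
        raise ValueError(f"{emotion}: expected {len(AOIS)} values, got {len(values)}")
    # sorted() is stable, so AOIs with equal means keep their table order.
    return sorted(zip(AOIS, values), key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for emotion in MEANS:
        ranked = rank_aois(emotion)
        top, bottom = ranked[0], ranked[-1]
        print(f"{emotion}: highest = {top[0]} ({top[1]}), lowest = {bottom[0]} ({bottom[1]})")
```

Run as a script, it prints the AOI with the highest and lowest mean for each emotion; for example, Net Content has the highest mean valence (11.67) and Bar Code the lowest (−5.11). Because several AOIs show large ± spreads, such rankings are descriptive only and do not indicate statistically significant differences.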

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fuentes, S.; Gonzalez Viejo, C.; Torrico, D.D.; Dunshea, F.R. Digital Integration and Automated Assessment of Eye-Tracking and Emotional Response Data Using the BioSensory App to Maximize Packaging Label Analysis. Sensors 2021, 21, 7641. https://doi.org/10.3390/s21227641
