A Two-Filter Approach for State Estimation Utilizing Quantized Output Data

Abstract

1. Introduction

Main Contributions

- Developing an explicit model (of GMM form) for the PMF of the quantized output, for both finite- and infinite-level quantizers, so that the filtering and smoothing recursions can be solved in closed form within a Bayesian framework;

- Designing Gaussian sum filtering and smoothing algorithms for quantized data that provide closed-form expressions for the state estimates and for the filtering and smoothing PDFs.
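The first contribution rests on writing the PMF of a quantized output as a Gaussian mixture in the state. The sketch below illustrates the idea under simplifying assumptions that are ours, not the paper's: a scalar unquantized output $z_t = C x_t + v_t$ with $v_t \sim \mathcal{N}(0, R)$ and $C \neq 0$, and a quantization cell $[a, b)$ with finite bounds (the unbounded end cells of a finite-level quantizer need separate treatment). Then $P(y_t \mid x_t) = \int_a^b \mathcal{N}(\eta; C x_t, R)\, d\eta$, and a K-point Gauss–Legendre rule turns this integral into a weighted sum of K Gaussian kernels in $x_t$, because $\mathcal{N}(\eta; C x, R) = |C|^{-1} \mathcal{N}(x; \eta / C, R / C^2)$. The helper names (`likelihood_gmm`, `likelihood_exact`, `likelihood_approx`) and the uniform quantizer in the demo are hypothetical.

```python
import math

import numpy as np


def gauss_pdf(x, mean, var):
    """Scalar Gaussian density N(x; mean, var); broadcasts over arrays."""
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def likelihood_gmm(a, b, C, R, K):
    """GMM-form approximation of P(y_t | x_t) for a finite cell [a, b).

    Returns (weights, means, variances) such that
    P(y_t | x_t) ~= sum_k weights[k] * N(x_t; means[k], variances[k]).
    """
    tau, w = np.polynomial.legendre.leggauss(K)  # nodes and weights on [-1, 1]
    eta = 0.5 * (b - a) * tau + 0.5 * (b + a)     # nodes mapped onto [a, b]
    weights = 0.5 * (b - a) * w / abs(C)          # interval Jacobian and 1/|C| factor
    means = eta / C                               # N(eta; C x, R) rewritten as a kernel in x
    variances = np.full(K, R / C**2)
    return weights, means, variances


def likelihood_exact(x, a, b, C, R):
    """Exact P(a <= C x + v < b) with v ~ N(0, R), via the error function."""
    s = math.sqrt(2.0 * R)
    return 0.5 * (math.erf((b - C * x) / s) - math.erf((a - C * x) / s))


def likelihood_approx(x, weights, means, variances):
    """Evaluate the Gaussian-sum approximation at a scalar state x."""
    return float(np.sum(weights * gauss_pdf(x, means, variances)))


if __name__ == "__main__":
    C, R, delta = 1.0, 0.5, 3.0
    a, b = -0.5 * delta, 0.5 * delta  # cell of level y_t = 0 for a hypothetical uniform quantizer
    xs = np.linspace(-4.0, 4.0, 81)
    for K in (2, 4, 8, 16):
        wts, mus, vrs = likelihood_gmm(a, b, C, R, K)
        err = max(abs(likelihood_approx(x, wts, mus, vrs) - likelihood_exact(x, a, b, C, R)) for x in xs)
        print(f"K={K:2d}  max abs error={err:.2e}")
```

Comparing against the exact error-function value shows how K controls accuracy; for a fixed noise variance, wider cells make the integrand relatively more peaked on the quadrature interval, which is consistent with the observation in Section 4.1 that larger quantization steps call for larger K.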

2. Statement of the Problem

2.1. System Model

2.2. Quantizer Model

2.3. Problem Definition

3. Gaussian Sum Filtering and Smoothing for Quantized Data

3.1. Gaussian Mixture Models

3.2. General Bayesian Framework

3.3. Computing an Explicit Model of $p(y_t \mid x_t)$

3.4. Understanding the Gaussian Sum Filtering and Smoothing Algorithms

3.5. Gaussian Sum Filtering for Quantized Data

3.6. Computing the State Estimator from a GMM

3.7. Backward Filtering for Quantized Data

| Algorithm 1 Gaussian sum filter algorithm for quantized output data |

| 1 Input: The PDF of the initial state , e.g., , , , . The points of the Gauss–Legendre quadrature . |

| 20 Output: The state estimate in (10), the covariance matrix of the estimation error in (12), the filtering PDFs , the predictive PDFs , and the set , for . |
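Algorithm 1 returns a state estimate and an error covariance computed from a Gaussian mixture. Assuming, as is usual for Gaussian sum filters, that (10) and (12) are the mean and covariance of the filtering GMM (the equations themselves are not reproduced in this extract), they follow from the standard mixture moment formulas $\hat{x} = \sum_i w_i \mu_i$ and $P = \sum_i w_i [P_i + (\mu_i - \hat{x})(\mu_i - \hat{x})^\top]$ with normalized weights. The function name `gmm_mean_cov` is hypothetical.

```python
import numpy as np


def gmm_mean_cov(weights, means, covs):
    """Mean and covariance of the mixture sum_i w_i N(x; means[i], covs[i]).

    weights: shape (M,), need not be normalized
    means:   shape (M, n)
    covs:    shape (M, n, n)
    """
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                        # normalize the mixture weights
    mu = np.asarray(means, dtype=float)
    P = np.asarray(covs, dtype=float)
    x_hat = w @ mu                         # E[x] = sum_i w_i mu_i
    d = mu - x_hat                         # deviation of each component mean
    # Within-component spread plus spread of the component means.
    cov = np.einsum("i,ijk->jk", w, P) + np.einsum("i,ij,ik->jk", w, d, d)
    return x_hat, cov
```

For a symmetric two-component mixture with means at -1 and 1 and variances 0.2, the estimate is 0 and the variance is 0.2 + 1 = 1.2, which shows why a GMM covariance can be much larger than any single component covariance.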

| Algorithm 2 Backward-filtering algorithm for quantized output data |

| 1 Input: The initial backward measurement given in (39), e.g., , , , , , and . The set for computed in Algorithm 1. |

| 19 Output: The backward prediction and the backward measurement update for . |
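The backward filter propagates the likelihood of future measurements, $p(y_{t:N} \mid x_t)$, rather than a PDF. A minimal scalar sketch can keep every backward quantity as a Gaussian sum $\sum_j c_j \mathcal{N}(x; m_j, s_j)$, under assumptions that are ours rather than the paper's: a scalar model $x_{t+1} = A x_t + w_t$ with $w_t \sim \mathcal{N}(0, Q)$ and $A \neq 0$, and a quantized-output likelihood already written as a sum of Gaussian kernels in $x_t$ (for example via the Gauss–Legendre quadrature listed among the inputs of Algorithm 1). The backward prediction uses $\int \mathcal{N}(x'; A x, Q)\, \mathcal{N}(x'; m, s)\, dx' = |A|^{-1} \mathcal{N}(x; m/A, (Q+s)/A^2)$, and the backward measurement update uses the product rule for two Gaussian kernels. All function names are hypothetical.

```python
import numpy as np


def gauss_pdf(x, mean, var):
    """Scalar Gaussian density N(x; mean, var); broadcasts over arrays."""
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def evaluate(x, c, m, s):
    """Evaluate the Gaussian sum sum_j c_j N(x; m_j, s_j) at a scalar x."""
    c, m, s = (np.asarray(z, dtype=float) for z in (c, m, s))
    return float(np.sum(c * gauss_pdf(x, m, s)))


def backward_predict(c, m, s, A, Q):
    """Backward prediction: map p(y_{t+1:N} | x_{t+1}) to p(y_{t+1:N} | x_t).

    Uses int N(x'; A x, Q) N(x'; m, s) dx' = N(x; m / A, (Q + s) / A^2) / |A|,
    which requires A != 0 (an assumption of this sketch).
    """
    c, m, s = (np.asarray(z, dtype=float) for z in (c, m, s))
    return c / abs(A), m / A, (Q + s) / A**2


def backward_update(c, m, s, w, mu, v):
    """Backward measurement update: multiply the backward prediction by a
    Gaussian-sum likelihood sum_k w_k N(x; mu_k, v_k) of the quantized y_t.
    """
    c, m, s, w, mu, v = (np.asarray(z, dtype=float) for z in (c, m, s, w, mu, v))
    tot = s[:, None] + v[None, :]
    # N(x; m, s) N(x; mu, v) = N(m; mu, s + v) N(x; (m v + mu s) / (s + v), s v / (s + v))
    c_new = c[:, None] * w[None, :] * gauss_pdf(m[:, None], mu[None, :], tot)
    m_new = (m[:, None] * v[None, :] + mu[None, :] * s[:, None]) / tot
    s_new = s[:, None] * v[None, :] / tot
    return c_new.ravel(), m_new.ravel(), s_new.ravel()
```

The number of components grows multiplicatively at every update (M backward components times K likelihood components), which is why a Gaussian sum reduction step is needed in practice.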

3.8. Smoothing Algorithm with Quantized Data

| Algorithm 3 Gaussian sum smoothing algorithm for quantized output data |

| 1 Input: The PDFs and obtained from Algorithm 1 and obtained from Algorithm 2. |

| 2 Save the PDF |

| 13 Output: The state estimate in (11), the covariance matrix of the estimation error in (13), and the smoothing PDFs , for . |
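Algorithm 3 combines the forward results of Algorithm 1 with the backward results of Algorithm 2. One common form of the two-filter formula is $p(x_t \mid y_{1:N}) \propto p(x_t \mid y_{1:t-1})\, p(y_{t:N} \mid x_t)$; exactly which forward and backward quantities Algorithm 3 pairs cannot be recovered from this extract, so treat the pairing below as an assumption. With both factors stored as scalar Gaussian sums, the product is again a Gaussian sum whose component weights pick up the factor $\mathcal{N}(a_i; m_j, P_i + s_j)$. The function `two_filter_smooth` is hypothetical.

```python
import numpy as np


def gauss_pdf(x, mean, var):
    """Scalar Gaussian density N(x; mean, var); broadcasts over arrays."""
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def two_filter_smooth(alpha, a, P, c, m, s):
    """Normalized GMM proportional to
    [sum_i alpha_i N(x; a_i, P_i)] * [sum_j c_j N(x; m_j, s_j)].

    First factor: forward (predictive) GMM; second: backward likelihood.
    """
    alpha, a, P, c, m, s = (np.asarray(z, dtype=float) for z in (alpha, a, P, c, m, s))
    tot = P[:, None] + s[None, :]
    w = alpha[:, None] * c[None, :] * gauss_pdf(a[:, None], m[None, :], tot)
    mean = (a[:, None] * s[None, :] + m[None, :] * P[:, None]) / tot
    var = P[:, None] * s[None, :] / tot
    w = w.ravel()
    return w / w.sum(), mean.ravel(), var.ravel()
```

The smoothed state estimate is then the weighted mean of the returned components (for example `np.sum(w * mu)`), computed in the same way as for a filtering GMM.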

3.9. Computing the Smoothing Joint PDF

| Algorithm 4 Gaussian sum smoother to compute for quantized output data |

| 1 Input: The PDF obtained in Algorithm 1, computed in Algorithm 2, and the PDF given in Equation (5). |

| 11 Compute and store according to Theorem 5 with , , , , , and given in (63). |

| 12 Output: The smoothing PDFs , for . |
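Algorithm 4 targets the joint smoothing PDF of consecutive states. A standard factorization is $p(x_t, x_{t+1} \mid y_{1:N}) \propto p(x_t \mid y_{1:t})\, p(x_{t+1} \mid x_t)\, p(y_{t+1:N} \mid x_{t+1})$. The sketch below assumes a scalar linear model $x_{t+1} = A x_t + w_t$ with $w_t \sim \mathcal{N}(0, Q)$, a filtering GMM $\sum_i \alpha_i \mathcal{N}(x_t; a_i, P_i)$, and a backward likelihood $\sum_j c_j \mathcal{N}(x_{t+1}; m_j, s_j)$. For each pair (i, j), the first two factors form a bivariate Gaussian over $z = [x_t, x_{t+1}]$, and the third acts like a scalar measurement $m_j$ of $x_{t+1}$ with noise variance $s_j$, so one Kalman-style update yields the joint component. This is our illustration of the factorization, not Theorem 5 itself; all names are hypothetical.

```python
import numpy as np


def gauss_pdf(x, mean, var):
    """Scalar Gaussian density N(x; mean, var)."""
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def gauss2_pdf(z, mean, cov):
    """Bivariate Gaussian density N(z; mean, cov)."""
    d = np.asarray(z, dtype=float) - mean
    quad = d @ np.linalg.solve(cov, d)
    return float(np.exp(-0.5 * quad) / (2.0 * np.pi * np.sqrt(np.linalg.det(cov))))


def joint_smoothing(alpha, a, P, c, m, s, A, Q):
    """Components (unnormalized weights, means, covs) of the GMM over
    z = [x_t, x_{t+1}] proportional to
    p(x_t | y_{1:t}) p(x_{t+1} | x_t) p(y_{t+1:N} | x_{t+1}).
    """
    weights, means, covs = [], [], []
    H = np.array([0.0, 1.0])  # selects x_{t+1} from z
    for al, ai, Pi in zip(alpha, a, P):
        mu = np.array([ai, A * ai])  # mean of (x_t, x_{t+1}) before the backward factor
        S = np.array([[Pi, A * Pi], [A * Pi, A * A * Pi + Q]])
        for cj, mj, sj in zip(c, m, s):
            innov_var = H @ S @ H + sj  # A^2 P_i + Q + s_j
            gain = S @ H / innov_var
            weights.append(al * cj * gauss_pdf(mj, H @ mu, innov_var))
            means.append(mu + gain * (mj - H @ mu))
            covs.append(S - np.outer(gain, H @ S))
    return np.array(weights), np.array(means), np.array(covs)
```

After normalizing the weights, the components give quantities such as $E[x_t x_{t+1} \mid y_{1:N}]$, which EM-based system identification methods commonly require.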

4. Numerical Example

4.1. Example 1: First-Order System

- The filtering and smoothing PDFs are non-Gaussian, even though the process and output noises in (1) and (2) are Gaussian, because quantization is a nonlinear (piecewise-constant) operation on the output;

- The accuracy of the standard and quantized Kalman filters and smoothers decreased as the quantization step increased;

- The state estimates obtained with the particle filter and smoother were similar to those obtained with the Gaussian sum filter and smoother. However, the Gaussian sum filter and smoother characterized the filtering and smoothing PDFs better than the particle filter and smoother did. An accurate characterization of a PDF is important when higher-order moments must be computed, especially in system identification tasks;

- To implement the Gaussian sum filter and smoother, the user must choose the parameters K (the order of the Gauss–Legendre quadrature, which defines the quality of the approximation) and (the number of Gaussian components kept after the Gaussian sum reduction algorithm). These parameters can be tuned by trial and error in a simulation study and should be set as a trade-off between computational cost and estimation accuracy: a large value of K produces an accurate estimate, but at a high computational load;

- The larger the quantization step, the larger the number of Gaussian components, K, needed to approximate accurately. Even so, for a large quantization step, the value of K needed to obtain a good approximation of the filtering and smoothing PDFs is small relative to the number of particles that the particle filter and smoother require to obtain similar results;

- The maximum number of Gaussian components kept after the Gaussian sum reduction procedure is important for the accuracy of the approximation. In the simulations, was used. Moreover, once an adequate was chosen, increasing this value did not significantly improve the estimates. However, increasing strongly affected the computational cost of the algorithm (and hence its execution time), because the Gaussian sum reduction procedure (e.g., Kullback–Leibler reduction) takes longer when it has to reduce a larger number of Gaussian components;

- The execution time of the Gaussian sum smoother was small for all values of , because in each case a relatively small number of Gaussian components was used to approximate . In contrast, the execution time of the particle smoother varied with . As decreased, the -norm between the Gaussian sum smoother and the ground truth decreased, and the particle smoother required more particles to achieve a comparable -norm with respect to the ground truth.
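The observations above about the number of components kept after reduction depend on how the Gaussian sum reduction is carried out. As an illustration of the Kullback–Leibler reduction mentioned there, the sketch below implements a naive version of Runnalls' greedy scheme for scalar mixtures: it repeatedly merges the pair of components whose moment-preserving merge has the smallest upper bound on the Kullback–Leibler discrepancy, until at most `max_components` remain. This is our simplified illustration (scalar components, exhaustive pair search with cubic overall cost), not necessarily the reduction settings used in the paper; all function names are hypothetical.

```python
import numpy as np


def merge(wi, mi, vi, wj, mj, vj):
    """Moment-preserving merge of two scalar Gaussian components."""
    w = wi + wj
    m = (wi * mi + wj * mj) / w
    v = (wi * vi + wj * vj) / w + wi * wj * (mi - mj) ** 2 / w**2
    return w, m, v


def runnalls_cost(wi, mi, vi, wj, mj, vj):
    """Runnalls' upper bound on the KL discrepancy caused by merging i and j."""
    w, _, v = merge(wi, mi, vi, wj, mj, vj)
    return 0.5 * (w * np.log(v) - wi * np.log(vi) - wj * np.log(vj))


def reduce_gmm(weights, means, variances, max_components):
    """Greedily merge components until at most max_components remain."""
    w, m, v = (list(map(float, z)) for z in (weights, means, variances))
    while len(w) > max_components:
        best, pair = np.inf, None
        for i in range(len(w)):
            for j in range(i + 1, len(w)):
                cost = runnalls_cost(w[i], m[i], v[i], w[j], m[j], v[j])
                if cost < best:
                    best, pair = cost, (i, j)
        i, j = pair
        wij, mij, vij = merge(w[i], m[i], v[i], w[j], m[j], v[j])
        for k in (j, i):  # j > i, so deleting j first keeps index i valid
            del w[k], m[k], v[k]
        w.append(wij)
        m.append(mij)
        v.append(vij)
    return np.array(w), np.array(m), np.array(v)
```

Because each merge preserves the overall mean and variance of the mixture, reduction trades shape detail for speed, which is the accuracy versus execution-time trade-off described above; practical implementations cache pairwise costs instead of recomputing them on every pass.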

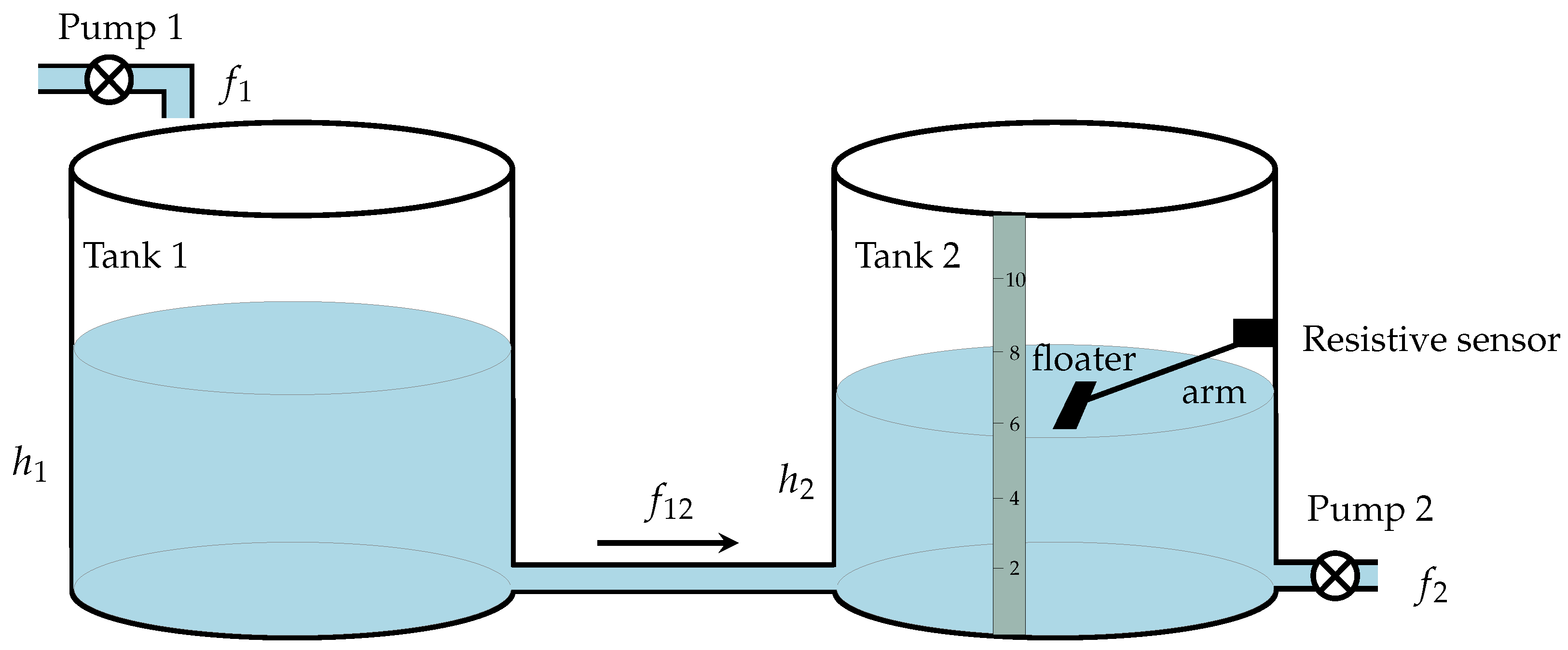

4.2. Real-World Application: Tank Liquid Level

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| PDF/PMF: | Probability density/mass function |

| GMM: | Gaussian mixture model |

| GSF/GSS: | Gaussian sum filter/smoother |

| PF/PS: | Particle filter/smoother |

| KF/KS: | Kalman filter/smoother |

| QKF/QKS: | Quantized Kalman filter/smoother |

| FLQ/ILQ: | Finite-/infinite-level quantizer |

| MSE: | Mean squared error |

| : | Number of Gaussian components kept after the reduction procedure |

| GT: | Ground truth obtained using the particle filter/smoother with a large number of particles |

| K: | Order of the polynomials in the Gauss–Legendre quadrature |

Appendix A. Technical Lemmata

Appendix B. Quantities to Perform the Gaussian Sum Filter

Appendix C. Quantities to Perform the Backward Filter

Appendix D. Quantities to Perform the Gaussian Sum Smoother

Appendix E. Quantities to Compute $p(x_{t+1}, x_t \mid y_{1:N})$


| FLQ: | ILQ: with in (7) FLQ: with | FLQ: | |

|---|---|---|---|

| ∞ | |||

| KS | QKS | GSS | PS | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| - | - | Par(6) | Par(8) | Par(10) | ||||||

| 7 | 0.0379 | 0.1494 | 0.4158 | 0.4221 | 0.4735 | 2.0420 | 3.3538 | 4.0462 | ||

| 5 | 0.0048 | 0.0044 | 0.2695 | 0.3706 | 0.4588 | 3.0074 | 3.5665 | 4.0192 | ||

| 3 | 0.0033 | 0.0043 | 0.2609 | 0.3491 | 0.4618 | 4.2471 | 5.5501 | 6.3408 | ||


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cedeño, A.L.; Albornoz, R.; Carvajal, R.; Godoy, B.I.; Agüero, J.C. A Two-Filter Approach for State Estimation Utilizing Quantized Output Data. Sensors 2021, 21, 7675. https://doi.org/10.3390/s21227675
