Segmentation Scale Effect Analysis in the Object-Oriented Method of High-Spatial-Resolution Image Classification

Abstract

1. Introduction

2. Materials and Methods

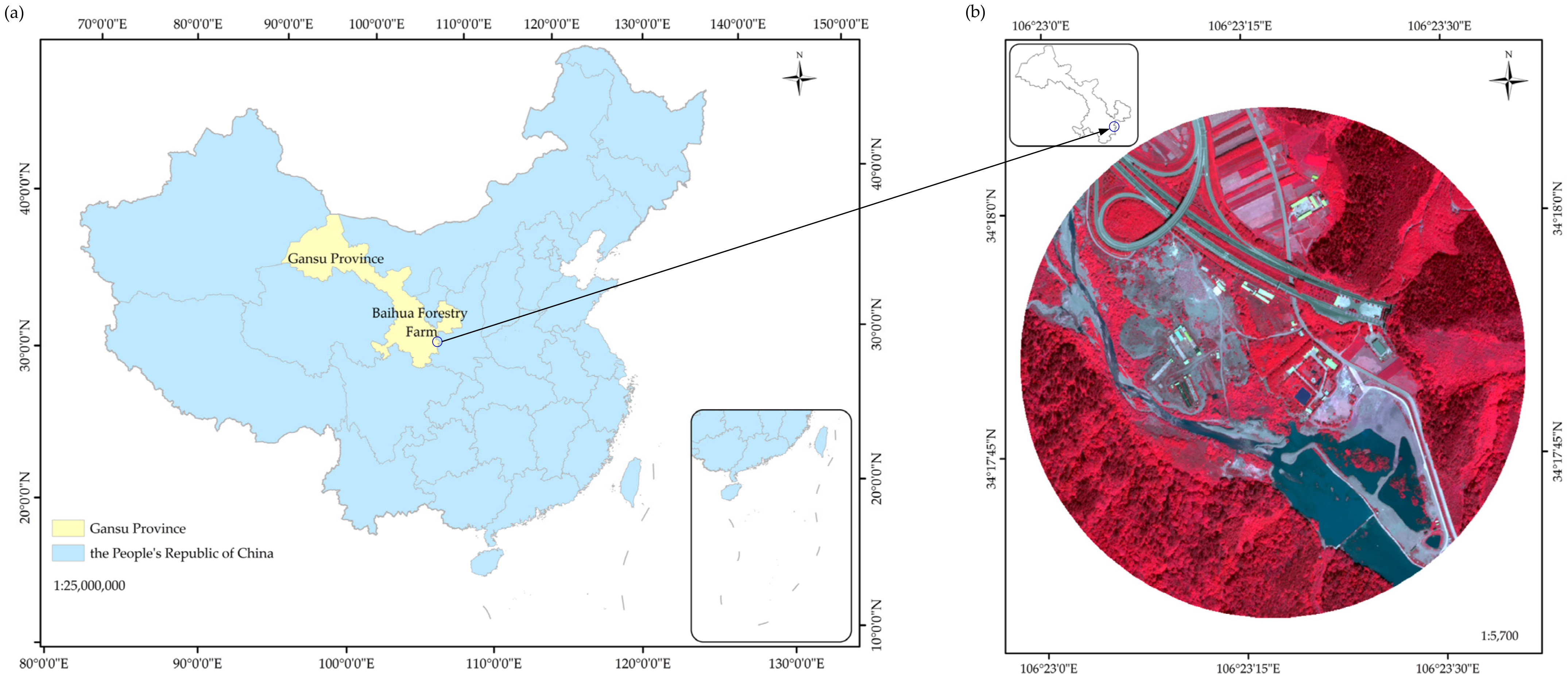

2.1. Study Area

2.2. Data Resource and Processing

2.3. Image Segmentation

2.4. Feature Selection and Extraction

2.5. CART Algorithm

2.6. Accuracy Assessment

3. Results

3.1. Image Segmentation

3.2. Feature Selection and Analysis

3.3. Object Areas and Segmentation Scale Relationship Analysis

3.4. Classification Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, M.Z.; Su, W.; Fu, Y.T.; Zhu, D.; Yao, C. Super-resolution enhancement of Sentinel-2 image for retrieving LAI and chlorophyll content of summer corn. Eur. J. Agron. 2019, 111, 125938.

- Su, W.; Zhang, M.Z.; Bian, D.H.; Liu, Z. Phenotyping of corn plants using unmanned aerial vehicle (UAV) images. Remote Sens. 2019, 11, 2021.

- Lee, J.S.; Grunes, M.R.; Ainsworth, T.L.; Du, L.J.; Schuler, D.L.; Cloude, S.R. Unsupervised classification using polarimetric decomposition and the complex Wishart classifier. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2249–2258.

- Krylov, V.A.; Moser, G.; Serpico, S.B.; Zerubia, J. Supervised High Resolution Dual Polarization SAR Image Classification by Finite Mixtures and Copulas. IEEE J. Sel. Top. Signal Process. 2011, 5, 554–566.

- Voisin, A.; Krylov, V.A.; Moser, G.; Serpico, S.B.; Zerubia, J. Supervised Classification of Multisensor and Multiresolution Remote Sensing Images with a Hierarchical Copula-Based Approach. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3346–3358.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Hao, S.; Chen, Y.F.; Hu, B.; Cui, Y.H. A classifier-combined method based on D-S evidence theory for the land cover classification of the Tibetan Plateau. Environ. Sci. Pollut. Res. 2021, 28, 16152–16164.

- Dobrinić, D.; Gašparović, M.; Medak, D. Sentinel-1 and 2 Time-Series for Vegetation Mapping Using Random Forest Classification: A Case Study of Northern Croatia. Remote Sens. 2021, 13, 2321.

- Yang, J.; He, Y.H.; Wang, Q.H. An automated method to parameterize segmentation scale by enhancing intrasegment homogeneity and intersegment heterogeneity. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1282–1283.

- Belward, A.S.; Skøien, J.O. Who launched what, when and why; trends in global land-cover observation capacity from civilian earth observation satellites. ISPRS J. Photogramm. Remote Sens. 2015, 103, 115–128.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz Feitosa, R.; van der Meer, F.; van der Werff, H.; van Coillie, F. Geographic object-based image analysis–towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Laliberte, A.S.; Rango, A.; Havstad, K.M.; Paris, J.F.; Beck, R.F.; McNeely, R.; Gonzalez, A.L. Object-Oriented Image Analysis for Mapping Shrub Encroachment from 1937 to 2003 in Southern New Mexico. Remote Sens. Environ. 2004, 93, 198–210.

- Pringle, R.M.; Syfert, M.; Webb, J.K.; Shine, R. Quantifying Historical Changes in Habitat Availability for Endangered Species: Use of Pixel- and Object-Based Remote Sensing. J. Appl. Ecol. 2009, 46, 544–553.

- Ma, L.; Cheng, L.; Li, C.M.; Liu, Y.X.; Ma, X.X. Training set size, scale, and features in Geographic Object-Based Image Analysis of very high resolution unmanned aerial vehicle imagery. ISPRS J. Photogramm. Remote Sens. 2015, 102, 14–27.

- Gil-Yepes, J.L.; Ruiz, L.A.; Recio, J.A.; Balaguer-Beser, A.; Hermosilla, T. Description and Validation of a New Set of Object-based Temporal Geostatistical Features for Land-use/landcover Change Detection. ISPRS J. Photogramm. Remote Sens. 2016, 121, 77–91.

- Huang, H.S.; Lan, Y.B.; Yang, A.Q.; Zhang, Y.L.; Deng, J.Z. Deep Learning versus Object-based Image Analysis (OBIA) in weed mapping of UAV imagery. Int. J. Remote Sens. 2020, 41, 3446–3479.

- Lourenço, P.; Teodoro, A.C.; Gonçalves, J.A.; Honrado, J.P.; Cunha, M.; Sillero, N. Assessing the Performance of Different OBIA Software Approaches for Mapping Invasive Alien Plants Along Roads with Remote Sensing Data. Int. J. Appl. Earth Obs. Geoinf. 2021, 95, 102263.

- Blaschke, T. Object Based Image Analysis for Remote Sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Myint, S.; Chandra, G.; Wang, L.; Gillette, S.C. Identifying Mangrove Species and Their Surrounding Land Use and Land Cover Classes Using an Object-Oriented Approach with a Lacunarity Spatial Measure. GISci. Remote Sens. 2008, 45, 188–208.

- Rizvi, I.A.; Mohan, B.K. Object-Based Image Analysis of High-Resolution Satellite Images Using Modified Cloud Basis Function Neural Network and Probabilistic Relaxation Labeling Process. IEEE Trans. Geosci. Remote Sens. 2011, 49, 4815–4820.

- Diesing, M.; Green, S.L.; Stephens, D.; Lark, R.M.; Stewart, H.A.; Dove, D. Mapping seabed sediments: Comparison of manual, geostatistical, object-based image analysis and machine learning approaches. Cont. Shelf Res. 2014, 84, 107–119.

- Roelfsema, C.; Kovacs, E.; Ortiz, J.C.; Wolff, N.H.; Callaghan, D.; Wettle, M.; Ronan, M.; Hamylton, S.M.; Mumby, P.J.; Phinn, S. Coral reef habitat mapping: A combination of object-based image analysis and ecological modelling. Remote Sens. Environ. 2018, 208, 27–41.

- Lombard, F.; Andrieu, J. Mapping Mangrove Zonation Changes in Senegal with Landsat Imagery Using an OBIA Approach Combined with Linear Spectral Unmixing. Remote Sens. 2021, 13, 1961.

- Guirado, E.; Blanco-Sacristán, J.; Rodríguez-Caballero, E.; Tabik, S.; Alcaraz-Segura, D.; Martínez-Valderrama, J.; Cabello, J. Mask R-CNN and OBIA Fusion Improves the Segmentation of Scattered Vegetation in Very High-Resolution Optical Sensors. Sensors 2021, 21, 320.

- Walter, V. Object-based Classification of Remote Sensing Data for Change Detection. ISPRS J. Photogramm. Remote Sens. 2004, 58, 225–238.

- Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

- Kim, M.; Warner, T.A.; Madden, M.; Atkinson, D.S. Multi-scale GEOBIA with very high spatial resolution digital aerial imagery: Scale, texture and image objects. Int. J. Remote Sens. 2011, 32, 2825–2850.

- Myint, S.W.; Gober, P.; Brazel, A.; Grossman-Clarke, S.; Wang, Q.H. Per-pixel vs. object-based classification of urban land cover extraction using high spatial resolution imagery. Remote Sens. Environ. 2011, 115, 1145–1164.

- Drăguţ, L.; Csillik, O.; Eisank, C.; Tiede, D. Automated parameterization for multi-scale image segmentation on multiple layers. ISPRS J. Photogramm. Remote Sens. 2014, 88, 119–127.

- Marghany, M. Genetic algorithm for oil spill automatic detection from ENVISAT satellite data. In Proceedings of the International Conference on Computational Science and Its Applications ICCSA 2013, Ho Chi Minh City, Vietnam, 24–27 June 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 587–598.

- Ohta, Y.I.; Kanade, T.; Sakai, T. Color information for region segmentation. Comput. Graph. Image Process. 1980, 13, 222–241.

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Wadsworth: Belmont, CA, USA, 1984.

- Chen, Q.; Zhao, Z.; Zhou, J.; Zeng, M.; Xia, J.S.; Sun, T.; Zhao, X. New Insights into the Pulang Porphyry Copper Deposit in Southwest China: Indication of Alteration Minerals Detected Using ASTER and WorldView-3 Data. Remote Sens. 2021, 13, 2798.

- Shayeganpour, S.; Tangestani, M.H.; Homayouni, S.; Vincent, R.K. Evaluating pixel-based vs. object-based image analysis approaches for lithological discrimination using VNIR data of Worldview-3. Front. Earth Sci. 2021, 15, 38–53.

- Im, J.; Jensen, J.R.; Tullis, J.A. Object-based change detection using correlation image analysis and image segmentation. Int. J. Remote Sens. 2008, 29, 399–423.

- Dronova, I.; Tiede, D.; Levick, S. Landscape analysis of wetland plant functional types: The effects of image segmentation scale, vegetation classes and classification methods. Remote Sens. Environ. 2012, 127, 357–369.

- Bao, W.; Yao, X. Research on Multifeature Segmentation Method of Remote Sensing Images Based on Graph Theory. J. Sens. 2016, 12, 8750927.

- Witharana, C.; Civco, D.L. Optimizing multi-resolution segmentation scale using empirical methods: Exploring the sensitivity of the supervised discrepancy measure Euclidean distance 2 (ED2). ISPRS J. Photogramm. Remote Sens. 2014, 87, 108–121.

- Tab, F.A.; Naghdy, G.; Mertins, A. Scalable multiresolution color image segmentation. Signal Process. 2006, 86, 1670–1687.

- eCognition Developer. eCognition Developer 9.0: Reference Book; Trimble Documentation; Trimble: Munich, Germany, 2014.

- Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 2004, 58, 239–258.

- Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Pearson, R.L.; Miller, L.D. Remote mapping of standing crop biomass for estimation of the productivity of the shortgrass Prairie. In Proceedings of the Eighth International Symposium on Remote Sensing of Environment, Ann Arbor, MI, USA, 2–6 October 1972; p. 1355.

- Richardson, A.J.; Wiegand, C.L. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Rodriguez-Galiano, V.F.; Chica-Olmo, M.; Abarca-Hernandez, F.; Atkinson, P.M.; Jeganathan, C. Random Forest classification of Mediterranean land cover using multi-seasonal imagery and multi-seasonal texture. Remote Sens. Environ. 2012, 121, 93–107.

- Voisin, A.; Krylov, V.A.; Moser, G.; Serpico, S.B.; Zerubia, J. Classification of Very High Resolution SAR Images of Urban Areas Using Copulas and Texture in a Hierarchical Markov Random Field Model. IEEE Geosci. Remote Sens. Lett. 2013, 10, 96–100.

- Wu, Q.; Zhong, R.; Zhao, W.; Song, K.; Du, L.M. Land-cover classification using GF-2 images and airborne lidar data based on Random Forest. Int. J. Remote Sens. 2019, 40, 2410–2426.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

- Laliberte, A.S.; Rango, A. Texture and scale in Object-based analysis of subdecimeter resolution Unmanned Aerial Vehicle (UAV) imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 761–770.

- Punia, M.; Joshi, P.; Porwal, M. Decision tree classification of land use land cover for Delhi, India using IRS-P6 AWiFS data. Expert Syst. Appl. 2011, 38, 5577–5583.

- Phiri, D.; Morgenroth, J.; Xu, C. Long-term land cover change in Zambia: An assessment of driving factors. Sci. Total Environ. 2019, 697, 134206.

- Phiri, D.; Simwanda, M.; Nyirenda, V.; Murayama, Y.; Ranagalage, M. Decision Tree Algorithms for Developing Rulesets for Object-Based Land Cover Classification. ISPRS Int. J. Geo-Inf. 2020, 9, 329.

- Elmahdy, S.; Ali, T.; Mohamed, M. Regional Mapping of Groundwater Potential in Ar Rub Al Khali, Arabian Peninsula Using the Classification and Regression Trees Model. Remote Sens. 2021, 13, 2300.

- Elmahdy, S.I.; Ali, T.A.; Mohamed, M.M. Flash Flood Susceptibility modeling and magnitude index using machine learning and geohydrological models: A modified hybrid approach. Remote Sens. 2020, 12, 2695.

- Avitabile, V.; Baccini, A.; Friedl, M.A.; Schmullius, C. Capabilities and limitations of Landsat and Landcover data for aboveground woody biomass estimation of Uganda. Remote Sens. Environ. 2012, 117, 366–380.

- Wang, Y.; Qi, Q.; Liu, Y.; Jiang, L.; Wang, J. Unsupervised segmentation parameter selection using the local spatial statistics for remote sensing image segmentation. Int. J. Appl. Earth Obs. Geoinf. 2019, 81, 98–109.

- Dao, P.D.; Mantripragada, K.; He, Y.H.; Qureshi, F.Z. Improving hyperspectral image segmentation by applying inverse noise weighting and outlier removal for optimal scale selection. ISPRS J. Photogramm. Remote Sens. 2021, 171, 348–366.

- Ming, D.P.; Li, J.; Wang, J.Y.; Zhang, M. Scale parameter selection by spatial statistics for GeOBIA: Using mean-shift based multi-scale segmentation as an example. ISPRS J. Photogramm. Remote Sens. 2015, 106, 28–41.

- Su, T.F.; Zhang, S.W. Local and global evaluation for remote sensing image segmentation. ISPRS J. Photogramm. Remote Sens. 2017, 130, 256–276.

- Hossain, M.D.; Chen, D.M. Segmentation for Object-based Image analysis (OBIA): A review of algorithms and challenges from remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2019, 150, 115–134.

- Radoux, J.; Bogaert, P. Accounting for the area of polygon sampling units for the prediction of primary accuracy assessment indices. Remote Sens. Environ. 2014, 142, 9–19.

- Kurtz, C.; Stumpf, A.E.; Malet, J.; Gançarski, P.; Puissant, A.; Passat, N. Hierarchical extraction of landslides from multiresolution remotely sensed optical images. ISPRS J. Photogramm. Remote Sens. 2014, 87, 122–136.

- Csillik, O. Fast segmentation and classification of very high resolution remote sensing data using SLIC superpixel. Remote Sens. 2017, 9, 243.

- Hadavand, A.; Saadatseresht, M.; Homayouni, S. Segmentation parameter selection for object-based land-cover mapping from ultra high resolution spectral and elevation data. Int. J. Remote Sens. 2017, 38, 3586–3607.

| Feature Type | Feature | Formula | Reference or Note |
|---|---|---|---|
| Spectral Bands | B1 (Coastal Blue, 427 nm) | $\bar{C}_k(v) = \frac{1}{n}\sum_{(x,y)\in v} C_k(x,y)$ | $C_k(x,y)$ is the spectral value of pixel (x, y) in band k, n is the total number of pixels in object v, and k is the spectral band |
| | B2 (Blue, 482 nm) | | |
| | B3 (Green, 547 nm) | | |
| | B4 (Yellow, 604 nm) | | |
| | B5 (Red, 660 nm) | | |
| | B6 (Red Edge, 723 nm) | | |
| | B7 (NIR1, 824 nm) | | |
| | B8 (NIR2, 914 nm) | | |
| | Brightness | $b(v) = \frac{1}{n_L}\sum_{i=1}^{n_L}\bar{C}_i(v)$ | $b(v)$ is the brightness of object v and $n_L$ is the total number of bands [40] |
| | Max Difference | $\Delta_{\max}(v) = \dfrac{\max_{i,j}\lvert\bar{C}_i(v)-\bar{C}_j(v)\rvert}{b(v)}$ | $\bar{C}_i(v)$ and $\bar{C}_j(v)$ are the mean values of object v in band i and band j [40] |
| Vegetation Indices | NDVI | (NIR − R)/(NIR + R) | R: red band; G: green band; NIR: near-infrared band [42,43,44,45] |
| | RVI | NIR/R | |
| | DVI | NIR − R | |
| | NDWI | (G − NIR)/(G + NIR) | |
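The object-level spectral features in the table above can be computed directly from a segmentation label image. The Python/NumPy sketch below illustrates this under stated assumptions and is not the authors' implementation. It assumes the image is an array of shape (bands, rows, columns) in the B1 to B8 order listed, and that each segment carries an integer id. It also assumes that NIR1 (B7) serves as "NIR" in the indices, that brightness is the unweighted mean of the band means, and that the indices are computed from object means rather than averaged per pixel. The helper name `object_features` is hypothetical.

```python
import numpy as np

# Assumed band order (B1..B8 -> array indices 0..7), as listed in the table.
GREEN, RED, NIR = 2, 4, 6  # B3 = Green, B5 = Red, B7 = NIR1 (assumed to be the "NIR" band)


def object_features(image, labels):
    """Per-object spectral features (hypothetical helper).

    image  : float array of shape (n_bands, rows, cols)
    labels : int array of shape (rows, cols); each value identifies one object v
    Returns {object_id: {feature_name: value}}.
    """
    n_bands = image.shape[0]
    pixels = image.reshape(n_bands, -1).astype(float)  # one column per pixel
    flat_labels = labels.ravel()
    features = {}
    for v in np.unique(flat_labels):
        in_object = flat_labels == v
        # Mean value of each band over the n pixels of object v
        means = pixels[:, in_object].mean(axis=1)
        # Brightness: unweighted mean of the n_L band means
        brightness = means.mean()
        # Max difference: largest |mean_i - mean_j| over all band pairs, divided by brightness
        max_diff = (means.max() - means.min()) / brightness
        g, r, nir = means[GREEN], means[RED], means[NIR]
        features[int(v)] = {
            "mean": means,
            "brightness": brightness,
            "max_diff": max_diff,
            "NDVI": (nir - r) / (nir + r),
            "RVI": nir / r,
            "DVI": nir - r,
            "NDWI": (g - nir) / (g + nir),
        }
    return features


# Usage: a 2 x 4 image with two objects (ids 0 and 1) and random positive reflectances
rng = np.random.default_rng(0)
img = rng.uniform(0.05, 0.5, size=(8, 2, 4))
seg = np.array([[0, 0, 1, 1], [0, 0, 1, 1]])
for obj_id, f in object_features(img, seg).items():
    print(obj_id, round(float(f["NDVI"]), 3), round(float(f["brightness"]), 3))
```

Computing the indices from object means keeps every feature on the same per-object footing as the band means. A per-pixel average of NDVI would give slightly different values.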

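| GLCM Texture | Formula | Reference or Note |
|---|---|---|
| Mean | $\mu = \sum_{i,j=0}^{N-1} i\,P_{i,j}$ | $P_{i,j}$ is the normalized GLCM value in row i and column j; N is the number of grey levels |
| Variance | $\sigma^2 = \sum_{i,j=0}^{N-1} P_{i,j}\,(i-\mu)^2$ | |
| Homogeneity | $\sum_{i,j=0}^{N-1} \frac{P_{i,j}}{1+(i-j)^2}$ | |
| Contrast | $\sum_{i,j=0}^{N-1} P_{i,j}\,(i-j)^2$ | |
| Dissimilarity | $\sum_{i,j=0}^{N-1} P_{i,j}\,\lvert i-j\rvert$ | |
| Entropy | $-\sum_{i,j=0}^{N-1} P_{i,j}\ln P_{i,j}$ | |
| Second Moment | $\sum_{i,j=0}^{N-1} P_{i,j}^2$ | |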
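The GLCM texture measures listed above can be illustrated with a small NumPy sketch. It assumes the image window has already been quantized to N integer grey levels and uses a single pixel offset. It builds a symmetric, normalized co-occurrence matrix. For a non-rectangular object, only pairs inside the object would be counted. These choices, the helper names `glcm` and `glcm_features`, and the exact formula variants (common Haralick-style definitions) are assumptions rather than the study's settings.

```python
import numpy as np


def glcm(img, levels, dx=1, dy=0, symmetric=True):
    """Normalized grey-level co-occurrence matrix P[i, j] (hypothetical helper).

    img    : 2-D int array already quantized to values in [0, levels)
    dx, dy : pixel offset between the reference pixel and its neighbour
    """
    rows, cols = img.shape
    counts = np.zeros((levels, levels), dtype=float)
    # Overlapping windows so that ref[r, c] and nbr[r, c] are (dy, dx) apart
    r0, r1 = max(0, -dy), min(rows, rows - dy)
    c0, c1 = max(0, -dx), min(cols, cols - dx)
    ref = img[r0:r1, c0:c1]
    nbr = img[r0 + dy:r1 + dy, c0 + dx:c1 + dx]
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    if symmetric:
        counts = counts + counts.T  # count each pair in both directions
    return counts / counts.sum()


def glcm_features(P):
    """Haralick-style texture measures from a normalized GLCM P."""
    n = P.shape[0]
    i, j = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    mean = np.sum(i * P)
    nonzero = P[P > 0]  # terms with P = 0 contribute 0 to the entropy
    return {
        "mean": mean,
        "variance": np.sum(P * (i - mean) ** 2),
        "homogeneity": np.sum(P / (1.0 + (i - j) ** 2)),
        "contrast": np.sum(P * (i - j) ** 2),
        "dissimilarity": np.sum(P * np.abs(i - j)),
        "entropy": -np.sum(nonzero * np.log(nonzero)),
        "second_moment": np.sum(P ** 2),
    }


# Usage: a 4 x 4 patch quantized to 4 grey levels, horizontal offset (dx=1)
patch = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 2, 2, 2],
                  [2, 2, 3, 3]])
print(glcm_features(glcm(patch, levels=4)))
```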

| Segmentation Scale | AUC | Precision | Recall | OA |
|---|---|---|---|---|
| 45 | 0.9455 | 0.9569 | 0.8636 | 0.9338 |
| 50 | 0.9240 | 0.8672 | 0.8185 | 0.9054 |
| 55 | 0.9199 | 0.7699 | 0.7379 | 0.9362 |
| 60 | 0.9373 | 0.9001 | 0.8488 | 0.8882 |
| 65 | 0.9704 | 0.9406 | 0.9479 | 0.9178 |
| 70 | 0.9182 | 0.7099 | 0.7132 | 0.9210 |
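The metrics in the table above can be computed from reference labels, predicted labels, and classifier scores. The NumPy sketch below treats a single class against all others. This is an assumption, since the averaging scheme behind the multi-class figures is not stated here. It computes AUC with the Mann-Whitney rank formulation and then picks the segmentation scale with the highest AUC from the tabulated values. The helper names are hypothetical.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Precision, recall and overall accuracy (OA) for one class versus the rest."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = np.sum(y_true & y_pred)    # correctly labelled as the class
    fp = np.sum(~y_true & y_pred)   # labelled as the class but belonging elsewhere
    fn = np.sum(y_true & ~y_pred)   # belonging to the class but missed
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    oa = np.mean(y_true == y_pred)  # share of samples labelled correctly
    return float(precision), float(recall), float(oa)


def auc(y_true, scores):
    """ROC AUC via the Mann-Whitney U statistic; tied scores get average ranks."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(scores, kind="mergesort")
    sorted_scores = scores[order]
    ranks = np.empty(len(scores))
    start = 0
    while start < len(scores):
        end = start
        while end + 1 < len(scores) and sorted_scores[end + 1] == sorted_scores[start]:
            end += 1
        ranks[order[start:end + 1]] = (start + end) / 2.0 + 1.0  # 1-based average rank
        start = end + 1
    n_pos = int(y_true.sum())
    n_neg = len(y_true) - n_pos
    u = ranks[y_true].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u / (n_pos * n_neg))


# AUC per segmentation scale, copied from the table above
SCALE_AUC = {45: 0.9455, 50: 0.9240, 55: 0.9199, 60: 0.9373, 65: 0.9704, 70: 0.9182}
best_scale = max(SCALE_AUC, key=SCALE_AUC.get)
print(best_scale, SCALE_AUC[best_scale])
```

With the tabulated values, `best_scale` evaluates to 65, the scale with the highest AUC (0.9704).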

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hao, S.; Cui, Y.; Wang, J. Segmentation Scale Effect Analysis in the Object-Oriented Method of High-Spatial-Resolution Image Classification. Sensors 2021, 21, 7935. https://doi.org/10.3390/s21237935


Chicago/Turabian Style: Hao, Shuang, Yuhuan Cui, and Jie Wang. 2021. "Segmentation Scale Effect Analysis in the Object-Oriented Method of High-Spatial-Resolution Image Classification" Sensors 21, no. 23: 7935. https://doi.org/10.3390/s21237935
