Vehicle Trajectory Estimation Based on Fusion of Visual Motion Features and Deep Learning

Abstract

1. Introduction

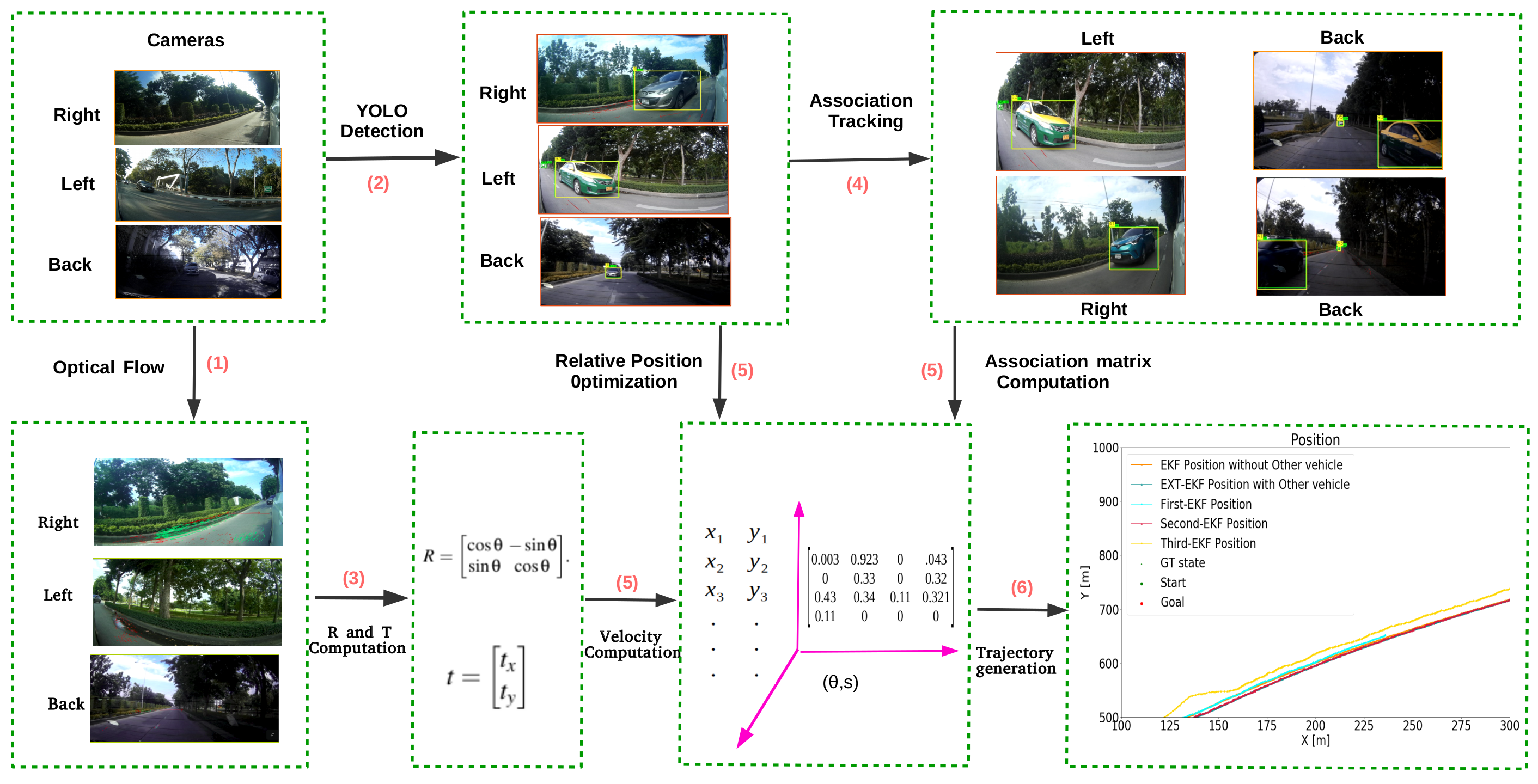

- We introduce a new method for estimation of the host vehicle’s linear and angular velocity that uses optical flow and RANSAC.

- We introduce a new method for estimating the instantaneous positions of any visible vehicles (target vehicles) relative to the host vehicle using YOLO, a camera calibration model, and a nonlinear optimization procedure.

- We introduce a new solution to the multiple target tracking (MTT) problem under conditions in which target vehicles switch from one camera view to another.

- We introduce a sensor fusion method utilizing an extended Kalman filter with novel system and sensor models.
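To make the last contribution concrete, the following is a minimal sketch of one predict/update cycle of a generic extended Kalman filter. It is not the system or sensor model proposed in this paper: the scalar state (a longitudinal gap to a target), the constant-speed motion model, the range measurement, and all names (`ekf_step`, `run_demo`, `h_cam`, `q`, `r`) are assumptions chosen only to illustrate the linearization step.

```python
import math


def ekf_step(x, p, z, v, dt, h_cam, q, r):
    """One predict/update cycle of a scalar extended Kalman filter.

    x, p  : prior state estimate (longitudinal gap, m) and its variance
    z     : measured range to the target (m)
    v     : assumed known relative speed (m/s); dt: time step (s)
    h_cam : assumed fixed vertical offset between camera and target (m)
    q, r  : process and measurement noise variances (hypothetical values)
    """
    # Predict: constant-speed motion model, whose Jacobian is F = 1.
    x_pred = x + v * dt
    p_pred = p + q

    # Update: nonlinear range measurement z = sqrt(x^2 + h_cam^2).
    z_pred = math.hypot(x_pred, h_cam)
    H = x_pred / z_pred          # Jacobian dz/dx evaluated at the prediction
    s = H * p_pred * H + r       # innovation variance
    k = p_pred * H / s           # Kalman gain
    x_new = x_pred + k * (z - z_pred)
    p_new = (1.0 - k * H) * p_pred
    return x_new, p_new


def run_demo(steps=50):
    """Track a synthetic target from a deliberately poor initial guess."""
    true_x, v, dt, h_cam = 20.0, 1.0, 0.1, 1.5
    x, p = 15.0, 25.0            # initial estimate and variance
    for _ in range(steps):
        true_x += v * dt
        z = math.hypot(true_x, h_cam)   # noise-free synthetic measurement
        x, p = ekf_step(x, p, z, v, dt, h_cam, q=1e-3, r=1e-2)
    return x, true_x, p


if __name__ == "__main__":
    estimate, truth, variance = run_demo()
    print(estimate, truth, variance)
```

The only difference from a linear Kalman filter is that the measurement Jacobian `H` is re-evaluated at each predicted state; a vector-valued state would replace the scalar products with matrix products.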

2. Related Work

3. Proposed Method

3.1. Camera Calibration

3.2. Linear and Angular Velocity Computation from Optical Flow

Algorithm 1: Velocity from Optical Flow.
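As a rough illustration of how RANSAC can reject outlying flow vectors when estimating velocity, the sketch below fits two unknowns, a linear velocity `v` and an angular velocity `w`, to measurements of the form `u = a*v + b*w`. This is a simplified stand-in and not Algorithm 1 itself: the per-feature coefficients `a` and `b` (which would come from the camera geometry), the inlier tolerance, the iteration count, and all function names are hypothetical.

```python
import random


def solve2(a11, a12, a21, a22, b1, b2):
    """Solve a 2x2 linear system by Cramer's rule; None if near-singular."""
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        return None
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det)


def least_squares(rows):
    """Fit u = a*v + b*w over rows of (a, b, u) via the normal equations."""
    saa = sum(a * a for a, _, _ in rows)
    sab = sum(a * b for a, b, _ in rows)
    sbb = sum(b * b for _, b, _ in rows)
    sau = sum(a * u for a, _, u in rows)
    sbu = sum(b * u for _, b, u in rows)
    return solve2(saa, sab, sab, sbb, sau, sbu)


def ransac_velocity(rows, iters=200, tol=0.05, seed=0):
    """Return ((v, w), inlier_count) for the largest consensus set found."""
    rng = random.Random(seed)
    best = []
    for _ in range(iters):
        # Minimal sample: two measurements determine the two unknowns.
        (a1, b1, u1), (a2, b2, u2) = rng.sample(rows, 2)
        model = solve2(a1, b1, a2, b2, u1, u2)
        if model is None:
            continue
        v, w = model
        inliers = [r for r in rows if abs(r[0] * v + r[1] * w - r[2]) < tol]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < 2:
        return None, len(best)
    # Refit on the consensus set only, so outliers do not bias the estimate.
    return least_squares(best), len(best)


def make_synthetic_flow(n=30, v_true=10.0, w_true=0.2, seed=1):
    """Synthetic measurements in which 3 of every 10 are gross outliers."""
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        u = a * v_true + b * w_true
        if i % 10 < 3:
            u += 5.0             # corrupt this measurement
        rows.append((a, b, u))
    return rows


if __name__ == "__main__":
    estimate, count = ransac_velocity(make_synthetic_flow())
    print(estimate, count)
```

The final least-squares refit over the consensus set is a common design choice: the minimal two-point sample is used only to identify inliers, and the reported estimate uses all of them.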

3.3. Object Detection and Relative Position Estimation Based on Deep Learning

3.3.1. Target Vehicle Detection

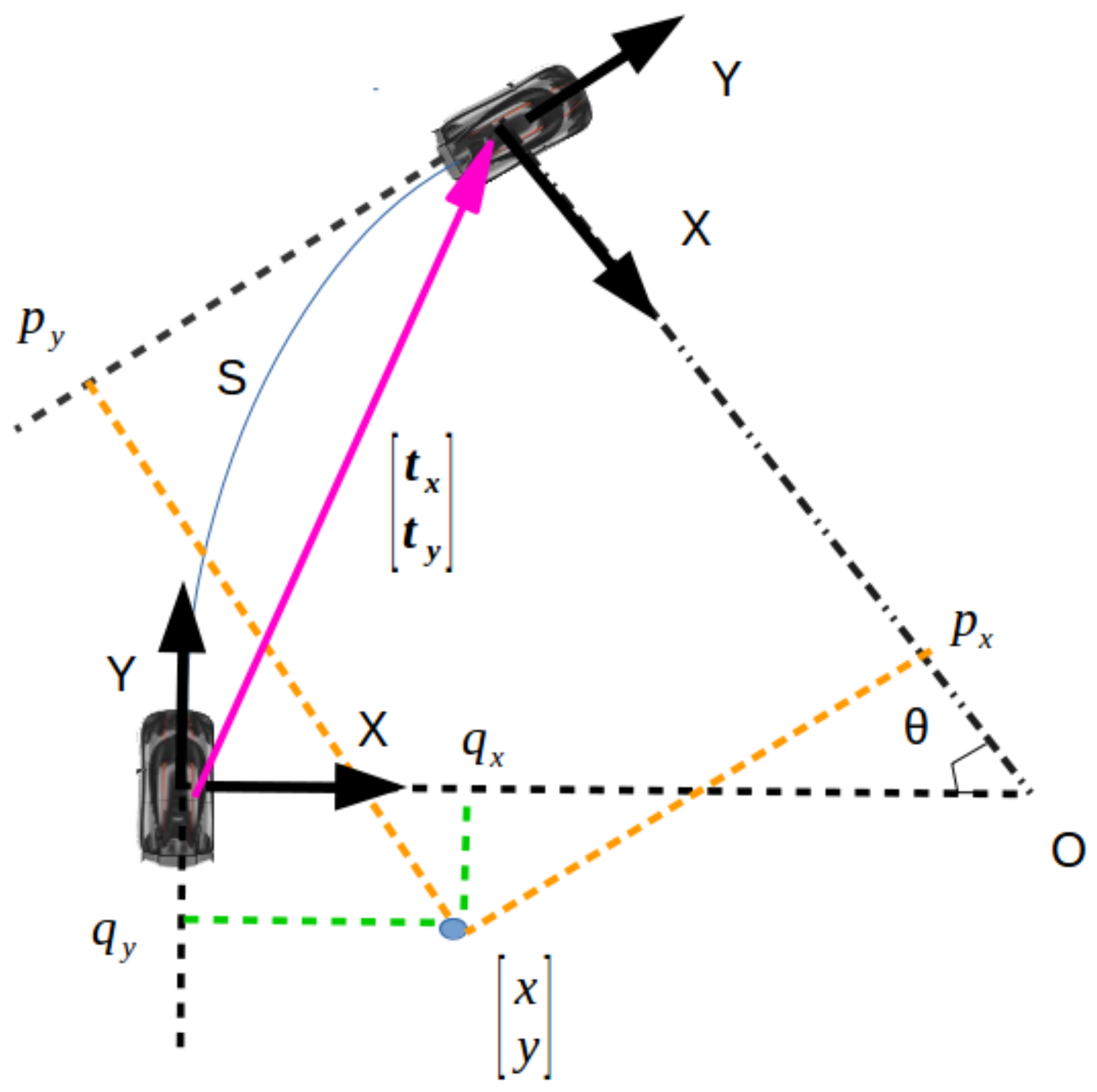

3.3.2. 3D Backprojection to Obtain Relative Position

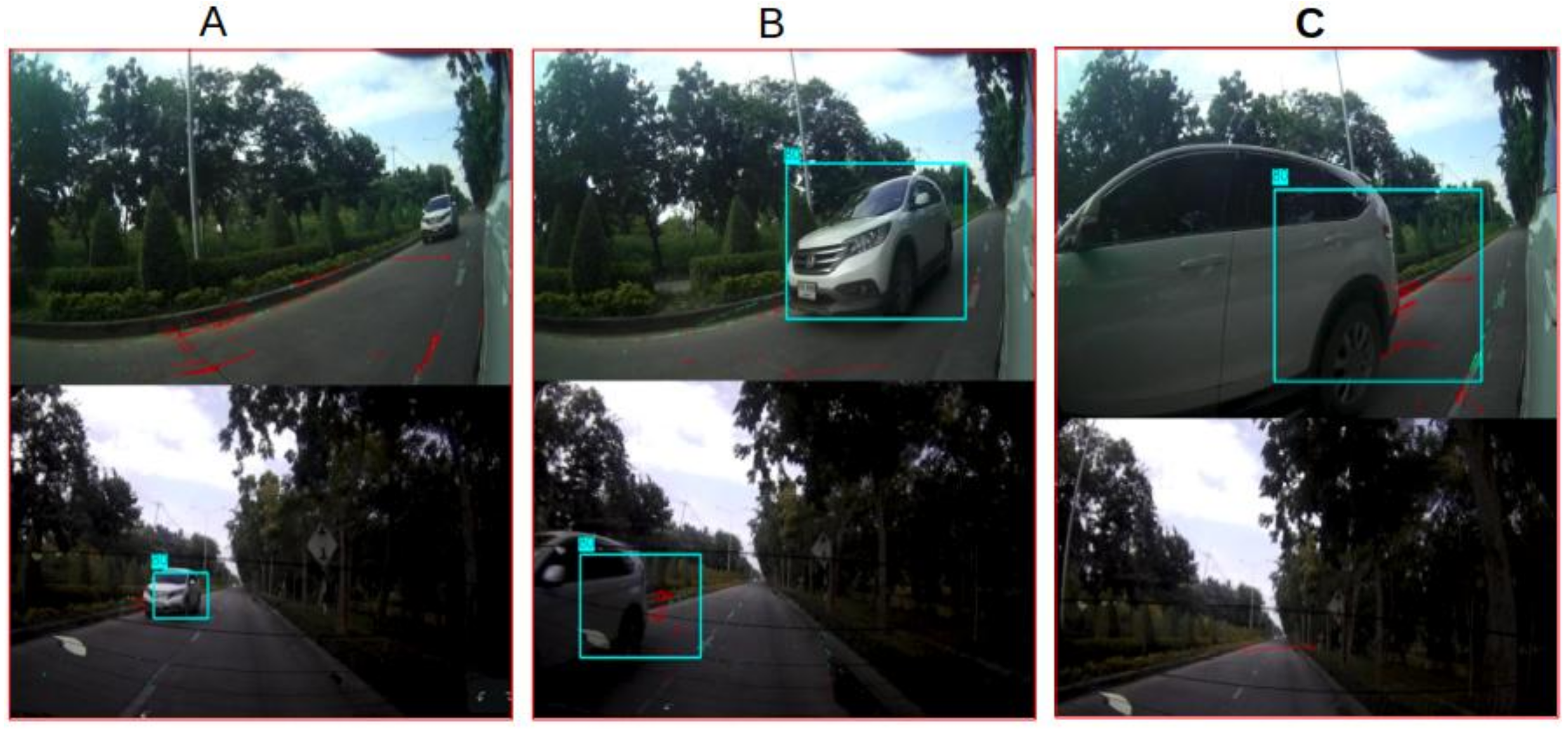

3.4. Visual Tracking and Camera Switch Processing

3.4.1. Visual Track Handling

3.4.2. Camera Switches

3.5. Vehicle Trajectory Estimation

3.5.1. Vehicle State

3.5.2. Observation Model

3.5.3. Initialization

3.5.4. Noise Parameters

3.5.5. Update Algorithm

4. Results and Discussion

4.1. Experiment I (Velocity Estimate Comparison Using Simulation Data)

4.1.1. Results

4.1.2. Discussion

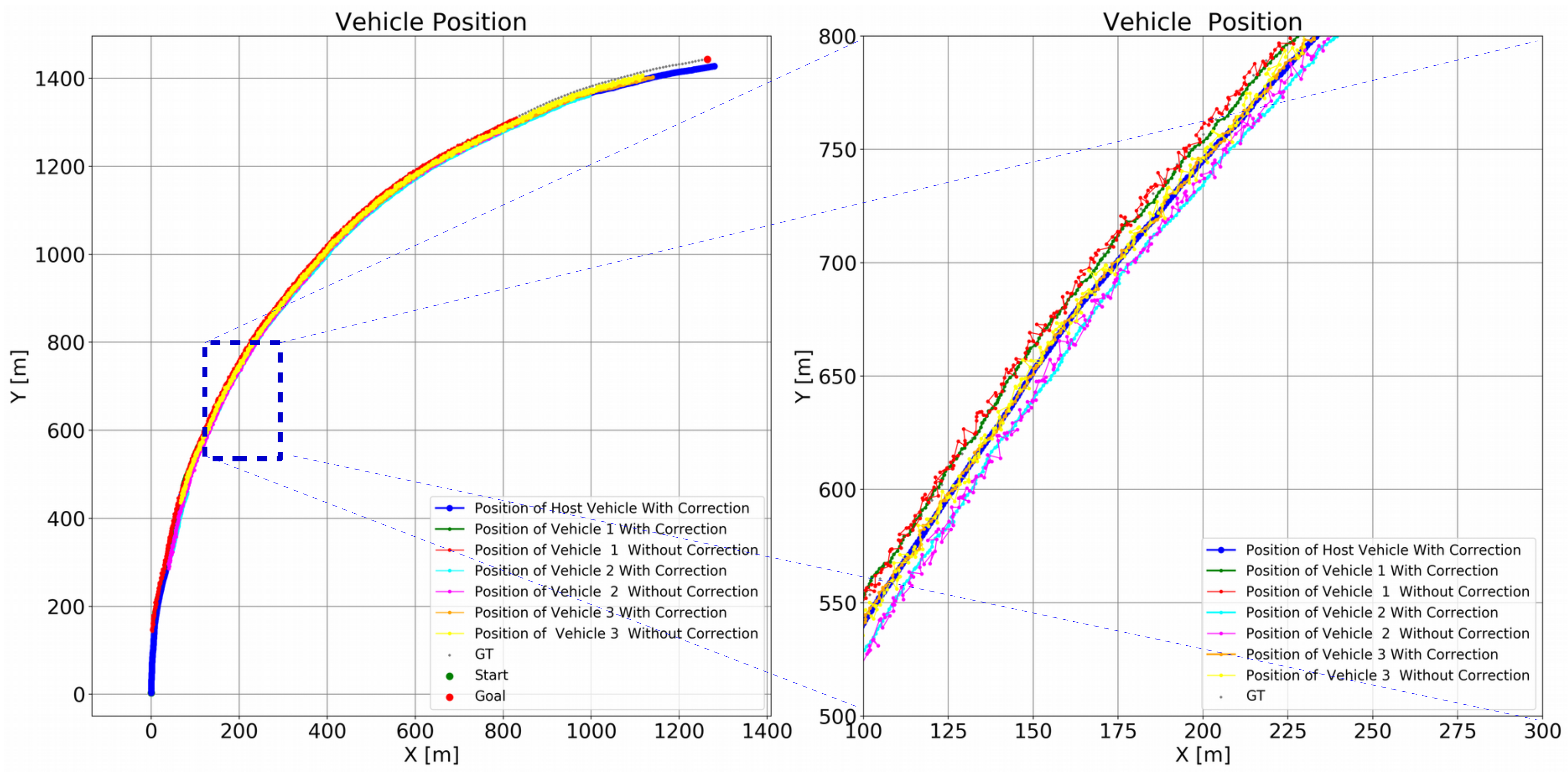

4.2. Experiment II (Trajectory Estimation in Simulation)

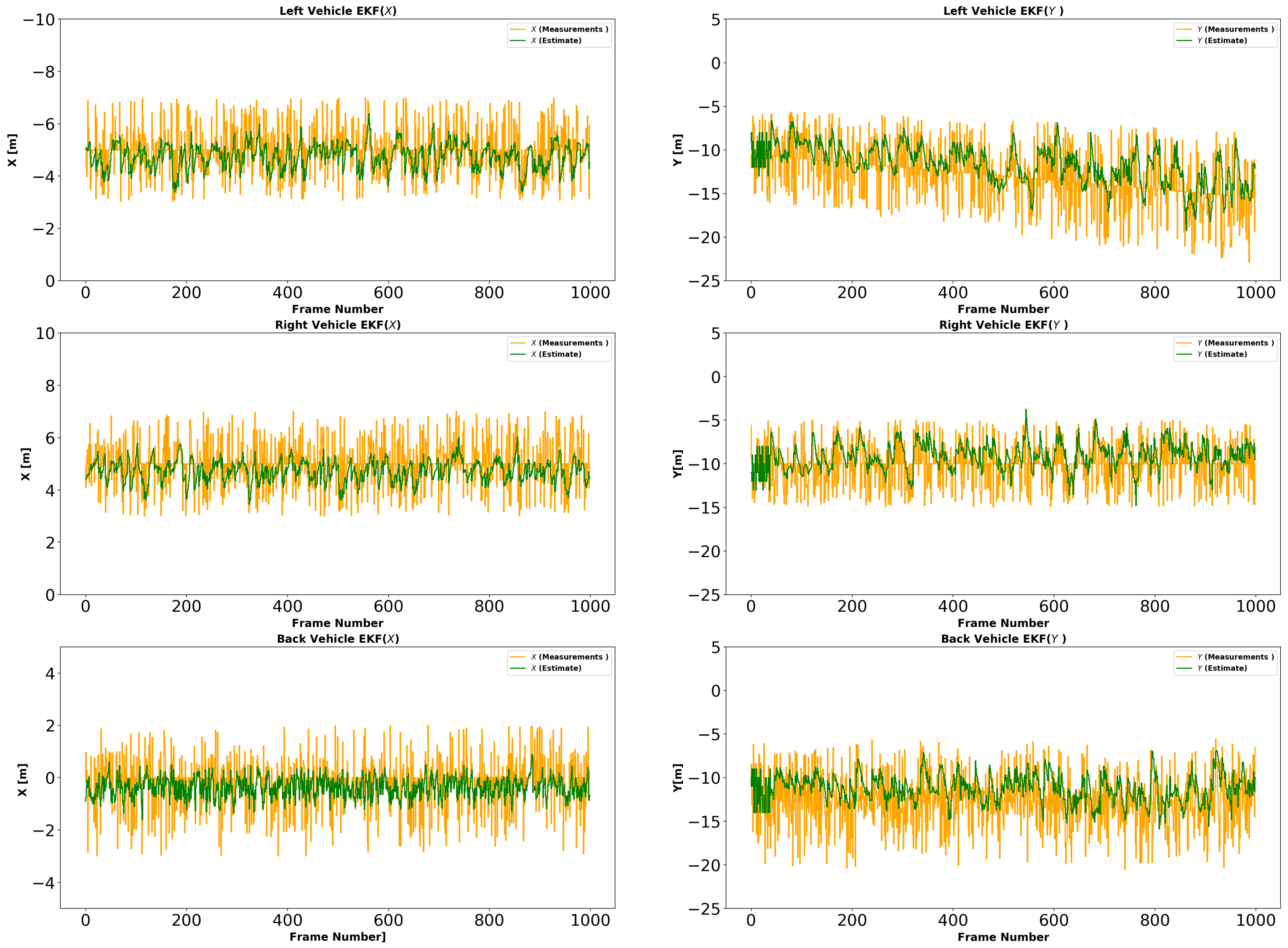

4.2.1. Results

4.2.2. Discussion

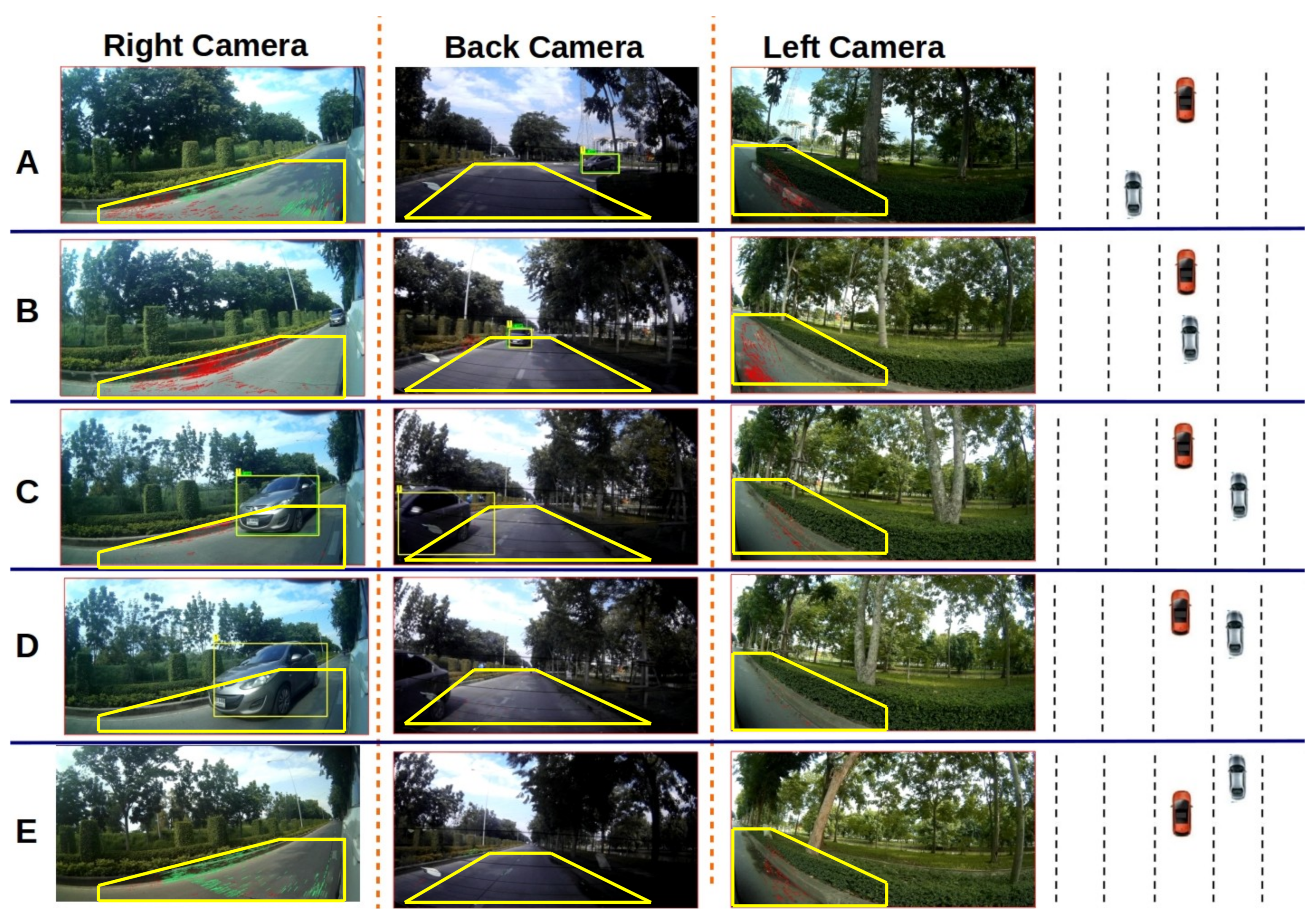

4.3. Experiment III (Visual Tracking Evaluation)

4.3.1. Results

4.3.2. Discussion

4.4. Experiment IV (Velocity and Trajectory Estimation in Real World)

4.4.1. Results

4.4.2. Discussion

4.5. Experiment V (Comparison between Proposed Method and Visual-SLAM)

4.5.1. Results

4.5.2. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EKF | Extended Kalman Filter |
| IDASs | Intelligent Driver Assistance Systems |
| MTT | Multiple Target Tracking |
| OR | Outlier Ratio |
| GN | Gaussian Noise |
| P | Proposed Model |
| O | ORB-SLAM |
| L_RMSE | RMSE of linear velocity |
| A_RMSE | RMSE of angular velocity |
| pg | Predicted values to ground truth |
| mg | Measurement values to ground truth |
| TFs | Total Frames |
| GT IDs | Number of Ground-Truth IDs |
| IDS | Number of ID Switches |
| DS | DeepSORT |
| SSBC | Number of Successful Switches Between Cameras |

| OR | GN | | | | | | |
|---|---|---|---|---|---|---|---|
| 0 | | 0 | 0 | 0.050 ± 0.003 | 0 | 0 | 0.008 ± 0.003 |
| | | 0.070 ± 0.003 | 0.197 ± 0.008 | 0.188 ± 0.004 | 0.020 ± 0.003 | 0.051 ± 0.002 | 0.030 ± 0.002 |
| 40% | | 0.171 ± 0.006 | 0.466 ± 0.025 | 0.189 ± 0.002 | 0.032 ± 0.003 | 0.120 ± 0.006 | 0.035 ± 0.002 |
| | | 0.285 ± 0.009 | 1.400 ± 0.064 | 0.199 ± 0.003 | 0.088 ± 0.029 | 0.172 ± 0.005 | 0.051 ± 0.004 |
| | | 0.082 ± 0.005 | 0.236 ± 0.010 | 0.176 ± 0.010 | 0.019 ± 0.002 | 0.062 ± 0.002 | 0.049 ± 0.002 |
| 70% | | 0.204 ± 0.008 | 0.970 ± 0.010 | 0.220 ± 0.015 | 0.094 ± 0.002 | 0.148 ± 0.002 | 0.084 ± 0.003 |
| | | 0.336 ± 0.021 | 6.411 ± 0.032 | 0.231 ± 0.032 | 0.101 ± 0.003 | 0.215 ± 0.021 | 0.091 ± 0.001 |

| Host Vehicle | Target Vehicle(s) | Performance |
|---|---|---|
| Noise: 0. Angular velocity: rad/s. Linear velocity: m/s. | No vehicle | Estimated host vehicle path tracks ground truth path perfectly. Angular and linear velocity track the ground truth. Host vehicle position: = 0, = 15,613.221. |
| | No vehicle | Host vehicle’s path is tracked smoothly. Host vehicle position: = 25.236, = 21,236.324. |
| Angular velocity: rad/s. Linear velocity: m/s. Percentage of outliers: 10%. Noise: Gaussian with fixed. | Three vehicles are simulated. Left vehicle: x = −5 m, y = −10 m; Right vehicle: x = 5 m, y = −10 m; Back vehicle: x = 0 m, y = −11 m. Noise: 0. | Host vehicle path is tracked smoothly, and target vehicles’ paths fit the host vehicle’s path. Host vehicle position: = 64.231, = 2,121,545.342. Target vehicle relative position: = 3.341, = 0. |
| | Three vehicles are simulated as above with noise: x: 40%, y: 50%. | Details are given in Section 3. Host vehicle position: = 47.34, = 24,456.63. Target vehicle relative position: = 2.272, = 5.59. |

| Place | Camera | TFs | GT IDs | P IDS | P SSBC | DS IDS | DS SSBC |
|---|---|---|---|---|---|---|---|
| | left | | 2 | 0 | | 0 | |
| I | right | 3245 | 17 | 2 | 19 | 2 | 0 |
| | back | | 35 | 6 | | 4 | |
| | left | | 0 | 0 | | 0 | |
| II | right | 1493 | 11 | 3 | 11 | 3 | 0 |
| | back | | 25 | 4 | | 2 | |
| | left | | 0 | 0 | | 0 | |
| III | right | 2236 | 6 | 2 | 6 | 2 | 0 |
| | back | | 16 | 4 | | 3 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qu, L.; Dailey, M.N. Vehicle Trajectory Estimation Based on Fusion of Visual Motion Features and Deep Learning. Sensors 2021, 21, 7969. https://doi.org/10.3390/s21237969
