Author Contributions

Conceptualization, M.C., J.G., G.W., K.C., K.K. and M.K. (Michał Korycki); methodology, M.C., J.G., G.W., K.C., K.K. and M.K. (Michał Korycki); software, K.C., K.K., M.K. (Michał Korycki) and G.W.; validation, M.C., J.G. and G.W.; formal analysis, M.C. and J.G.; investigation, M.C., J.G., G.W., K.C., K.K. and M.K. (Michał Korycki); resources, G.W., A.J. and M.K. (Michał Kruk); data curation, A.J., M.K. (Michał Kruk) and G.W.; writing – original draft preparation, K.C., K.K., M.K. (Michał Korycki) and G.W.; writing – review and editing, K.C., K.K., M.K. (Michał Korycki), G.W., M.C. and J.G.; visualization, K.C., K.K. and M.K. (Michał Korycki); supervision, M.C.; project administration, K.C., G.W. and M.K. (Michał Kruk). All authors have read and agreed to the published version of the manuscript.

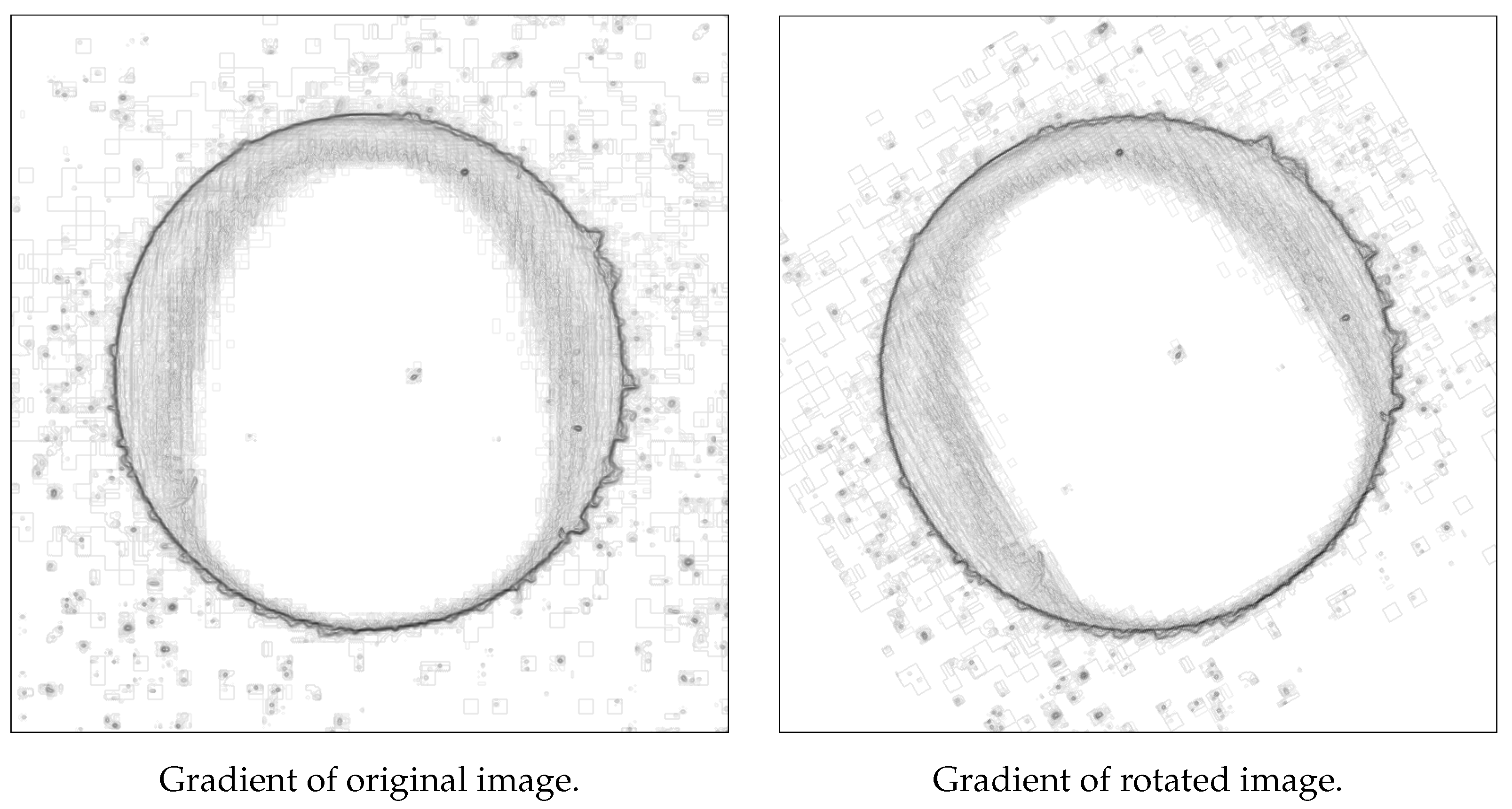

Figure 1.

Comparison of gradients. The rotated image has visible blank spaces.

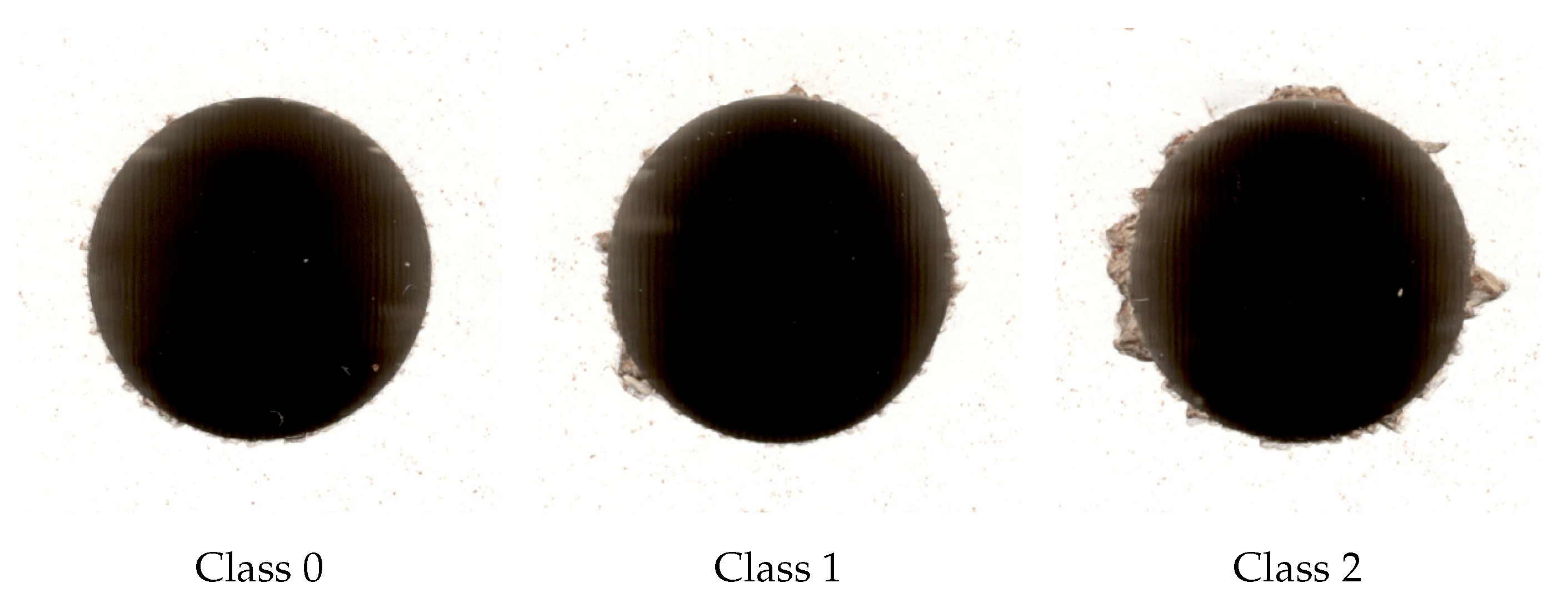

Figure 2.

Example images from the original dataset.

Figure 3.

Model accuracy for the training and validation sets as a function of the number of epochs used to train the CNN.

Figure 4.

Model loss for the training and validation sets as a function of the number of epochs used to train the CNN.

Figure 5.

Creation of an additional data entry for images belonging to class 2.

Figure 6.

Images generated using simple data augmentation techniques.

Figure 7.

Images generated by the GANs.

Figure 8.

ROC curves for the Microsoft Custom Vision model.

Figure 9.

Model accuracy for the model with 1 layer and a batch size of 256 as a function of the number of epochs used to train the CNN.

Figure 10.

Model loss for the model with 1 layer and a batch size of 256 as a function of the number of epochs used to train the CNN.

Figure 11.

ROC curves for the 6 best models.

Table 1.

Sizes of the classes in the dataset.

| Class | Description | Number of Objects |
|---|---|---|
| Class 0 | very fine | 33 |
| Class 1 | acceptable | 271 |
| Class 2 | unacceptable | 155 |
| Total | | 459 |

Table 2.

Number of observations in the dataset and its distribution between classes.

| Class | Train | Validation | Test |
|---|---|---|---|
| Class 0 | 19 | 7 | 7 |
| Class 1 | 162 | 54 | 55 |
| Class 2 | 93 | 31 | 31 |
| Sum | 274 | 92 | 93 |

Table 3.

Confusion matrix on the validation set for the CNN model trained on the original dataset (274 observations).

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 0/7 | 7/7 | 0/7 |
| Class 1 | 0/54 | 54/54 | 0/54 |
| Class 2 | 0/31 | 31/31 | 0/31 |

Table 4.

Number of observations in the training dataset after the combination of images was applied to the original data.

| Class | Train |
|---|---|
| Class 0 | 361 |
| Class 1 | 1597 |
| Class 2 | 521 |
| Sum | 2479 |

Table 5.

Number of observations in the training dataset and its distribution between classes after data augmentation.

| Class | Train |
|---|---|
| Class 0 | 1440 |
| Class 1 | 3190 |
| Class 2 | 2080 |
| Sum | 6710 |

Table 6.

Structure of the GAN generator model.

| Layer | Output Shape | Parameters |
|---|---|---|
| Dense | (None, 3200) | 259,200 |
| LeakyReLU | (None, 3200) | 0 |
| Reshape | (None, 5, 5, 128) | 0 |
| Conv2DTranspose | (None, 10, 10, 128) | 262,272 |
| LeakyReLU | (None, 10, 10, 128) | 0 |
| Conv2DTranspose | (None, 20, 20, 128) | 262,272 |
| LeakyReLU | (None, 20, 20, 128) | 0 |
| Conv2DTranspose | (None, 40, 40, 128) | 262,272 |
| LeakyReLU | (None, 40, 40, 128) | 0 |
| Conv2DTranspose | (None, 80, 80, 128) | 262,272 |
| LeakyReLU | (None, 80, 80, 128) | 0 |
| Conv2D | (None, 80, 80, 1) | 1153 |
| Total params | 1,309,441 | |
| Trainable params | 1,309,441 | |

Table 7.

Structure of the GAN discriminator model.

| Layer | Output Shape | Parameters |
|---|---|---|
| Conv2D | (None, 40, 40, 64) | 640 |
| LeakyReLU | (None, 40, 40, 64) | 0 |
| Dropout | (None, 40, 40, 64) | 0 |
| Conv2D | (None, 20, 20, 64) | 36,928 |
| LeakyReLU | (None, 20, 20, 64) | 0 |
| Dropout | (None, 20, 20, 64) | 0 |
| Flatten | (None, 25,600) | 0 |
| Dense | (None, 1) | 25,601 |
| Total params | 63,169 | |
| Trainable params | 0 | |
| Non-trainable params | 63,169 | |

Table 8.

Structure of the GAN model.

| Layer | Output Shape | Parameters |
|---|---|---|
| Generator model | (None, 80, 80, 1) | 1,309,441 |
| Discriminator model | (None, 1) | 63,169 |
| Total params | 1,372,610 | |
| Trainable params | 1,309,441 | |
| Non-trainable params | 63,169 | |
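
The parameter totals reported for the GAN generator, discriminator, and combined model can be reproduced from the layer shapes. The sketch below is only a consistency check, not the authors' code. The latent dimension of 80 and the kernel sizes (4 × 4 for the transposed convolutions, 3 × 3 for the ordinary convolutions) are assumptions inferred from the reported counts, and the helper names are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a square-kernel (transposed) convolution."""
    return kernel * kernel * c_in * c_out + c_out


# Assumption: latent vector of length 80, since 80 * 3200 + 3200 = 259,200.
LATENT_DIM = 80

generator_layers = [
    dense_params(LATENT_DIM, 5 * 5 * 128),           # Dense -> (None, 3200)
    *[conv_params(4, 128, 128) for _ in range(4)],   # four Conv2DTranspose, 4x4 assumed
    conv_params(3, 128, 1),                          # final Conv2D, 3x3 assumed
]

discriminator_layers = [
    conv_params(3, 1, 64),           # Conv2D -> (None, 40, 40, 64)
    conv_params(3, 64, 64),          # Conv2D -> (None, 20, 20, 64)
    dense_params(20 * 20 * 64, 1),   # Dense on the flattened 25,600 features
]

generator_total = sum(generator_layers)
discriminator_total = sum(discriminator_layers)
gan_total = generator_total + discriminator_total

print(generator_total, discriminator_total, gan_total)
```

Under these assumptions the three totals agree with the reported totals. The discriminator weights count as non-trainable in the combined model, which is the usual setup when only the generator is updated through the stacked model.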

Table 9.

Number of observations per class in the training dataset generated by the GANs.

| Class | Train |
|---|---|
| Class 0 | 1400 |
| Class 1 | 3100 |
| Class 2 | 2000 |
| Sum | 6500 |

Table 10.

Number of observations in the dataset and its distribution between classes.

| Class | Train | Validation | Test |
|---|---|---|---|
| Class 0 | 3201 | 7 | 7 |
| Class 1 | 7887 | 54 | 55 |
| Class 2 | 4601 | 31 | 31 |
| Sum | 15,689 | 92 | 93 |
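
The training counts in Table 10 appear to equal the per-class sum of the combined-image set (Table 4), the augmented set (Table 5), and the GAN-generated set (Table 9). This is an observation from the numbers rather than something the captions state. The short check below makes the arithmetic explicit; the variable names are hypothetical.

```python
# Per-class training counts copied from Tables 4, 5 and 9.
combined = {"Class 0": 361, "Class 1": 1597, "Class 2": 521}
augmented = {"Class 0": 1440, "Class 1": 3190, "Class 2": 2080}
gan_generated = {"Class 0": 1400, "Class 1": 3100, "Class 2": 2000}

# Sum the three sources class by class.
train_total = {
    label: combined[label] + augmented[label] + gan_generated[label]
    for label in combined
}
grand_total = sum(train_total.values())

print(train_total, grand_total)
```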

Table 11.

Confusion matrices for the Custom Vision model on the validation set with different correcting vectors.

Custom Vision Model, Default Results

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 1/7 | 6/7 | 0/7 |
| Class 1 | 0/54 | 54/54 | 0/54 |
| Class 2 | 0/31 | 19/31 | 12/31 |

Custom Vision Model, Original Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 3/7 | 4/7 | 0/7 |
| Class 1 | 2/54 | 52/54 | 0/54 |
| Class 2 | 0/31 | 16/31 | 15/31 |

Custom Vision Model, Multiplied Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 2/7 | 5/7 | 0/7 |
| Class 1 | 2/54 | 52/54 | 0/54 |
| Class 2 | 0/31 | 16/31 | 15/31 |

Table 12.

Precision and recall per class for the Microsoft Custom Vision model on the validation set with different correction vectors.

| Microsoft Custom Vision | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Precision (default) | 1.00 | 0.68 | 1.00 |
| Recall (default) | 0.14 | 1.00 | 0.39 |
| Precision (original ratio) | 0.60 | 0.72 | 1.00 |
| Recall (original ratio) | 0.43 | 0.96 | 0.48 |
| Precision (training ratio) | 0.50 | 0.71 | 1.00 |
| Recall (training ratio) | 0.29 | 0.96 | 0.48 |

Table 13.

F1 scores for the Custom Vision model on the validation set with different correcting vectors.

| Model | F1 Micro | F1 Macro |
|---|---|---|
| Model with default results | 0.73 | 0.54 |
| Model with original training vector | 0.76 | 0.66 |
| Model with multiplied training vector | 0.75 | 0.61 |
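
The per-class precision and recall in Table 12 and the F1 scores in Table 13 follow directly from the confusion matrices in Table 11. As an illustration, the sketch below recomputes them for the default Custom Vision results using the standard definitions. The function names are hypothetical, and the code is a check on the arithmetic rather than the authors' evaluation script.

```python
def per_class_metrics(cm):
    """Return (precision, recall, f1) per class; rows are true, columns predicted."""
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        predicted = sum(cm[i][k] for i in range(n))  # column sum
        actual = sum(cm[k])                          # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1))
    return metrics


def f1_micro(cm):
    """Micro-averaged F1; for single-label classification this equals accuracy."""
    correct = sum(cm[k][k] for k in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def f1_macro(cm):
    """Unweighted mean of the per-class F1 scores."""
    scores = [f1 for _, _, f1 in per_class_metrics(cm)]
    return sum(scores) / len(scores)


# Default Custom Vision confusion matrix on the validation set (Table 11).
default_cm = [
    [1, 6, 0],
    [0, 54, 0],
    [0, 19, 12],
]

for label, (p, r, _) in enumerate(per_class_metrics(default_cm)):
    print(f"Class {label}: precision={p:.2f} recall={r:.2f}")
print(f"F1 micro={f1_micro(default_cm):.2f} F1 macro={f1_macro(default_cm):.2f}")
```

The gap between the micro and macro scores reflects the class imbalance: the micro score is dominated by class 1, while the macro score is pulled down by the low recall on classes 0 and 2.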

Table 14.

Structure of the proposed CNN model; example of a model with 4 layers.

| Layer | Output Shape | Parameters |
|---|---|---|
| Conv2D | (None, 78, 78, 32) | 320 |
| Conv2D | (None, 76, 76, 32) | 9248 |
| MaxPooling | (None, 38, 38, 32) | 0 |
| Conv2D | (None, 36, 36, 64) | 18,496 |
| Conv2D | (None, 34, 34, 64) | 36,928 |
| MaxPooling | (None, 17, 17, 64) | 0 |
| Conv2D | (None, 15, 15, 128) | 73,856 |
| Conv2D | (None, 13, 13, 128) | 147,584 |
| MaxPooling | (None, 6, 6, 128) | 0 |
| Conv2D | (None, 4, 4, 256) | 295,168 |
| Conv2D | (None, 2, 2, 256) | 590,080 |
| MaxPooling | (None, 1, 1, 256) | 0 |
| Flatten | (None, 256) | 0 |
| Dense | (None, 128) | 32,896 |
| Dense | (None, 64) | 8256 |
| Dense | (None, 3) | 195 |
| Total params | 1,213,027 | |
| Trainable params | 1,213,027 | |
| Non-trainable params | 0 | |
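
The shapes and parameter counts of the 4-layer CNN can be traced with a few lines of arithmetic. The sketch below assumes an 80 × 80 single-channel input, 3 × 3 convolutions without padding, and 2 × 2 max pooling. It also assumes 256 filters in the fourth block, which is inferred from the reported counts of 295,168 and 590,080 parameters and from the flattened size of 256. None of these settings are stated in the table itself, and the helper names are hypothetical.

```python
def conv_out(size, kernel=3):
    """Spatial size after an unpadded, stride-1 convolution."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Spatial size after non-overlapping max pooling (floor division)."""
    return size // pool


size, channels = 80, 1               # assumed input: 80 x 80, one channel
block_filters = [32, 64, 128, 256]   # assumed filters per block (two convs each)

spatial_sizes = []
total_params = 0
for filters in block_filters:
    for _ in range(2):
        total_params += 3 * 3 * channels * filters + filters
        size, channels = conv_out(size), filters
        spatial_sizes.append(size)
    size = pool_out(size)
    spatial_sizes.append(size)

features = size * size * channels    # length of the flattened vector
for units in (128, 64, 3):           # dense head ending in 3 classes
    total_params += features * units + units
    features = units

print(spatial_sizes)
print(total_params)
```

With these assumptions the spatial sizes shrink from 78 down to 1 and the total matches the 1,213,027 parameters reported for the model.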

Table 15.

Parameter configuration and model loss for the training and validation sets.

| Number of Layers | Batch Size | Epochs | Train Loss | Validation Loss |
|---|---|---|---|---|
| 1 | 256 | 1 | 0.51 | 0.72 |
| 1 | 512 | 1 | 0.86 | 0.89 |
| 1 | 128 | 1 | 0.33 | 0.90 |
| 4 | 512 | 3 | 0.44 | 0.92 |
| 3 | 512 | 2 | 0.59 | 0.93 |
| 4 | 16 | 4 | 1.03 | 0.93 |
| 2 | 512 | 1 | 0.88 | 0.93 |
| 4 | 128 | 2 | 0.29 | 0.97 |
| 1 | 512 | 5 | 0.05 | 0.98 |
| 3 | 512 | 4 | 0.23 | 0.98 |

Table 16.

Parameter configuration, AUC for each class, and minimum AUC for the validation set.

| Number of Layers | Batch Size | Epochs | AUC Class 0 | AUC Class 1 | AUC Class 2 | min AUC |
|---|---|---|---|---|---|---|
| 2 | 8 | 4 | 0.70 | 0.72 | 0.80 | 0.70 |
| 3 | 8 | 6 | 0.69 | 0.73 | 0.78 | 0.69 |
| 3 | 8 | 1 | 0.73 | 0.68 | 0.78 | 0.68 |
| 4 | 8 | 11 | 0.68 | 0.71 | 0.77 | 0.68 |
| 3 | 8 | 11 | 0.67 | 0.71 | 0.76 | 0.67 |
| 3 | 16 | 8 | 0.67 | 0.68 | 0.79 | 0.67 |
| 3 | 8 | 5 | 0.67 | 0.66 | 0.79 | 0.66 |
| 4 | 8 | 10 | 0.69 | 0.66 | 0.75 | 0.66 |
| 2 | 8 | 6 | 0.66 | 0.68 | 0.77 | 0.66 |
| 3 | 16 | 3 | 0.66 | 0.75 | 0.80 | 0.66 |

Table 17.

Description of chosen models based on the validation loss and the highest minimal AUC value.

| Model | Number of Layers | Batch Size | Epochs | Validation Loss | min AUC |
|---|---|---|---|---|---|
| 1 | 1 | 256 | 1 | 0.72 | 0.53 |
| 2 | 1 | 512 | 1 | 0.89 | 0.46 |
| 3 | 1 | 128 | 1 | 0.90 | 0.55 |
| 4 | 2 | 8 | 4 | 2.61 | 0.70 |
| 5 | 3 | 8 | 6 | 2.11 | 0.69 |
| 6 | 3 | 8 | 1 | 1.76 | 0.68 |

Table 18.

Validation loss for the 6 best models after removing the third dense layer.

| Model | Number of Layers | Batch Size | Epochs | 3rd Dense Layer Present | Validation Loss |
|---|---|---|---|---|---|
| 1 | 1 | 256 | 1 | Yes | 0.72 |
| 1 | 1 | 256 | 1 | No | 1.05 |
| 2 | 1 | 512 | 1 | Yes | 0.89 |
| 2 | 1 | 512 | 1 | No | 1.00 |
| 3 | 1 | 128 | 1 | Yes | 0.90 |
| 3 | 1 | 128 | 1 | No | 0.75 |
| 4 | 2 | 8 | 4 | Yes | 2.61 |
| 4 | 2 | 8 | 4 | No | 3.27 |
| 5 | 3 | 8 | 6 | Yes | 2.11 |
| 5 | 3 | 8 | 6 | No | 2.89 |
| 6 | 3 | 8 | 1 | Yes | 1.76 |
| 6 | 3 | 8 | 1 | No | 1.55 |

Table 19.

Confusion matrices for models 1–6.

Model 1

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 0/7 | 5/7 | 2/7 |
| Class 1 | 5/54 | 46/54 | 3/54 |
| Class 2 | 0/31 | 10/31 | 21/31 |

Model 2

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 0/7 | 7/7 | 0/7 |
| Class 1 | 0/54 | 54/54 | 0/54 |
| Class 2 | 0/31 | 29/31 | 2/31 |

Model 3

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 0/7 | 7/7 | 0/7 |
| Class 1 | 8/54 | 46/54 | 0/54 |
| Class 2 | 2/31 | 8/31 | 21/31 |

Model 4

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 0/7 | 7/7 | 0/7 |
| Class 1 | 1/54 | 49/54 | 4/54 |
| Class 2 | 0/31 | 14/31 | 17/31 |

Model 5

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 1/7 | 5/7 | 1/7 |
| Class 1 | 1/54 | 47/54 | 6/54 |
| Class 2 | 0/31 | 11/31 | 20/31 |

Model 6

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 0/7 | 6/7 | 1/7 |
| Class 1 | 2/54 | 51/54 | 1/54 |
| Class 2 | 0/31 | 16/31 | 15/31 |

Table 20.

Precisions and recalls for models 1–6.

| Model 1 | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Precision | 0.00 | 0.75 | 0.81 |
| Recall | 0.00 | 0.85 | 0.68 |
| Model 2 | | | |
| Precision | 0.00 | 0.60 | 1.00 |
| Recall | 0.00 | 1.00 | 0.06 |
| Model 3 | | | |
| Precision | 0.00 | 0.75 | 1.00 |
| Recall | 0.00 | 0.85 | 0.68 |
| Model 4 | | | |
| Precision | 0.00 | 0.70 | 0.81 |
| Recall | 0.00 | 0.91 | 0.55 |
| Model 5 | | | |
| Precision | 0.50 | 0.75 | 0.74 |
| Recall | 0.14 | 0.87 | 0.65 |
| Model 6 | | | |
| Precision | 0.00 | 0.70 | 0.88 |
| Recall | 0.00 | 0.94 | 0.48 |

Table 21.

F1 scores for the 6 best models.

| Model | F1 Micro | F1 Macro |
|---|---|---|
| Model 1 | 0.73 | 0.51 |
| Model 2 | 0.61 | 0.29 |
| Model 3 | 0.73 | 0.54 |
| Model 4 | 0.72 | 0.48 |
| Model 5 | 0.74 | 0.57 |
| Model 6 | 0.72 | 0.48 |

Table 22.

Confusion matrices for models 1, 3, and 5, corrected with the original training vector on the validation set.

Confusion Matrix, Model 1, Original Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 4/7 | 1/7 | 2/7 |
| Class 1 | 37/54 | 12/54 | 5/54 |
| Class 2 | 5/31 | 3/31 | 23/31 |

Confusion Matrix, Model 3, Original Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 3/7 | 2/7 | 2/7 |
| Class 1 | 37/54 | 15/54 | 2/54 |
| Class 2 | 7/31 | 2/31 | 22/31 |

Confusion Matrix, Model 5, Original Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 1/7 | 5/7 | 1/7 |
| Class 1 | 3/54 | 43/54 | 8/54 |
| Class 2 | 0/31 | 11/31 | 20/31 |

Table 23.

Confusion matrices for models 1, 3, and 5, corrected with the multiplied training vector on the validation set.

Confusion Matrix, Model 1, Multiplied Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 1/7 | 4/7 | 2/7 |
| Class 1 | 14/54 | 35/54 | 5/54 |
| Class 2 | 2/31 | 5/31 | 24/31 |

Confusion Matrix, Model 3, Multiplied Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 1/7 | 4/7 | 2/7 |
| Class 1 | 16/54 | 36/54 | 2/54 |
| Class 2 | 2/31 | 6/31 | 23/31 |

Confusion Matrix, Model 5, Multiplied Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 1/7 | 5/7 | 1/7 |
| Class 1 | 1/54 | 45/54 | 8/54 |
| Class 2 | 0/31 | 11/31 | 20/31 |

Table 24.

Precisions and recalls for models 1, 3, and 5 with correcting vectors applied.

| Model 1 | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Precision (default) | 0.00 | 0.75 | 0.81 |
| Recall (default) | 0.00 | 0.85 | 0.68 |
| Precision (original ratio) | 0.09 | 0.75 | 0.77 |
| Recall (original ratio) | 0.57 | 0.22 | 0.74 |
| Precision (training ratio) | 0.06 | 0.80 | 0.77 |
| Recall (training ratio) | 0.14 | 0.65 | 0.77 |
| Model 3 | Class 0 | Class 1 | Class 2 |
| Precision (default) | 0.00 | 0.75 | 1.00 |
| Recall (default) | 0.00 | 0.85 | 0.68 |
| Precision (original ratio) | 0.06 | 0.79 | 0.85 |
| Recall (original ratio) | 0.43 | 0.28 | 0.71 |
| Precision (training ratio) | 0.05 | 0.78 | 0.85 |
| Recall (training ratio) | 0.14 | 0.67 | 0.74 |
| Model 5 | Class 0 | Class 1 | Class 2 |
| Precision (default) | 0.50 | 0.75 | 0.74 |
| Recall (default) | 0.14 | 0.87 | 0.65 |
| Precision (original ratio) | 0.25 | 0.73 | 0.69 |
| Recall (original ratio) | 0.14 | 0.80 | 0.65 |
| Precision (training ratio) | 0.50 | 0.74 | 0.69 |
| Recall (training ratio) | 0.14 | 0.83 | 0.65 |

Table 25.

F1 micro- and macro-scores for the best models with different correcting vectors.

| Model | F1 Micro | F1 Macro |
|---|---|---|
| Model 1 (default) | 0.73 | 0.51 |
| Model 1 (original ratio) | 0.42 | 0.42 |
| Model 1 (training ratio) | 0.65 | 0.52 |
| Model 3 (default) | 0.73 | 0.54 |
| Model 3 (original ratio) | 0.43 | 0.43 |
| Model 3 (training ratio) | 0.65 | 0.53 |
| Model 5 (default) | 0.74 | 0.57 |
| Model 5 (original ratio) | 0.70 | 0.54 |
| Model 5 (training ratio) | 0.72 | 0.56 |

Table 26.

Confusion matrices on the test set for the best CNN model and the Custom Vision model, both corrected with the original training vector.

Confusion Matrix, Best CNN Model, Original Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 6/7 | 1/7 | 0/7 |
| Class 1 | 19/55 | 26/55 | 10/55 |
| Class 2 | 1/31 | 2/31 | 28/31 |

Confusion Matrix, Custom Vision Model, Original Training Vector

| True/Predicted | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Class 0 | 4/7 | 3/7 | 0/7 |
| Class 1 | 1/55 | 52/55 | 2/55 |
| Class 2 | 0/31 | 11/31 | 20/31 |

Table 27.

Precisions and recalls on the test set for the best CNN model and the Custom Vision model, both corrected with the original training vector.

| CNN Model | Class 0 | Class 1 | Class 2 |
|---|---|---|---|
| Precision | 0.23 | 0.89 | 0.74 |
| Recall | 0.86 | 0.47 | 0.90 |
| Custom Vision Model | | | |
| Precision | 0.80 | 0.79 | 0.91 |
| Recall | 0.57 | 0.95 | 0.65 |

Table 28.

F1 micro- and macro-scores for the best CNN Model and the Custom Vision model.

| Model | F1 Micro | F1 Macro |
|---|---|---|
| CNN Model | 0.65 | 0.60 |
| Custom Vision Model | 0.82 | 0.76 |