3.1.1. Soft and Smart Propeller

Rotary-wing UAVs, such as quadrotors, have become enormously widespread in recent years and are often flown by untrained and unskilled operators. The safety of this kind of UAV is often not sufficiently considered, since these vehicles are perceived as toys. For this reason, young people and children above all are exposed to the risk of serious injuries, including disfigurement and permanent loss of vision [41].

Rotor systems developed to reduce the safety risks of this kind of UAV include passive protections, such as bumpers, cages, shrouds, and ducts, which act as mechanical barriers separating the rotor from the environment [41], and active protections, such as sensors, which act to prevent contact with the rotor or to brake it.

To ensure rotor safety, active protections rely on sensors such as light detection and ranging (LIDAR) and radar, mechanical contact and capacitive touch/proximity sensing, ultrasonic and reflective infrared (IR) sensors, and cameras, together with safeguard mechanisms [41]. The main issues with this kind of protection are sensor cost and weight, as well as the difficulty of obtaining all-around rotor sensing [41]. Active protection approaches are essentially based either on avoiding contact or on braking the rotors. In the first case, the quality of the UAV's onboard sensors is critical for effective contact avoidance. Moreover, even if the active protection works correctly and the sensors are of suitable quality, some conditions can make avoidance impossible, for example when the vehicle has no safe way to move because it is backed into a corner [41].

In rotor braking approaches, important issues that reduce efficiency are weight, complexity, and system failures [41]. An interesting solution that addresses all the above issues uses the existing rotor structure as a sensor [41]. A thin plastic hoop is mounted on the shaft driving the rotor, so that in a collision it is hit before the rotor. Driven by passive friction with the rotor hub, the hoop rotates fast enough to detect intrusions quickly but, thanks to its light weight and wide surface area, slowly enough to be safe to contact compared with the rotor. Reflective targets attached to the hoop base pass in front of an IR proximity sensor, which estimates the hoop speed by measuring the time between successive target crossings. Any contact of an object with the hoop causes it to decelerate suddenly. The IR sensor detects this either as a large negative change in hoop speed or as the time between two successive target crossings exceeding a threshold.
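The two contact criteria described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the firmware of [41]: the function name, the number of reflective targets per revolution, the allowed speed drop, and the timeout are hypothetical parameters.

```python
from typing import Optional, Sequence


def detect_hoop_contact(
    crossing_times: Sequence[float],
    targets_per_rev: int,
    max_drop_hz: float,
    timeout_s: float,
    now: Optional[float] = None,
) -> bool:
    """Return True if the IR crossing history suggests an object touched the hoop.

    crossing_times: strictly increasing timestamps (s) of target crossings.
    targets_per_rev, max_drop_hz, timeout_s: hypothetical tuning values.
    now: current time (s); if given, a stalled hoop is also detected.
    """
    intervals = [t1 - t0 for t0, t1 in zip(crossing_times, crossing_times[1:])]
    # Criterion 2 (history): two successive crossings too far apart.
    if any(dt > timeout_s for dt in intervals):
        return True
    # Criterion 2 (live): no crossing seen for longer than the timeout.
    if now is not None and crossing_times and now - crossing_times[-1] > timeout_s:
        return True
    # Hoop speed in revolutions per second (Hz) estimated from each interval.
    speeds = [1.0 / (targets_per_rev * dt) for dt in intervals]
    # Criterion 1: a large negative speed change between consecutive estimates.
    return any(s1 - s0 < -max_drop_hz for s0, s1 in zip(speeds, speeds[1:]))
```

With four targets and the hoop at 30 Hz, crossings arrive every 1/120 s; a hoop slowed to 28 Hz produces a 1/112 s interval, a 2 Hz drop that exceeds a 0.5 Hz threshold. A tighter threshold reacts faster but is more prone to false triggers during normal rotor speed changes.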

Since the hoop speed can also vary when the rotor speed changes during normal mission operations, a reference value of hoop speed variability or missed detection must be set to detect object contact and trigger the consequent electrodynamic braking. This requires a tradeoff between the reliability and the response time of the system. This rotor safety system can detect collisions from all directions, does not require operator training, and does not compromise flight endurance or UAV aerodynamics. Moreover, the system can prevent operation if compromised and can be implemented at low cost, adding little weight. However, the mating contact surfaces must be fine-tuned to ensure that wear does not reduce the frame speed. In case of a hard impact, the hoop can break. Finally, ensuring suitable warning and prompt braking at high flight speeds calls for wider hoops and higher-torque motors and, at the same time, smaller rotors; these conflicting needs can compromise UAV thrust [41]. The system concept was tested to investigate its functionality and effectiveness. In particular, the braking response was investigated to obtain both the circuit activation delay and the decaying rotor speed profile. The initial rotor speed was about 260 Hz, while the hoop was spinning at 30 Hz, with changes of up to −0.5 Hz per revolution tolerated before triggering. A Chronos 1.4 high-speed camera was used to record the rotor braking velocity profile. A latency of 0.0118 s was measured between the trigger event and the start of rotor deceleration, after which a further 0.0474 s was needed for the rotor to stop completely. Therefore, according to the results in [41], the proposed system is capable of halting the rotor within 0.06 s of activation. Other test results reported in [41] showed that the proposed safety system reduces impact damage compared with a rotor system without a hoop.
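The reported timings can be combined into a rough braking budget. The sketch below adds the measured latency and stopping time and, under the added assumption (not stated in [41]) that the rotor keeps spinning at 260 Hz during the latency and then decelerates linearly to zero, estimates how many revolutions the rotor completes after the trigger. The function name is illustrative.

```python
from typing import Tuple


def braking_budget(f0_hz: float, latency_s: float, stop_s: float) -> Tuple[float, float]:
    """Return (total time to standstill in s, revolutions completed after the trigger).

    Assumes constant speed during the latency and a linear speed decay afterwards.
    """
    total_s = latency_s + stop_s
    revs_during_latency = f0_hz * latency_s
    revs_during_decay = 0.5 * f0_hz * stop_s  # area under a linear ramp to zero
    return total_s, revs_during_latency + revs_during_decay


total, revs = braking_budget(260.0, 0.0118, 0.0474)
```

This gives a total of 0.0592 s, just under the 0.06 s figure, and roughly 9.2 revolutions (about 3.1 during the latency and 6.2 during the decay); the real count depends on the measured decay profile.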

Another solution, based on passive protection, to reduce injuries and damage caused by UAV impacts in the air or on the ground relies on building soft vehicles, using specific materials that make the system less sharp and lighter and thus ensure a low collision impact force. In particular, this has led to the concept of flexible blades [42,43,44,45].

To maintain the shape of flexible blades in rotor-based UAVs, the blade rigidity in the direction of the force must be adequate; otherwise, the thin and poorly rigid flexible material can easily bend.

The rigidity problem of flexible blades can be solved by looking at dragonfly wings, which, despite being very thin, can withstand very large forces thanks to their corrugated shape. This is the idea on which the research proposed in [44] is based. A camber structure inspired by dragonfly wings was used to build flexible blades aimed at improving the safety of rotor-based UAVs. The blades were made with a 3D printer and a polyethylene film, and were designed, fabricated, and tested on a commercial UAV, the Walkera Rodeo 150 [46]. To define a film thickness giving the blades sufficient stiffness to provide thrust and torque similar to those of the original blades, force/torque measurements were performed with the data acquisition system shown in Figure 3. The test setup included a brushless DC (BLDC) motor, a force/torque sensor (Nano 17), a controller (Maxon ESCON 36/3 EC, by Maxon Motor AG, Sachseln, Switzerland), a data acquisition device (NI-DAQ USB-6343, by National Instruments, Austin, Texas), and a gearbox with a 1:4 gear ratio. As can be seen in Figure 3, the angular velocity and the number of samples per second were provided as inputs to a LabVIEW user interface, which allowed the DAQ to send the commanded motor angular velocity to the controller. The measured values (velocity, force, and torque) were recorded and then averaged to obtain the thrust, torque, and efficiency of each blade [44].

Thrust experiments were carried out by varying the angular velocity from 0 to 8000 revolutions per minute (rpm) in steps of 1000 rpm to determine the film thickness, from 0 to 10,000 rpm to determine the design parameter related to the angle of attack, and from 0 to 11,000 rpm for the parameter affecting the leading-edge angle [44].
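The speed sweeps and the averaging step can be sketched as below. The sample format (pairs of commanded speed and measured value) and the function names are hypothetical; the LabVIEW interface and NI DAQ used in [44] are not modeled here.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def speed_steps(max_rpm: int, step_rpm: int = 1000) -> List[int]:
    """Commanded speeds from 0 to max_rpm inclusive, e.g. 0..8000 rpm in 1000 rpm steps."""
    return list(range(0, max_rpm + 1, step_rpm))


def average_by_step(samples: Iterable[Tuple[int, float]]) -> Dict[int, float]:
    """Average the value (e.g. thrust in N) recorded at each commanded speed."""
    groups: Dict[int, List[float]] = defaultdict(list)
    for rpm, value in samples:
        groups[rpm].append(value)
    return {rpm: mean(values) for rpm, values in sorted(groups.items())}
```

For example, `speed_steps(8000)` yields the nine set points of the film-thickness sweep, and `speed_steps(10000)` or `speed_steps(11000)` the set points of the other two sweeps, assuming the same 1000 rpm step.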

The resulting flexible blades, fabricated according to the experimentally determined design parameters, generated a thrust of 0.54 N or more, with thrust and efficiency similar to those of the original blades. This is sufficient, since the UAV considered in the study weighed 220 g and therefore required at least 0.54 N of thrust per blade to hover [44].
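The 0.54 N requirement follows from dividing the vehicle weight among the rotors. The check below assumes four rotors (the Walkera Rodeo 150 is a quadcopter) and standard gravity; the function name is illustrative.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2


def hover_thrust_per_rotor(mass_kg: float, n_rotors: int) -> float:
    """Thrust each rotor must provide so that total thrust equals the vehicle weight."""
    return mass_kg * STANDARD_GRAVITY / n_rotors


required = hover_thrust_per_rotor(0.220, 4)  # approximately 0.539 N
```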

The idea of a deformable propeller inspired by the structure of dragonfly wings was further explored in [45], to improve UAV safety and to extend the approach to large UAVs [45]. The proposed propeller combines rigid and soft parts. In particular, the hub and wing were made of rigid plastic, while silicone rubber and nylon monofilaments were used for the soft parts, to reproduce the properties of the dragonfly wing nodus. Nylon monofilament tendons connected the hub and the wing, and their number could be changed to vary the propeller stiffness. The design included a bendable segment to reduce the impact forces arising from a collision and to protect the propeller.

Since the proposed propeller can deform during rotation, it is essential to measure the thrust force it generates. An experimental setup including a force gauge (IMADA-Japan ZTS-5N, by IMADA CO., LTD., Toyohashi, Japan) and a motor was used to measure the thrust forces of two propellers of the same size, a rigid one and the proposed deformable one, at different rotation speeds.

The results showed that, under the same operating conditions, the difference between the thrust forces measured for the two propellers was small [45]. In particular, the maximum speed reached by the rigid propeller was 2800 rpm, while that of the deformable propeller was 3200 rpm. The higher speed of the deformable propeller was associated with greater deformation at high speed and a decrease in pitch angle, implying that, at the same velocity, the drag force on the soft propeller was smaller than that on the rigid one. At their highest speeds, the rigid and soft propellers produced approximately equal thrust forces, just under 1.3 N [45].

3.1.2. Flight Testing

UAV hovering accuracy, attitude stability, and track accuracy are essential performance parameters that strongly affect operational efficiency in applications such as investigation, inspection, and material delivery.

A UAV flight performance test method based on an integrated dual-antenna global positioning system/inertial navigation system (GPS/INS) was proposed in [47] to measure hovering accuracy, attitude stability, and track accuracy. In particular, attitude was measured by mounting two antennas at different positions on the vehicle and using carrier phase measurements to determine the relative position between the GPS antennas. The UAV attitude parameters were obtained according to the workflow shown in Figure 4.
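To show how a baseline between two antennas yields attitude, the sketch below converts a relative position vector expressed in a local east-north-up (ENU) frame into heading and pitch. This is a textbook relation, not the workflow of Figure 4: it assumes the antennas lie along the vehicle's longitudinal axis, and a single baseline cannot observe roll, which in an integrated system would come from the INS. The function name is hypothetical.

```python
import math
from typing import Tuple


def baseline_attitude(d_east: float, d_north: float, d_up: float) -> Tuple[float, float]:
    """Heading and pitch (degrees) from the rear-to-front antenna baseline in ENU metres."""
    horizontal = math.hypot(d_east, d_north)
    # Heading measured clockwise from north, wrapped to [0, 360).
    heading = math.degrees(math.atan2(d_east, d_north)) % 360.0
    # Pitch positive when the front antenna is higher than the rear one.
    pitch = math.degrees(math.atan2(d_up, horizontal))
    return heading, pitch
```

For a 0.8 m baseline pointing due east and level, the function returns a heading of 90° and a pitch of 0°.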

The vehicle performance was evaluated by means of evaluation function models [47]. To obtain the hovering accuracy, the authors used the initial hovering position as the reference point and computed the deviation between the UAV's real-time position and this reference over a given time interval. The mean deviation and standard deviation could then be defined and decomposed into horizontal and vertical hover accuracy as needed. The UAV attitude stability was evaluated from the mean and standard deviation of the three attitude angles (heading, pitch, and roll) and of their deviation from the initial values. The track accuracy was analyzed in straight-line flight, using the differential GPS/INS data as a reference to be compared with the real-time data collected during the UAV flight; the deviation between the two reflected the flight path accuracy of the UAV. Since the acquisition times of the UAV and of the integrated inertial navigation device may not coincide, in [47] a least squares method was used to fit a three-dimensional straight line to the reference data at different times, and the difference between the real-time and fitted data was then calculated. The UAV track accuracy was obtained as the average of these distances [47].
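Both evaluation functions can be sketched in a few lines. This is one possible reading of the procedure, not the code of [47]: hover deviations are taken from the first sample, and the straight-line fit is done per axis as a least-squares line in time, so that the reference can be evaluated at the UAV's own timestamps. All names are hypothetical.

```python
import math
from statistics import mean, pstdev
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, local frame


def hover_accuracy(positions: Sequence[Point]) -> Dict[str, float]:
    """Mean and standard deviation of the deviation from the initial hover position."""
    x0, y0, z0 = positions[0]
    horiz = [math.hypot(x - x0, y - y0) for x, y, _ in positions]
    vert = [abs(z - z0) for _, _, z in positions]
    return {
        "horizontal_mean": mean(horiz), "horizontal_std": pstdev(horiz),
        "vertical_mean": mean(vert), "vertical_std": pstdev(vert),
    }


def _fit_line(ts: Sequence[float], vs: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit v = a + b * t; returns (a, b)."""
    tm, vm = mean(ts), mean(vs)
    sxx = sum((t - tm) ** 2 for t in ts)
    sxy = sum((t - tm) * (v - vm) for t, v in zip(ts, vs))
    b = sxy / sxx
    return vm - b * tm, b


def track_accuracy(ref_t: Sequence[float], ref_pos: Sequence[Point],
                   uav_t: Sequence[float], uav_pos: Sequence[Point]) -> float:
    """Mean 3-D distance between UAV positions and the fitted reference line."""
    fits = [_fit_line(ref_t, [p[k] for p in ref_pos]) for k in range(3)]
    dists = []
    for t, p in zip(uav_t, uav_pos):
        fitted = [a + b * t for a, b in fits]  # reference evaluated at the UAV timestamp
        dists.append(math.dist(p, fitted))
    return mean(dists)
```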

The system was evaluated in both static and dynamic tests. It consisted of a base station and a mobile station. The base station, used for continuous GPS satellite observation, was set at a fixed position, while the mobile station, mounted on the UAV, moved with it after initialization. The synchronized data received from the mobile and base stations were integrated and linearly combined to provide a virtual carrier phase and the relative position between the receivers. In the static test, the system positioning and attitude accuracy were tested with a baseline length of 0.8 m and a test time of 200 s (1000 epochs).

In the dynamic test, the system was placed on a flatbed trolley that was pushed to perform a uniform linear motion and two consecutive circular motions. The static test showed that the standard deviations of the pitch, roll, and heading angles were 0.1 degrees or less. In the dynamic test, these values were slightly larger, probably because of ground irregularities during the uniform motion.

A real UAV flight test was also carried out. By importing the received GPS data into Google Earth, the resulting UAV path was shown to be substantially consistent with the target path [47].

A new test procedure was proposed in [48] and tested with different UAVs and weather conditions. In particular, a set of tasks was developed and implemented to verify the proper operation of UAVs. These tasks made it possible to determine parameters such as hovering and positioning accuracy, position drift, positioning repeatability, variability of positioning accuracy, and deviation and repeatability of distance, through which UAV safety and proper operability could be certified. Five tasks were proposed. The first focused on determining the UAV hovering accuracy by monitoring its position while hovering, and thus examining the vehicle position drift during flight. The second task was devoted to determining the UAV's accuracy and repeatability in reaching a position. The third task concerned the accuracy of the UAV's barometric system, which is essential for correctly executing the programmed flight altitudes. The last two tasks simulated a photogrammetric mission, to analyze how inaccuracies affect image overlap in this kind of mission. For the experimental test campaign, two DJI A2 multirotor stabilization controllers (COM-1, COM-2) [49] were installed on DJI Spreading Wings S900 platforms [50]. The UAV position in space was measured with a Leica Nova MS50 Robotic Total Station (RTS), by Leica Geosystems AG, Heerbrugg, Switzerland [51,52], equipped with servomotors and a moving prism tracking system. Two measurement points were considered in the experimental tests: the RTS, placed higher than the UAV take-off point, with a 360° miniprism carried on board the UAV, and the reference point. Wind speed and atmospheric pressure were measured during the tests near the RTS position, at a lower altitude and at a distance from the UAV. The point coordinates measured by the Leica Nova MS50 were taken as the reference and compared with the coordinate and altitude data recorded by the UAV in the LOG files saved on its on-board computer. The analysis also took into account the coordinates of the points that the UAV was designed to reach. Comparing the design values with the reference data measured by the Leica Nova MS50 made it possible to assess the accuracy of the UAV mission, for example to ensure an appropriate separation distance from infrastructure or obstacles. Comparing the LOG file data with the design values made it possible to determine the internal accuracy of the on-board computer, for example to verify that its software could fulfill the planned tasks. Finally, the differences between the MS50 data and the LOG file data were used to determine the accuracy of the on-board devices. The experimental test campaign reported in [48] covered all five proposed tasks.
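The three comparisons described above (design vs. RTS, LOG vs. design, RTS vs. LOG) can be expressed as three distance statistics over matched points. The sketch assumes that the three point lists are already matched one-to-one and expressed in the same coordinate frame; summarizing with the mean Euclidean distance is an illustrative choice, and [48] may report per-axis or per-task statistics instead.

```python
import math
from statistics import mean
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]


def mean_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Mean Euclidean distance between matched points of two lists."""
    return mean(math.dist(p, q) for p, q in zip(a, b))


def accuracy_report(design: Sequence[Point], rts: Sequence[Point],
                    log: Sequence[Point]) -> Dict[str, float]:
    return {
        # design vs. RTS reference: accuracy of the executed mission
        "mission": mean_distance(design, rts),
        # LOG vs. design: internal accuracy of the on-board computer
        "onboard_internal": mean_distance(log, design),
        # RTS vs. LOG: accuracy of the on-board positioning devices
        "onboard_device": mean_distance(rts, log),
    }
```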

In the barometric accuracy test, the UAV climbed from the take-off point to an altitude of 20 m and then up to 90 m in successive steps of 5 m, hovering for at least 15 s at each of these 15 measurement points. The results showed increasing differences between the programmed altitudes and those measured by the Leica Nova MS50, as shown in Figure 5.

For COM-1, the maximum difference was 2.7 m in light wind and 4 m in strong wind; for COM-2, the corresponding values were 9.5 m and almost 8 m. The test results therefore reveal a barometer scale error, which affects the UAV flight altitude reached relative to the take-off position. This is essential information, since barometric sensor errors can compromise low-altitude UAV missions in applications such as power line inspection, photogrammetry, or smart city implementation [48]. Using the parameter called scale in the paper, defined as the ratio of the altitude reached, as measured by the Leica Nova MS50, to the design altitude, it was possible to show that the barometric sensor in COM-1, with mean scale values of 0.98 in light wind and 0.95 in strong wind, was more susceptible to wind than the COM-2 sensor, with mean scale values of 0.89 in light wind and 0.90 in strong wind.
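The scale parameter and the wind comparison can be made concrete as follows. With a constant scale s, the altitude error (1 − s)·h grows linearly with the programmed height h, which is consistent with the increasing differences reported for the altitude steps. The function names are illustrative, and the synthetic measurements in the usage below are not data from [48].

```python
from statistics import mean
from typing import Sequence


def mean_scale(design_alt_m: Sequence[float], measured_alt_m: Sequence[float]) -> float:
    """Mean ratio of the RTS-measured altitude to the programmed altitude (1.0 = no error)."""
    return mean(m / d for d, m in zip(design_alt_m, measured_alt_m))


def wind_sensitivity(scale_light: float, scale_strong: float) -> float:
    """Change in mean scale between the light-wind and strong-wind tests."""
    return abs(scale_light - scale_strong)


# Programmed altitudes of the barometric test: 20 m to 90 m in 5 m steps (15 points).
design_altitudes = list(range(20, 95, 5))

# Mean scale values reported in [48].
com1_sensitivity = wind_sensitivity(0.98, 0.95)  # about 0.03
com2_sensitivity = wind_sensitivity(0.89, 0.90)  # about 0.01
```

A larger change in scale between wind conditions indicates greater wind susceptibility, which is why COM-1 is the more affected sensor even though its scale is closer to 1.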

3.1.3. Fault and Damage Detection

Monitoring the UAV's health during missions is essential to meet safety requirements. Vibration measurements can be used to infer the mechanical integrity of the UAV, providing information on the vehicle's health status [53,54,55].

An example is the quadcopter fault detection and identification (FDI) method proposed in [53], which exploits airframe vibration signals acquired by the UAV acceleration sensors during flight.

In particular, the proposed method first collects airframe vibration data during the quadcopter's flight by means of the accelerometer. These data are reorganized and associated with $K$ in-flight health states of the quadcopter, forming the datasets $D_i$ ($i = 1, 2, \ldots, K$). The datasets are then preprocessed into the datasets $d_i$ ($i = 1, 2, \ldots, K$) by dividing each $D_i$ into multiple subsets of 1 s each. Next, feature vectors $\theta_i$ ($i = 1, 2, \ldots, K$) are extracted with wavelet packet decomposition and used to train a long short-term memory (LSTM) network, as shown in Figure 6. To obtain faster convergence and better performance, the standard deviation of the wavelet packet coefficients was adopted to characterize the original airframe vibration signal and to construct the feature vector for training the LSTM-based FDI model. The proposed method was validated experimentally by acquiring vibration data along the three axes X, Y, and Z under three health states ($K = 3$): non-damaged blades, 5% broken blades, and 15% broken blades, where the percentage refers to the mass of the damaged part of the blade relative to the blade mass. The platform used for the experimental validation was the Parrot AR.Drone, equipped with a three-axis gyroscope, a three-axis accelerometer, an ultrasound altimeter, and two cameras. The UAV sent to a laptop the gyroscope measurements and the velocity estimated by the on-board software using the vehicle's downward-facing camera, so that the quadcopter and its vibration data could be monitored in real time during flight. When the blade fracture was not severe, the acceleration fluctuations were small, whereas the angular velocity can represent the UAV's whole flight state. Therefore, only the angular velocities about the x, y, and z axes needed to be extracted from the UAV flight vibration data [53]. Data preprocessing removed invalid data and acceleration data from the three datasets and divided them into smaller subsets. Then, 24-dimensional feature vectors were generated by decomposing the angular velocity data of each subset into eight wavelet component signals and building an eight-dimensional feature vector for each axis from the standard deviations of the wavelet packet coefficients [53].
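The feature construction described above (three-level wavelet packet decomposition into eight components, one standard deviation per component, three axes, 24 features) can be sketched as follows. The Haar wavelet is an assumption made to keep the example self-contained; [53] is not reproduced here, and in practice a library such as PyWavelets would normally be used. The LSTM training stage is not shown, and the function names are hypothetical.

```python
import math
from statistics import pstdev
from typing import Dict, List, Sequence


def haar_wavelet_packet(signal: Sequence[float], levels: int = 3) -> List[List[float]]:
    """Full Haar wavelet packet tree; returns the 2**levels terminal nodes (natural order)."""
    nodes = [list(signal)]
    for _ in range(levels):
        next_nodes = []
        for node in nodes:
            if len(node) % 2:  # pad odd-length nodes by repeating the last sample
                node = node + [node[-1]]
            pairs = list(zip(node[0::2], node[1::2]))
            next_nodes.append([(a + b) / math.sqrt(2) for a, b in pairs])  # low-pass
            next_nodes.append([(a - b) / math.sqrt(2) for a, b in pairs])  # high-pass
        nodes = next_nodes
    return nodes


def feature_vector(window: Dict[str, Sequence[float]], levels: int = 3) -> List[float]:
    """Concatenate per-axis std of each terminal-node coefficient set (3 axes x 8 = 24)."""
    features: List[float] = []
    for axis in ("x", "y", "z"):
        nodes = haar_wavelet_packet(window[axis], levels)
        features.extend(pstdev(node) for node in nodes)
    return features
```

Each 1 s window of angular velocity samples (one sequence per axis) yields one 24-dimensional vector; because the Haar transform is orthonormal, the decomposition preserves the signal energy when no padding is needed.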

Another interesting contribution can be found in [55], which proposed a propeller fault detection algorithm based on the analysis of vibrations caused by propeller imbalance. In particular, the method relies on a Kalman filter to estimate the imbalance of each propeller, taking as inputs the accelerometer measurements and the motor force commands. The fault detection algorithm was validated experimentally on three different UAVs with four or six propellers and with different total masses and actuators. Two kinds of trajectories were considered in the validation, to cover both situations in which the motor forces vary strongly (T1) and situations in which they are more uniform along the trajectory (T2). In the first case, the UAV moved between randomly chosen set points; in the second, it flew along a horizontal circle. All three UAVs were equipped with the Crazyflie 2.0 flight controller by Bitcraze AB, Malmo, Sweden [56], which includes an STM32F4 microcontroller by STMicroelectronics International N.V., The Netherlands, and an MPU9250 inertial measurement unit (IMU) by TDK InvenSense, San Jose, California. The experiments with the smallest UAV considered, a quadcopter with brushed motors (numbered in the paper as motors 0, 1, 2, and 3), showed that with undamaged propellers the estimated imbalances rapidly decayed to values close to zero and remained there. With a damaged propeller on motor 0, the corresponding estimated imbalance clearly moved away from zero, with a similar trend for both trajectories T1 and T2. However, in T1 the motor forces had a larger variance and thus provided more information for identifying the faulty propeller. Moreover, with a damaged propeller, the amplitude of the accelerometer measurements increased about threefold. Further experiments with a medium-sized quadcopter and a large hexacopter with brushless motors showed that the proposed method successfully detected the damaged propeller.
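The role of the Kalman filter and of force variability can be illustrated with a deliberately simplified model. The sketch below is not the measurement model of [55]: it assumes a hypothetical linear relation z = Σ h_i x_i + noise between a scalar vibration measurement z, per-propeller regressors h_i derived from the force commands, and the imbalances x_i, which are modeled as a slow random walk. The class, the demo, and all tuning values are illustrative.

```python
import random
from typing import List, Sequence


class ImbalanceKF:
    """Random-walk Kalman filter estimating one imbalance value per propeller."""

    def __init__(self, n: int, q: float = 1e-6, r: float = 1e-2, p0: float = 1.0):
        self.x = [0.0] * n
        self.P = [[p0 if i == j else 0.0 for j in range(n)] for i in range(n)]
        self.q, self.r = q, r

    def step(self, h: Sequence[float], z: float) -> List[float]:
        n = len(self.x)
        for i in range(n):  # predict: imbalances drift slowly
            self.P[i][i] += self.q
        ph = [sum(self.P[i][j] * h[j] for j in range(n)) for i in range(n)]  # P h^T
        s = sum(h[i] * ph[i] for i in range(n)) + self.r  # innovation variance
        k = [v / s for v in ph]  # Kalman gain
        innov = z - sum(h[i] * self.x[i] for i in range(n))
        self.x = [self.x[i] + k[i] * innov for i in range(n)]
        # P <- (I - k h) P; (h P)_j equals ph_j because P is symmetric.
        self.P = [[self.P[i][j] - k[i] * ph[j] for j in range(n)] for i in range(n)]
        return list(self.x)


def demo(varying_commands: bool, steps: int = 500, seed: int = 0) -> List[float]:
    """Simulate an unbalanced propeller 0 and return the final imbalance estimates."""
    rng = random.Random(seed)
    truth = [0.5, 0.0, 0.0, 0.0]
    kf = ImbalanceKF(n=4)
    est = list(kf.x)
    for _ in range(steps):
        if varying_commands:  # like trajectory T1: strongly varying force commands
            h = [rng.uniform(0.5, 1.5) for _ in truth]
        else:  # like trajectory T2: uniform force commands
            h = [1.0 for _ in truth]
        z = sum(hi * xi for hi, xi in zip(h, truth)) + rng.gauss(0.0, 0.1)
        est = kf.step(h, z)
    return est
```

With varying commands the filter singles out propeller 0; with identical commands only the sum of the imbalances is observable and the estimate is spread evenly over all propellers, which mirrors why the more variable T1 trajectory carries more information.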

The proposed approach was also compared with a loss-of-effectiveness approach. In particular, the effect of damage, namely a propeller producing less thrust at a given angular velocity than an undamaged one, was quantified in the paper by means of estimated propeller effectiveness factors. These can be estimated from the average thrust produced in near-hover flight, for a symmetric UAV in which all propellers produce equal force in hover and assuming that the motors accurately track the commanded velocities, as:

$$\bar{f}_i = \gamma_i \, \bar{f}_{\mathrm{cmd},i} = \frac{m g}{N} \quad \Rightarrow \quad \gamma_i = \frac{m g}{N \, \bar{f}_{\mathrm{cmd},i}}$$

where $\gamma_i$ is the effectiveness factor of propeller $i$, $\bar{f}_i$ represents the time-averaged thrust force, $\bar{f}_{\mathrm{cmd},i}$ the time-averaged command force, $N$ the number of propellers, $m$ the total mass of the vehicle, $g$ the gravitational acceleration, and $m g / N$ the force that each propeller should produce on average. These estimates take into account that, near hover, the UAV has zero average translation and zero average acceleration, so the forces must average to the hover forces [55]. For the small quadcopter experiments, the propeller factors were computed over a 20 s interval beginning 5 s after take-off.
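The effectiveness factors above reduce to a ratio of the hover force to the mean commanded force. The sketch assumes the command-force logs are already available per propeller; the windowing helper mirrors the 20 s interval starting 5 s after take-off, and all names are hypothetical.

```python
from statistics import mean
from typing import List, Sequence

G = 9.81  # gravitational acceleration, m/s^2


def hover_window(times_s: Sequence[float], values: Sequence[float], takeoff_s: float,
                 start_offset_s: float = 5.0, length_s: float = 20.0) -> List[float]:
    """Keep the samples of a 20 s window starting 5 s after take-off."""
    t0 = takeoff_s + start_offset_s
    return [v for t, v in zip(times_s, values) if t0 <= t < t0 + length_s]


def effectiveness_factors(cmd_forces: Sequence[Sequence[float]], mass_kg: float) -> List[float]:
    """gamma_i = m * g / (N * mean commanded force of propeller i), near-hover data only."""
    n = len(cmd_forces)
    hover_force = mass_kg * G / n  # force each propeller should produce on average
    return [hover_force / mean(f) for f in cmd_forces]
```

A factor noticeably below 1 means the propeller needs a larger command than expected to deliver its share of the hover force; as noted below, brushed-motor variability can mask this effect.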

With these values, no strong correlation was found between the damage and the propeller factors: any perceived loss of effectiveness was completely masked by the performance variability of the brushed motors. The approach proposed in [55], instead, proved able to identify the damaged propeller rapidly and accurately.

A three-stage algorithm able to detect rotor faults and also determine their type and scale was proposed in [57]. This method also stems from the fact that unbalanced rotating parts of a UAV cause vibrations, so that acceleration measurements from the vehicle's IMU, combined with signal processing and machine learning, can be used to detect rotor faults and gain information about them. The first stage acquires raw acceleration measurements along the two axes of a plane parallel to the rotor disks; the vertical axis was not considered to provide significant vibration information. These data were stored in a cyclic buffer and preprocessed with a standard Hann (Hanning) window. The second stage performs feature extraction, in both the time and frequency domains, using the fast Fourier transform (FFT), wavelet packet decomposition (WPD), and the measurement of signal power in linearly spaced frequency bands (BP, BandPower). The third stage classifies the signals and determines the rotor condition using a support vector machine (SVM) with a Gaussian kernel. In particular, the algorithm includes three classifiers: one detects fault occurrence (healthy/damaged rotor), one estimates the damage scale (light/severe), and one estimates the fault type (damaged propeller blade edge/distorted tip). Ten experimental flights were carried out to build the dataset, and several thousand acceleration signal vectors covering different flight scenarios were used to train and test the SVM classifiers.
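The first two stages (cyclic buffering of the two in-plane acceleration axes, Hann windowing, and band-power features) can be sketched with NumPy. The buffer length, the number of bands, and the names are assumptions, and only the BP variant is shown; the resulting vectors could then be fed to a Gaussian-kernel SVM such as scikit-learn's `SVC(kernel="rbf")`, which is not shown here.

```python
from collections import deque

import numpy as np


class AccelBuffer:
    """Cyclic buffer holding the latest n samples of the two in-plane acceleration axes."""

    def __init__(self, n: int):
        self.ax: deque = deque(maxlen=n)
        self.ay: deque = deque(maxlen=n)

    def push(self, ax: float, ay: float) -> None:
        self.ax.append(ax)
        self.ay.append(ay)

    def full(self) -> bool:
        return len(self.ax) == self.ax.maxlen


def band_power(signal, n_bands: int) -> np.ndarray:
    """Power in n_bands linearly spaced frequency bands of a Hann-windowed signal."""
    x = np.asarray(list(signal), dtype=float)
    x = (x - x.mean()) * np.hanning(len(x))  # remove the DC offset, apply the Hann window
    power = np.abs(np.fft.rfft(x)) ** 2
    return np.array([band.sum() for band in np.array_split(power, n_bands)])


def features(buf: AccelBuffer, n_bands: int = 8) -> np.ndarray:
    """Concatenated band powers of both axes (2 * n_bands values)."""
    return np.concatenate([band_power(buf.ax, n_bands), band_power(buf.ay, n_bands)])
```

With a 256-sample buffer sampled at 1 kHz, a 93.75 Hz vibration falls in the second of eight bands, so a rotor imbalance at that rotation frequency would show up as extra power in that feature.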

The UAV used in the experimental validation was the Falcon V5 [58], equipped with a 10-DOF IMU (ADIS16488) by Analog Devices, Wilmington, MA, providing acceleration measurements along three axes. Different sets of healthy/damaged rotors, flight trajectories, UAV loads, and propeller materials and manufacturers were considered in the experimental tests. Regarding the feature extraction method, the best fault detection performance was achieved with FFT and WPD, while the number of misclassifications due to missed faults was similar for the three methods. For damage scale estimation (light/severe), the best results were obtained with FFT features, which yielded a correct estimation ratio of around 90%, whereas WPD yielded the lowest, around 70%. However, WPD proved to be the best method for estimating the rotor damage type, reaching a correct estimation ratio of 90%, whereas FFT reached around 80% in this case. Since FFT achieves the best fault detection and damage scale estimation at the cost of slightly lower fault type classification performance, the authors of [57] chose this method for further development in ongoing research. In particular, their next research step is to implement the algorithm in the UAV onboard controller and investigate its effectiveness in real time during flight.

Major faults and damage can occur when the UAV's flight stability is compromised by changes in the vehicle's center of gravity, caused for example by a movement or a partial or total loss of the payload, or by the extension or retraction of a camera lens unit mounted on the UAV to change the focal length [11]. To address this problem, a patent describes a system able to detect changes in the UAV's center of gravity and to react by changing the length of one or more vehicle arms, thus shifting the UAV's center of thrust [11]. After receiving measurements from one or more sensors, such as a gyroscope, IMU, accelerometer, mass sensor, and GPS sensor, the system computes the angular velocity of each UAV rotor and compares it with a predefined threshold. If the threshold is exceeded, the length of one or more vehicle arms is adjusted and the angular velocity is set to the threshold value to ensure flight stability; otherwise, the arm lengths are left unchanged [11].
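The threshold logic of the patent can be sketched as a single control step. Which arm is adjusted and by how much is not specified above, so the rule used here (lengthen the arm of each rotor that exceeds the threshold by a fixed hypothetical step, up to a hypothetical maximum) is only one plausible choice; the sensor fusion that produces the angular velocities is also not shown.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Rotor:
    omega: float       # angular velocity, rad/s
    arm_length: float  # m


def stabilise(rotors: List[Rotor], omega_max: float,
              arm_step: float, arm_max: float) -> List[Rotor]:
    """Clamp over-threshold rotors to omega_max and lengthen their arms (hypothetical rule)."""
    out = []
    for r in rotors:
        if r.omega > omega_max:
            new_arm = min(r.arm_length + arm_step, arm_max)
            out.append(Rotor(omega=omega_max, arm_length=new_arm))
        else:
            out.append(r)  # below threshold: arm length left unchanged
    return out
```

Lengthening the arm of a heavily loaded rotor increases its moment arm about the shifted center of gravity, so that the rotor can balance the vehicle with less thrust.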

3.1.4. Avoid Collisions

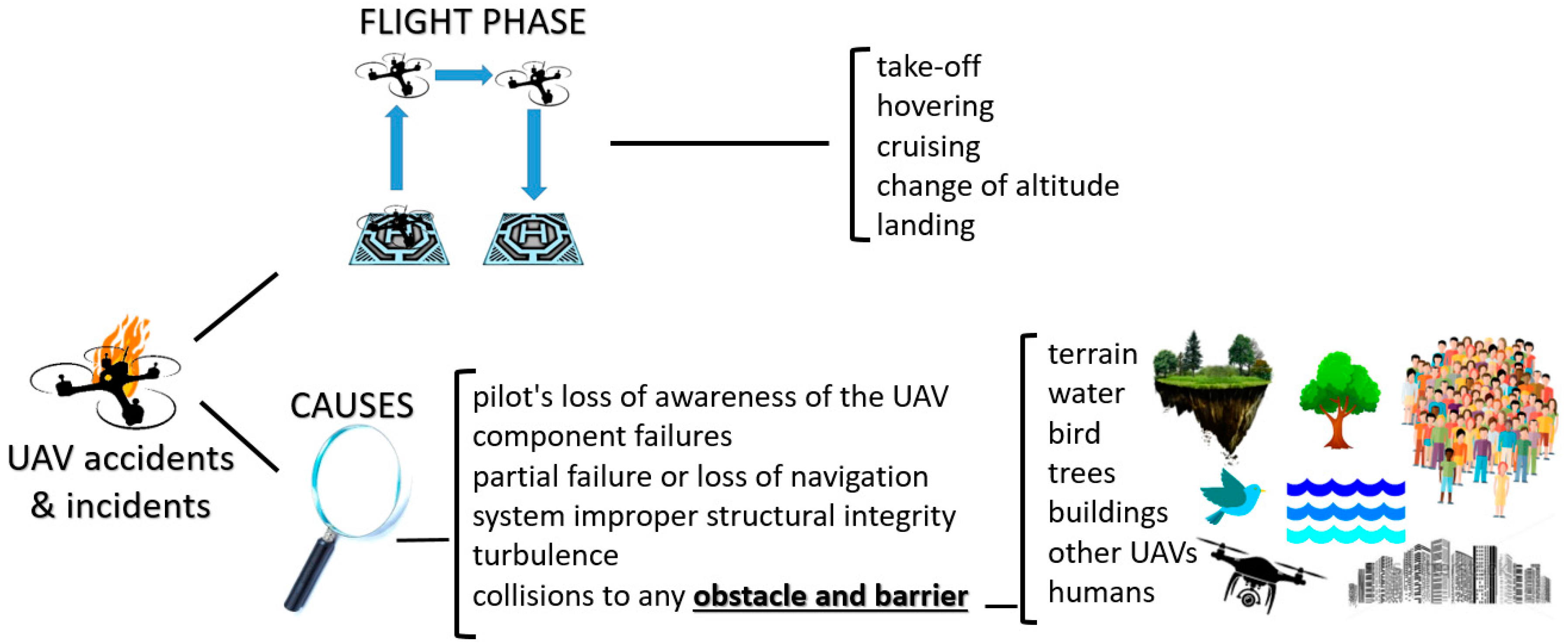

UAV safety can be threatened by collisions with other UAVs, aircraft, or birds, due to causes including equipment malfunctions, weather conditions, and operator errors. To address this problem, UAVs should be made fully autonomous, to eliminate human error, and able to sense and avoid any obstacle in time.

In [59], an overview of the main research trends and results on collision avoidance in autonomous systems was presented according to the classification shown in Figure 7. As can be seen in the figure, the first step in avoiding a collision is perceiving the obstacle, using sensors capable of sensing the UAV's surroundings.

In the perception phase of collision avoidance systems, both active and passive sensors can be used. Active sensors measure the backscatter of the energy emitted by their own source. Passive sensors, instead, rely on another source, for example sunlight, and read the energy emitted or reflected by the obstacle. Examples of active sensors are sonar or ultrasonic sensors, LIDAR, and radar.

Important advantages of these active sensors are fast response, low processing power requirements, large-area scanning capabilities, and lower dependence on weather and lighting conditions. Moreover, they can accurately provide useful information on the obstacles, such as their distance or angles. Examples of passive sensors are optical or visual cameras, working in visible light, and thermal or infrared (IR) cameras, working in infrared light. IR cameras work well in poor light conditions, while the performance of visual cameras strongly depends on light and weather conditions [59]. All cameras have high processing requirements, since they rely on heavy image processing to turn the raw data into useful information. The longest range is provided by radar (>1000 m) and the shortest by ultrasonic sensors and visual cameras (0–100 m), while LIDAR and IR cameras have a medium range (100–1000 m) [59]. Using more than one kind of sensor makes comprehensive collision avoidance possible.

As shown in Figure 7, once the obstacle (which, in the case of a UAV swarm, can also be any other UAV in the group) has been perceived and detected, it can be avoided using different approaches. Geometric approaches analyze geometric attributes, usually by simulating the UAV and obstacle trajectories, to ensure that the distance between UAVs and obstacles never falls below the minimum allowed value. In particular, these methods compute the time to collision from the distances and velocities of the UAV and the obstacle [59,60]. Geometric approaches can handle both static and dynamic obstacles [59].
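A common instance of the geometric approach is the closest-point-of-approach calculation, sketched below under the assumption that both the UAV and the obstacle keep constant velocity over the prediction horizon. The function names, the separation threshold, and the horizon are hypothetical.

```python
import math
from typing import Sequence, Tuple


def closest_approach(p_rel: Sequence[float], v_rel: Sequence[float]) -> Tuple[float, float]:
    """Time (s) and distance (m) of closest approach for straight-line relative motion.

    p_rel: obstacle position minus UAV position; v_rel: obstacle velocity minus UAV velocity.
    """
    vv = sum(v * v for v in v_rel)
    if vv == 0.0:
        return 0.0, math.sqrt(sum(p * p for p in p_rel))  # no relative motion
    # Minimise |p + v t|; clamp to t >= 0 so that past encounters are ignored.
    t_cpa = max(0.0, -sum(p * v for p, v in zip(p_rel, v_rel)) / vv)
    d_cpa = math.sqrt(sum((p + v * t_cpa) ** 2 for p, v in zip(p_rel, v_rel)))
    return t_cpa, d_cpa


def conflict(p_rel: Sequence[float], v_rel: Sequence[float],
             min_sep_m: float, horizon_s: float) -> bool:
    """True if the predicted miss distance violates the minimum separation within the horizon."""
    t, d = closest_approach(p_rel, v_rel)
    return d < min_sep_m and t <= horizon_s
```

For an obstacle 100 m ahead closing at 10 m/s, the predicted time to collision is 10 s with zero miss distance, so an avoidance maneuver would be triggered.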

Force-field methods use the concept of an attractive or repulsive force field to draw the UAV towards a target or push it away from an obstacle. In particular, these methods generate a force map in which obstacles create repulsive forces and waypoints create attractive forces; the optimal collision-free path is then computed from this map [61]. To perform optimally, these methods require pre-mission path planning [59]. Force-field methods suffer from local minima, where the total force is close to zero, and from waypoints that cannot be reached because of nearby obstacles. Improvements can be obtained by adding further force terms and handling these conditions as exceptions [61].
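A minimal force-field step can be sketched with the classic attractive/repulsive potential formulation, in which the repulsive term acts only inside an influence radius. This is a generic textbook formulation rather than the method of [61]; the 2-D setting, the gains, the radius, and the fixed step length are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float]


def apf_step(pos: Vec, goal: Vec, obstacles: Sequence[Vec],
             k_att: float = 1.0, k_rep: float = 100.0, d0: float = 5.0,
             step: float = 0.5) -> Vec:
    """Move one fixed-length step along the total (attractive + repulsive) force."""
    fx = k_att * (goal[0] - pos[0])  # attractive force toward the waypoint
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < d0:  # repulsion only inside the influence radius d0
            mag = k_rep * (1.0 / d - 1.0 / d0) / d ** 2
            fx += mag * dx / d
            fy += mag * dy / d
    norm = math.hypot(fx, fy)
    if norm < 1e-9:
        return pos  # total force ~ 0: goal reached or stuck in a local minimum
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)
```

The `norm < 1e-9` branch is exactly where the local-minimum problem appears: away from the goal, a vanishing total force leaves the UAV stuck, which is what the additional force terms and exception handling mentioned above are meant to resolve.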

Optimized methods calculate the avoidance trajectory from geographical information. Probabilistic search algorithms can be used to identify the best search areas. Unfortunately, these algorithms have a high computational complexity, which can be reduced with optimization methods such as genetic algorithms, Bayesian optimization, or particle swarm optimization. These methods require pre-mission path planning, since they need in-depth knowledge of the environment, for example through high-definition maps and predefined coordinates [59]. They are suitable only for static environments [59].
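As an illustration of the optimization step, the sketch below uses a basic global-best particle swarm optimization (PSO) to place a single intermediate waypoint between a start and a goal, so that the path is short and stays clear of circular no-fly zones. The cost function, penalty weight, PSO coefficients, and obstacle model are hypothetical choices for this example; [59] does not prescribe them.

```python
import math
import random


def segment_point_distance(a, b, p):
    """Shortest distance from point p to segment ab (2D)."""
    (ax, ay), (bx, by), (px, py) = a, b, p
    dx, dy = bx - ax, by - ay
    length2 = dx * dx + dy * dy
    t = 0.0 if length2 == 0.0 else ((px - ax) * dx + (py - ay) * dy) / length2
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def path_cost(wp, start, goal, obstacles, penalty=1000.0):
    """Length of start -> wp -> goal plus a penalty for entering obstacles."""
    cost = math.dist(start, wp) + math.dist(wp, goal)
    for center, radius in obstacles:
        for a, b in ((start, wp), (wp, goal)):
            intrusion = radius - segment_point_distance(a, b, center)
            if intrusion > 0.0:
                cost += penalty * intrusion
    return cost


def pso(cost, bounds, n_particles=30, iters=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize cost over a box with a basic global-best particle swarm."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [cost(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull towards personal best + pull towards swarm best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = cost(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val
```

For a start at (0, 0), a goal at (10, 0), and a 2 m no-fly circle centred at (5, 0), the swarm settles on a waypoint above or below the circle with a total path length close to the geometric optimum of about 10.9 m. The whole swarm is evaluated offline against a known map, which is consistent with the pre-mission planning requirement noted above.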

Sense-and-avoid methods reduce the required computational power and achieve short response times by simplifying collision avoidance to the detection and avoidance of individual obstacles, preventing collisions both between UAVs within a swarm and between UAVs and obstacles. To this end, the UAV is equipped with different kinds of sensors, for example radar, LIDAR, and sonar. These methods need no preplanning and are suitable for both static and dynamic, indoor and outdoor environments. Moreover, in UAV swarms, since each UAV senses its local environment and processes the information independently, these methods do not depend on inter-UAV communication [59].

When using a multisensor integration solution, size and weight constraints must also be taken into account, especially for small UAVs [62]. These considerations were the main motivation for the research work proposed in [62], which integrated a camera and LIDAR-based sensors into a safe avoidance path detection system. The LIDAR sensor starts the detection process by measuring the distance to the obstacle and then triggers the camera to capture image frames. In particular, after obstacle detection, three image frames are captured at different distances, separated by intervals of 15 cm. Then, a speeded-up robust features (SURF) algorithm [63, 64] is used to find both obstacle and free-space regions, exploiting the perspective relationship between changes in apparent object size and distance. In particular, by combining the SURF size expansion characteristic with the size change ratio, it is possible to approximate the obstacle's physical size and define the safe avoidance path [62]. The algorithm first detects feature points in each image frame. Then, the feature points of the second and third frames that match those of the first frame are identified, providing important information on the depth of the environment (free space or obstacles). Experiments were carried out to analyze the size changes across image frames. Size and distance were found to be inversely proportional, confirming that closer objects show more significant size changes than distant ones. The image frames were divided into several sections in order to classify the sections ahead of the UAV that represent a threat. The size changes were calculated from the difference in Euclidean distance ratios between matched feature points in the image frames, in order to detect obstacles close to the UAV, no-danger zones, or ambiguous situations (an obstacle that is near but textureless, or a textureless background).

The Parrot AR.Drone 2.0 was chosen to carry out the experiments in [62]. Its built-in camera was used at a reduced resolution (640 × 360) to decrease the computation time of the algorithm. Owing to its high range accuracy, low cost, and small size, the LIDAR-Lite v3 [65] was chosen as the range sensor. The experiments covered 10 different situations with one or more obstacles, both fairly textured and textureless. Straight and side obstacles were considered: the former aligned with the UAV, the latter placed to the left or right of the straight obstacles. The detection system was considered successful when it indicated a safe path moving the UAV away from the obstacles. Detection starts when the LIDAR sensor detects the obstacle 200 cm ahead and stops at 170 cm. In the textured situations, the proposed system performed well; in the textureless case, performance decreased rapidly with increasing side obstacle distance. Overall, the proposed detection system was shown to be capable of producing a safe avoidance path at a distance of 200 cm from the obstacle, including in the case of multiple obstacles.
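The size-expansion cue can be illustrated with a pinhole-camera argument: the spread of matched feature points scales as 1/Z, so when the UAV advances by d the ratio between a later (closer) frame and the first frame equals Z1/(Z1 - d). The sketch below inverts that relation to estimate distance. It assumes already-matched feature coordinates (for example from a SURF matcher) and uses the mean pairwise Euclidean distance as the size measure; the measure, the danger range, and the names are illustrative assumptions, not the exact procedure of [62].

```python
import itertools
import math


def spread(points):
    """Mean pairwise Euclidean distance between feature points (pixels)."""
    pairs = list(itertools.combinations(points, 2))
    return sum(math.dist(p, q) for p, q in pairs) / len(pairs)


def distance_from_ratio(ratio, advance):
    """Pinhole model: ratio = Z1 / (Z1 - advance), so Z1 = ratio * advance / (ratio - 1)."""
    if ratio <= 1.0:
        return math.inf  # no measurable expansion: treat as far away
    return ratio * advance / (ratio - 1.0)


def classify_section(pts_first, pts_later, advance, danger_range):
    """Classify one image section from features matched between two frames.

    pts_first[i] and pts_later[i] must be the same physical feature.
    Returns (label, estimated distance at the first frame).
    """
    if len(pts_first) < 2:
        return "ambiguous", None  # textureless: too few matches to judge
    ratio = spread(pts_later) / spread(pts_first)
    z = distance_from_ratio(ratio, advance)
    return ("obstacle" if z <= danger_range else "free"), z
```

With the first and third frames taken 30 cm apart (two 15 cm intervals, from 200 cm to 170 cm), features on an obstacle at 200 cm expand by a factor of 200/170, about 1.18, while a background wall at 10 m expands by only about 1.03; the inversion recovers both distances, and the section with too few matches is labelled ambiguous, mirroring the textureless cases discussed above.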

Low-cost solutions for obstacle perception were the subject of the research work presented in [66]. In particular, different UAV obstacle sensing solutions for wildlife environments were compared, as summarized in Table 1. State-of-the-art solutions achieved better obstacle measurements, but at the cost of higher financial investment, power, and computational effort, which makes them unfeasible for low-cost wildlife monitoring UAVs. However, a low-cost solution fusing a 1D laser, the LIDAR-Lite v3, with a 2D camera, the Logitech Webcam C930e, provided obstacle measurements sufficient for safe flight in the target application, at an acceptable cost and with lower payload, power, and computational requirements [66].

Obstacle avoidance is particularly critical for autonomous UAVs in indoor applications, where GPS is unavailable and cannot provide the vehicle with position information. Sonar sensors are not sufficiently accurate and are prone to sudden jumps in distance readings; furthermore, echoes from the room walls degrade the quality of the returned signal. LIDAR is not efficient at detecting objects that are not directly in front of it, while laser scanning systems can be problematic in terms of weight, size, and computational power. Although IR sensors have a limited field of view, which is useful in indoor environments, they also have a minimum range, which can make them unsuitable [67].

The metrological characterization of an IR distance sensor, the Teraranger One by Terabee, was carried out in [67] to prove that it is a good solution for an effective anticollision system in indoor environments. The sensor has a maximum detection distance of 14 m and a minimum distance of 20 cm. Its accuracy is about ±2 cm, with a resolution of about 5 mm and a field of view (FOV) of about ±2 degrees. It maintains the same measurement accuracy at any distance, and its performance is almost independent of the target material's color, texture, and reflectivity. Before the characterization tests, controlled indoor and outdoor environments were set up to ensure repeatable experimental conditions. The Teraranger One was placed on a rigid vertical support that could easily be moved to change the measured distance when the targets were bulky or could not be moved. A camera sensitive to IR light was placed behind the sensor to monitor the environmental conditions and the target. To estimate the error of the tested sensor, a Stanley TLM99 laser distance meter was used as a reference, since its accuracy is much higher and its maximum range far exceeds that of the tested sensor. The targets included both objects with good IR reflectivity, placed in an environment shielded from sunlight, and objects with unusual shapes and low IR reflectivity, placed under sunlight in a very noisy outdoor environment. Indoors, the Teraranger One performed well regardless of target shape, material, or color. Outdoors, its standard deviation was between 4 mm and 8 mm close to the target, reaching 61 mm at a distance of 2750 mm and increasing exponentially with distance. For this reason, outdoor measurements were considered unreliable for distances greater than 2 m.

An interesting overview of sense-and-avoid technology, its trends, and the challenge of efficiently avoiding moving obstacles was presented in [68]. The paper focused on small UAVs operating in low-altitude airspace. The sensing technologies discussed include vision-based, radar, and LIDAR systems. Vision-based architectures have attracted interest for collision avoidance thanks to the convenient size, weight, and power budgets of standalone electro-optical systems, and recent approaches increasingly rely on deep learning techniques. Typical daylight cameras have an instantaneous field of view (IFOV) of 0.01–0.05°, a FOV of several tens of degrees, and frame rates of 10 Hz or more [68]. Multicamera systems can be installed to provide both adequate coverage and resolution, but this can be challenging for small UAVs given their tight weight and size constraints.
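The trade-off between IFOV and coverage that motivates multicamera systems can be quantified with simple angular geometry. In the sketch below, the target span, range, sensor width, and required coverage used in the example are hypothetical values chosen only to illustrate the IFOV figures quoted from [68].

```python
import math


def pixels_on_target(span_m, range_m, ifov_deg):
    """Number of pixels subtended by a target of the given span at the given range."""
    angular_size = 2.0 * math.atan(span_m / (2.0 * range_m))  # radians
    return angular_size / math.radians(ifov_deg)


def fov_covered(n_pixels, ifov_deg):
    """Field of view (deg) covered by a row of n_pixels at the given IFOV."""
    return n_pixels * ifov_deg


def cameras_needed(required_fov_deg, n_pixels, ifov_deg):
    """Cameras needed side by side to cover required_fov_deg (no overlap assumed)."""
    return math.ceil(required_fov_deg / fov_covered(n_pixels, ifov_deg))
```

For instance, a 1 m intruder at 500 m covers only about 2.3 pixels at an IFOV of 0.05°, and a 1920-pixel-wide sensor with an IFOV of 0.02° spans 38.4°, so four such cameras would be needed for 120° of horizontal coverage: finer IFOV improves detection range but multiplies the number of cameras.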

Radar and LIDAR can be easily integrated onboard small UAVs. For radar, the challenges posed by the limited onboard power and the low radar cross-section of the targets can be addressed with frequency-modulated continuous wave (FMCW) architectures. Beam steering and/or multichannel operation can be used to provide angular measurements and increase the available signal-to-noise ratio (SNR). Current research is directed at developing lightweight collision avoidance radars [68]. Table 2 shows the main specifications of three commercial radar sensors. The Echodyne system provides the largest detection range and the Aerotenna the smallest; however, the largest detection range comes with the largest and heaviest system, which also requires the highest operating power [68].
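For reference, the ranging principle of an FMCW radar with a linear sawtooth chirp fits in a few lines: the echo delay 2R/c multiplied by the chirp slope B/T gives the beat frequency, and the range resolution is c/(2B). This is the textbook relation for a static target (Doppler is ignored); the bandwidth and chirp duration in the example are hypothetical and do not describe the sensors in Table 2.

```python
C = 299_792_458.0  # speed of light, m/s


def beat_frequency(range_m, bandwidth_hz, chirp_s):
    """Beat frequency of a static target for a linear sawtooth chirp."""
    slope = bandwidth_hz / chirp_s      # chirp slope, Hz per second
    return slope * 2.0 * range_m / C    # slope times round-trip delay


def range_from_beat(beat_hz, bandwidth_hz, chirp_s):
    """Invert the beat frequency to target range (m)."""
    return C * beat_hz * chirp_s / (2.0 * bandwidth_hz)


def range_resolution(bandwidth_hz):
    """Smallest separable range difference between two targets (m)."""
    return C / (2.0 * bandwidth_hz)
```

With a 250 MHz chirp lasting 1 ms, a target at 150 m produces a beat tone of about 250 kHz and the range resolution is about 0.6 m; the low beat frequencies are one reason FMCW front ends suit the tight power budgets of small UAVs.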

Thanks to solid-state technology, LIDARs have improved in performance and have become smaller and lighter. Table 2 shows the main specifications of three commercially available LIDAR systems. These systems offer very good range accuracy at a high update rate, but their detection range tends to be limited.

The limits on emitted power imposed by eye-safety requirements make it difficult to significantly extend the detection range of this technology. However, angular resolution is improving, which is very important in 3D collision avoidance applications to avoid sparse coverage of the FOV.

Although vision-based, radar, and LIDAR systems are continuously improving, they still have shortcomings, mainly concerning detection range and sensing accuracy, that make it challenging to use them optimally to avoid collisions with moving obstacles [68]. The situation is even more complicated in low-altitude operations: for radar, ground echoes increase and the signal-to-clutter ratio decreases, while for vision-based systems, intruders are more likely to be located below the horizon [68].

3.1.5. UAV Safe Landing

Landing is a critical flight phase for UAVs, prone to safety issues, since it is not always possible for the vehicle to find a suitable landing zone, especially in case of an emergency due to technical malfunctions or adverse weather conditions.

UAV landing must be performed so as to minimize the probability of human injuries, casualties, and property damage while maximizing the chance of survival of the UAV and its payload [69]. Suitable landing areas should be relatively homogeneous and free from obstacles [69].

Whether a landing zone can be considered safe depends on the terrain: outdoors, for example, grass is usually a better landing surface than water. Another factor to be considered is an appropriate distance from people and from man-made objects or structures, which can be static, such as buildings and roads, or dynamic, such as cars [69]. To preserve the UAV and its payload, rough areas, such as stony ground, should be avoided [69].

Landing zones can be divided into indoor and outdoor zones (Figure 8). Indoor landing zones are typically static and flat, and can be further divided into known and unknown zones [70].

For known indoor landing zones, the UAV must be trained to recognize, with its on-board cameras during the overflight, the features of the marked landing platform. When there are no landmarks to land on, the UAV must detect unknown zones by recognizing features common to typical landing zones. This is done by image processing, searching for image regions whose characteristics match the parameters that identify a safe landing zone [70].

Outdoor landing zones can be both static and dynamic, and can be further divided into known and unknown landing zones. Fixed marked areas, such as runways, flat roofs, helipads, and playgrounds, are static outdoor landing zones. Known zones can be either marked landing zones (with different shapes or colors) or locations whose coordinates and orientation are known to the UAV.

In unknown static landing zones, where no landmarks or suitable locations are known, the UAV must find its own safe landing zone by using its visual sensors to analyze ground features and look for a flat, obstacle-free, and sufficiently large area [70].

Dynamic landing zones are moving platforms, such as the bed of a moving truck, a bus roof, or a ship deck. For these zones, the UAV must first find the moving platform and then follow it, taking into account the unknown state of the platform and the environment. Known dynamic landing zones are marked with uniquely identifiable colors, shapes, or images (for example, a quick response (QR) code, an H-shaped marking, or a black cross in a black circle on a white backdrop). For unknown dynamic landing zones, the UAV must find the safe landing zone by itself using onboard vision-based systems [70].

According to the classification proposed in [70], landing zone detection techniques fall into three main categories: camera-based, LIDAR-based, and combined camera and LIDAR. Techniques in the first class use the on-board camera (monocular vision) or cameras (binocular or stereo vision) to capture images that are then processed by image-processing algorithms to detect the closest safe landing zone. Camera-based methods for detecting a safe landing zone include stereo ranging (SR), structure from motion (SfM), color segmentation (CS), and simultaneous localization and mapping (SLAM).

The SR method uses two cameras, mounted on the left and right sides of the UAV, to capture the same scene from slightly different angles. Because of the different positions of the two cameras, the objects in the acquired images exhibit a pixel disparity.

Image-processing algorithms use this disparity to estimate depth. Finding a safe landing zone requires an accurate terrain profile. However, since disparity is inversely proportional to depth, it changes with UAV height, shrinking as the UAV climbs and reducing depth resolution. Moreover, camera resolution, UAV motion, and lighting and atmospheric conditions can make landing zone detection very complex.
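The height dependence of stereo ranging follows from the standard rectified-stereo relation Z = f·B/d, where f is the focal length in pixels, B the baseline, and d the disparity. The sketch below evaluates this relation together with its first-order depth uncertainty; the camera parameters and the 0.5-pixel disparity error used in the example are hypothetical.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Rectified stereo: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m, focal_px, baseline_m, disparity_err_px=0.5):
    """First-order error propagation: dZ = Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px
```

With f = 700 px and B = 0.12 m, a disparity of 8.4 px corresponds to 10 m, where a 0.5 px disparity error gives about 0.6 m of depth uncertainty; doubling the height to 20 m halves the disparity and quadruples the uncertainty, which is why terrain profiles from SR degrade as the UAV climbs.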

SfM uses a sequence of overlapping digital images of a static scene, captured from different positions, to reconstruct a 3D point cloud. Features are matched across the images by an automated scale-invariant feature transform (SIFT) image-matching method, and a bundle adjustment algorithm estimates the 3D geometry and the camera positions, also exploiting image metadata [71]. For safe landing purposes, SfM can provide the 3D terrain surface. In particular, software that automates most of the SfM steps, such as Agisoft PhotoScan or VisualSFM, estimates photo locations, orientations, and other camera parameters to reconstruct a 3D point cloud of the monitored area, from which the UAV can find a safe landing zone [70].

Both SfM and SR can take advantage of GPS or other navigation systems to find safe landing zones. Where the GPS signal is denied, the UAV must be equipped with high-resolution cameras and an image-processing unit capable of processing overlapping images, so that the SfM algorithm can find a safe zone to land on.

CS splits the captured images into their red, green, and blue (RGB) channels and then assigns each pixel a grayscale value from 0 to 255. A grayscale threshold is set before the UAV mission starts, and pixels with values below the threshold are excluded from the landing zone selection. Pixels with values above the threshold are passed to an algorithm that checks whether the corresponding areas are suitable for landing; if they are not, the procedure is repeated. CS methods thus rely on grayscale variations to identify obstructions to landing [70]. CS results can also be used to extract object-oriented information for classifying test images into objects such as roads, houses, shrubs, and water [70, 72]. CS has some limitations, for example with wide green fields, whose colors can change with the seasons, or when safe and unsafe landing areas have the same colors [70].
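The CS pipeline described above (grayscale conversion, a pre-mission threshold, then a suitability check on the surviving pixels) can be sketched as follows. The ITU-R BT.601 luma weights and the "fully bright square of a given size" suitability test are assumptions made for this example; [70] does not specify either.

```python
def to_gray(rgb_image):
    """Convert rows of (r, g, b) pixels (0..255) to 0..255 gray levels.

    The ITU-R BT.601 luma weights are an assumption; [70] does not state them.
    """
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]


def above_threshold(gray, threshold):
    """True where the gray level is over the pre-mission threshold."""
    return [[v > threshold for v in row] for row in gray]


def find_landing_window(mask, size):
    """Hypothetical suitability check: return (row, col) of the first
    size x size block made only of candidate pixels, or None so that the
    caller can acquire a new image and repeat the procedure."""
    rows, cols = len(mask), len(mask[0])
    for r in range(rows - size + 1):
        for c in range(cols - size + 1):
            if all(mask[r + i][c + j] for i in range(size) for j in range(size)):
                return (r, c)
    return None
```

On a 4 × 4 image with a bright 2 × 2 patch in the middle, a 2 × 2 window is found at (1, 1), whereas a 3 × 3 window is not, so the procedure would be repeated on a new image. The seasonal-color limitation mentioned above shows up here directly: a fixed threshold cannot adapt if the field's gray level drifts across it.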

SLAM methods use UAV-captured images to generate a 3D map of the environment and estimate the vehicle position. This process includes aligning sensor data with different algorithms. A grid map, divided into small regions, is generated from the 3D map, and the safe landing zone is identified among these regions. SLAM performance is affected by UAV height, a problem that can potentially be solved by using a high-resolution camera or a LIDAR together with the camera [70]. SLAM methods can extract information from images with either direct or feature-based methods [73]. In direct methods, also called dense or semi-dense, the geometry is optimized directly on the image pixel intensities. In feature-based methods, the camera position and the scene geometry are estimated from a set of feature observations extracted from the image. Because it uses the entire image, the direct method provides a denser representation of the environment than the feature-based one [74].

The second class of landing zone detection techniques includes the methods using LIDAR. By sending pulsed laser beams to the target ground area, measuring the reflected pulse, and comparing it with the transmitted one, the range between the UAV and the target can be determined. Combining the laser range with GPS and IMU information (position and orientation data) produces a point cloud of 3D coordinates, which is used to build a digital elevation model (DEM) providing a 3D representation of the target. This representation is then used to find the safe landing zone [70].
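A minimal sketch of the LIDAR-based chain is shown below: pulse time of flight to range, then a gridded DEM from georeferenced points, then a flatness check over candidate landing windows. It assumes the points have already been converted to world coordinates with the GPS and IMU data; the mean-height cells, the max-minus-min relief criterion, and the thresholds are hypothetical simplifications (practical pipelines typically also evaluate slope and roughness from local plane fits).

```python
import math
from collections import defaultdict

C = 299_792_458.0  # speed of light, m/s


def tof_range(round_trip_s):
    """Pulsed LIDAR: the pulse travels to the ground and back."""
    return C * round_trip_s / 2.0


def build_dem(points, cell):
    """Grid georeferenced (x, y, z) points into cells holding the mean height."""
    sums = defaultdict(lambda: [0.0, 0])
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        sums[key][0] += z
        sums[key][1] += 1
    return {k: s / n for k, (s, n) in sums.items()}


def flat_sites(dem, size, max_relief):
    """Yield (i, j) of size x size cell windows, fully covered by data,
    whose height range (max - min) does not exceed max_relief."""
    for i, j in sorted(dem):
        window = [dem.get((i + a, j + b)) for a in range(size) for b in range(size)]
        if None in window:
            continue  # missing data: the area cannot be certified as safe
        if max(window) - min(window) <= max_relief:
            yield (i, j)
```

With 1 m cells, a 2 × 2 m area whose heights differ by 2 cm passes a 5 cm relief limit, while the neighbouring window that contains a 0.5 m rock is rejected; windows with gaps in the point cloud are skipped rather than assumed safe.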

The combined approaches in the third class of landing zone detection techniques use both camera and LIDAR to obtain more reliable and accurate results. In this case too, a DEM is generated to find a safe zone for the UAV to land on. To build the DEM, in addition to on-board sensors such as cameras and LIDAR, a NASA dataset containing predetermined DEMs can be used [70].

A different combined approach, involving a camera and the UAV propeller, is proposed in the patent [12] to prevent the vehicle from landing on water and being damaged. In particular, the propeller rotation is controlled to generate an airflow that creates ripples on the water surface while the camera acquires images of the landing area.

Because the ripples sparkle under light, they form multiple bright spots in the acquired image; if the number of these spots exceeds a preset value, a water surface is recognized. However, the approach requires adequate lighting and is therefore more suitable for daylight conditions [12].
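One simple way to count light spots, sketched below under stated assumptions, is to threshold the grayscale image and count 4-connected bright blobs with a flood fill. The brightness threshold, the connectivity rule, and the preset spot count are hypothetical parameters; the patent's actual image-processing chain is not reproduced here.

```python
def count_bright_spots(gray, threshold):
    """Count 4-connected blobs of pixels brighter than threshold."""
    rows, cols = len(gray), len(gray[0])
    seen = [[False] * cols for _ in range(rows)]
    spots = 0
    for r in range(rows):
        for c in range(cols):
            if gray[r][c] > threshold and not seen[r][c]:
                spots += 1  # new blob found: flood-fill it so it is counted once
                seen[r][c] = True
                stack = [(r, c)]
                while stack:
                    y, x = stack.pop()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and not seen[ny][nx] and gray[ny][nx] > threshold):
                            seen[ny][nx] = True
                            stack.append((ny, nx))
    return spots


def looks_like_water(gray, threshold, preset_spot_count):
    """Water is declared when the spot count exceeds the preset number."""
    return count_bright_spots(gray, threshold) > preset_spot_count
```

Adjacent bright pixels merge into a single spot, so a glint spread over several pixels is not over-counted. In poor light the glints fall below any fixed threshold and the count drops, which reflects the daylight dependence noted above.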

Altitude control is essential during the UAV landing phase. High-quality position information can be obtained with a global navigation satellite system (GNSS) receiver, but in some environments, such as towns and cities, or during low-altitude operations, the line of sight to the satellites can be lost, creating a dangerous situation for the UAV. Accurate position information can be achieved with real-time kinematic global positioning system (RTK GPS) techniques, in which a terrestrial base station with a known location generates GPS correction data and transmits them to the UAV, which uses them to correct its GPS values and obtain more accurate positions [75]. However, the need to install a base station in the area covered by the UAV flight can be a disadvantage.

Target distance can be measured with camera-based and laser systems, but the former require a heavy computational load, while the latter are not very energy efficient.

Ultrasonic sensors, on the other hand, are low-cost and small, and offer a good compromise between energy efficiency and accuracy for low-altitude obstacle detection, requiring less computation than camera-based systems and less energy than laser systems. However, their performance can be affected by temperature, relative humidity, and other atmospheric conditions, since these change the speed of sound on which the distance measurement relies. To address this problem, the effects of temperature and relative humidity on distance measurements carried out by this kind of sensor during UAV landing were analyzed in [76]. Two commercial ultrasonic sensors, the HC-SR04 by ElecFreaks [77] and the Parallax PING [78], were tested in a climatic chamber, and two mathematical models were developed to compensate for the systematic errors caused by variations in relative humidity and temperature. In the test phase, each sensor was mounted on a fixed structure, and distance measurements were acquired by an Arduino platform communicating with a PC through two USB interfaces. To investigate the effects of wind on the distance measurements, computer fans placed on the platform base simulated the air flow under a landing UAV, that is, the ground effect turbulence affecting the ultrasonic measurements. Measurements were carried out in a Kambic KK-105 CH environmental chamber [79], using a certified HD2817T probe [80] as the temperature and humidity reference and a Leica Disto 3 [81] as the distance reference.

After the tests, a compensation equation for the effects of temperature and relative humidity on distance measurements was formulated by linearly regressing each sensor's measurements against the fixed reference distances. Comparing the measured distances with the reference ones yielded a scale factor and a bias, whose trends were used to estimate the distance compensation. The distance errors of the two sensors before and after correction confirmed the validity of the proposed compensation.
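Why temperature matters, and how a scale-and-bias correction works, can be sketched as follows. The speed-of-sound formula is the common dry-air linear approximation (humidity is neglected), and the regression simply fits measured = scale × reference + bias by least squares. The published models in [76] also account for relative humidity, and their coefficients are not reproduced here; the numbers in the example are hypothetical.

```python
def speed_of_sound(temp_c):
    """Common dry-air approximation (m/s); humidity is ignored here."""
    return 331.3 + 0.606 * temp_c


def echo_distance(round_trip_s, temp_c):
    """Distance from an ultrasonic echo, assuming the given air temperature."""
    return speed_of_sound(temp_c) * round_trip_s / 2.0


def fit_scale_bias(reference, measured):
    """Least-squares fit of measured = scale * reference + bias."""
    n = len(reference)
    mx = sum(reference) / n
    my = sum(measured) / n
    sxx = sum((x - mx) ** 2 for x in reference)
    sxy = sum((x - mx) * (y - my) for x, y in zip(reference, measured))
    scale = sxy / sxx
    return scale, my - scale * mx


def compensate(measured, scale, bias):
    """Invert the fitted model to recover the corrected distance."""
    return (measured - bias) / scale
```

A sensor that assumes 20 °C while the air is at 0 °C overestimates distances by about 3.7% (343.42/331.3), for example about 3.7 cm at 1 m during the final touchdown. Fitting scale and bias against reference distances at each chamber condition, then inverting the model, removes this kind of systematic error.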

A real-time landing gear control system based on adaptive 3D sensing was developed in [82], with the aim of expanding the area in which UAVs can fly safely by enabling safe landing on unknown ground. Landing gear are devices that dampen landing impacts for flying vehicles. In the proposed system, the landing gear lengths were controlled in real time according to the measured shape of the landing area, so that all landing gear contacted the ground simultaneously, allowing safe landing on ground of any shape. As shown in Figure 9, the system input was the image, captured by a high-speed camera, of the area illuminated by a line laser. 3D sensing was then carried out with a light section method: the 3D position of each bright point was determined from the geometric relationship between its image coordinates and the plane of the line laser. The line laser direction could be controlled by changing the angle of a galvanometer mirror, so that the measurement area could be adapted at high speed. The adaptive 3D sensing then updated the heights of the contact points, the landing gear control values were determined, and the mirror was steered so that the next image showed the laser line at a different location. The procedure was iterated at high speed so that all landing gear touched the ground at the same time.
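The light section step amounts to intersecting the camera ray through a bright pixel with the known laser plane. The sketch below assumes a pinhole camera at the origin with its z axis along the optical axis, a laser plane written as n · X = offset in camera coordinates (in the real system the plane would follow the galvanometer mirror angle), and rigid legs extended by the difference in ground distance below each foot. All parameter values and function names are hypothetical.

```python
def pixel_ray(u, v, fx, fy, cx, cy):
    """Direction of the camera ray through pixel (u, v) for a pinhole camera."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def intersect_laser_plane(ray, normal, offset):
    """Intersect the ray X = t * ray (camera at origin) with the plane normal . X = offset."""
    denom = sum(n * r for n, r in zip(normal, ray))
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the laser plane")
    t = offset / denom
    if t <= 0.0:
        raise ValueError("intersection lies behind the camera")
    return tuple(t * r for r in ray)


def gear_extensions(ground_dists):
    """Extra extension for each leg so that all feet touch down together.

    ground_dists[i] is the distance from the retracted foot of leg i to the
    ground, measured along the descent direction; the leg over the nearest
    ground gets zero extension.
    """
    nearest = min(ground_dists)
    return [d - nearest for d in ground_dists]
```

For example, with fx = fy = 1000 px, principal point (320, 240), and the laser plane x = 0.1 m, the bright pixel (370, 240) maps to the 3D point (0.1, 0, 2.0) m. If the ground lies 0.50 m below one foot and 0.53 m below the other, the second leg is extended by 3 cm, and repeating this at each sensing update keeps the commands current as the UAV descends.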

The performance of the proposed system was validated experimentally. Adaptive 3D sensing was implemented using a SONY IMX382 vision chip [83], a New Scale Technologies DK-M3-RS-U-360 galvanometer mirror [84], and a Kikoh Giken MLX-D12-640-10 line laser [85]. To simulate the landing sequence, a MISUMI RSH220B-C21A-N-F1-5-700 single-axis robot was mounted vertically with respect to the ground. The experiments evaluated the measurement rate, qualitatively verified the landing gear response (using a horizontal board and a block imitating a boulder as measurement objects), and simulated the landing sequence on unknown ground (soil made of microbeads, with polystyrene blocks mimicking boulders). The system performed well, achieving high-speed 3D sensing with an update time of 10 ms for two landing gear and, in the best case, simultaneous contact within a time lag of 20 ms at a descent speed of 100 mm/s [82].