Parameter Estimation of Poisson–Gaussian Signal-Dependent Noise from Single Image of CMOS/CCD Image Sensor Using Local Binary Cyclic Jumping

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Poisson–Gaussian Signal-Dependent Noise Model

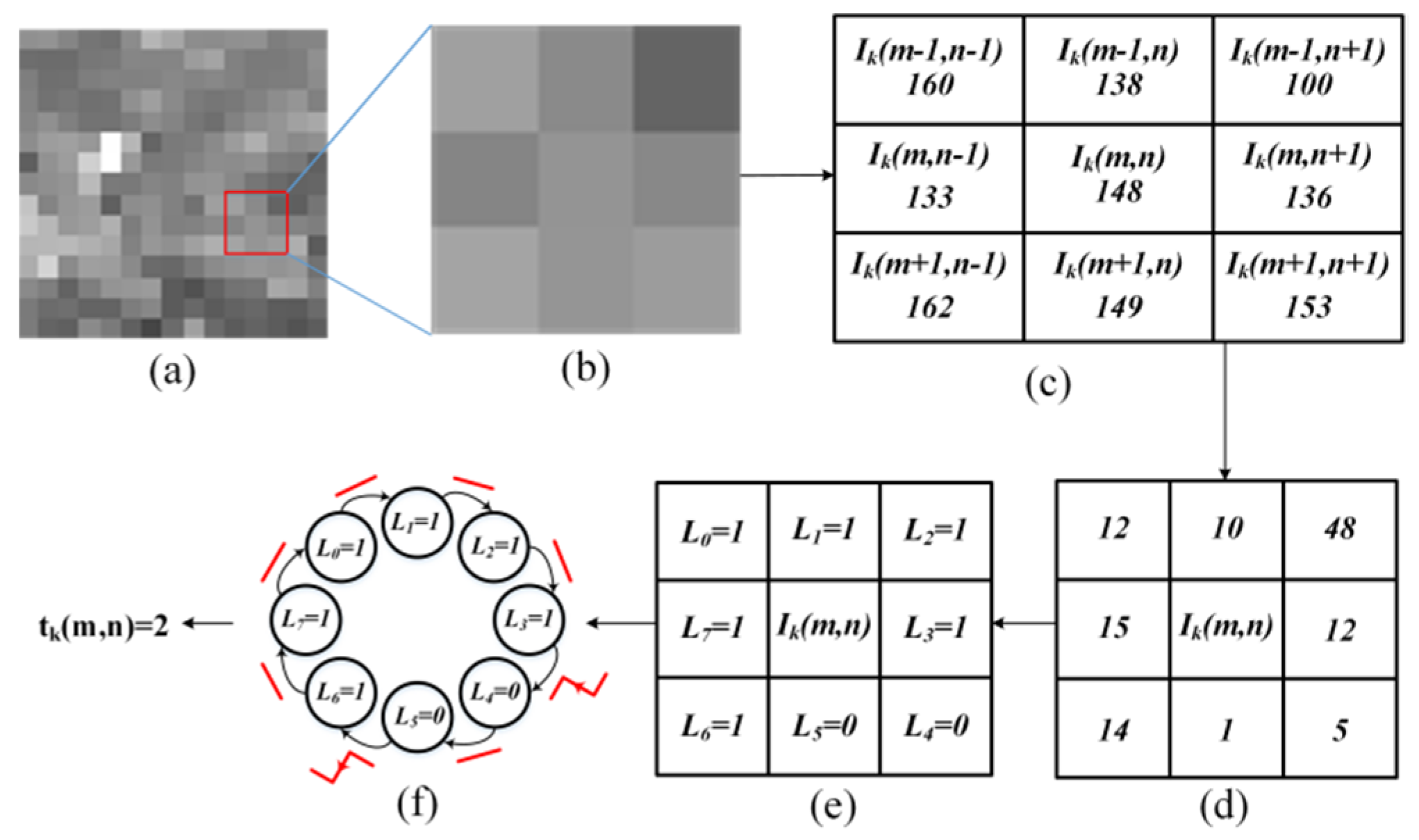
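As an illustration of the noise model named in this section, the following is a minimal sketch that assumes the commonly used Poisson–Gaussian formulation, in which a noisy pixel has mean equal to the clean intensity y and variance a·y + b. The parameter names a and b are taken from the noise parameters in the tables of this article; the function name `add_poisson_gaussian_noise`, the seed, and the flat test image are hypothetical choices made for the example and are not the authors' implementation.

```python
import numpy as np


def add_poisson_gaussian_noise(clean, a, b, seed=0):
    """Hypothetical helper: simulate z = a * Poisson(clean / a) + N(0, b).

    Assumes intensities in `clean` are non-negative (e.g. scaled to [0, 1]).
    """
    rng = np.random.default_rng(seed)
    clean = np.asarray(clean, dtype=np.float64)
    # Signal-dependent part: scaled Poisson counts with mean `clean`
    # and variance a * clean.
    poisson_part = a * rng.poisson(clean / a)
    # Signal-independent part: zero-mean Gaussian with variance b.
    gaussian_part = rng.normal(0.0, np.sqrt(b), size=clean.shape)
    return poisson_part + gaussian_part


# A flat patch makes the signal-dependent variance easy to check.
flat = np.full((256, 256), 0.5)
noisy = add_poisson_gaussian_noise(flat, a=0.01, b=0.0036)
expected_var = 0.01 * 0.5 + 0.0036  # a * y + b under the assumed model
print(f"sample variance {noisy.var():.5f}, assumed model variance {expected_var:.5f}")
```

On a flat patch, the sample variance should lie close to a·y + b, which is the linear relationship that a parameter estimator for this model would try to recover from local mean and variance pairs.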

3.2. Proposed Noise Parameter Estimation Model

4. Experimental Results

5. Computational Complexity

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Time (s) by noise parameters a and b:

| a | b | Image Gradient Matrix | Local Grey Entropy | Image Histogram | LBCJ |
|---|---|---|---|---|---|
| 0.005 | 0.0016 | 12.72 | 19.56 | 19.22 | 11.66 |
| 0.005 | 0.0036 | 12.86 | 19.59 | 18.56 | 11.45 |
| 0.005 | 0.0064 | 12.71 | 19.55 | 18.67 | 11.51 |
| 0.005 | 0.0100 | 12.69 | 19.55 | 18.56 | 11.25 |
| 0.010 | 0.0016 | 15.87 | 19.45 | 18.89 | 11.40 |
| 0.010 | 0.0036 | 15.53 | 19.56 | 18.52 | 11.39 |
| 0.010 | 0.0064 | 16.48 | 19.66 | 18.52 | 12.17 |
| 0.010 | 0.0100 | 16.14 | 19.59 | 18.55 | 12.46 |
| 0.015 | 0.0016 | 15.54 | 19.68 | 18.64 | 13.17 |
| 0.015 | 0.0036 | 15.97 | 19.52 | 19.03 | 13.51 |
| 0.015 | 0.0064 | 15.61 | 19.56 | 18.88 | 11.87 |
| 0.015 | 0.0100 | 15.33 | 19.57 | 19.04 | 12.01 |
| 0.020 | 0.0016 | 22.52 | 30.56 | 20.52 | 12.36 |
| 0.020 | 0.0036 | 22.18 | 30.59 | 20.52 | 12.06 |
| 0.020 | 0.0064 | 22.45 | 30.52 | 20.62 | 12.68 |
| 0.020 | 0.0100 | 21.60 | 30.61 | 20.83 | 12.33 |

Memory consumption (MB) by noise parameters a and b:

| a | b | Image Gradient Matrix | Local Grey Entropy | Image Histogram | LBCJ |
|---|---|---|---|---|---|
| 0.005 | 0.0016 | 3738 | 3721 | 3507 | 3513 |
| 0.005 | 0.0036 | 3741 | 3719 | 3500 | 3518 |
| 0.005 | 0.0064 | 3799 | 3716 | 3515 | 3511 |
| 0.005 | 0.0100 | 3797 | 3715 | 3512 | 3500 |
| 0.010 | 0.0016 | 3775 | 3730 | 3500 | 3499 |
| 0.010 | 0.0036 | 3770 | 3722 | 3488 | 3512 |
| 0.010 | 0.0064 | 3749 | 3729 | 3487 | 3510 |
| 0.010 | 0.0100 | 3744 | 3743 | 3525 | 3517 |
| 0.015 | 0.0016 | 3775 | 3728 | 3446 | 3340 |
| 0.015 | 0.0036 | 3769 | 3727 | 3453 | 3354 |
| 0.015 | 0.0064 | 3762 | 3721 | 3452 | 3428 |
| 0.015 | 0.0100 | 3753 | 3720 | 3463 | 3462 |
| 0.020 | 0.0016 | 3733 | 3731 | 3472 | 3469 |
| 0.020 | 0.0036 | 3733 | 3733 | 3470 | 3463 |
| 0.020 | 0.0064 | 3731 | 3731 | 3469 | 3464 |
| 0.020 | 0.0100 | 3742 | 3732 | 3452 | 3500 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, J.; Wu, Y.; Zhang, Y.; Zhao, J.; Si, Y. Parameter Estimation of Poisson–Gaussian Signal-Dependent Noise from Single Image of CMOS/CCD Image Sensor Using Local Binary Cyclic Jumping. Sensors 2021, 21, 8330. https://doi.org/10.3390/s21248330