Recent Advances in Collaborative Scheduling of Computing Tasks in an Edge Computing Paradigm

Abstract

1. Introduction

2. Computing Scenarios

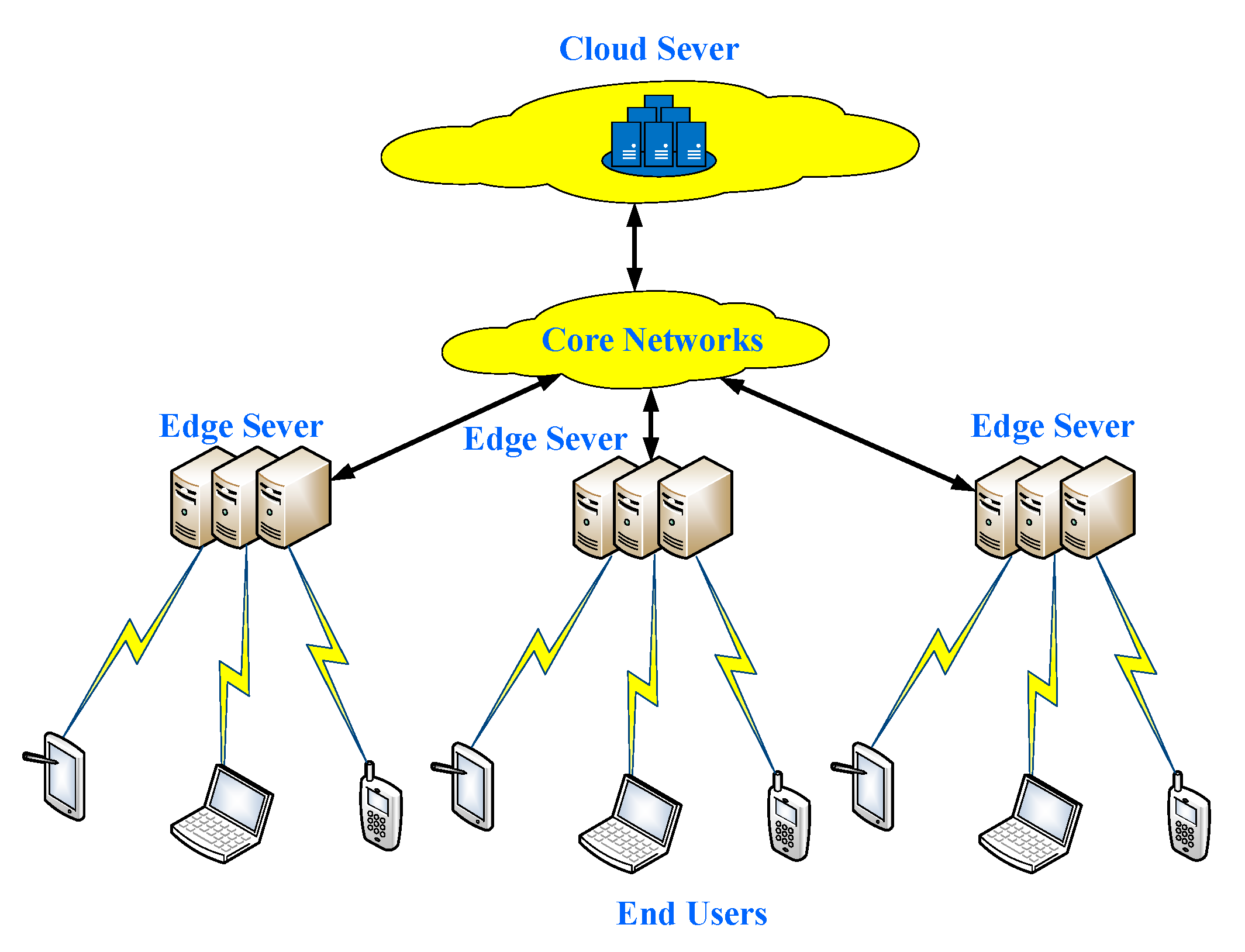

2.1. Basic Edge Computing

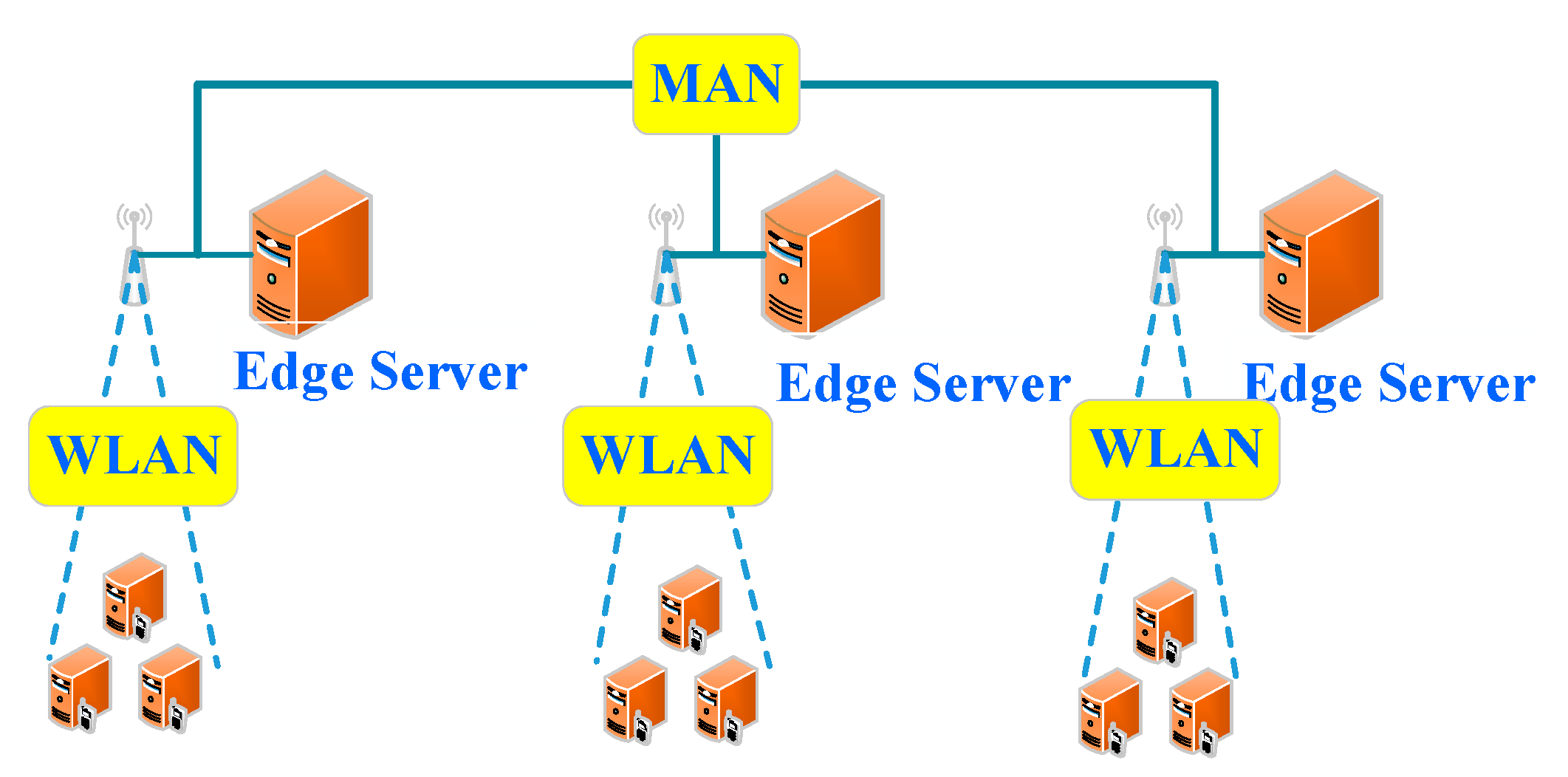

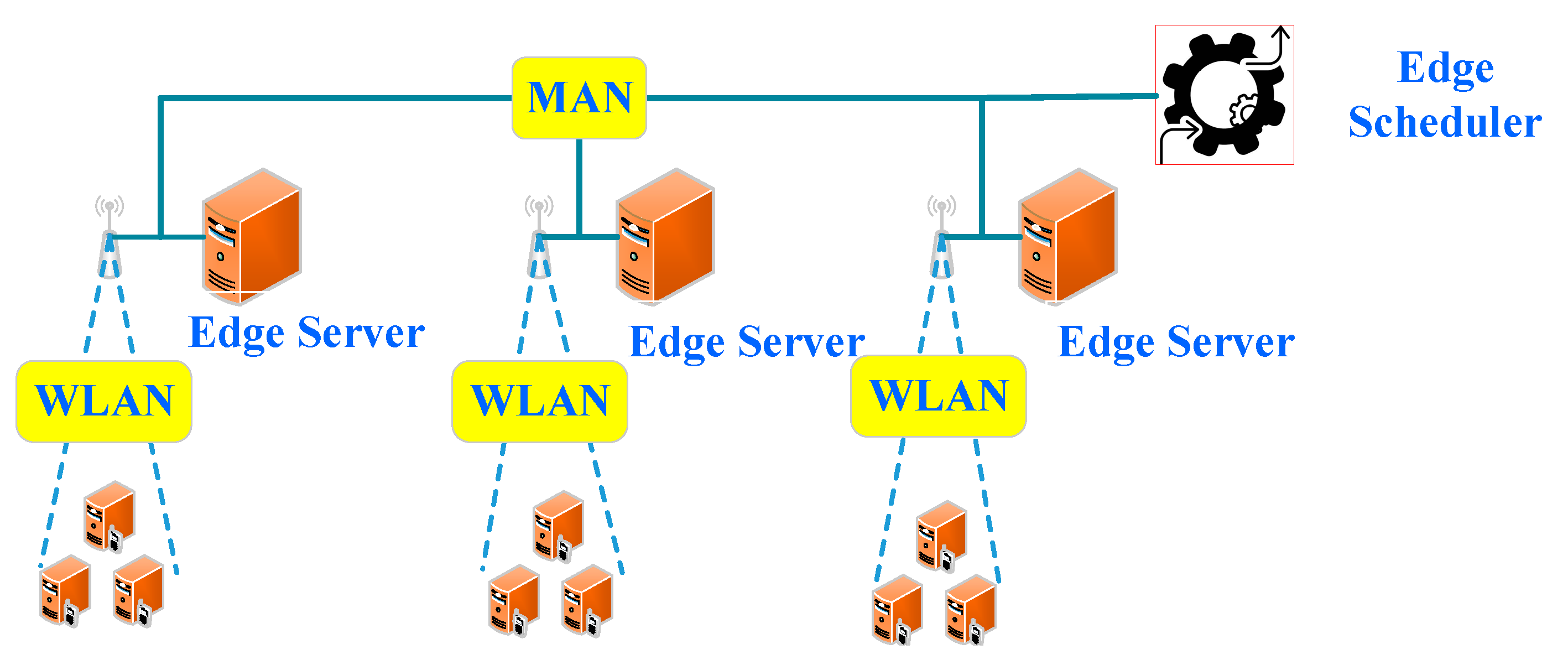

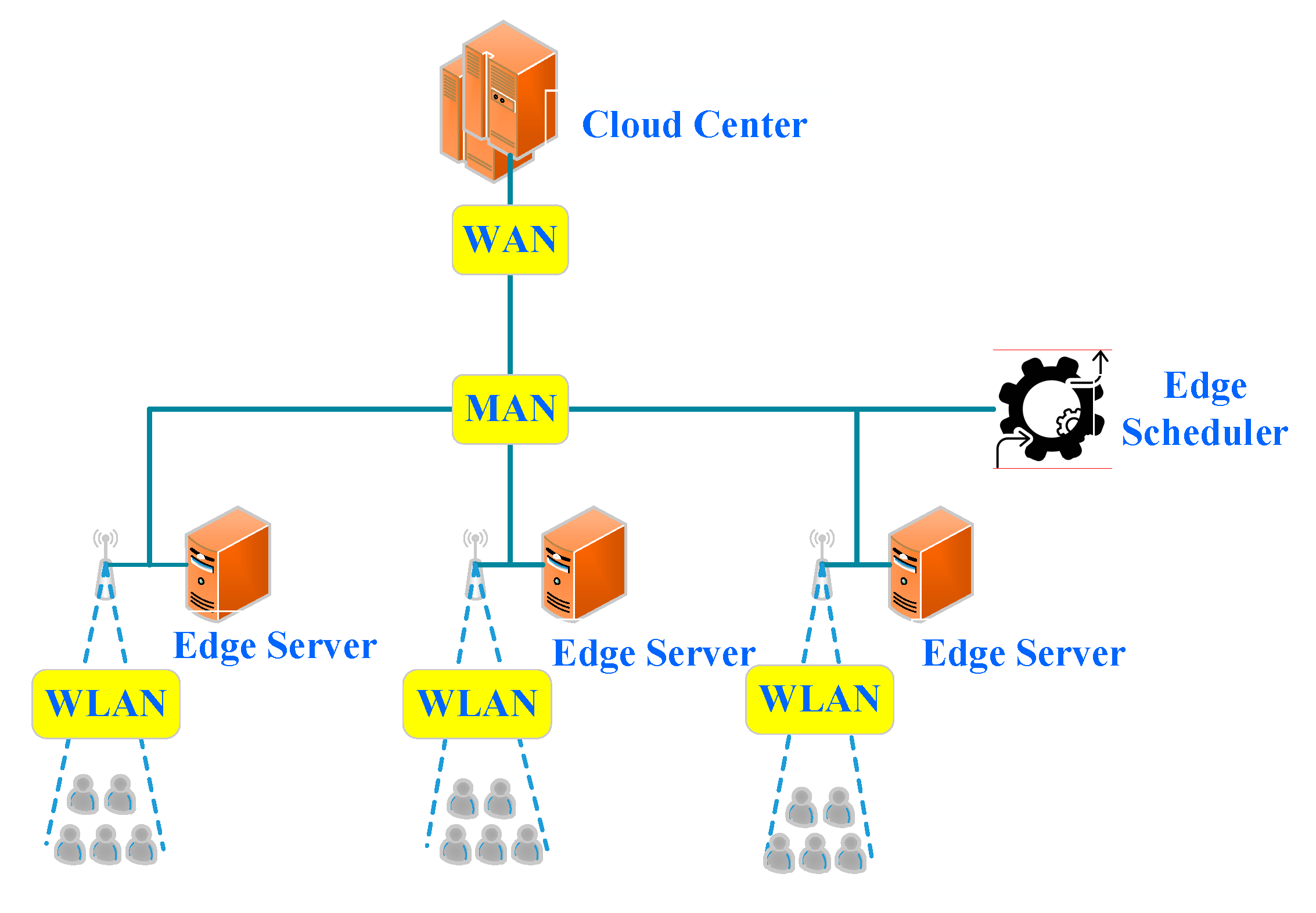

2.2. Scheduler-Based Edge Computing

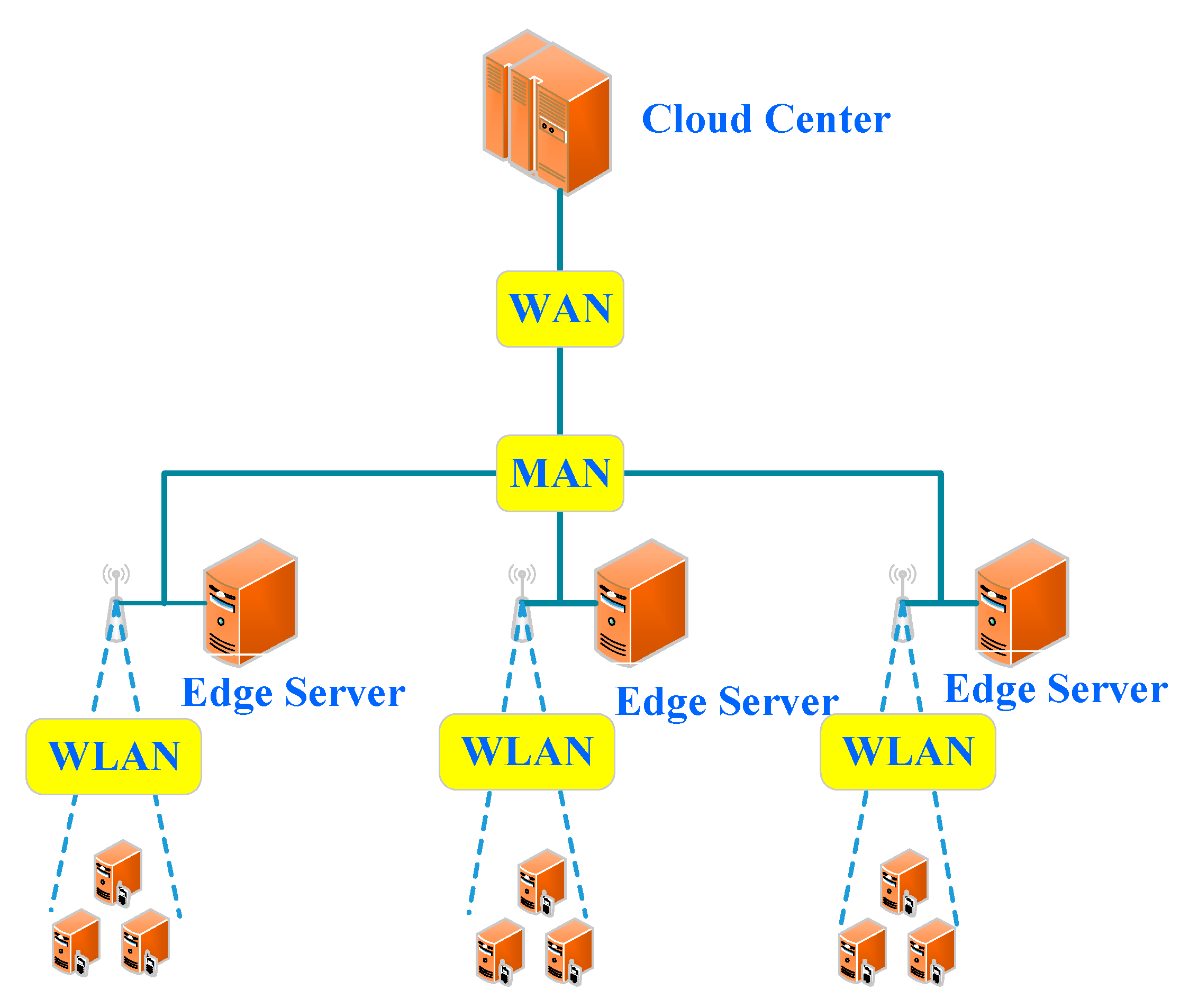

2.3. Edge-Cloud Computing

2.4. Scheduler-Based Edge-Cloud Computing

3. Computing Task Analysis

3.1. Local Execution

3.2. Full Offloading

3.3. Partial Offloading

4. System Model and Problem Formulation

4.1. System Model

4.2. Communications Model

4.3. Task Offloading Model

4.4. Problem Formulation

5. Computing Task Scheduling Scheme

5.1. Minimal Delay Time

5.2. Minimal Energy Consumption

5.3. Minimal Delay Time and Energy Consumption

6. Issues and Future Directions

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Patidar, S.; Rane, D.; Jain, P. A Survey Paper on Cloud Computing. In Proceedings of the 2012 Second International Conference on Advanced Computing & Communication Technologies, Institute of Electrical and Electronics Engineers (IEEE), Rohtak, Haryana, India, 7–8 January 2012; pp. 394–398.

- Moghaddam, F.F.; Ahmadi, M.; Sarvari, S.; Eslami, M.; Golkar, A. Cloud computing challenges and opportunities: A survey. In Proceedings of the 2015 1st International Conference on Telematics and Future Generation Networks (TAFGEN), Institute of Electrical and Electronics Engineers (IEEE), Kuala Lumpur, Malaysia, 26–27 May 2015; pp. 34–38.

- Varghese, B.; Wang, N.; Barbhuiya, S.; Kilpatrick, P.; Nikolopoulos, D.S. Challenges and Opportunities in Edge Computing. In Proceedings of the 2016 IEEE International Conference on Smart Cloud (SmartCloud), Institute of Electrical and Electronics Engineers (IEEE), New York, NY, USA, 18–20 November 2016; pp. 20–26.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Rincon, J.A.; Guerra-Ojeda, S.; Carrascosa, C.; Julian, V. An IoT and Fog Computing-Based Monitoring System for Cardiovascular Patients with Automatic ECG Classification Using Deep Neural Networks. Sensors 2020, 20, 7353.

- Liu, B. Research on collaborative scheduling technology based on edge computing. Master’s Thesis, South China University of Technology, Guangzhou, China, 2019.

- Jiao, J. Cooperative Task Scheduling in Mobile Edge Computing System. Master’s Thesis, University of Electronic Science and Technology, Chengdu, China, 2018.

- Zhao, J.; Li, Q.; Gong, Y.; Zhang, K. Computation Offloading and Resource Allocation for Cloud Assisted Mobile Edge Computing in Vehicular Networks. IEEE Trans. Veh. Technol. 2019, 68, 7944–7956.

- Lyu, X.; Ni, W.; Tian, H.; Liu, R.P.; Wang, X.; Giannakis, G.B.; Paulraj, A. Optimal Schedule of Mobile Edge Computing for Internet of Things Using Partial Information. IEEE J. Sel. Areas Commun. 2017, 35, 2606–2615.

- Mao, Y.; Zhang, J.; Letaief, K.B. Joint Task Offloading Scheduling and Transmit Power Allocation for Mobile-Edge Computing Systems. In Proceedings of the 2017 IEEE Wireless Communications and Networking Conference (WCNC), Institute of Electrical and Electronics Engineers (IEEE), San Francisco, CA, USA, 19–22 March 2017; pp. 1–6.

- Tao, X.; Ota, K.; Dong, M.; Qi, H.; Li, K. Performance Guaranteed Computation Offloading for Mobile-Edge Cloud Computing. IEEE Wirel. Commun. Lett. 2017, 6, 774–777.

- Kim, Y.; Song, C.; Han, H.; Jung, H.; Kang, S. Collaborative Task Scheduling for IoT-Assisted Edge Computing. IEEE Access 2020, 8, 216593–216606.

- Wang, S.; Zafer, M.; Leung, K.K. Online placement of multi-component applications in edge computing environments. IEEE Access 2017, 5, 2514–2533.

- Zhao, T.; Zhou, S.; Guo, X.; Zhao, Y.; Niu, Z. A Cooperative Scheduling Scheme of Local Cloud and Internet Cloud for Delay-Aware Mobile Cloud Computing. In Proceedings of the 2015 IEEE Globecom Workshops (GC Wkshps), Institute of Electrical and Electronics Engineers (IEEE), San Diego, CA, USA, 6–10 December 2015; pp. 1–6.

- Kao, Y.-H.; Krishnamachari, B.; Ra, M.-R.; Bai, F. Hermes: Latency optimal task assignment for resource-constrained mobile computing. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Institute of Electrical and Electronics Engineers (IEEE), Kowloon, Hong Kong, 26 April–1 May 2015; pp. 1894–1902.

- Cuervo, E.; Balasubramanian, A.; Cho, D.; Wolman, A.; Saroiu, S.; Chandra, R.; Bahl, P. Maui: Making smartphones last longer with code offload. In Proceedings of the MobiSys, ACM, San Francisco, CA, USA, 15–18 June 2010; pp. 49–62.

- Munoz, O.; Pascual-Iserte, A.; Vidal, J. Optimization of Radio and Computational Resources for Energy Efficiency in Latency-Constrained Application Offloading. IEEE Trans. Veh. Technol. 2015, 64, 4738–4755.

- Yang, L.; Cao, J.; Tang, S.; Han, D.; Suri, N. Run Time Application Repartitioning in Dynamic Mobile Cloud Environments. IEEE Trans. Cloud Comput. 2014, 4, 336–348.

- Yang, L.; Cao, J.; Cheng, H.; Ji, Y. Multi-User Computation Partitioning for Latency Sensitive Mobile Cloud Applications. IEEE Trans. Comput. 2015, 64, 2253–2266.

- Liu, L.; Chang, Z.; Guo, X.; Ristaniemi, T. Multi-objective optimization for computation offloading in mobile-edge computing. In Proceedings of the 2017 IEEE Symposium on Computers and Communications (ISCC), Heraklion, Greece, 3–6 July 2017; pp. 832–837.

- Carson, K.; Thomason, J.; Wolski, R.; Krintz, C.; Mock, M. Mandrake: Implementing Durability for Edge Clouds. In Proceedings of the 2019 IEEE International Conference on Edge Computing (EDGE), Institute of Electrical and Electronics Engineers (IEEE), Milan, Italy, 8–13 July 2019; pp. 95–101.

- Liu, J.; Mao, Y.; Zhang, J.; Letaief, K.B. Delay-optimal computation task scheduling for mobile-edge computing systems. In Proceedings of the 2016 IEEE International Symposium on Information Theory (ISIT), Institute of Electrical and Electronics Engineers (IEEE), Barcelona, Spain, 10–15 July 2016; pp. 1451–1455.

- Lazar, A. The throughput time delay function of an M/M/1 queue (Corresp.). IEEE Trans. Inf. Theory 1983, 29, 914–918.

- Zhang, G.; Zhang, W.; Cao, Y.; Li, D.; Wang, L. Energy-Delay Tradeoff for Dynamic Offloading in Mobile-Edge Computing System with Energy Harvesting Devices. IEEE Trans. Ind. Inform. 2018, 14, 4642–4655.

- Chen, X. Decentralized Computation Offloading Game for Mobile Cloud Computing. IEEE Trans. Parallel Distrib. Syst. 2015, 26, 974–983.

- Chen, X.; Jiao, L.; Li, W.; Fu, X. Efficient Multi-User Computation Offloading for Mobile-Edge Cloud Computing. IEEE/ACM Trans. Netw. 2016, 24, 2795–2808.

- Yuan, H.; Bi, J.; Zhou, M.; Liu, Q.; Ammari, A.C. Biobjective Task Scheduling for Distributed Green Data Centers. IEEE Trans. Autom. Sci. Eng. 2020. Available online: https://ieeexplore.ieee.org/document/8951255 (accessed on 29 December 2020).

- Guo, X.; Liu, S.; Zhou, M.; Tian, G. Dual-Objective Program and Scatter Search for the Optimization of Disassembly Sequences Subject to Multiresource Constraints. IEEE Trans. Autom. Sci. Eng. 2018, 15, 1091–1103.

- Fu, Y.; Zhou, M.; Guo, X.; Qi, L. Scheduling Dual-Objective Stochastic Hybrid Flow Shop with Deteriorating Jobs via Bi-Population Evolutionary Algorithm. IEEE Trans. Syst. Man Cybern. Syst. 2020, 50, 5037–5048.

- Sheng, Z.; Pfersich, S.; Eldridge, A.; Zhou, J.; Tian, D.; Leung, V.C.M. Wireless acoustic sensor networks and edge computing for rapid acoustic monitoring. IEEE/CAA J. Autom. Sin. 2019, 6, 64–74.

- Yang, G.; Zhao, X.; Huang, J. Overview of task scheduling algorithms in cloud computing. Appl. Electron. Tech. J. 2019, 45, 13–17.

- Zhang, P.; Zhou, M.; Wang, X. An Intelligent Optimization Method for Optimal Virtual Machine Allocation in Cloud Data Centers. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1725–1735.

- Yuan, H.; Bi, J.; Zhou, M. Spatial Task Scheduling for Cost Minimization in Distributed Green Cloud Data Centers. IEEE Trans. Autom. Sci. Eng. 2018, 16, 729–740.

- Yuan, H.; Zhou, M.; Liu, Q.; Abusorrah, A. Fine-Grained Resource Provisioning and Task Scheduling for Heterogeneous Applications in Distributed Green Clouds. IEEE/CAA J. Autom. Sin. 2020, 7, 1380–1393.

- Alfakih, T.; Hassan, M.M.; Gumaei, A.; Savaglio, C.; Fortino, G. Task Offloading and Resource Allocation for Mobile Edge Computing by Deep Reinforcement Learning Based on SARSA. IEEE Access 2020, 8, 54074–54084.

- Yuchong, L.; Jigang, W.; Yalan, W.; Long, C. Task Scheduling in Mobile Edge Computing with Stochastic Requests and M/M/1 Servers. In Proceedings of the 2019 IEEE 21st International Conference on High Performance Computing and Communications, IEEE 17th International Conference on Smart City, IEEE 5th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Institute of Electrical and Electronics Engineers (IEEE), Zhangjiajie, China, 10–12 August 2019; pp. 2379–2382.

- Li, X.; Wan, J.; Dai, H.-N.; Imran, M.; Xia, M.; Celesti, A. A Hybrid Computing Solution and Resource Scheduling Strategy for Edge Computing in Smart Manufacturing. IEEE Trans. Ind. Inform. 2019, 15, 4225–4234.

- Zhang, Y.; Xie, M. A More Accurate Delay Model based Task Scheduling in Cellular Edge Computing Systems. In Proceedings of the 2019 IEEE 5th International Conference on Computer and Communications (ICCC), Institute of Electrical and Electronics Engineers (IEEE), Chengdu, China, 6–9 December 2019; pp. 72–76.

- Zhang, Y.; Du, P. Delay-Driven Computation Task Scheduling in Multi-Cell Cellular Edge Computing Systems. IEEE Access 2019, 7, 149156–149167.

- Zhang, W.; Zhang, Z.; Zeadally, S.; Chao, H.-C. Efficient Task Scheduling with Stochastic Delay Cost in Mobile Edge Computing. IEEE Commun. Lett. 2019, 23, 4–7.

- Yuan, H.; Zhou, M. Profit-Maximized Collaborative Computation Offloading and Resource Allocation in Distributed Cloud and Edge Computing Systems. IEEE Trans. Autom. Sci. Eng. 2020. Available online: https://ieeexplore.ieee.org/document/9140317 (accessed on 29 December 2020).

- Xu, J.; Li, X.; Ding, R.; Liu, X. Energy efficient multi-resource computation offloading strategy in mobile edge computing. CIMS 2019, 25, 954–961.

- Ning, Z.; Huang, J.; Wang, X.; Rodrigues, J.J.P.C.; Guo, L. Mobile Edge Computing-Enabled Internet of Vehicles: Toward Energy-Efficient Scheduling. IEEE Netw. 2019, 33, 198–205.

- Li, S.; Huang, J. Energy Efficient Resource Management and Task Scheduling for IoT Services in Edge Computing Paradigm. In Proceedings of the 2017 IEEE International Symposium on Parallel and Distributed Processing with Applications and 2017 IEEE International Conference on Ubiquitous Computing and Communications (ISPA/IUCC), Institute of Electrical and Electronics Engineers (IEEE), Guangzhou, China, 12–15 December 2017; pp. 846–851.

- Yoo, W.; Yang, W.; Chung, J. Energy Consumption Minimization of Smart Devices for Delay-Constrained Task Processing with Edge Computing. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 4–6 January 2020; pp. 1–3.

- Zhang, Q.; Lin, M.; Yang, L.T.; Chen, Z.; Khan, S.U.; Li, P. A Double Deep Q-Learning Model for Energy-Efficient Edge Scheduling. IEEE Trans. Serv. Comput. 2018, 12, 739–749.

- Yang, Y.; Ma, Y.; Xiang, W.; Gu, X.; Zhao, H. Joint Optimization of Energy Consumption and Packet Scheduling for Mobile Edge Computing in Cyber-Physical Networks. IEEE Access 2018, 6, 15576–15586.

- Bi, J.; Yuan, H.; Duanmu, S.; Zhou, M.C.; Abusorrah, A. Energy-optimized Partial Computation Offloading in Mobile Edge Computing with Genetic Simulated-annealing-based Particle Swarm Optimization. IEEE Internet Things J. 2020. Available online: https://ieeexplore.ieee.org/document/9197634 (accessed on 29 December 2020).

- Yu, H.; Wang, Q.; Guo, S. Energy-Efficient Task Offloading and Resource Scheduling for Mobile Edge Computing. In Proceedings of the 2018 IEEE International Conference on Networking, Architecture and Storage (NAS), Institute of Electrical and Electronics Engineers (IEEE), Chongqing, China, 11–14 October 2018; pp. 1–4.

- Mao, Y.; Zhang, J.; Song, S.H.; Letaief, K.B. Stochastic joint radio and computational resource management for multi-user mobile-edge computing systems. IEEE Trans. Wirel. Commun. 2017, 16, 5994–6009.

- Dinh, T.Q.; Tang, J.; La, Q.D.; Quek, T.Q.S. Offloading in Mobile Edge Computing: Task Allocation and Computational Frequency Scaling. IEEE Trans. Commun. 2017, 65, 1.

- Sen, T.; Shen, H. Machine Learning based Timeliness-Guaranteed and Energy-Efficient Task Assignment in Edge Computing Systems. In Proceedings of the 2019 IEEE 3rd International Conference on Fog and Edge Computing (ICFEC), Institute of Electrical and Electronics Engineers (IEEE), Larnaca, Cyprus, 14–17 May 2019; pp. 1–10.

- Sajjad, H.P.; Danniswara, K.; Al-Shishtawy, A.; Vlassov, V. SpanEdge: Towards Unifying Stream Processing over Central and Near-the-Edge Data Centers. In Proceedings of the 2016 IEEE/ACM Symposium on Edge Computing (SEC), Institute of Electrical and Electronics Engineers (IEEE), Washington, DC, USA, 27–28 October 2016; pp. 168–178.

- Dong, Z.; Liu, Y.; Zhou, H.; Xiao, X.; Gu, Y.; Zhang, L.; Liu, C. An energy-efficient offloading framework with predictable temporal correctness. In Proceedings of the SEC ’17: IEEE/ACM Symposium on Edge Computing Roc, San Jose, CA, USA, 12–14 October 2017; pp. 1–12.

- Ren, J.; Yu, G.; Cai, Y.; He, Y. Latency Optimization for Resource Allocation in Mobile-Edge Computation Offloading. IEEE Trans. Wirel. Commun. 2018, 17, 5506–5519.

- Wang, J.; Yue, Y.; Wang, R.; Yu, M.; Yu, J.; Liu, H.; Ying, X.; Yu, R. Energy-Efficient Admission of Delay-Sensitive Tasks for Multi-Mobile Edge Computing Servers. In Proceedings of the 2019 IEEE 25th International Conference on Parallel and Distributed Systems (ICPADS), Institute of Electrical and Electronics Engineers (IEEE), Tianjin, China, 4–6 December 2019; pp. 747–753.

- Cao, X.; Wang, F.; Xu, J.; Zhang, R.; Cui, S. Joint Computation and Communication Cooperation for Energy-Efficient Mobile Edge Computing. IEEE Internet Things J. 2019, 6, 4188–4200.

- Zhang, H.; Guo, J.; Yang, L.; Li, X.; Ji, H. Computation offloading considering fronthaul and backhaul in small-cell networks integrated with MEC. In Proceedings of the 2017 IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Institute of Electrical and Electronics Engineers (IEEE), Atlanta, GA, USA, 1–4 May 2017; pp. 115–120.

- Chen, M.; Hao, Y. Task Offloading for Mobile Edge Computing in Software Defined Ultra-Dense Network. IEEE J. Sel. Areas Commun. 2018, 36, 587–597.

- Sun, Y.; Zhou, S.; Xu, J. EMM: Energy-Aware Mobility Management for Mobile Edge Computing in Ultra Dense Networks. IEEE J. Sel. Areas Commun. 2017, 35, 2637–2646.

- Nan, Y.; Li, W.; Bao, W.; Delicato, F.C.; Pires, P.F.; Dou, Y.; Zomaya, A.Y. Adaptive Energy-Aware Computation Offloading for Cloud of Things Systems. IEEE Access 2017, 5, 23947–23957.

- Sahni, Y.; Cao, J.; Yang, L.; Ji, Y. Multi-Hop Offloading of Multiple DAG Tasks in Collaborative Edge Computing. IEEE Internet Things J. 2020. Available online: https://ieeexplore.ieee.org/document/9223724 (accessed on 29 December 2020).

- Sahni, Y.; Cao, J.; Yang, L.; Ji, Y. Multi-Hop Multi-Task Partial Computation Offloading in Collaborative Edge Computing. IEEE Trans. Parallel Distrib. Syst. 2020, 32, 1.

- Zhang, P.; Zhou, M.; Fortino, G. Security and trust issues in Fog computing: A survey. Futur. Gener. Comput. Syst. 2018, 88, 16–27.

- Wang, X.; Ning, Z.; Zhou, M.; Hu, X.; Wang, L.; Zhang, Y.; Yu, F.R.; Hu, B. Privacy-Preserving Content Dissemination for Vehicular Social Networks: Challenges and Solutions. IEEE Commun. Surv. Tutorials 2018, 21, 1314–1345.

- Huang, X.; Ye, D.; Yu, R.; Shu, L. Securing parked vehicle assisted fog computing with blockchain and optimal smart contract design. IEEE/CAA J. Autom. Sin. 2020, 7, 426–441.

- Zhang, Y.; Du, L.; Lewis, F.L. Stochastic DoS attack allocation against collaborative estimation in sensor networks. IEEE/CAA J. Autom. Sin. 2020, 7, 1–10.

- Zhang, P.; Zhou, M. Security and Trust in Blockchains: Architecture, Key Technologies, and Open Issues. IEEE Trans. Comput. Soc. Syst. 2020, 7, 790–801.

- Oevermann, J.; Weber, P.; Tretbar, S.H. Encapsulation of Capacitive Micromachined Ultrasonic Transducers (CMUTs) for the Acoustic Communication between Medical Implants. Sensors 2021, 21, 421.

- Deng, S.; Zhao, H.; Fang, W.; Yin, J.; Dustdar, S.; Zomaya, A.Y. Edge Intelligence: The Confluence of Edge Computing and Artificial Intelligence. IEEE Internet Things J. 2020, 7, 7457–7469.

- Fortino, G.; Messina, F.; Rosaci, D.; Sarne, G.M.L. ResIoT: An IoT social framework resilient to malicious activities. IEEE/CAA J. Autom. Sin. 2020, 7, 1263–1278.

- Wang, F.-Y. Parallel Intelligence: Belief and Prescription for Edge Emergence and Cloud Convergence in CPSS. IEEE Trans. Comput. Soc. Syst. 2020, 7, 1105–1110.

| Objectives | Studies | Features |
|---|---|---|
| Minimize delay time | [22,24,37,38,39,40,41] | |
| Minimize energy consumption | [30,42,43,44,45,46,47] | |
| Minimize both delay time and energy consumption | [48,51,52] | |

| Objective | Scheme | Optimal | Complexity | Where | Pros and Cons |
|---|---|---|---|---|---|
| Delay time | One-dimensional search algorithm [22] | Yes | Low | ES | Achieving the minimum average delay in various specific scenarios, but not in general ones. |
| Delay time | Greedy algorithm [24] | Yes | Medium | ES | Saving time by 20–30% compared with the proposed random algorithm, but only for a simple M/M/1 queuing system in a specific scenario. |
| Delay time | Customized TS algorithm [24] | Yes | Medium | ES | Efficient and suitable for scenarios with a large number of tasks, but only for a simple M/M/1 queuing system in a specific scenario. |
| Delay time | Lyapunov function-based task scheduling algorithm [38] | NP | Medium | ES | Being more accurate than the other delay models, and yielding a smaller delay than a traditional scheduling algorithm. |
| Delay time | Efficient conservative heterogeneous earliest-finish-time algorithm [40] | Unc | Medium | ES | Reducing the delays of task offloading, and considering the task execution order. |
| Delay time | SA-based migrating birds optimization procedure [41] | N-O | Medium | ES/CC | Providing a high-accuracy and fine-grained energy model by jointly considering central processing unit (CPU), memory, and bandwidth resource limits and the load balance requirements of all nodes, but only for a simple M/M/1 system. |
| Delay time | Sub-gradient algorithm [55] | Yes | Medium | ES | Providing a closed-form solution suitable for a specific scenario of partial compression offloading, but not for general scenarios; reducing the end-to-end latency. |
| Energy consumption | Energy efficient Multi-resource computation Offloading strategy task scheduling algorithm [30] | Yes | Medium | ES/CC | Comprehensively considering the workload conditions among mobile devices, edge servers, and cloud centers; having a stable convergence speed and reducing the power consumption effectively in a specific scenario. |
| Energy consumption | Ordinal Optimization-based Markov Decision Process [43] | Unc | High | ES/CC | Being effective and efficient; making a good tradeoff between delay time and energy consumption. |
| Energy consumption | Ben’s genetic algorithm [56] | N-O | Medium | ES | Effectively solving the problem of choosing which edge server to offload to, and minimizing the total energy consumption, but working only for a simple M/M/1 queue model. |
| Energy consumption | Algorithms for partial and binary offloading with energy consumption optimization [57] | Yes | High | ES | Joint computation and communication cooperation by considering both partial and binary offloading cases; reducing the power consumption effectively; obtaining the optimal solution in a partial offloading case. |
| Energy consumption | Artificial fish swarm algorithm [58] | Yes | Medium | ES | Guaranteeing global optimization, strong robustness, and fast convergence for a specific problem, and reducing the power consumption. |
| Delay/energy consumption | Multidimensional numerical method [17] | Yes | High | ES | Establishing the conditions under which total or no offloading is optimal; reducing the execution delay of applications and minimizing the total consumed energy, but failing to consider latency constraints. |
| Delay/energy consumption | Software defined task offloading/Task Placement Algorithm [59] | Yes | Medium | ES | Solving the computing resource allocation and task placement problems; reducing task duration and energy cost compared to random and uniform computation offloading schemes by considering the computation amount and data size of a task in a software-defined ultra-dense network. |
| Delay/energy consumption | Energy-aware mobility management algorithm [60] | N-O | High | ES | Making a good tradeoff between delay time and energy consumption; dealing with various practical deployment scenarios, including BSs dynamically switching on and off, but failing to consider the capability of a cloud server. |
| Delay/energy consumption | Lyapunov Optimization on Time and Energy Cost [61] | Yes | High | ES/CC | Taking full advantage of green energy without significantly increasing the response time, and having better optimization ability. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, S.; Li, Q.; Zhou, M.; Abusorrah, A. Recent Advances in Collaborative Scheduling of Computing Tasks in an Edge Computing Paradigm. Sensors 2021, 21, 779. https://doi.org/10.3390/s21030779