Efficient and Practical Correlation Filter Tracking

Abstract

1. Introduction

2. Related Works

2.1. Correlation Filter-Based Trackers

2.2. Long-Term Tracking

3. The Proposed Method

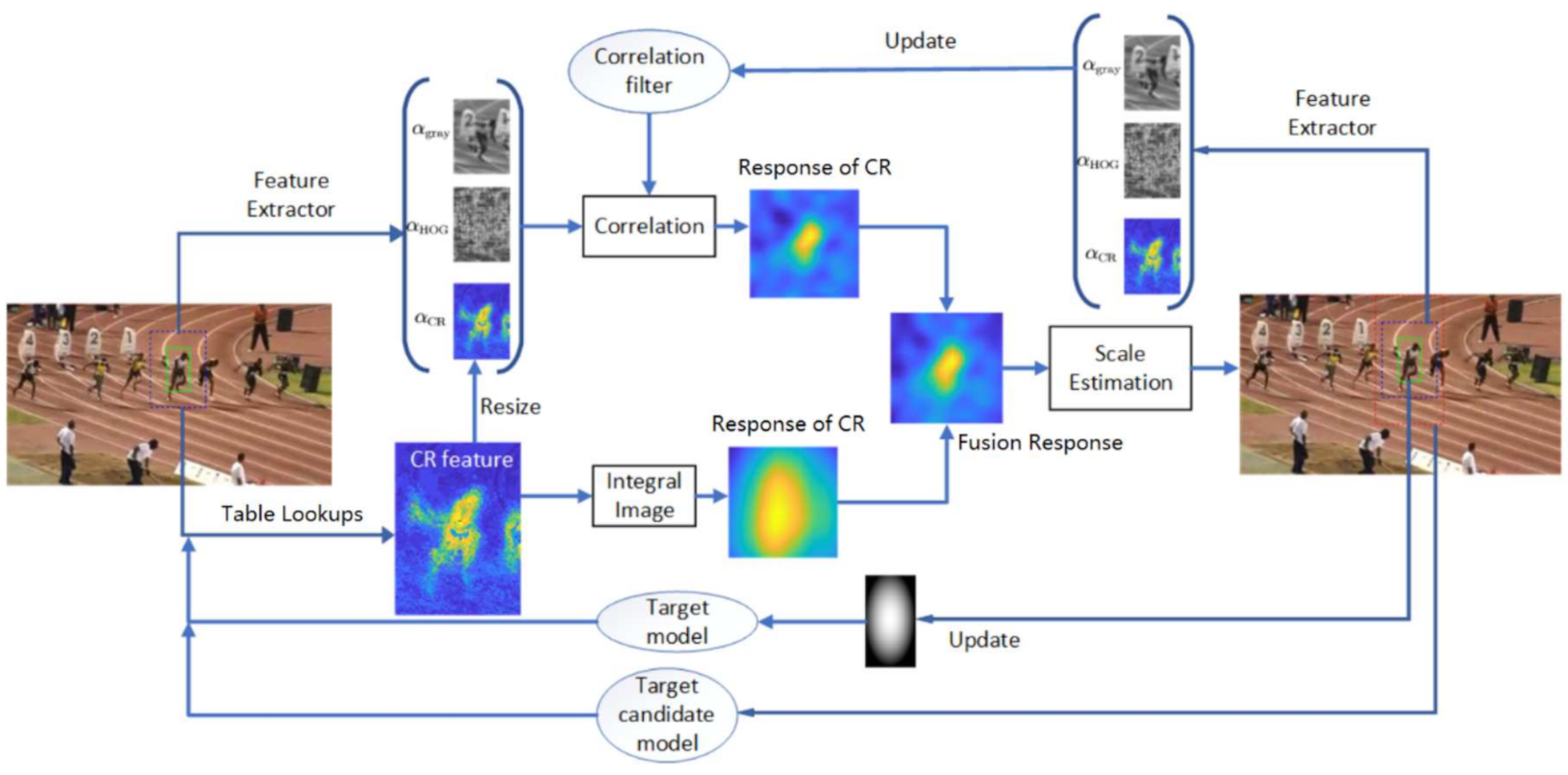

3.1. CRCF Tracker

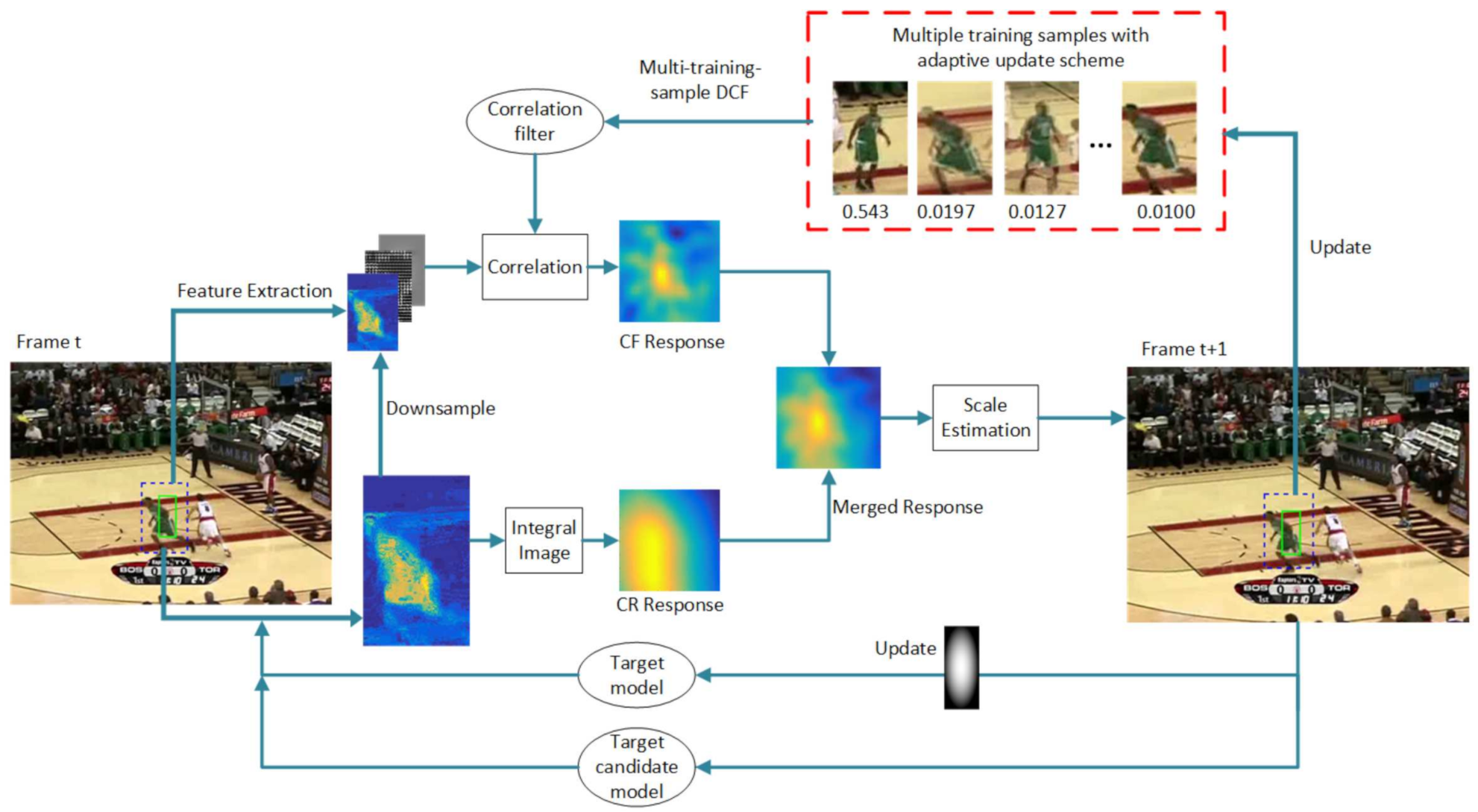

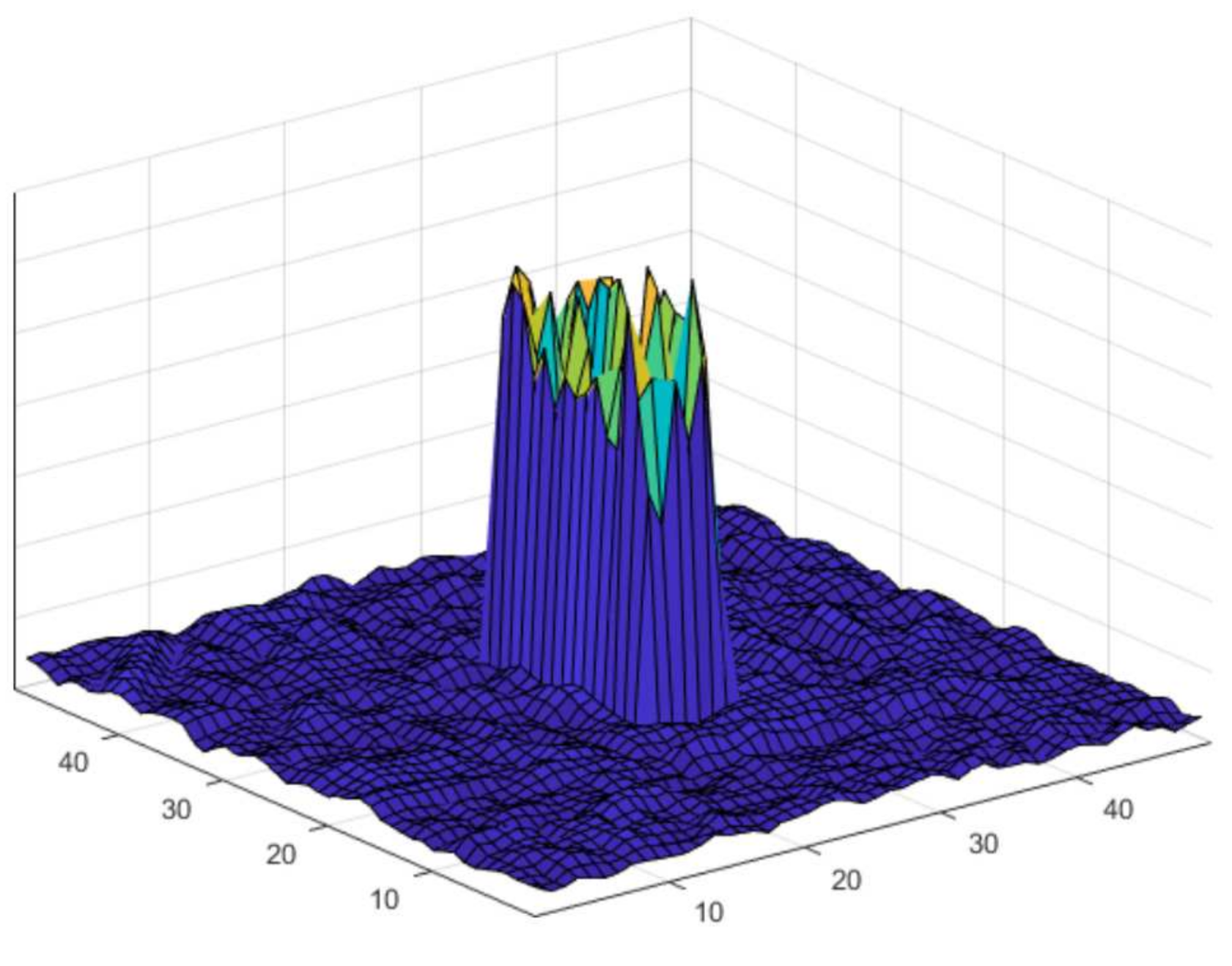

3.2. CRCF_ATU Tracker

- If the number of samples in the training set is less than N, the new sample is added into the training set.

- If the number of training samples has reached N and the minimum weight is below the forgetting threshold, the sample with the minimum weight is replaced by the new sample.

- If the number of training samples has reached N and no sample's weight is below the forgetting threshold, the two closest samples are merged into one sample.
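The three update rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `update_training_set`, the parameters `capacity` (playing the role of N) and `forget_threshold`, the use of Euclidean distance to find the closest pair, the weight-averaged merge, and the choice to include the new sample among the merge candidates are all hypothetical and do not come from the text.

```python
import numpy as np

def update_training_set(samples, weights, new_sample, new_weight,
                        capacity, forget_threshold):
    """Offer one new sample to a bounded training set; return (samples, weights)."""
    samples = [np.asarray(s, dtype=float) for s in samples]
    weights = list(weights)
    new_sample = np.asarray(new_sample, dtype=float)

    # Rule 1: fewer than N samples, so the new sample is simply added.
    if len(samples) < capacity:
        return samples + [new_sample], weights + [new_weight]

    # Rule 2: the set is full and the weakest sample is below the
    # forgetting threshold, so it is replaced by the new sample.
    i_min = int(np.argmin(weights))
    if weights[i_min] < forget_threshold:
        samples[i_min] = new_sample
        weights[i_min] = new_weight
        return samples, weights

    # Rule 3: the set is full and no sample is weak enough to forget,
    # so the two closest samples are merged into one.
    # Assumption: the new sample is a merge candidate, distance is
    # Euclidean, and the merge is a weight-averaged combination.
    pool = samples + [new_sample]
    pool_w = weights + [new_weight]
    flat = np.stack([p.ravel() for p in pool])
    dists = np.linalg.norm(flat[:, None, :] - flat[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # ignore self-distances
    a, b = (int(i) for i in np.unravel_index(np.argmin(dists), dists.shape))
    merged_w = pool_w[a] + pool_w[b]
    merged = (pool_w[a] * pool[a] + pool_w[b] * pool[b]) / merged_w
    keep = [k for k in range(len(pool)) if k not in (a, b)]
    return ([pool[k] for k in keep] + [merged],
            [pool_w[k] for k in keep] + [merged_w])
```

In this sketch the set size never exceeds `capacity`, and the merge in the third rule preserves the total weight of the pool. A real tracker would compare feature maps rather than short vectors and might use a different similarity measure.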

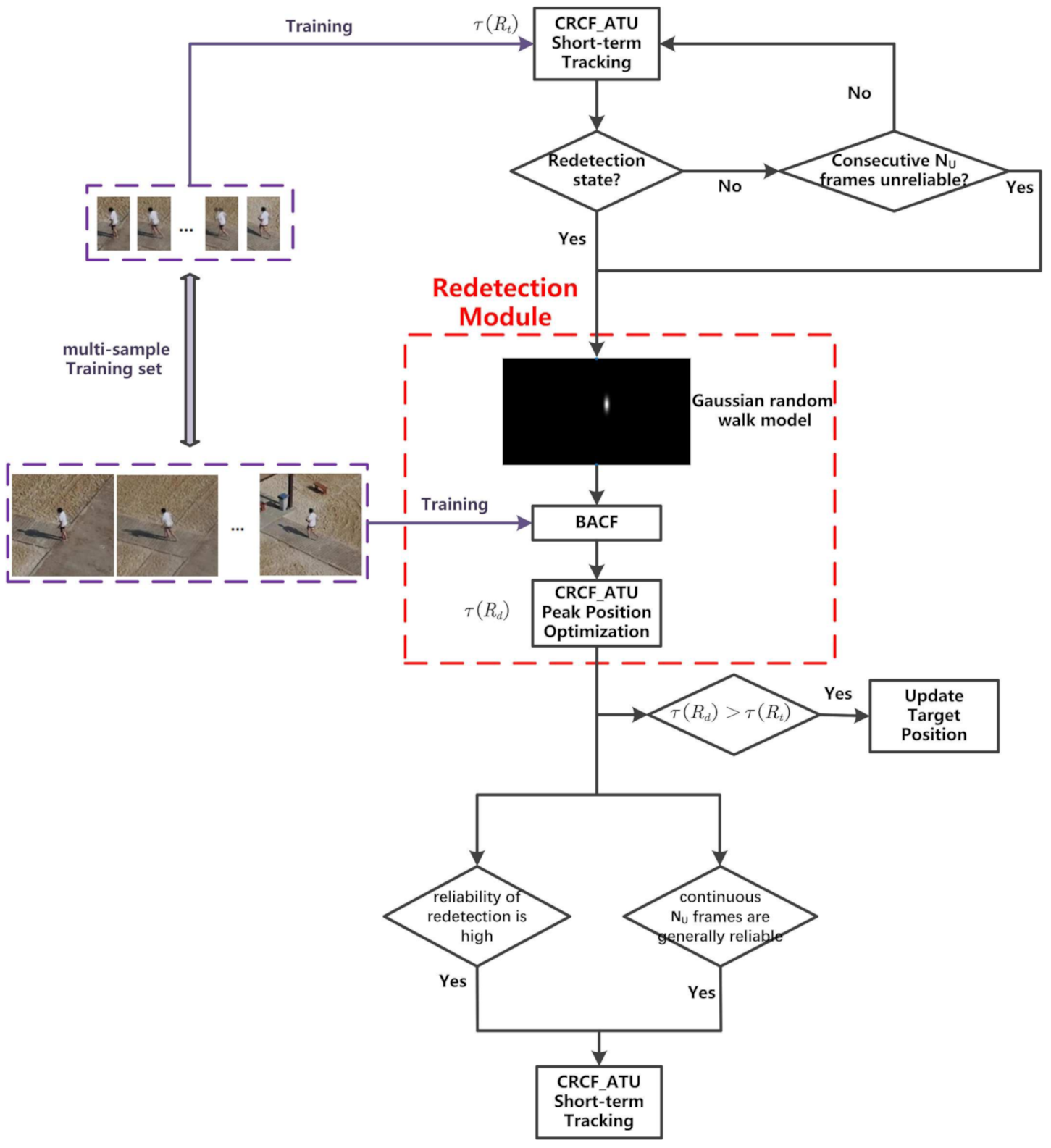

3.3. Expanding to Long-Term Tracking

4. Experiments

4.1. Experimental Setup

4.2. Implementation Details

4.3. Comparative Evaluation of Update Mechanism

4.4. Performance Verification

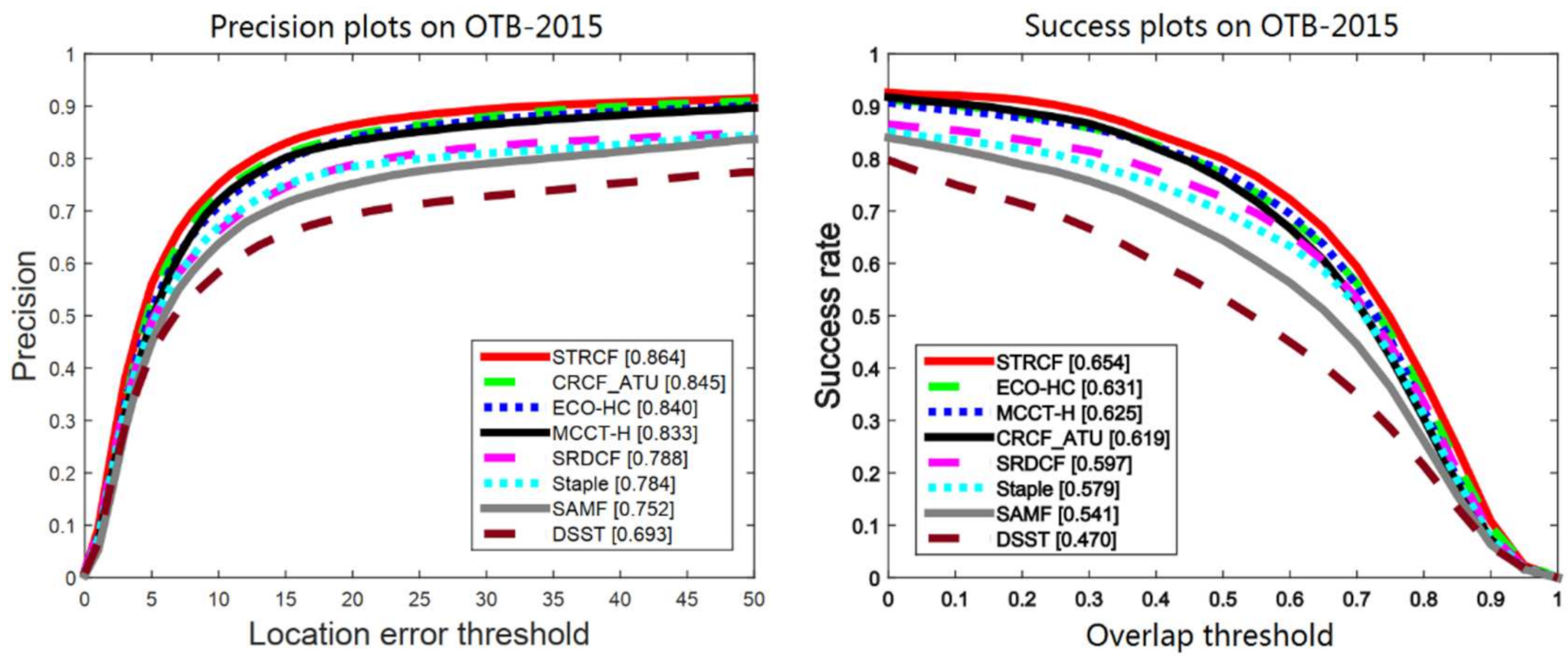

4.4.1. Results on OTB-2015

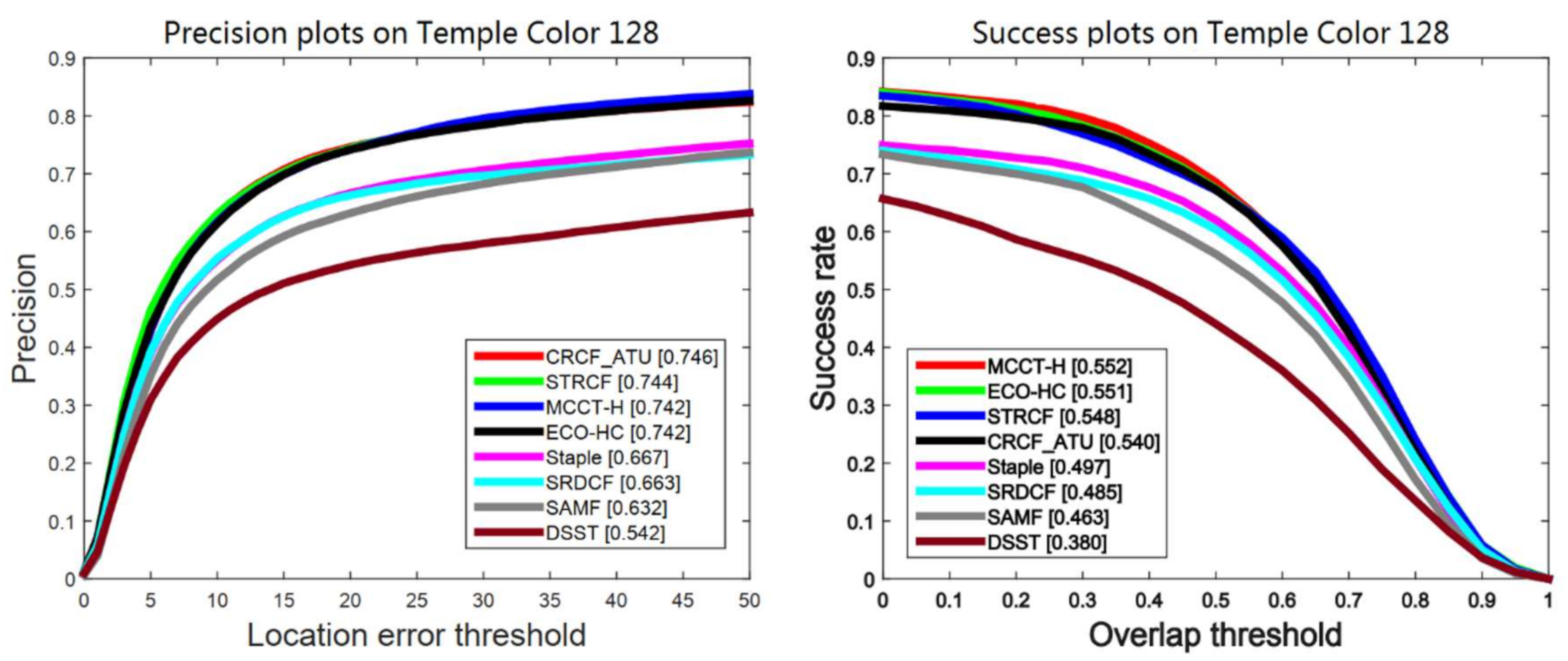

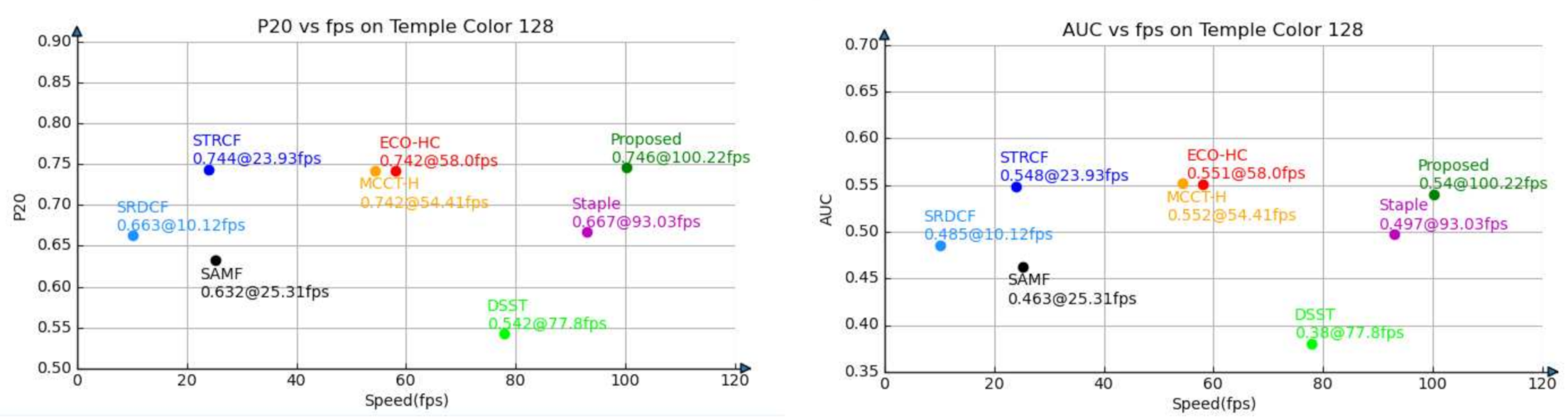

4.4.2. Results on Temple Color 128

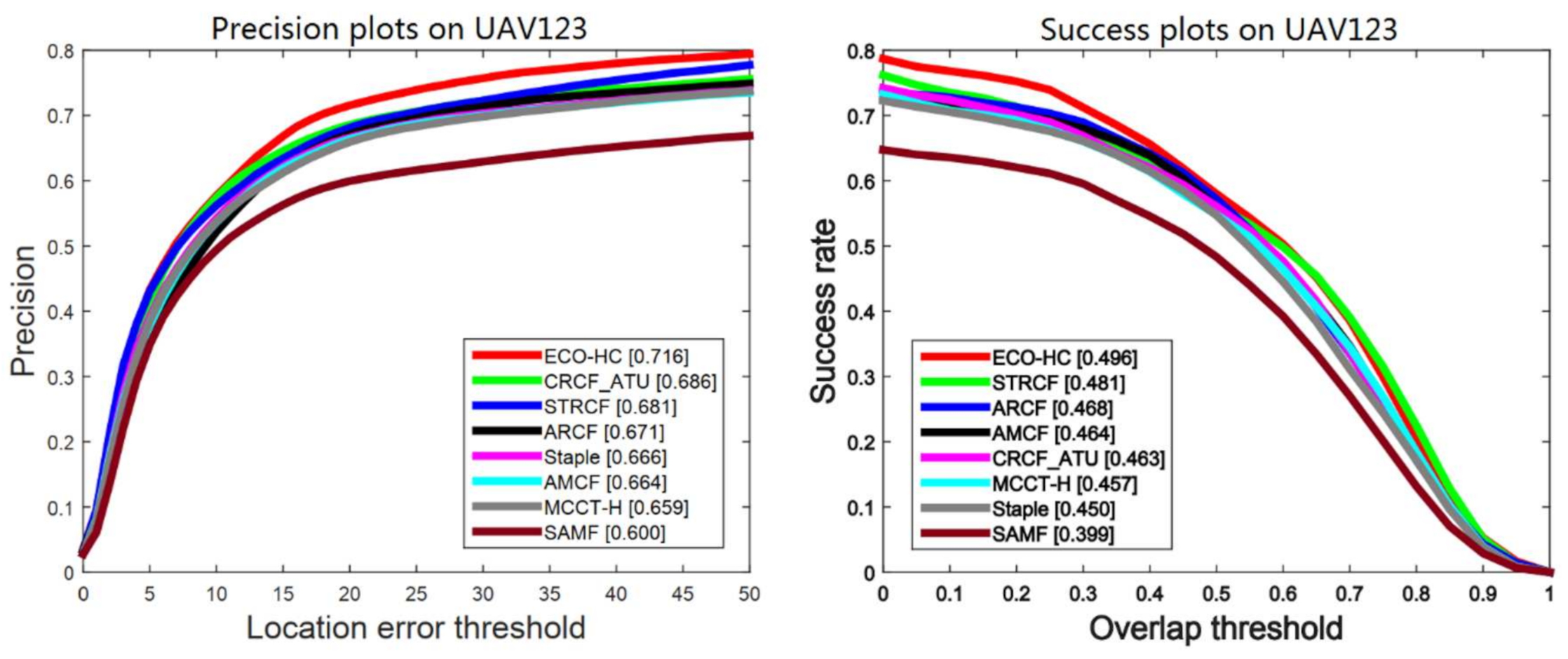

4.4.3. Results on UAV123

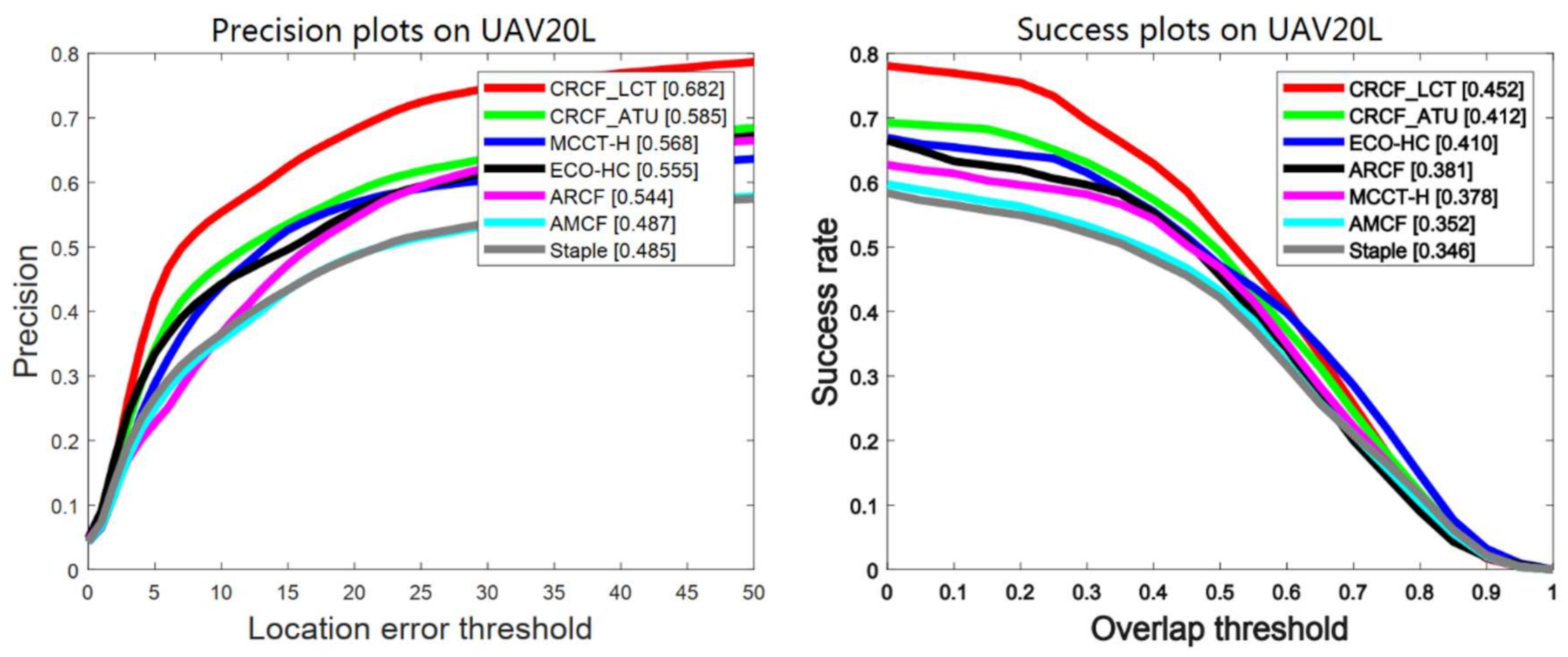

4.4.4. Long-Term Tracking Results on UAV20L

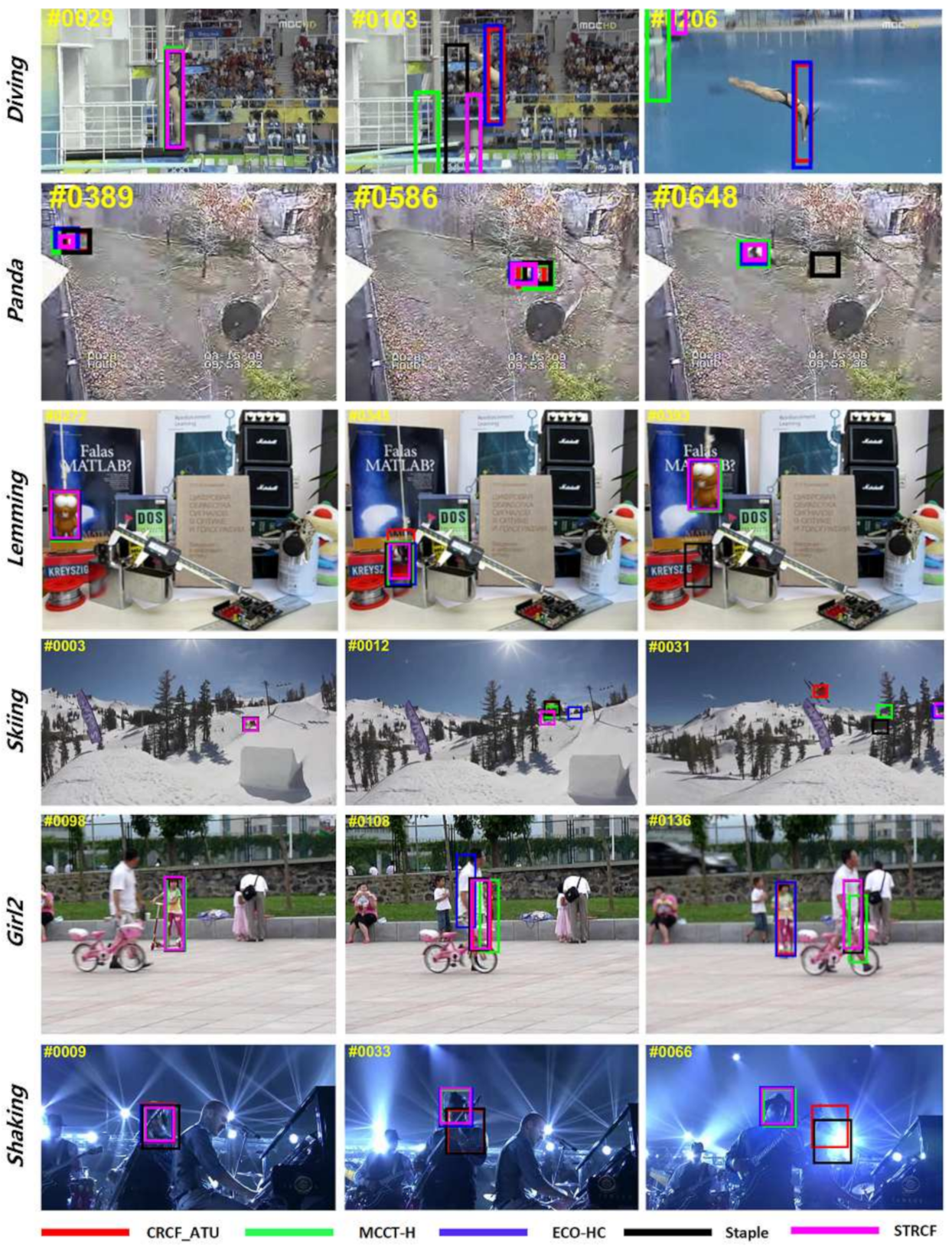

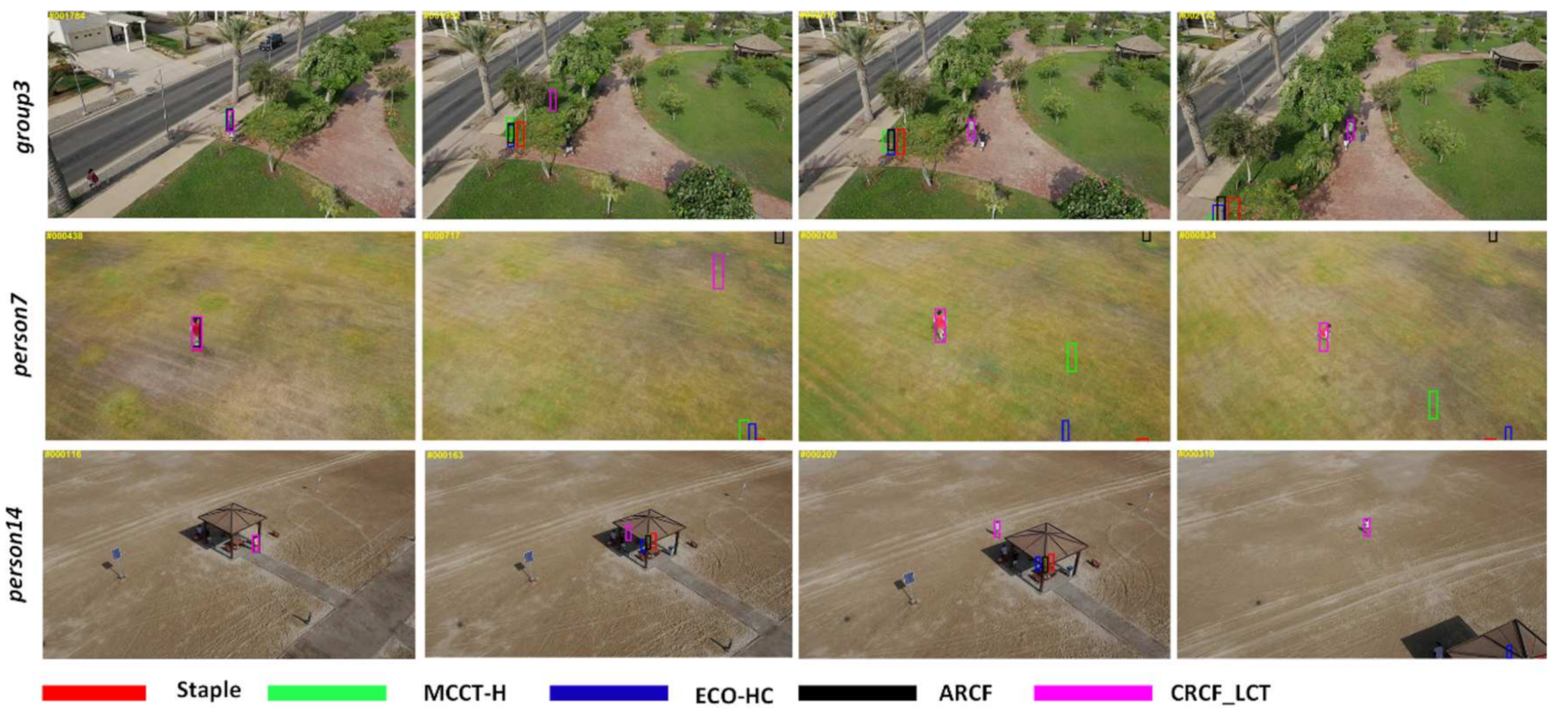

4.4.5. Qualitative Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | P20 | AUC | FPS |
|---|---|---|---|
| CRCF+GMM | 0.825 | 0.608 | 90.32 |
| CRCF_ATU | 0.845 | 0.619 | 109.48 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, C.; Jiang, S.; Li, S.; Lan, X. Efficient and Practical Correlation Filter Tracking. Sensors 2021, 21, 790. https://doi.org/10.3390/s21030790
