Abstract

In the time series classification domain, shapelets are subsequences that are discriminative of a certain class. It has been shown that classifiers are able to achieve state-of-the-art results by taking the distances from the input time series to different discriminative shapelets as the input. Additionally, these shapelets can be visualized and are therefore interpretable, making them appealing in critical domains, where longitudinal data are ubiquitous. In this study, a new paradigm for shapelet discovery is proposed, which is based on evolutionary computation. The advantages of the proposed approach are that: (i) it is gradient-free, which could allow escaping from local optima more easily and supports non-differentiable objectives; (ii) no brute-force search is required, making the algorithm scalable; (iii) the total number of shapelets and the length of each of these shapelets are evolved jointly with the shapelets themselves, alleviating the need to specify these beforehand; (iv) entire sets are evaluated at once as opposed to single shapelets, which results in smaller final sets with fewer similar shapelets that achieve similar predictive performance; and (v) the discovered shapelets do not need to be a subsequence of the input time series. We present the results of the experiments, which validate the enumerated advantages.

1. Introduction

1.1. Background

Due to the rise of the Internet-of-Things (IoT), sensors are being adopted on a large scale in all domains, including critical domains such as health care. These sensors produce data of a longitudinal form, i.e., time series. Time series differ from classical tabular data in that a temporal dependency is present: each value in the time series correlates with its neighboring values. One important task that emerges from this type of data is the classification of time series in their entirety. A model able to solve such a task can be applied in a wide variety of applications, such as distinguishing between normal brain activity and epileptic activity [1], determining different types of physical activity [2], or profiling electronic appliance usage in smart homes [3]. Often, the largest discriminative power can be found in smaller subsequences of these time series, called shapelets. Shapelets semantically represent intelligence on how to discriminate between the different targets of a time series dataset [4]. We can use a set of shapelets and the corresponding distances from each of these shapelets to each of the input time series as features for a classifier. It has been shown that such an approach outperforms, on almost every dataset, a nearest neighbor search based on dynamic time warping distance, which had long been deemed the state of the art [5]. Moreover, shapelets are interpretable since they can easily be visualized and traced back to the input signal, making them very interesting for decision support applications in critical domains, such as the medical domain. In these critical domains, it is of vital importance that a corresponding explanation can be provided alongside a prediction, since the wrong decision can have a significant negative impact.

1.2. Related Work

Shapelet discovery was initially proposed by Ye and Keogh [6]. Unfortunately, the initial algorithm quickly becomes intractable, even for smaller datasets, because of its large computational complexity ($O(N^2 M^4)$, with N the number of time series and M the length of the smallest time series in the dataset). This complexity was improved two years later, when Mueen et al. [7] proposed an extension to this algorithm that makes use of caching for faster distance computation and a better upper bound for candidate pruning. These improvements reduce the complexity to $O(N^2 M^3)$, but have a larger memory footprint. Rakthanmanon et al. [8] proposed an approximate algorithm, called Fast Shapelets (fs), that finds a suboptimal shapelet in $O(N M^2)$ by first transforming the time series in the original set to Symbolic Aggregate approXimation (sax) representations [9]. Although no guarantee can be made that the discovered shapelet is the one that maximizes a pre-defined metric, they showed empirically, on 32 datasets, that the approach achieves very similar classification performance.

All the aforementioned techniques search for a single shapelet that optimizes a certain metric, such as information gain. Often, one shapelet is not enough to achieve good predictive performance, especially for multi-class classification problems. Therefore, the shapelet discovery is applied in a recursive fashion in order to construct a decision tree. Lines et al. [10] proposed Shapelet Transform (st), which performs only a single pass through the time series dataset and maintains an ordered list of shapelet candidates, ranked by a metric, and then finally takes the top-k from this list in order to construct features. While the algorithm only performs a single pass, the computational complexity still remains $O(N^2 M^4)$, which makes the technique intractable for larger datasets. Extensions to this technique have been proposed in the subsequent years, which drastically improved the performance of the technique [11,12]. Lines et al. [10] compared their technique to 36 other algorithms for time series classification on 85 datasets [13], which showed that their technique is one of the top-performing algorithms for time series classification and the best-performing shapelet extraction technique in terms of predictive performance.

Grabocka et al. [4] proposed a technique where shapelets are learned through gradient descent, in which the linear separability of the classes after transformation to the distance space is optimized, called Learning Time Series Shapelets (lts). The technique is competitive with st, while not requiring a brute-force search, making it tractable for larger datasets. Unfortunately, lts requires the user to specify the number of shapelets and the length of each of these shapelets, which can result in a rather time-intensive hyper-parameter tuning process in order to achieve a good predictive performance. Three extensions of lts, which improve the computational runtime of the algorithm, have been proposed in the subsequent years. Unfortunately, in order to achieve these speedups, predictive performance had to be sacrificed. A first extension is called Scalable Discovery (sd) [14]. It is the fastest of the three extensions, improving the runtime by two to three orders of magnitude, but at the cost of having a worse predictive performance than lts on almost every tested dataset. Second, in 2015, Ultra-Fast Shapelets (ufs) [15] was proposed. It is a better compromise of runtime and predictive performance, as it is an order of magnitude slower than sd, but sacrifices less of its predictive performance. The final and most recent extension is called the Fused LAsso Generalized eigenvector method (flag) [16]. It is the most notable of the three extensions as it has runtimes competitive with sd, while being only slightly worse than lts in terms of predictive performance.

Several enhancements to shapelet discovery have recently been investigated as well. Wang et al. [17] investigated adversarial regularization in order to enhance the interpretability of the discovered shapelets. Guillemé et al. [18] investigated the added value of the location information of the discovered shapelets on top of distance-based information.

Most of the prior work regarding shapelet discovery was performed using univariate data. However, many real-world datasets are multi-variate. Extending shapelet discovery algorithms to deal with multi-variate data has therefore been gaining increasing interest in the time series analysis domain. Most of the existing works extend the gradient-based framework of lts [19,20] or perform a brute-force search with sampling [21].

1.3. Our Contribution

This paper is the first to investigate the feasibility of an evolutionary algorithm in order to discover a set of shapelets from a collection of labeled time series. The aim of the proposed algorithm, GENetic DIscovery of Shapelets (gendis), is to achieve state-of-the-art predictive performances similar to the best-performing algorithm, st, with a smaller number of shapelets, while having a low computational complexity similar to lts.

gendis tries to retain as many of the positive properties from lts as possible such as its scalable computational complexity, the fact that entire sets of shapelets are discovered as opposed to single shapelets, and that it can discover shapelets outside the original dataset. We demonstrate the added value of these two final properties through intuitive experiments in Section 3.2 and Section 3.3, respectively. Moreover, gendis has some benefits over lts. First, genetic algorithms are gradient-free, allowing for any objective function and an easier escape from local optima. Second, the total amount of shapelets and the length of each of these shapelets do not need to be defined prior to the discovery, alleviating the need to tune these, which could be computationally expensive and may require domain knowledge. Finally, we show by a thorough comparison, in Section 3.5, that gendis empirically outperforms lts in terms of predictive performance.

2. Materials and Methods

We first explain some general concepts from the time series analysis and shapelet discovery domain, on which we will then build further to elaborate our proposed algorithm, gendis.

2.1. Time Series Matrix and Label Vector

The input to a shapelet discovery algorithm is a collection of N time series. For ease of notation, we assume that the time series are synchronized and have a fixed length of M, resulting in an input matrix $T \in \mathbb{R}^{N \times M}$. It is important to note that gendis could work equally well with variable-length time series. In that case, M would be equal to the minimal time series length in the collection. Since shapelet discovery is a supervised approach, we also require a label vector $y$ of length N, with each element $y_i \in \{1, \ldots, C\}$, where C is the number of classes and $y_i$ corresponds to the label of the i-th time series in $T$.

2.2. Shapelets and Shapelet Sets

Shapelets are small time series that semantically represent intelligence on how to discriminate between the different targets of a time series dataset. In other words, they are very similar to subsequences from time series of certain (groups of) classes, while being dissimilar to subsequences of time series of other classes. The output of a shapelet discovery algorithm is a collection of K shapelets, $S = \{s_1, \ldots, s_K\}$, called a shapelet set. In gendis, K and the length of each shapelet do not need to be defined beforehand, and each shapelet can have a variable length, smaller than M. These K shapelets can then be used to extract features for the time series, as we will explain subsequently.

2.3. Distance Matrix Calculation

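Given an input matrix $T$ and a shapelet set $S$, we can construct a pairwise distance matrix $D(T, S) \in \mathbb{R}^{N \times K}$:

$$D(T, S) = \begin{bmatrix} dist(s_1, t_1) & \cdots & dist(s_K, t_1) \\ \vdots & \ddots & \vdots \\ dist(s_1, t_N) & \cdots & dist(s_K, t_N) \end{bmatrix}$$

The distance matrix, $D(T, S)$, is constructed by calculating the distance between each (t, s) pair, where $t \in T$ is an input time series and $s \in S$ a shapelet from the candidate shapelet set. This matrix can then be fed to a machine learning classifier. Often, $K \ll M$, such that we effectively reduce the dimension of our data. In order to calculate the distance from a shapelet s in $S$ to a time series t from $T$, we slide the shapelet across the time series and take the minimum distance:

$$dist(s, t) = \min_{1 \le i \le |t| - |s| + 1} d(s, t[i : i + |s|])$$

with $d(\cdot, \cdot)$ a distance metric, such as the Euclidean distance, and $t[i : i + |s|]$ a slice from t starting at index i and having the same length as s.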
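The sliding-window distance and the resulting distance matrix can be sketched in a few lines of plain Python. This is a minimal illustration, assuming the Euclidean distance as the metric; the function names `sliding_distance` and `distance_matrix` are hypothetical and are not taken from the gendis implementation.

```python
import math

def sliding_distance(shapelet, series):
    """Minimum Euclidean distance between `shapelet` and any equally long slice of `series`."""
    n, m = len(shapelet), len(series)
    if n > m:
        raise ValueError("shapelet must not be longer than the time series")
    best = math.inf
    for start in range(m - n + 1):
        window = series[start:start + n]
        d = math.sqrt(sum((a - b) ** 2 for a, b in zip(shapelet, window)))
        best = min(best, d)
    return best

def distance_matrix(series_collection, shapelet_set):
    """N x K matrix: row i holds the distances from series i to every shapelet."""
    return [[sliding_distance(s, t) for s in shapelet_set] for t in series_collection]

# Two toy time series (N = 2, M = 6) and two shapelets of different lengths (K = 2).
T = [[0, 0, 1, 2, 1, 0], [0, 0, 0, 0, 0, 0]]
S = [[1, 2, 1], [0, 0]]
D = distance_matrix(T, S)
```

Each row of `D` is the feature vector of one time series; a shapelet that occurs exactly in a series yields a distance of zero for that series.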

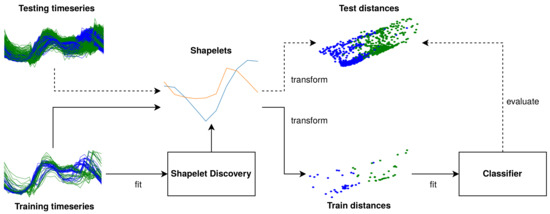

2.4. Shapelet Set Discovery

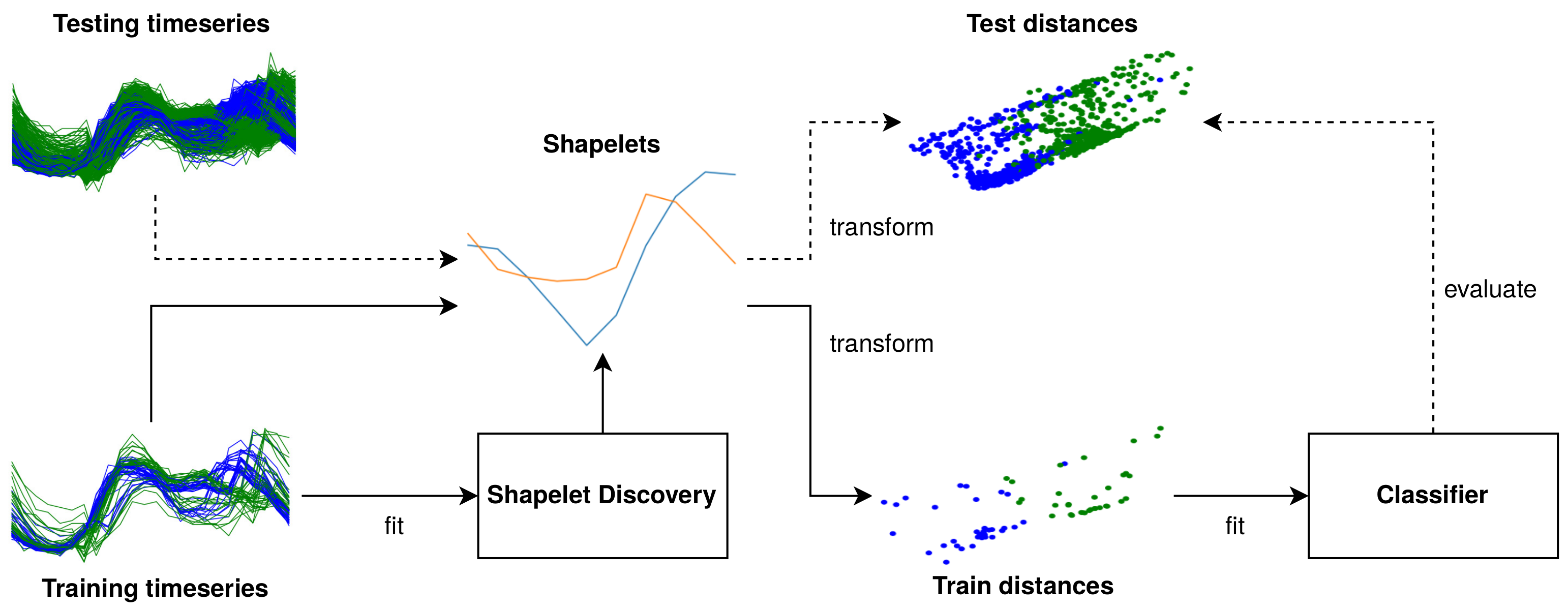

A conceptual overview of a shapelet discovery algorithm is depicted in Figure 1. The discovery algorithm tries to find a set of shapelets, $S$, that produces a distance matrix, $D(T, S)$, that minimizes the loss function of the machine learning technique to which it is fed, given the ground truth, $y$.

Figure 1.

A schematic overview of shapelet discovery.

Once shapelets are found, these can be used to transform the time series into features that correspond to distances from each of the time series to the shapelets in the set. These features can then be fed to a classifier. It should be noted that both the shapelet discovery and the classification component can be trained jointly end-to-end. However, in gendis, these components are decoupled.

2.5. Genetic Discovery of Interpretable Shapelets

In this paper, we propose a genetic algorithm that evolves a set of variable-length shapelets, $S$, which produces a distance matrix $D(T, S)$, based on a collection of time series $T$, that results in optimal predictive performance when provided to a machine learning classifier. The intuition behind the approach is similar to lts, which we mentioned in Section 1.2, but the advantage is that both the size of $S$ (K) and the length of each shapelet are evolved jointly, alleviating the need to specify the number of shapelets and the length of each shapelet prior to the extraction. Moreover, the technique is gradient-free, which allows for non-differentiable objectives and escaping local optima more easily.

2.5.1. Conceptual Overview

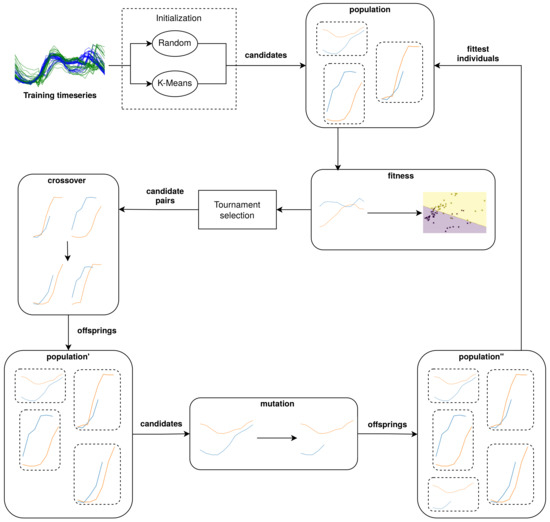

The building blocks of a genetic algorithm consist of at least a crossover, mutation, and selection operator [22]. Additionally, we seed, or initialize, the algorithm with specific candidates instead of completely random candidates [23] and apply elitism [24] to make sure the fittest candidate set is never discarded from the population and never experiences mutations that reduce its fitness.

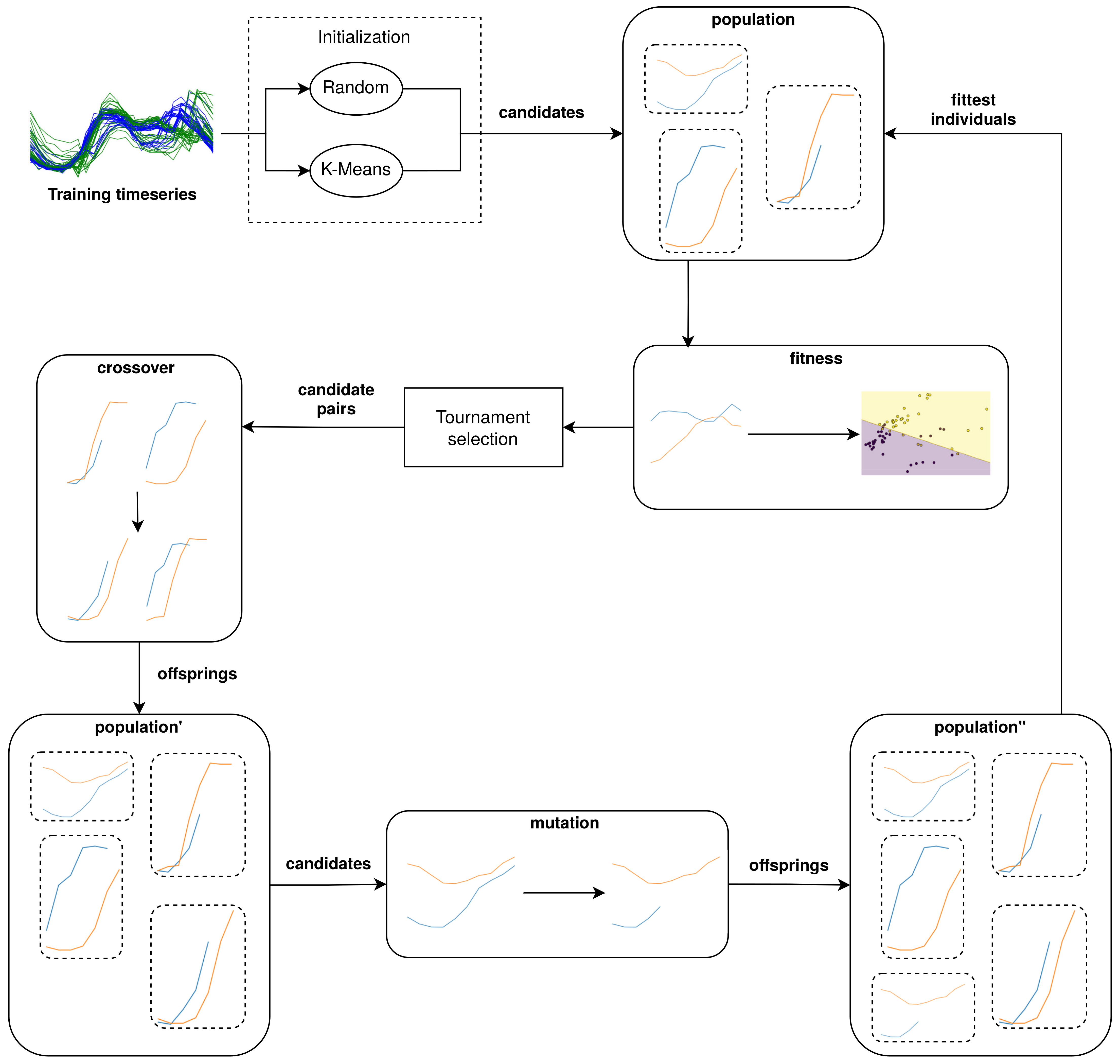

A conceptual overview of gendis is provided in Figure 2. Initially, a population is constructed through initialization operators. Afterwards, the individuals in the population are evolved iteratively to increase their quality. An iteration, or generation, of the algorithm first consists of calculating the fitness values for new individuals. When all fitness values are known, tournament selection is applied to select pairs of individuals or candidates. These pairs then undergo a crossover to generate new offspring, which are added to the population. Then, each of the individuals in the population undergoes mutations with a certain probability. Finally, the fittest individuals are selected from the population, and this process repeats until convergence or until the stop criteria are met. Each of these operations is elaborated upon in the following subsections.

Figure 2.

A conceptual overview of GENetic DIscovery of Shapelets (gendis).
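The order of the steps in one generation can be sketched as a generic loop. This is an illustrative skeleton only, not the gendis implementation: the function `evolve` and its parameters are hypothetical, tournament selection is simplified to picking the best of a random sample, early stopping is omitted, and the demonstration uses a toy bit-list problem rather than shapelet sets.

```python
import random

def evolve(population, fitness, crossover, mutate, generations, rng, tournament_size=3):
    """Run a generic evolutionary loop and return the fittest individual found."""
    size = len(population)
    for _ in range(generations):
        scores = [fitness(ind) for ind in population]

        def pick_parent():
            # Simplified tournament: best of a uniform random sample.
            contenders = rng.sample(range(size), tournament_size)
            return population[max(contenders, key=lambda i: scores[i])]

        # Crossover: each selected pair contributes two offspring.
        offspring = []
        for _ in range(size // 2):
            offspring.extend(crossover(pick_parent(), pick_parent(), rng))

        # Mutation, with an untouched copy of the fittest individual kept (elitism).
        elite = population[max(range(size), key=lambda i: scores[i])]
        candidates = [mutate(ind, rng) for ind in population + offspring]
        candidates.append(elite)

        # Survivor selection: keep the fittest individuals.
        candidates.sort(key=fitness, reverse=True)
        population = candidates[:size]
    return max(population, key=fitness)

# Toy problem (not shapelets): maximize the number of ones in a bit list.
def toy_crossover(a, b, rng):
    cut = rng.randint(1, len(a) - 1)
    return a[:cut] + b[cut:], b[:cut] + a[cut:]

def toy_mutate(ind, rng, p=0.1):
    return [bit ^ 1 if rng.random() < p else bit for bit in ind]

rng = random.Random(0)
start = [[rng.randint(0, 1) for _ in range(12)] for _ in range(10)]
best = evolve(start, sum, toy_crossover, toy_mutate, generations=20, rng=rng)
```

Because the elite is re-inserted unmutated before survivor selection, the best fitness in the population can never decrease from one generation to the next.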

2.5.2. Initialization

In order to seed the algorithm with initial candidate sets, we generate P candidate sets containing K shapelets, with K a random integer picked uniformly from , W a hyper-parameter of the algorithm, and P the population size. K is randomly chosen for each individual, and the default value of W is set to be . These two boundaries are chosen to be low in order to start with smaller candidate sets and grow them incrementally. This is beneficial for both the size of the final shapelet set, as well as the runtime of each generation. For each candidate set we initialize, we randomly pick one of the following two strategies with equal probability:

Initialization 1.

Apply K-means on a set of random subseries of a fixed random length sampled from $T$. The K resulting centroids form a candidate set.

Initialization 2.

Generate K candidates of random lengths by sampling them from $T$.

The maximum shapelet length is a hyper-parameter that limits the length of the discovered shapelets, in order to combat overfitting. While Initialization 1 results in strong initial individuals, Initialization 2 is included in order to increase the population diversity and to decrease the time required to initialize the entire population.
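Initialization 2 can be sketched as follows. This is a minimal illustration under stated assumptions: the names `random_shapelet` and `random_shapelet_set`, the minimum shapelet length of 2, and the explicit `min_shapelets` and `max_shapelets` bounds are hypothetical choices made for the example, not values taken from gendis.

```python
import random

def random_shapelet(series_collection, max_len, rng, min_len=2):
    """Copy a random slice of a random time series (min_len is an assumed lower bound)."""
    series = rng.choice(series_collection)
    length = rng.randint(min_len, min(max_len, len(series)))
    start = rng.randint(0, len(series) - length)
    return list(series[start:start + length])

def random_shapelet_set(series_collection, min_shapelets, max_shapelets, max_len, rng):
    """Build one individual: K random subseries, with K drawn uniformly from the given bounds."""
    k = rng.randint(min_shapelets, max_shapelets)
    return [random_shapelet(series_collection, max_len, rng) for _ in range(k)]

rng = random.Random(0)
T = [[0.0, 0.1, 0.9, 1.0, 0.2, 0.0], [0.0, 0.0, 0.1, 0.0, 0.1, 0.0]]
population = [random_shapelet_set(T, 1, 3, max_len=4, rng=rng) for _ in range(5)]
```

Every shapelet produced this way is an exact subseries of the data, which is why this strategy is cheap and adds diversity, whereas K-means centroids (Initialization 1) are averages that generally do not occur verbatim in any series.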

2.5.3. Fitness

One of the most important components of a genetic algorithm is its fitness function. In order to determine the fitness of a candidate set $S$, we first construct $D(T, S)$, which is the distance matrix obtained by calculating the distances between $S$ and $T$. The goal of our genetic algorithm is to find an $S$ that produces a $D(T, S)$ that results in the best predictive performance when provided to a classifier. We measure the predictive performance directly by means of an error function defined on the predictions of a logistic regression model and the provided label vector $y$. When two candidate shapelet sets produce the same error, the set with the lowest complexity is deemed to be the fittest. The complexity of a shapelet set is expressed as the sum of shapelet lengths ($\sum_{s \in S} |s|$).
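The tie-breaking rule can be expressed compactly as a lexicographic comparison. The sketch below assumes the classifier error has already been computed elsewhere and is passed in as a number; the function name `fitness` and the tuple encoding are illustrative choices, not the representation used in gendis.

```python
def fitness(error, shapelet_set):
    """Return a tuple where a larger value means a fitter candidate set.

    Lower error wins first; among equal errors, lower complexity
    (sum of shapelet lengths) wins.
    """
    complexity = sum(len(s) for s in shapelet_set)
    return (-error, -complexity)

small_set = [[0.1, 0.5, 0.2]]
large_set = [[0.1, 0.5, 0.2], [0.9, 0.8]]

# Same error: the less complex set is considered fitter.
tie_winner = max([(0.30, small_set), (0.30, large_set)], key=lambda c: fitness(*c))[1]
# Different errors: the error dominates, regardless of complexity.
error_winner = max([(0.30, small_set), (0.20, large_set)], key=lambda c: fitness(*c))[1]
```

Python compares tuples element by element, so the complexity term only matters when the errors are exactly equal.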

The fitness calculation is the bottleneck of the algorithm. Calculating the distance of a shapelet with length L to a time series of length M requires $L \cdot (M - L + 1)$ pointwise comparisons. Thus, in the worst case, $O(M^2)$ operations need to be performed per time series, resulting in a computational complexity of $O(N M^2)$ for a single shapelet. We apply these distance calculations to each individual representing a collection of shapelets from our population, in each generation. Therefore, the complexity of the entire algorithm is equal to $O(G \cdot P \cdot K \cdot N \cdot M^2)$, with G the total number of generations, P the population size, and K the (maximum) number of shapelets in the bag each individual of the population represents.
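As a hedged worked derivation of the worst case, assume that every one of the $M - L + 1$ alignments of the shapelet is compared in full, without early abandoning (an assumption made for this sketch). The number of pointwise comparisons as a function of the shapelet length L is then:

$$c(L) = L \, (M - L + 1), \qquad \frac{dc}{dL} = M + 1 - 2L = 0 \;\Rightarrow\; L^{*} = \frac{M + 1}{2}, \qquad c(L^{*}) = \frac{(M + 1)^2}{4} \in O(M^2)$$

For example, with M = 100 the count peaks at 2550 comparisons for L = 50 or L = 51. Multiplying the per-series bound by the N time series, the K shapelets per individual, the P individuals, and the G generations gives an overall bound of order $G \cdot P \cdot K \cdot N \cdot M^2$.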

2.5.4. Crossover

We define three different crossover operations, which take two candidate shapelet sets, $S_1$ and $S_2$, as the input and produce two new sets, $S_1'$ and $S_2'$:

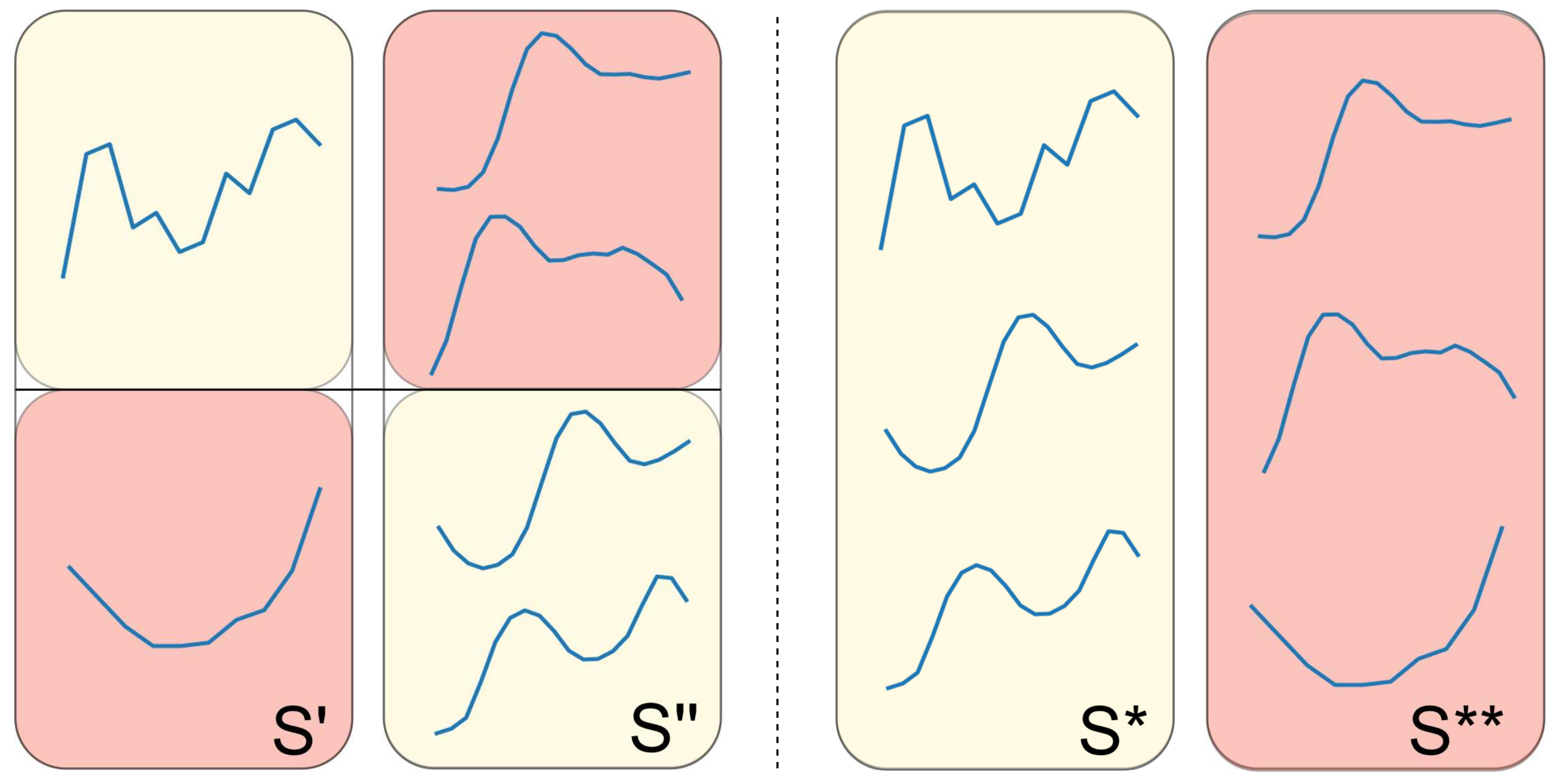

Crossover 1.

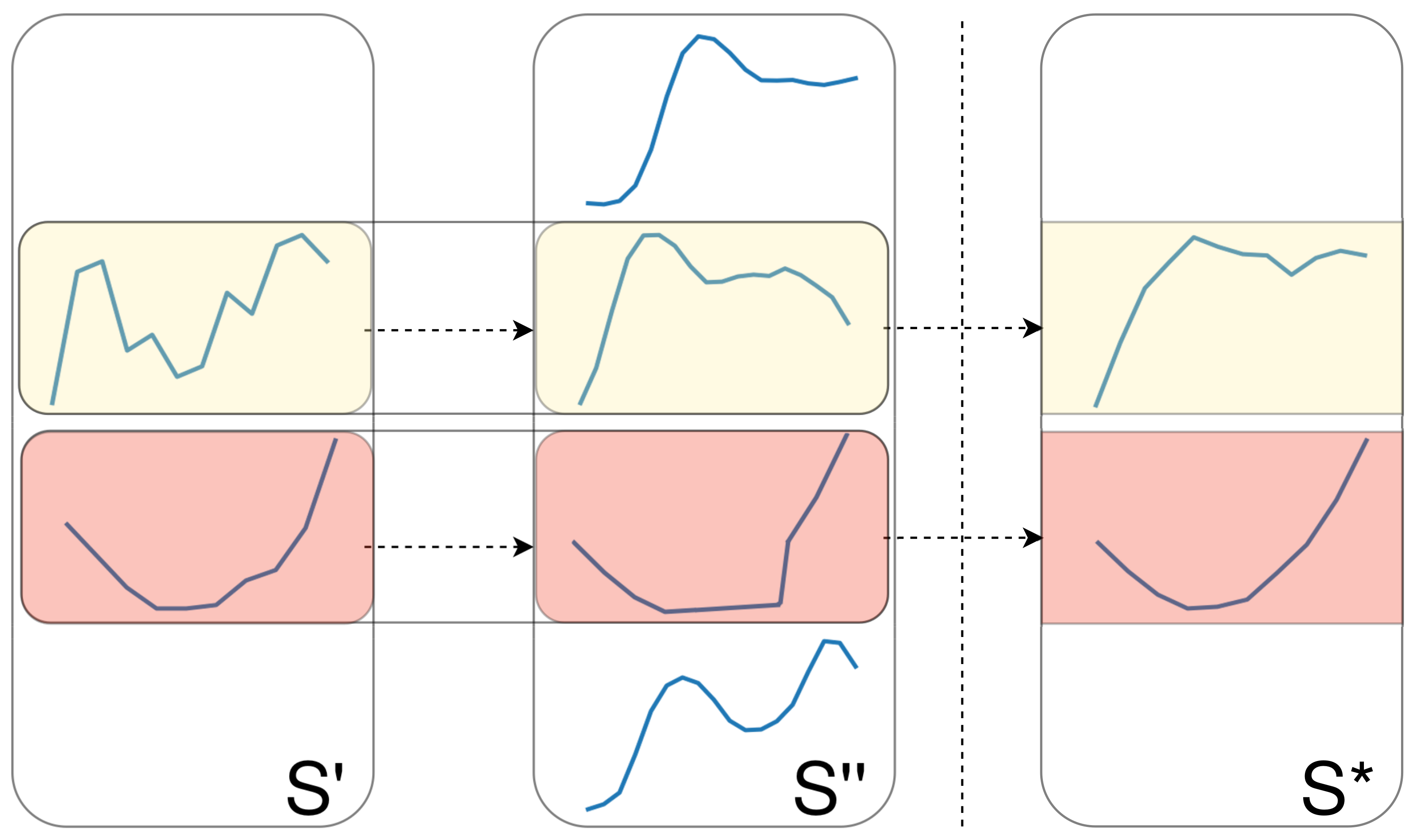

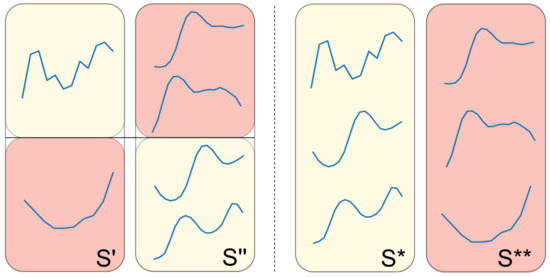

Apply one- or two-point crossover on two shapelet sets (each with a probability of 50%). In other words, we create two new shapelet sets that are composed of shapelets from both $S_1$ and $S_2$. An example of this operation is provided in Figure 3.

Figure 3.

An example of a one-point crossover operation on two shapelet sets.

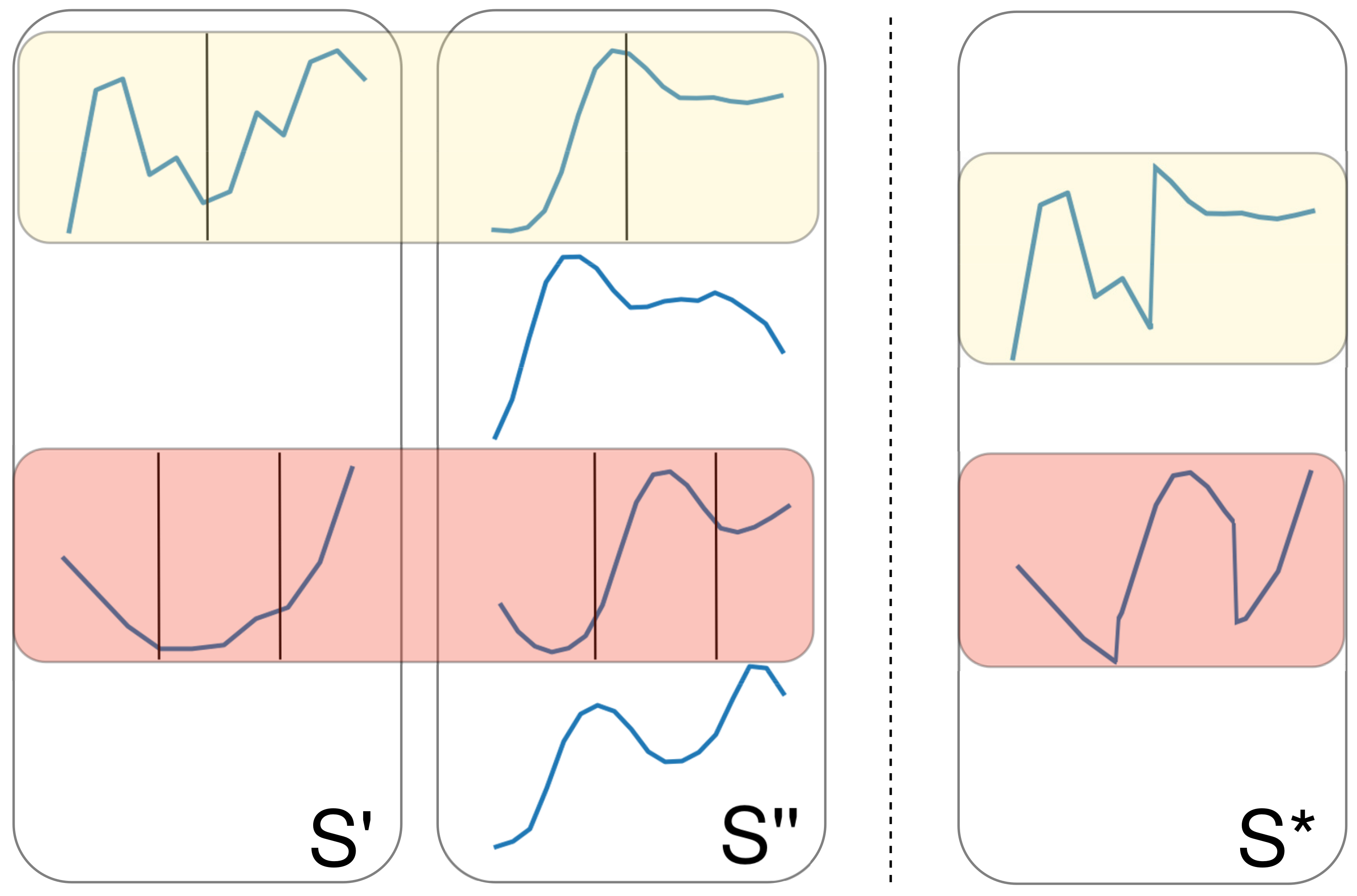

Crossover 2.

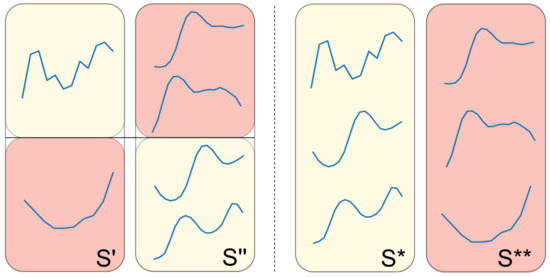

Iterate over each shapelet s in $S_1$, and apply one- or two-point crossover (again with a probability of 50%) with another randomly chosen shapelet from $S_2$ to create $S_1'$. Apply the same, vice versa, to obtain $S_2'$. This differs from the first crossover operation as the one- or two-point crossover is performed on individual shapelets as opposed to entire sets. An example of this operation can be seen in Figure 4.

Figure 4.

An example of one- and two-point crossover applied on individual shapelets.
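One- and two-point crossover on variable-length sequences can be sketched with a single helper that works both on shapelet sets (lists of shapelets, as in Crossover 1) and on individual shapelets (lists of values, as in Crossover 2). The function name `point_crossover`, the independent choice of cut points in each parent, and the fallback that returns the parents when a child would be empty are assumptions made for this illustration.

```python
import random

def point_crossover(a, b, rng):
    """Apply one- or two-point crossover (50/50) to two lists and return two children."""
    if rng.random() < 0.5:
        # One-point: swap the tails after an independent cut point in each parent.
        cut_a, cut_b = rng.randint(0, len(a)), rng.randint(0, len(b))
        child_1 = a[:cut_a] + b[cut_b:]
        child_2 = b[:cut_b] + a[cut_a:]
    else:
        # Two-point: swap the middle segments between two cut points in each parent.
        a_lo, a_hi = sorted((rng.randint(0, len(a)), rng.randint(0, len(a))))
        b_lo, b_hi = sorted((rng.randint(0, len(b)), rng.randint(0, len(b))))
        child_1 = a[:a_lo] + b[b_lo:b_hi] + a[a_hi:]
        child_2 = b[:b_lo] + a[a_lo:a_hi] + b[b_hi:]
    if not child_1 or not child_2:
        # Assumed safeguard: never return an empty set or an empty shapelet.
        return list(a), list(b)
    return child_1, child_2

rng = random.Random(42)
set_1 = [[1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0]]
set_2 = [[8.0, 9.0], [10.0, 11.0, 12.0]]
new_set_1, new_set_2 = point_crossover(set_1, set_2, rng)                # on sets
new_shapelet_1, new_shapelet_2 = point_crossover(set_1[1], set_2[1], rng)  # on shapelets
```

In both variants the two children together contain exactly the elements of the two parents, so crossover redistributes material without creating or destroying any.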

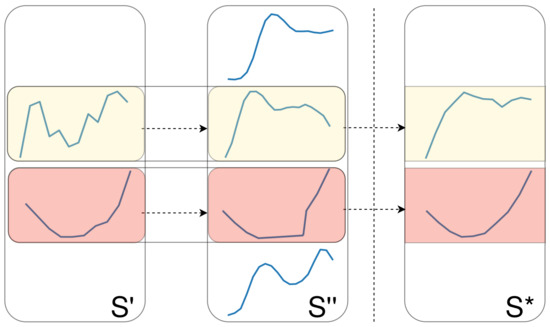

Crossover 3.

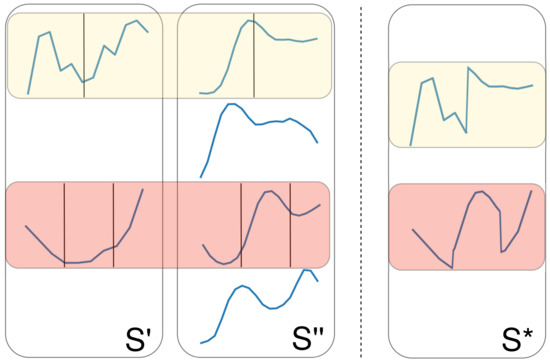

Iterate over each shapelet s in $S_1$, and merge it with another randomly chosen shapelet from $S_2$. The merging of two shapelets can be done by calculating the mean (or barycenter) of the two time series. When two shapelets being merged have varying lengths, we merge the shorter shapelet with a random part of the longer shapelet. A schematic overview of this strategy, on shapelets having the same length, is depicted in Figure 5.

Figure 5.

An example of the shapelet merging crossover operation.
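The merge operation can be sketched with a pointwise mean. This is a minimal illustration assuming the arithmetic mean as the barycenter and assuming the merged result takes the length of the shorter shapelet; the function name `merge_shapelets` is hypothetical.

```python
import random

def merge_shapelets(s1, s2, rng):
    """Average the shorter shapelet with a random, equally long window of the longer one."""
    short, long_ = (s1, s2) if len(s1) <= len(s2) else (s2, s1)
    start = rng.randint(0, len(long_) - len(short))
    window = long_[start:start + len(short)]
    return [(a + b) / 2 for a, b in zip(short, window)]

rng = random.Random(0)
same_length = merge_shapelets([0.0, 0.0], [2.0, 4.0], rng)
different_length = merge_shapelets([0.0, 0.0], [2.0, 4.0, 6.0], rng)
```

A merged shapelet is generally not a subseries of any input time series, which is one way this operator can move the search outside the original data.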

It is possible that all or none of the techniques are applied on a pair of individuals. Each technique has a probability equal to the configured crossover probability of being applied.

2.5.5. Mutations

The mutation operators are a vital part of the genetic algorithm, as they ensure population diversity and allow escaping from local optima in the search space. They take a candidate set $S$ as the input and produce a new, modified $S'$. In our approach, we define three simple mutation operators:

Mutation 1.

Take a random $s \in S$, and randomly remove a variable number of data points from the beginning or end of the time series.

Mutation 2.

Remove a random $s \in S$.

Mutation 3.

Create a new candidate using Initialization 2, and add it to $S$.

Again, all techniques can be applied on a single individual, each having a probability equal to the configured mutation probability.
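The three mutation operators, each applied independently with the mutation probability, can be sketched as follows. The names `trim` and `mutate`, the minimum shapelet length of 2, and the rule that the last remaining shapelet is never removed are assumptions of this illustration; `new_shapelet` stands in for any generator of fresh candidates, such as Initialization 2.

```python
import random

def trim(shapelet, rng, min_len=2):
    """Mutation 1: drop a random number of points from the start or the end."""
    if len(shapelet) <= min_len:
        return list(shapelet)
    n_remove = rng.randint(1, len(shapelet) - min_len)
    if rng.random() < 0.5:
        return list(shapelet[n_remove:])
    return list(shapelet[:len(shapelet) - n_remove])

def mutate(shapelet_set, new_shapelet, p_mutation, rng):
    """Return a mutated copy; each operator fires independently with p_mutation."""
    result = [list(s) for s in shapelet_set]
    if rng.random() < p_mutation:                      # Mutation 1: mask part of a shapelet
        i = rng.randrange(len(result))
        result[i] = trim(result[i], rng)
    if rng.random() < p_mutation and len(result) > 1:  # Mutation 2: remove a shapelet
        result.pop(rng.randrange(len(result)))
    if rng.random() < p_mutation:                      # Mutation 3: add a new shapelet
        result.append(list(new_shapelet()))
    return result

rng = random.Random(3)
original = [[1, 2, 3, 4, 5], [6, 7, 8]]
mutated = mutate(original, lambda: [9, 9], 1.0, rng)
```

Working on a copy keeps the input individual intact, which makes it straightforward to preserve an unmutated elite alongside the mutated population.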

2.5.6. Selection, Elitism, and Early Stopping

After each generation, a fixed number of candidate sets is chosen based on their fitness for the next generation. Many different techniques exist to select these candidate sets. We chose to apply tournament selection with small tournament sizes. In this strategy, a number of candidate sets is sampled uniformly from the entire population to form a tournament. Afterwards, one candidate set is sampled from the tournament, where the probability of being sampled is determined by its fitness. Smaller tournament sizes ensure better population diversity, as the probability of the fittest individual being included in the tournament decreases. Using this strategy, it is, however, possible that the fittest candidate set from the population is never chosen to compete in a tournament. Therefore, we apply elitism and guarantee that the fittest candidate set is always transferred to the next generation's population. Finally, since it can be hard to determine the ideal number of generations that a genetic algorithm should run, we implemented early stopping, where the algorithm preemptively stops as soon as no candidate set with a better fitness has been found for a certain number of iterations (the patience).
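Tournament selection, elitism, and early stopping can be sketched together. This is an illustrative sketch under stated assumptions: fitness is taken to be a non-negative scalar (such as accuracy) so that it can be used directly as a sampling weight, and the names `tournament_select`, `next_generation`, and `EarlyStopping` are hypothetical.

```python
import math
import random

def tournament_select(population, fitnesses, tournament_size, rng):
    """Sample a tournament uniformly, then pick one member with fitness-weighted odds."""
    contenders = rng.sample(range(len(population)), tournament_size)
    weights = [fitnesses[i] + 1e-9 for i in contenders]  # epsilon avoids all-zero weights
    return population[rng.choices(contenders, weights=weights, k=1)[0]]

def next_generation(population, fitnesses, size, tournament_size, rng):
    """Elitism: the fittest individual always survives; the rest come from tournaments."""
    elite = max(range(len(population)), key=lambda i: fitnesses[i])
    survivors = [population[elite]]
    while len(survivors) < size:
        survivors.append(tournament_select(population, fitnesses, tournament_size, rng))
    return survivors

class EarlyStopping:
    """Signal a stop once the best fitness has not improved for `patience` iterations."""
    def __init__(self, patience):
        self.patience = patience
        self.best = -math.inf
        self.stale = 0

    def should_stop(self, best_fitness):
        if best_fitness > self.best:
            self.best, self.stale = best_fitness, 0
        else:
            self.stale += 1
        return self.stale >= self.patience

rng = random.Random(1)
population = ["a", "b", "c", "d"]
fitnesses = [0.1, 0.9, 0.5, 0.3]
survivors = next_generation(population, fitnesses, size=3, tournament_size=2, rng=rng)

stopper = EarlyStopping(patience=2)
decisions = [stopper.should_stop(f) for f in [0.5, 0.6, 0.6, 0.6]]
```

With a patience of 2, the run above is stopped at the fourth iteration, after two consecutive iterations without improvement over the best fitness of 0.6.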

2.5.7. List of All Hyper-Parameters

We now present an overview of all hyper-parameters included in gendis, along with their corresponding explanation and default values.

- Maximum shapelets per candidate (W): the maximum number of shapelets in a newly generated individual during initialization (default: ).

- Population size (P): the total number of candidates that are evaluated and evolved in every iteration (default: 100).

- Maximum number of generations (G): the maximum number of iterations the algorithm runs (default: 100).

- Early stopping patience: the algorithm preemptively stops evolving when no better individual has been found for this number of iterations (default: 10).

- Mutation probability (): the probability that a mutation operator gets applied to an individual in each iteration (default: ).

- Crossover probability (): the probability that a crossover operator is applied on a pair of individuals in each iteration (default: ).

- Maximum shapelet length: the maximum length of the shapelets in each shapelet set (individual) (default: M).

- The operations used during the initialization, crossover, and mutation phases are configurable as well (default: all mentioned operations).

3. Results and Discussion

In the following subsections, we present the setup of different experiments and the corresponding results in order to highlight the advantages of gendis.

3.1. Efficiency of Genetic Operators

In this section, we assess the efficiency of the introduced genetic operators by evaluating the fitness as a function of the number of generations using different sets of operators. It should be noted that our implementation easily allows configuring the number and type of operators used for each of the different steps in the genetic algorithm, allowing the user to tune these according to the dataset.

3.1.1. Datasets

We picked six datasets, with varying characteristics, to evaluate the fitness of different configurations. The chosen datasets and their corresponding properties are summarized in Table 1.

Table 1.

The chosen datasets, having varying characteristics, for the evaluation of the genetic operators’ efficiency. #Cls = number of Classes, TS_len = length of Time Series, #Train = number of Training time series, #Test = number of Testing time series.

3.1.2. Initialization Operators

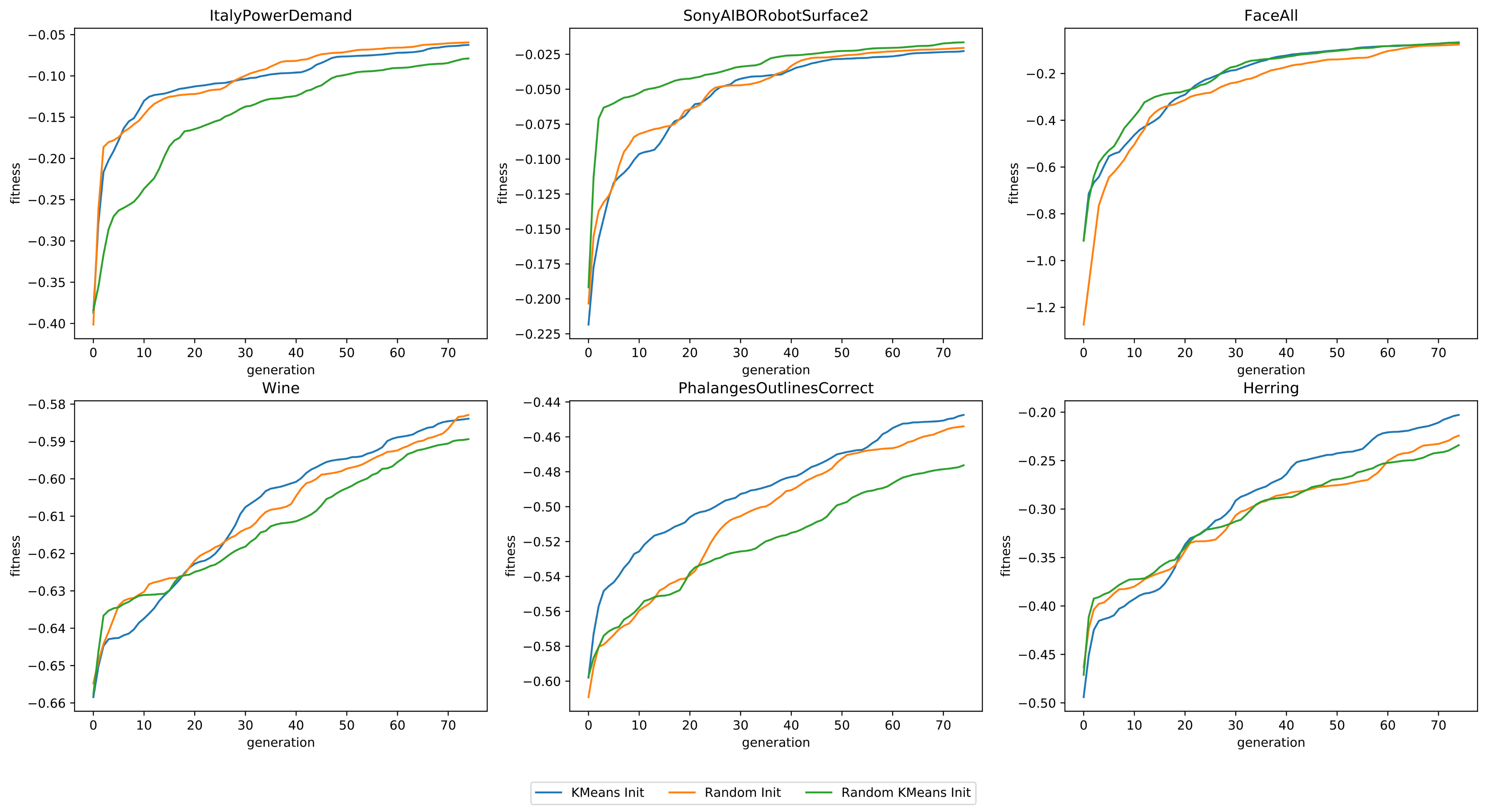

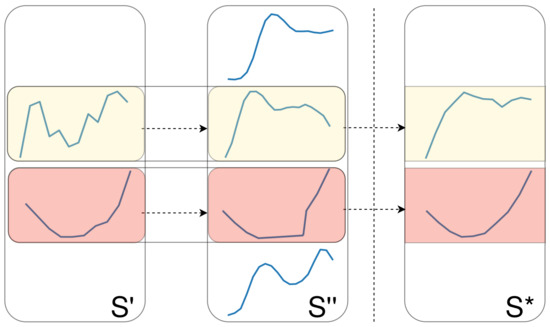

We first compare the fitness of GENDIS using three different sets of initialization operators:

- Initializing the individuals with K-means (Initialization 1)

- Randomly initializing the shapelet sets (Initialization 2)

- Using both initialization operations

Each configuration was tested using a small population (25 individuals), in order to reduce the required computational time, for 75 generations, as the impact of the initialization is highest in the earlier generations. All mutation and crossover operators were used. We show the average fitness of all individuals in the population in Figure 6. From these results, we can conclude that the two initialization operators are competitive with each other, as one operator will outperform the other on several datasets and vice versa on the others.

Figure 6.

The fitness as a function of the number of generations, for six datasets, using three different configurations of initialization operations.

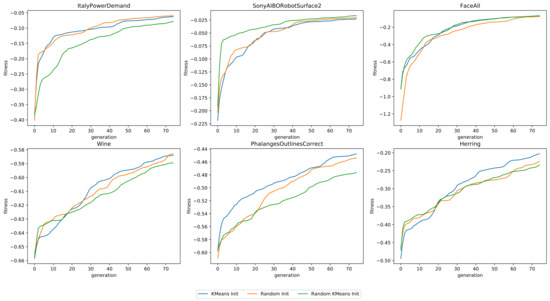

3.1.3. Crossover Operators

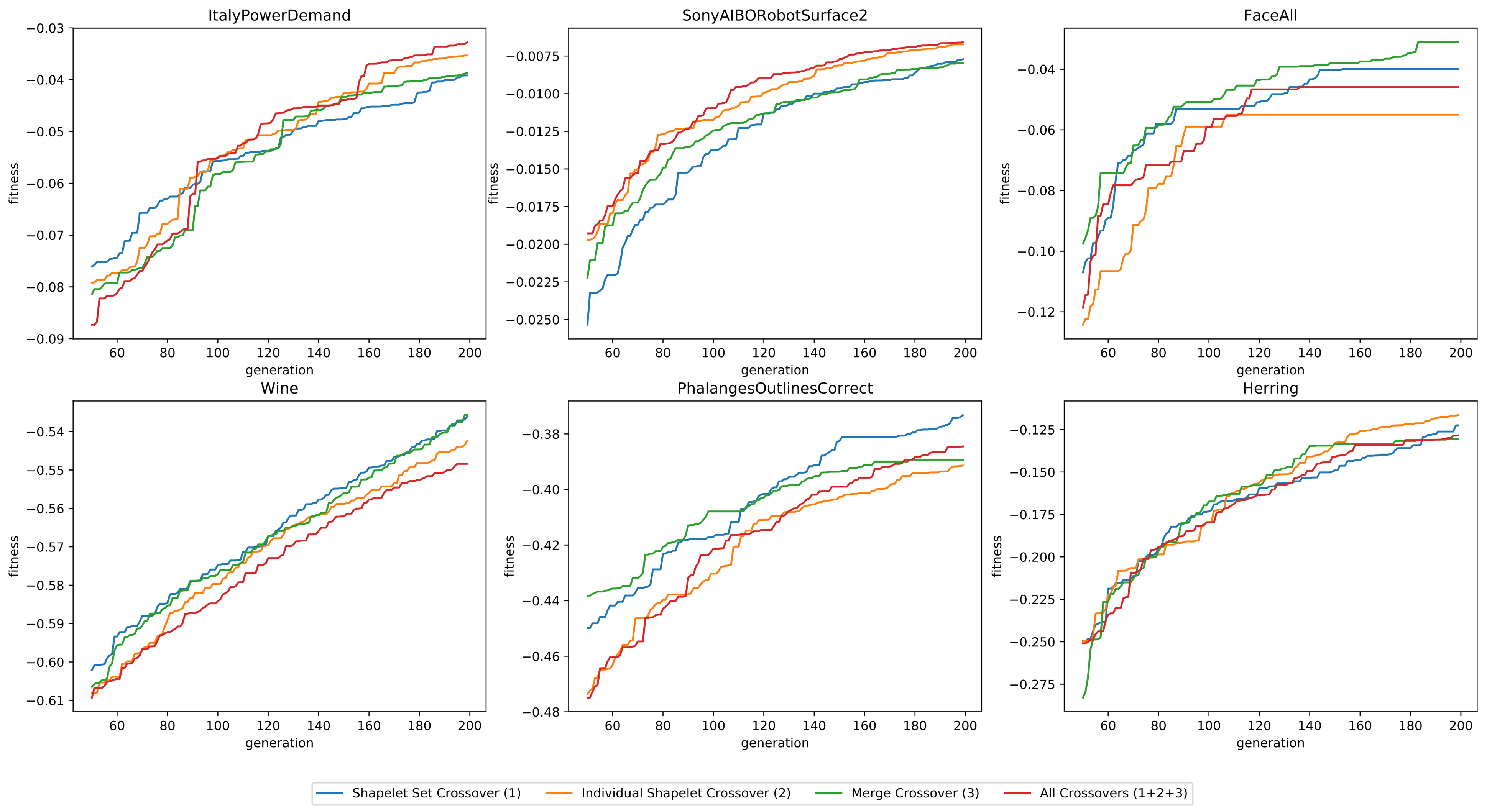

We now compare the average fitness of all individuals in the population, as a function of the number of generations, when configuring GENDIS to use four different sets of crossover operators:

- Using solely point crossovers on the shapelet sets (Crossover 1)

- Using solely point crossovers on individual shapelets (Crossover 2)

- Using solely merge crossovers (Crossover 3)

- Using all three crossover operations

Each run had a population of 25 individuals and ran for 200 generations. All mutation and initialization operators were used. As the average fitness is rather similar in the earlier generations, we truncated the first 50 measurements to better highlight the differences. The results are presented in Figure 7. As can be seen, it is again difficult to single out an operation that significantly outperforms the others.

Figure 7.

The fitness as a function of the number of generations, for six datasets, using four different configurations of crossover operations.

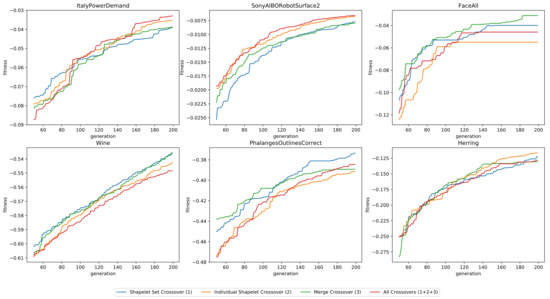

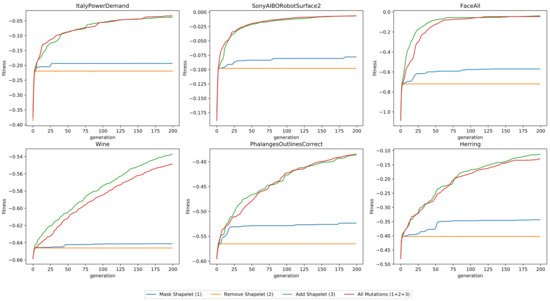

3.1.4. Mutation Operators

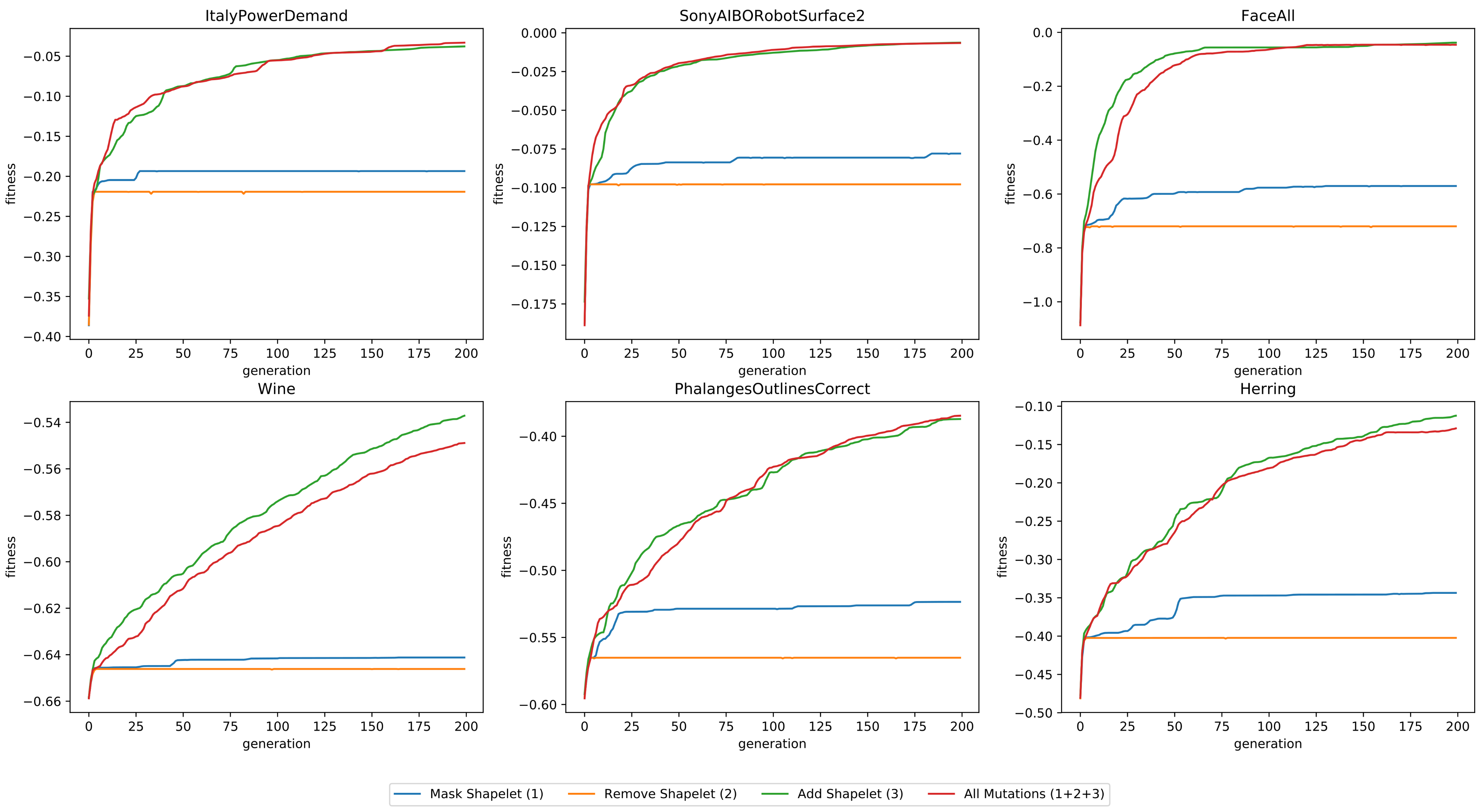

The same experiment was performed to assess the efficiency of the mutation operators. Four different configurations were used:

- Masking a random part of a shapelet (Mutation 1)

- Removing a random shapelet from the set (Mutation 2)

- Adding a shapelet, randomly sampled from the data, to the set (Mutation 3)

- Using all three mutation operations

The average fitness of the individuals, as a function of the number of generations, is depicted in Figure 8. It is clear that the addition of shapelets (Mutation 3) is the most significant operator. Without it, the fitness quickly converges to a sub-optimal value. The removal and masking of shapelets do not seem to increase the average fitness often, but they are important operators for keeping the number of shapelets and the length of the shapelets small.

Figure 8.

The fitness as a function of the number of generations, for six datasets, using four different configurations of mutation operations.

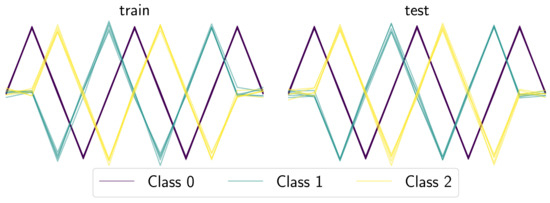

3.2. Evaluating Sets of Candidates Versus Single Candidates

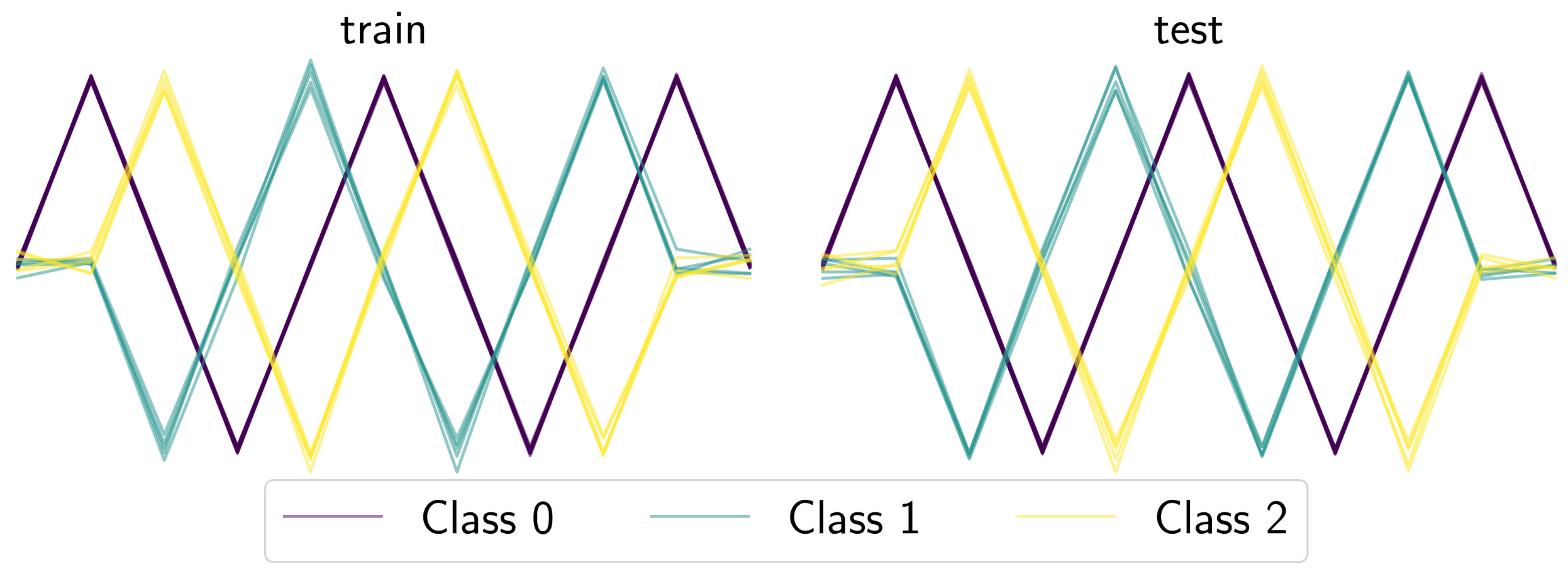

A key factor of gendis is that it evaluates entire sets of shapelets (a dependency between the shapelets is introduced), as opposed to evaluating single candidates independently and taking the top-k. The disadvantage of the latter approach is that similar shapelets will achieve similar values given a certain metric. When entire sets are evaluated, we can optimize both the quality metric for candidate sets, as the size of each of these sets. This results in smaller sets with fewer similar shapelets. Moreover, interactions between shapelets can be explicitly taken into account. To demonstrate these advantages, we compare gendis to st, which evaluates candidate shapelets individually as opposed to shapelet sets, on an artificial three-class dataset, depicted in Figure 9. The constructed dataset contains a large number of very similar time series of Class 0, while having a smaller number of more dissimilar time series of Classes 1 and 2. The distribution of time series across the three classes in both the training and test dataset is thus skewed, with the number of samples in Classes being equal to , respectively. This imbalance causes the independent approach to focus solely on extracting shapelets that can discriminate Class 0 from the other two, since the information gain will be highest for these individual shapelets. Clearly, this is not ideal as subsequences taken from the time series of Class 0 possess little to no discriminative power for the other two classes, as the distances to the time series from these two classes will be nearly equal.

Figure 9.

The generated training and test set for the artificial classification problem.

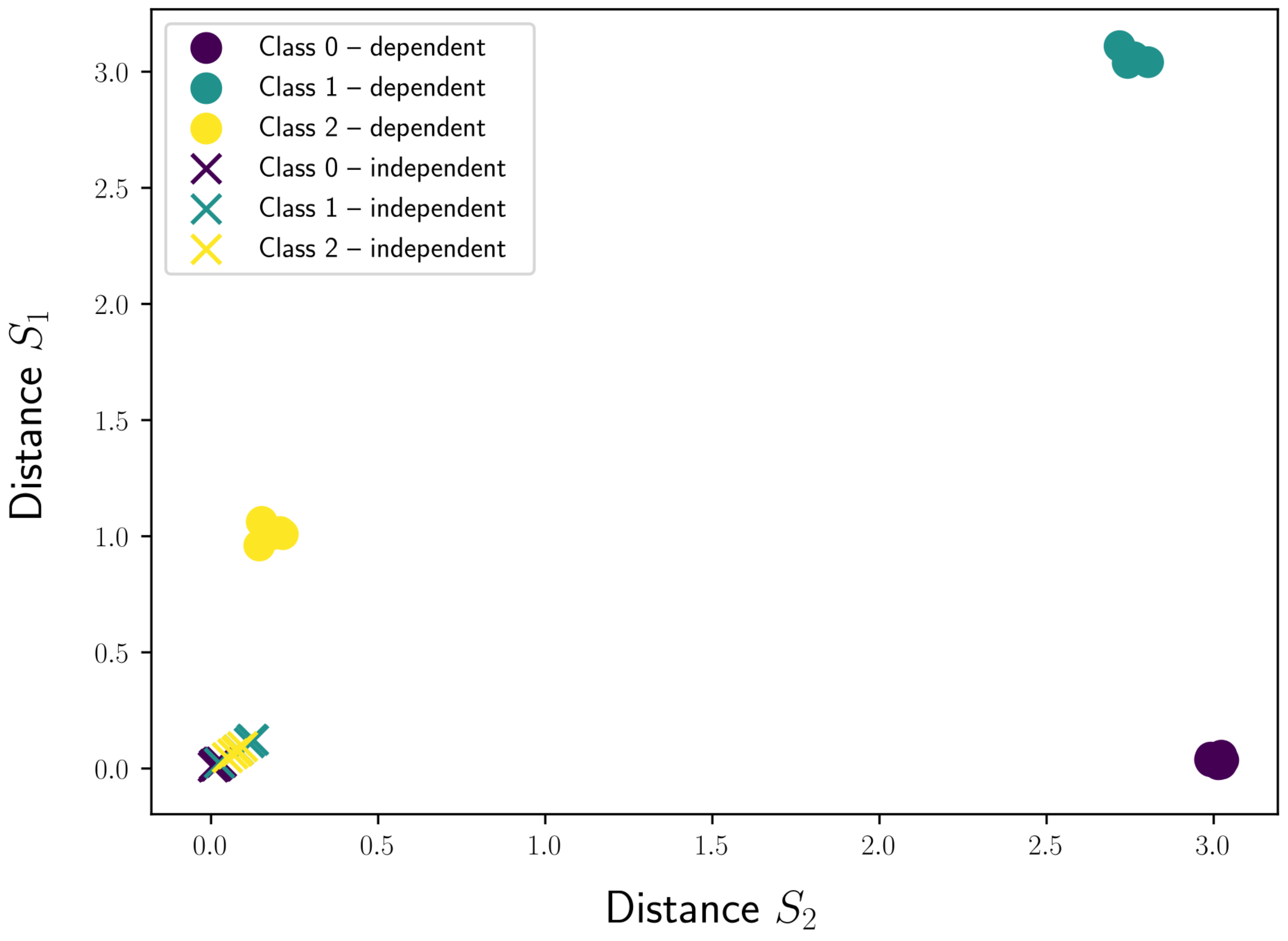

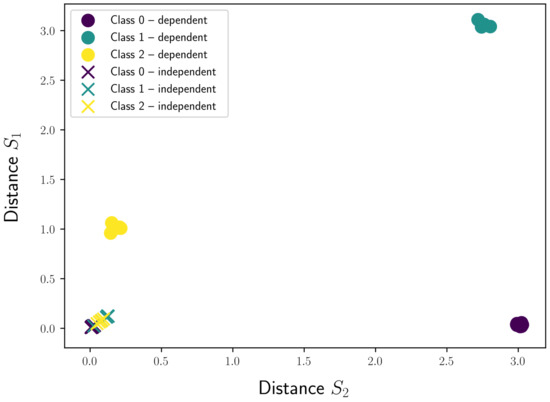

We extracted two shapelets with both techniques, which allowed us to visualize the different test samples in a two-dimensional transformed distance space, as shown in Figure 10. Each axis of this space represents the distances to a certain shapelet. For the independent approach, we can clearly see that the distances of the samples for all three classes to both shapelets are clustered near the origin of the space, making it very hard for a classifier to draw a separation boundary. On the other hand, a very clear separation can be seen for the samples of the three classes when using the shapelets discovered by gendis, a dependent approach. The low discriminative power of the independent approach is confirmed by fitting a logistic regression model with a tuned regularization type and strength on the obtained distances. The classifier fitted on the distances extracted by the independent approach is only able to achieve an accuracy of () on the rather straight-forward dataset. The accuracy score of gendis, a dependent approach, equals .

Figure 10.

The obtained feature representations using a dependent and independent approach.

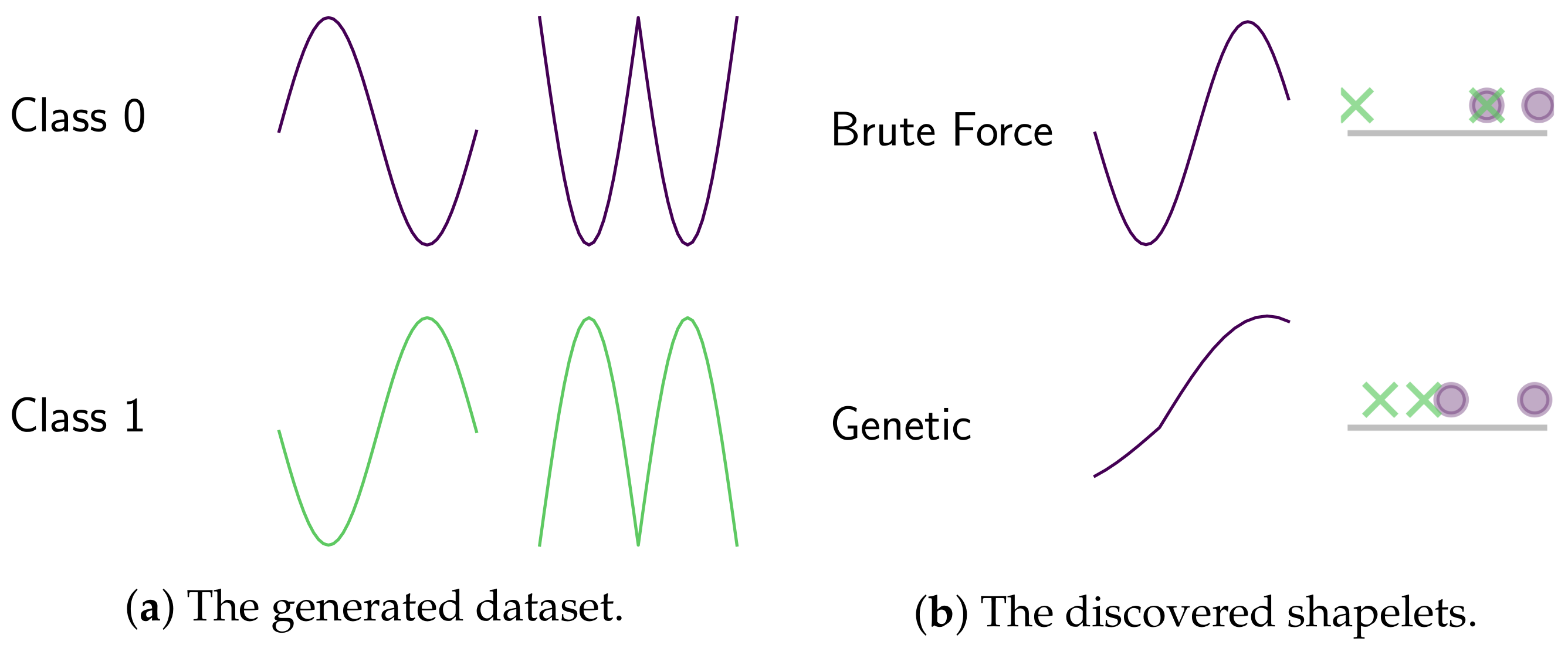

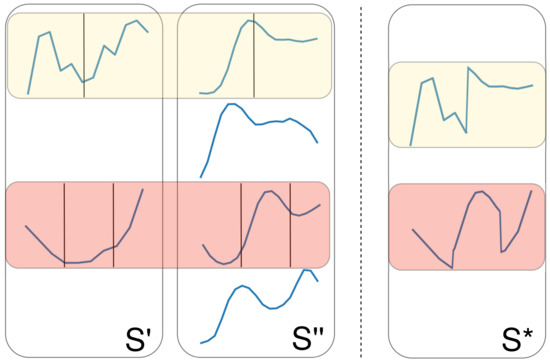

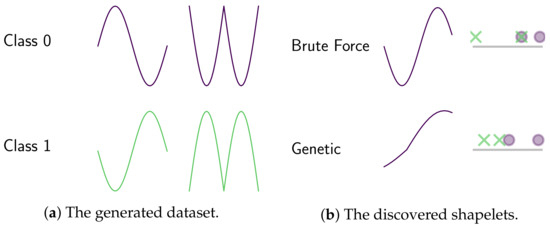

3.3. Discovering Shapelets Outside the Data

Another advantage of gendis is that the discovered shapelets are not limited to being subseries of $T$. Due to the nature of the evolutionary process, the discovered shapelets can have a distance greater than zero to all time series in the dataset. More formally: $\exists s \in S, \forall t \in T: dist(s, t) > 0$. While this can be somewhat detrimental concerning interpretability, it can be necessary to get an excellent predictive performance. We demonstrate this through a very simple, artificial example. Assume we have a two-class classification problem and are provided two time series per class, as illustrated in Figure 11a. The shapelet extracted by a brute-force approach and by a slightly modified version of gendis, together with the distances to each time series, can be found in Figure 11b. The modification we made to gendis is that we specifically search for only one shapelet instead of an entire set of shapelets. We can see that the exhaustive search approach is not able to find a subseries in any of these four time series that separates both classes, while the shapelet extracted by gendis ensures perfect separation.

Figure 11.

A two-class problem with two time series per class and the extracted shapelets with corresponding distances on an ordered line by a brute-force approach versus gendis.

It is important to note here that discovering shapelets outside the data sacrifices interpretability for an increase in the predictive performance of the shapelets. As the operators that are used during the genetic algorithm are completely configurable for gendis, one can use only the first crossover operation (one- or two-point crossover on shapelet sets) to ensure all shapelets come from within the data.

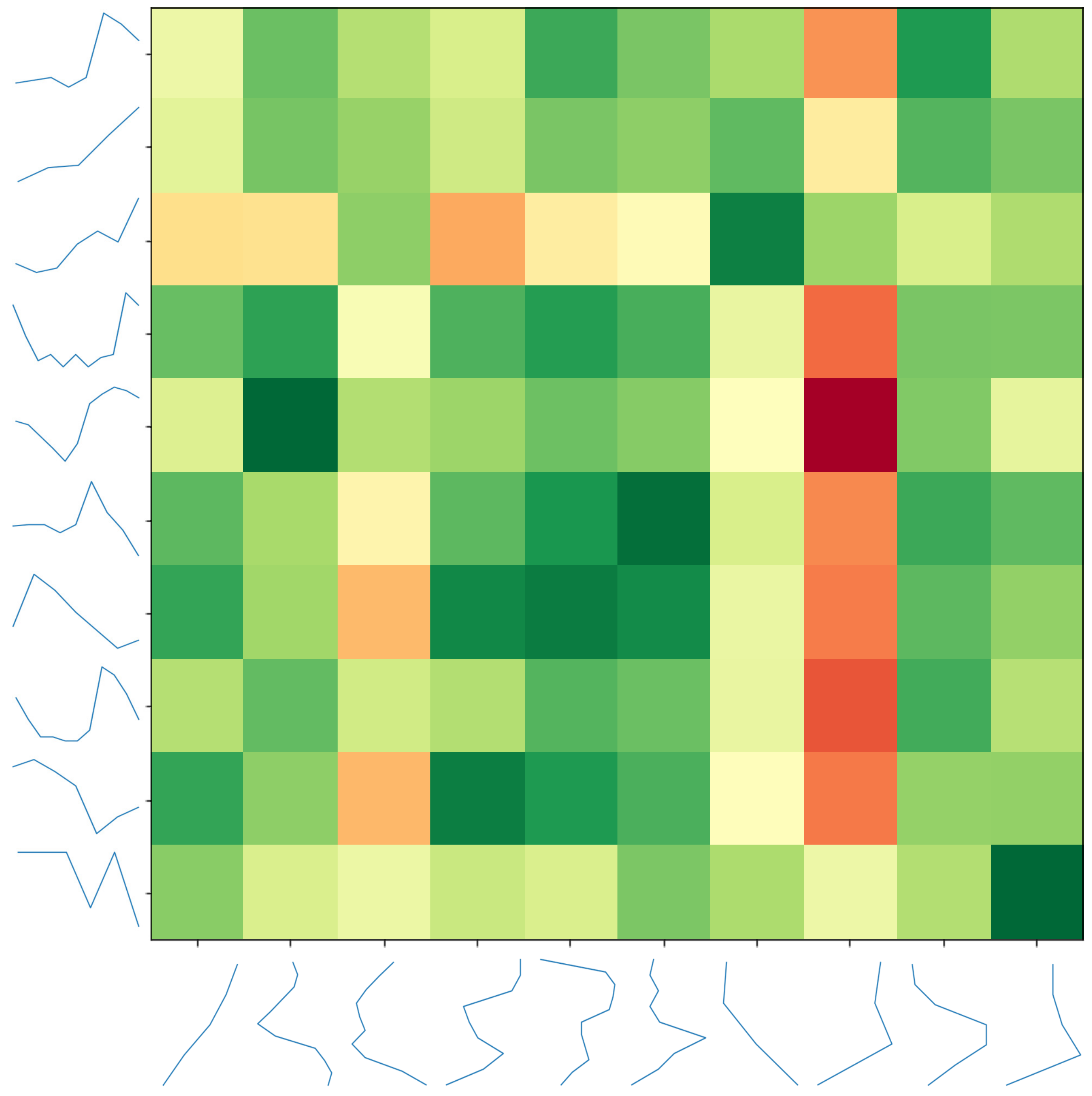

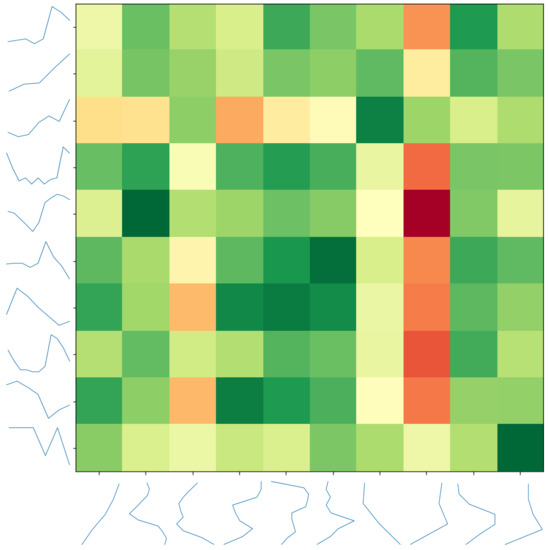

3.4. Stability

In order to evaluate the stability of our algorithm, we compare the extracted shapelets of two different runs on the ItalyPowerDemand dataset. We set the algorithm to evolve a large population (100 individuals) for a large number of generations (500) in order to ensure convergence. Moreover, we limited the maximum number of extracted shapelets to 10, in order to keep the visualization clear. We then calculated the similarity of the discovered shapelets between the two runs, using dynamic time warping [25]. A heat map of the distances is depicted in Figure 12. While the discovered shapelets are not exactly the same, we can often find pairs that contain the same semantic intelligence, such as a saw pattern or straight lines.

Figure 12.

A pairwise distance matrix between discovered shapelet sets of two different runs.

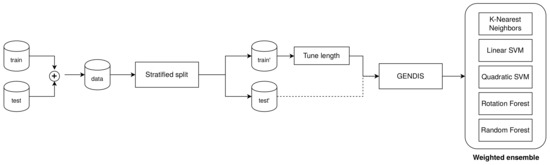

3.5. Comparing gendis to fs, st, and lts

In this section, we compare our algorithm gendis to the results from Bagnall et al. [13], which are hosted online (www.timeseriesclassification.com). In that study, thirty-one different algorithms, including three shapelet discovery techniques, were compared on 85 datasets. The 85 datasets stem from different data sources and different domains, including electrocardiogram data from the medical domain and sensor data from the IoT domain. The three included shapelet techniques are Shapelet Transform (st) [10], Learning Time Series Shapelets (lts) [4], and Fast Shapelets (fs) [8]. A discussion of all three techniques can be found in Section 1.2.

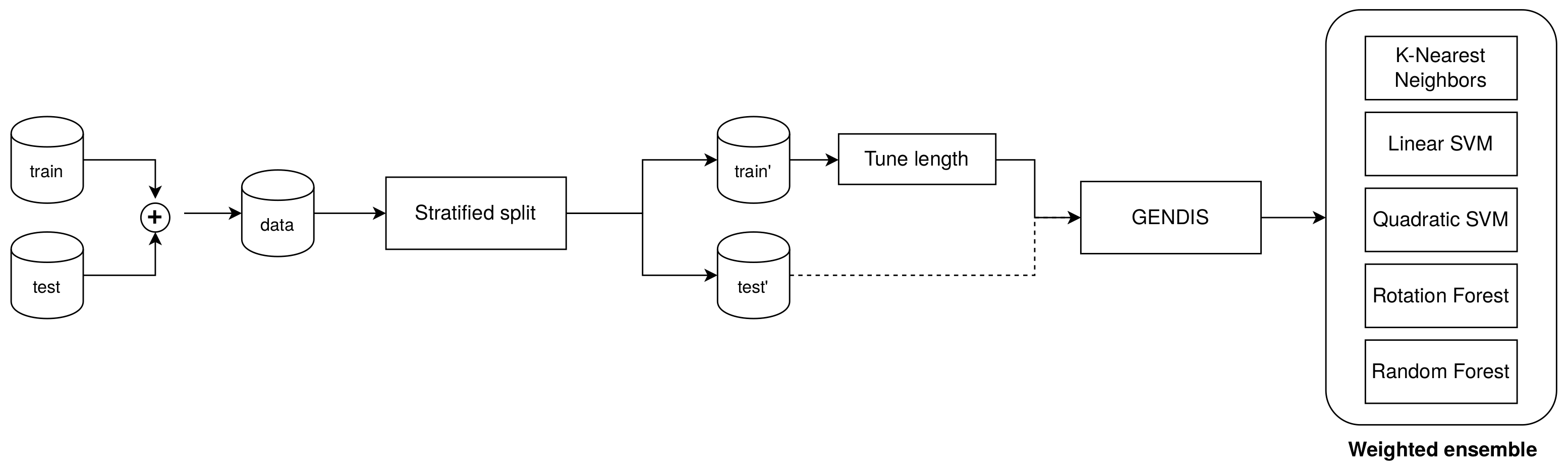

For 84 of the 85 datasets, we conducted twelve measurements by concatenating the provided training and testing data and re-partitioning them in a stratified manner, as done in the original study. Only the “Phoneme” dataset could not be included, due to problems with downloading the data while executing this experiment. On every dataset, we used the same hyper-parameter configuration for gendis: a population size of 100, a maximum of 100 iterations, early stopping after 10 iterations, and fixed crossover and mutation probabilities. The only parameter that was tuned for every dataset separately was the maximum length of each shapelet, to combat overfitting. To tune this, we picked the length that resulted in the best logarithmic (or entropy) loss using three-fold cross-validation on the training set. The distance matrix obtained through the shapelets extracted by gendis was then fed to a heterogeneous ensemble consisting of a rotation forest, a random forest, a support vector machine with a linear kernel, a support vector machine with a quadratic kernel, and a k-nearest neighbor classifier [26]. This ensemble closely matches the one used by the best-performing algorithm, st. This is in contrast with fs, which produces a decision tree, and lts, which learns a separating hyperplane (similar to logistic regression) jointly with the shapelets. This setup is depicted schematically in Figure 13. The ensemble can be expected to outperform each of its individual classifiers [27], but it takes longer to fit and shifts the focus away from the quality of the extracted shapelets. Nevertheless, it is necessary to use an ensemble to allow for a fair comparison with st, as that is what Bagnall et al. [13] used to generate their results. To give more insight into the quality of the extracted shapelets, we also report the accuracies obtained with a logistic regression classifier. We tuned the type of regularization (ridge vs. lasso) and the regularization strength using the training set. We recommend that future research compare their results to those obtained with the logistic regression classifier.
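The resampling step can be illustrated with a short sketch. It assumes that "re-partitioning in a stratified manner" means pooling the provided training and testing data and drawing a new split of the original training size while keeping the class proportions approximately equal in both parts; the helper `stratified_resample` and the toy data are hypothetical and do not reproduce the original experiment code.

```python
import random
from collections import defaultdict


def stratified_resample(train, test, seed):
    """Pool train and test, then draw a new split with (approximately) the
    original train size, keeping per-class proportions similar in both parts."""
    pooled = train + test
    train_fraction = len(train) / len(pooled)
    by_class = defaultdict(list)
    for series, label in pooled:
        by_class[label].append((series, label))
    rng = random.Random(seed)
    new_train, new_test = [], []
    for label in sorted(by_class):
        items = by_class[label]
        rng.shuffle(items)
        cut = round(len(items) * train_fraction)
        new_train.extend(items[:cut])
        new_test.extend(items[cut:])
    return new_train, new_test


# Hypothetical toy data: (time series, class label) pairs.
train = [([0.0, 1.0], "a"), ([0.1, 1.1], "a"), ([1.0, 0.0], "b"), ([1.1, 0.1], "b")]
test = [([0.0 + i, 1.0], "a") for i in range(4)] + [([1.0, 0.0 + i], "b") for i in range(4)]

# One resampled split per measurement; twelve measurements as in the text.
splits = [stratified_resample(train, test, seed) for seed in range(12)]
```

Using a different seed per measurement yields twelve different but reproducible partitions of the same pooled data.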

Figure 13.

The evaluation setup used to compare gendis to other shapelet techniques.

The mean accuracy over the twelve measurements of gendis, in comparison to the mean of the hundred original measurements of the three other algorithms retrieved from the online repository, can be found in Table 2 and Table 3. While a smaller number of measurements was conducted within this study, it should be noted that the measurements from Bagnall et al. [13] took over six months to generate. Moreover, accuracy is often not the ideal metric to measure predictive performance. Although it is one of the most intuitive metrics, it has several disadvantages, such as skewness when the data are imbalanced. Nevertheless, accuracy is the only metric that allows for comparison to related work, as it was the metric used in those studies. Moreover, the datasets used are merely benchmark datasets, and the goal is solely to compare the quality of the shapelets extracted by gendis to those of st. We recommend using different performance metrics, tailored to the specific use case. An example is using the area under the receiver operating characteristic curve (AUC) in combination with precision and recall for medical datasets.
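The skewness of accuracy on imbalanced data can be illustrated with a small, self-contained example. The data are hypothetical (95 negatives, 5 positives), and the AUC is computed directly from its rank interpretation rather than with a particular library.

```python
def accuracy(y_true, y_pred):
    """Fraction of correctly predicted labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true, scores):
    """Probability that a random positive is scored above a random
    negative, with ties counting as one half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical imbalanced problem and a classifier that always predicts the majority class.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100
constant_scores = [0.0] * 100

acc = accuracy(y_true, y_pred)
auc = roc_auc(y_true, constant_scores)
```

The majority-class predictor reaches an accuracy of 0.95 although it never detects a positive case, while its AUC of 0.5 correctly indicates that it carries no discriminative information.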

Table 2.

A comparison between gendis and three other shapelet techniques on 85 datasets. ST, Shapelet Transform; LTS, Learning Time Series Shapelets; FS, Fast Shapelets; #Cls = number of Classes; TS_len = length of Time Series; #Train = number of Training time series; #Test = number of Testing time series; #Shapes = number of extracted shapelets by GENDIS.

Table 3.

A comparison between gendis and three other shapelet techniques on 85 datasets. ST, Shapelet Transform; LTS, Learning Time Series Shapelets; FS, Fast Shapelets; #Cls = number of Classes; TS_len = length of Time Series; #Train = number of Training time series; #Test = number of Testing time series; #Shapes = number of extracted shapelets by GENDIS.

For each dataset, we also performed an unpaired Student t-test to detect statistically significant differences. When the performance of an algorithm on a certain dataset is statistically better than that of all others, it is indicated in bold. From these results, we can conclude that fs is inferior to the three other techniques, while st most often achieves the best performance, but at a very high computational complexity.
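As an illustration of the per-dataset test, the sketch below computes the pooled-variance (Student) t statistic for two independent samples of unequal size. The function name and the accuracy values are hypothetical; obtaining a p-value additionally requires the t distribution with the returned degrees of freedom, which is left out here to stay within the Python standard library.

```python
import math
from statistics import mean, variance


def unpaired_student_t(sample_a, sample_b):
    """Return the pooled-variance t statistic and its degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    dof = n_a + n_b - 2
    pooled_var = (
        (n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)
    ) / dof
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / std_err, dof


# Hypothetical accuracies of two algorithms on a single dataset.
acc_a = [0.90, 0.92, 0.91]
acc_b = [0.80, 0.82, 0.81, 0.79]
t_stat, dof = unpaired_student_t(acc_a, acc_b)
```

The two samples do not need to have the same size, which matters in this comparison because twelve measurements of gendis are set against a hundred measurements of each other algorithm.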

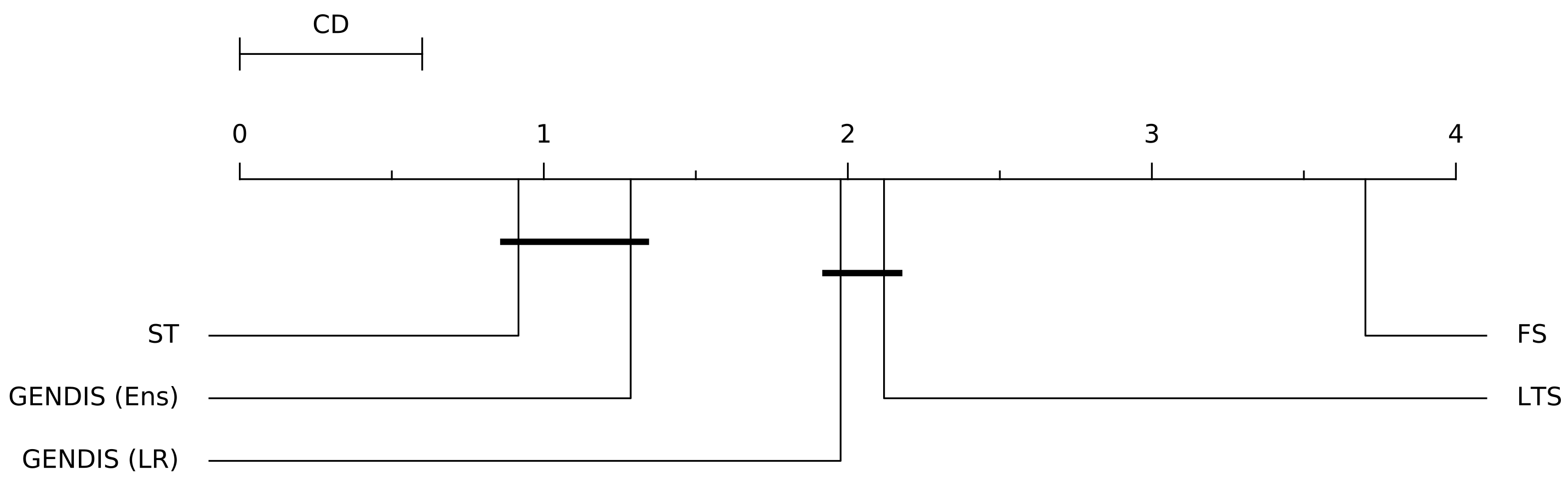

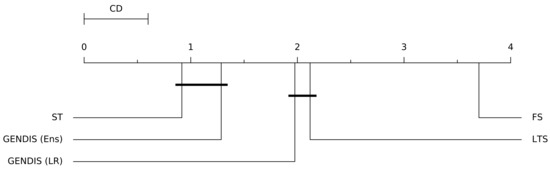

The average number of shapelets extracted by gendis is reported in the final column. The number of shapelets extracted by st in the original study equals . Thus, the total number of shapelets used to transform the original time series to distances is at least an order of magnitude less when using gendis. In order to compare the algorithms across all datasets, a Friedman ranking test [28] was applied with a Holm post-hoc correction [29,30]. We present the average rank of each algorithm using a critical difference diagram, with cliques formed using the results of the Friedman test with a Holm post-hoc correction at a significance cutoff level of in Figure 14. The higher the cutoff level, the less probable it is to form cliques. For gendis, both the results obtained with the ensemble and with the logistic regression classifier are used. From this, we can conclude that there is no statistical difference between st and gendis, while both are statistically better than fs and lts.
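The ranking step underlying the critical difference diagram can be sketched as follows. The code computes the average rank of each algorithm over all datasets and the Friedman chi-square statistic in its standard form without tie correction; the Holm post-hoc procedure is not included. The function names and the accuracy table are hypothetical and do not correspond to the values in Table 2 and Table 3.

```python
def average_ranks(accuracies):
    """accuracies: one row per dataset, one column per algorithm.
    Rank 1 is the best accuracy; tied algorithms share the mean rank."""
    k = len(accuracies[0])
    totals = [0.0] * k
    for row in accuracies:
        order = sorted(range(k), key=lambda j: -row[j])
        ranks = [0.0] * k
        i = 0
        while i < k:
            j = i
            # Extend the group while the next algorithm has the same accuracy.
            while j + 1 < k and row[order[j + 1]] == row[order[i]]:
                j += 1
            mean_rank = (i + j) / 2 + 1
            for idx in order[i:j + 1]:
                ranks[idx] = mean_rank
            i = j + 1
        totals = [t + r for t, r in zip(totals, ranks)]
    return [t / len(accuracies) for t in totals]


def friedman_statistic(avg_ranks, n_datasets):
    """Friedman chi-square statistic computed from average ranks (no tie correction)."""
    k = len(avg_ranks)
    sum_sq = sum(r ** 2 for r in avg_ranks)
    return 12 * n_datasets / (k * (k + 1)) * (sum_sq - k * (k + 1) ** 2 / 4)


# Hypothetical accuracies: 3 datasets (rows) x 4 algorithms (columns).
table = [
    [0.90, 0.80, 0.70, 0.60],
    [0.85, 0.85, 0.60, 0.70],
    [0.70, 0.90, 0.80, 0.60],
]
ranks = average_ranks(table)
stat = friedman_statistic(ranks, len(table))
```

A lower average rank indicates a better algorithm; the statistic is then compared against a chi-square distribution with one degree of freedom fewer than the number of algorithms before any post-hoc comparison is made.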

Figure 14.

A critical difference diagram of the four evaluated shapelet discovery algorithms with a significance level of .

4. Conclusions and Future Work

In this study, an innovative technique, called gendis, was proposed to extract a collection of smaller subsequences, i.e., shapelets, from a time series dataset that are very informative in classifying each of the time series into categories. gendis searches for this set of shapelets through evolutionary computation, a paradigm mostly unexplored within the domain of time series classification, which offers several benefits:

- evolutionary algorithms are gradient-free, allowing for an easy configuration of the optimization objective, which does not need to be differentiable

- only the maximum length of all shapelets has to be tuned, as opposed to the number of shapelets and the length of each shapelet, due to the fact that gendis evaluates entire sets of shapelets

- easy control over the runtime of the algorithm

- the possibility of discovering shapelets that do not need to be a subsequence of the input time series

Moreover, the proposed technique has a computational complexity that is multiple orders of magnitude smaller than that of the current state-of-the-art, st, while achieving a predictive performance that does not differ significantly from it, with much smaller shapelet sets.

We demonstrated these benefits through intuitive experiments, which showed that techniques that evaluate single candidates can perform subpar on imbalanced datasets and that it is sometimes necessary to extract shapelets that are not subsequences of the input time series to achieve good separation. In addition, we compared the efficiency of the different genetic operators on six different datasets and assessed the algorithm’s stability by comparing the output of two different runs on the same dataset. Moreover, we conducted an extensive comparison on a large number of datasets to show that gendis is competitive with the current state-of-the-art while having a much lower computational complexity.

Several interesting directions can be identified for further research. First, optimizations to decrease the required runtime of gendis can be implemented, which would allow gendis to evolve larger populations in a similar amount of time. One significant optimization is to express the calculation of distances, one of the bottlenecks of gendis, algebraically in order to leverage GPU technologies. Second, further research on each of the operators within gendis can be performed. While we clearly demonstrated the feasibility of a genetic algorithm to achieve state-of-the-art performance with the operators discussed in this work, the amount of available research within the domain of time series analysis is growing rapidly. New insights from this domain can continuously be integrated within gendis. As an example, new time series clustering algorithms could be integrated as initialization operators of the genetic algorithm. Finally, it could be very valuable to extend gendis to work with multivariate data and to discover multivariate shapelets. This would require a different representation of the individuals in the population and custom genetic operators.

Author Contributions

Conceptualization, G.V. and F.O.; methodology, G.V.; software, G.V.; validation, G.V.; formal analysis, G.V.; investigation, G.V.; resources, G.V.; data curation, G.V.; writing—original draft preparation, G.V.; writing—review and editing, G.V., F.O., and F.D.T.; visualization, G.V.; supervision, F.O. and F.D.T.; project administration, F.O.; funding acquisition, G.V., F.O., and F.D.T. All authors read and agreed to the published version of the manuscript.

Funding

G.V. is funded by a PhD SB fellow scholarship of Fonds Wetenschappelijk Onderzoek (FWO) (1S31417N). This research received funding from the Flemish Government under the ‘Onderzoeksprogramma Artificiële Intelligentie (AI) Vlaanderen’ program.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

An implementation of gendis in Python 3 is available on GitHub (https://github.com/IBCNServices/GENDIS). The code needed to run the experiments and reproduce the results is included as well.

Conflicts of Interest

The authors declare no conflict of interest. The funding bodies had no role in any part of the study.

References

1. Chaovalitwongse, W.A.; Prokopyev, O.A.; Pardalos, P.M. Electroencephalogram (EEG) time series classification: Applications in epilepsy. Ann. Oper. Res. 2006, 148, 227–250.

2. Liu, L.; Peng, Y.; Liu, M.; Huang, Z. Sensor-based human activity recognition system with a multilayered model using time series shapelets. Knowl. Based Syst. 2015, 90, 138–152.

3. Li, D.; Bissyandé, T.F.; Kubler, S.; Klein, J.; Le Traon, Y. Profiling household appliance electricity usage with n-gram language modeling. In Proceedings of the 17th IEEE International Conference on Industrial Technology (ICIT), Taipei, Taiwan, 14–17 March 2016; pp. 604–609.

4. Grabocka, J.; Schilling, N.; Wistuba, M.; Schmidt-Thieme, L. Learning time-series shapelets. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 392–401.

5. Abanda, A.; Mori, U.; Lozano, J.A. A review on distance based time series classification. Data Min. Knowl. Discov. 2019, 33, 378–412.

6. Ye, L.; Keogh, E. Time series shapelets: A new primitive for data mining. In Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Paris, France, 28 June–1 July 2009; pp. 947–956.

7. Mueen, A.; Keogh, E.; Young, N. Logical-shapelets: An expressive primitive for time series classification. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 21–24 August 2011; pp. 1154–1162.

8. Rakthanmanon, T.; Keogh, E. Fast shapelets: A scalable algorithm for discovering time series shapelets. In Proceedings of the 2013 SIAM International Conference on Data Mining, Austin, TX, USA, 2–4 May 2013; pp. 668–676.

9. Lin, J.; Keogh, E.; Lonardi, S.; Chiu, B. A symbolic representation of time series, with implications for streaming algorithms. In Proceedings of the 8th ACM SIGMOD Workshop on Research Issues in Data Mining and Knowledge Discovery, San Diego, CA, USA, 13 June 2003; pp. 2–11.

10. Lines, J.; Davis, L.M.; Hills, J.; Bagnall, A. A shapelet transform for time series classification. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; pp. 289–297.

11. Hills, J.; Lines, J.; Baranauskas, E.; Mapp, J.; Bagnall, A. Classification of time series by shapelet transformation. Data Min. Knowl. Discov. 2014, 28, 851–881.

12. Bostrom, A.; Bagnall, A. Binary shapelet transform for multiclass time series classification. In Transactions on Large-Scale Data- and Knowledge-Centered Systems XXXII: Special Issue on Big Data Analytics and Knowledge Discovery; Springer: Heidelberg, Germany, 2017; pp. 24–46.

13. Bagnall, A.; Lines, J.; Bostrom, A.; Large, J.; Keogh, E. The great time series classification bake off: A review and experimental evaluation of recent algorithmic advances. Data Min. Knowl. Discov. 2017, 31, 606–660.

14. Grabocka, J.; Wistuba, M.; Schmidt-Thieme, L. Scalable discovery of time-series shapelets. arXiv 2015, arXiv:1503.03238.

15. Wistuba, M.; Grabocka, J.; Schmidt-Thieme, L. Ultra-fast shapelets for time series classification. arXiv 2015, arXiv:1503.05018.

16. Hou, L.; Kwok, J.T.; Zurada, J.M. Efficient learning of timeseries shapelets. In Proceedings of the 30th AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 1209–1215.

17. Wang, Y.; Emonet, R.; Fromont, E.; Malinowski, S.; Menager, E.; Mosser, L.; Tavenard, R. Learning interpretable shapelets for time series classification through adversarial regularization. arXiv 2019, arXiv:1906.00917.

18. Guilleme, M.; Malinowski, S.; Tavenard, R.; Renard, X. Localized Random Shapelets. In Proceedings of the 2nd International Workshop on Advanced Analysis and Learning on Temporal Data, Würzburg, Germany, 16–20 September 2019; pp. 85–97.

19. Raychaudhuri, D.S.; Grabocka, J.; Schmidt-Thieme, L. Channel masking for multivariate time series shapelets. arXiv 2017, arXiv:1711.00812.

20. Medico, R.; Ruyssinck, J.; Deschrijver, D.; Dhaene, T. Learning Multivariate Shapelets with Multi-Layer Neural Networks. Advanced Course on Data Science & Machine Learning. 2018. Available online: https://biblio.ugent.be/publication/8569397/file/8569684 (accessed on 1 February 2021).

21. Bostrom, A.; Bagnall, A. A shapelet transform for multivariate time series classification. arXiv 2017, arXiv:1712.06428.

22. Mitchell, M. An Introduction to Genetic Algorithms; MIT Press: Cambridge, MA, USA, 1998.

23. Julstrom, B.A. Seeding the population: Improved performance in a genetic algorithm for the rectilinear steiner problem. In Proceedings of the 1994 ACM Symposium on Applied Computing, Phoenix, AZ, USA, 6–8 March 1994; pp. 222–226.

24. Sheblé, G.B.; Brittig, K. Refined genetic algorithm-economic dispatch example. IEEE Trans. Power Syst. 1995, 10, 117–124.

25. Berndt, D.J.; Clifford, J. Using dynamic time warping to find patterns in time series. In Proceedings of the 3rd International Conference on Knowledge Discovery and Data Mining, Newport Beach, CA, USA, 14–17 August 1997; Volume 10, pp. 359–370.

26. Large, J.; Lines, J.; Bagnall, A. The heterogeneous ensembles of standard classification algorithms (HESCA): The whole is greater than the sum of its parts. arXiv 2017, arXiv:1710.09220.

27. Dietterich, T.G. Ensemble methods in machine learning. In Proceedings of the International Workshop on Multiple Classifier Systems, Cagliari, Italy, 21–23 June 2000; pp. 1–15.

28. Friedman, M. The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J. Am. Stat. Assoc. 1937, 32, 675–701.

29. Holm, S. A simple sequentially rejective multiple test procedure. Scand. J. Stat. 1979, 6, 65–70.

30. Benavoli, A.; Corani, G.; Mangili, F. Should we really use post-hoc tests based on mean-ranks? J. Mach. Learn. Res. 2016, 17, 1–10.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).