Abstract

Position recognition is a core technology for driving a robot because operating environments differ and situations change rapidly. This study proposes a strategy for estimating the position of a camera mounted on a mobile robot. The proposed strategy comprises three methods. The first is to directly acquire information (e.g., identification (ID), marker size, and marker type) from the marker to recognize the position of the camera relative to the marker. The advantage of this marker system is that a combination of markers of different sizes or having different information may be used without having to update the internal parameters of the robot system even if the user frequently changes or adds to the marker’s identification information. Second, two novel markers, a nested marker and a hierarchical marker, are proposed for the real environments in which robots operate. These markers improve the ability of the camera to recognize markers while the camera is moving on the mobile robot. The nested marker is effective for robots like drones, which land and take off vertically with respect to the ground. The hierarchical marker is suitable for robots that move horizontally with respect to the ground, such as wheeled mobile robots. The third method is the calculation of the position of an added or moved marker based on a reference marker. This method automatically updates the positions of markers when the driving area of the mobile robot changes. Finally, the proposed methods were validated through experiments.

1. Introduction

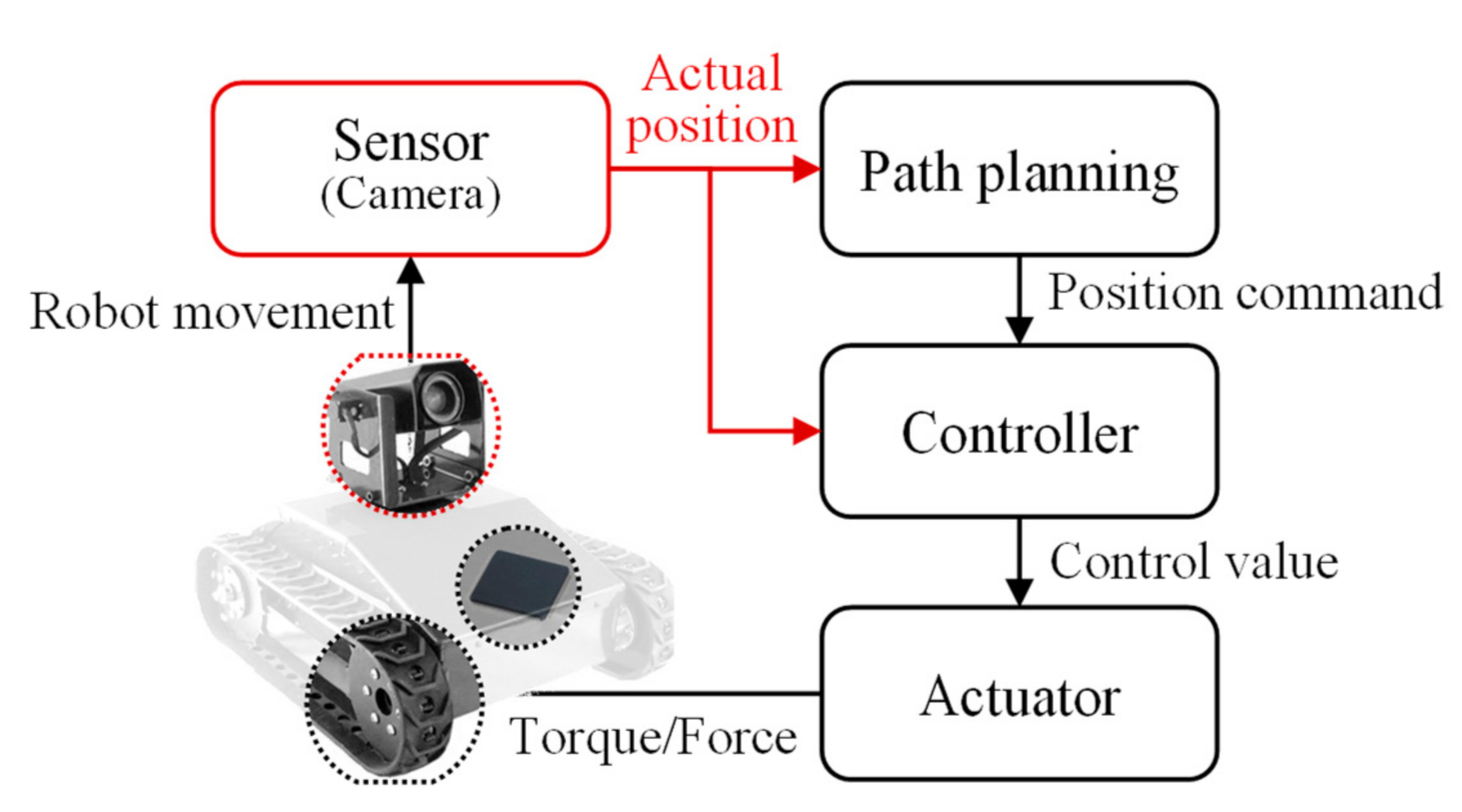

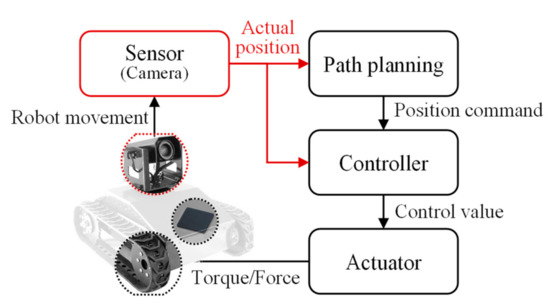

Mobile robots are widely used in various fields such as entertainment, medicine, rescue, the military, space, and agriculture [1]. Therefore, the technological development and performance improvement of mobile robots have been investigated in numerous aspects. In particular, studies are being actively conducted on the path planning of mobile robots in a given environment and on the control required to accurately move a robot along a planned path [2,3,4,5,6,7,8]. Figure 1 shows a simple driving mechanism for a mobile robot. A path is appropriately planned based on the surrounding environment. The location of the mobile robot must be accurately recognized to correctly perform a given command. Its position and direction information is identified through position recognition, the core technology of mobile robots [9]. Position recognition, broadly speaking, can be either outdoor or indoor, where the former is mainly studied using the global positioning system (GPS) [10]. However, it is difficult to apply the GPS to indoor position recognition because it requires precise location information [11], so vision-based position recognition technology is used. This technology covers position recognition based on the features of the environment and position recognition based on artificial markers [12]. Position recognition that uses features does not require additional work to be applied to the service environment; however, a complex algorithm is required to recognize features [13]. In addition, this method is difficult to apply to environments composed of monotonous structures, such as corridors and factory walls, that have a small number of features.

Figure 1. Driving mechanism of the mobile robot.

To solve this problem, research is being actively conducted on estimating the position of a camera using artificial markers such as augmented reality (AR) or quick response (QR) markers [14,15,16,17,18]. These methods achieve relatively fast and accurate position recognition with a low central processing unit (CPU) load because artificial markers are recognized through their patterns even in a monotonous environment [14,19,20,21]. In addition, it is possible to evaluate the exact size of a marker by recognizing the four corners of the marker. Marker-based camera position recognition is used not only to recognize the position of a mobile robot but also to improve robot functions such as object position recognition, robot arm control, and human–robot interaction. However, the previous methods of estimating the position of a camera using markers have certain problems. First, the correct size and position of markers must be predefined. Therefore, this information must be known beforehand based on data or must be calculated using an additional algorithm. Furthermore, when an indoor environment changes or the driving region of the robot is expanded, a marker must be moved or added to a predefined, precise position. This becomes more difficult as the number of markers increases and the region becomes wider. In addition, the data-based information in the position estimation algorithm must be updated when the size and position of a marker change. Second, when the position of the camera changes because of the movement of the robot, the range over which a marker can be recognized is restricted by the size of the marker and the distance between the marker and camera. This limits the movement range of the mobile robot.

In this paper, a strategy for estimating the position of a camera mounted on a mobile robot is developed based on proposed markers. The proposed strategy includes three methods to overcome the abovementioned problems.

First, the proposed markers have information such as identification (ID), marker size, and marker type. Therefore, to recognize the position of the camera, information can be obtained directly from the marker. The advantage of this marker system is that a combination of markers of different size or having different information may be used without needing to update the internal parameters of the robot system. This is true even if the user frequently changes or adds to the identification information of the marker.

Second, the two markers, a nested marker and a hierarchical marker, are proposed to increase the recognition distance of the markers considering the movement of the camera due to the mobile robot. The nested marker is used when the camera moves vertically along the center of the marker like a drone. The hierarchical marker is used when the center of the marker and the height of the camera do not match such as when a wheeled robot moves horizontally on a floor. These markers contain marker size information based on internal patterns.

Third, a new marker is added, or the position of an existing marker is changed, as the activity area of the robot changes or becomes wider. A method of predicting the position of the added or moved marker based on a known marker is proposed. Therefore, as the position of the marker is automatically updated, the process of accurately attaching the marker to the predefined position and updating the position information in the algorithm is not required.

Finally, the proposed methods are verified via experiments. First, the position of the camera is calculated for markers of various sizes using an existing method and the proposed method and then compared. The proposed method accurately estimates the camera position regardless of the marker size. Next, the method of calculating the position of the markers is verified using 12 markers attached at regular intervals along a corridor. Their positions are calculated using the proposed method and compared with the actual measured positions; the results show reasonable agreement. In addition, it was demonstrated that the location information of the markers is updated when the location of an existing marker is changed, or a new marker is added. Last, the recognition distances of three different markers and the proposed markers are compared. It was confirmed that the recognition distance for the proposed markers is more than that for the single-size markers.

2. Proposed Strategy for Camera Position Recognition

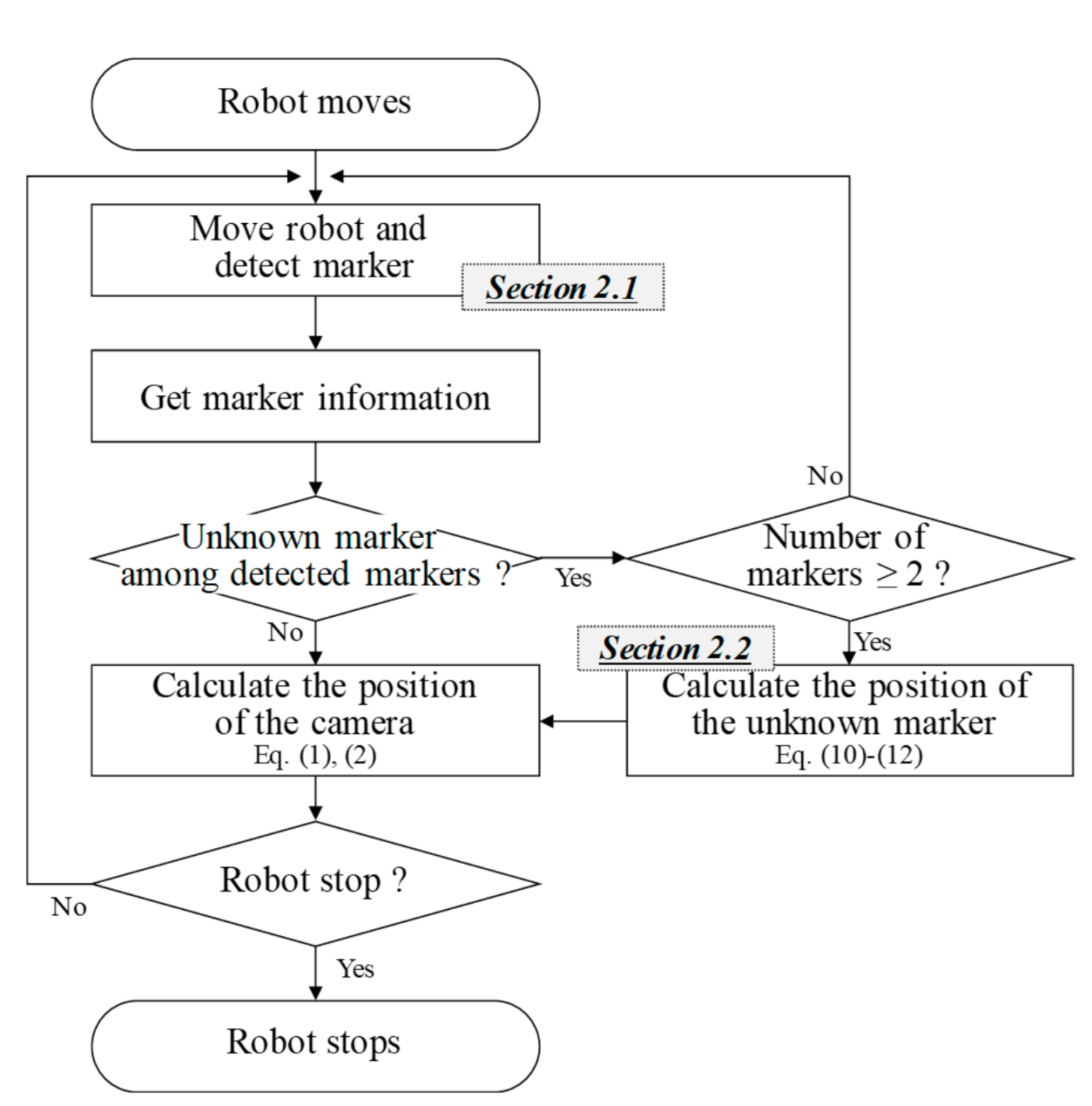

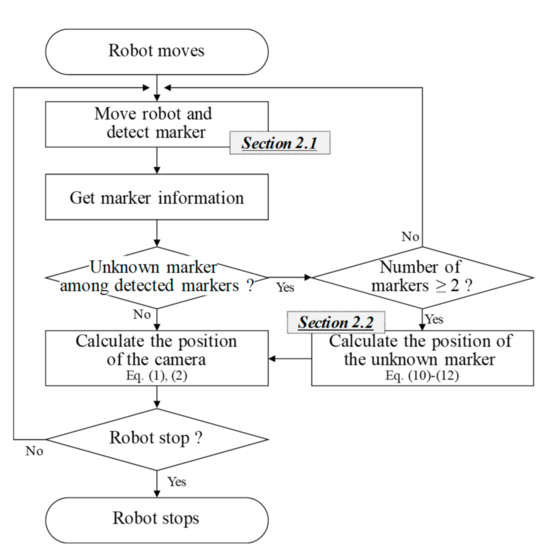

This paper proposes a method for estimating the position of a camera based on markers containing size information. The proposed method considers a situation in which new markers are added and existing markers are repositioned as the driving area of the robot expands or the environment changes. Figure 2 shows the flow chart of the proposed method.

Figure 2. Flow chart of the proposed method.

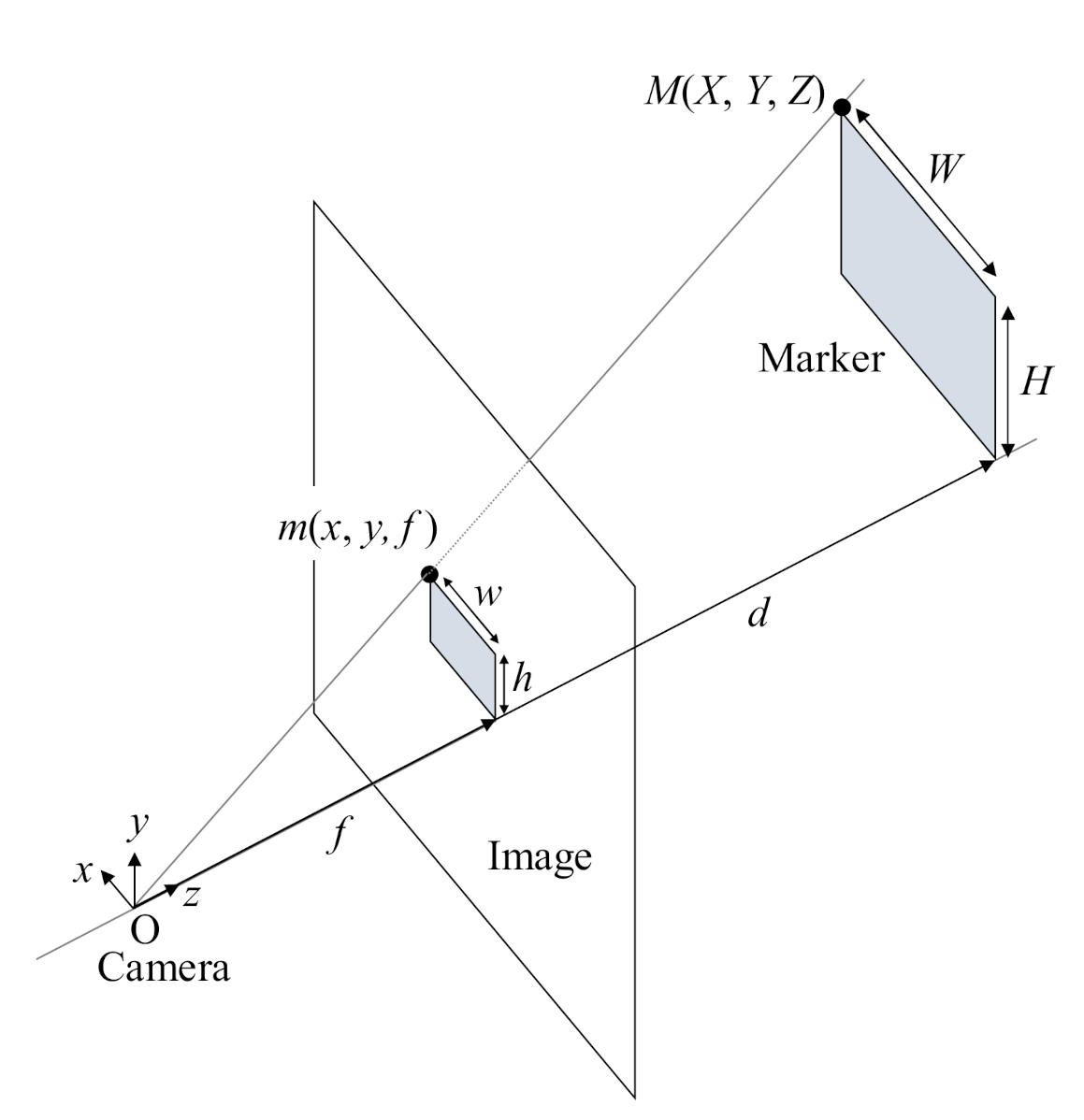

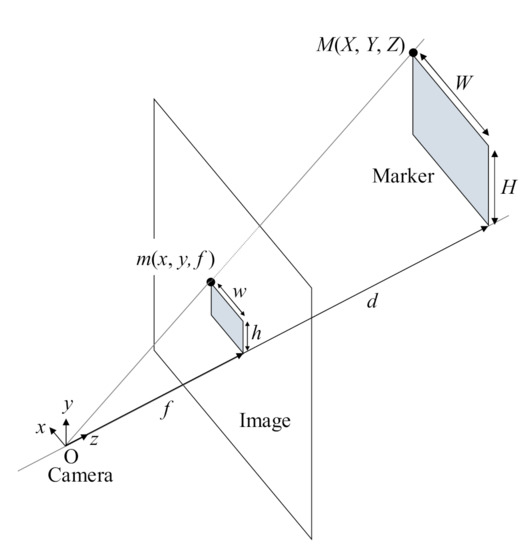

First, as the robot moves, markers are detected through the camera. A region of interest (ROI) is set for recognizing markers within the image obtained using the camera. The marker image is obtained by extracting the corners of the marker in the ROI. This image is transferred to an information extraction module, and the ROI is moved if the marker is not recognized. Next, the information about the marker contained in the image is extracted. This information includes the type, length of one side, and identification (ID). The proposed marker has a unique ID because it consists of multiple markers for increasing the recognition distance of the camera. The relationship between a square marker and the camera position is derived using a mathematical method [22]. Figure 3 shows the relationship between the actual position (M) of the marker in three-dimensional (3-D) space, the image of the marker position (m) projected in two-dimensional (2-D) space, and the position of the camera (O) [22].
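The ROI step described above can be sketched as a simple search loop. This is a minimal illustration, not the authors' implementation: the raster scan order, the `step` parameter, and the `detect` callback (which stands in for corner extraction plus the information extraction module) are all assumptions introduced here.

```python
from typing import Callable, Iterator, Optional, Tuple

Roi = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def sliding_rois(img_w: int, img_h: int, roi_w: int, roi_h: int,
                 step: int) -> Iterator[Roi]:
    """Yield ROI windows left to right, top to bottom, fully inside the image."""
    for y in range(0, img_h - roi_h + 1, step):
        for x in range(0, img_w - roi_w + 1, step):
            yield (x, y, roi_w, roi_h)


def find_marker(img_w: int, img_h: int, roi_w: int, roi_h: int, step: int,
                detect: Callable[[Roi], Optional[dict]]):
    """Move the ROI until the (hypothetical) detector returns marker info.

    Returns (roi, info) for the first ROI in which a marker is recognized,
    or None if no ROI contains a recognizable marker.
    """
    for roi in sliding_rois(img_w, img_h, roi_w, roi_h, step):
        info = detect(roi)
        if info is not None:
            return roi, info
    return None


def fake_detect(roi: Roi) -> Optional[dict]:
    """Stand-in detector: 'recognizes' a marker centered at pixel (500, 300)."""
    x, y, w, h = roi
    if x <= 500 < x + w and y <= 300 < y + h:
        # Fields mirror the information named in the text; values are made up.
        return {"id": 7, "type": "nested", "side_length": 200}
    return None
```

Calling `find_marker(640, 480, 320, 240, 160, fake_detect)` moves the window across the frame until the stand-in detector reports a marker. A real system would replace `fake_detect` with an actual marker decoder.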

Figure 3. Relationship between camera, image, and marker.

The coordinates of the marker in the projected image are expressed as follows:

In addition, d is calculated using Equation (2).

The distance between the camera and the marker is d; the focal length of the camera is f; the width and height of the actual marker are W and H, respectively; and the width and height of the marker in the projected image are w and h, respectively.
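Under a standard pinhole camera model, and assuming the marker plane is parallel to the image plane, the quantities defined above are related by similar triangles. The following is a sketch consistent with those definitions; it is not necessarily the exact form of Equations (1) and (2).

```latex
% Projected marker size from similar triangles (pinhole model, fronto-parallel marker)
w = \frac{f\,W}{d}, \qquad h = \frac{f\,H}{d}

% Solving for the camera-to-marker distance
d = \frac{f\,W}{w} = \frac{f\,H}{h}
```

Here $f$, $w$, and $h$ must share the same unit (for example, pixels), and $d$ is then obtained in the unit of $W$ and $H$.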

The proposed marker is described in Section 2.1. When unknown markers without predefined location information are detected, their position is calculated. As the relative positions of the unknown markers are calculated based on the markers with predefined position information, the relative position can be calculated only if the number of recognized markers is more than two. A representative marker is selected from among the detected markers and the relative positions of the unknown markers are calculated based on the representative marker. Finally, the global positions of all markers are estimated using the global position of the representative marker and the calculated relative positions of the markers in a local coordinate system. The proposed method is based on the reference marker, and it assumes that there is no dead zone with no detected marker. The method for calculating the position of unknown markers is described in Section 2.2. After the position information of the detected markers is updated, the position of the camera is calculated using this information. First, the size of the markers in the image is calculated based on the resolution of the camera and the focal length of the camera for each pixel. Then, the camera position is evaluated using the calculated size of the marker in the image, the ID, and the actual size and type of the markers. If the marker information required for estimating the camera position is included inside a marker, it is possible to use a marker of the required size according to various environments without modifying the algorithm. In addition, it becomes easier to add and move markers when the driving area of the robot changes.

2.1. Proposed Markers Considering the Movement of the Camera

As the camera is mounted on the robot, the position of the camera continuously changes as the robot moves. Accordingly, the size of a marker in the projected image changes with the distance between the camera and marker. Table 1 shows the images of a marker with a size of 200 × 200 pixels and a marker with a size of 600 × 600 pixels captured by the camera at different distances from the markers. The first marker (200 × 200 pixels) is projected more clearly as the distance between the camera and marker decreases. However, the size of the marker in the image decreases as the distance increases. In contrast, the second marker (600 × 600 pixels) can be recognized even at a distance of 150 cm. However, only a part of the marker is visible at a distance of 10 cm, making it impossible to recognize.
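The trade-off described above can be illustrated numerically. The sketch below assumes a pinhole model and uses hypothetical values throughout: a focal length of 600 px, an image height of 480 px, a minimum decodable marker size of 40 px, and physical side lengths of 5 cm and 15 cm for the small and large markers. None of these values come from the experiments.

```python
def projected_side_px(focal_px: float, side_cm: float, distance_cm: float) -> float:
    """Side length of the marker in the image under a pinhole model."""
    return focal_px * side_cm / distance_cm


def is_recognizable(focal_px: float, side_cm: float, distance_cm: float,
                    image_h_px: float, min_side_px: float) -> bool:
    """A marker is treated as recognizable when it fits inside the image
    and is still large enough to decode (both thresholds are assumptions)."""
    side_px = projected_side_px(focal_px, side_cm, distance_cm)
    return min_side_px <= side_px <= image_h_px


# Hypothetical camera and marker parameters (not taken from the paper).
FOCAL_PX = 600.0
IMAGE_H_PX = 480.0
MIN_SIDE_PX = 40.0
SMALL_CM, LARGE_CM = 5.0, 15.0

if __name__ == "__main__":
    for d in (10.0, 30.0, 50.0, 100.0, 150.0):
        small = is_recognizable(FOCAL_PX, SMALL_CM, d, IMAGE_H_PX, MIN_SIDE_PX)
        large = is_recognizable(FOCAL_PX, LARGE_CM, d, IMAGE_H_PX, MIN_SIDE_PX)
        print(f"d = {d:5.0f} cm  small: {small}  large: {large}")
```

With these assumed numbers, the small marker is readable at 10 cm but too small at 150 cm, while the large marker overflows the image at 10 cm but is readable at 150 cm, which mirrors the behavior reported for the two single-size markers.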

Table 1. Images of markers at different distances.

This paper proposes a method that uses markers of different sizes to solve the abovementioned problem and increase the recognition distance of markers.

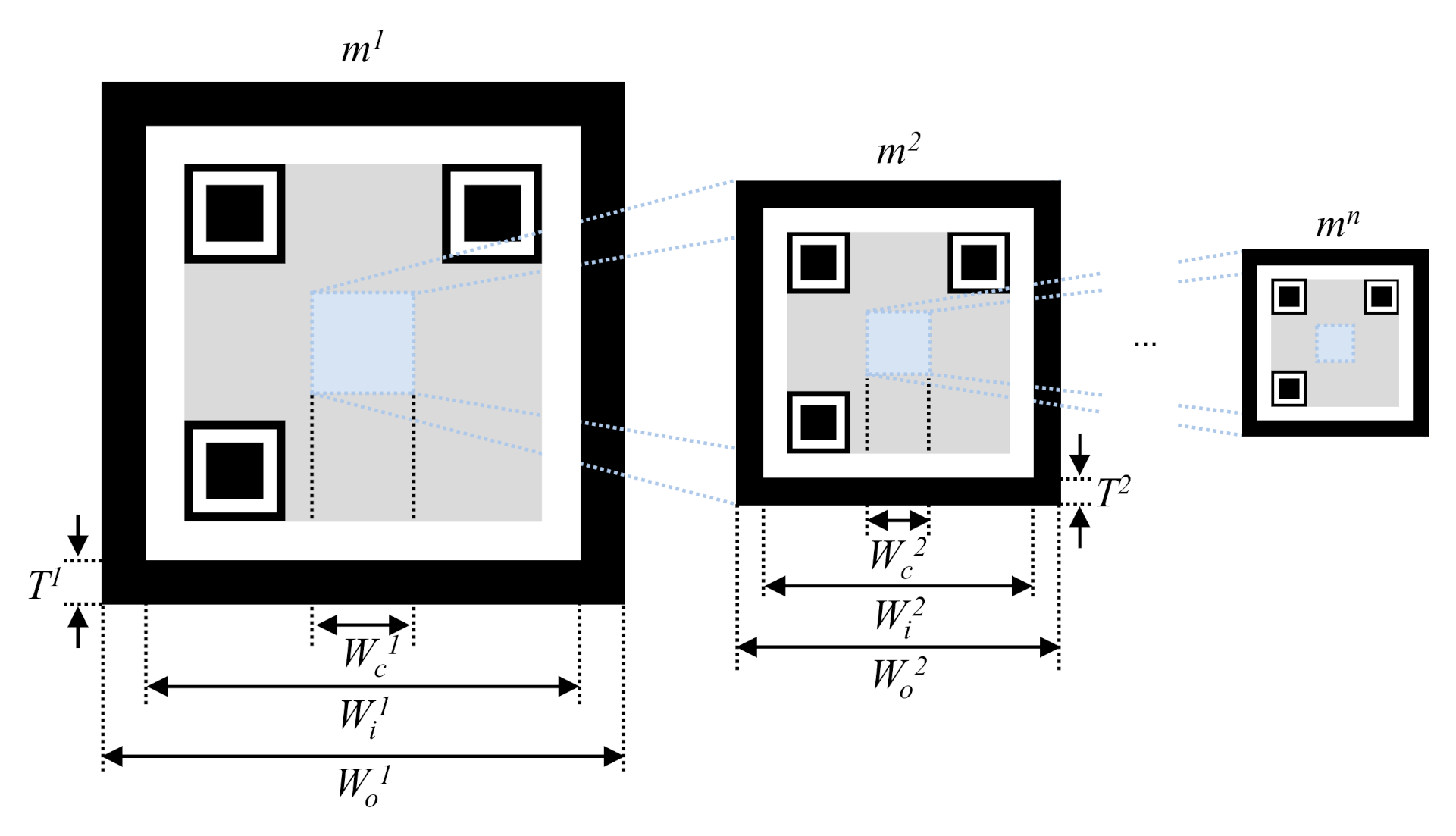

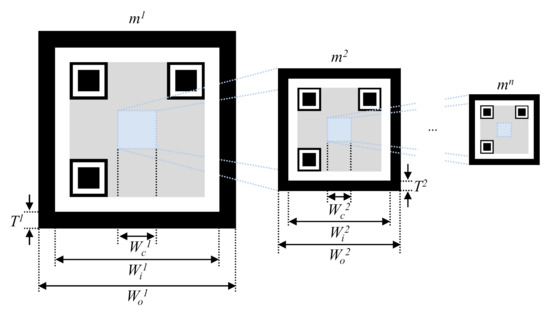

2.1.1. Nested Marker

A nested marker is one of the markers applied to the proposed method for camera position recognition. Figure 4 shows the proposed nested marker. It is composed of n nested markers. All markers have a black border to make them easier to find within an image. The kth marker has a side length and a border thickness Tk. A white border is inserted within the black border to make the marker easier to recognize [23]. The thicknesses of the white and black borders are the same. Therefore, the length of one side of the white border is calculated as follows:
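One reading of the border geometry is sketched below. The symbol $\ell_k$ for the side length of the kth marker is a placeholder introduced here, and the layout is an assumption: the white border lies immediately inside the black border, and both have thickness $T_k$ on every side.

```latex
% Outer side of the white border: remove the black border on both sides
\ell_k^{\text{white}} = \ell_k - 2\,T_k

% Side of the region enclosed by the white border: remove the white border as well
\ell_k^{\text{inner}} = \ell_k - 4\,T_k
```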

Figure 4. Structure of the proposed nested marker.

As shown in Figure 4, lower markers are nested at the center of upper markers. The nested area excludes the area with the marker pattern, and the width of the nested area is expressed as follows:

where β represents the ratio of the sizes of the overlapping upper and lower markers.

β is set to be 20% or lower, and the total number of markers (n) is determined according to the recognition capability of the camera.

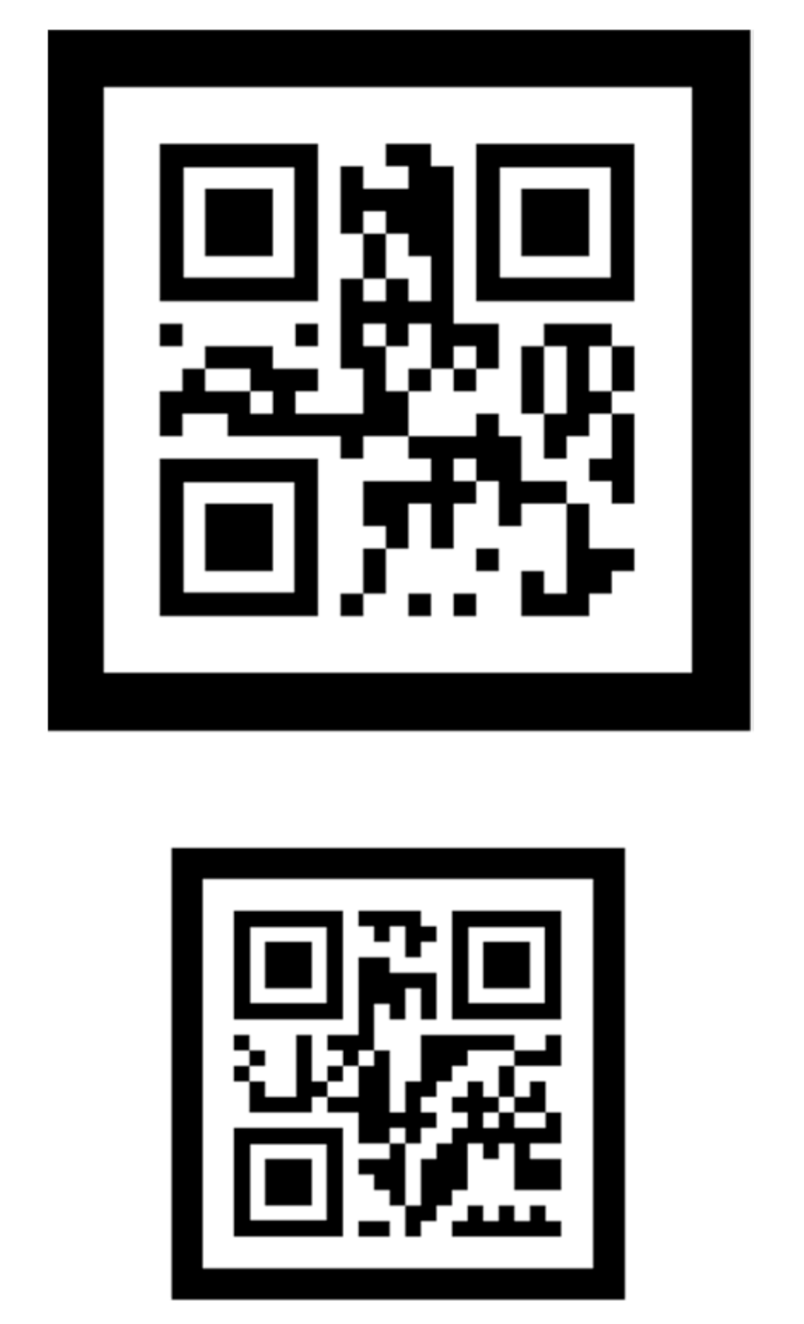

Figure 5 shows a 2-D nested marker. Each marker includes information about its size.

Figure 5. 2-D nested marker.

As the proposed marker is nested based on the center of markers, the recognition distance of the marker can be increased. Therefore, the proposed nested marker is suitable for flying robots like drones that take off and land vertically. This is because the center of the marker can be matched with the camera facing the ground.

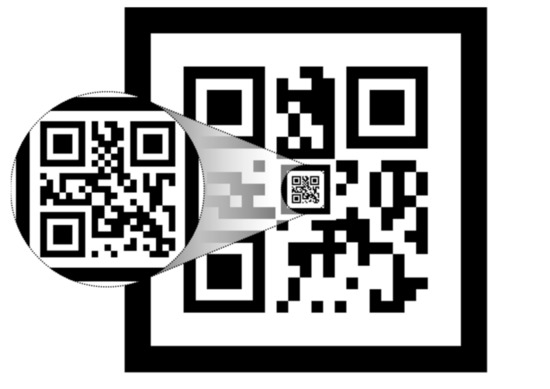

2.1.2. Hierarchical Marker

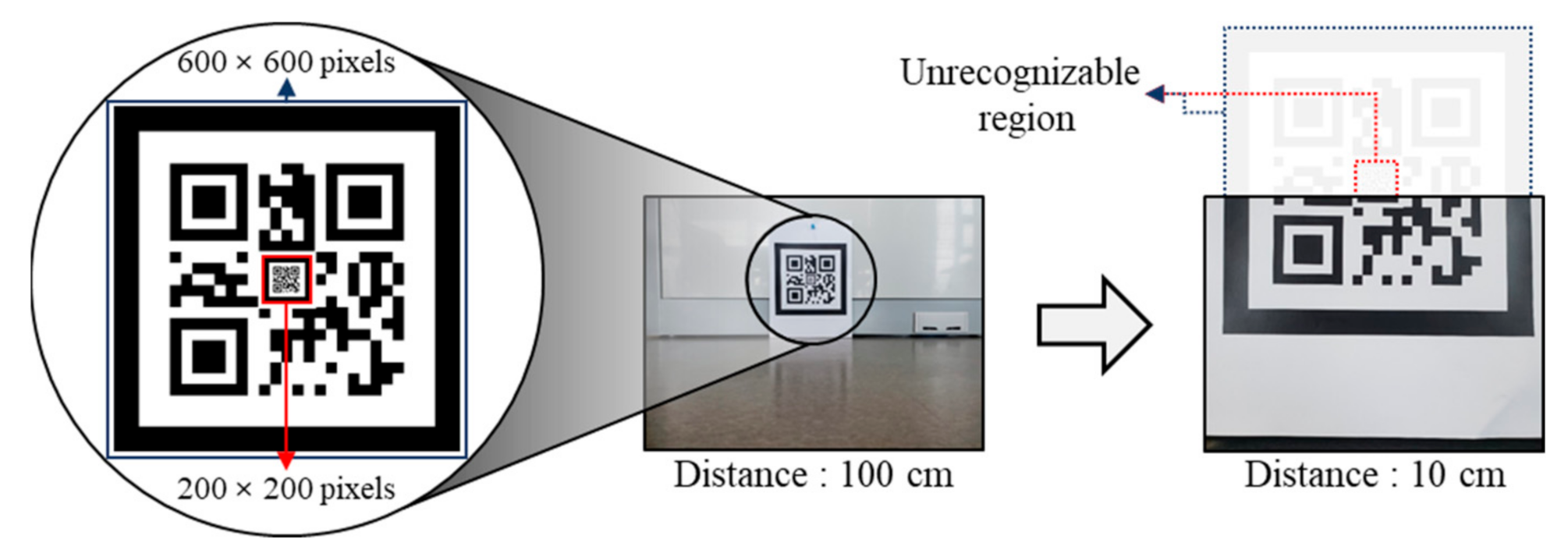

When the camera is mounted on a wheeled mobile robot that moves horizontally with respect to the ground, the image captured by the camera contains only the lower end of the larger marker when the distance between the robot and marker is short. As a result, the markers nested inside the larger marker are not visible. Figure 6 illustrates this problem.

Figure 6. Problem in using nested marker with mobile robot.

The Intel RealSense D435 camera is used. A marker with a size of 200 × 200 pixels is nested inside a marker with a size of 600 × 600 pixels. When the distance between the camera and the nested marker is 10 cm, the marker is not recognized because it is out of the measuring area of the camera.

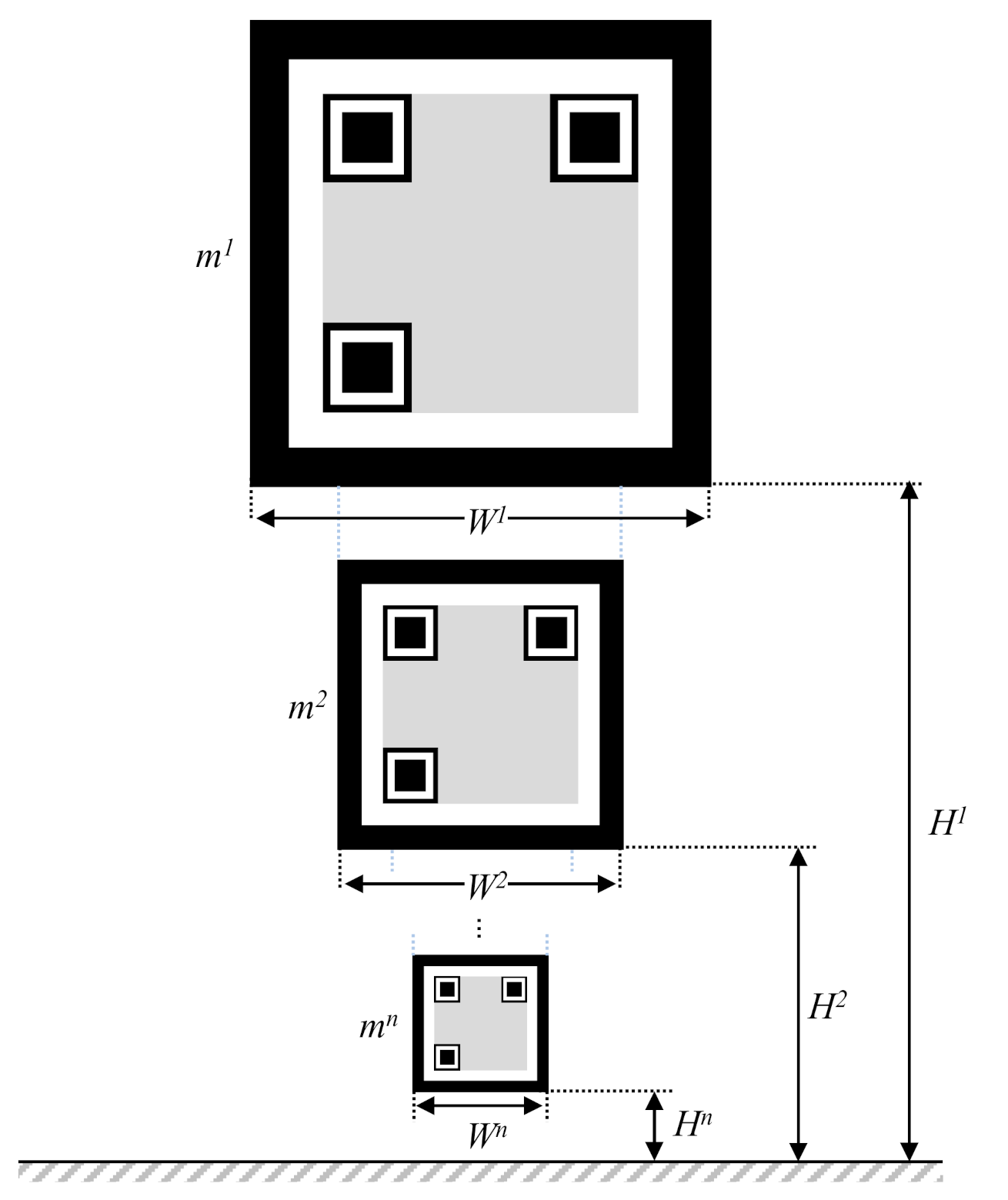

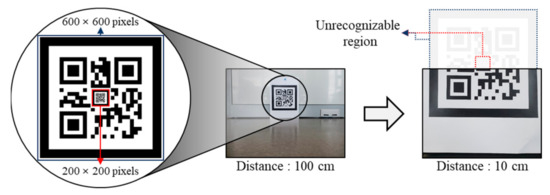

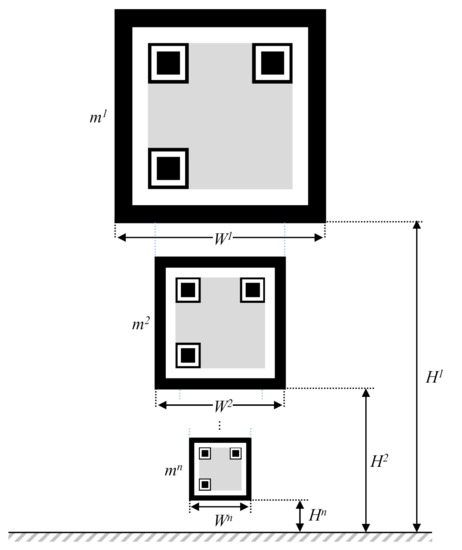

This problem is related to the height and angle of view of the camera. A hierarchical marker is proposed to overcome this problem. Multiple markers are hierarchically stacked in this marker, as shown in Figure 7. The height and width of the kth marker are Hk and Wk, respectively.

Figure 7. Structure of the proposed hierarchical marker.

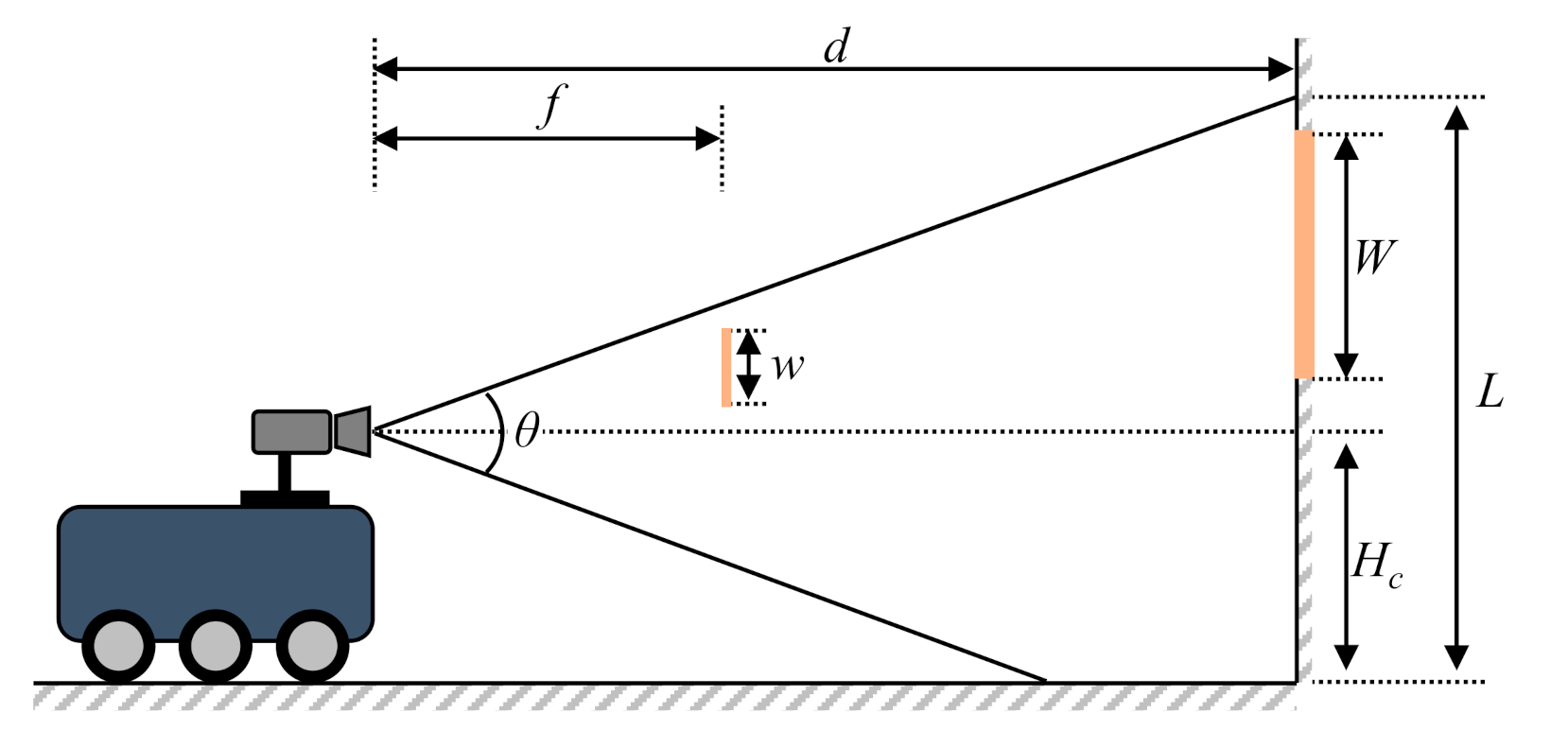

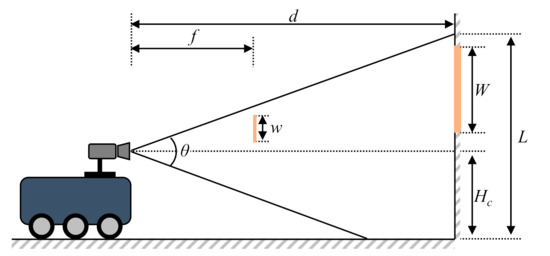

Figure 8 shows the relationship between the camera, marker, and marker image recognized by the camera. This relationship is used to derive the distance (d) from the center of the camera to the marker and the height (L) that the camera can measure, as follows:

where f is the focal length of the camera and θ is the maximum angle of view of the camera.

Figure 8. Relationship between the camera, the marker, and the marker image.

These values are determined based on the specifications of the camera.
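The two quantities named above can be sketched with standard pinhole geometry. The functions below are an illustration under assumptions, not the paper's equations: θ is treated as the full vertical angle of view, the optical axis is assumed perpendicular to the wall holding the marker, and the focal length is expressed in pixels.

```python
import math


def visible_height(distance: float, fov_deg: float) -> float:
    """Height L of the wall region covered by the camera at a given distance,
    for a full vertical angle of view `fov_deg` (assumed interpretation of theta)."""
    return 2.0 * distance * math.tan(math.radians(fov_deg) / 2.0)


def distance_from_image_height(focal_px: float, marker_height: float,
                               image_height_px: float) -> float:
    """Distance d to a marker of known physical height from its height in the image."""
    return focal_px * marker_height / image_height_px


if __name__ == "__main__":
    # Hypothetical values: 90 degree angle of view, camera 10 cm from the wall.
    print(visible_height(10.0, 90.0))
    # Hypothetical values: f = 600 px, marker 20 cm tall, 120 px tall in the image.
    print(distance_from_image_height(600.0, 20.0, 120.0))
```

The first function shows why a close camera sees only a narrow band of the wall. The second uses the marker height read from the marker itself to recover the distance.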

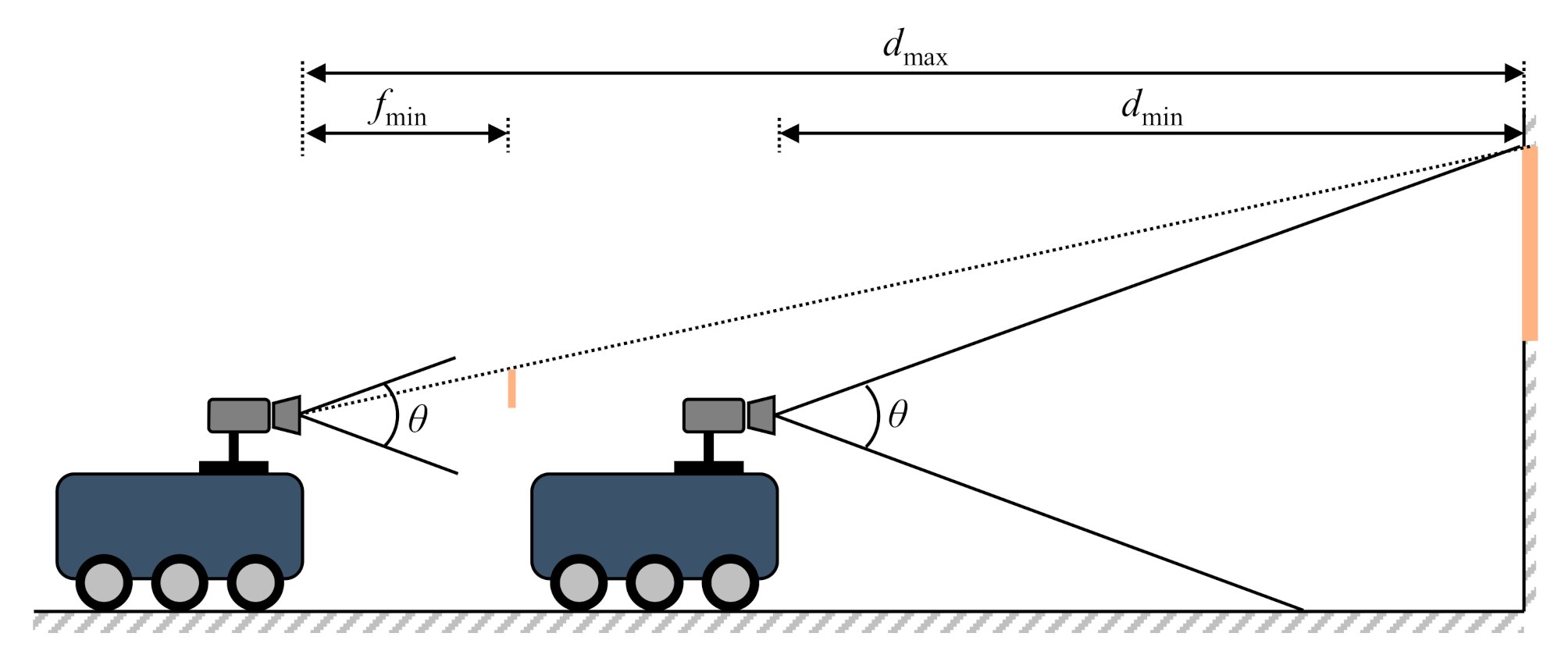

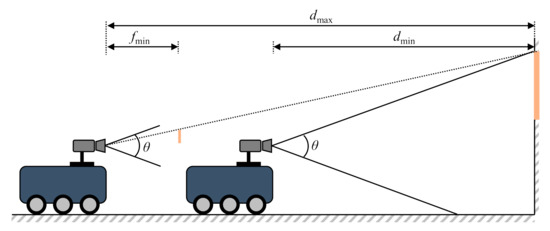

The abovementioned equations are used to calculate the maximum and minimum distances for recognizing a single marker, as shown in Figure 9.

where fmin is the minimum focal length of the camera.

Figure 9. Maximum and minimum recognition distances.

Based on the previously calculated maximum and minimum recognition distances for a single marker, the condition for recognizing a marker in all sections as the robot moves is determined as follows:

Figure 10 shows the proposed 2-D hierarchical marker.

Figure 10. 2-D hierarchical marker.
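The idea of recognizing a marker in all sections can be sketched as an interval-overlap check: each marker has a nearest and farthest recognition distance, and the range of each marker should reach the start of the range of the next larger one. The formulas below are simplifying assumptions made for illustration. The minimum distance is where a marker centered on the optical axis just fits in the view, and the maximum distance is where its image shrinks to a hypothetical minimum decodable size `min_px`. The camera height offset that motivates the hierarchical layout is ignored here.

```python
import math
from typing import List, Tuple


def recognition_range(marker_h: float, focal_px: float, fov_deg: float,
                      min_px: float) -> Tuple[float, float]:
    """Return (d_min, d_max) for one marker under the stated assumptions."""
    # Nearest distance: the whole marker height just fits in the angle of view.
    d_min = marker_h / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
    # Farthest distance: the marker image is still at least min_px tall.
    d_max = focal_px * marker_h / min_px
    return d_min, d_max


def covers_without_gap(marker_heights: List[float], focal_px: float,
                       fov_deg: float, min_px: float) -> bool:
    """True if consecutive markers (sorted by size) have overlapping ranges."""
    ranges = [recognition_range(h, focal_px, fov_deg, min_px)
              for h in sorted(marker_heights)]
    return all(ranges[i][1] >= ranges[i + 1][0]
               for i in range(len(ranges) - 1))


if __name__ == "__main__":
    # Hypothetical marker heights in cm and camera parameters.
    print(covers_without_gap([5.0, 10.0, 15.0], 600.0, 90.0, 40.0))
```

When the check fails, an intermediate marker size would be needed so that the robot always has at least one recognizable marker as it approaches.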

2.1.3. Characteristics of the Proposed Markers

The proposed markers have information such as identification (ID), marker size, and marker type. Therefore, to recognize the position of the camera, the information of the marker can be obtained directly from it. The advantages of this marker system are as follows:

- (1) A mixture of markers of different sizes or containing different information may be used.

- (2) The internal parameters of the robot system do not need to be updated even if the user frequently changes or adds to the identification information of the marker.

Table 2 shows the characteristics of the existing markers used in camera positioning systems. The proposed markers can hold a larger amount of information than the others, so the position of the camera can be recognized from the information carried by the markers themselves. Because the robot system does not need to store this information, markers can be added at any time without changing the internal parameters of the robot system.

Table 2. Characteristics of markers used in camera positioning systems.

2.2. Estimating the Position of Unknown Markers

This section explains the calculation of the position of a marker with unknown position information among detected markers. The proposed method determines the global positions of all markers by calculating the relative positions between them based on the markers defined in the global coordinate system. Markers are defined as follows:

- Reference marker: A marker whose position is predefined or calculated in the global coordinate system.

- Representative marker: A marker for calculating the relative positions of markers in the local coordinate system.

- Unknown marker: A marker whose position information is unknown because it has been newly added or moved.

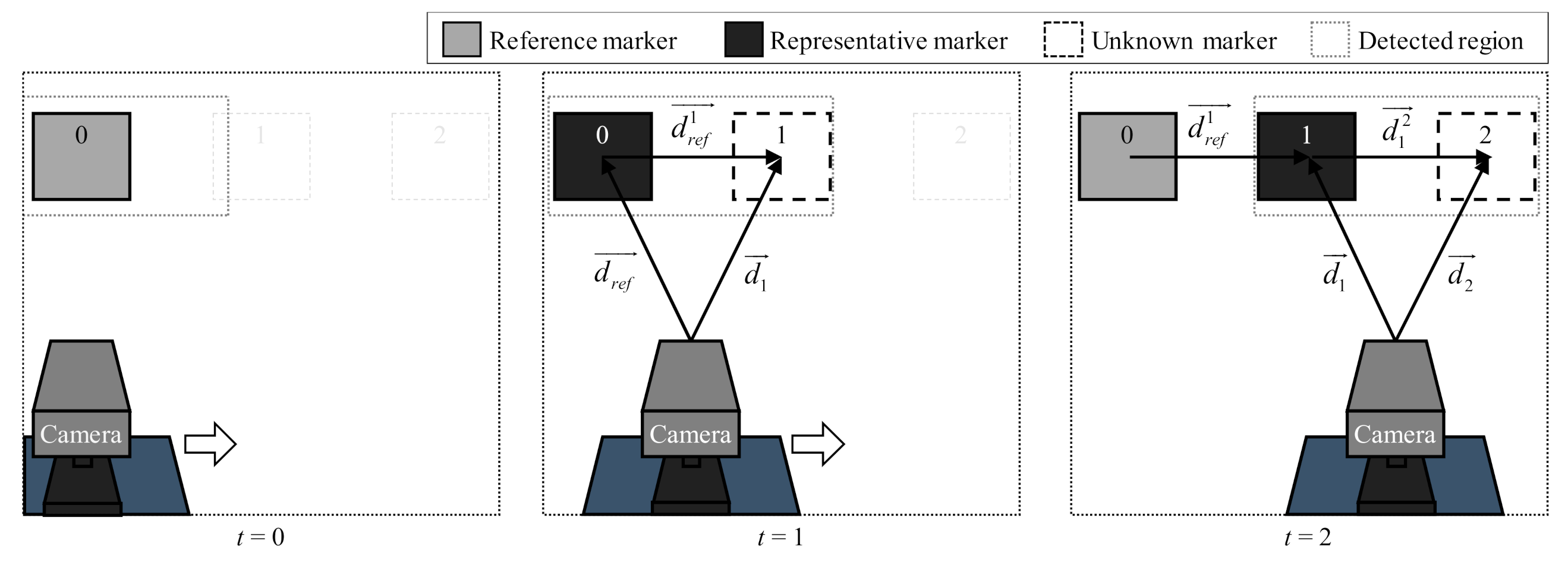

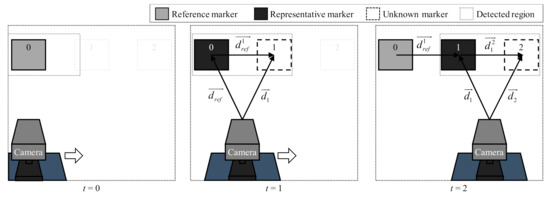

When two or more markers are recognized by the camera, the relative position of an unknown marker is calculated based on a representative marker. Among the recognized markers, a representative marker is determined and used to calculate a relative position vector. Figure 11 shows the process of calculating the marker position as the camera moves.

Figure 11. Process of calculating unknown marker position.

When t = 0, the camera recognizes only the reference marker. Therefore, no calculation is performed because there are fewer than two recognized markers. A new marker (marker 1) is recognized at t = 1. The reference marker is then selected as the representative marker to calculate the relative position of marker 1, and the relative position between the two markers and the absolute position of marker 1 are calculated.

At t = 2, marker 1 is selected as a representative marker because its position information was calculated in the previous step. Then, the relative and absolute positions of marker 2 are calculated in a similar manner.

The position information of unknown markers is updated as this process is repeated.

This method can be used to predict the position of markers not only when markers are moved or added based on environmental changes but also when markers are added to inaccurate positions.
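The chaining from reference marker to representative marker to unknown marker can be sketched as follows. This is a simplified 2-D illustration under assumptions that are not stated in the text: each frame provides the position of every detected marker relative to the camera, expressed in axes aligned with the global coordinate system (so no rotation is needed), and the first detected marker with a known position is chosen as the representative marker. Re-estimating a marker that has been moved is omitted.

```python
from typing import Dict, List, Tuple

Vec = Tuple[float, float]


def update_marker_positions(known: Dict[int, Vec],
                            frames: List[Dict[int, Vec]]) -> Dict[int, Vec]:
    """Propagate global positions from known markers to newly seen ones.

    known  : marker id -> global position (the reference markers).
    frames : one dict per time step, marker id -> position relative to the
             camera, in axes aligned with the global frame (an assumption).
    """
    positions = dict(known)
    for seen in frames:
        if len(seen) < 2:
            continue  # fewer than two markers: nothing can be calculated
        candidates = [m for m in seen if m in positions]
        if not candidates:
            continue  # no marker with a known position in this frame
        rep = candidates[0]  # representative marker (hypothetical selection rule)
        for marker_id, rel_cam in seen.items():
            if marker_id in positions:
                continue
            # Relative position vector from the representative marker.
            rel = (rel_cam[0] - seen[rep][0], rel_cam[1] - seen[rep][1])
            # Global position = representative's global position + relative vector.
            positions[marker_id] = (positions[rep][0] + rel[0],
                                    positions[rep][1] + rel[1])
    return positions


if __name__ == "__main__":
    frames = [
        {0: (0.0, 50.0)},                      # t = 0: only the reference marker
        {0: (0.0, -40.0), 1: (0.0, 140.0)},    # t = 1: marker 1 appears
        {1: (0.0, -30.0), 2: (0.0, 150.0)},    # t = 2: marker 1 becomes representative
    ]
    print(update_marker_positions({0: (0.0, 0.0)}, frames))
```

In this made-up run, marker 1 is placed from the reference marker at t = 1 and then serves as the representative marker for marker 2 at t = 2, matching the sequence described for Figure 11. Because each new position builds on the previous one, measurement errors would accumulate along the chain.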

3. Verification of Proposed Method

3.1. Marker-Based Camera Position Recognition

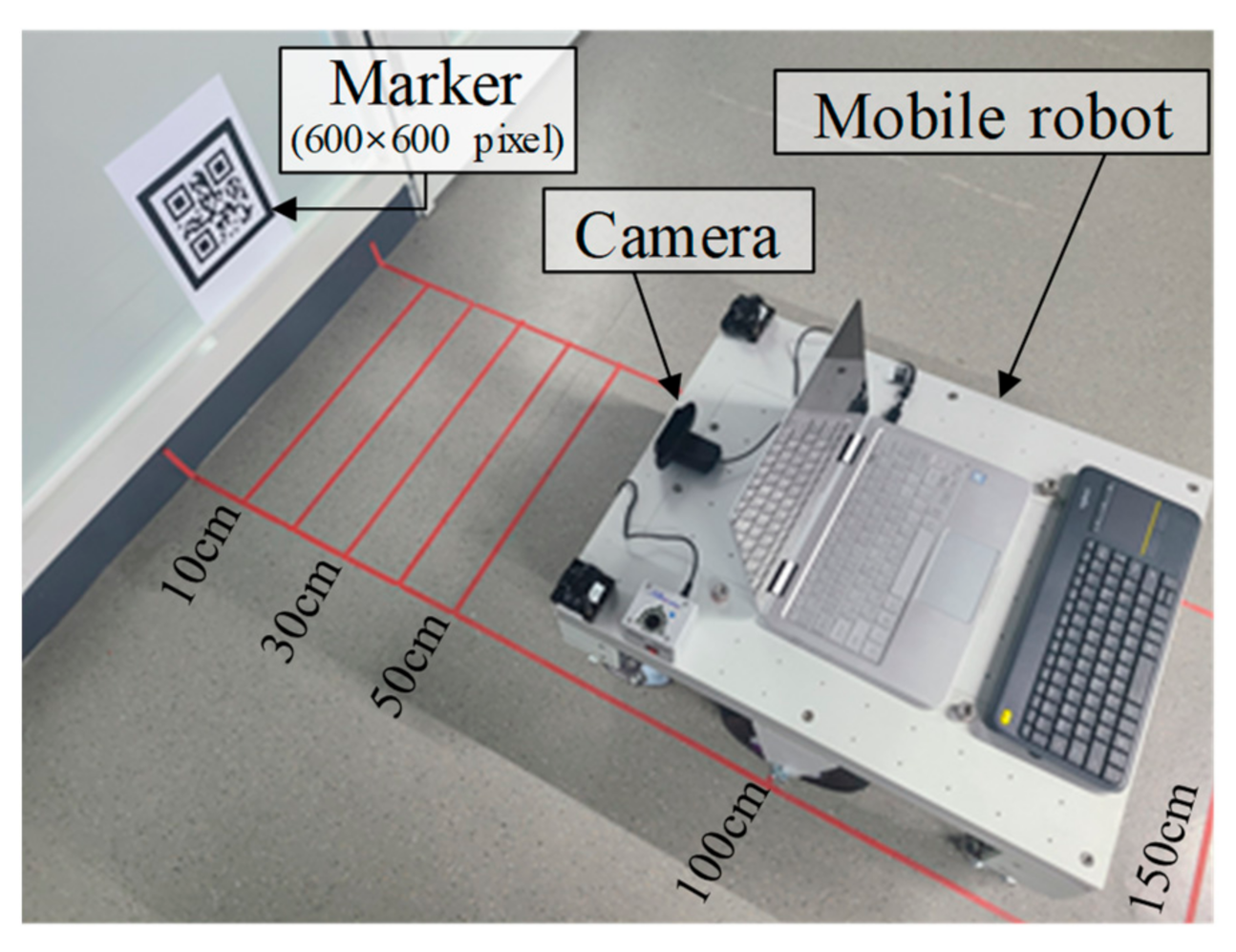

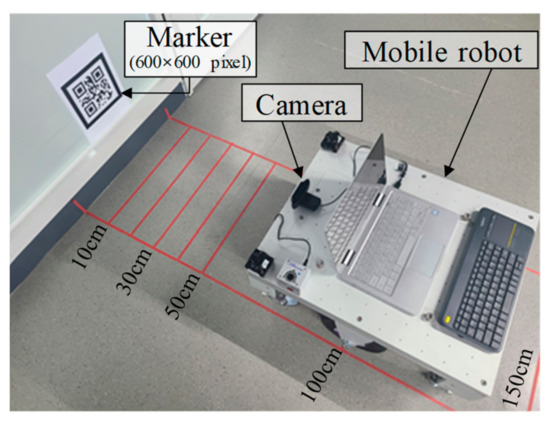

The proposed method for camera position recognition was verified by comparing it with a previous method using three single markers of different sizes. The sizes of the three markers were 200 × 200, 400 × 400, and 600 × 600 pixels, respectively, and an Intel RealSense D435 camera was used [23]. The distances between the markers and camera were calculated based on the Kanade–Lucas–Tomasi tracking algorithm of OpenCV [25]. The parameters for the previous method were set considering each pixel. As shown in Figure 12, the camera position was calculated at distances of 10, 30, 50, 100, and 150 cm.

Figure 12. Test setup for verification of proposed method.

Table 3 shows the camera positions calculated using the proposed and previous methods.

Table 3. Position of camera.

The camera position calculated using the previous method showed an error of at least 0.03% and at most 0.7% when the actual size of the marker matched the size of the marker set in the camera estimation algorithm. When the marker size was set as 200 × 200 pixels in the previous method, the error in estimating the camera position was small for the marker with a size of 200 × 200 pixels. However, the camera position was calculated as approximately 1/2 and 1/3 of the actual value for the markers with sizes of 400 × 400 pixels and 600 × 600 pixels, respectively. In contrast, as the proposed method acquired information from the markers and adaptively estimated the sizes of the markers, the error in the estimated camera position was 0.03% to 0.7% for all three markers.
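The 1/2 and 1/3 factors follow directly from the pinhole relation: the estimated distance scales with the marker size assumed by the algorithm. The sketch below reproduces that arithmetic with a hypothetical focal length and true distance. The marker sizes 200, 400, and 600 are treated simply as side lengths in a common unit.

```python
def image_side_px(focal_px: float, actual_side: float, distance: float) -> float:
    """Side length of the marker in the image for its true size and distance."""
    return focal_px * actual_side / distance


def estimated_distance(focal_px: float, assumed_side: float, side_px: float) -> float:
    """Distance computed by an algorithm that assumes a given marker size."""
    return focal_px * assumed_side / side_px


if __name__ == "__main__":
    FOCAL_PX = 600.0        # hypothetical focal length in pixels
    TRUE_DISTANCE = 1000.0  # hypothetical true distance, same unit as the sides
    for actual in (200.0, 400.0, 600.0):
        side_px = image_side_px(FOCAL_PX, actual, TRUE_DISTANCE)
        fixed = estimated_distance(FOCAL_PX, 200.0, side_px)     # size fixed at 200
        adaptive = estimated_distance(FOCAL_PX, actual, side_px)  # size read from marker
        print(f"actual {actual:.0f}: fixed-size ratio {fixed / TRUE_DISTANCE:.3f}, "
              f"adaptive ratio {adaptive / TRUE_DISTANCE:.3f}")
```

With the size fixed at 200, the estimate for the 400 and 600 markers is the true distance multiplied by 200/400 and 200/600. Using the size carried by the marker removes this scale error, which leaves only the measurement error reported in Table 3.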

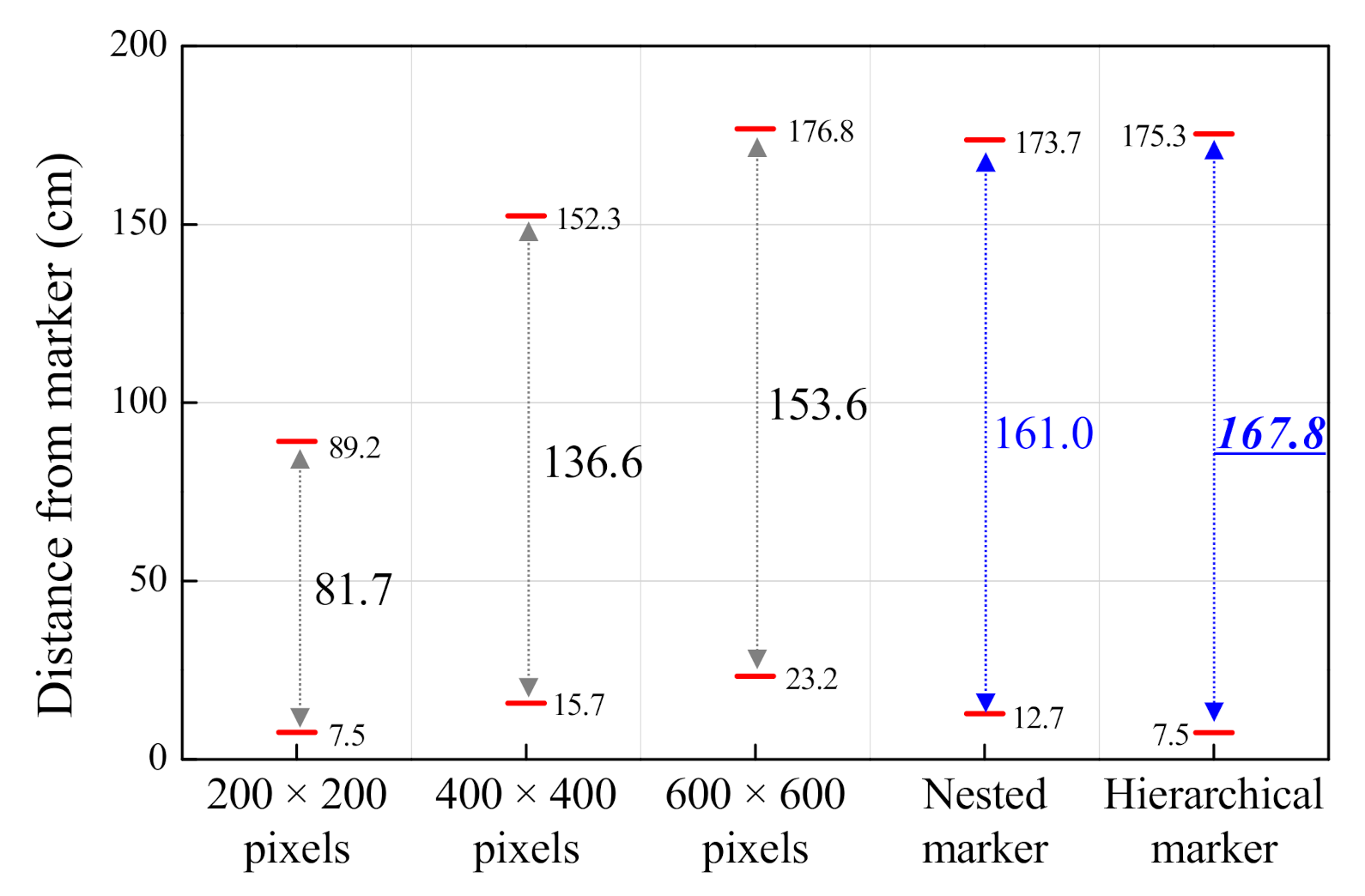

3.2. Recognition Distance of the Proposed Markers

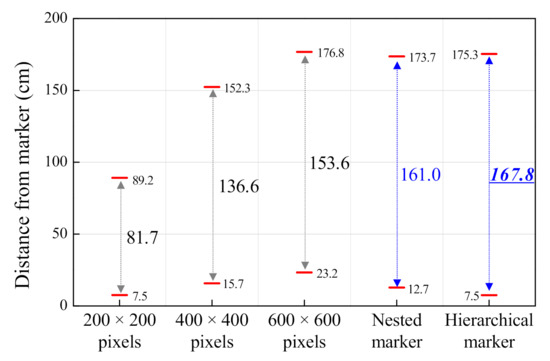

The effectiveness of the proposed nested marker and hierarchical marker was validated by comparing the measured recognition distances of the proposed markers and three conventional markers of different sizes. The nested marker was composed of two markers, one with a size of 600 × 600 pixels and the other with a size of 200 × 200 pixels. The hierarchical marker was constructed using markers with sizes of 200 × 200, 400 × 400, and 600 × 600 pixels. The camera was mounted on a mobile robot 30 cm above the ground. The marker was attached to a wall 50 cm above the ground. Figure 13 shows the test results.

Figure 13. Recognition distances for different markers.

It was verified that the recognition distance increased when the two proposed markers were used compared with when a single-size marker was used. In addition, the minimum recognition distances of the hierarchical and nested markers were 7.5 cm and 12.7 cm, respectively. The recognition distance of the hierarchical marker was approximately 58.7% less than that of the nested marker because, as mentioned in Section 2.1.2, the 200 × 200 pixel marker inside the nested marker was not recognized as the camera approached it. Therefore, the hierarchical marker is suitable for maximizing the recognition distance in the case of a camera moving horizontally with respect to the ground, such as in a wheeled mobile robot.

3.3. Position Recognition for Unknown Marker

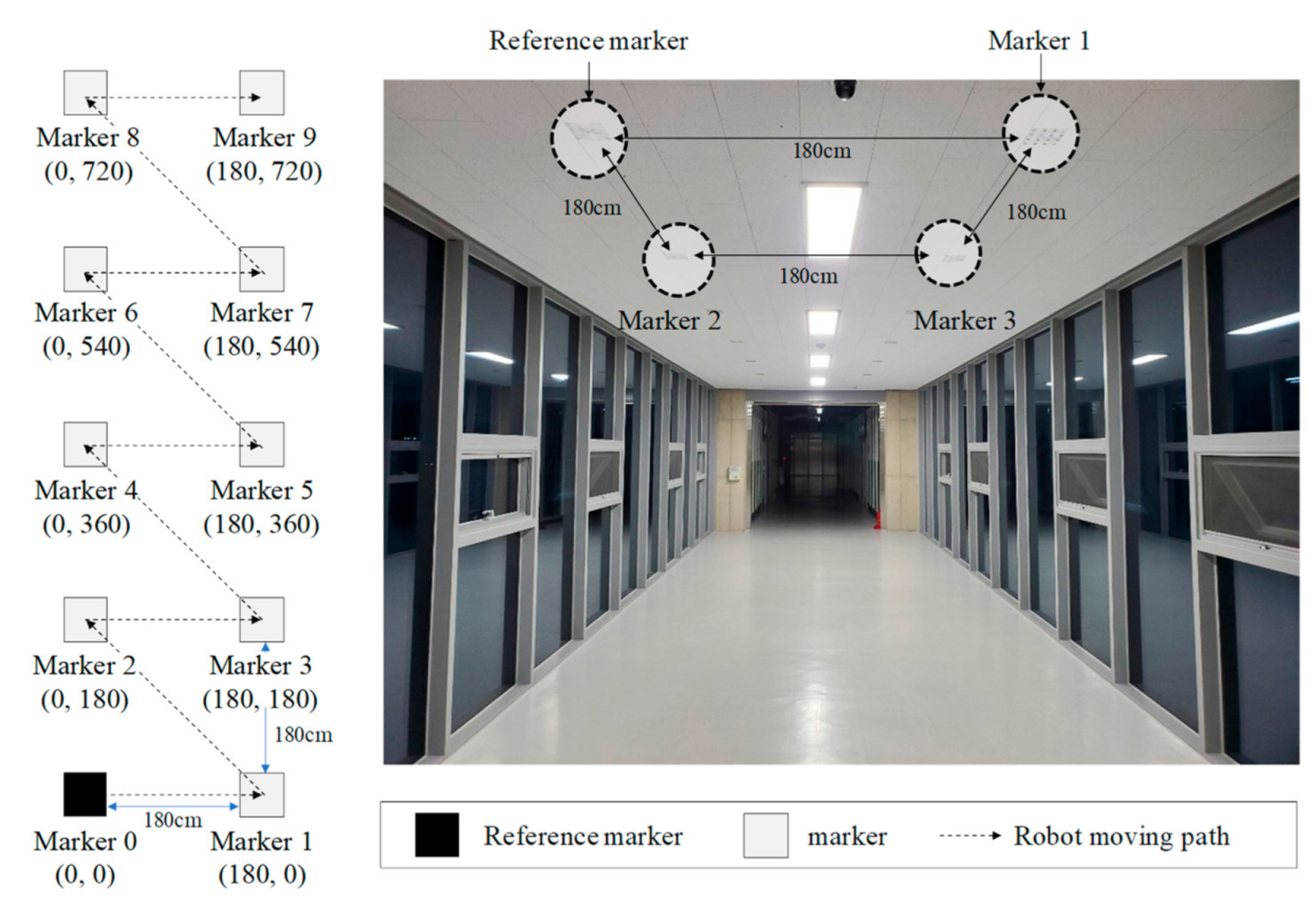

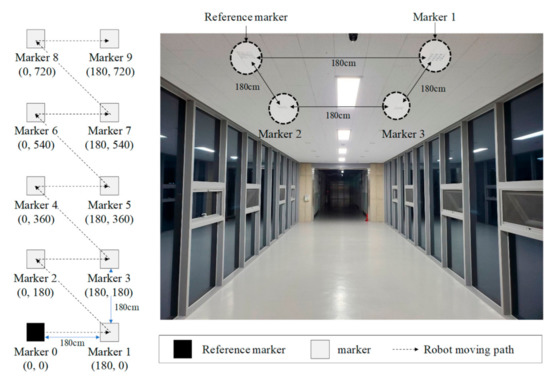

Figure 14 shows the test setup used to verify the proposed method of calculating marker position [26]. Ten markers were attached to a corridor at equal intervals of 180 cm. The wheeled mobile robot moved from marker 0 to marker 9 at a speed of 0.1 m/s [27]. The global position of the reference marker (marker 0) was set as (0, 0). The positions of the markers were measured at 10 Hz.

Figure 14. Test setup to verify method of calculating marker position.

Table 4 lists the global positions of the markers calculated using the proposed method and the actual positions of the markers. The results showed reasonable agreement.

Table 4. Positions of markers.

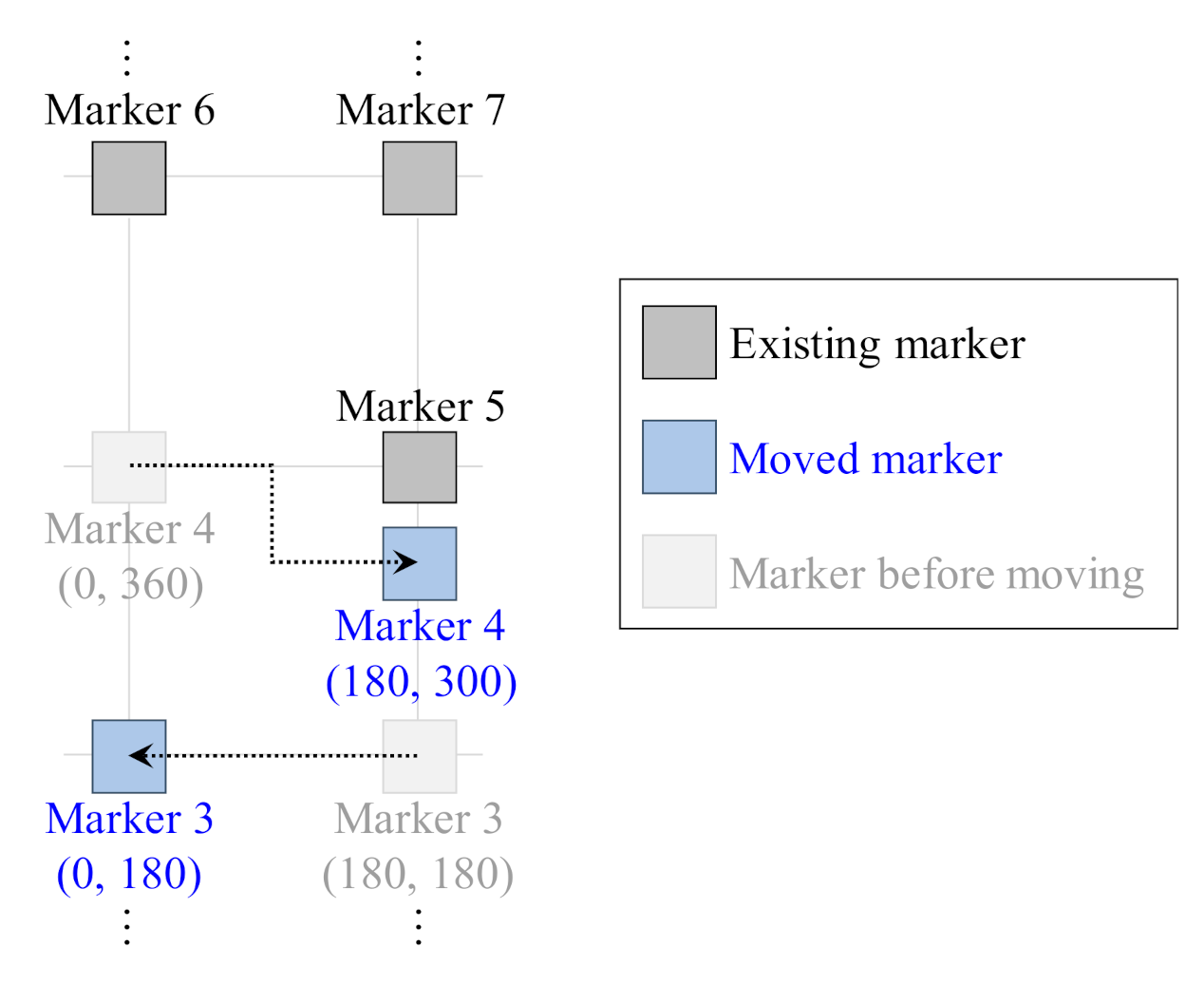

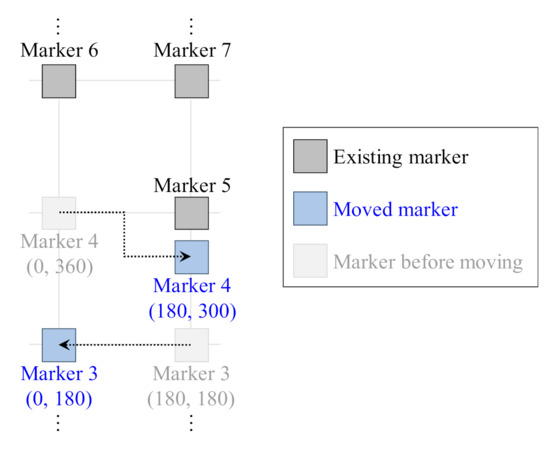

In addition, tests were performed to move markers and add new markers considering the frequent movement of markers or the use of additional markers owing to the expansion of the region. Figure 15 shows the test conditions for predicting marker movement. The position of marker 4 was changed from (0, 360) to (60, 420) in the global coordinate system, and the position of marker 5 was changed from (180, 300) to (180, 360).

Figure 15. Test condition to verify method of calculating position of moved marker.

After their positions were changed, the global positions of the markers were estimated. Table 5 shows the actual and estimated positions of markers 3 and 4 before and after being moved. The global positions of the markers were estimated accurately even after they were moved.

Table 5. Position of the moved marker.

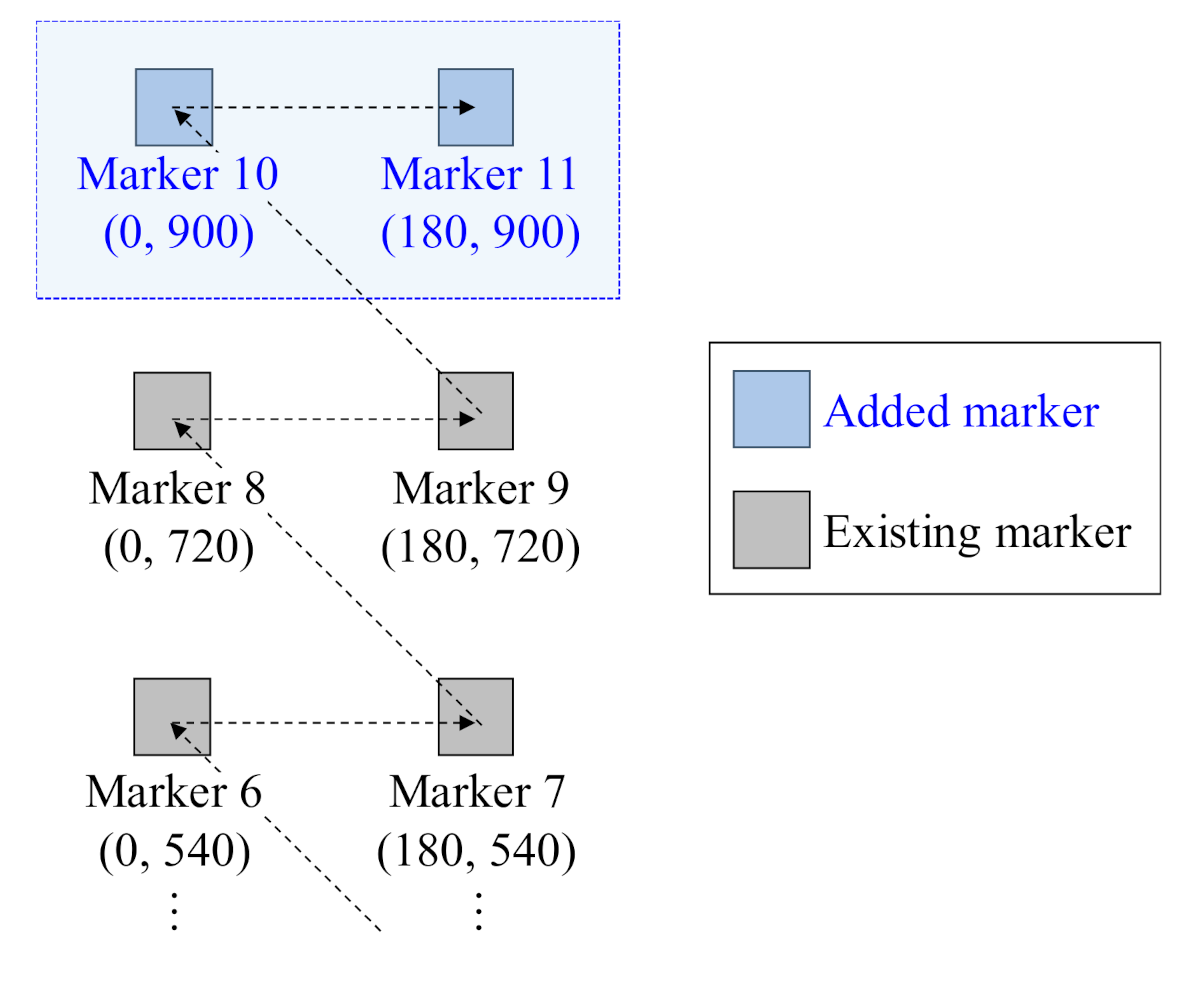

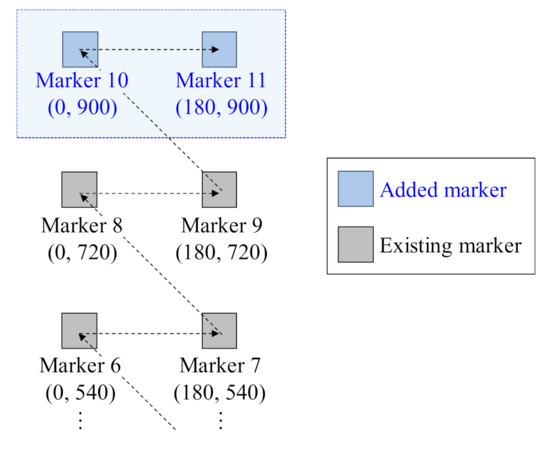

Two new markers (markers 10 and 11) were attached to the corridor to confirm the recognition of newly added markers, as shown in Figure 16, and the global positions of the markers were estimated.

Figure 16. Test condition to verify method of calculating position of added marker.

Table 6 shows the actual and estimated global positions of all markers after the new markers were attached and the robot moved along the same path. It was confirmed that the global positions of the existing markers were updated, and the global positions of the new markers were estimated using the proposed method.

Table 6. Positions of existing and added markers.

4. Conclusions

This paper proposed a method for recognizing the position of a camera mounted on a mobile robot based on marker information while considering an increase in the area where the robot is driven or a change in the environment. The proposed method not only used novel markers to increase the recognition distance of the camera but also calculated the position of added and moved markers to respond to environmental changes. Two new markers were proposed depending on the driving pattern of a robot. In addition, a method was proposed for calculating the position of a marker with unknown position information based on a reference marker. The proposed methods for camera position recognition were verified by evaluating the camera position and comparing it with the position determined using a previous method. The method of calculating the position of an unknown marker was verified by calculating the position of the marker using the proposed method and comparing it with the actual measured position. In addition, the positions of markers were estimated and updated based on the movement of the marker or the addition of markers. The recognition distances of the proposed markers were evaluated and compared with the recognition distances of the three conventional markers of different sizes. The results of the experiments confirmed the effectiveness of the proposed method.

To apply the method in more realistic environments, further studies are required to tackle the degradation of marker recognition caused by changes in light and to increase the accuracy of marker recognition by applying probability filters.

Author Contributions

Conceptualization, D.-G.G. and K.-H.S.; methodology, D.-G.G. and K.-M.Y.; software, D.-G.G. and K.-M.Y.; validation, M.-R.P. and J.K.; formal analysis, K.-M.Y. and M.-R.P.; investigation, J.K. and J.H.; resources, D.-G.G. and J.K.; data curation, D.-G.G. and J.H.; writing – original draft preparation, D.-G.G. and K.-M.Y.; writing – review and editing, M.-R.P. and J.L.; visualization, M.-R.P. and J.H.; supervision, K.-H.S.; project administration, K.-H.S. and J.L.; funding acquisition, K.-H.S. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This material is based upon work supported by the Ministry of Trade, Industry & Energy (MOTIE, Korea) under the Industrial Technology Innovation Program (No. 10067169, ‘Development of Disaster Response Robot System for Lifesaving and Supporting Fire Fighters at Complex Disaster Environment’). Furthermore, this work was supported by the National Research Foundation of Korea (NRF) grant funded by the government of Korea (MSIT) (No. NRF-2020R1C1C1008520).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Patle, B.K.; Ganesh, B.L.; Anish, P.; Parhi, D.R.K.; Jagadeesh, A. A review: On path planning strategies for navigation of mobile robot. Def. Technol. 2019, 15, 582–606.

- Park, B.S.; Yoo, S.J.; Park, J.B.; Choi, Y.H. A Simple Adaptive Control Approach for Trajectory Tracking of Electrically Driven Nonholonomic Mobile Robots. IEEE Trans. Control Syst. Technol. 2010, 18, 1199–1206.

- Liang, X.; Wang, H.; Chen, W.; Guo, D.; Liu, T. Adaptive Image-Based Trajectory Tracking Control of Wheeled Mobile Robots with an Uncalibrated Fixed Camera. IEEE Trans. Control Syst. Technol. 2015, 23, 2266–2282.

- Liang, X.; Wang, H.; Liu, Y.; Chen, W.; Liu, T. Formation Control of Nonholonomic Mobile Robots without Position and Velocity Measurements. IEEE Trans. Robot. 2018, 34, 434–446.

- Liang, X.; Wang, H.; Liu, Y.; Chen, W.; Jing, Z. Image-Based Position Control of Mobile Robots with a Completely Unknown Fixed Camera. IEEE Trans. Autom. Control 2018, 63, 3016–3023.

- Ali, M.A.H.; Mailah, M. Path Planning and Control of Mobile Robot in Road Environments Using Sensor Fusion and Active Force Control. IEEE Trans. Veh. Technol. 2019, 68, 2176–2195.

- Jiang, J.; Di Franco, P.; Astolfi, A. Shared Control for the Kinematic and Dynamic Models of a Mobile Robot. IEEE Trans. Control Syst. Technol. 2016, 24, 2112–2124.

- Zhang, K.; Chen, J.L.; Li, Y.; Gao, Y. Unified Visual Servoing Tracking and Regulation of Wheeled Mobile Robots with an Uncalibrated Camera. IEEE/ASME Trans. Mech. 2018, 23, 1728–1739.

- Han, Y.J.; Park, T.H. Localization of a mobile robot using multiple ceiling lights. J. Inst. Control Robot. Syst. 2013, 19, 379–384.

- Hofmann-Wellenhof, B.; Lichtenegger, H.; Collins, J. Global Positioning System: Theory and Practice, 5th ed.; Springer: Vienna, Austria, 2013.

- Wang, C.; Xing, L.; Tu, X. A Novel Position and Orientation Sensor for Indoor Navigation Based on Linear CCDs. Sensors 2020, 20, 748.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Gao, X.; Wang, R.; Demmel, N.; Cremers, D. LDSO: Direct sparse odometry with loop closure. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 2198–2204.

- Fiala, M. Designing Highly Reliable Fiducial Markers. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1317–1324.

- Kim, K.H.; Hwang, S.S. Autonomous UAV Landing System using Imagery Map and Marker Recognition. J. Inst. Control Robot. Syst. 2018, 24, 64–70.

- Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3400–3407.

- Heo, S.W.; Park, T.H. Localization System for AGVs Using Laser Scanner and Marker Sensor. J. Inst. Control Robot. Syst. 2017, 23, 866–872.

- Mondéjar-Guerra, V.; Garrido-Jurado, S.; Muñoz-Salinas, R.; Marín-Jiménez, M.J.; Medina-Carnicer, R. Robust identification of fiducial markers in challenging conditions. Expert Syst. Appl. 2018, 336–345.

- Romero-Ramirez, F.J.; Muñoz-Salinas, R.; Medina-Carnicer, R. Speeded up detection of squared fiducial markers. Image Vis. Comput. 2018, 76, 38–47.

- Lee, E.H.; Lee, Y.; Choi, J.; Lee, S. Study of Marker Detection Performance on Deep Learning via Distortion and Rotation Augmentation of Training Data on Underwater Sonar Image. J. Korea Robot. Soc. 2019, 14, 14–21.

- Cho, J.; Kang, S.S.; Kim, K.K. Object Recognition and Pose Estimation Based on Deep Learning for Visual Servoing. J. Korea Robot. Soc. 2019, 14, 1–7.

- Lee, W.; Woo, W. Rectangular Marker Recognition using Embedded Context Information. In Proceedings of the Human Computer Interaction, Paphos, Cyprus, 2–6 September 2009; pp. 74–79.

- Depth Camera D435 – Intel® RealSense™ Depth and Tracking Cameras. Available online: https://www.intelrealsense.com/depth-camera-d435/ (accessed on 21 December 2020).

- Zheng, J.; Bi, S.; Cao, B.; Yang, D. Visual Localization of Inspection Robot Using Extended Kalman Filter and Aruco Markers. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 742–747.

- Visp_ros – ROS Wiki. Available online: http://wiki.ros.org/visp_ros (accessed on 20 December 2020).

- Abdulla, A.A.; Liu, H.; Stoll, N.; Thurow, K. Multi-floor navigation method for mobile robot transportation based on StarGazer sensors in life science automation. In Proceedings of the 2015 IEEE International Instrumentation and Measurement Technology Conference (I2MTC) Proceedings, Pisa, Italy, 11–14 May 2015; pp. 428–433.

- Amsters, R.; Slaets, P. Turtlebot 3 as a Robotics Education Platform. In Proceedings of the International Conference on Robotics in Education (RiE), Sofia, Bulgaria, 26–28 April 2017; pp. 170–181.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).