Robust Target Detection and Tracking Algorithm Based on Roadside Radar and Camera

Abstract

1. Introduction

2. Preliminaries

2.1. Target State Vector and Motion Model

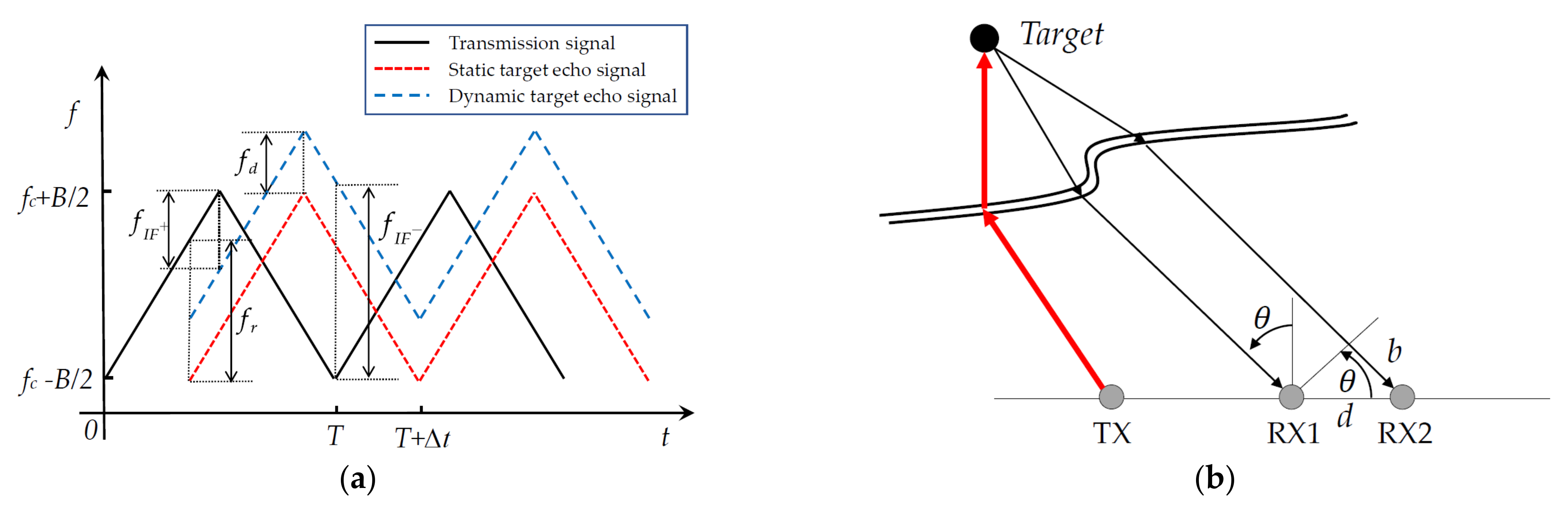
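A constant-velocity (CV) model is one common choice for a roadside tracking motion model. The sketch below illustrates that choice under stated assumptions; it is not this paper's stated model. It assumes:

- a state vector [x, y, vx, vy];
- a sampling interval T;
- a continuous white-noise-acceleration process noise with a hypothetical intensity q.

```python
def cv_transition(T):
    """State transition matrix F for the assumed state [x, y, vx, vy] (constant velocity)."""
    return [[1.0, 0.0, T, 0.0],
            [0.0, 1.0, 0.0, T],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def cv_process_noise(T, q):
    """Process noise Q for continuous white-noise acceleration with hypothetical intensity q."""
    a, b, c = T ** 3 / 3.0, T ** 2 / 2.0, T
    return [[q * a, 0.0, q * b, 0.0],
            [0.0, q * a, 0.0, q * b],
            [q * b, 0.0, q * c, 0.0],
            [0.0, q * b, 0.0, q * c]]


def mat_vec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


if __name__ == "__main__":
    # Hypothetical target at (10 m, 5 m) moving at (2 m/s, -1 m/s), T = 0.1 s.
    state = [10.0, 5.0, 2.0, -1.0]
    print(mat_vec(cv_transition(0.1), state))
```

Positions advance by velocity times T while velocities stay constant. Q injects uncertainty that grows with T, which is why CV models tolerate mild manoeuvres.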

2.2. Radar Detection Model

2.3. Camera Detection Model

2.4. Sensor Calibration
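Radar-camera calibration typically ends in a mapping from radar detections to image pixels. The minimal pinhole-camera sketch below rests on these assumptions:

- The radar reports range r and azimuth az. Azimuth is measured from the forward axis, positive to the right.
- The extrinsic rotation R and translation t from the radar frame to the camera frame are already known.
- fx, fy, cx, cy are camera intrinsics, for example obtained with a checkerboard method.
- Both frames use x right, y down, z forward.

All names and conventions here are hypothetical, not this paper's calibration procedure.

```python
import math


def radar_to_camera_frame(r, az, R, t, y_r=0.0):
    """Convert a radar (range, azimuth) detection into camera-frame coordinates.

    Assumes x right, y down, z forward in both frames, azimuth measured
    from +z toward +x, and a fixed vertical offset y_r in the radar frame.
    """
    p_r = [r * math.sin(az), y_r, r * math.cos(az)]
    # Rigid transform: p_c = R * p_r + t
    return [sum(R[i][j] * p_r[j] for j in range(3)) + t[i] for i in range(3)]


def project_to_pixel(p_c, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point to pixel coordinates (u, v)."""
    X, Y, Z = p_c
    if Z <= 0:
        raise ValueError("point is behind the camera")
    return fx * X / Z + cx, fy * Y / Z + cy


if __name__ == "__main__":
    # Hypothetical setup: aligned axes, camera origin 0.5 m above the radar.
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    t = [0.0, 0.5, 0.0]
    p_c = radar_to_camera_frame(20.0, 0.0, R, t)
    print(project_to_pixel(p_c, 1000.0, 1000.0, 640.0, 360.0))
```

Projecting radar detections into the image this way lets each radar point be tested against camera bounding boxes. That kind of check is what a data pre-correlation step can build on.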

2.5. Data Pre-Correlation

3. System Overview

3.1. Initialization

3.2. Prediction

3.3. Update

3.4. Pruning and Merging
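The stages listed in this section (initialization, prediction, update, pruning and merging) resemble those of a Gaussian-mixture PHD/CPHD filter. Assuming that structure, the sketch below shows the standard pruning and merging step from Vo and Ma's GM-PHD filter:

- Components whose weight falls below a truncation threshold are dropped.
- Components within a Mahalanobis distance threshold of the heaviest remaining component are merged by moment matching.
- At most a fixed number of components are kept.

The parameter names and default values (trunc, merge_thr, max_comp) are hypothetical, not the paper's settings.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                for c in range(col, n + 1):
                    M[r][c] -= f * M[col][c]
    return [M[i][n] / M[i][i] for i in range(n)]


def prune_and_merge(components, trunc=1e-5, merge_thr=4.0, max_comp=100):
    """Prune and merge Gaussian components given as (weight, mean, covariance)."""
    # Pruning: discard low-weight components.
    remaining = [c for c in components if c[0] > trunc]
    merged = []
    while remaining:
        # Take the heaviest remaining component as the merge centre.
        j = max(range(len(remaining)), key=lambda k: remaining[k][0])
        m_j = remaining[j][1]
        group, rest = [], []
        for comp in remaining:
            w, m, P = comp
            d = [a - b for a, b in zip(m, m_j)]
            # Squared Mahalanobis distance using the tested component's covariance.
            dist = sum(di * xi for di, xi in zip(d, solve(P, d)))
            (group if dist <= merge_thr else rest).append(comp)
        # Moment matching: summed weight, weighted mean, covariance plus spread.
        w_sum = sum(w for w, _, _ in group)
        n = len(m_j)
        m_new = [sum(w * m[i] for w, m, _ in group) / w_sum for i in range(n)]
        P_new = [[0.0] * n for _ in range(n)]
        for w, m, P in group:
            e = [a - b for a, b in zip(m_new, m)]
            for r in range(n):
                for c in range(n):
                    P_new[r][c] += w * (P[r][c] + e[r] * e[c]) / w_sum
        merged.append((w_sum, m_new, P_new))
        remaining = rest
    # Capping: keep only the max_comp heaviest components.
    merged.sort(key=lambda c: c[0], reverse=True)
    return merged[:max_comp]


if __name__ == "__main__":
    I2 = [[1.0, 0.0], [0.0, 1.0]]
    comps = [(0.5, [0.0, 0.0], I2), (0.3, [0.5, 0.0], I2),
             (0.2, [10.0, 10.0], I2), (1e-7, [5.0, 5.0], I2)]
    for w, m, P in prune_and_merge(comps):
        print(round(w, 6), m)
```

In this usage example, the two nearby components merge into one with weight 0.8, the distant component survives on its own, and the negligible component is pruned. Merging before capping keeps the component count bounded without discarding the mass of closely spaced hypotheses.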

4. Experiment and Results

4.1. Experiment Platform and Configuration

4.2. Tracking Algorithm Performance Analysis

4.2.1. Tracking Experiment for Pedestrians

4.2.2. Tracking Experiment for Cross Trajectory

4.2.3. Tracking Experiment for Vehicles

4.3. System Validation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bai, J.; Li, S.; Zhang, H.; Huang, L.; Wang, P. Robust Target Detection and Tracking Algorithm Based on Roadside Radar and Camera. Sensors 2021, 21, 1116. https://doi.org/10.3390/s21041116

Bai, Jie, Sen Li, Han Zhang, Libo Huang, and Ping Wang. 2021. "Robust Target Detection and Tracking Algorithm Based on Roadside Radar and Camera." Sensors 21, no. 4: 1116. https://doi.org/10.3390/s21041116