Multimodal Deep Learning and Visible-Light and Hyperspectral Imaging for Fruit Maturity Estimation

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design

2.2. Visible-Light Image (VLI) Acquisition and Preprocessing

2.3. Hyperspectral Image (HSI) Acquisition and Preprocessing

2.4. Multimodal Deep Learning Framework

2.4.1. Feature Concatenation

2.4.2. Multimodal Deep Learning Architectures

2.5. Performance Evaluation

3. Results

3.1. Inspection of Preliminary Performance

3.2. Fruit Maturity Estimation Performance Comparison of MD-CNNs

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

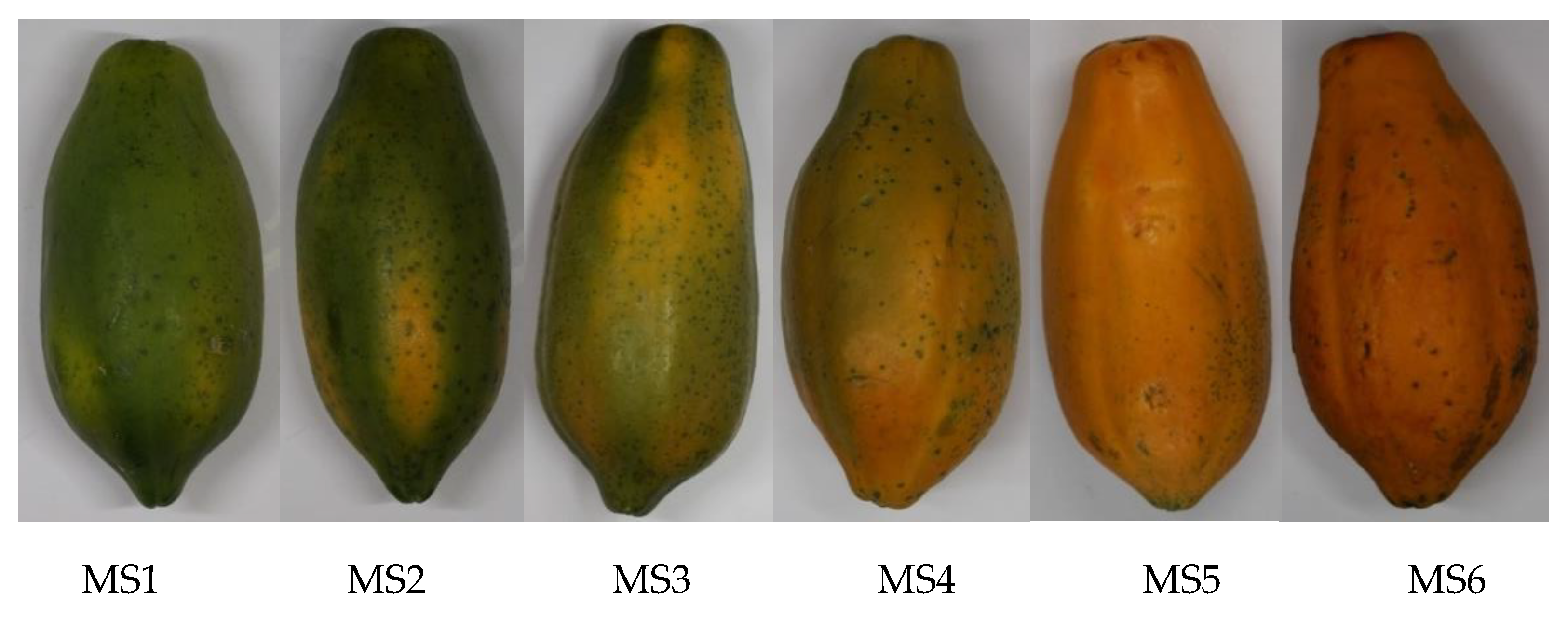

| Maturity Stage | Description | Number of RGB Images | Number of HS Data Cubes |
|---|---|---|---|
| MS1 | Green with trace of yellow (15% ripe) | 520 | 64 |
| MS2 | More green than yellow (25% ripe) | 570 | 74 |
| MS3 | Mix of green and yellow (50% ripe) | 964 | 88 |
| MS4 | More yellow than green (75% ripe) | 707 | 80 |
| MS5 | Fully ripe (100% ripe) | 749 | 101 |
| MS6 | Overripe | 917 | 105 |

| Maturity Stage | Number of RGB+HS Multimodal Data Cubes (32 × 32 × 153 image size) |
|---|---|
| MS1 | 576 |
| MS2 | 666 |
| MS3 | 792 |
| MS4 | 720 |
| MS5 | 909 |
| MS6 | 945 |
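The 32 × 32 × 153 cube size is consistent with stacking the two modalities along the channel axis. The following is a minimal sketch of such a concatenation, not the authors' pipeline: the split into 3 RGB channels plus 150 hyperspectral bands is an assumption (the table gives only the total of 153), and the function name `concatenate_modalities` is hypothetical.

```python
import numpy as np

# Assumed channel split: only the total (153) comes from the table above.
RGB_CHANNELS = 3
HS_BANDS = 150


def concatenate_modalities(rgb_patch, hs_patch):
    """Stack an RGB patch and an HS patch along the last (channel) axis."""
    if rgb_patch.shape[:2] != hs_patch.shape[:2]:
        raise ValueError("spatial dimensions must match before concatenation")
    return np.concatenate([rgb_patch, hs_patch], axis=-1)


# Random stand-ins for one co-registered 32 x 32 patch from each modality.
rng = np.random.default_rng(0)
rgb = rng.random((32, 32, RGB_CHANNELS), dtype=np.float32)
hs = rng.random((32, 32, HS_BANDS), dtype=np.float32)

cube = concatenate_modalities(rgb, hs)
print(cube.shape)  # (32, 32, 153)
```

Concatenating on the channel axis keeps the spatial layout intact, so a convolutional network can consume the result after its first layer is widened to accept 153 input channels.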

| Deep Learning Model | Depth | Number of Parameters |
|---|---|---|
| MD-AlexNet | 8 | 4,938,982 |
| MD-VGG16 | 16 | 17,956,038 |
| MD-VGG19 | 19 | 23,265,734 |
| MD-ResNet50 | 50 | 30,358,790 |
| MD-ResNeXt50 | 50 | 29,819,206 |
| MD-MobileNet | 88 | 7,475,590 |
| MD-MobileNetV2 | 88 | 7,028,998 |

| Deep Learning Model | Training Top-2 Error Rate (%) | Training Accuracy (%) | Validation Top-2 Error Rate (%) | Validation Accuracy (%) |
|---|---|---|---|---|
| MD-AlexNet | 0.00 | 100.00 | 0.83 | 88.22 |
| MD-VGG16 | 0.00 | 100.00 | 0.83 | 88.64 |
| MD-VGG19 | 0.00 | 100.00 | 1.86 | 85.74 |
| MD-ResNet50 | 0.00 | 99.34 | 7.44 | 66.53 |
| MD-ResNeXt50 | 0.04 | 99.42 | 16.12 | 56.40 |
| MD-MobileNet | 0.04 | 99.27 | 16.32 | 56.40 |
| MD-MobileNetV2 | 0.04 | 99.23 | 19.63 | 55.37 |
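The two metrics in this table can be illustrated with a short sketch. It assumes the usual definitions: accuracy counts a sample as correct when the highest-scoring class is the true class, and the top-2 error rate counts a sample as an error only when the true class is absent from the two highest-scoring classes. The function names and the toy scores are hypothetical and are not taken from the study.

```python
import numpy as np


def top_k_error_rate(probs, labels, k=2):
    """Percentage of samples whose true label is not among the k highest scores."""
    probs = np.asarray(probs)
    labels = np.asarray(labels)
    top_k = np.argsort(probs, axis=1)[:, -k:]  # indices of the k largest scores
    hit = (top_k == labels[:, None]).any(axis=1)
    return 100.0 * (1.0 - hit.mean())


def accuracy(probs, labels):
    """Percentage of samples whose highest-scoring class is the true label."""
    return 100.0 * (np.argmax(probs, axis=1) == np.asarray(labels)).mean()


# Toy scores for 4 samples over 6 classes (one per maturity stage).
probs = np.array([
    [0.70, 0.10, 0.05, 0.05, 0.05, 0.05],  # true class 0: top-1 hit
    [0.30, 0.40, 0.10, 0.10, 0.05, 0.05],  # true class 0: top-2 hit only
    [0.05, 0.05, 0.10, 0.20, 0.50, 0.10],  # true class 2: missed by top-2
    [0.05, 0.05, 0.05, 0.05, 0.20, 0.60],  # true class 5: top-1 hit
])
labels = np.array([0, 0, 2, 5])

print(accuracy(probs, labels))          # 50.0
print(top_k_error_rate(probs, labels))  # 25.0
```

Because maturity stages are ordered, a low top-2 error alongside a more modest accuracy suggests that many misclassifications still rank the true stage second.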

| Deep Learning Model | Precision | Recall | F1-Score | Top-2 Error Rate (%) |
|---|---|---|---|---|
| MD-AlexNet | 0.8850 | 0.8817 | 0.88 | 1.8077 |
| MD-VGG16 | 0.9016 | 0.9033 | 0.90 | 1.4461 |
| MD-VGG19 | 0.8733 | 0.8733 | 0.87 | 2.3138 |
| MD-ResNet50 | 0.7516 | 0.6850 | 0.69 | 7.3030 |
| MD-ResNeXt50 | 0.6150 | 0.5550 | 0.52 | 16.3413 |
| MD-MobileNet | 0.5617 | 0.5633 | 0.55 | 16.9197 |
| MD-MobileNetV2 | 0.5783 | 0.5667 | 0.56 | 18.3659 |
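A multi-class precision, recall, and F1-score can be obtained from a confusion matrix as sketched below. Macro averaging (an unweighted mean of per-class scores) is an assumption here, since the table does not state the averaging scheme; it is at least compatible with rows where the F1-score falls below the harmonic mean of the reported precision and recall. The function name `macro_prf` and the toy labels are hypothetical.

```python
import numpy as np


def macro_prf(y_true, y_pred, num_classes):
    """Return macro-averaged precision, recall, and F1 (assumed averaging scheme)."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)  # rows: true class, columns: predicted class

    tp = np.diag(cm).astype(float)
    pred_count = cm.sum(axis=0)  # samples predicted as each class
    true_count = cm.sum(axis=1)  # samples that belong to each class

    # Classes with no predictions or no samples contribute a score of 0.
    precision = np.divide(tp, pred_count, out=np.zeros_like(tp), where=pred_count > 0)
    recall = np.divide(tp, true_count, out=np.zeros_like(tp), where=true_count > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision.mean(), recall.mean(), f1.mean()


# Toy labels for a 3-class problem.
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])

p, r, f = macro_prf(y_true, y_pred, num_classes=3)
print(round(p, 4), round(r, 4), round(f, 4))
```

Averaging the per-class F1 values, rather than taking the harmonic mean of the averaged precision and recall, means the reported F1 need not lie between the other two columns.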

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Garillos-Manliguez, C.A.; Chiang, J.Y. Multimodal Deep Learning and Visible-Light and Hyperspectral Imaging for Fruit Maturity Estimation. Sensors 2021, 21, 1288. https://doi.org/10.3390/s21041288
