1. Introduction

Currently, several applications, such as teleoperation [1], intelligent vehicles and aircraft [2], assistive and rehabilitation technology [3], and robot-assisted surgery [4], require human and robot partners to collaborate on a shared task. To communicate efficiently in a human–robot collaboration system, both the human and the robot need to understand the current state of their partner and be able to predict what the partner will do next [4,5]. In human–robot collaborative tasks, robots can infer human intentions through various modalities, such as biological signals [6,7,8,9]. Biological signals reveal movement intention and thus enable intuitive and natural interaction between humans and robots. The electromyogram (EMG), one such signal, is widely used to estimate simultaneous and proportional motor intention [10,11,12,13].

In robotic applications, the EMG signal serves as a communication channel between a human and a robot: the inferred human intention is transferred to robot control. Specifically, EMG is used to infer the motion parameters of the upper limb, one of the most active parts of the human body. Simultaneous and proportional motion prediction from EMG signals and its applications are therefore an active research topic. The predicted parameters of upper limb motion can be kinetic, such as joint torques, or kinematic, such as joint angles or hand positions. Because the relationship between the EMG signal and kinetic parameters is more direct than that between the EMG signal and kinematic parameters, the estimation of kinetic parameters has been widely studied in the context of myoelectric control [13]. In contrast, this paper studies the mapping of the EMG signal to a kinematic parameter, namely the 3-D hand position.

Simultaneous and proportional motion prediction for multiple DOFs at a single joint or across multiple joints of the upper limb has been studied by several researchers. Muceli et al. [14] and Bao et al. [15] proposed models to infer joint angles for the simultaneous activation of multiple DOFs of the wrist. These studies focus on simultaneous and proportional motion prediction for multiple DOFs at a single joint. In contrast, Pan et al. [16], Zhang et al. [17], and Liu et al. [18] proposed methods to simultaneously predict multiple DOFs across multiple joints, such as the shoulder, elbow, and wrist. The methods used for simultaneous and proportional motion prediction can be categorized as model-based [19] or model-free (i.e., artificial intelligence methods such as neural networks) [20]. Both linear and non-linear regression techniques can be used to map EMG signals to motion parameters [21,22,23,24]. Fuzzy neural networks are well suited to capturing both linear and non-linear relationships between inputs and outputs. In this paper, a fuzzy neural network is therefore used to map the EMG signal to the 3-D hand position.

Most simultaneous and proportional motion prediction studies have focused on myoelectric control, which targets wearable assistive and rehabilitation technologies such as exoskeletons and prostheses. These applications usually aim to assist users who have lost upper limb functionality, and therefore they must consider the different joints that bring the hand to a certain position. Hence, to restore upper limb motion functionality, it may be necessary to consider the simultaneous activation of multiple DOFs. However, in other human–robot collaboration systems, such as robot manipulation in intelligent manufacturing [25] or skill transfer [26,27], only the hand may be in direct contact with the robot.

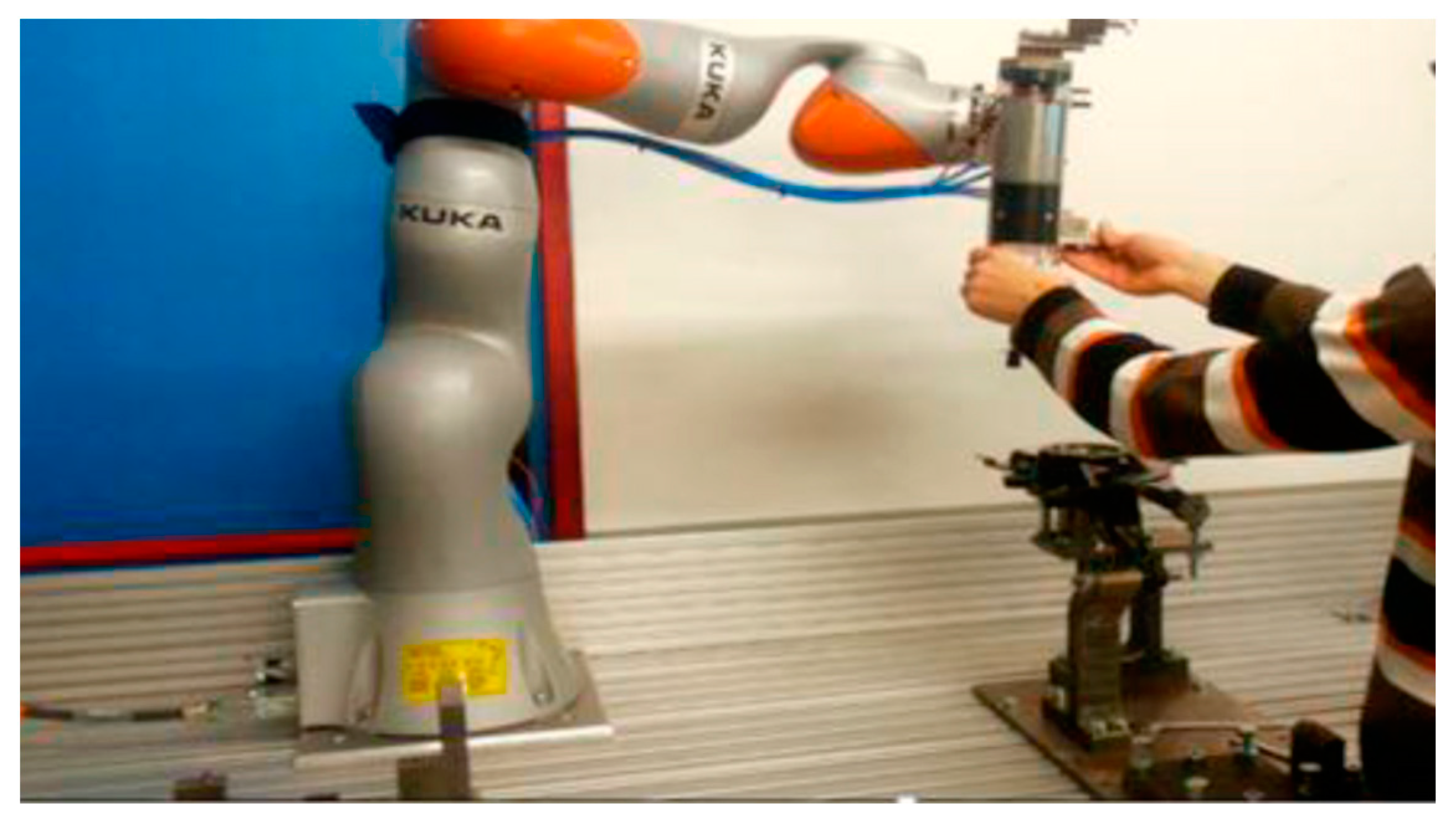

Figure 1 illustrates an example of the application of human–robot collaboration in manufacturing based on human hand trajectory prediction.

In these collaboration applications, healthy subjects work with a robot partner, and only the point of contact between the robot and the human may be of concern. For instance, in skill transfer, human demonstrations are often treated simply as trajectories, and the robot mimics the demonstrated behavior. The EMG signal can then be used to estimate the human arm endpoint position, without regard to the motion of the individual arm joints, and this estimate is fed to the robot so that it follows the trajectory.

EMG-based hand motor intention prediction, which aims at information transfer from human to robot, enables the robot to learn and acquire manipulation skills in complex and dynamic environments [28]. In this application, predicting the human arm endpoint position (i.e., the contact point between the hand and the robot) alone can establish efficient communication between the robot and human partners. In this regard, Artemiadis et al. [29,30] proposed a state-space model that enabled the user to control the trajectory of an anthropomorphic robot arm in 3-D space. However, instead of directly estimating the 3-D hand position from the EMG signal, they used a model that took as inputs the joint angles (estimated from EMG signals) and the lengths of the upper limb segments. Even though the coordination of multiple DOFs brings the hand to a certain position in space [31], this coordination need not be modeled explicitly in our target application. In our study, instead of first predicting multiple DOFs and then estimating the hand position, we directly estimate the 3-D hand position from the EMG signal. Our approach is similar to that of Vogel et al. [32], who proposed a method to estimate the position of the hand in space for EMG-based robotic arm systems. However, our study considers trajectories that are more complex than point-to-point movements, such as picking up and putting down objects.

To the best of our knowledge, no previous study has directly predicted the 3-D hand position for complex trajectories from the EMG signal alone. In this paper, we explore how to predict the 3-D hand position for complex trajectories of hand movement directly from EMG signals. We target a human–robot collaboration application in which the objects are held by the robot end-effector, while the human controls the robot either remotely by teleoperation or by holding the robot handle. The contributions of this work are as follows. First, we study the prediction of the 3-D hand position directly from the EMG signal for complex trajectories of hand movement, without explicitly considering joint movements. Second, our model predicts the 3-D hand position at a future time: for efficient human–robot communication, estimating human intention at the current time might not be adequate, so future intention should be estimated. Third, we analyze the effects of slow and fast motions on the accuracy of the prediction model. Overall, this study proposes a model to predict human motor intention that can serve as information transferred from human to robot.

This paper is organized as follows. Section 2 presents the materials and methods, including EMG signal acquisition and the intention prediction approach. Section 3 reports the results and discussion, and Section 4 concludes the paper.

2. Materials and Methods

2.1. System Overview

As shown in Figure 2, the human–robot collaboration system based on the prediction of continuous motion from EMG signals consists of four main elements: the human upper limb, EMG signal acquisition and processing, the prediction model, and the robot. First, the EMG signals are collected while the user performs a complex upper limb motion. Then, the collected EMG signals are preprocessed, and features are extracted from them. A prediction model maps the extracted features to the 3-D hand position. Finally, the predicted hand position is fed to the robot controller so that the robot can collaborate with the human in shared tasks.

2.1.1. The Human Upper Limb

The human upper limb consists of several muscles that control the movements of the hand. Upper limb movement requires the coordination of the shoulder, elbow, wrist, and finger joints to perform activities of daily living. In this paper, the muscles mainly associated with the target movements were identified.

2.1.2. EMG Signal Acquisition and Processing

This stage involves selecting the muscle positions, preparing the skin, acquiring the signals, preprocessing, and extracting features. First, the acquired raw EMG signals are filtered and rectified. Then, the linear envelope of the signal is generated, and features are extracted.

2.1.3. Continuous Motion Prediction Model

Dynamic models, musculoskeletal models, and artificial intelligence approaches are among the techniques used to predict continuous motion parameters from EMG signals. In this paper, a recurrent fuzzy neural network is constructed to map EMG signals to 3-D hand positions.

2.1.4. Robot Controller

This part consists of the mechanism that converts the input signal into the robot output signal, together with its feedback mechanism. This study proposes a method to predict human intention that can be fed to a robot controller to enhance human–robot collaboration. Once the hand position is predicted from the EMG signals, it is fed to the robot controller to drive the end effector, which holds an object, along the desired trajectory. However, the control part is not studied in this paper, as we focus on offline analysis.

2.2. Experimental Protocol

Six right-handed, able-bodied young adults (aged 20 to 26 years) participated in the hand-movement experiment for the designed tasks. The study adhered to the principles of the 2013 Declaration of Helsinki. The online experimental protocol was reviewed and approved by the local research ethics committee, and the subjects signed informed consent forms.

Two different complex tasks associated with daily activities were designed in 3-D space: (1) picking up a bottle from the table and pouring from it into a cup within a range of 10 × 45 × 50 cm (Task_1), and (2) a manipulation task with multiple obstacles within a range of 20 × 30 × 20 cm (Task_2), as shown in Figure 3. These two motions represent the complex task trajectories executed by a human and robot in collaboration in applications such as intelligent manufacturing.

After the EMG electrodes and sensors were in place, the subjects were instructed to sit upright in front of a table, on which they performed the designated task. At the initial position, the right forearm rested on the table, and the shoulder was stabilized in the anatomical reference position with the elbow at 90 degrees of flexion. The subjects performed the required movements with a grasping hand posture, and their wrists were kept fixed throughout the experiment.

Figure 4 shows the experimental setup while one of the subjects performed the required tasks. The subject is seated in front of the table on which the required trajectories were performed.

The subjects were asked to perform the designed complex trajectories with a grasping hand posture while EMG and kinematic data were recorded. Since the objects are held by the robot in the real application, the type of hand grasp is the same for both tasks. The subjects were instructed to move their hands at fast and slow speeds. Because the speed level was determined by the subjects themselves, it was difficult to distinguish several speed levels; we therefore limited the task completion speed to two levels, fast and slow. Each subject chose the speed level based on guidance from the authors. Completing the trajectory of each task took approximately 3 s per cycle in the fast scenario and 5 s per cycle in the slow scenario. In one trial, the subjects performed the trajectory 5 times in succession (i.e., start the task, finish it, return to the start point, and so on), with a 3-s break between cycles. The subjects were encouraged to rest as needed. Each trial took about 35 s at fast speed and 45 s at slow speed. There were 9 trials for each task and speed; therefore, a total of 36 trials (2 tasks × 2 speeds × 9 trials) were conducted. During the experiment, both EMG and 3-D hand position signals were collected. After both signals were collected, data processing, construction of the prediction model, and analysis of prediction performance were conducted. The flow diagram of the proposed method is shown in Figure 5.

2.3. Data Acquisition

EMG and kinematic data were acquired from the subjects simultaneously. The EMG signals were collected from six muscles that predominantly actuate the joints associated with the desired movements: the anterior deltoid, posterior deltoid, biceps brachii, triceps brachii, extensor carpi radialis, and flexor carpi radialis. A seventh channel was placed on a bony site near the elbow joint as a reference. A device from Beijing Symtop Instruments Company Limited was used to collect the EMG signals at a sampling rate of 1000 Hz. Skin preparation and electrode placement and fixation followed the SENIAM guidelines [10]. The skin at each electrode site was cleaned with alcohol to reduce the resistance between the skin and the electrodes. The position of each EMG electrode on the muscles is shown in Figure 6.

To collect the kinematic data, we used a FASTRAK tracking system from Polhemus, which uses electromagnetic fields to determine the position and orientation of an object. FASTRAK is equipped with position and reference sensors. The main specifications of the device are shown in Table 1.

The position sensor was fixed on the hand, and the reference sensor was fixed on the table. The 3-D hand position (x, y, and z Cartesian coordinates) is that of the point in the middle of the opisthenar (dorsal) area of the hand along the three planes (frontal, transverse, and sagittal). The FASTRAK sensor recorded the hand position in Cartesian space, relative to the reference sensor, at a sampling rate of 60 Hz.

The EMG and kinematic data were synchronized by the NI acquisition device and then sent to the computer, as shown in Figure 7. The figure is drawn from a sample of the data collected while the subjects performed the desired tasks. The panels on the left-hand side (Figure 7a) show the synchronized EMG and position signals while the subject performed Task_1: the top panel depicts the amplitude of the EMG signal, whereas the bottom three panels show the position signal along the x, y, and z axes, respectively. Similarly, the panels on the right-hand side (Figure 7b) show the synchronized EMG and position signals while the subject performed Task_2. The collected EMG and position data were analyzed in MATLAB R2010.

2.4. Data Processing

The collected signals, especially the EMG signals, were contaminated with various types of noise, which had to be removed. First, the DC offset was removed from the raw EMG signals using the MATLAB function detrend, and spikes were removed by median filtering. The resulting signals were then full-wave rectified. The rectified EMG signals and the position signals were low-pass filtered with a second-order Butterworth filter with a cutoff frequency of 2 Hz, which generated the linear envelope of the EMG signal. The position signal was resampled at 1 kHz to match the sampling frequency of the EMG signals; we checked that there was no significant difference between the original and resampled position signals.
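The preprocessing chain above can be sketched in Python with SciPy as a rough analogue of the MATLAB steps, not as the authors' code. The 2-Hz cutoff, second-order Butterworth filter, and 60 Hz and 1 kHz rates come from the text; the median-filter kernel size (5 samples), the use of zero-phase `filtfilt`, and the function names are assumptions. Note that `detrend(type="constant")` removes only the mean (the DC offset), whereas MATLAB's `detrend` removes a linear trend by default.

```python
import numpy as np
from scipy.signal import butter, detrend, filtfilt, medfilt, resample_poly

FS_EMG = 1000  # EMG sampling rate (Hz), from the text
FS_POS = 60    # FASTRAK sampling rate (Hz), from the text


def emg_linear_envelope(raw_emg, kernel_size=5, cutoff_hz=2.0, fs=FS_EMG):
    """Linear envelope of one raw EMG channel (1-D array). Kernel size is assumed."""
    x = detrend(raw_emg, type="constant")       # remove the DC offset (mean)
    x = medfilt(x, kernel_size=kernel_size)     # suppress isolated spikes
    x = np.abs(x)                               # full-wave rectification
    b, a = butter(2, cutoff_hz, btype="low", fs=fs)  # 2nd-order Butterworth, 2 Hz
    return filtfilt(b, a, x)                    # zero-phase low-pass (assumed)


def smooth_and_resample_position(pos, cutoff_hz=2.0):
    """Low-pass filter (N, 3) hand positions sampled at 60 Hz, then resample to 1 kHz."""
    b, a = butter(2, cutoff_hz, btype="low", fs=FS_POS)
    pos = filtfilt(b, a, pos, axis=0)
    # 1000 / 60 = 50 / 3, so upsample by 50 and downsample by 3
    return resample_poly(pos, up=50, down=3, axis=0)
```

With this ratio, 10 s of position data (600 samples) becomes 10 000 samples, which lines up sample for sample with 10 s of EMG.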

After signal processing, the root mean square (RMS) and integrated EMG (IEMG) features were extracted. This combination was selected by comparing combinations of seven time-domain features: mean absolute value, variance, root mean square, waveform length, integrated EMG, slope sign integral, and slope sign change. First, we analyzed the performance of each feature individually. Then, we combined features until no further performance improvement was observed. The combination of RMS and IEMG was found to perform best.

The window length is one of the factors that can affect the performance of continuous motion parameter prediction from EMG signals. We therefore compared the prediction performance of overlapping windows of 50, 100, 150, 200, 250, and 300 ms. A total of 12 features (6 channels × 2 feature types) were generated for each EMG data point and used as the input to our prediction model.
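A minimal sketch of the windowed feature extraction, assuming the processed EMG is stored as an array of shape (samples, 6) at 1 kHz. It uses the standard definitions RMS = sqrt(mean(x²)) and IEMG = sum(|x|) over each window. The window step (50 ms here, which sets the overlap) and the function name `window_features` are hypothetical, because the text does not state the step size.

```python
import numpy as np


def window_features(emg, win_ms=100, step_ms=50, fs=1000):
    """RMS and IEMG per overlapping window for multichannel EMG.

    emg: array of shape (n_samples, n_channels), e.g. 6 channels.
    Returns shape (n_windows, 2 * n_channels): the RMS of every channel
    followed by the IEMG of every channel (6 channels -> 12 features).
    The step_ms default is a hypothetical choice.
    """
    win = int(win_ms * fs / 1000)
    step = int(step_ms * fs / 1000)
    feats = []
    for start in range(0, emg.shape[0] - win + 1, step):
        seg = emg[start:start + win]                  # (win, n_channels)
        rms = np.sqrt(np.mean(seg ** 2, axis=0))      # root mean square
        iemg = np.sum(np.abs(seg), axis=0)            # integrated EMG
        feats.append(np.concatenate([rms, iemg]))
    return np.asarray(feats)
```

Running the same function with `win_ms` set to 50, 100, ..., 300 reproduces the kind of window-length comparison described above.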

2.5. Prediction Model

We used a recurrent fuzzy neural network (RFNN) to map the features of the EMG signals to the 3-D hand position. The model combines the benefits of the recurrent structure of neural networks and of fuzzy logic. Various forms of RFNN have been used to address time-varying systems [33,34]; they differ mainly in where and how the feedback unit is placed in the network. The structure of the model used in this paper is shown in Figure 8.

2.5.1. Structure of RFNN

The network has four layers, with a feedback unit in the rule layer. In the following description, $O^{(k)}$ denotes a node output in layer $k$.

1. Layer 1 (Input layer): This layer transmits the input values directly to the next layer, so no computation is performed:

$$O_i^{(1)} = x_i, \quad i = 1, \dots, n \tag{1}$$

where $x_i$ is the $i$-th input and $n$ is the number of input variables.

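2. Layer 2 (Fuzzification layer): Each node is defined by a Gaussian membership function that corresponds to a linguistic label of an input variable in layer 1:

$$O_{ij}^{(2)} = \exp\left(-\frac{\left(O_i^{(1)} - m_{ij}\right)^2}{\sigma_{ij}^2}\right) \tag{2}$$

where $m_{ij}$ and $\sigma_{ij}$ are the center and width, respectively, of the Gaussian membership function of the $j$-th term of the $i$-th input variable $x_i$.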

3. Layer 3 (Rule layer): The fuzzy AND operator is used to integrate the fuzzy rules. The output $\phi_j$ of rule node $j$ represents the spatial firing strength of its corresponding rule:

$$\phi_j(t) = \prod_{i=1}^{n} O_{ij}^{(2)}(t) \tag{3}$$

Each node in this layer has a recurrent fuzzy rule node that forms an internal feedback loop:

$$O_j^{(3)}(t) = \lambda_j\,\phi_j(t) + \left(1 - \lambda_j\right) O_j^{(3)}(t-1) \tag{4}$$

where $\lambda_j \in [0, 1]$ is a recurrent parameter that determines the ratio between the contributions of the current and past states.

4. Layer 4 (Defuzzification layer): Each node in this layer is called an output linguistic node and corresponds to one output linguistic variable. This layer performs the defuzzification operation:

$$y_k = O_k^{(4)} = \frac{\sum_{j=1}^{R} w_{jk}\,O_j^{(3)}}{\sum_{j=1}^{R} O_j^{(3)}} \tag{5}$$

where $R$ is the number of rules and the link weight $w_{jk}$ is the center of the membership function of the $j$-th term of the $k$-th output linguistic variable.
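The four layers can be illustrated with a minimal NumPy forward pass. This is a sketch under stated assumptions, not the authors' implementation: it assumes the product as the fuzzy AND, the rule-layer feedback O3(t) = λ·φ(t) + (1 − λ)·O3(t − 1), and a normalized weighted-average defuzzifier. The class name `RecurrentFuzzyNN` and its arguments are hypothetical, and structure learning (adding rules) is omitted.

```python
import numpy as np


class RecurrentFuzzyNN:
    """Forward pass of a 4-layer RFNN with rule-layer feedback (illustrative sketch).

    Assumed forms: product AND, O3(t) = lam * phi(t) + (1 - lam) * O3(t - 1),
    and a normalized weighted-average output layer.
    """

    def __init__(self, centers, widths, lam, weights):
        self.m = np.asarray(centers, dtype=float)   # (R, n) Gaussian centers
        self.s = np.asarray(widths, dtype=float)    # (R, n) Gaussian widths
        self.lam = np.asarray(lam, dtype=float)     # (R,) recurrent parameters in [0, 1]
        self.w = np.asarray(weights, dtype=float)   # (R, n_out) output link weights
        self.o3 = np.zeros(len(self.m))             # rule-layer outputs at t - 1

    def reset(self):
        """Clear the internal state, e.g. at the start of a new trial."""
        self.o3 = np.zeros(len(self.m))

    def step(self, x):
        x = np.asarray(x, dtype=float)                         # layer 1: pass-through
        mu = np.exp(-((x - self.m) ** 2) / self.s ** 2)        # layer 2: (R, n) memberships
        phi = np.prod(mu, axis=1)                              # layer 3: spatial firing strength
        self.o3 = self.lam * phi + (1.0 - self.lam) * self.o3  # internal feedback loop
        denom = np.sum(self.o3) + 1e-12                        # guard against division by zero
        return self.o3 @ self.w / denom                        # layer 4: (n_out,) output
```

For the setting in this paper, `x` would be the 12-element feature vector of one window and the output the three hand coordinates.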

2.5.2. Learning of the Model

1. Structure learning: Fuzzy rules are generated from the training data by a clustering algorithm. During learning, rules are added or removed by comparing their firing strengths with a predefined threshold.

2. Parameter learning: All the parameters (center, width, recurrent parameter, and link weight) are learned by a gradient descent algorithm. The cost function $E$, based on the squared error, is defined as

$$E(t) = \frac{1}{2}\sum_{k=1}^{3}\left(y_k^d(t) - y_k(t)\right)^2 \tag{6}$$

where $y_k^d$ is the desired output and $y_k$ is the actual output.

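Using the backpropagation algorithm, the parameters in the corresponding layers are updated as

$$m_{ij}(l+1) = m_{ij}(l) - \eta_m \frac{\partial E}{\partial m_{ij}} \tag{7}$$

$$\sigma_{ij}(l+1) = \sigma_{ij}(l) - \eta_\sigma \frac{\partial E}{\partial \sigma_{ij}} \tag{8}$$

$$\lambda_j(l+1) = \lambda_j(l) - \eta_\lambda \frac{\partial E}{\partial \lambda_j} \tag{9}$$

$$w_{jk}(l+1) = w_{jk}(l) - \eta_w \frac{\partial E}{\partial w_{jk}} \tag{10}$$

where $\eta_m$, $\eta_\sigma$, $\eta_\lambda$, and $\eta_w$ are the learning rates for the center, width, recurrent parameter, and link weight, respectively, and $l$ is the iteration index.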
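As an illustration of the gradient-descent update for the link weights only, the sketch below assumes the normalized weighted-average output y_k = Σ_j w_jk O_j / Σ_j O_j and the squared-error cost E = ½ Σ_k (y_k^d − y_k)². Under these assumptions the gradient has the closed form ∂E/∂w_jk = −(y_k^d − y_k) O_j / Σ_j O_j. The function name `update_output_weights` is hypothetical; updating the centers, widths, and recurrent parameters would require backpropagating through the membership and feedback layers, which is not shown.

```python
import numpy as np


def update_output_weights(w, o3, y_desired, eta_w=0.01):
    """One gradient-descent step on the output link weights (sketch).

    Assumes y = o3 @ w / sum(o3) and E = 0.5 * sum((y_desired - y) ** 2).
    w: (R, n_out) weights, o3: (R,) rule-layer outputs, y_desired: (n_out,).
    Returns the updated weights and the cost evaluated before the update.
    """
    w = np.asarray(w, dtype=float)
    o3 = np.asarray(o3, dtype=float)
    yd = np.asarray(y_desired, dtype=float)
    norm = o3 / (o3.sum() + 1e-12)     # normalized firing strengths
    y = norm @ w                       # current network output
    err = yd - y
    cost = 0.5 * np.sum(err ** 2)
    grad = -np.outer(norm, err)        # dE/dw_jk = -(yd_k - y_k) * norm_j
    return w - eta_w * grad, cost
```

Repeating this step on a fixed input drives the output toward the target, which is a quick sanity check of the gradient sign.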

Processed EMG activity from skeletal muscles precedes mechanical tension by 50–100 ms. This electromechanical delay (the time delay between the electromyogram and the related mechanical output) motivated us to develop a prediction model. The features of the EMG signal at the current time $t$ were used to predict the 3-D hand position at the advanced time $t + \Delta t$:

$$\mathbf{P}(t + \Delta t) = f\left(\mathbf{F}(t)\right) \tag{11}$$

where $\mathbf{P}(t + \Delta t)$ is the three-dimensional position of the hand at time $t + \Delta t$, and $f$ is a function that takes the EMG signal feature $\mathbf{F}(t)$ at time $t$.

The model in (11) is a prediction model in the strict sense, as it takes the current state of the input to predict the position of the hand at a future time. As shown in Figure 9, the features of the EMG signal at the current time were mapped to the 3-D hand position at an advanced time. During data collection, the EMG and kinematic data were synchronized. Subsequently, the signals were processed, and new data points were constructed by time-window analysis (i.e., the EMG features were mapped to the 3-D hand position). To construct the prediction model in (11), we shifted the position signal forward in time by a window length. Prediction time horizons from 50 to 300 ms in increments of 50 ms were analyzed.
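The time shift that turns window-aligned data into prediction pairs can be sketched as follows. The sketch assumes that the features and positions are already aligned window by window and that the prediction horizon is a whole multiple of a (hypothetical) window step; the function name `make_prediction_pairs` is also hypothetical.

```python
import numpy as np


def make_prediction_pairs(features, positions, horizon_ms, step_ms):
    """Pair EMG features at window i with the hand position at window i + shift.

    features: (n_windows, n_features); positions: (n_windows, 3), aligned
    window by window. horizon_ms must be a multiple of the window step step_ms.
    """
    if horizon_ms % step_ms != 0:
        raise ValueError("horizon_ms must be a multiple of step_ms")
    shift = horizon_ms // step_ms
    if shift == 0:
        return features, positions          # current-time estimation, no shift
    return features[:-shift], positions[shift:]
```

Each horizon from 50 to 300 ms then yields its own training set, with `shift` fewer pairs than the unshifted data.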

2.6. Performance Index

Normalized root mean square error (NRMSE) and the Pearson correlation coefficient (CC) were used to measure prediction accuracy. NRMSE is a widely used performance metric in continuous motion prediction. It is a non-dimensional form of the root mean square error, which makes it useful for comparing errors in quantities with different units and for comparing models of different scales. CC measures the strength of the association between the actual and predicted values [13]. Since NRMSE measures the error while CC measures the similarity between the predicted and actual trajectories, combining the two indexes enables a comprehensive evaluation of prediction performance.

$$\mathrm{NRMSE} = \frac{\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i^d - y_i\right)^2}}{y_{\max}^d - y_{\min}^d} \tag{12}$$

$$\mathrm{CC} = \frac{\sum_{i=1}^{N}\left(y_i^d - \bar{y}^d\right)\left(y_i - \bar{y}\right)}{\sqrt{\sum_{i=1}^{N}\left(y_i^d - \bar{y}^d\right)^2}\sqrt{\sum_{i=1}^{N}\left(y_i - \bar{y}\right)^2}} \tag{13}$$

where $\bar{y}^d$ is the mean of the desired value, $\bar{y}$ is the mean of the actual value, $N$ is the total number of data points, $y_{\max}^d$ is the maximum of the desired value, and $y_{\min}^d$ is the minimum of the desired value.
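Both indexes are straightforward to compute per axis. The following sketch implements NRMSE normalized by the range of the desired signal and the Pearson correlation coefficient; the function names are illustrative.

```python
import numpy as np


def nrmse(y_desired, y_pred):
    """RMSE normalized by the range (max - min) of the desired signal."""
    y_desired = np.asarray(y_desired, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rmse = np.sqrt(np.mean((y_desired - y_pred) ** 2))
    return rmse / (y_desired.max() - y_desired.min())


def pearson_cc(y_desired, y_pred):
    """Pearson correlation coefficient between desired and predicted signals."""
    dd = np.asarray(y_desired, dtype=float)
    dp = np.asarray(y_pred, dtype=float)
    dd = dd - dd.mean()
    dp = dp - dp.mean()
    return np.sum(dd * dp) / np.sqrt(np.sum(dd ** 2) * np.sum(dp ** 2))
```

For a 3-D trajectory, each function would be applied separately to the x, y, and z components.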

4. Conclusions

The prediction of continuous human motion intention is a crucial element of human–robot collaboration systems. The EMG signal is one means of inferring human intention. Several parameters can be predicted from the EMG signal of the human upper limb, and the predicted output can be fed to the robot to perform designed tasks. Hand position is one of these parameters.

In this study, we aimed to predict the 3-D hand position from EMG signals alone, for use in information transfer from human to robot. First, we designed two tasks with complex trajectories associated with daily life, each with slow and quick motion scenarios. We then constructed an RFNN prediction model that maps the EMG signals to 3-D hand positions and analyzed its prediction accuracy using CC and NRMSE as performance metrics. We also analyzed the impact of speed on prediction accuracy. We found that the 3-D hand position can be predicted well up to 250 ms into the future from the EMG signal alone. Tasks performed with quick motion had slightly better prediction performance than those performed with slow motion; however, the difference in prediction accuracy between quick and slow motion was not statistically significant. This result implies that once the model is trained and its parameters are identified, its performance is not significantly affected by changes in speed. Concerning the algorithm, we found that the RFNN performs well in decoding time-varying systems. The novelty of this study is that we were able to predict the 3-D hand position from the EMG signal without considering the degrees of freedom at the joints. The predicted parameter can be fed directly to a robot controller to guide the end effector along the required trajectories.

This study has several limitations that need research attention. First, more tasks should be designed and analyzed to generalize our results to the general hand motions performed by a person. Second, during EMG signal acquisition, the electrodes could shift from the target muscle or lose skin contact, which can distort the EMG signals and reduce the accuracy of intention prediction. Third, the non-stationary characteristics of the EMG signal impair the performance and robustness of motion intention prediction. Overall, EMG has great potential for predicting continuous human intention, such as the 3-D hand position of the upper limb. However, prediction accuracy needs to be improved to accommodate more of the daily activities relevant to human–robot collaboration systems. Hence, our future research will focus on improving prediction performance and testing the system in real time.