Combining Augmented Reality and 3D Printing to Improve Surgical Workflows in Orthopedic Oncology: Smartphone Application and Clinical Evaluation

Abstract

1. Introduction

2. Materials and Methods

2.1. Patient Selection

2.2. Image Processing and Model Manufacturing

2.2.1. Medical Image Acquisition and Segmentation

2.2.2. Computer-Aided Design

2.2.3. 3D Printing

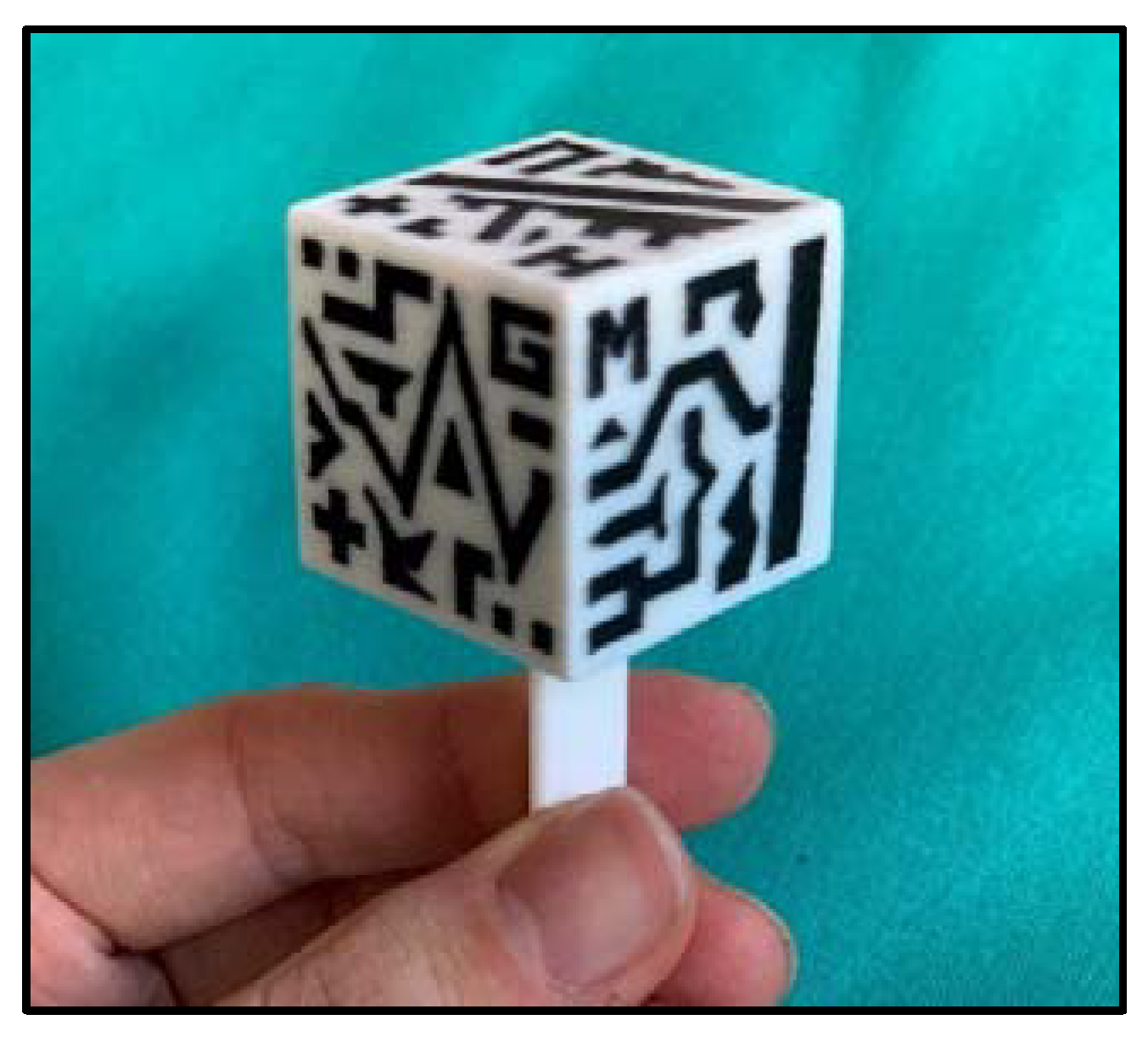

2.3. Augmented Reality System

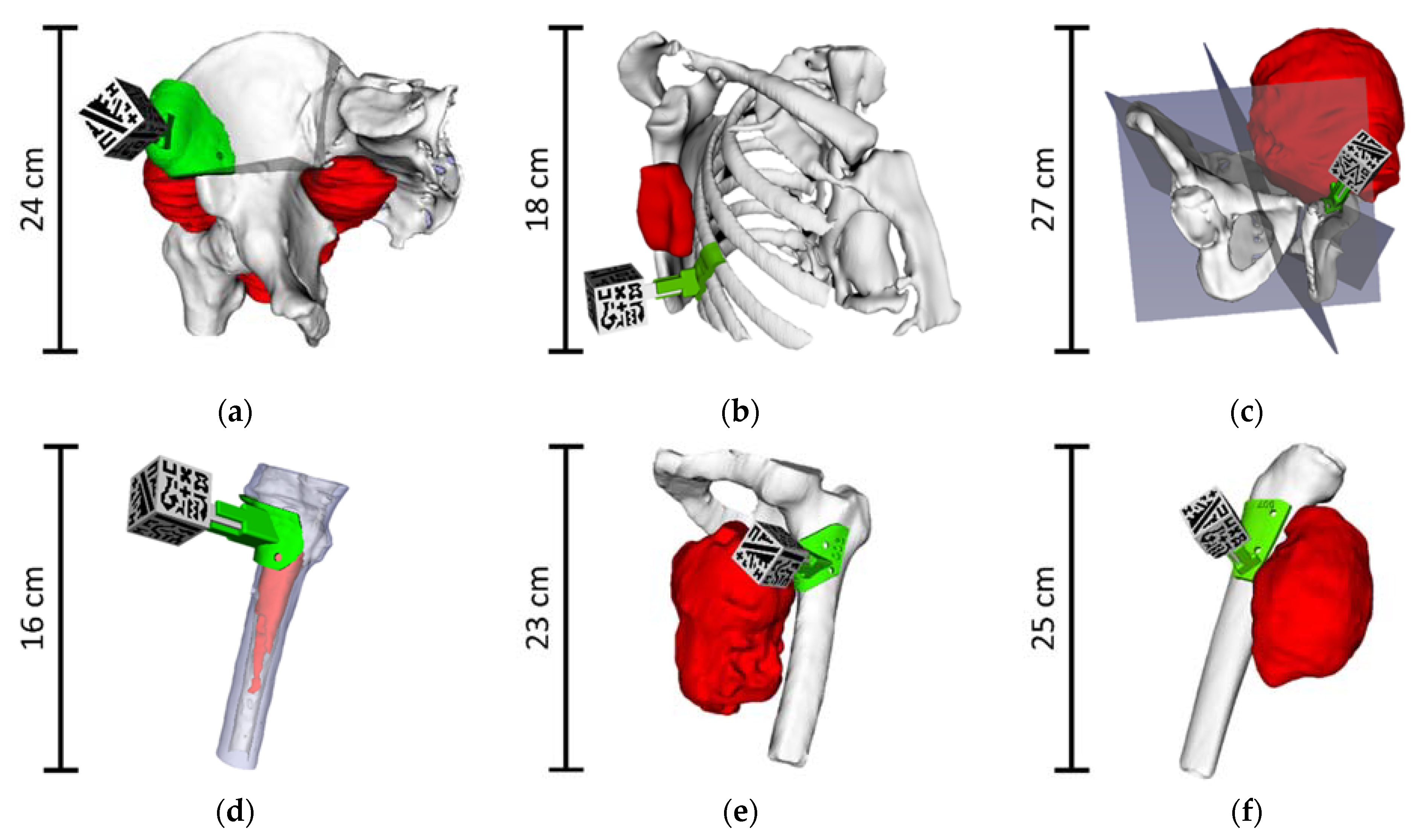

- In Demo mode, all virtual 3D models are displayed around the AR marker without any patient registration (Figure 4a). The AR marker can be rotated to view the virtual models from any angle.

- Clinic mode displays the virtual 3D models in their corresponding positions with respect to the surgical guide, which is registered to the cubic marker (Figure 4b). This mode is designed to be used with the surgical guide fixed on a 3D-printed bone (or bone fragment), allowing for surgical planning and training.

- Surgery mode is intended for use during the actual surgical intervention. The surgical guide is attached to the patient’s bone, which solves the registration between the patient and the AR system. The main difference from Clinic mode is that the models essential to the surgeon, such as the tumor or the cutting planes, are overlaid on the patient to guide the operation in real time. In addition, an occlusion texture can be assigned to the bone model within the app so that it hides the models behind it, reproducing the view the surgeon would have if the actual bone were occluding these elements (Figure 4c).
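In Clinic and Surgery modes, the virtual models land in the right place because their pose follows from a chain of rigid transforms anchored at the tracked marker. The following is a minimal sketch of that idea, not the app's implementation. It assumes 4×4 homogeneous matrices in millimetres and three hypothetical frames (camera, marker/guide, CT image) with made-up poses; all function and variable names are illustrative.

```python
"""Sketch: chaining rigid transforms to place patient models relative to a tracked marker.

Assumptions (not from the paper): poses are 4x4 homogeneous matrices in mm,
and the frame names and pose values below are hypothetical.
"""
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices (a @ b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid(rz_deg: float, tx: float, ty: float, tz: float) -> Matrix:
    """Rigid transform: rotate about z by rz_deg, then translate by (tx, ty, tz)."""
    c, s = math.cos(math.radians(rz_deg)), math.sin(math.radians(rz_deg))
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply(t: Matrix, p: tuple[float, float, float]) -> tuple[float, float, float]:
    """Map a 3D point through a homogeneous transform."""
    x, y, z = p
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3))


# camera <- marker: hypothetical per-frame estimate from the AR tracker (changes as the phone moves)
T_cam_marker = rigid(90.0, 0.0, 0.0, 300.0)
# marker <- guide: hypothetical fixed offset, since the cube is rigidly attached to the guide
T_marker_guide = rigid(0.0, 0.0, -40.0, 0.0)
# guide <- image: hypothetical, assuming guide and models share the CT coordinate system
T_guide_image = rigid(0.0, -10.0, 0.0, 0.0)

# A single composed transform places every model (bone, tumor, cutting planes) in the camera view.
T_cam_image = matmul(T_cam_marker, matmul(T_marker_guide, T_guide_image))

tumor_point_image = (10.0, 40.0, 5.0)  # hypothetical point in CT coordinates (mm)
print(apply(T_cam_image, tumor_point_image))
```

In this sketch only `T_cam_marker` changes as the smartphone moves; the other two factors are fixed by design, which mirrors why attaching the guide to the bone is enough to register the patient to the AR scene.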

2.4. Augmented Reality System Performance

2.4.1. Surgical Guide Positioning Error

2.4.2. Augmented Reality Tracking Error

2.5. Integration of the Augmented Reality System in the Surgical Workflow

3. Results

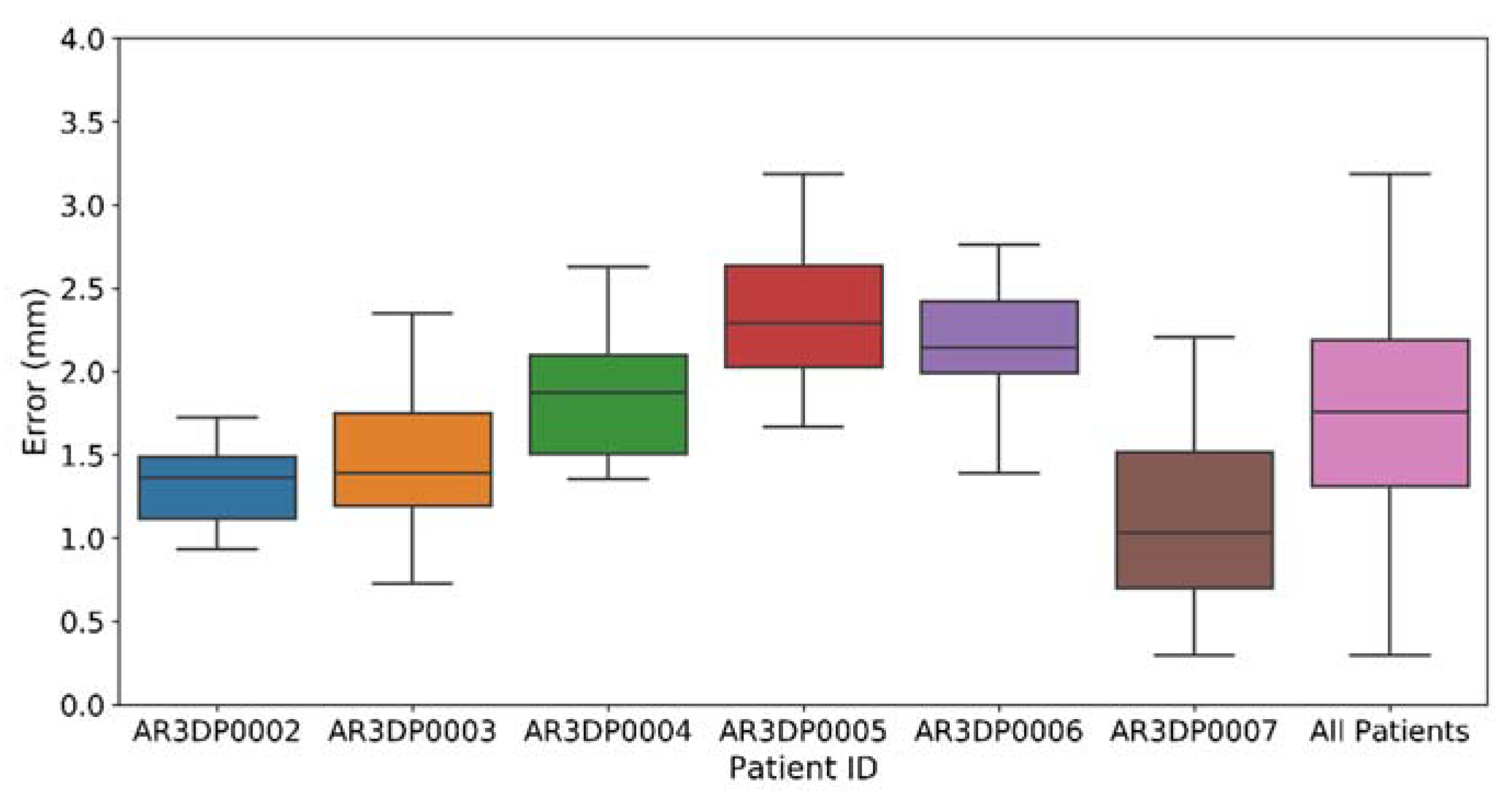

3.1. Augmented Reality Performance

3.2. Integration of the Augmented Reality System in the Surgical Workflow

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Case ID | Gender/Age | Diagnosis | Tumor Location | Tumor Size [cm] |
|---|---|---|---|---|
| AR3DP0002 | M/62 | Myxofibrosarcoma | Right buttock | 18 × 19 × 17 |
| AR3DP0003 | F/71 | Liposarcoma | Right periscapular region | 3 × 3 × 6 |
| AR3DP0004 | M/19 | Ewing Sarcoma | Left iliac crest | 13 × 19 × 16 |
| AR3DP0005 | F/66 | Fibrous dysplasia | Left femur | 4 × 2 × 8 |
| AR3DP0006 | M/79 | Myxofibrosarcoma | Left thigh | 10 × 15 × 12 |
| AR3DP0007 | F/84 | Undifferentiated pleomorphic sarcoma | Right calf | 10 × 8 × 14 |

| Case ID | CT Resolution [mm] | CT–Surgery Time Span [Days] |
|---|---|---|
| AR3DP0002 | 0.93 × 0.93 × 1.00 | 13 |
| AR3DP0003 | 1.31 × 1.31 × 3.00 | 101 |
| AR3DP0004 | 0.98 × 0.98 × 2.50 | 137 |
| AR3DP0005 | 0.78 × 0.78 × 0.80 | 92 |
| AR3DP0006 | 1.10 × 1.10 × 5.00 | 94 |
| AR3DP0007 | 1.13 × 1.13 × 3.00 | 83 |

| Case ID | Phantom Dimension [cm] |
|---|---|
| AR3DP0002 | 17 × 15 × 13 |
| AR3DP0003 | 12 × 11 × 9 |
| AR3DP0004 | 22 × 22 × 19 |
| AR3DP0005 | 16 × 10 × 5 |
| AR3DP0006 | 17 × 15 × 10 |
| AR3DP0007 | 22 × 12 × 11 |

| Question | Surgeon 1 | Surgeon 2 | Surgeon 3 | Surgeon 4 | Surgeon 5 | Surgeon 6 | Avg. Score |
|---|---|---|---|---|---|---|---|
| 1. AR in surgeries (general) | 5 | 4 | 5 | 5 | 3 | 5 | 4.5 |
| 2. AR in surgeon’s operations (general) | 5 | 4 | 3 | 3 | 4 | 5 | 4.0 |
| 3. DEMO: surgeon understanding | 5 | 4 | 5 | 5 | 4 | 5 | 4.7 |
| 4. DEMO: surgical planning | 5 | 4 | 5 | 5 | 5 | 5 | 4.8 |
| 5. DEMO: patient communication | 5 | 5 | 5 | 5 | 5 | 5 | 5.0 |
| 6. CLINIC: PLA bone fragment | 5 | 4 | 4 | 5 | 4 | 5 | 4.5 |
| 7. CLINIC: practice with AR | 5 | 3 | 4 | 5 | 5 | 5 | 4.5 |
| 8. CLINIC: patient communication | 5 | 3 | 5 | 5 | 3 | 5 | 4.3 |
| 9. SURGERY: tumor location | 5 | 5 | 4 | 5 | 4 | 5 | 4.7 |
| 10. SURGERY: increase of accuracy | 5 | 4 | 4 | 5 | 5 | 5 | 4.7 |
| 11. SURGERY: phone case | 5 | 4 | 5 | 5 | 5 | 5 | 4.8 |
| 12. GENERIC: ease of interpretation | 5 | 5 | 4 | 5 | 4 | 5 | 4.7 |
| 13. GENERIC: patient communication | 5 | 5 | 5 | 5 | 5 | 5 | 5.0 |
| 14. GENERIC: surgeon’s confidence | 4 | 4 | 5 | 5 | 4 | 5 | 4.5 |
| 15. GENERIC: use this workflow | 5 | 4 | 4 | 5 | 4 | 5 | 4.5 |
| Avg. Score | 4.9 | 4.1 | 4.5 | 4.9 | 4.3 | 5.0 | 4.6 |
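The "Avg. Score" entries in the surgeon questionnaire can be checked directly from the individual 1–5 scores. A short sketch, assuming each average is a plain arithmetic mean rounded to one decimal place (the rounding rule is an assumption, not stated in the source):

```python
"""Sketch: recomputing questionnaire averages from individual Likert scores.

Assumption: every "Avg. Score" is an arithmetic mean rounded to one decimal.
"""
from statistics import mean

# Rows = questions 1-15, columns = surgeons 1-6 (values copied from the table).
scores = [
    [5, 4, 5, 5, 3, 5],
    [5, 4, 3, 3, 4, 5],
    [5, 4, 5, 5, 4, 5],
    [5, 4, 5, 5, 5, 5],
    [5, 5, 5, 5, 5, 5],
    [5, 4, 4, 5, 4, 5],
    [5, 3, 4, 5, 5, 5],
    [5, 3, 5, 5, 3, 5],
    [5, 5, 4, 5, 4, 5],
    [5, 4, 4, 5, 5, 5],
    [5, 4, 5, 5, 5, 5],
    [5, 5, 4, 5, 4, 5],
    [5, 5, 5, 5, 5, 5],
    [4, 4, 5, 5, 4, 5],
    [5, 4, 4, 5, 4, 5],
]

# Mean across surgeons for each question (last column of the table).
per_question = [round(mean(row), 1) for row in scores]
# Mean across questions for each surgeon (last row of the table).
per_surgeon = [round(mean(col), 1) for col in zip(*scores)]
# Mean over all 15 x 6 = 90 individual responses.
overall = round(mean(v for row in scores for v in row), 1)

print(per_question)
print(per_surgeon)
print(overall)
```

Under this assumption the per-question and per-surgeon means reproduce the table, and the overall value is the mean of all 90 responses, 415/90 ≈ 4.61.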

| Question | Patient 1 | Patient 2 | Avg. Score |
|---|---|---|---|
| 1. Pathology understanding before ARHealth | 2 | 4 | 3.0 |
| 2. Pathology understanding after ARHealth | 5 | 5 | 5.0 |
| 3. General opinion about AR | 5 | 5 | 5.0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Moreta-Martinez, R.; Pose-Díez-de-la-Lastra, A.; Calvo-Haro, J.A.; Mediavilla-Santos, L.; Pérez-Mañanes, R.; Pascau, J. Combining Augmented Reality and 3D Printing to Improve Surgical Workflows in Orthopedic Oncology: Smartphone Application and Clinical Evaluation. Sensors 2021, 21, 1370. https://doi.org/10.3390/s21041370
