Hand Gesture Recognition Using Single Patchable Six-Axis Inertial Measurement Unit via Recurrent Neural Networks

Abstract

1. Introduction

2. Related Works

3. Methods

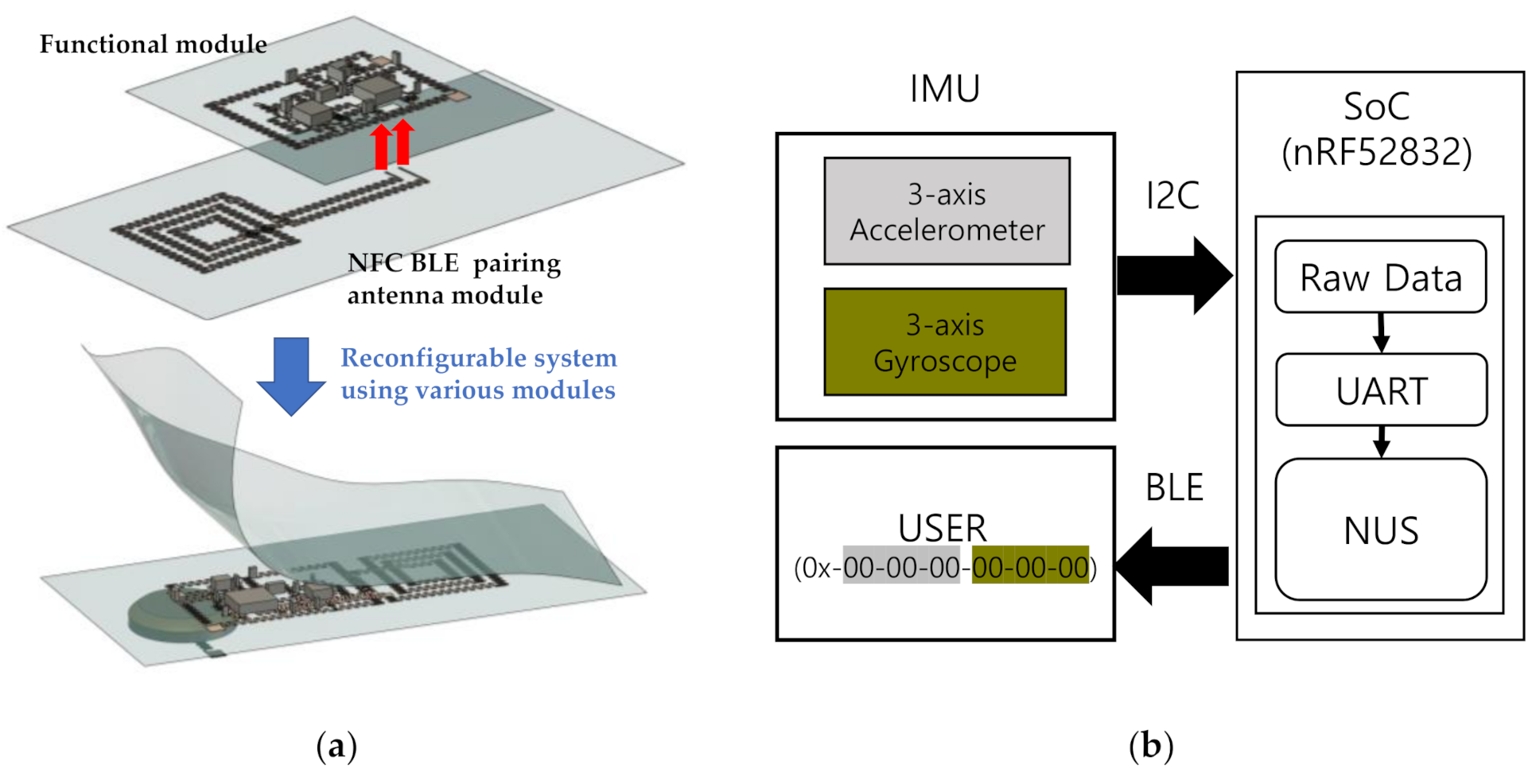

3.1. Design and Implementation of Patchable IMU

3.1.1. System Design

3.1.2. System Implementation Process

3.2. Hand Gesture Recognition via Recurrent Neural Networks

3.2.1. RNN with Bidirectional LSTM

3.2.2. RNN with GRU

3.3. RNN-Based Models Implementation

3.4. Database and Data Preparation

3.5. Evaluation Methodologies

4. Results

4.1. Implementation Results of Patchable IMU

4.2. Hand Gesture Classification with Public Database

4.3. Hand Gesture Classification with Collected Dataset

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

(a)

| (%) | Circle | V Shape | X Shape |
| --- | --- | --- | --- |
| Circle | 100 | 0 | 0 |
| V Shape | 0 | 94.85 | 5.15 |
| X Shape | 5 | 1.67 | 93.33 |

(b)

| (%) | Circle | V Shape | X Shape |
| --- | --- | --- | --- |
| Circle | 100 | 0 | 0 |
| V Shape | 0 | 99.16 | 0.84 |
| X Shape | 0 | 1.67 | 98.33 |

(a)

| (%) | Circle | V Shape | X Shape |
| --- | --- | --- | --- |
| Circle | 98.14 | 0.93 | 0.93 |
| V Shape | 0 | 92.66 | 7.34 |
| X Shape | 0 | 8.43 | 91.57 |

(b)

| (%) | Circle | V Shape | X Shape |
| --- | --- | --- | --- |
| Circle | 97.50 | 0 | 2.50 |
| V Shape | 0 | 96.72 | 3.28 |
| X Shape | 0 | 8.20 | 91.80 |
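
Each row in the tables above sums to 100%, which suggests row-normalized confusion matrices. As a minimal sketch, assuming rows are the true gestures and columns the predicted gestures (the tables themselves do not state this), per-class recall is the diagonal share of each row. The names `confusion_pct` and `per_class_recall` are illustrative and not taken from the paper; the values are copied from the first (a) table.

```python
import numpy as np

LABELS = ["Circle", "V Shape", "X Shape"]

# First (a) matrix from the tables above, copied as printed (values in %).
# Assumption: each row is a true gesture, each column a predicted gesture.
confusion_pct = np.array([
    [100.0, 0.0, 0.0],
    [0.0, 94.85, 5.15],
    [5.0, 1.67, 93.33],
])


def per_class_recall(matrix_pct):
    """Return the diagonal share of each row, in percent."""
    m = np.asarray(matrix_pct, dtype=float)
    return 100.0 * np.diag(m) / m.sum(axis=1)


recall = per_class_recall(confusion_pct)
mean_recall = float(recall.mean())

for label, value in zip(LABELS, recall):
    print(f"{label}: {value:.2f}%")
print(f"Mean per-class recall: {mean_recall:.2f}%")
```

The mean of the per-class values matches overall accuracy only when every gesture has the same number of test samples. The tables do not give the sample counts, so the figure is best read as a class-averaged recall.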

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Valarezo Añazco, E.; Han, S.J.; Kim, K.; Lopez, P.R.; Kim, T.-S.; Lee, S. Hand Gesture Recognition Using Single Patchable Six-Axis Inertial Measurement Unit via Recurrent Neural Networks. Sensors 2021, 21, 1404. https://doi.org/10.3390/s21041404
