1. Introduction

Object classification is an important task that occurs throughout daily life. Human classification typically relies on three main elements: vision, haptic sensing and experience. When any of these elements is missing, classification can become challenging. For example, an experienced farmer can grade crops by quality, but the same task would be challenging for a novice or a vision-impaired operator. Although vision provides rich information for object classification, there are scenarios where vision is limited or unavailable. For example, vision is often unavailable in rough terrain during a rescue mission because lighting is blocked.

This paper aims to develop a wearable tool that supports classification using the flexion of the fingers, so that classification remains feasible during an operation or when visual recognition is unavailable.

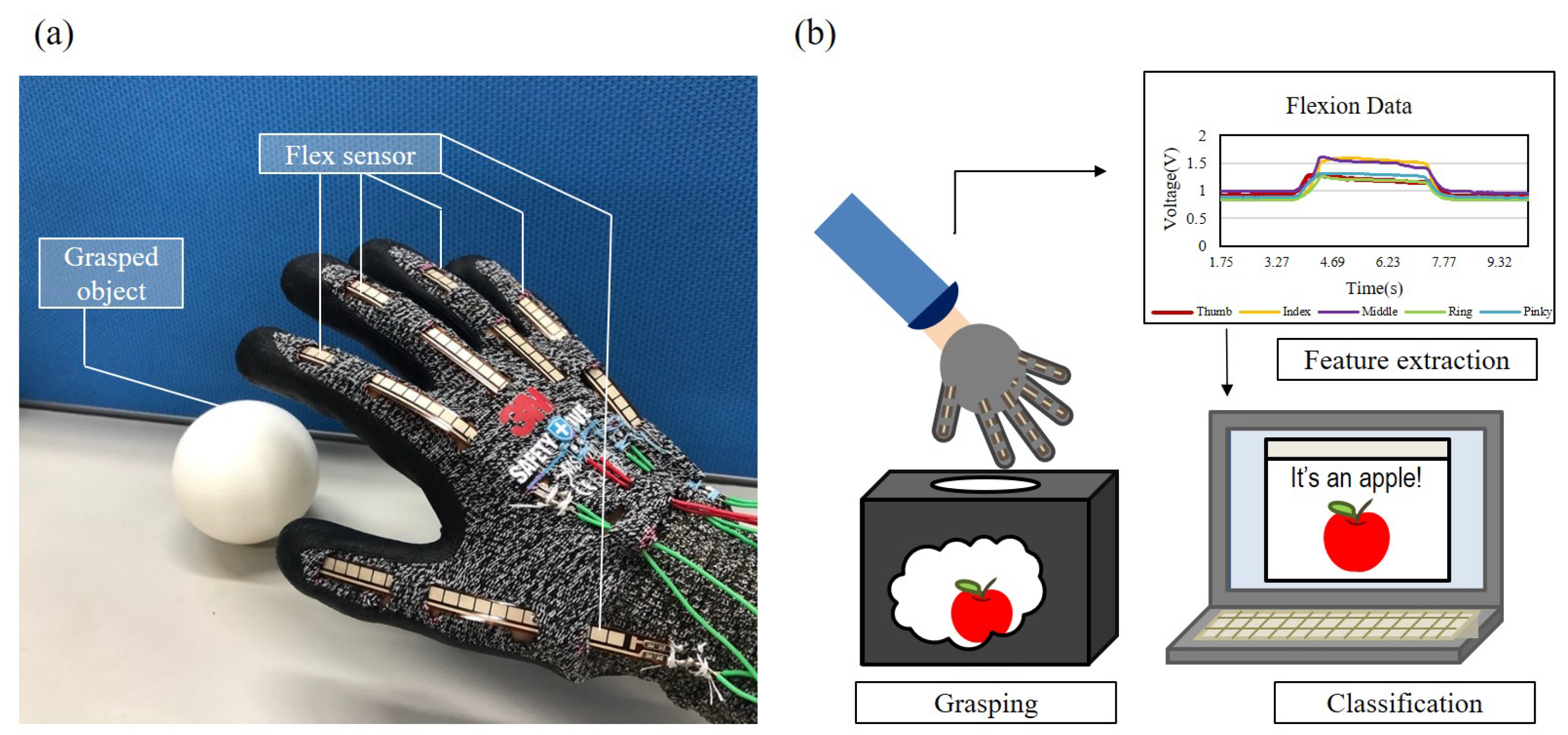

Figure 1 shows an overview of the proposed method, where Figure 1a,b show a photo of the proposed flexion glove and an illustration of the classification process, respectively. Object classification is performed using the flexion signals from five flex sensors during a grasp. The flexion of each finger, also referred to as bending in this paper, is acquired by the flexion glove. Feature values are extracted from the flexion signals and fed into a support vector machine (SVM), a machine learning classifier, for object classification.

The human hand has great potential for object classification because of its special configuration: its fingers have different lengths and orientations. This configuration yields rich information while grasping an object and enables dexterous motion that is difficult for robots to imitate. For example, the five fingers of a hand can grasp and pinch in coordination with each other [1]. The human hand configuration is rarely employed in practical robotic grippers because it is complicated and singularities, in terms of kinematics and controller design, often exist. Even highly dexterous robots usually use fingers of the same length. For example, Li et al. developed a method to grasp an object with shape uncertainty, and the hands used in their work have fingers of equal length [2]. To take advantage of the human hand configuration for object sensing, a glove for human hands is chosen as the sensing source for our classification method.

Wearable devices for monitoring hand joint motion have been investigated from different perspectives in recent studies [3,4,5,6,7,8,9,10,11,12,13]. For example, Sundaram et al. developed a scalable tactile glove with 548 pressure-sensing points over the palm and fingers for learning the signatures of human grasping [5]. Hughes et al. integrated resistive-pressure sensors into a glove for sensing, proprioception and identification [4]. Saggio et al. investigated the electrical features of commercial resistive flex sensors [6]. Sbernini developed a glove using arrays of bend sensors that can measure three joints of a single finger to capture the posture of a human hand [8]. Such wearable devices can be applied in different fields. For example, sensory gloves have been developed as master-slave inputs for manipulating robot arms [9,10,11]. Wearable devices for rehabilitation have also been investigated [14,15,16]. For example, Kang et al. developed a tendon-driven robotic hand for assisting grasping and rehabilitation [17].

Object classification with machine learning is often conducted on visual data. The general approach is to extract features from the input data and then perform classification in the feature space [18,19,20]. Vision-based hand movement tracking systems are also very popular, but they are relatively costly and are limited when the view of the hand is blocked. Flexion-based sensors are low-cost, suit a variety of grasping postures, are easy to install on a glove, and have a long life cycle, typically over 1 million complete bends. Because of these advantages, flex sensors are chosen for measuring hand gestures in this work.

4. Results and Discussions

This section consists of three parts: the flexion signals obtained on the printed objects, the classification results with SVM, and the results of the “Grasp-to-see” application. The patterns of flexion signals for grasping objects from the printed set are investigated first. Because the sizes and shapes of these objects are specified, the patterns and trends of the flexion signals can be discussed systematically. The second part focuses on the performance of the SVM classification on the sets of printed objects and daily-life objects. The flexion signals of the five fingers are used as the input features for the SVM. The convergence of the SVM training and the classification accuracy on both object sets are presented. Finally, a real-time application of the proposed flexion glove is demonstrated on daily-life objects. The details are presented in the following sections.

4.1. Flexion Signals on the Printed Objects

Figure 9 shows nine examples of flexion data from grasping each of the nine printed objects, along with photos of the grasps. For better visualization of the grasping gestures, the photos in Figure 9 were taken with the same subject performing the grasps without wearing the glove. Figure 9a–c show the flexion results obtained from grasping the cuboid, cylinder and sphere objects, respectively. Each shape has three sizes, as specified in Section 3.1. The grasps are shown from the smallest object in the top row to the largest in the bottom row of Figure 9. The five curve colors in each result indicate the flexion of the five fingers, where red, yellow, purple, green and blue represent the thumb, index, middle, ring and pinky fingers, respectively.

For grasping cuboid objects, as in the examples shown in Figure 9a, the phases of picking, holding and releasing can be clearly distinguished. As shown in the first two rows of Figure 9a, the index and middle fingers tend to bend together in the holding phase, while the ring finger and the pinky form another group that bends together. The flexion of the thumb peaks during the picking phase and relaxes in the holding phase, except when grasping the largest cuboid, which has a width of 9 cm. Grasping the 9 cm cuboid is a special case with a different flexion pattern: the middle finger bends the most, while there is nearly no flexion in the pinky. This could be because the middle finger handled the largest object with additional flexion in the DIP and PIP joints.

For grasping cylinder objects, as in the examples shown in Figure 9b, the grasping patterns are similar to those for the cuboids in Figure 9a, except for the flexion of the thumb. Two semi-synchronized flexion groups, index-middle and ring-pinky, bend together in the holding phase for the small and mid-size cylinders. For the largest cylinder, the flexion of the thumb and middle finger is greater than that of the other three fingers, which suggests that the thumb and middle finger are dominant in grasping the largest cylinder.

For grasping sphere objects, as in the examples shown in Figure 9c, the flexion signals of the different fingers tend to be similar, except for the smallest sphere, where the thumb and ring finger have relatively small flexion compared with the others. This may be because the subject held the sphere at the position of the ring finger in the fist, so that the ring finger could not curl as much as the index, middle and pinky fingers. For the mid-size and large spheres, all five fingers show the same tendency, which is reasonable because the shape of the objects is fully symmetric.

Figure 10 shows the features extracted from grasping the printed objects. The features are the stable flexion signals during the holding phase. The values are obtained with the criterion in Equation (4) from the signals shown in Figure 9. The averages and standard deviations of the features over all 180 tests, from 20 repetitions for each of the nine objects, are plotted as data points and error bars in the radar plots in Figure 10. A clear tendency can be observed in the three charts in Figure 10: the flexion signals decrease as the size of the object increases. This is because the fingers bend less to fit the exterior of a larger object. Another observation is that the flexion signal of the thumb usually does not change as much as that of the other fingers, while the standard deviations of the thumb are greater than those of the other four fingers. This can be interpreted as the subject tending to curl her other four fingers to hold the object, with the thumb playing a dispensable role.
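As a minimal sketch of how such holding-phase features and their statistics could be computed, the Python snippet below picks the flattest stretch of one finger's signal that lies above half of its peak, and then computes the mean and standard deviation per finger over repeated grasps. The flatness rule, the window length, the half-peak level, and the function names `holding_feature` and `summarize` are assumptions made for illustration; they stand in for the criterion in Equation (4), which is not reproduced here.

```python
from statistics import mean, stdev


def holding_feature(signal, window=4, level=0.5):
    """Mean of the flattest `window`-sample stretch of one finger's signal.

    Hypothetical stand-in for Equation (4): only stretches whose mean is at
    least `level` times the peak are considered, so the flat rest phase
    before and after the grasp is ignored. Returns None if none qualifies.
    """
    threshold = level * max(signal)
    best_spread, best_mean = None, None
    for start in range(len(signal) - window + 1):
        chunk = signal[start:start + window]
        chunk_mean = sum(chunk) / window
        if chunk_mean < threshold:
            continue  # too low to belong to the holding phase
        spread = max(chunk) - min(chunk)
        if best_spread is None or spread < best_spread:
            best_spread, best_mean = spread, chunk_mean
    return best_mean


def summarize(repetitions):
    """Per-finger (mean, standard deviation) over repeated grasps.

    `repetitions` is a list of feature vectors, one vector per grasp.
    """
    per_finger = zip(*repetitions)  # regroup values finger by finger
    return [(mean(values), stdev(values)) for values in per_finger]
```

With 20 repetitions of a five-finger feature vector per object, `summarize` would return five (mean, standard deviation) pairs, which correspond to the data points and error bars of one radar plot trace.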

According to the results presented in Figure 9 and Figure 10, the patterns of grasping objects of different sizes and shapes differ and are potentially distinguishable. Therefore, SVM, a machine learning method, is employed for further classification using the flexion signals.

4.2. Classification with SVM on Both Object Sets

The grasping task was performed 20 times on each object in both the set of printed objects and the set of daily-life objects, shown in Figure 7a,b, respectively. The features of the flexion signals were extracted with Equations (3) and (4). A total of 360 sets of grasping features were obtained, comprising 20 grasps of each of the 9 printed objects and 9 daily-life objects.

Figure 11 shows the convergence test, which indicates the relation between the number of training data and the accuracy of object classification on both object sets. For each object set, 45 of the 180 data are used for testing the classification accuracy, while the remaining 135 are used for training the classifier. To see the trend of convergence in training, the classifiers are trained with 27, 54, 81, 108 and 135 feature sets out of the total 135 training sets. The 45 flexion data for testing include 5 grasps of each of the 9 objects in the set. Figure 11a shows that, with 135 training data, the classification accuracy reaches 95.56% on the set of printed objects. Figure 11b shows that, with 135 training data, the classification accuracy reaches 88.89% on the set of daily-life objects.
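The split sizes and accuracies quoted above can be checked with a few lines of Python. The sketch below is illustrative only. The per-class composition of the test set follows the text (5 grasps per object), while the random shuffling, the seed, and the helper names `stratified_split` and `accuracy_percent` are assumptions rather than the procedure actually used. The count of 40 correct daily-life predictions is inferred from the reported 88.89% of 45 tests.

```python
import random

N_CLASSES = 9        # objects per set
REPS_PER_CLASS = 20  # grasps per object
TEST_PER_CLASS = 5   # grasps per object held out for testing


def stratified_split(labels, test_per_class, seed=0):
    """Return (train_idx, test_idx) with `test_per_class` test items per label."""
    rng = random.Random(seed)  # assumed seed, only for repeatability
    by_label = {}
    for i, label in enumerate(labels):
        by_label.setdefault(label, []).append(i)
    train_idx, test_idx = [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        test_idx.extend(indices[:test_per_class])
        train_idx.extend(indices[test_per_class:])
    return train_idx, test_idx


def accuracy_percent(n_correct, n_total):
    """Accuracy as a percentage rounded to two decimals."""
    return round(100.0 * n_correct / n_total, 2)


labels = [c for c in range(N_CLASSES) for _ in range(REPS_PER_CLASS)]
train_idx, test_idx = stratified_split(labels, TEST_PER_CLASS)

# Five equally spaced training-set sizes for the convergence test.
train_sizes = [len(train_idx) * k // 5 for k in range(1, 6)]

printed_accuracy = accuracy_percent(43, 45)     # 43 of 45 correct
daily_life_accuracy = accuracy_percent(40, 45)  # inferred: 40 of 45 correct
```

Here `train_sizes` reproduces the five training-set sizes used in the convergence test, and the two accuracy values match the percentages reported for Figure 11a,b.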

A confusion matrix is a common way to display the performance of a classifier. Figure 12a,b show the confusion matrices for the classification of the printed objects and the daily-life objects, respectively. Each confusion matrix includes 45 prediction results. The vertical and horizontal axes of the confusion matrices are the ground truth and the prediction of the SVM classifier, respectively. Thus, the prediction is more accurate when more data fall on the diagonal of the matrix, from upper-left to lower-right. The values in the matrix are the percentages of test data of a given ground-truth class that fall in each predicted class. For example, the value of 20% located at the ground truth of “cylinder (3 cm)” and the prediction of “cuboid (3 cm)” in Figure 12a indicates that 1 of the 5 cylinders with a width of 3 cm was misclassified as the 3 cm cuboid. An ideal classification would concentrate the values along the diagonal of the matrix, indicating that the predictions match the ground truth well.
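To make the percentage convention concrete, the following Python sketch builds a row-normalized confusion matrix, with ground truth along the rows and predictions along the columns. The function name `confusion_percent` and the two-class toy data are hypothetical. The toy data only reproduce the single 20% example quoted above and are not the full results of Figure 12a.

```python
def confusion_percent(truth, predicted, classes):
    """Row-normalized confusion matrix in percent.

    Rows are ground-truth classes and columns are predicted classes, so each
    row with at least one sample sums to 100.
    """
    index = {name: i for i, name in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for t, p in zip(truth, predicted):
        counts[index[t]][index[p]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([100.0 * c / total if total else 0.0 for c in row])
    return matrix


# Toy reduction to two classes with 5 test grasps each (illustrative data).
classes = ["cuboid (3 cm)", "cylinder (3 cm)"]
truth = ["cuboid (3 cm)"] * 5 + ["cylinder (3 cm)"] * 5
predicted = (["cuboid (3 cm)"] * 5
             + ["cylinder (3 cm)"] * 4 + ["cuboid (3 cm)"])  # one cylinder missed

matrix = confusion_percent(truth, predicted, classes)
```

In this toy case the entry at row “cylinder (3 cm)” and column “cuboid (3 cm)” is 20%, that is, 1 of 5 grasps, while the diagonal entries hold the correctly classified share of each class.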

According to the results in Figure 12a, almost all of the test data fall on the diagonal of the matrix. Of the 45 test data, 43 are correctly predicted, which gives an accuracy of 95.56%.

Figure 12b shows the confusion matrix of the predictions on the set of daily-life objects. The nine objects in this set have 135 feature sets for training and 45 for testing, the same setup as for the printed objects. The results show that the flexion glove can classify the daily-life objects with an accuracy of 88.89%.

The classification accuracies for the printed object set and the daily-life object set are 95.56% and 88.89%, respectively. The printed object set may have higher accuracy because its shapes and sizes are simple, which makes the grasping signals more distinguishable between classes. In contrast, the nine objects in the daily-life set were randomly chosen for the grasping task. Some of them have more complex shapes; for example, the ketchup and the cleaner combine different shapes. The classification also relies on the holding position. Although the subject was not asked to use a specific holding position, the actual holding positions were quite consistent during the experiments. An additional 15 tasks with a holding position from the top of each printed object were tested, and the accuracy dropped from 95% to 86% for classification on the data with the two holding positions. The results suggest that more training data may be needed to deal with random holding positions.

From the confusion matrix shown in Figure 12b, we find that the cereal box and the strawberry sticks box are the most frequently misclassified of the 9 classes. In the 45 tests, 40% of the cereal box grasps (2 of 5) were misclassified as the strawberry box, and 60% of the strawberry box grasps (3 of 5) were misclassified as the cereal box. Figure 13 compares the two misclassified cases. It is observed that the two cases share a degree of similarity in grasping posture regardless of the object shape. For example, the radar chart in Figure 13 is formed from the features of holding the two misclassified objects, and the data overlap heavily. The misclassification is believed to occur because the two objects have similar shapes. The sizes of the sides being held were 2.5 cm and 5 cm for the strawberry box and the cereal box, respectively. The main difference between the postures of holding the two objects is the angle between the thumb and index finger, while the flexion of the fingers did not change very much. This shows that the glove may not be very sensitive to cuboids that differ in thickness on one side. The classification may be significantly improved by installing an additional sensor between the thumb and index finger.

4.3. Grasp-to-See Application

The glove can perform object classification in real time with pre-trained classifiers. Photos of the real-time tests, referred to as the grasp-to-see application in this paper, are shown in Figure 14, where Figure 14a,b show step-by-step photos of the classification on the printed objects and the daily-life objects, respectively. In the grasp-to-see application, the user is first asked to sit in front of the computer and follow the instructions on the screen. Once the test starts, the user is instructed to pick up the object on the desk within 1 s; at the same time, the flexion signals start being collected through the serial port of an Arduino board and sent to the SVM program on the computer. After picking up the object, the user is instructed to hold it for 4 s so that the program can extract features from the flexion of his/her five fingers. The extracted features are fed into the SVM classifier, which takes around 1 to 1.5 s to classify the object. The whole process takes about 6 s to identify a given object among the pre-trained classes, and requires only a single grasp.
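The pick-hold-classify cycle described above can be outlined as a short Python sketch. It is a simplified illustration under stated assumptions: the sampling rate `rate_hz`, the use of a plain average over the holding phase as the feature, and the callables `read_sample` and `classify` are all hypothetical placeholders for the serial reading and the pre-trained SVM, which are not specified in this section.

```python
PICK_S = 1.0             # time allowed for picking up the object
HOLD_S = 4.0             # holding time used for feature extraction
CLASSIFY_S = (1.0, 1.5)  # reported range of classification time


def total_time_range():
    """Shortest and longest duration of one grasp-to-see cycle in seconds."""
    acquire = PICK_S + HOLD_S
    return acquire + CLASSIFY_S[0], acquire + CLASSIFY_S[1]


def grasp_to_see(read_sample, classify, rate_hz=50):
    """Run one pick-hold-classify cycle and return the predicted label.

    read_sample() returns one list of five flexion values (placeholder for
    the serial stream); classify(features) returns a label (placeholder for
    the pre-trained classifier). rate_hz is an assumed sampling rate.
    """
    n_pick = int(PICK_S * rate_hz)
    n_hold = int(HOLD_S * rate_hz)
    for _ in range(n_pick):
        read_sample()  # picking phase: read and discard
    hold = [read_sample() for _ in range(n_hold)]
    # One feature per finger: average flexion over the holding phase.
    features = [sum(values) / n_hold for values in zip(*hold)]
    return classify(features)
```

Under these assumptions, `total_time_range()` spans 6 to 6.5 s, which is consistent with the reported duration of about 6 s per identification.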

4.4. Future Work

The proposed classification method, like all other classification methods, has its own limits and a chance of misclassifying an object. The method can be further improved by practical solutions. For example, specially designed handles can be made for different tools, so that different types of tools can be better classified when working at night or in insufficient lighting. Furthermore, the presented results are based on one subject, and the method can also be applied to robotic hands. When the glove is worn by different subjects, calibration and initial training are needed prior to the test. Initial training is common to many recognition methods, such as fingerprint locks and face locks. The glove is based on a supervised learning method and is intended to classify known objects. A classification glove that automatically adapts to different subjects and to unknown objects is another interesting concept and could be follow-up work to the proposed glove.