Human-Robot Perception in Industrial Environments: A Survey

Abstract

1. Introduction

- HR Coexistence, where humans and robots share the same working space but perform tasks with different aims; here, the human is perceived as a generic obstacle to be avoided, and the robot action is limited to collision avoidance.

- HR Cooperation, in which humans and robots perform different tasks but with the same objective, which should be fulfilled simultaneously in terms of time and space. In this scenario, the collision avoidance algorithm includes human detection techniques, so the robot can differentiate the human operator from a generic object.

- HR Collaboration (HRC), where a direct interaction is established between the human operator and the robot while executing complex tasks. This can be achieved either by coordinated physical contact or by contactless actions, such as speech or intention recognition.

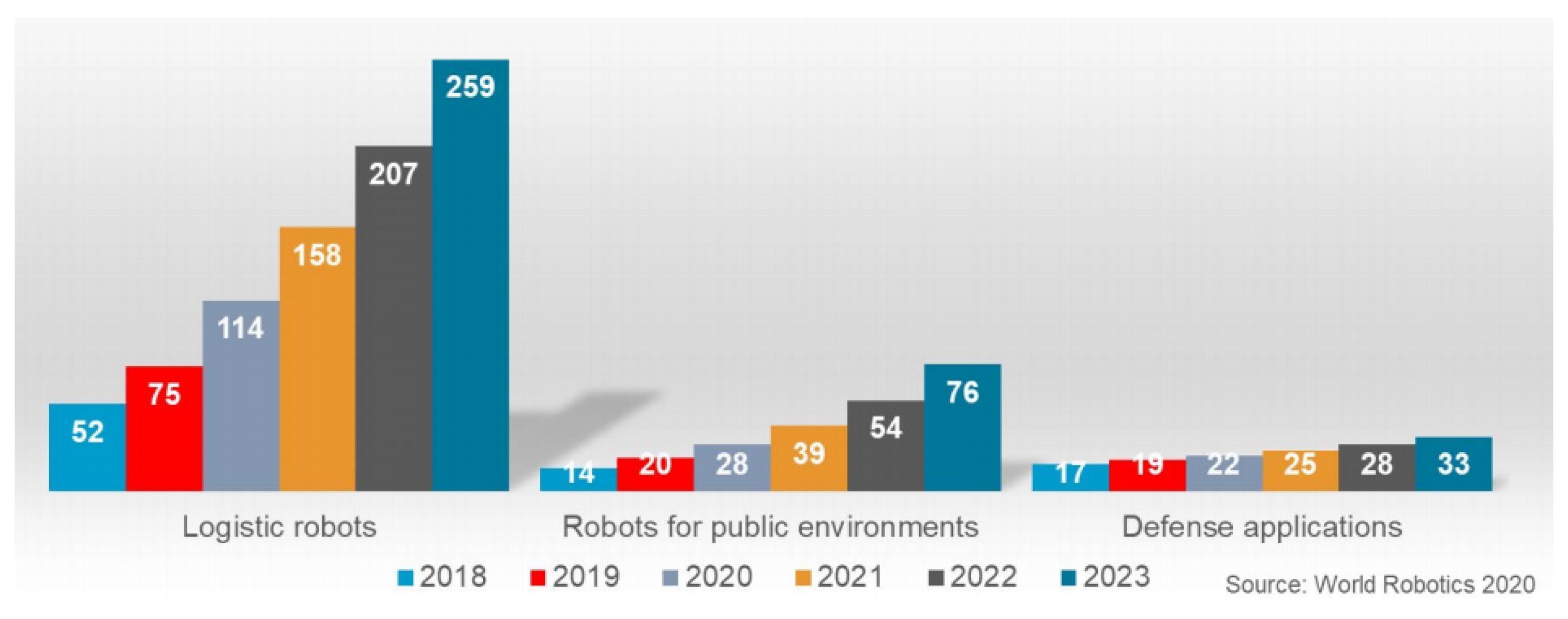

2. Robotic Systems and Human-Robot Perception

2.1. Fixed-Base Manipulators and Cobots

- A full awareness of the human presence and the environment is necessary.

- Safe management of the shared spaces alone can be sufficient, provided it guarantees that humans cannot be injured during the robot motion.

- Type of sensor: the sensor output depends on the sensor technology. Therefore, the algorithms used to process the data can be quite different.

- Methodology to detect obstacles in the scene: the chosen methodology depends on the type of sensor but also on its location. The sensor can be positioned somewhere in the environment to monitor the entire scene or can be mounted on the robot arm. In the first case, it is necessary to distinguish humans and obstacles from the manipulator, otherwise the robotic system can identify itself as an obstacle. In the second case, the sensor position is not fixed and must be estimated to recreate the 3D scene.

- Anti-collision policy: once an obstacle is detected on the robot path, and hence a risk of collision exists, the robot can either be stopped while issuing a warning (e.g., sounding an alarm) or have its trajectory automatically re-planned to avoid the obstacle.
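The three design choices above can be tied together in a small sketch. The Python example below is illustrative only: it assumes an environment-mounted sensor that returns 3D points already expressed in the robot base frame, and the function names, the capsule-style link model, and the thresholds (`link_radius`, `stop_dist`, `replan_dist`) are hypothetical rather than taken from any surveyed work. It first discards the points that belong to the manipulator itself, then selects an anti-collision action from the minimum distance to the remaining points.

```python
import math

def point_segment_distance(p, a, b):
    """Distance from 3D point p to the segment a-b (one robot link)."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    t = sum(ap[i] * ab[i] for i in range(3)) / sum(c * c for c in ab)
    t = max(0.0, min(1.0, t))                      # clamp to the segment ends
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(p, closest)

def min_obstacle_distance(points, links, link_radius=0.10):
    """Skip points on the robot body; return the clearance to the nearest obstacle.

    links: (start, end) joint positions, e.g. from forward kinematics.
    link_radius: assumed link thickness in metres (hypothetical value).
    """
    best = math.inf
    for p in points:
        d = min(point_segment_distance(p, a, b) for a, b in links)
        if d > link_radius:                        # inside the capsule = the robot itself
            best = min(best, d - link_radius)
    return best

def anti_collision_action(distance, stop_dist=0.20, replan_dist=0.60):
    """Map the clearance to a policy: stop with a warning, re-plan, or continue."""
    if distance < stop_dist:
        return "stop_and_warn"
    if distance < replan_dist:
        return "replan"
    return "continue"

links = [([0.0, 0.0, 0.0], [0.0, 0.0, 0.5]),
         ([0.0, 0.0, 0.5], [0.5, 0.0, 0.5])]
cloud = [[0.02, 0.0, 0.25],   # lies on the first link, so it is filtered out
         [0.50, 0.4, 0.50],   # obstacle 0.4 m from the tip of the second link
         [2.00, 2.0, 0.50]]   # far from the robot
d = min_obstacle_distance(cloud, links)
print(round(d, 2), anti_collision_action(d))
```

With a robot-mounted sensor, the same logic would apply only after the points have been transformed with the estimated sensor pose, which this sketch does not cover.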

2.2. Mobile Robots

2.3. Mobile Manipulators

2.4. A Brief Overview of HRP in Industry

- Figure 3 and Figure 4 aggregate the sources by the sensor types employed and also indicate the robot type used. Splitting the sensors according to whether they are placed on robots or on human operators makes it clear that, in the works analyzed, the sensory equipment for HRP is currently positioned mainly on the robot side.

- Table 1 complements the overview and eases consultation by focusing on the algorithms used to implement HRP.

3. Proofs of Concept for Future Applications of Perception Technologies

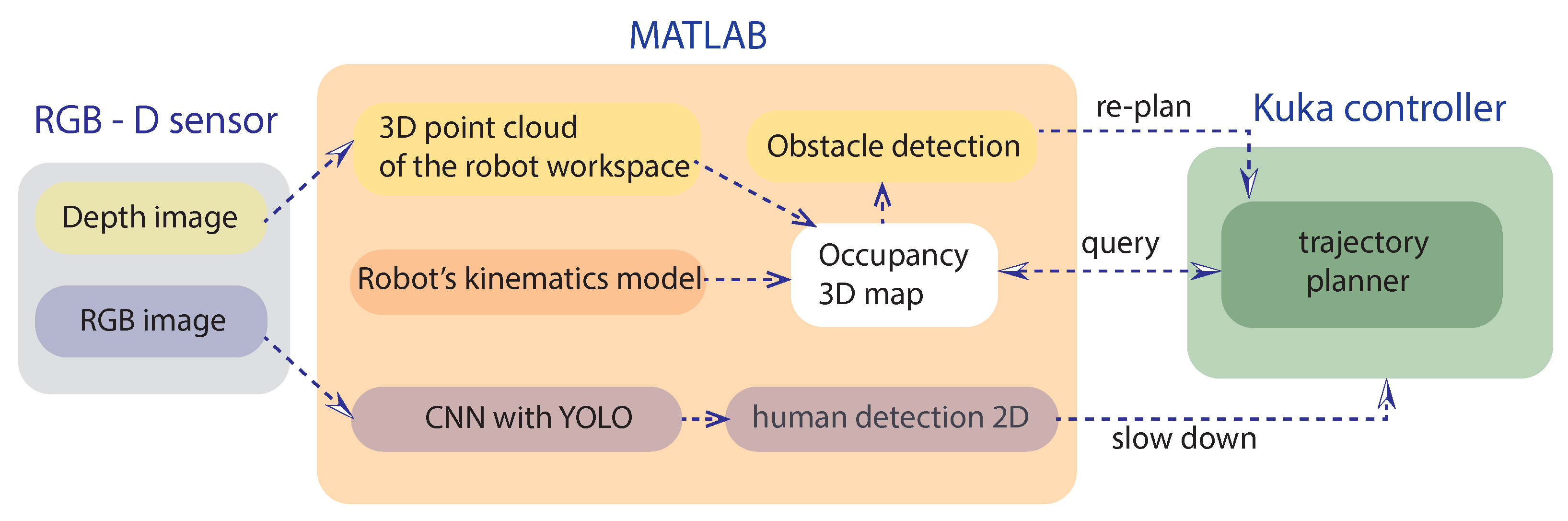

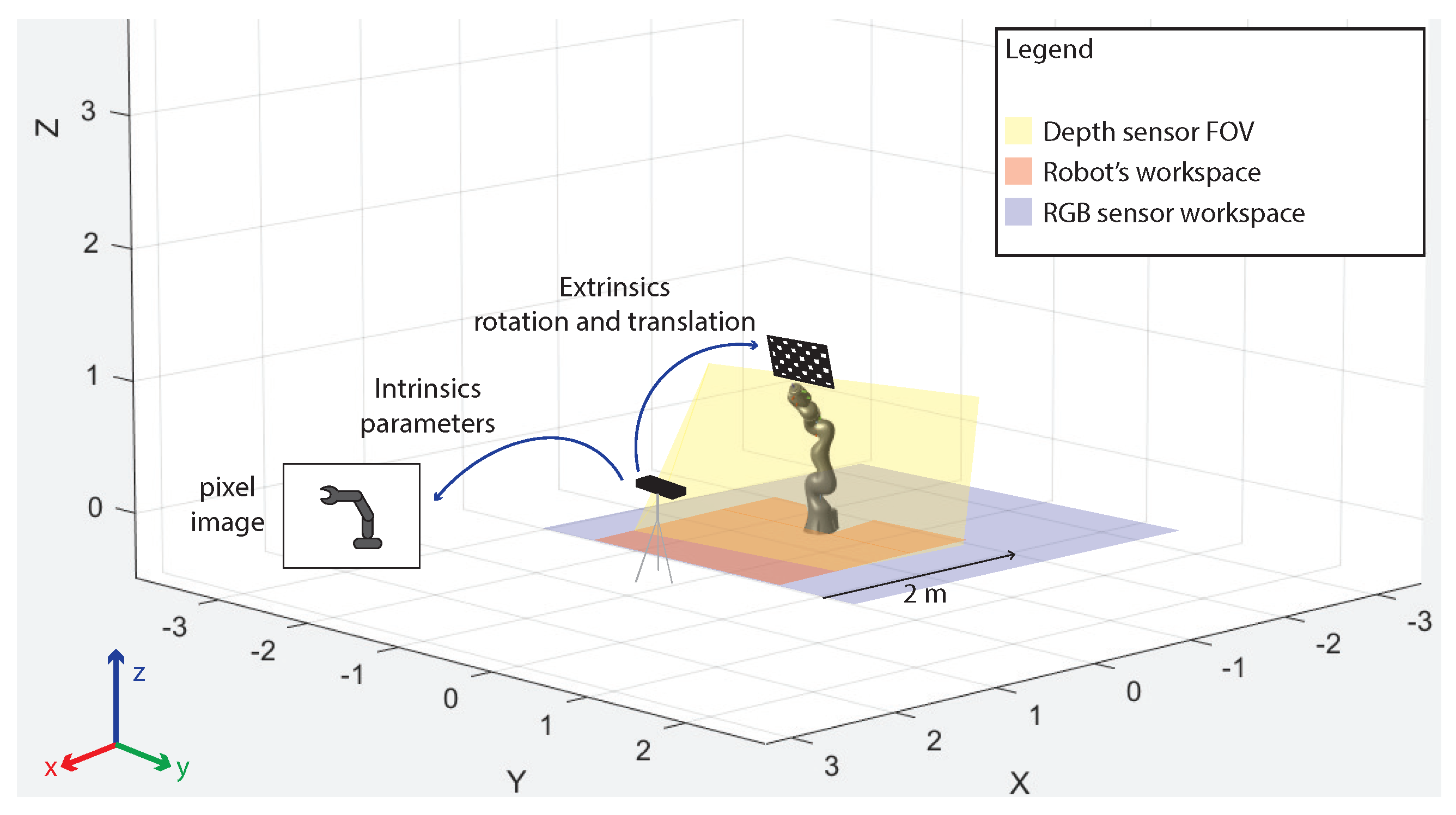

3.1. Collaborative Fixed-Base Manipulator: A Proof of Concept (POC)

- Within the robot workspace, human safety is ensured by a collision avoidance strategy based on depth sensor information.

- Outside the depth sensor range, human presence is detected by separately processing the RGB image alone with a YOLO CNN.

- The use of a high-accuracy sensor, of the kind commonly mounted on the robot arm to identify the shape of the object to grasp.

- The data processing pipeline, which uses the RGB and depth information separately.
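The two monitoring layers described above can be summarized in a few lines of Python. This is a minimal sketch under stated assumptions, not the POC implementation: the `Frame` type, the `monitoring_state` function, the `workspace_radius_m` value, and the action labels are all hypothetical, and the RGB person detector (a YOLO-style CNN in the text) is stubbed as a boolean flag.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class Frame:
    # Closest valid depth reading in metres; None when nothing is within depth range.
    nearest_depth_m: Optional[float]
    # Result of an RGB-only person detector (stubbed; assumed to run on the colour image).
    person_in_rgb: bool

def monitoring_state(frame: Frame, workspace_radius_m: float = 1.5) -> Tuple[str, str]:
    """Return (active monitoring layer, placeholder robot behaviour)."""
    if frame.nearest_depth_m is not None and frame.nearest_depth_m <= workspace_radius_m:
        # Short range: depth data is reliable, so depth-based collision avoidance applies.
        return ("short_range", "collision_avoidance")
    if frame.person_in_rgb:
        # Long range: depth is unavailable or beyond the workspace; rely on RGB detection.
        return ("long_range", "human_presence_alert")
    return ("clear", "nominal_motion")

print(monitoring_state(Frame(nearest_depth_m=0.8, person_in_rgb=True)))
print(monitoring_state(Frame(nearest_depth_m=None, person_in_rgb=True)))
print(monitoring_state(Frame(nearest_depth_m=None, person_in_rgb=False)))
```

Keeping the two streams separate means the long-range check does not depend on depth readings, which are only assumed reliable close to the robot.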

Potentialities, Preliminary Validation and Feasibility of the POC

- Pros:

  - Possibility of defining two monitoring areas (long and short-range) and therefore greater safety for the human operator.

  - Possibility of processing RGB and depth images separately to structure a flexible monitoring technique for dynamic obstacle avoidance.

  - Data processing methodology that reduces the amount of information, allowing efficient real-time functioning.

  - No need to calculate the distance between objects using an inflation radius to ensure a safe distance between obstacles and robots.

- Cons:

  - The depth sensor is sensitive to reflective surfaces.

  - Depth sensor measurements are reliable in a limited range sufficient to monitor the robot workspace only.

  - The RGB-D sensor is not suitable for installation in dusty industrial environments or with high variability of brightness.

  - Need to have more than one sensor to cover any blind spots and to monitor the 3D robot workspace.

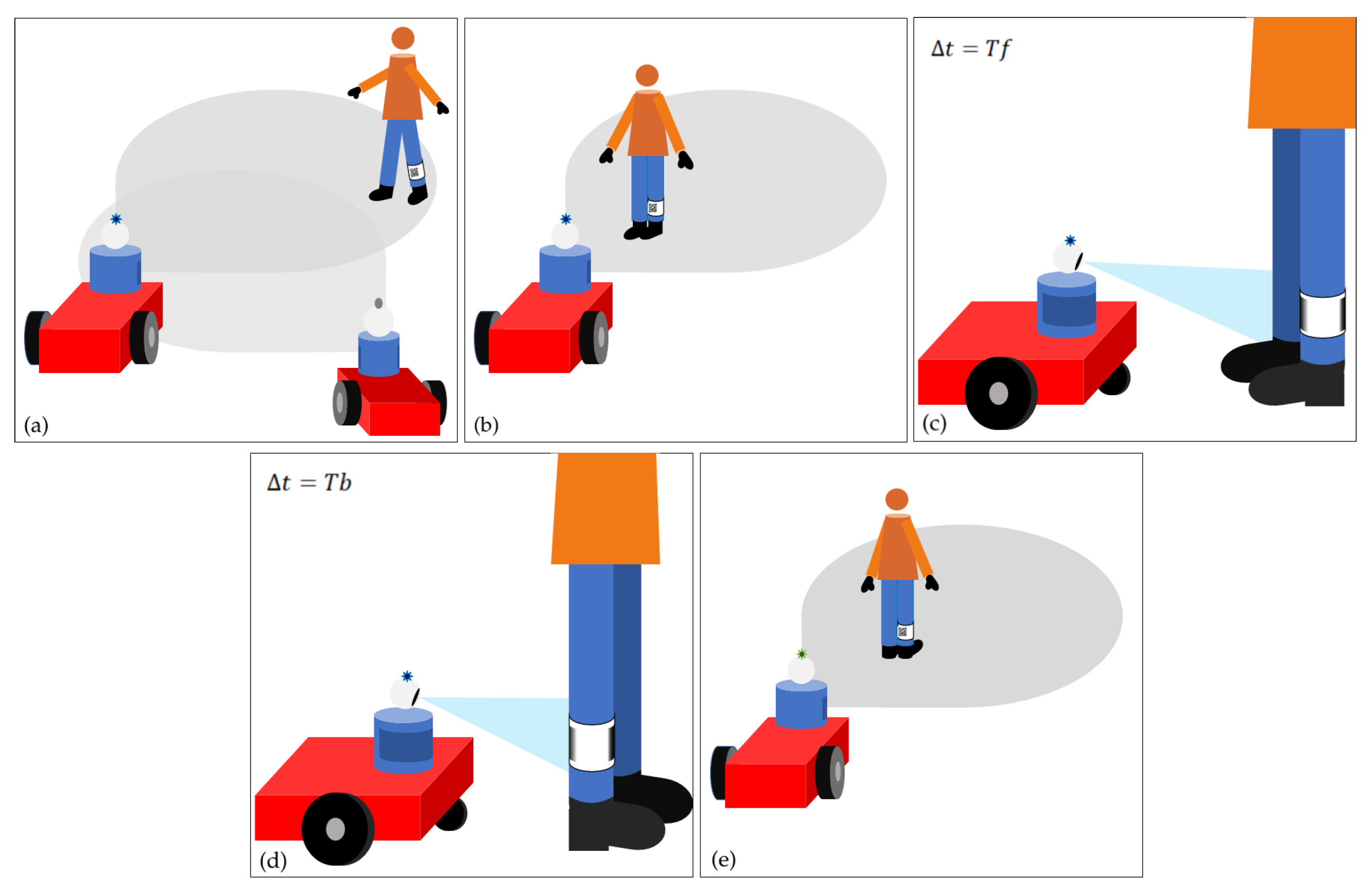

3.2. Collaborative Sen3Bot: A Proof of Concept

- In the light of the envisioned collaborative extension:

  - If the area has the highest criticality level (area of type 1), the AMR must work in cooperative mode, implying that conservative avoidance of humans is implemented.

  - If the area has medium criticality (area of type 2), the AMR can switch between two modes: cooperation or collaboration with human operators.

- The working space taken into account is an area with criticality level equal to 2, i.e., a sub-critical area, corresponding to a zone that includes cobots workstations and manual stations where human operators are likely to be present, but expected to be mostly static.

- In such a sub-critical area, the human operators are assumed to be mainly trained ones, i.e., they are aware that the area is shared with AMRs and know how to interact with them. Operators of this type are identified by a tag, e.g., a QR code, on the front and the back of a wearable leg band.

- According to the Sen3Bot Net rules, when an AGV is expected to cross a critical area of type 2 and the human operator is moving within the environment, two Sen3Bots are sent to the scene.

- The operator approaches the AMR in wait4col idle mode.

- The operator stops at a fixed distance Df in front of the mobile robot, allowing it to read the front leg band tag, which contains relevant information (such as the operator ID), for a given time Tf.

- If the operator turns around, letting the robot read the back tag for a time Tb, then the collaborative mode of the Sen3Bot is activated.
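The mode rules and the activation sequence above can be read as a small state machine. The following Python sketch is one possible reading, not the Sen3Bot implementation: `allowed_modes`, `Handshake`, the distance tolerance `d_tol`, and the numeric values are hypothetical, and ignoring the distance while the back tag is read is an assumption, since the text does not specify it.

```python
from enum import Enum

class Mode(Enum):
    COOPERATION = "cooperation"
    COLLABORATION = "collaboration"

def allowed_modes(area_type):
    """Type 1 areas: cooperation only. Type 2 areas: cooperation or collaboration."""
    if area_type == 1:
        return {Mode.COOPERATION}
    if area_type == 2:
        return {Mode.COOPERATION, Mode.COLLABORATION}
    raise ValueError("area type not covered by this sketch")

class Handshake:
    """Front tag read for Tf at distance Df, then back tag read for Tb."""

    def __init__(self, t_front, t_back, d_fixed, d_tol=0.2):
        self.t_front, self.t_back = t_front, t_back
        self.d_fixed, self.d_tol = d_fixed, d_tol
        self.stage = "await_front"   # robot is in wait4col idle mode
        self.since = None            # start time of the current uninterrupted reading
        self.operator_id = None

    def update(self, t, side, distance, operator_id=None):
        """Feed one tag reading; returns True once collaborative mode is active."""
        if self.stage == "collaborating":
            return True
        if self.stage == "await_front":
            ok = side == "front" and abs(distance - self.d_fixed) <= self.d_tol
            needed = self.t_front
        else:
            ok = side == "back"      # assumption: distance is not checked for the back tag
            needed = self.t_back
        if not ok:
            self.since = None        # reading interrupted: restart the timer
            return False
        if self.since is None:
            self.since = t
        if t - self.since >= needed:
            if self.stage == "await_front":
                self.operator_id = operator_id
                self.stage = "await_back"
            else:
                self.stage = "collaborating"
            self.since = None
        return self.stage == "collaborating"

hs = Handshake(t_front=2.0, t_back=2.0, d_fixed=1.0)
readings = [(0, "front", 1.0), (1, "front", 1.0), (2, "front", 1.1),
            (3, None, 1.0), (4, "back", 1.0), (5, "back", 1.0), (6, "back", 1.0)]
active = False
for t, side, dist in readings:
    active = hs.update(t, side, dist, operator_id="op-7")
print(active, hs.operator_id, Mode.COLLABORATION in allowed_modes(2))
```

Resetting the timer whenever a reading is interrupted keeps a passing operator from triggering collaboration by accident, at the cost of requiring the operator to stand still for the full Tf and Tb intervals.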

4. Conclusions and Future Trends

- Vision sensors are fundamental for handling human presence in any kind of robotic system; the most widely used is the RGB-D camera.

- The combination of different kinds of sensors, possibly located on the robot and/or the human operator, can allow new types of collaboration and applications.

- Laser sensors are often combined with vision sensors for human perception on mobile agents and manipulators, since they are typically already present and used for navigation.

- The use of new, non-standard sensors is still limited, mainly due to the difficulty of managing their somewhat unconventional outputs.

- Most of the methods involved in HRP are enabled by the recognition of objects or human behavior, especially taking advantage of artificial intelligence algorithms.

- New HRC applications can also be envisaged with more commonly available sensors, thanks to an innovative use of the information they provide, as in the first POC presented, or through a coded collaborative HR behavior, as in the second POC.

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CPPS | Cyber Physical Production System |
| CPS | Cyber Physical System |
| CPHS | Cyber Physical Human System |
| HR | Human-Robot |
| HRI | Human-Robot Interaction |
| HRP | Human-Robot Perception |
| FoF | Factory of the Future |
| HRC | Human-Robot Collaboration |
| RGB | Red Green Blue |
| RGB-D | Red Green Blue-Depth |
| LIDAR | Laser Imaging Detection and Ranging |
| 3D | Three-Dimensional |
| ISO | International Organization for Standardization |
| TS | Technical Specification |
| 2D | Two-Dimensional |
| IMU | Inertial Measurement Unit |
| ANN | Artificial Neural Network |
| IMR | Industrial Mobile Robot |
| AMR | Autonomous Mobile Robot |
| AGV | Automated Guided Vehicle |
| YOLO | You Only Look Once |
| CNN | Convolutional Neural Network |
| EMG | Electromyography |
| AR | Augmented Reality |
| RNN | Recurrent Neural Network |
| OpenCV | Open Source Computer Vision |
| SAN | Social-Aware Navigation |
| MES | Manufacturing Execution System |
| EN | European Standards |
| 2½D | Two-and-a-Half-Dimensional |
| RFID | Radio Frequency IDentification |
| ID | Identifier |
| FOV | Field Of View |
| POC | Proof of Concept |
| DH | Denavit–Hartenberg |
| GB | Gigabyte |
| RAM | Random-Access Memory |
| GPU | Graphics Processing Unit |
| SIFT | Scale-Invariant Feature Transform |
| MOPS | Multi-Scale Oriented Patches |
| UAV | Unmanned Aerial Vehicle |
| DOF | Degrees Of Freedom |
| TCP/IP | Transmission Control Protocol/Internet Protocol |
| ANSI | American National Standards Institute |
| QR | Quick Response |
| ROS | Robot Operating System |
| IP | Internet Protocol |
| KPI | Key Performance Indicator |

References

1. Wu, X.; Goepp, V.; Siadat, A. Cyber Physical Production Systems: A Review of Design and Implementation Approaches. In Proceedings of the 2019 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Macao, China, 15–18 December 2019; pp. 1588–1592.

2. Bonci, A.; Pirani, M.; Longhi, S. An embedded database technology perspective in cyber-physical production systems. Procedia Manuf. 2017, 11, 830–837.

3. Bonci, A.; Pirani, M.; Dragoni, A.F.; Cucchiarelli, A.; Longhi, S. The relational model: In search for lean and mean CPS technology. In Proceedings of the 2017 IEEE 15th International Conference on Industrial Informatics (INDIN), Emden, Germany, 24–26 July 2017; pp. 127–132.

4. Bonci, A.; Pirani, M.; Cucchiarelli, A.; Carbonari, A.; Naticchia, B.; Longhi, S. A review of recursive holarchies for viable systems in CPSs. In Proceedings of the 2018 IEEE 16th International Conference on Industrial Informatics (INDIN), Porto, Portugal, 18–20 July 2018; pp. 37–42.

5. Yildiz, Y. Cyberphysical Human Systems: An Introduction to the Special Issue. IEEE Control Syst. Mag. 2020, 40, 26–28.

6. Dani, A.P.; Salehi, I.; Rotithor, G.; Trombetta, D.; Ravichandar, H. Human-in-the-Loop Robot Control for Human-Robot Collaboration: Human Intention Estimation and Safe Trajectory Tracking Control for Collaborative Tasks. IEEE Control Syst. Mag. 2020, 40, 29–56.

7. Pantano, M.; Regulin, D.; Lutz, B.; Lee, D. A human-cyber-physical system approach to lean automation using an industrie 4.0 reference architecture. Procedia Manuf. 2020, 51, 1082–1090.

8. Bonci, A.; Longhi, S.; Nabissi, G.; Verdini, F. Predictive Maintenance System using motor current signal analysis for Industrial Robot. In Proceedings of the 2019 24th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Zaragoza, Spain, 10–13 September 2019; pp. 1453–1456.

9. Hentout, A.; Aouache, M.; Maoudj, A.; Akli, I. Human-robot interaction in industrial collaborative robotics: A literature review of the decade 2008–2017. Adv. Robot. 2019, 33, 764–799.

10. ISO Standard. ISO/TS 15066: 2016: Robots and Robotic Devices–Collaborative Robots; International Organization for Standardization: Geneva, Switzerland, 2016.

11. Rosenstrauch, M.J.; Krüger, J. Safe human-robot-collaboration-introduction and experiment using ISO/TS 15066. In Proceedings of the 2017 3rd International Conference on Control, Automation and Robotics (ICCAR), Nagoya, Japan, 22–24 April 2017; pp. 740–744.

12. Aivaliotis, P.; Aivaliotis, S.; Gkournelos, C.; Kokkalis, K.; Michalos, G.; Makris, S. Power and force limiting on industrial robots for human-robot collaboration. Robot. Comput. Integr. Manuf. 2019, 59, 346–360.

13. Yao, B.; Zhou, Z.; Wang, L.; Xu, W.; Liu, Q. Sensor-less external force detection for industrial manipulators to facilitate physical human-robot interaction. J. Mech. Sci. Technol. 2018, 32, 4909–4923.

14. Wang, X.; Yang, C.; Ju, Z.; Ma, H.; Fu, M. Robot manipulator self-identification for surrounding obstacle detection. Multimed. Tools Appl. 2017, 76, 6495–6520.

15. Flacco, F.; De Luca, A. Real-time computation of distance to dynamic obstacles with multiple depth sensors. IEEE Robot. Autom. Lett. 2016, 2, 56–63.

16. Brito, T.; Lima, J.; Costa, P.; Piardi, L. Dynamic collision avoidance system for a manipulator based on RGB-D data. In Proceedings of the ROBOT 2017: Third Iberian Robotics Conference, Sevilla, Spain, 22–24 November 2017; Springer: Cham, Switzerland, 2017; pp. 643–654.

17. Matsas, E.; Vosniakos, G.C. Design of a virtual reality training system for human–robot collaboration in manufacturing tasks. Int. J. Interact. Des. Manuf. IJIDeM 2017, 11, 139–153.

18. Vit, A.; Shani, G. Comparing rgb-d sensors for close range outdoor agricultural phenotyping. Sensors 2018, 18, 4413.

19. Zabalza, J.; Fei, Z.; Wong, C.; Yan, Y.; Mineo, C.; Yang, E.; Rodden, T.; Mehnen, J.; Pham, Q.C.; Ren, J. Smart sensing and adaptive reasoning for enabling industrial robots with interactive human-robot capabilities in dynamic environments—A case study. Sensors 2019, 19, 1354.

20. Su, H.; Ovur, S.E.; Li, Z.; Hu, Y.; Li, J.; Knoll, A.; Ferrigno, G.; De Momi, E. Internet of things (iot)-based collaborative control of a redundant manipulator for teleoperated minimally invasive surgeries. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Virtual Conference, 31 May–31 August 2020; pp. 9737–9742.

21. Su, H.; Sandoval, J.; Vieyres, P.; Poisson, G.; Ferrigno, G.; De Momi, E. Safety-enhanced collaborative framework for tele-operated minimally invasive surgery using a 7-DoF torque-controlled robot. Int. J. Control. Autom. Syst. 2018, 16, 2915–2923.

22. Ding, Y.; Thomas, U. Collision Avoidance with Proximity Servoing for Redundant Serial Robot Manipulators. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Virtual Conference, 31 May–31 August 2020; pp. 10249–10255.

23. Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human–robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

24. Sakr, M.; Uddin, W.; Van der Loos, H.F.M. Orthographic Vision-Based Interface with Motion-Tracking System for Robot Arm Teleoperation: A Comparative Study. In Proceedings of the Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23 March 2020; pp. 424–426.

25. Yang, S.; Xu, W.; Liu, Z.; Zhou, Z.; Pham, D.T. Multi-source vision perception for human-robot collaboration in manufacturing. In Proceedings of the 2018 IEEE 15th International Conference on Networking, Sensing and Control (ICNSC), Zhuhai, China, 27–29 March 2018.

26. Neto, P.; Simão, M.; Mendes, N.; Safeea, M. Gesture-based human-robot interaction for human assistance in manufacturing. Int. J. Adv. Manuf. Technol. 2019, 101, 119–135.

27. Digo, E.; Antonelli, M.; Cornagliotto, V.; Pastorelli, S.; Gastaldi, L. Collection and Analysis of Human Upper Limbs Motion Features for Collaborative Robotic Applications. Robotics 2020, 9, 33.

28. Ragaglia, M.; Zanchettin, A.M.; Rocco, P. Trajectory generation algorithm for safe human-robot collaboration based on multiple depth sensor measurements. Mechatronics 2018, 55, 267–281.

29. Indri, M.; Lachello, L.; Lazzero, I.; Sibona, F.; Trapani, S. Smart sensors applications for a new paradigm of a production line. Sensors 2019, 19, 650.

30. IFR—International Federation of Robotics. IFR World Robotics 2020 Service Robots Report Presentation. Available online: https://ifr.org/downloads/press2018/Presentation_WR_2020.pdf (accessed on 31 January 2021).

31. Lynch, L.; Newe, T.; Clifford, J.; Coleman, J.; Walsh, J.; Toal, D. Automated Ground Vehicle (AGV) and Sensor Technologies—A Review. In Proceedings of the 2018 12th International Conference on Sensing Technology (ICST), Limerick, Ireland, 3–6 December 2018; pp. 347–352.

32. Fedorko, G.; Honus, S.; Salai, R. Comparison of the traditional and autonomous agv systems. In MATEC Web of Conferences; EDP Sciences: Les Ulis, France, 2017; Volume 134, p. 00013.

33. Zhou, S.; Cheng, G.; Meng, Q.; Lin, H.; Du, Z.; Wang, F. Development of multi-sensor information fusion and AGV navigation system. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 12–14 June 2020 (postponed); Volume 1, pp. 2043–2046.

34. Theunissen, J.; Xu, H.; Zhong, R.Y.; Xu, X. Smart agv system for manufacturing shopfloor in the context of industry 4.0. In Proceedings of the 2018 25th International Conference on Mechatronics and Machine Vision in Practice (M2VIP), Stuttgart, Germany, 20–22 November 2018; pp. 1–6.

35. Oyekanlu, E.A.; Smith, A.C.; Thomas, W.P.; Mulroy, G.; Hitesh, D.; Ramsey, M.; Kuhn, D.J.; Mcghinnis, J.D.; Buonavita, S.C.; Looper, N.A.; et al. A Review of Recent Advances in Automated Guided Vehicle Technologies: Integration Challenges and Research Areas for 5G-Based Smart Manufacturing Applications. IEEE Access 2020, 8, 202312–202353.

36. Zhang, Y.; Zhang, C.H.; Shao, X. User preference-aware navigation for mobile robot in domestic via defined virtual area. J. Netw. Comput. Appl. 2021, 173, 102885.

37. Chandan, K.; Zhang, X.; Albertson, J.; Zhang, X.; Liu, Y.; Zhang, S. Guided 360-Degree Visual Perception for Mobile Telepresence Robots. In Proceedings of the RSS—2020 Workshop on Closing the Academia to Real-World Gap in Service Robotics, Corvallis, OR, USA, 13 July 2020.

38. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

39. Luo, J.; Lin, Z.; Li, Y.; Yang, C. A teleoperation framework for mobile robots based on shared control. IEEE Robot. Autom. Lett. 2019, 5, 377–384.

40. Mavrogiannis, C.; Hutchinson, A.M.; Macdonald, J.; Alves-Oliveira, P.; Knepper, R.A. Effects of distinct robot navigation strategies on human behavior in a crowded environment. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Korea, 11–14 March 2019; pp. 421–430.

41. Siva, S.; Zhang, H. Robot perceptual adaptation to environment changes for long-term human teammate following. Int. J. Robot. Res. 2020.

42. Han, F.; Siva, S.; Zhang, H. Scalable Representation Learning for Long-Term Augmented Reality-Based Information Delivery in Collaborative Human-Robot Perception. In Proceedings of the International Conference on Human-Computer Interaction, Orlando, FL, USA, 26–31 July 2019; Springer: Cham, Switzerland, 2019; pp. 47–62.

43. Benos, L.; Bechar, A.; Bochtis, D. Safety and ergonomics in human-robot interactive agricultural operations. Biosyst. Eng. 2020, 200, 55–72.

44. Vasconez, J.P.; Kantor, G.A.; Cheein, F.A.A. Human-robot interaction in agriculture: A survey and current challenges. Biosyst. Eng. 2019, 179, 35–48.

45. Wang, W.; Wang, R.; Chen, G. Path planning model of mobile robots in the context of crowds. arXiv 2020, arXiv:2009.04625.

46. Li, J.; Lu, L.; Zhao, L.; Wang, C.; Li, J. An integrated approach for robotic Sit-To-Stand assistance: Control framework design and human intention recognition. Control Eng. Pract. 2021, 107, 104680.

47. Juel, W.K.; Haarslev, F.; Ramírez, E.R.; Marchetti, E.; Fischer, K.; Shaikh, D.; Manoonpong, P.; Hauch, C.; Bodenhagen, L.; Krüger, N. SMOOTH Robot: Design for a novel modular welfare robot. J. Intell. Robot. Syst. 2020, 98, 19–37.

48. Kolar, P.; Benavidez, P.; Jamshidi, M. Survey of datafusion techniques for laser and vision based sensor integration for autonomous navigation. Sensors 2020, 20, 2180.

49. Banisetty, S.B.; Feil-Seifer, D. Towards a unified planner for socially-aware navigation. arXiv 2018, arXiv:1810.00966.

50. Marques, F.; Gonçalves, D.; Barata, J.; Santana, P. Human-aware navigation for autonomous mobile robots for intra-factory logistics. In Proceedings of the International Workshop on Symbiotic Interaction, Eindhoven, The Netherlands, 18–19 December 2017; Springer: Cham, Switzerland, 2017; pp. 79–85.

51. Kenk, M.A.; Hassaballah, M.; Brethé, J.F. Human-aware Robot Navigation in Logistics Warehouses. In Proceedings of the 16th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2019), Prague, Czech Republic, 29–31 July 2019; pp. 371–378.

52. Blaga, A.; Militaru, C.; Mezei, A.D.; Tamas, L. Augmented reality integration into MES for connected workers. Robot. Comput. Integr. Manuf. 2021, 68, 102057.

53. Berg, J.; Lottermoser, A.; Richter, C.; Reinhart, G. Human-Robot-Interaction for mobile industrial robot teams. Procedia CIRP 2019, 79, 614–619.

54. Chen, D.; He, J.; Chen, G.; Yu, X.; He, M.; Yang, Y.; Li, J.; Zhou, X. Human-robot skill transfer systems for mobile robot based on multi sensor fusion. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Virtual Conference, 31 August–4 September 2020; pp. 1354–1359.

55. Röhrig, C.; Heß, D. OmniMan: A Mobile Assistive Robot for Intralogistics Applications. Eng. Lett. 2019, 27, 1–8.

56. Röhrig, C.; Heß, D.; Bleja, J.; Grossmann, U.; Horster, B.; Roß, A.; et al. Mobile Manipulation for Human-Robot Collaboration in Intralogistics. In Proceedings of the IAENG Transactions on Engineering Sciences-Special Issue for the International Association of Engineers Conferences 2019, Hong Kong, China, 13–15 March 2019; World Scientific: Singapore, 2019; Volume 24, pp. 459–466.

57. ISO Standard. ISO 10218-1:2012-01, Robots and Robotic Devices—Safety Requirements for Industrial Robots—Part 1: Robots (ISO 10218-1:2011); International Organization for Standardization: Geneva, Switzerland, 2012.

58. ISO Standard. ISO 3691-4:2020-53.060-53-ICS Industrial Trucks—Safety Requirements and Verification—Part 4: Driverless Industrial Trucks and Their Systems; International Organization for Standardization: Geneva, Switzerland, 2020.

59. Cofield, A.; El-Shair, Z.; Rawashdeh, S.A. A Humanoid Robot Object Perception Approach Using Depth Images. In Proceedings of the 2019 IEEE National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 15–19 July 2019; pp. 437–442.

60. Lasota, P.A.; Fong, T.; Shah, J.A. A Survey of Methods for Safe Human-Robot Interaction; Now Publishers: Boston, MA, USA; Delft, The Netherlands, 2017.

61. Saenz, J.; Vogel, C.; Penzlin, F.; Elkmann, N. Safeguarding collaborative mobile manipulators-evaluation of the VALERI workspace monitoring system. Procedia Manuf. 2017, 11, 47–54.

62. Diab, M.; Pomarlan, M.; Beßler, D.; Akbari, A.; Rosell, J.; Bateman, J.; Beetz, M. SkillMaN—A skill-based robotic manipulation framework based on perception and reasoning. Robot. Auton. Syst. 2020, 134, 103653.

63. Lim, G.H.; Pedrosa, E.; Amaral, F.; Lau, N.; Pereira, A.; Dias, P.; Azevedo, J.L.; Cunha, B.; Reis, L.P. Rich and robust human-robot interaction on gesture recognition for assembly tasks. In Proceedings of the 2017 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Coimbra, Portugal, 26–30 April 2017; pp. 159–164.

64. Lim, G.H.; Pedrosa, E.; Amaral, F.; Dias, R.; Pereira, A.; Lau, N.; Azevedo, J.L.; Cunha, B.; Reis, L.P. Human-robot collaboration and safety management for logistics and manipulation tasks. In Proceedings of the ROBOT 2017: Third Iberian Robotics Conference, Sevilla, Spain, 22–24 November 2017; Springer: Cham, Switzerland, 2017; pp. 643–654.

65. Kousi, N.; Michalos, G.; Aivaliotis, S.; Makris, S. An outlook on future assembly systems introducing robotic mobile dual arm workers. Procedia CIRP 2018, 72, 33–38.

66. Schlotzhauer, A.; Kaiser, L.; Brandstötter, M. Safety of Industrial Applications with Sensitive Mobile Manipulators–Hazards and Related Safety Measures. In Proceedings of the Austrian Robotics Workshop 2018, Innsbruck, Austria, 17–18 May 2018; p. 43.

67. Karami, H.; Darvish, K.; Mastrogiovanni, F. A Task Allocation Approach for Human-Robot Collaboration in Product Defects Inspection Scenarios. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Virtual Conference, 31 August–4 September 2020; pp. 1127–1134.

68. Darvish, K.; Bruno, B.; Simetti, E.; Mastrogiovanni, F.; Casalino, G. Interleaved Online Task Planning, Simulation, Task Allocation and Motion Control for Flexible Human-Robot Cooperation. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 58–65.

69. Chen, M.; Liu, C.; Du, G. A human-robot interface for mobile manipulator. Intell. Serv. Robot. 2018, 11, 269–278.

70. Al, G.A.; Estrela, P.; Martinez-Hernandez, U. Towards an intuitive human-robot interaction based on hand gesture recognition and proximity sensors. In Proceedings of the 2020 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Virtual Conference, 14–16 September 2020; pp. 330–335.

71. Kim, W.; Balatti, P.; Lamon, E.; Ajoudani, A. MOCA-MAN: A MObile and reconfigurable Collaborative Robot Assistant for conjoined huMAN-robot actions. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Virtual Conference, 31 May–31 August 2020; pp. 10191–10197.

72. Mueggler, E.; Rebecq, H.; Gallego, G.; Delbruck, T.; Scaramuzza, D. The event-camera dataset and simulator: Event-based data for pose estimation, visual odometry, and SLAM. Int. J. Robot. Res. 2017, 36, 142–149.

73. Nguyen, D.T.; Li, W.; Ogunbona, P.O. Human detection from images and videos: A survey. Pattern Recognit. 2016, 51, 148–175.

74. Rahmaniar, W.; Hernawan, A. Real-Time Human Detection Using Deep Learning on Embedded Platforms: A Review. J. Robot. Control JRC 2021, 2, 462–468.

75. Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Auton. Robot. 2013, 34, 189–206.

76. Lee, J.O.; Lee, K.H.; Park, S.H.; Im, S.G.; Park, J. Obstacle avoidance for small UAVs using monocular vision. Aircr. Eng. Aerosp. Technol. 2011, 83.

77. Kunz, T.; Reiser, U.; Stilman, M.; Verl, A. Real-time path planning for a robot arm in changing environments. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 5906–5911.

78. Safeea, M.; Neto, P. Kuka sunrise toolbox: Interfacing collaborative robots with matlab. IEEE Robot. Autom. Mag. 2018, 26, 91–96.

79. Indri, M.; Sibona, F.; Cen Cheng, P.D. Sen3Bot Net: A meta-sensors network to enable smart factories implementation. In Proceedings of the 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020; Volume 1, pp. 719–726.

80. Indri, M.; Sibona, F.; Cen Cheng, P.D. Sensor data fusion for smart AMRs in human-shared industrial workspaces. In Proceedings of the IECON 2019—45th Annual Conference of the IEEE Industrial Electronics Society, Lisbon, Portugal, 14–17 October 2019; Volume 1, pp. 738–743.

81. Zacharaki, A.; Kostavelis, I.; Gasteratos, A.; Dokas, I. Safety bounds in human robot interaction: A survey. Saf. Sci. 2020, 127, 104667.

82. American National Standards Institute. ANSI/ITSDF B56.5-2019, Safety Standard for Driverless, Automatic Guided Industrial Vehicles and Automated Functions of Manned Industrial Vehicles (Revision of ANSI/ITSDF B56.5-2012); American National Standards Institute/Industrial Truck Standards Development Foundation: New York, NY, USA, 2019.

83. Indri, M.; Sibona, F.; Cen Cheng, P.D.; Possieri, C. Online supervised global path planning for AMRs with human-obstacle avoidance. In Proceedings of the 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020; Volume 1, pp. 1473–1479.

84. Zbar ROS Node Documentation Page. Available online: http://wiki.ros.org/zbar_ros (accessed on 31 January 2021).

85. Indri, M.; Trapani, S.; Bonci, A.; Pirani, M. Integration of a Production Efficiency Tool with a General Robot Task Modeling Approach. In Proceedings of the 2018 IEEE 23rd International Conference on Emerging Technologies and Factory Automation, Turin, Italy, 4–7 September 2018; Volume 1, pp. 1273–1280.

| Category | Reference | Approach |
|---|---|---|
| Collision Avoidance | [12] | Collision prediction using time-invariant models and neural networks on signal processing. |
| | [14] | Collision prediction based on over-segmentation using forward kinematic model. |
| | [15] | Collision avoidance through generation of repulsive vectors. |
| | [16] | Collision avoidance and re-planning algorithms. |
| | [17] | Collision avoidance exploiting skeletal tracking and positioning of the user. |
| | [19] | Collision avoidance using color detection, allowing online path planning. |
| | [20] | Collision avoidance through virtual forces applied on the manipulator. |
| | [22] | The algorithm imposes velocity limitations only when the motion is in proximity of obstacles. |
| Aware Navigation | [36] | The robot travels in virtual areas defined a priori by users. |
| | [40] | Social momentum, teleoperation and optimal reciprocal collision avoidance are used as navigation strategies. |
| | [45] | The planning model is based on RNNs and image quality assessment, to improve mobile robot motion in the context of crowds. |
| | [49] | An autonomously sensed interaction context is used to compute and execute human-friendly trajectories. |
| | [50] | Robot navigation takes into consideration the theory of proxemics to assign values to a cost map. |
| | [51] | A confidence index is assigned to each detected human obstacle, enclosed in a 3D box, to avoid it accordingly. |
| Environment Representation | [41] | The algorithm adapts to continuous short-term and long-term environment changes with focus on human detection, through feature extraction. |
| | [42] | Scene matching through a representation learning approach that learns a scalable long-term representation model. |
| | [62] | Object localization and tags recognition allow the robot to gather semantic information about the environment. |
| Recognition of Objects and Behavior | [47] | The robot assistance is improved using adaptive sensory fusion. |
| | [24] | Teleoperation using coded gestures recognition as input commands. |
| | [25] | Simultaneous perception of the working area and operator’s hands. |
| | [53] | Gesture control and eye tracking technologies are used by the robot to interpret human intentions. |
| | [54] | The human motion here is registered and used for skill transfer purposes. |
| | [61] | The motion of the collaborating human operator is monitored to enable specific robot actions. |
| | [63] | Gesture recognition is performed considering a convolutional representation from deep learning and a contour-based hand feature. |
| | [64] | Human tracking is implemented and trained according to human body patterns. |
| | [65] | Human detection and behavior recognition are implemented exploiting redundancy of sources to reconstruct the environment. |
| | [67] | The information related to the human activities and object locations in the robot workspace is used for the approach. |
| | [69] | The operator’s hand pose is estimated using a Kalman filter and a particle filter. |
| | [70] | Real-time hand gesture recognition is implemented using an ANN. |
| | [26] | The gestures used to command the robot are processed and classified by an ANN. |
| | [27] | The motion of the human operator’s upper body is tracked, with a focus on objects manipulation. |
| | [28] | Sensor data fusion algorithm for prediction and estimation of the human occupancy within the robot working area. |
| Conjoined Action | [37] | The 360-degree scene is enriched by interactive elements to improve the teleoperated navigation. |
| | [39] | Teleoperated navigation is implemented through a hybrid shared control scheme. |
| | [52] | Enhanced perception-based interactions using AR for collaborative operations. |
| | [71] | Interaction forces of the human are transmitted from the admittance interface to the robot to perform conjoined movements. |
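As an illustration of the "repulsive vector" idea listed among the collision avoidance entries, the sketch below computes a velocity that pushes a control point on the robot away from a nearby obstacle point. It is a generic example and not the formulation of any cited work: the function name, the linear fall-off, and the values of `influence` and `v_max` are assumptions chosen for readability.

```python
import math

def repulsive_velocity(robot_pt, obstacle_pt, influence=0.5, v_max=0.4):
    """Velocity (m/s) pushing robot_pt away from obstacle_pt.

    influence: distance (m) beyond which the obstacle has no effect (assumed value).
    v_max: magnitude reached as the distance approaches zero (assumed value).
    """
    diff = [r - o for r, o in zip(robot_pt, obstacle_pt)]
    d = math.dist(robot_pt, obstacle_pt)
    if d >= influence or d == 0.0:
        # Outside the influence region, or direction undefined: no repulsion in this sketch.
        return [0.0, 0.0, 0.0]
    gain = v_max * (1.0 - d / influence)   # linear fall-off, an arbitrary choice
    return [gain * c / d for c in diff]    # unit direction away from the obstacle, scaled

v = repulsive_velocity([0.0, 0.0, 0.0], [0.25, 0.0, 0.0])
print([round(c, 3) for c in v])
```

In practice such a vector would be blended with the nominal task velocity of the control point; how that blending is done differs between approaches and is left out here.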

| Technology | Dual Camera Infrared Structured Light |
|---|---|
| RGB resolution and frame rate | 2560 × 1600 @15 fps (8 M pixels); 1600 × 1200 @15 fps (2 M pixels) |
| Depth resolution and frame rate | 1280 × 800 @2 fps; 640 × 400 @10 fps |
| RGB sensor FOV | H51° × V32° (8 M pixels); H42° × V32° (2 M pixels) |
| Depth sensor FOV | H49° × V29° |
| Laser safety | Class 1 (820–860 nm) |
| Operating environment | Indoor only |
| Trigger | External trig in/out |
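The field-of-view figures in the table can be turned into an approximate coverage area, which helps explain why a single sensor leaves blind spots. The short calculation below is a sketch that assumes an ideal pinhole-like frustum and a flat surface perpendicular to the optical axis; the `footprint` helper and the 1 m distance are illustrative choices, while the H49° × V29° depth FOV is the value listed in the table.

```python
import math

def footprint(distance_m, h_fov_deg, v_fov_deg):
    """Width and height (m) covered at a given distance: size = 2 * d * tan(FOV / 2)."""
    width = 2 * distance_m * math.tan(math.radians(h_fov_deg) / 2)
    height = 2 * distance_m * math.tan(math.radians(v_fov_deg) / 2)
    return width, height

w, h = footprint(1.0, 49, 29)       # depth sensor FOV from the table, at 1 m
print(f"{w:.2f} m x {h:.2f} m")     # prints "0.91 m x 0.52 m"
```

Since coverage grows linearly with distance but the depth readings are only reliable within a limited range, the monitored region around a manipulator remains small for a single viewpoint.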

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bonci, A.; Cen Cheng, P. D.; Indri, M.; Nabissi, G.; Sibona, F. Human-Robot Perception in Industrial Environments: A Survey. Sensors 2021, 21, 1571. https://doi.org/10.3390/s21051571