DailyCog: A Real-World Functional Cognitive Mobile Application for Evaluating Mild Cognitive Impairment (MCI) in Parkinson’s Disease

Abstract

1. Introduction

- Valuable information about cognitive abilities, carried by small daily-function events and feelings that are often lost, will be captured in real time and in a real functional environment (the home), and will therefore reflect everyday reality.

- The collected data may show which daily events, tasks, and measures best describe cognitive decline, and how these relate to one another.

- Analyzing such data may lead to an improved definition of PD-MCI features, which could help clinicians identify and detect PD-MCI, target intervention methods, and direct further research.

- The patient does not need to travel to the clinic for evaluation.

- The physician can receive information prior to a medical appointment and map out the patient’s cognitive status.

2. DailyCog

2.1. DailyCog App Description

2.1.1. Task Description

- Preparing a hot drink.

- Preparing a shopping list.

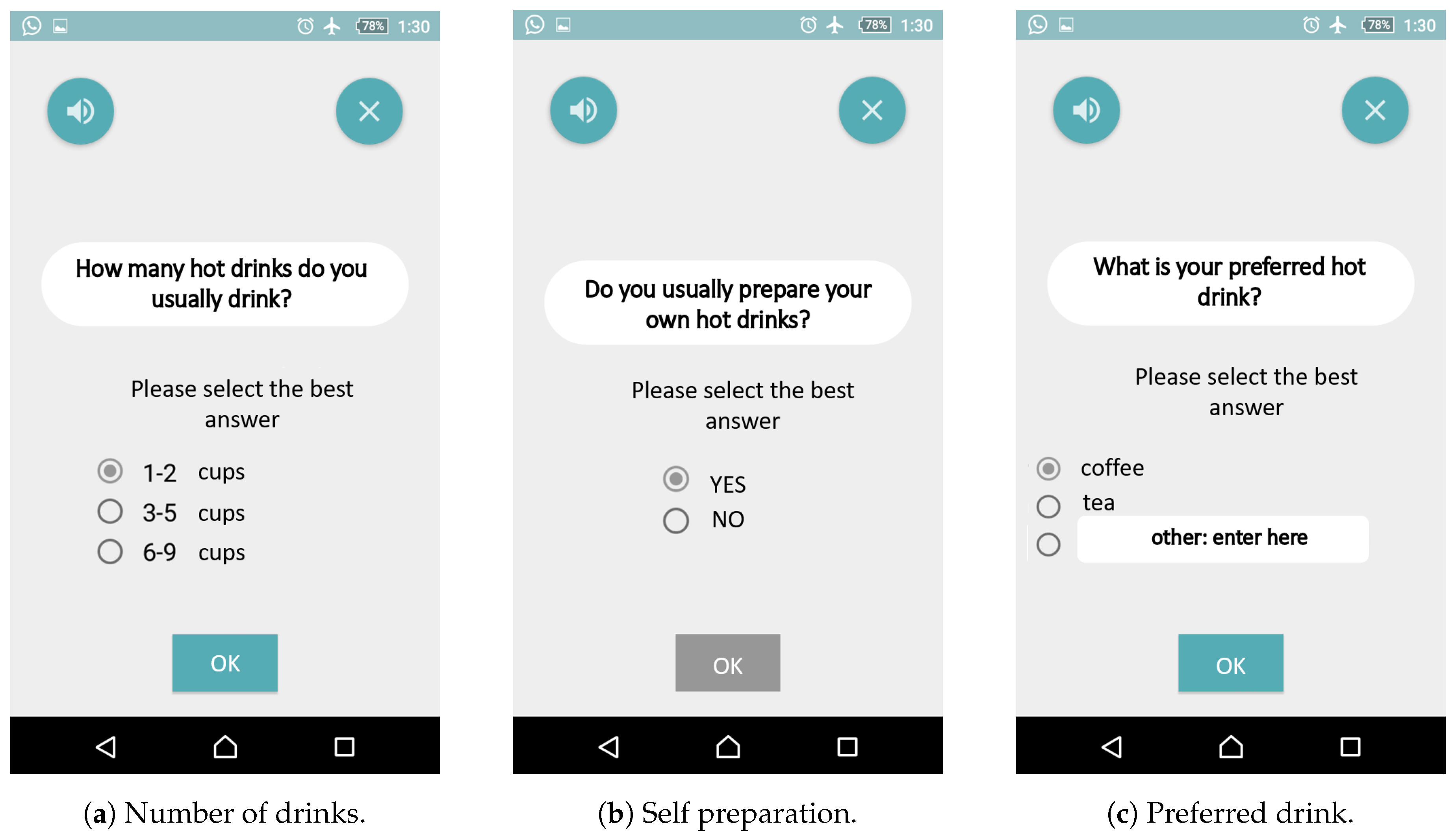

2.1.2. Self-Evaluation

2.1.3. Data Collection

2.2. DailyCog Design Considerations

3. User Study

3.1. Study Design and Selection Criteria

3.2. Case Study

3.3. Usability Study

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Marker Name | Description (Task Data Recorded) | Task Time Recorded |
|---|---|---|
| date | Date the task was performed | - |
| back press | Number of times the user pressed the “back” button | - |
| exit press | Number of times the user pressed the “exit” button | - |
| sound press | Number of times the user requested audio help | - |
| time total | Total time taken to complete the task | - |
| pic products | Number of photographs of the items that were taken | - |
| pic beverage | Number of photographs of the prepared drink that were taken | - |
| free time | Answer: Do you have free time? | Y |
| cups frequency | Answer: How many drinks do you drink? | Y |
| usually make | Answer: Do you usually prepare your own drinks? | Y |
| preferred beverage | Answer: What do you like to drink? | Y |
| est prep time | Answer: How long does it take to prepare your drink? | Y |
| order | Outcome of the ordering task | Y |
| boil water | Instruction to boil the water | Y |
| place products | Instruction to place the products on the counter | Y |
| pic products | Instruction to photograph the products on the counter | Y |
| water boiled | Answer: Has the water boiled? | Y |
| all products | Answer: Are all products ready? | Y |
| pic beverage | Instruction to photograph the prepared drink | Y |
| difficulty | Answer: How difficult was the task? | Y |
| est performance | Answer: How long do you estimate the task took? | Y |
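The markers above suggest a simple per-session log structure. The Python sketch below models one such record; the class name `TaskSession`, the field types, and the assumption that each time-recorded ("Y") marker is stored as seconds elapsed since the task started are illustrative assumptions, not DailyCog's actual data format. It shows how per-step durations could be derived from the recorded times.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Tuple


@dataclass
class TaskSession:
    """Hypothetical record of one DailyCog task session.

    Field names follow the marker table; the layout itself is an assumption.
    """
    task_date: date          # "date" marker
    back_press: int = 0      # times the "back" button was pressed
    exit_press: int = 0      # times the "exit" button was pressed
    sound_press: int = 0     # times audio help was requested
    pic_products: int = 0    # photographs of the items taken
    pic_beverage: int = 0    # photographs of the prepared drink taken
    # Assumption: every time-recorded ("Y") marker is stored as
    # (marker name, seconds elapsed since the task started), in completion order.
    step_times: List[Tuple[str, float]] = field(default_factory=list)

    def record_step(self, marker: str, elapsed_s: float) -> None:
        """Log that the screen for `marker` was completed at `elapsed_s` seconds."""
        self.step_times.append((marker, elapsed_s))

    def time_total(self) -> float:
        """Total task time, taken here as the timestamp of the last completed step."""
        return self.step_times[-1][1] if self.step_times else 0.0

    def step_durations(self) -> List[Tuple[str, float]]:
        """Time spent on each step: its timestamp minus the previous step's timestamp.

        A list (not a dict) is returned because a marker may repeat,
        e.g. when the user navigates back to an earlier screen.
        """
        durations: List[Tuple[str, float]] = []
        previous = 0.0
        for marker, t in self.step_times:
            durations.append((marker, t - previous))
            previous = t
        return durations


if __name__ == "__main__":
    # Hypothetical timestamps, not study data.
    session = TaskSession(task_date=date(2021, 1, 1))
    session.record_step("free time", 12.0)
    session.record_step("cups frequency", 20.5)
    session.record_step("boil water", 45.0)
    print(session.time_total())      # 45.0
    print(session.step_durations())  # [('free time', 12.0), ('cups frequency', 8.5), ('boil water', 24.5)]
```

Storing one cumulative timestamp per screen keeps on-device logging minimal, while the duration of any single step (such as the ordering step) can still be recovered afterwards by differencing.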

| User | MoCA Score | Task | Total Time | Order Time |
|---|---|---|---|---|
| A | 21 | hot drink | 344.8 | 65.5 |
| | | shopping list | 527.3 | 109.6 |
| B | 27 | hot drink | 213.5 | 40.2 |
| | | shopping list | 217.6 | 43.2 |
| All | 23.8 ± 3.1 | hot drink | 493.8 ± 228.3 | 90.4 ± 66.5 |
| | | shopping list | 438.7 ± 207.1 | 49.6 ± 41.6 |

| User | MoCA Score | Task | Average |
|---|---|---|---|
| A | 21 | hot drink | 1.7 |
| | | shopping list | 2.5 |
| B | 27 | hot drink | 2.8 |
| | | shopping list | 3 |
| All | 23.8 ± 3.1 | hot drink | 1.9 ± 1 |
| | | shopping list | 1.7 ± 1.1 |
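The "All" rows in the two tables above summarize the whole sample as mean ± standard deviation. A minimal Python sketch of that summary follows; it assumes the sample standard deviation (n − 1 denominator) was used, which the text does not state, and the function name `mean_sd` and the input values in the usage line are hypothetical, not study data.

```python
import statistics
from typing import Sequence


def mean_sd(values: Sequence[float], digits: int = 1) -> str:
    """Summarize values as 'mean ± SD' using the sample standard deviation (n - 1)."""
    if len(values) < 2:
        raise ValueError("at least two values are needed for a sample standard deviation")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD; statistics.pstdev would give the population SD
    return f"{mean:.{digits}f} ± {sd:.{digits}f}"


if __name__ == "__main__":
    # Hypothetical scores, not study data.
    print(mean_sd([2, 4, 4, 4, 5, 5, 7, 9]))  # 5.0 ± 2.1
```

With small samples the choice between `stdev` and `pstdev` visibly changes the reported spread, so it is worth stating which one a summary row uses.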

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Rosenblum, S.; Richardson, A.; Meyer, S.; Nevo, T.; Sinai, M.; Hassin-Baer, S. DailyCog: A Real-World Functional Cognitive Mobile Application for Evaluating Mild Cognitive Impairment (MCI) in Parkinson’s Disease. Sensors 2021, 21, 1788. https://doi.org/10.3390/s21051788