Body and Hand–Object ROI-Based Behavior Recognition Using Deep Learning

Abstract

1. Introduction

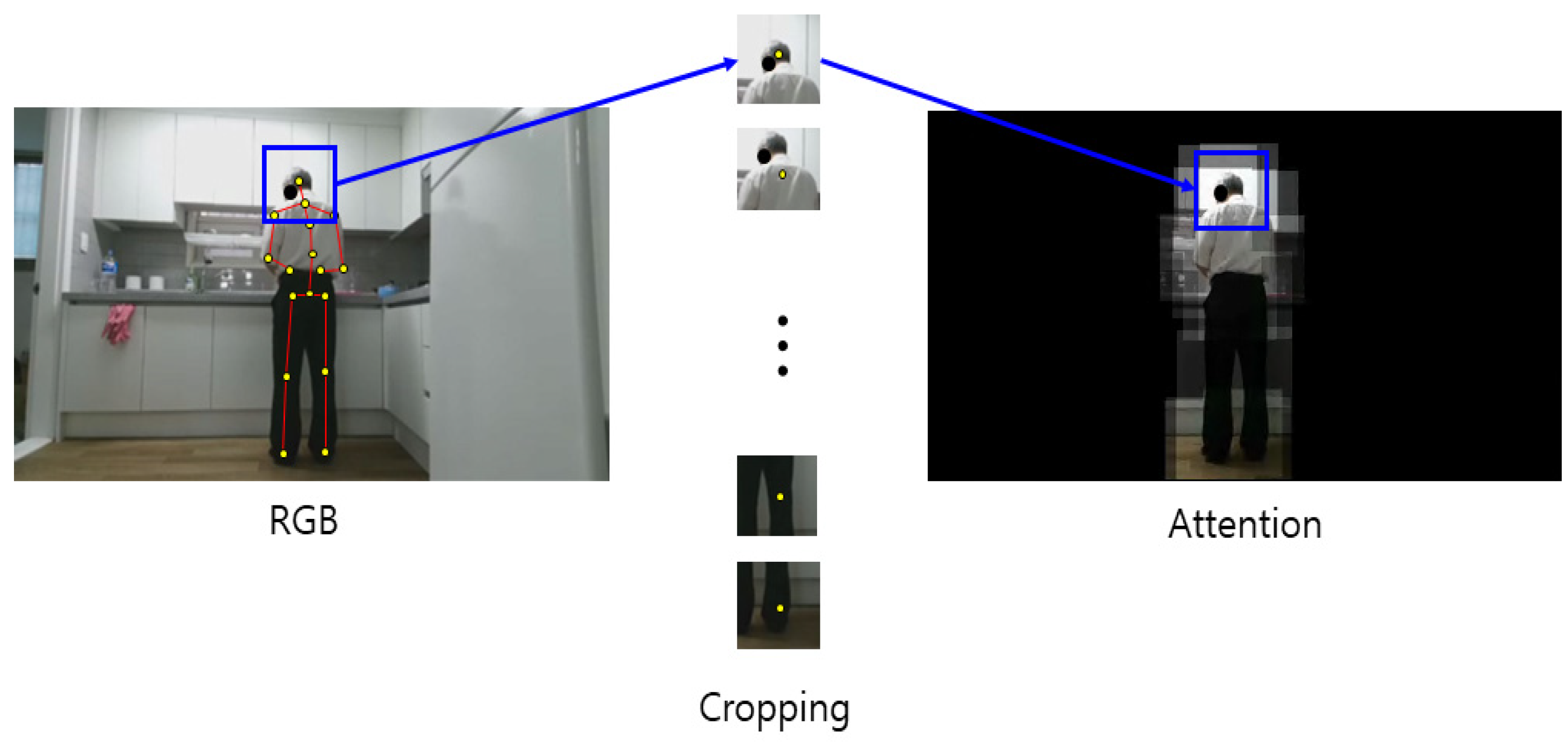

2. Techniques for Behavior Recognition

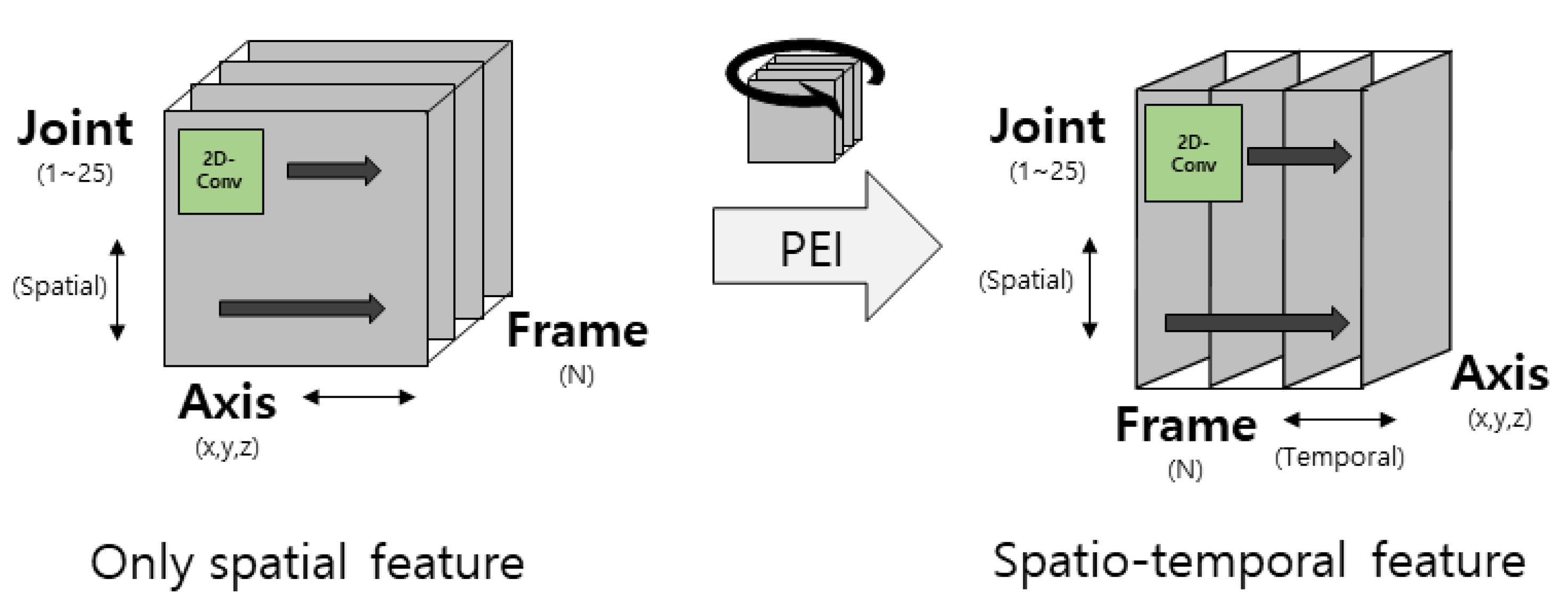

2.1. Pose Evolution Image (PEI)
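This excerpt does not spell out how the PEI is built. The sketch below illustrates the general idea behind pose-evolution representations such as [33], under stated assumptions. Per-frame joint heatmaps are color-coded by time, and the strongest response is kept at each pixel. A whole clip therefore collapses into one RGB image that an ordinary 2D CNN can classify. The joint summation, the red-to-blue time ramp, and the function name `pose_evolution_image` are assumptions made for illustration. The authors' PEI and its T1–T4 variants may be constructed differently.

```python
import numpy as np


def pose_evolution_image(heatmaps: np.ndarray) -> np.ndarray:
    """Collapse a clip of joint heatmaps into one RGB image (illustrative sketch).

    heatmaps: (T, J, H, W) joint confidence maps with values in [0, 1].
    returns:  (H, W, 3) image whose color encodes *when* a joint passed each pixel.
    """
    if heatmaps.ndim != 4:
        raise ValueError("expected heatmaps of shape (T, J, H, W)")
    t = heatmaps.shape[0]
    # Assumption: merge all joints of a frame into one pose map, capped at 1.
    pose = np.clip(heatmaps.sum(axis=1), 0.0, 1.0)          # (T, H, W)
    # Normalized time stamp per frame; a single-frame clip gets 0.
    s = np.linspace(0.0, 1.0, t)                             # (T,)
    start = np.array([1.0, 0.0, 0.0])  # early frames -> red (assumed ramp)
    end = np.array([0.0, 0.0, 1.0])    # late frames  -> blue
    colors = (1.0 - s)[:, None] * start + s[:, None] * end   # (T, 3)
    # Colorize every frame, then keep the strongest response over time.
    colored = pose[..., None] * colors[:, None, None, :]     # (T, H, W, 3)
    return colored.max(axis=0)                               # (H, W, 3)
```

With a red-to-blue ramp, the hue of each output pixel records when a joint passed through it. This is how a single static image can still carry the temporal order of a movement.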

2.2. 3D Convolutional Neural Network
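A 3D CNN differs from a 2D CNN in that each kernel also slides along the time axis. A single filter can therefore respond to motion rather than only to appearance. The naive NumPy sketch below makes this concrete. It computes a single-channel, unpadded, stride-1 3D convolution, written as cross-correlation as deep-learning frameworks implement it. The name `conv3d_valid` is hypothetical. Practical 3D CNNs such as C3D [18] stack many multi-channel layers with learned kernels rather than one hand-set filter.

```python
import numpy as np


def conv3d_valid(clip: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 3D cross-correlation, no padding, stride 1.

    clip:   (T, H, W) video volume
    kernel: (kt, kh, kw) spatio-temporal filter
    returns (T - kt + 1, H - kh + 1, W - kw + 1) response volume
    """
    if clip.ndim != 3 or kernel.ndim != 3:
        raise ValueError("clip and kernel must both be 3-D")
    kt, kh, kw = kernel.shape
    t, h, w = clip.shape
    if kt > t or kh > h or kw > w:
        raise ValueError("kernel is larger than the clip")
    out = np.zeros((t - kt + 1, h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):          # slide along time
        for j in range(out.shape[1]):      # slide along height
            for k in range(out.shape[2]):  # slide along width
                patch = clip[i:i + kt, j:j + kh, k:k + kw]
                out[i, j, k] = np.sum(patch * kernel)
    return out


# Example: a temporal-difference kernel responds only where frames change.
clip = np.zeros((3, 2, 2))
clip[1:] = 1.0                                   # frame 0 dark, frames 1-2 bright
kernel = np.array([-1.0, 1.0]).reshape(2, 1, 1)  # (kt=2, kh=1, kw=1)
out = conv3d_valid(clip, kernel)                 # ones for 0->1, zeros for 1->2
```

The 2×1×1 kernel in the example acts as a purely temporal filter. No single 2D kernel applied to one frame at a time could express this behavior.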

3. Proposed Behavior Recognition Method

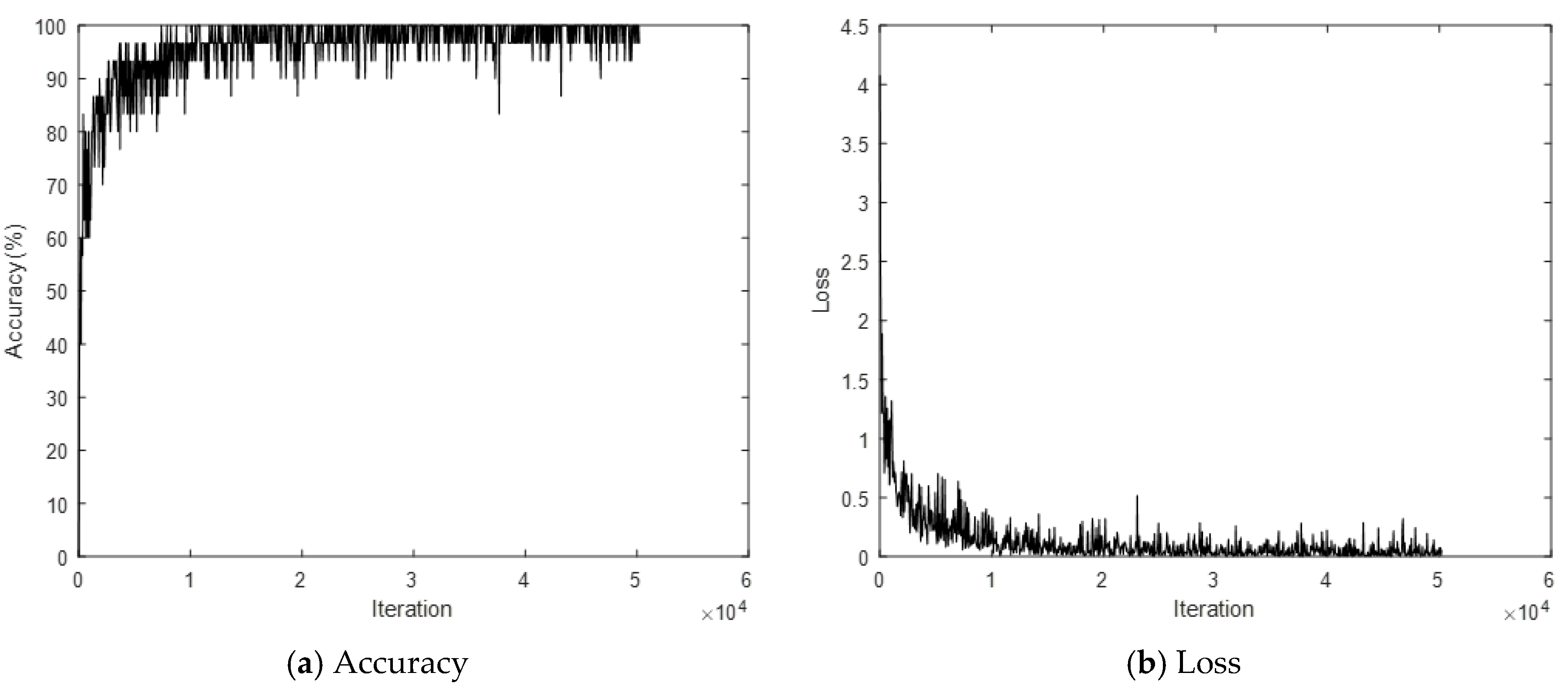
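This excerpt does not say how the body and hand–object regions of interest are located. One plausible approach, assumed here, derives them from 2D skeleton joints, for example those produced by a pose estimator such as OpenPose [41]. The body ROI is a margin-expanded bounding box over all joints. The hand–object ROI is a neighborhood around the hand joints, where a manipulated object is likely to appear. The function names and the `margin` and `half_size` values are hypothetical.

```python
import numpy as np


def _valid_points(joints) -> np.ndarray:
    # Drop joints the pose estimator failed to detect (encoded here as NaN).
    pts = np.asarray(joints, dtype=float).reshape(-1, 2)
    pts = pts[~np.isnan(pts).any(axis=1)]
    if len(pts) == 0:
        raise ValueError("no valid joints")
    return pts


def _clip_box(x0, y0, x1, y1, image_w, image_h):
    # Round outward to whole pixels and keep the box inside the image.
    return (int(max(0, np.floor(x0))), int(max(0, np.floor(y0))),
            int(min(image_w, np.ceil(x1))), int(min(image_h, np.ceil(y1))))


def body_roi(joints, image_w, image_h, margin=0.1):
    """Hypothetical body ROI: box (x0, y0, x1, y1) around all joints, enlarged per side."""
    pts = _valid_points(joints)
    (x0, y0), (x1, y1) = pts.min(axis=0), pts.max(axis=0)
    dx, dy = (x1 - x0) * margin, (y1 - y0) * margin
    return _clip_box(x0 - dx, y0 - dy, x1 + dx, y1 + dy, image_w, image_h)


def hand_object_roi(hand_joints, image_w, image_h, half_size=32):
    """Hypothetical hand-object ROI: hand joints padded by `half_size` pixels."""
    pts = _valid_points(hand_joints)
    (x0, y0), (x1, y1) = pts.min(axis=0), pts.max(axis=0)
    return _clip_box(x0 - half_size, y0 - half_size,
                     x1 + half_size, y1 + half_size, image_w, image_h)


def crop(frame: np.ndarray, box) -> np.ndarray:
    # frame is an (H, W, 3) image; boxes are (x0, y0, x1, y1) with exclusive ends.
    x0, y0, x1, y1 = box
    return frame[y0:y1, x0:x1]


# Usage with a placeholder frame and made-up (x, y) joint coordinates.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
joints = [[300, 100], [320, 400], [np.nan, np.nan]]
body = crop(frame, body_roi(joints, 640, 480))
```

The hand box is padded by a fixed pixel radius rather than a relative margin. The hand joints alone span only a few pixels, so a relative margin would yield a box too small to contain the held object.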

4. Experimental Results

4.1. Dataset

4.2. Evaluation Methods

4.3. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Jeong, H.-S. A study on the philosophical to the problems of Korean society’s aged man. New Korean Philos. Assoc. 2013, 71, 335–354.
2. Kim, N.S.; Shi, X. A study on the effective hyo cultural analysis of elderly care of Korea, Japan and China. Jpn. Modern Assoc. Korea 2019, 66, 335–354.
3. Kim, J.K. A study on senior human rights in an aging society. Soc. Welfare Manag. Soc. Korea 2014, 1, 1–18.
4. Lee, J.S. A study on the implementation of urban senior multi-carezon for the elderly. Korea Knowl. Infor. Technol. Soc. 2018, 13, 273–286.
5. Cho, M.H.; Kwon, O.J.; Choi, J.S.; Kim, D.N. A study on the burden of family caregivers with demented elderly and its suggestions from the perspective of the well-being of family caregivers. Korean Soc. Gerontol. Soc. Welfare 2000, 9, 33–65.
6. Lee, K.J. Care needs of elderly with dementia and burden in primary family caregiver. Korean Gerontol. Soc. 1995, 15, 30–51.
7. Ostrowski, A.K.; DiPaola, D.; Partridge, E.; Park, H.W.; Breazeal, C. Older adults living with social robots. IEEE Robot. Autom. Mag. 2019, 26, 59–70.
8. Hosseini, S.H.; Goher, K.M. Personal care robots for older adults: An overview. Asian Soc. Sci. 2017, 13, 11–19.
9. Broekens, J.; Heerink, M.; Rosendal, H. Assistive social robots in elderly care: A review. Gerontechnology 2009, 8, 94–103.
10. Sun, N.; Yang, E.; Corney, J.; Chen, Y.; Ma, Z. A review of high-level robot functionality for elderly care. In Proceedings of the 2018 24th International Conference on Automation and Computing (ICAC), Newcastle upon Tyne, UK, 6–7 September 2018; pp. 1–6.
11. Kim, M.-K.; Cha, E.-Y. Using skeleton vector information and RNN learning behavior recognition algorithm. Korean Soc. Broad Eng. 2018, 23, 598–605.
12. Chang, J.-Y.; Hong, S.-M.; Son, D.; Yoo, H.; Ahn, H.-W. Development of real-time video surveillance system using the intelligent behavior recognition technique. Korea Instit. Internet Broadcast. Commun. 2019, 2, 161–168.
13. Ko, B.C. Research trends on video-based action recognition. Korean Instit. Electron. Infor. Eng. 2017, 44, 16–22.
14. Kim, M.S.; Jeong, C.Y.; Sohn, J.M.; Lim, J.Y.; Jeong, H.T.; Shin, H.C. Trends in activity recognition using smartphone sensors. Korean Electron. Telecommun. Trends 2018, 33, 89–99.
15. Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-Scale Video Classification with Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.
16. Ng, J.Y.-H.; Hausknecht, M.; Vijayanarasimhan, S.; Vinyals, O.; Monga, R.; Toderici, G. Beyond short snippets: Deep networks for video classification. Computer Sci. 2015, arXiv:1503.08909.
17. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. Computer Sci. 2014, arXiv:1406.2199.
18. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatio-temporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.
19. Wang, L.; Qiao, Y.; Tang, X. Action recognition with trajectory-pooled deep-convolutional descriptors. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4305–4314.
20. Yang, X.; Molchanov, P.; Kautz, J. Multilayer and multimodal fusion of deep neural networks for video classification. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 978–987.
21. Sharma, S.; Kiros, R.; Salakhutdinov, R. Action recognition using visual attention. In Proceedings of the Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 1–11.
22. Li, S.; Li, W.; Cook, C.; Zhu, C.; Gao, Y. Independently Recurrent Neural Network (IndRNN): Building A Longer and Deeper RNN. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition; Institute of Electrical and Electronics Engineers (IEEE), Salt Lake City, UT, USA, 18–22 June 2018; pp. 5457–5466.
23. Wang, H.; Wang, L. Beyond joints: Learning representations from primitive geometries for skeleton-based action recognition and detection. IEEE Trans. Image Proc. 2018, 27, 4382–4394.
24. Li, C.; Zhong, Q.; Xie, D.; Pu, S. Skeleton-based action recognition with convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Hong Kong, China, 10–14 July 2017; pp. 597–600.
25. Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 7444–7452.
26. Song, S.; Lan, C.; Xing, J.; Zeng, W.; Liu, J. Spatio-temporal attention-based LSTM networks for 3D action recognition and detection. IEEE Trans. Image Proc. 2018, 27, 3459–3471.
27. Yang, Z.; Li, Y.; Yang, J.; Luo, J. Action Recognition with Spatio–Temporal Visual Attention on Skeleton Image Sequences. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2405–2415.
28. Moore, D.; Essa, I.; Hayes, M. Exploiting human actions and object context for recognition tasks. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 1–7.
29. Saitou, M.; Kojima, A.; Kitahashi, T.; Fukunaga, K. A Dynamic Recognition of Human Actions and Related Objects. In Proceedings of the First International Conference on Innovative Computing, Information and Control—Volume I (ICICIC’06), Beijing, China, 30 August–1 September 2006; pp. 1–4.
30. Gu, Y.; Sheng, W.; Ou, Y.; Liu, M.; Zhang, S. Human action recognition with contextual constraints using a RGB-D sensor. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO), Shenzhen, China, 12–14 December 2013; pp. 674–679.
31. Boissiere, A.M.D.; Noumeir, R. Infrared and 3D skeleton feature fusion for RGB-D action recognition. IEEE Access 2020, 8, 168297–168308.
32. Liu, G.; Qian, J.; Wen, F.; Zhu, X.; Ying, R.; Liu, P. Action Recognition Based on 3D Skeleton and RGB Frame Fusion. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 258–264.
33. Liu, M.; Yuan, J. Recognizing Human Actions as the Evolution of Pose Estimation Maps. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1159–1168.
34. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.
35. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 2014, 2, 3104–3112.
36. Zhou, J.; Cui, G.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. Computer Sci. 2018, arXiv:1812.08434.
37. Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4724–4733.
38. Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A closer look at spatio-temporal convolutions for action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6450–6459.
39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. arXiv 2015, arXiv:1512.03385.
40. Wang, Q.; Kurillo, G.; Ofli, F.; Bajcsy, R. Evaluation of pose tracking accuracy in the first and second generations of Microsoft Kinect. In Proceedings of the International Conference on Healthcare Informatics, Dallas, TX, USA, 21–23 October 2015; pp. 380–389.
41. Cao, Z.; Hidalgo Martinez, G.; Simon, T.; Wei, S.-E.; Sheikh, Y.A. OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1–14.
42. Jang, J.; Kim, D.H.; Park, C.; Jang, M.; Lee, J.; Kim, J. ETRI-Activity3D: A large-scale RGB-D dataset for robots to recognize daily activities of the elderly. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020; pp. 10990–10997.
43. Wen, Y.H.; Gao, L.; Fu, H.; Zhang, F.L.; Xia, S. Graph CNNs with motif and variable temporal block for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8989–8996.
44. Xu, Y.; Cheng, J.; Wang, L.; Xia, H.; Liu, F.; Tao, D. Ensemble one-dimensional convolution neural networks for skeleton-based action recognition. IEEE Signal Proc. Lett. 2018, 25, 1044–1048.
45. Xie, C.; Li, C.; Zhang, B.; Chen, C.; Han, J.; Liu, J. Memory Attention Networks for Skeleton-based Action Recognition. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence; International Joint Conferences on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1639–1645.
46. Li, C.; Zhong, Q.; Xie, D.; Pu, S. Co-occurrence Feature Learning from Skeleton Data for Action Recognition and Detection with Hierarchical Aggregation. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence; International Joint Conferences on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 786–792.

| No. | Activity | No. | Activity |
|---|---|---|---|
| 1 | eating food with a fork | 29 | hanging laundry |
| 2 | pouring water into a cup | 30 | looking around for something |
| 3 | taking medicine | 31 | using a remote control |
| 4 | drinking water | 32 | reading a book |
| 5 | putting (taking) food in (from) the fridge | 33 | reading a newspaper |
| 6 | trimming vegetables | 34 | writing |
| 7 | peeling fruit | 35 | talking on the phone |
| 8 | using a gas stove | 36 | playing with a mobile phone |
| 9 | cutting vegetable on the cutting board | 37 | using a computer |
| 10 | brushing teeth | 38 | smoking |
| 11 | washing hands | 39 | clapping |
| 12 | washing face | 40 | rubbing face with hands |
| 13 | wiping face with a towel | 41 | doing freehand exercise |
| 14 | putting on cosmetics | 42 | doing neck roll exercise |
| 15 | putting on lipstick | 43 | massaging a shoulder oneself |
| 16 | brushing hair | 44 | taking a bow |
| 17 | blow drying hair | 45 | talking to each other |
| 18 | putting on a jacket | 46 | handshaking |
| 19 | taking off a jacket | 47 | hugging each other |
| 20 | putting (taking) on (off) shoes | 48 | fighting each other |
| 21 | putting (taking) on (off) glasses | 49 | waving a hand |
| 22 | washing the dishes | 50 | flapping a hand up and down |
| 23 | vacuuming the floor | 51 | pointing with a finger |
| 24 | scrubbing the floor with a rag | 52 | opening the door and walking in |
| 25 | wiping off the dining table | 53 | falling on the floor |
| 26 | rubbing up furniture | 54 | sitting (standing) up |
| 27 | spreading (folding) bedding | 55 | lying down |
| 28 | washing a towel by hand | | |

| Method | Accuracy (%) |
|---|---|
| Skeleton (PEI-T1-2D-CNN) | 84.95 |
| Skeleton (PEI-T2-2D-CNN) | 85.88 |
| Skeleton (PEI-T3-2D-CNN) | 86.09 |
| Skeleton (PEI-T4-2D-CNN) | 85.20 |

| Method | Accuracy (%) |
|---|---|
| RGB (3D-CNN) | 79.20 |
| Body ROI RGB (3D-CNN) | 76.85 |
| Hand–object ROI RGB (3D-CNN) | 73.11 |

| Method | Accuracy (%) |
|---|---|
| ROIEnsAddNet1 | 84.68 |
| ROIEnsAddNet2 | 86.83 |
| ROIEnsAddNet3 | 92.79 |
| ROIEnsAddNet4 | 94.18 |
| ROIEnsMulNet1 | 85.85 |
| ROIEnsMulNet2 | 87.98 |
| ROIEnsMulNet3 | 94.87 |
| ROIEnsMulNet4 | 94.69 |
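The ensemble variants are not defined in this excerpt. Their names suggest that ROIEnsAddNet combines the class scores of its streams by summation, and ROIEnsMulNet by element-wise multiplication. Candidate streams include the skeleton (PEI) and the body and hand–object ROI RGB streams in the preceding tables. The sketch below works under that assumption and leaves aside whatever distinguishes variants 1–4. It shows the two fusion rules and the accuracy computation. Function names and the toy probabilities are hypothetical.

```python
import numpy as np


def fuse_scores(stream_probs, mode: str = "add") -> np.ndarray:
    """Fuse per-stream class probabilities, each (N, C), into predicted labels (N,)."""
    probs = np.stack(stream_probs)  # (S, N, C)
    if mode == "add":
        # Sum rule: one very confident stream can dominate the decision.
        fused = probs.sum(axis=0)
    elif mode == "mul":
        # Product rule, computed as a sum of logs to avoid numerical underflow;
        # any stream giving a class near-zero probability effectively vetoes it.
        fused = np.log(np.clip(probs, 1e-12, 1.0)).sum(axis=0)
    else:
        raise ValueError("mode must be 'add' or 'mul'")
    return fused.argmax(axis=-1)


def accuracy_percent(pred: np.ndarray, labels: np.ndarray) -> float:
    # Top-1 accuracy in percent (assumed to be the metric behind "Accuracy (%)").
    return float((pred == labels).mean() * 100.0)


# Toy example: two streams, three classes (made-up probabilities).
skeleton = np.array([[0.9, 0.1, 0.0]])
hand_roi = np.array([[0.0, 0.4, 0.6]])
print(fuse_scores([skeleton, hand_roi], "add"))  # [0]: sum follows the confident stream
print(fuse_scores([skeleton, hand_roi], "mul"))  # [1]: product picks the class both allow
```

The toy input isolates the practical difference between the two rules. Addition lets a single confident stream carry the decision, whereas a product favors the class that no stream rules out.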

| Method | Accuracy (%) |
|---|---|
| IndRNN [22] | 73.90 |
| Beyond Joints [23] | 79.10 |
| SK-CNN [24] | 83.60 |
| ST-GCN [25] | 86.80 |
| Motif ST-GCN [43] | 89.90 |
| Ensem-NN [44] | 83.00 |
| MANs [45] | 82.40 |
| HCN [46] | 88.00 |
| FSA-CNN [42] | 90.60 |
| ROIEnsMulNet3 | 94.87 |

| Method | Training set | Elderly test accuracy (%) | Young test accuracy (%) |
|---|---|---|---|
| FSA-CNN [42] | Elderly | 87.70 | 69.00 |
| FSA-CNN [42] | Young | 74.90 | 85.00 |
| ROIEnsAddNet3 | Elderly | 92.53 | 70.35 |
| ROIEnsAddNet3 | Young | 73.57 | 89.87 |
| ROIEnsMulNet3 | Elderly | 94.57 | 75.04 |
| ROIEnsMulNet3 | Young | 79.51 | 92.54 |
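The cross-age results can also be read as a generalization gap. This gap is the accuracy a model loses when tested on the age group it was not trained on. The short computation below derives that gap from the reported figures only and adds no new results. The `cross_age_drop` helper and the "drop" framing are illustrative additions, not the authors'.

```python
# Accuracy (%) from the cross-age table: (elderly test, young test) per training set.
RESULTS = {
    "FSA-CNN [42]": {"elderly": (87.70, 69.00), "young": (74.90, 85.00)},
    "ROIEnsAddNet3": {"elderly": (92.53, 70.35), "young": (73.57, 89.87)},
    "ROIEnsMulNet3": {"elderly": (94.57, 75.04), "young": (79.51, 92.54)},
}


def cross_age_drop(row):
    """Return (drop when trained on elderly, drop when trained on young), in points."""
    e_on_e, e_on_y = row["elderly"]  # trained on elderly: in-domain, cross-domain
    y_on_e, y_on_y = row["young"]    # trained on young: cross-domain, in-domain
    return round(e_on_e - e_on_y, 2), round(y_on_y - y_on_e, 2)


for method, row in RESULTS.items():
    print(method, cross_age_drop(row))
```

On these figures, ROIEnsMulNet3 is the most accurate method in every cell. Yet FSA-CNN [42] shows the smallest drop in both directions: 18.70 and 10.10 points, versus 19.53 and 13.03 for ROIEnsMulNet3. Higher in-domain accuracy therefore does not by itself imply better transfer across age groups.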

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Byeon, Y.-H.; Kim, D.; Lee, J.; Kwak, K.-C. Body and Hand–Object ROI-Based Behavior Recognition Using Deep Learning. Sensors 2021, 21, 1838. https://doi.org/10.3390/s21051838