Smart System with Artificial Intelligence for Sensory Gloves

Abstract

1. Introduction

2. Related Works

3. System Description

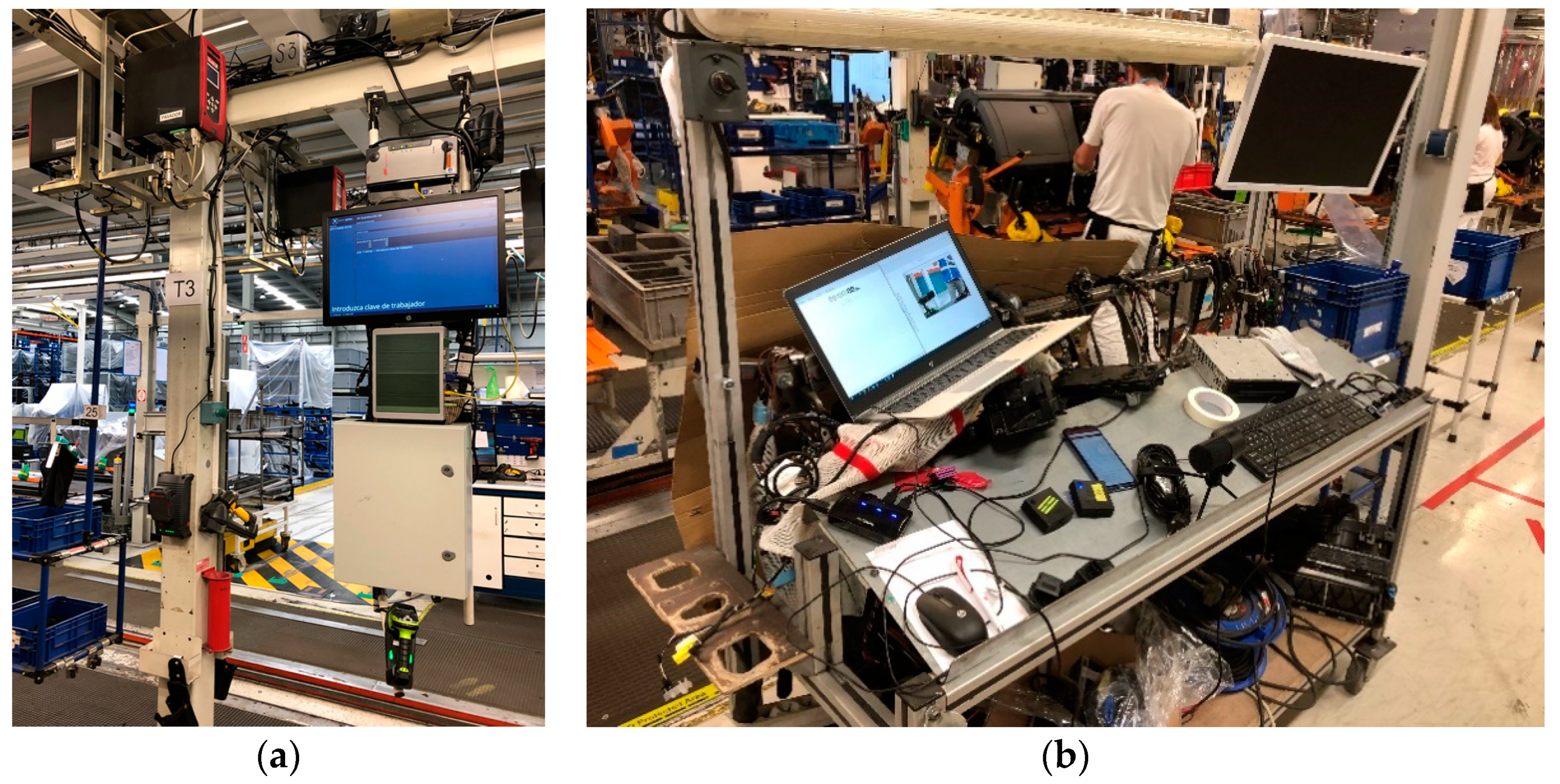

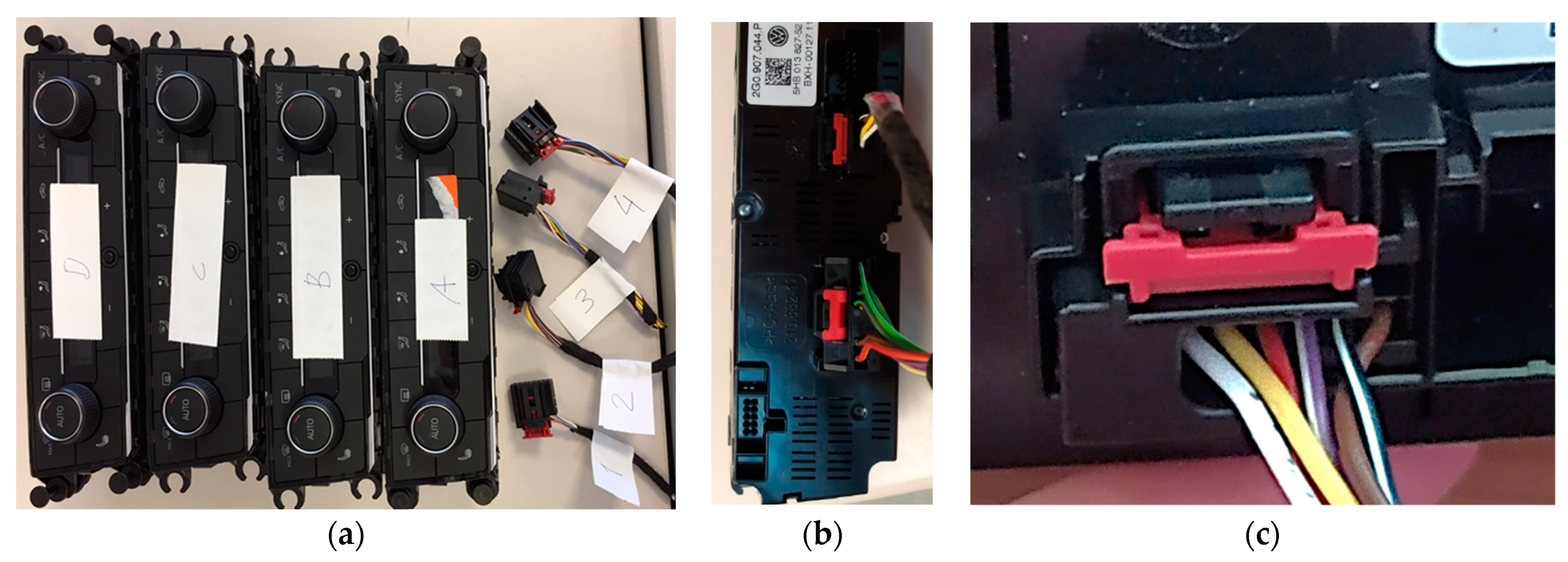

3.1. Industrial Environment

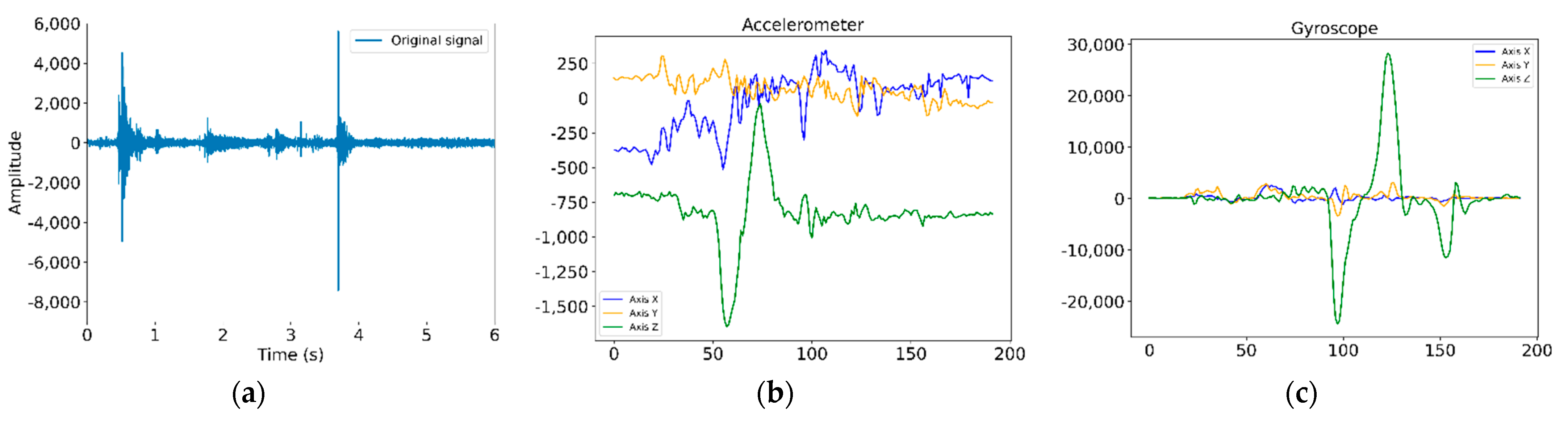

3.2. Signals and Their Acquisition

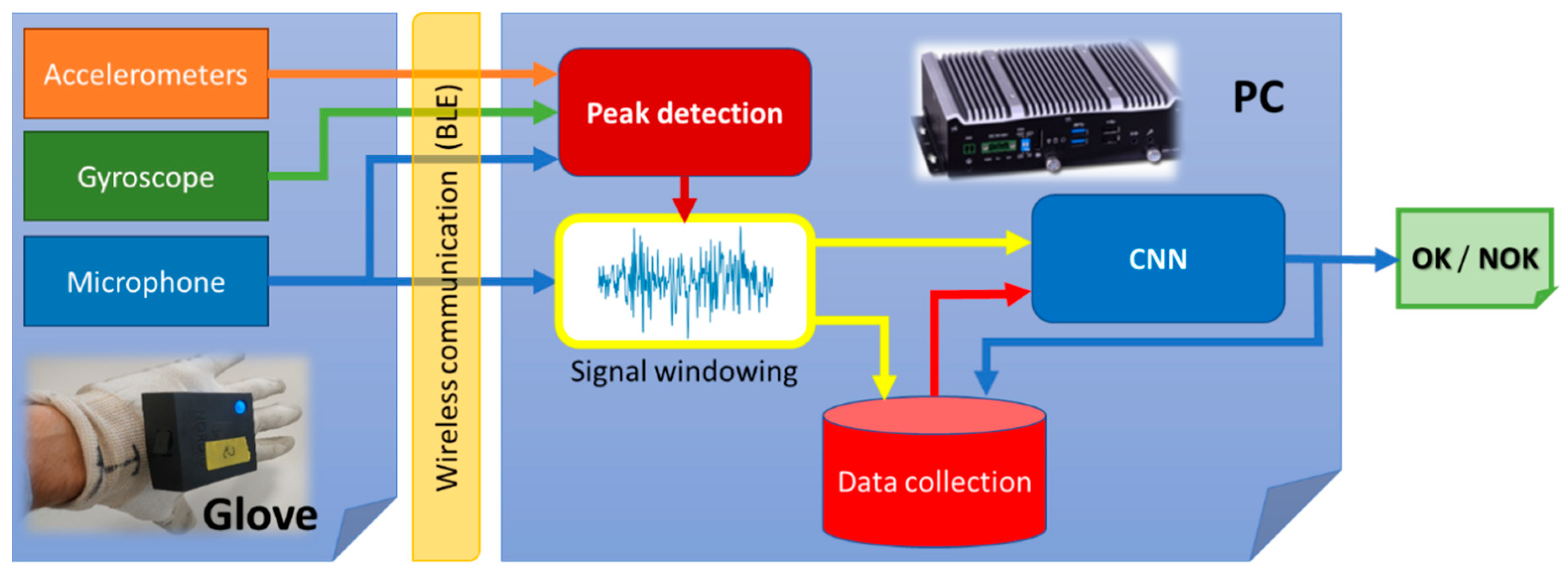

3.3. System Architecture

4. Smart Recognition System

4.1. Accelerometer-Based Recognition

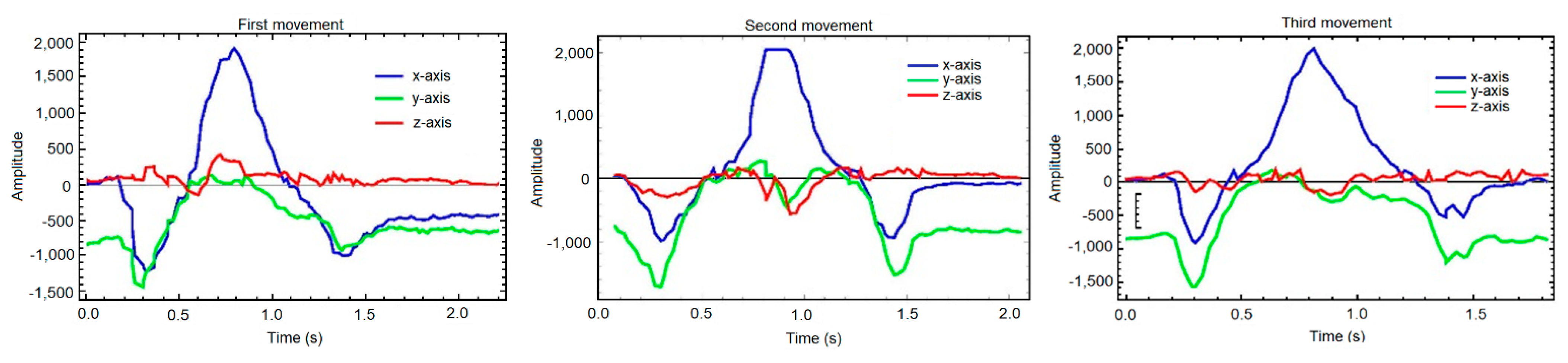

- In the first movement on the X-axis:

- In the first movement on the Y-axis:

- In the first movement on the Z-axis:

- Variation must be insignificant at the beginning and the end of the movement.

- Figure 10 shows two time instants at which the movement is minimal and one at which it reaches its maximum value; that is, the signal must have two minimums with one maximum between them.

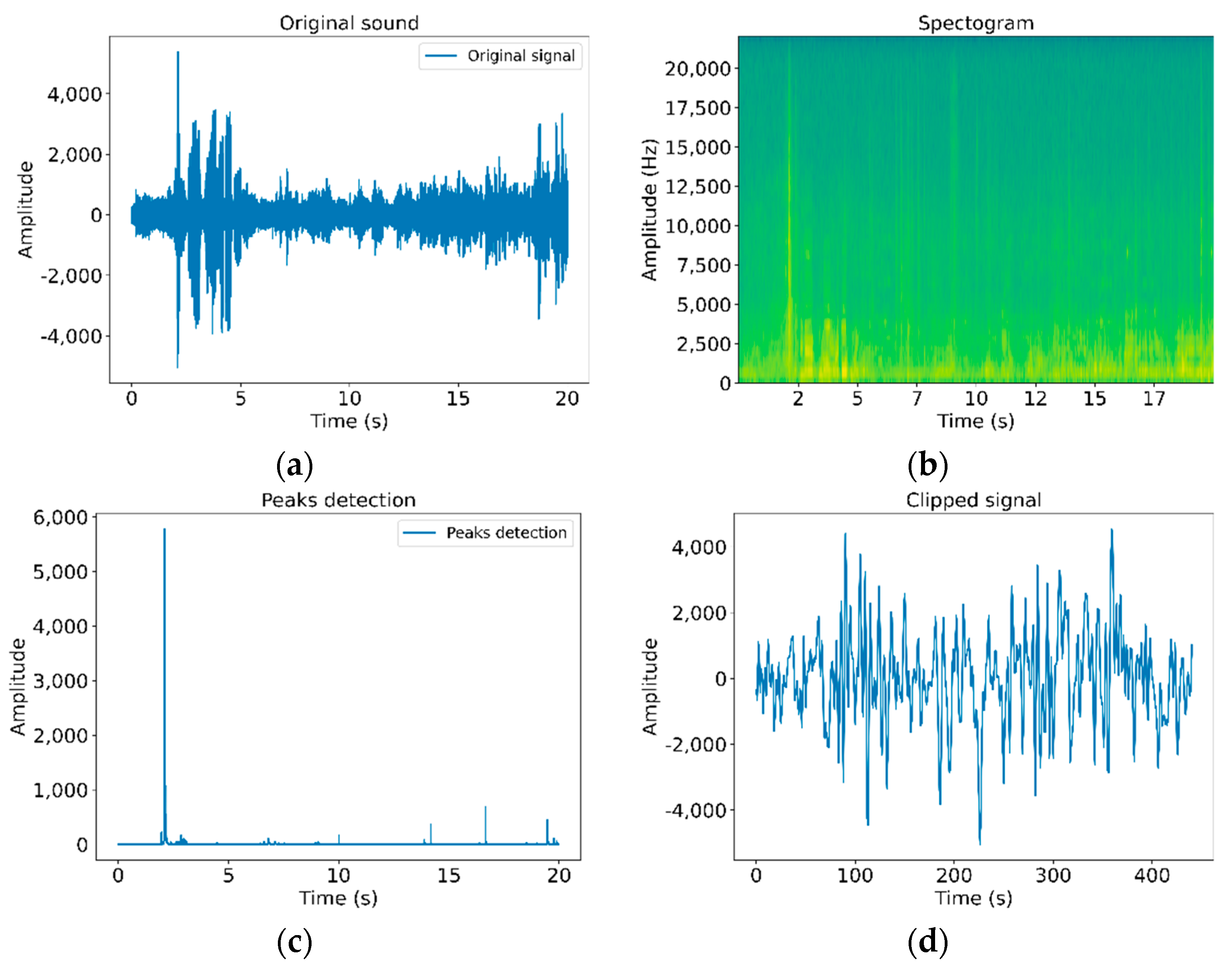

4.2. Data Sources

- Smoothing. Points of the signal that are higher than the adjacent ones (possibly due to noise) are reduced, and points that are lower than the adjacent ones are increased, which yields a smoother signal. We apply a Savitzky–Golay filter, which maintains the original maximums and minimums.

- Decimation. A new signal is generated, with a lower number of points than the original one. In our case, we set a constant decimating factor of 50%.

- Deletion. Similar to signal decimation, but each point is eliminated with a user-imposed probability. In the example depicted in Figure 12, this probability is 30%.

- Interpolation. The opposite of decimation: new data points are constructed within the range of the discrete set of known data points (with a probability of 50%).

- Modification of the amplitude. Each existing value has a 50% probability of having its amplitude modified by a percentage bounded by the user. The amplitude can be expanded or reduced.
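The five transformations listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the function names, the 5-point quadratic Savitzky–Golay window, the midpoint interpolation rule and the `max_pct` bound are all hypothetical choices made for the example.

```python
import random

# Standard tabulated 5-point quadratic Savitzky-Golay smoothing coefficients.
# The window length and polynomial order are assumptions for this sketch.
SG5 = (-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35)


def smooth(signal):
    """Savitzky-Golay smoothing; the two samples at each edge are kept as-is."""
    out = list(signal)
    for i in range(2, len(signal) - 2):
        out[i] = sum(c * signal[i + k - 2] for k, c in enumerate(SG5))
    return out


def decimate(signal, keep_every=2):
    """Keep one sample out of every `keep_every` (2 gives a 50% reduction)."""
    return list(signal[::keep_every])


def delete(signal, p=0.3, rng=random):
    """Drop each sample independently with probability p."""
    return [x for x in signal if rng.random() >= p]


def interpolate(signal, p=0.5, rng=random):
    """With probability p, insert the midpoint between two consecutive samples."""
    out = []
    for a, b in zip(signal, signal[1:]):
        out.append(a)
        if rng.random() < p:
            out.append((a + b) / 2)
    out.extend(signal[-1:])
    return out


def scale_amplitude(signal, p=0.5, max_pct=10.0, rng=random):
    """With probability p, expand or reduce a sample by up to max_pct percent."""
    out = []
    for x in signal:
        if rng.random() < p:
            x *= 1 + rng.uniform(-max_pct, max_pct) / 100
        out.append(x)
    return out
```

Passing a seeded `random.Random` instance as `rng` makes the synthetic samples reproducible, which helps when the same augmented set has to be regenerated for a later training run.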

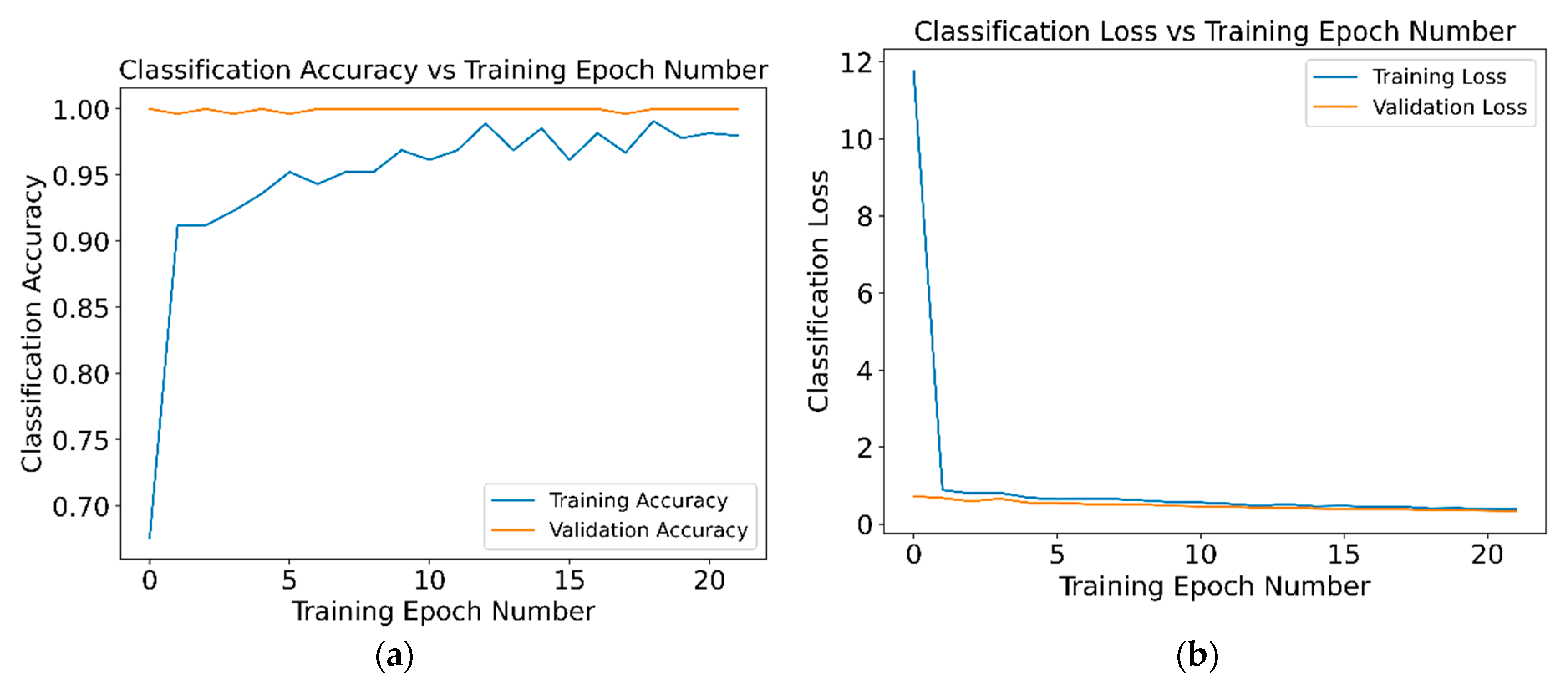

4.3. Convolutional Neural Network (CNN)

4.4. Peaks Detection

5. System Implementation

- Phase 1: Start of assembly. The logistics system indicates to the microcomputer the type of connector to be detected and, if necessary, its parameterization. In turn, the required information for detection is sent to the glove. The glove starts acquiring data from sensors. In anticipation of possible interruptions in the assembly process, a maximum period is set in which the signal will be captured. If this time limit is reached, an error is returned to the system and the recording made is discarded.

- Phase 2: Detection of a possible clicking connection. The glove continuously samples all analog signals (accelerometers, gyroscope and audio) and processes them in real time until a trigger of a possible clicking is detected. When this trigger occurs, the glove sends the clicking signal to the microcomputer. The glove then continues sampling without interruption while waiting for further clickings.

- Phase 3: Clicking validity. The microcomputer processes the clicking, validates or rejects it, and notifies the logistics system of the result.

- Phase 4: End of registration. The glove ends the registration either when it receives the message from the microcomputer reporting a successful clicking, or by timeout. In the latter case, the glove sends a message to the microcomputer, which in turn informs the logistics system that no clicking has been detected. In both cases, the microcomputer and the glove then wait for a new order from the logistics system.
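The four phases can be read as a small state machine on the glove side. The sketch below is a hypothetical illustration only: the class and method names, the message tuples and the use of explicit `now` timestamps are assumptions made for the example and do not come from the system described here.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()       # waiting for an order from the logistics system
    ACQUIRING = auto()  # sampling sensors and watching for click triggers


class GloveSession:
    """Hypothetical glove-side session following Phases 1 to 4."""

    def __init__(self, timeout_s):
        self.timeout_s = timeout_s
        self.state = State.IDLE
        self.connector = None
        self.started_at = None
        self.outbox = []  # messages addressed to the microcomputer

    def start(self, connector, now):
        # Phase 1: the order names the connector; acquisition begins.
        self.connector = connector
        self.started_at = now
        self.state = State.ACQUIRING

    def tick(self, now):
        # Phase 4 (timeout branch): report that no click was detected.
        if self.state is State.ACQUIRING and now - self.started_at >= self.timeout_s:
            self.outbox.append(("timeout", self.connector))
            self.state = State.IDLE

    def on_trigger(self, candidate, now):
        # Phase 2: forward the candidate click and keep sampling.
        self.tick(now)
        if self.state is State.ACQUIRING:
            self.outbox.append(("candidate", candidate))

    def on_validation(self, valid):
        # Phases 3 and 4: a validated click ends the registration;
        # a rejected one leaves the glove listening for more clicks.
        if self.state is State.ACQUIRING and valid:
            self.state = State.IDLE


session = GloveSession(timeout_s=10.0)
session.start("Dimmer", now=0.0)
session.on_trigger("click-1", now=2.0)
session.on_validation(False)  # rejected: the glove keeps listening
session.on_trigger("click-2", now=3.5)
session.on_validation(True)   # validated: the glove returns to IDLE
```

Keeping the timeout check in a single `tick` method means the same rule applies whether the deadline is noticed by a periodic timer or when the next trigger arrives.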

6. System Validation: Experimental Results

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sensor | Location | Range | Resolution | Sampling Rate |
|---|---|---|---|---|
| MEMS microphone | Both hands | 120 dBSPL, SNR 62.6 dB | 16 bits | 8 kHz |
| Accelerometer | Both hands | ±2 g | 16 bits | 100 Hz |
| Gyroscope | Both hands | ±250° | 16 bits | 100 Hz |
| Non-capacitive microphone | 10 cm | 120 dBSPL, SNR 78 dB | 16 bits | 44,100 Hz |
| Video | 1 m | - | 640 × 480 pixels | 30 Hz |

| Connector | Samples Obtained at the Laboratory | Synthetic Laboratory Samples | Samples Taken at the Production Plant | Synthetic Production Line Samples |
|---|---|---|---|---|
| RJ45 | 2295 | 1792 | - | - |
| Climatronic | 1659 | 10,951 | 357 | 6257 |
| Dimmer | 1021 | 10,601 | 1164 | 10,775 |

| Type | Input Size | Output Size | Kernel Size | Number of Parameters |
|---|---|---|---|---|
| Conv2D | 161 × 165 × 1 | 161 × 165 × 32 | 3 × 3 | 320 |
| Max_Pooling2D | 161 × 165 × 32 | 80 × 82 × 32 | 2 × 2 | 0 |
| Conv2D | 80 × 82 × 32 | 78 × 80 × 32 | 3 × 3 | 9248 |
| Max_Pooling2D | 78 × 80 × 32 | 39 × 40 × 32 | 2 × 2 | 0 |
| Conv2D | 39 × 40 × 32 | 37 × 38 × 32 | 3 × 3 | 9248 |
| Max_Pooling2D | 37 × 38 × 32 | 18 × 19 × 32 | 2 × 2 | 0 |
| Flatten | 18 × 19 × 32 | 10,944 | - | 0 |
| Dense | 10,944 | 64 | - | 700,480 |
| Dropout | 64 | 64 | - | 0 |
| Dense | 64 | 32 | - | 2080 |
| Dropout | 32 | 32 | - | 0 |
| Dense | 32 | 16 | - | 528 |
| Dropout | 16 | 16 | - | 0 |
| Dense | 16 | 2 | - | 34 |
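The parameter counts of the trainable layers follow from the usual formulas: a convolutional layer has one weight per kernel position, input channel and filter, plus one bias per filter, and a dense layer has one weight per input-unit pair, plus one bias per unit. The helper names in this short sketch are hypothetical.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights (kernel_h * kernel_w * in_channels * filters) plus one bias per filter."""
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(inputs, units):
    """Weights (inputs * units) plus one bias per unit."""
    return inputs * units + units


# Convolutional layers with 3 x 3 kernels and 32 filters.
first_conv = conv2d_params(3, 3, 1, 32)    # single-channel input
later_conv = conv2d_params(3, 3, 32, 32)   # 32-channel input

# Dense layers; the flattened 18 x 19 x 32 feature map feeds the first one.
flattened = 18 * 19 * 32
dense_sizes = [(flattened, 64), (64, 32), (32, 16), (16, 2)]
dense_counts = [dense_params(i, u) for i, u in dense_sizes]
```

Pooling, flatten and dropout layers have no trainable parameters, so they contribute zero to the total.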

| Dataset for Training | Dimmer TN | Dimmer FP | Dimmer FN | Dimmer TP | Dimmer NOK | Dimmer OK | Climatronic TN | Climatronic FP | Climatronic FN | Climatronic TP | Climatronic NOK | Climatronic OK |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Laboratory | 516 | 49 | 12 | 587 | 565 | 599 | 172 | 8 | 1 | 177 | 180 | 178 |
| Laboratory + synthetic | 536 | 29 | 27 | 572 | 565 | 599 | 180 | 0 | 9 | 169 | 180 | 178 |
| Laboratory + plant | 558 | 7 | 8 | 591 | 565 | 599 | 179 | 1 | 0 | 178 | 180 | 178 |
| Laboratory + synthetic + plant | 549 | 16 | 9 | 590 | 565 | 599 | 180 | 0 | 1 | 177 | 180 | 178 |
| Plant + synthetic | 528 | 37 | 1 | 598 | 565 | 599 | 178 | 2 | 0 | 178 | 180 | 178 |

| Dataset Used for Training (Dimmer) | Accuracy | Precision | Recall | F1 | Specificity |
|---|---|---|---|---|---|
| Laboratory | 0.9476 | 0.9230 | 0.9800 | 0.9506 | 0.9476 |
| Laboratory + synthetic | 0.9519 | 0.9517 | 0.9549 | 0.9533 | 0.9519 |
| Laboratory + plant | 0.9871 | 0.9883 | 0.9866 | 0.9875 | 0.9871 |
| Laboratory + synthetic + plant | 0.9785 | 0.9736 | 0.9850 | 0.9793 | 0.9785 |
| Plant + synthetic | 0.9674 | 0.9417 | 0.9983 | 0.9692 | 0.9674 |

| Dataset Used for Training (Climatronic) | Accuracy | Precision | Recall | F1 | Specificity |
|---|---|---|---|---|---|
| Laboratory | 0.9749 | 0.9568 | 0.9944 | 0.9752 | 0.9749 |
| Laboratory + synthetic | 0.9749 | 1.0000 | 0.9494 | 0.9741 | 0.9749 |
| Laboratory + plant | 0.9972 | 0.9944 | 1.0000 | 0.9972 | 0.9972 |
| Laboratory + synthetic + plant | 0.9972 | 1.0000 | 0.9944 | 0.9972 | 0.9972 |
| Plant + synthetic | 0.9944 | 0.9889 | 1.0000 | 0.9944 | 0.9944 |
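The accuracy, precision, recall and F1 values reported above can be reproduced from the confusion-matrix counts (TN, FP, FN, TP) with the standard definitions. The function name below is hypothetical, and the example uses the counts of the models trained on the laboratory dataset only.

```python
def classification_metrics(tn, fp, fn, tp):
    """Standard binary-classification metrics from confusion-matrix counts."""
    accuracy = (tp + tn) / (tn + fp + fn + tp)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Laboratory-trained models: Dimmer and Climatronic connectors.
dimmer = classification_metrics(tn=516, fp=49, fn=12, tp=587)
climatronic = classification_metrics(tn=172, fp=8, fn=1, tp=177)
```

Rounded to four decimals, `dimmer` gives 0.9476, 0.9230, 0.9800 and 0.9506, and `climatronic` gives 0.9749, 0.9568, 0.9944 and 0.9752, matching the Laboratory rows.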

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cerro, I.; Latasa, I.; Guerra, C.; Pagola, P.; Bujanda, B.; Astrain, J.J. Smart System with Artificial Intelligence for Sensory Gloves. Sensors 2021, 21, 1849. https://doi.org/10.3390/s21051849
