Driving Environment Perception Based on the Fusion of Vehicular Wireless Communications and Automotive Remote Sensors

Abstract

1. Introduction

2. System Overview

2.1. Overall Design of the Proposed System

2.2. Automotive Remote Sensors

2.3. V2X Communications

3. State Estimation and Prediction

3.1. Kalman Filtering

3.2. Data Fusion

3.3. Trajectory Prediction and Risk Assessment

4. Experimental Evaluation

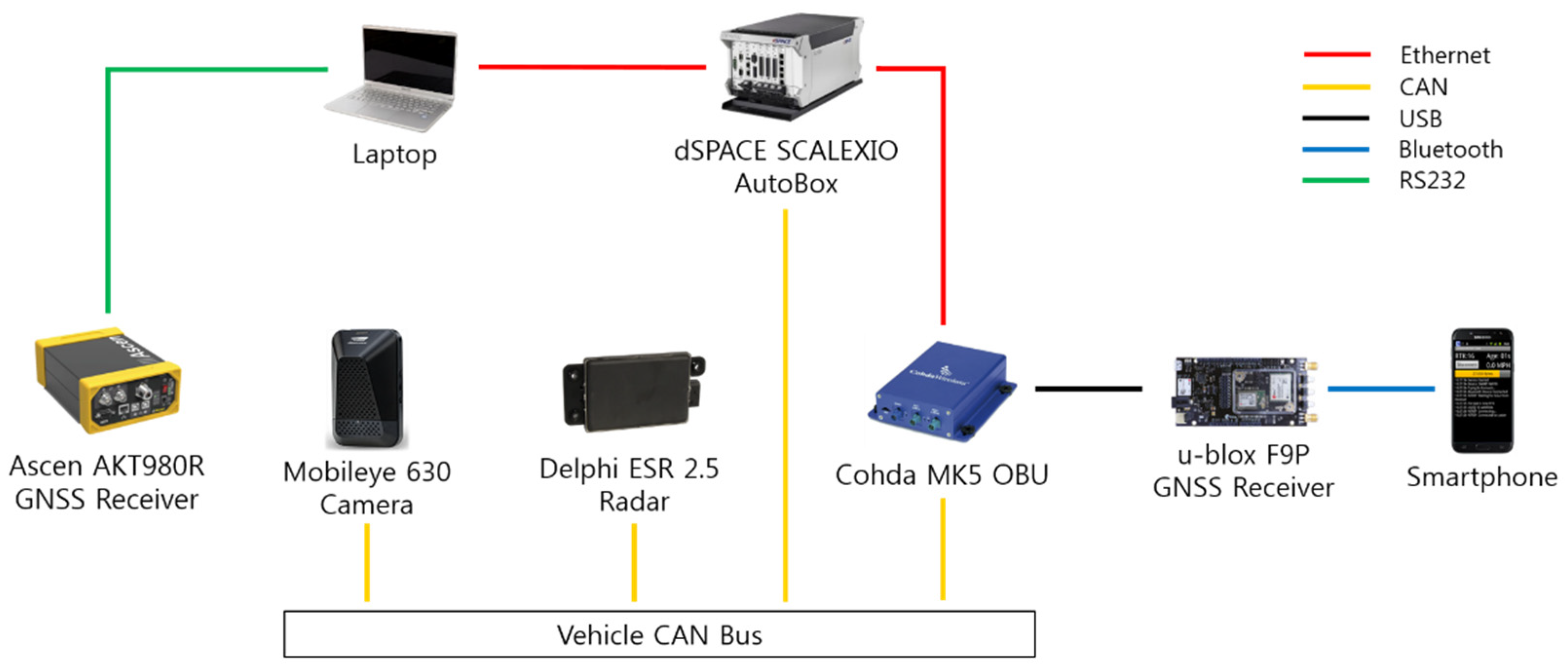

4.1. Vehicle Configuration

4.2. Surrounding Vehicle Localization

4.2.1. Experimental Environment

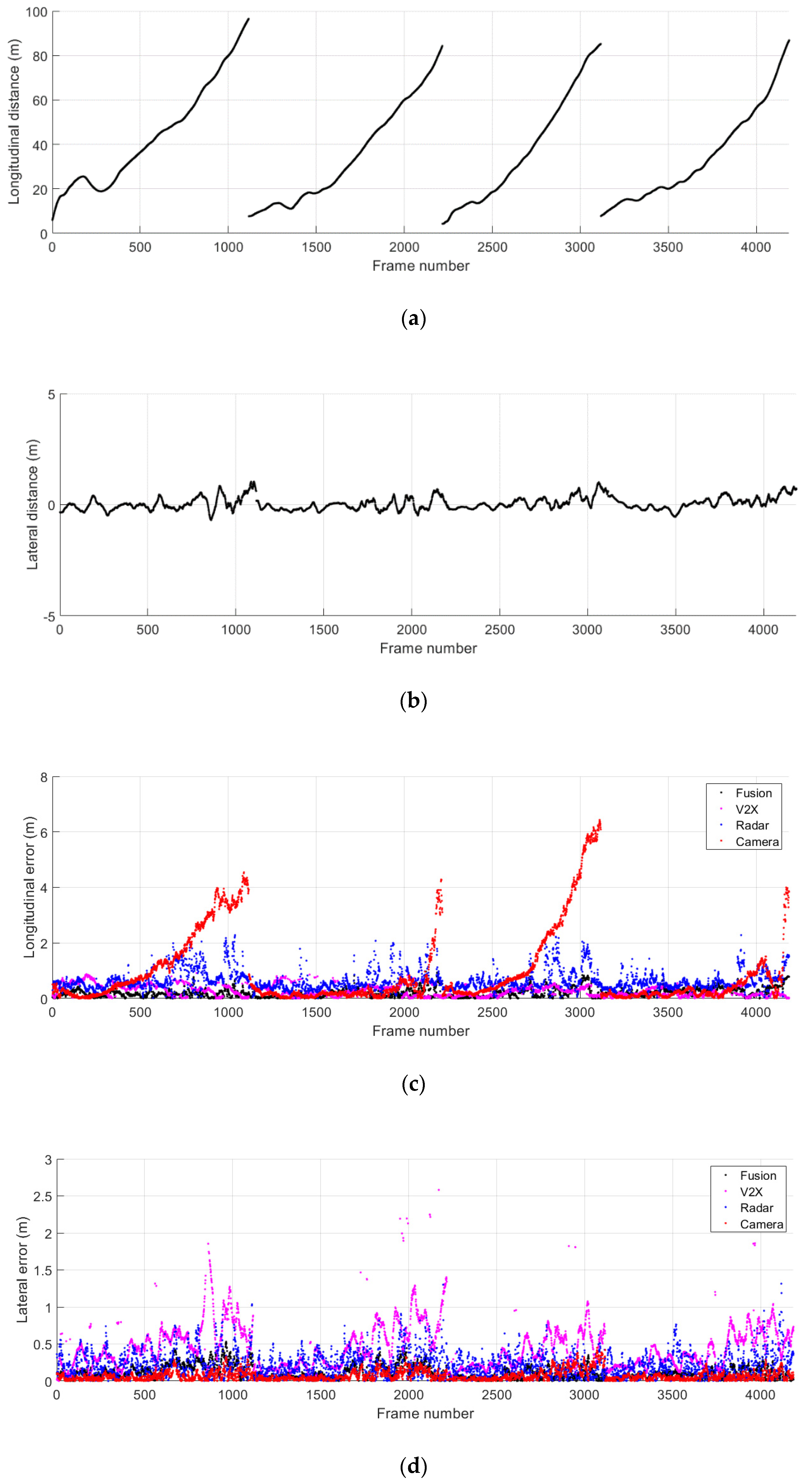

4.2.2. Performance Evaluation

4.3. Cut-In Driving Scenario

4.3.1. Experimental Environment

4.3.2. Performance Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADAS | Advanced driver assistance system |
| BSM | Basic safety message |
| CAM | Cooperative awareness message |
| CAN | Controller area network |
| CAV | Connected and automated vehicle |
| CTRV | Constant turn rate and velocity |
| C-V2X | Cellular vehicle-to-everything |
| DENM | Decentralized environmental notification message |
| DSRC | Dedicated short-range communications |
| ECU | Electronic control unit |
| FMCW | Frequency modulated continuous wave |
| FOV | Field of view |
| GNSS | Global navigation satellite system |
| ITS | Intelligent transportation system |
| NTRIP | Networked Transport of RTCM via Internet Protocol |
| OBU | On-board unit |
| ODD | Operational design domain |
| RSU | Roadside unit |
| RTCM | Radio Technical Commission for Maritime Services |
| TTC | Time-to-collision |
| V2I | Vehicle-to-infrastructure |
| V2N | Vehicle-to-network |
| V2P | Vehicle-to-pedestrian |
| V2V | Vehicle-to-vehicle |
| V2X | Vehicle-to-everything |
| WAVE | Wireless access in vehicular environments |
| WGS | World Geodetic System |

| Type | Delphi ESR 2.5 (Long-Range) | Delphi ESR 2.5 (Mid-Range) |
|---|---|---|
| Frequency band | 76.5 GHz | 76.5 GHz |
| Range | 175 m | 60 m |
| Range accuracy | 0.5 m | 0.25 m |
| Angular accuracy | 0.5 deg | 1.0 deg |
| Horizontal FOV | 20 deg | 90 deg |
| Data update | 50 ms | 50 ms |
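The range and horizontal FOV figures in the radar table can be turned into a simple coverage check. The sketch below is illustrative only: it assumes each FOV is symmetric about the sensor boresight and uses a hypothetical axis convention (x forward, y to the left, origin at the sensor). The function and dictionary names are not from the paper.

```python
import math

# Nominal limits copied from the radar specification table.
# The symmetric-FOV geometry and axis convention are assumptions.
RADAR_MODES = {
    "long_range": {"max_range_m": 175.0, "fov_deg": 20.0},
    "mid_range": {"max_range_m": 60.0, "fov_deg": 90.0},
}


def radar_modes_covering(x_m: float, y_m: float) -> list:
    """Return the radar modes whose nominal coverage contains the point.

    x_m is the forward distance and y_m the lateral offset (left positive),
    both measured from the sensor.
    """
    rng = math.hypot(x_m, y_m)
    azimuth_deg = math.degrees(math.atan2(y_m, x_m))
    return [
        name
        for name, spec in RADAR_MODES.items()
        if rng <= spec["max_range_m"] and abs(azimuth_deg) <= spec["fov_deg"] / 2.0
    ]
```

A target straight ahead at 50 m falls inside both modes, while one at 100 m straight ahead is seen only by the long-range mode. Real coverage also depends on target reflectivity and mounting, which this check ignores.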

| Type | Mobileye 630 |
|---|---|
| Frame size | 640 × 480 pixels |
| Dynamic range | 55 dB linear; 100 dB in HDR |
| Range accuracy (longitudinal) | <10% (in general) |
| Width accuracy | <10% |
| Horizontal field of view (FOV) | 38 deg |
| Data update | 66–100 ms |

| Type | IEEE WAVE |
|---|---|
| Frequency | 5.850–5.925 GHz |
| Channel | 1 CCH, 6 SCH |
| Bandwidth | 10 MHz |
| Data rate | 3–27 Mbps |
| Maximum range | 1000 m |
| Modulation | OFDM |
| Media access control | CSMA/CA |

| Content | Description |
|---|---|
| Message count | Sequence number for the same type of messages originated from the same sender. |
| Temporary ID | Device identifier that is modified periodically for on-board units (OBUs). This value may be fixed for roadside units (RSUs). |
| DSRC second | Milliseconds within a minute that typically represents the moment when the position was determined. |
| Position | Geographic latitude, longitude, and height. |
| Position accuracy | Semi-major axis (length and orientation) and semi-minor axis (length) of an ellipsoid representing the position accuracy. |
| Transmission state | Vehicle transmission state (i.e., neutral, park, forward, and reverse). |
| Speed | Vehicle speed. |
| Heading | Vehicle heading. Past values may be used if the sender is stopped. |
| Steering wheel angle | Angle of the vehicle steering wheel. |
| Acceleration | Vehicle acceleration in longitudinal, lateral, and vertical axes. |
| Yaw rate | Vehicle yaw rate. |
| Brake system status | Status of the brake and other control systems (i.e., traction control, ABS, stability control, brake boost, and auxiliary brake). |
| Vehicle size | Vehicle width and length. |
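As a rough illustration of how the message contents listed above might be held in software, the following sketch groups them into a plain Python dataclass. All field names, units, and default values are hypothetical choices made for readability; this is not the SAE J2735 wire encoding and not the authors' data structure.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Tuple


class TransmissionState(Enum):
    """Transmission states named in the table."""
    NEUTRAL = "neutral"
    PARK = "park"
    FORWARD = "forward"
    REVERSE = "reverse"


@dataclass
class BasicSafetyMessage:
    """Illustrative container for the listed BSM contents (names and units assumed)."""
    msg_count: int                     # sequence number per sender and message type
    temporary_id: bytes                # periodically changed for OBUs
    dsrc_second_ms: int                # milliseconds within the current minute
    latitude_deg: float
    longitude_deg: float
    height_m: float
    speed_mps: float
    heading_deg: float
    transmission: TransmissionState = TransmissionState.FORWARD
    # (semi-major length, semi-minor length, semi-major orientation)
    position_accuracy: Tuple[float, float, float] = (0.0, 0.0, 0.0)
    steering_wheel_angle_deg: float = 0.0
    # (longitudinal, lateral, vertical)
    acceleration_mps2: Tuple[float, float, float] = (0.0, 0.0, 0.0)
    yaw_rate_dps: float = 0.0
    brake_status: Dict[str, bool] = field(default_factory=dict)
    width_m: float = 0.0
    length_m: float = 0.0

    def seconds_into_minute(self) -> float:
        """Convert the DSRC second field from milliseconds to seconds."""
        return self.dsrc_second_ms / 1000.0
```

Keeping the timestamp in its original millisecond form and converting on demand avoids rounding when messages are later aligned with sensor data.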

| Condition | Stage | Warning Type | Color |
|---|---|---|---|
| No collision detected | No threat (Level 0) | Visual | Gray |
| | Threat detected (Level 1) | Visual | Green |
| | Inform driver (Level 2) | Visual and audible | Yellow |
| | Warn driver (Level 3) | Visual and audible | Red |
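The stage-to-warning mapping in the table can be expressed as a small lookup. The sketch below covers only the output side (warning type and color per level); the conditions that trigger Levels 1 to 3 are not recoverable from the table, so no thresholds are modeled. The enum and function names are hypothetical.

```python
from enum import IntEnum


class WarningLevel(IntEnum):
    """Warning stages as listed in the table."""
    NO_THREAT = 0
    THREAT_DETECTED = 1
    INFORM_DRIVER = 2
    WARN_DRIVER = 3


# level -> (audible warning in addition to visual, display color)
WARNING_OUTPUT = {
    WarningLevel.NO_THREAT: (False, "gray"),
    WarningLevel.THREAT_DETECTED: (False, "green"),
    WarningLevel.INFORM_DRIVER: (True, "yellow"),
    WarningLevel.WARN_DRIVER: (True, "red"),
}


def warning_output(level: WarningLevel) -> dict:
    """Return the warning modalities and color for a given stage."""
    audible, color = WARNING_OUTPUT[level]
    return {"visual": True, "audible": audible, "color": color}
```

Every stage has a visual indication, and only Levels 2 and 3 add an audible one, matching the table.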

| Scenario Number | Host Vehicle Speed (km/h) | Remote Vehicle Speed (km/h) | Remote Vehicle Driving Lane |
|---|---|---|---|
| 1 | 20 | 25 | Same as HV |
| 2 | 20 | 25 | Adjacent to HV |

| Data Range (m) | Camera Lon. RMSE (m) | Camera Lon. SD (m) | Radar Lon. RMSE (m) | Radar Lon. SD (m) | V2X Lon. RMSE (m) | V2X Lon. SD (m) | Fusion Lon. RMSE (m) | Fusion Lon. SD (m) |
|---|---|---|---|---|---|---|---|---|
| 0–10 | 0.36 | 0.24 | 0.39 | 0.18 | 0.25 | 0.22 | 0.13 | 0.13 |
| 10–20 | 0.19 | 0.15 | 0.51 | 0.21 | 0.31 | 0.28 | 0.12 | 0.11 |
| 20–30 | 0.33 | 0.23 | 0.54 | 0.15 | 0.38 | 0.37 | 0.24 | 0.09 |
| 30–40 | 0.63 | 0.45 | 0.56 | 0.20 | 0.33 | 0.33 | 0.29 | 0.16 |
| 40–50 | 1.23 | 0.79 | 0.74 | 0.42 | 0.40 | 0.38 | 0.24 | 0.23 |
| 50–60 | 1.82 | 0.98 | 1.09 | 0.57 | 0.33 | 0.33 | 0.23 | 0.23 |
| 60–70 | 2.73 | 1.69 | 0.83 | 0.32 | 0.26 | 0.25 | 0.28 | 0.16 |
| Total | 1.16 | 0.96 | 0.67 | 0.35 | 0.34 | 0.32 | 0.22 | 0.18 |

| Data Range (m) | Camera Lat. RMSE (m) | Camera Lat. SD (m) | Radar Lat. RMSE (m) | Radar Lat. SD (m) | V2X Lat. RMSE (m) | V2X Lat. SD (m) | Fusion Lat. RMSE (m) | Fusion Lat. SD (m) |
|---|---|---|---|---|---|---|---|---|
| 0–10 | 0.05 | 0.05 | 0.21 | 0.21 | 0.12 | 0.09 | 0.05 | 0.04 |
| 10–20 | 0.05 | 0.04 | 0.21 | 0.16 | 0.24 | 0.10 | 0.06 | 0.04 |
| 20–30 | 0.06 | 0.06 | 0.26 | 0.20 | 0.32 | 0.13 | 0.09 | 0.06 |
| 30–40 | 0.07 | 0.07 | 0.24 | 0.19 | 0.45 | 0.16 | 0.14 | 0.08 |
| 40–50 | 0.11 | 0.10 | 0.28 | 0.28 | 0.62 | 0.20 | 0.19 | 0.11 |
| 50–60 | 0.12 | 0.12 | 0.34 | 0.29 | 0.70 | 0.31 | 0.20 | 0.10 |
| 60–70 | 0.15 | 0.15 | 0.28 | 0.28 | 0.84 | 0.41 | 0.19 | 0.12 |
| Total | 0.09 | 0.09 | 0.26 | 0.23 | 0.48 | 0.28 | 0.13 | 0.09 |

| Data Range (m) | Camera Lon. RMSE (m) | Camera Lon. SD (m) | Radar Lon. RMSE (m) | Radar Lon. SD (m) | V2X Lon. RMSE (m) | V2X Lon. SD (m) | Fusion Lon. RMSE (m) | Fusion Lon. SD (m) |
|---|---|---|---|---|---|---|---|---|
| 0–10 | N/A | N/A | 0.30 | 0.28 | 0.44 | 0.40 | 0.31 | 0.31 |
| 10–20 | 0.49 | 0.48 | 0.40 | 0.31 | 0.36 | 0.36 | 0.34 | 0.32 |
| 20–30 | 0.91 | 0.80 | 0.44 | 0.39 | 0.41 | 0.40 | 0.40 | 0.40 |
| 30–40 | 1.52 | 1.37 | 0.43 | 0.40 | 0.47 | 0.46 | 0.42 | 0.42 |
| 40–50 | 2.80 | 1.82 | 0.37 | 0.33 | 0.34 | 0.33 | 0.31 | 0.30 |
| 50–60 | 3.61 | 2.52 | 0.39 | 0.26 | 0.27 | 0.26 | 0.24 | 0.22 |
| 60–70 | 4.29 | 3.50 | 0.31 | 0.26 | 0.38 | 0.33 | 0.28 | 0.27 |
| Total | 2.62 | 2.20 | 0.39 | 0.32 | 0.37 | 0.37 | 0.33 | 0.32 |

| Data Range (m) | Camera Lat. RMSE (m) | Camera Lat. SD (m) | Radar Lat. RMSE (m) | Radar Lat. SD (m) | V2X Lat. RMSE (m) | V2X Lat. SD (m) | Fusion Lat. RMSE (m) | Fusion Lat. SD (m) |
|---|---|---|---|---|---|---|---|---|
| 0–10 | N/A | N/A | 0.32 | 0.22 | 0.15 | 0.09 | 0.11 | 0.09 |
| 10–20 | 0.15 | 0.07 | 0.29 | 0.18 | 0.18 | 0.06 | 0.05 | 0.05 |
| 20–30 | 0.16 | 0.08 | 0.28 | 0.25 | 0.31 | 0.15 | 0.09 | 0.08 |
| 30–40 | 0.17 | 0.11 | 0.25 | 0.24 | 0.42 | 0.19 | 0.07 | 0.06 |
| 40–50 | 0.28 | 0.14 | 0.25 | 0.25 | 0.57 | 0.20 | 0.07 | 0.07 |
| 50–60 | 0.27 | 0.17 | 0.26 | 0.26 | 0.68 | 0.30 | 0.12 | 0.12 |
| 60–70 | 0.26 | 0.17 | 0.25 | 0.24 | 0.79 | 0.32 | 0.09 | 0.09 |
| Total | 0.22 | 0.14 | 0.27 | 0.26 | 0.51 | 0.30 | 0.09 | 0.09 |
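The error tables above report an RMSE and an SD for each sensor and range bin. As a reminder of how the two differ, the sketch below computes both from a list of position errors (estimate minus ground truth). It assumes the population form of the standard deviation; the tables do not say whether the authors used the population or the sample form, and the function names are hypothetical.

```python
import math


def bias(errors):
    """Mean error: the systematic offset of the estimates."""
    return sum(errors) / len(errors)


def rmse(errors):
    """Root-mean-square error: penalizes both offset and spread."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def sd(errors):
    """Population standard deviation: the spread around the mean error."""
    mean = bias(errors)
    return math.sqrt(sum((e - mean) ** 2 for e in errors) / len(errors))


# Made-up sample errors in metres, for illustration only.
sample_errors = [0.1, 0.3, 0.2, 0.4]
assert abs(rmse(sample_errors) ** 2 - (bias(sample_errors) ** 2 + sd(sample_errors) ** 2)) < 1e-12
```

With this definition, RMSE² = bias² + SD², so a row where RMSE is clearly larger than SD suggests a systematic offset, while a row where the two are nearly equal suggests the error is mostly random scatter.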

| Scenario Number | Host Vehicle Speed (km/h) | Remote Vehicle Speed (km/h) | Cut-In Distance (m) | Number of Cut-In Maneuvers |
|---|---|---|---|---|
| 1 | 40–45 | 35–40 | 15–20 | 4 |
| 2 | 40–45 | 25–30 | 15–20 | 5 |
| 3 | 40–45 | 15–20 | 15–20 | 8 |
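To give a feel for how the cut-in scenarios differ in urgency, the sketch below computes a simple time-to-collision (TTC) from a gap and two speeds. It assumes both vehicles hold constant speed in the same lane after the cut-in, which is a simplification and not necessarily the risk assessment used in the paper. The function name and the sample values picked from the table ranges are illustrative.

```python
import math


def time_to_collision(gap_m: float, host_kmh: float, remote_kmh: float) -> float:
    """Constant-speed TTC in seconds for a host following a slower remote vehicle.

    Returns math.inf when the host is not closing in on the remote vehicle.
    """
    closing_mps = (host_kmh - remote_kmh) / 3.6  # km/h -> m/s
    if closing_mps <= 0.0:
        return math.inf
    return gap_m / closing_mps


# Example values drawn from the scenario ranges (assumed, not measured):
ttc_scenario_1 = time_to_collision(gap_m=15.0, host_kmh=45.0, remote_kmh=35.0)
ttc_scenario_3 = time_to_collision(gap_m=15.0, host_kmh=45.0, remote_kmh=15.0)
```

Under these assumptions, the larger speed difference in Scenario 3 gives a TTC three times shorter than in Scenario 1 for the same cut-in distance.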

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Baek, M.; Mun, J.; Kim, W.; Choi, D.; Yim, J.; Lee, S. Driving Environment Perception Based on the Fusion of Vehicular Wireless Communications and Automotive Remote Sensors. Sensors 2021, 21, 1860. https://doi.org/10.3390/s21051860
