NT-FDS—A Noise Tolerant Fall Detection System Using Deep Learning on Wearable Devices

Abstract

1. Introduction

2. Related Work

2.1. Fall Detection Using Wearable Sensors

2.2. Deep Learning Techniques for Wearable Sensors Based Fall Detection

2.3. Methods to Deal with Loss of Sensor Data

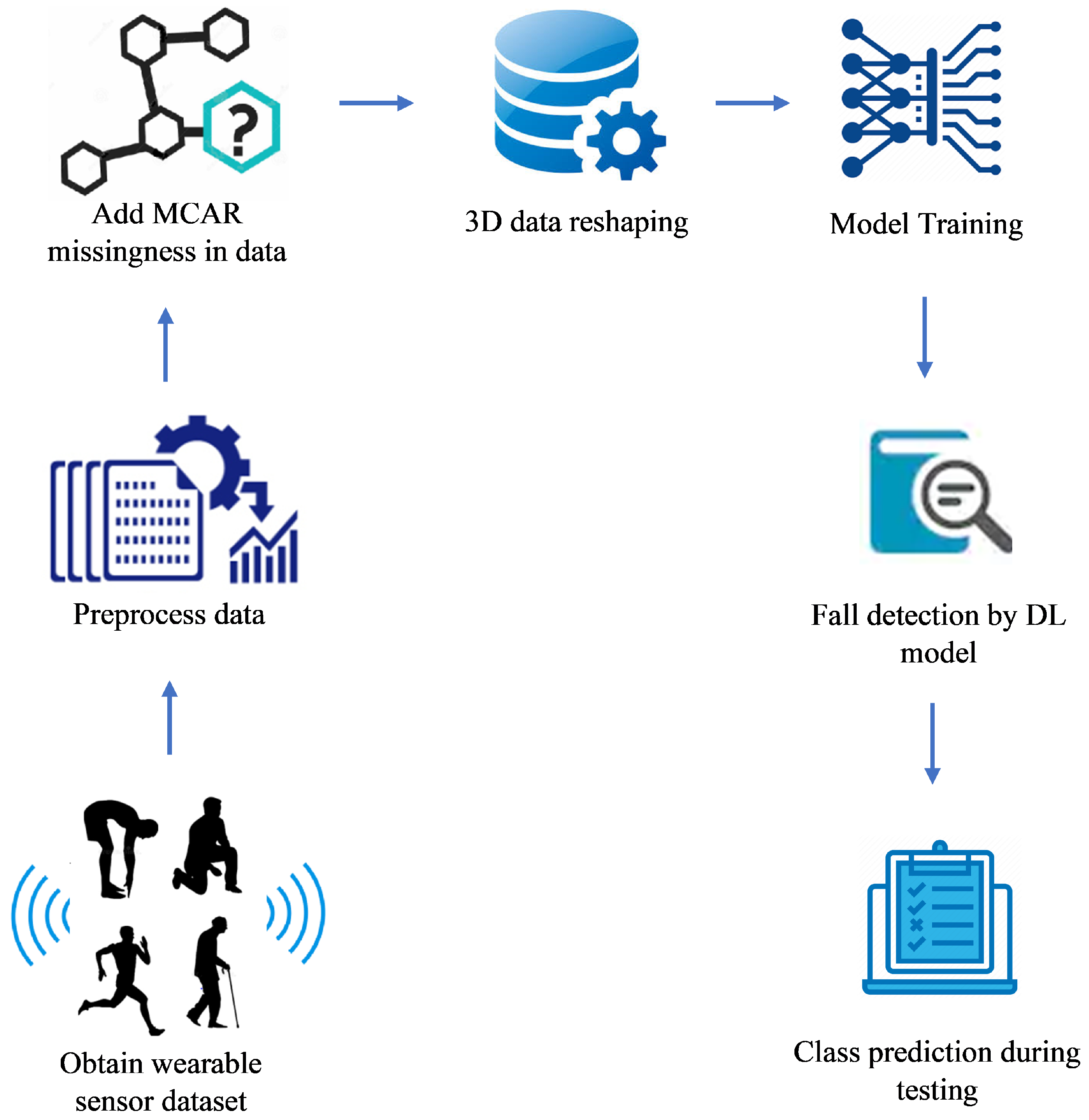

3. Proposed Framework: NT-FDS, a Noise Tolerant Fall Detection System

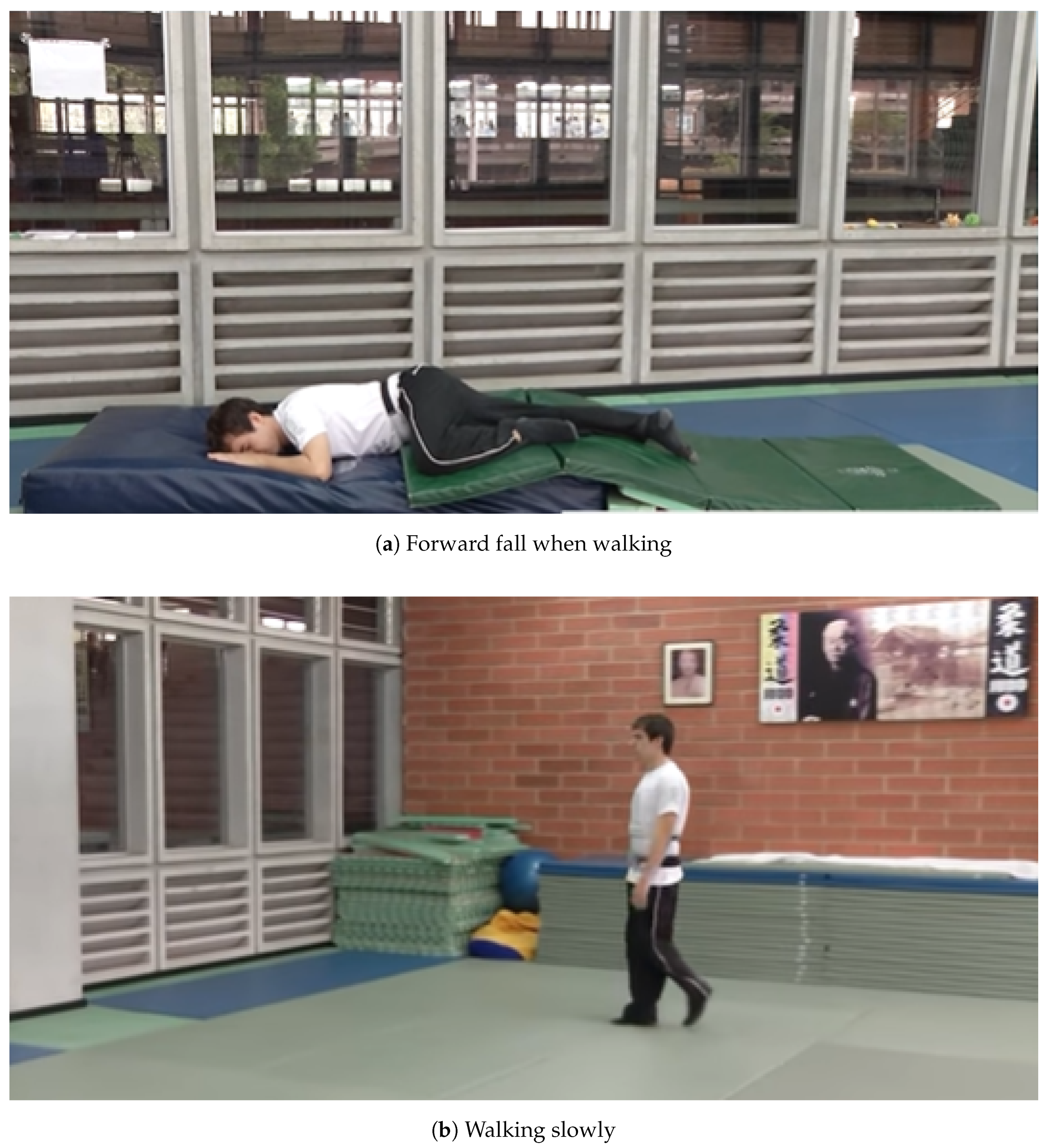

3.1. Datasets

3.2. Preprocessing

- Fall: this class covers the activity intervals in which the subject undergoes an uncontrolled state transition that ends in a harmful state, that is, a fall. All 15 types of falls performed by the participants are grouped under this class label.

- ADL: this class covers the activity intervals in which the subject stays in control of their state and performs tasks without the abrupt state transitions that may lead to a fall. All 19 types of ADLs performed by the participants are grouped under this class label.
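The two-class labeling described above can be sketched as a small mapping from activity codes to class labels. This is a minimal illustration, assuming the SisFall code convention listed in the activity table (D01 to D19 for ADLs, F01 to F15 for falls); the function name `binary_label` is hypothetical and not taken from the paper.

```python
def binary_label(activity_code: str) -> str:
    """Collapse a SisFall-style activity code into 'ADL' or 'Fall'.

    Assumes codes of the form 'Dxx' (ADLs, 01-19) and 'Fxx' (falls, 01-15).
    """
    code = activity_code.strip().upper()
    if len(code) != 3 or not code[1:].isdigit():
        raise ValueError(f"unrecognized activity code: {activity_code!r}")

    prefix, index = code[0], int(code[1:])
    if prefix == "F" and 1 <= index <= 15:
        return "Fall"  # all 15 fall types share one label
    if prefix == "D" and 1 <= index <= 19:
        return "ADL"   # all 19 ADL types share one label
    raise ValueError(f"activity code out of range: {activity_code!r}")


# Example: label a handful of recordings by their activity code.
labels = [binary_label(c) for c in ["D01", "F05", "D19", "F15"]]
```

Rejecting unknown codes instead of defaulting to one class is a design choice of this sketch: a silently mislabeled recording would distort both training and the reported metrics.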

3.3. Missing Values

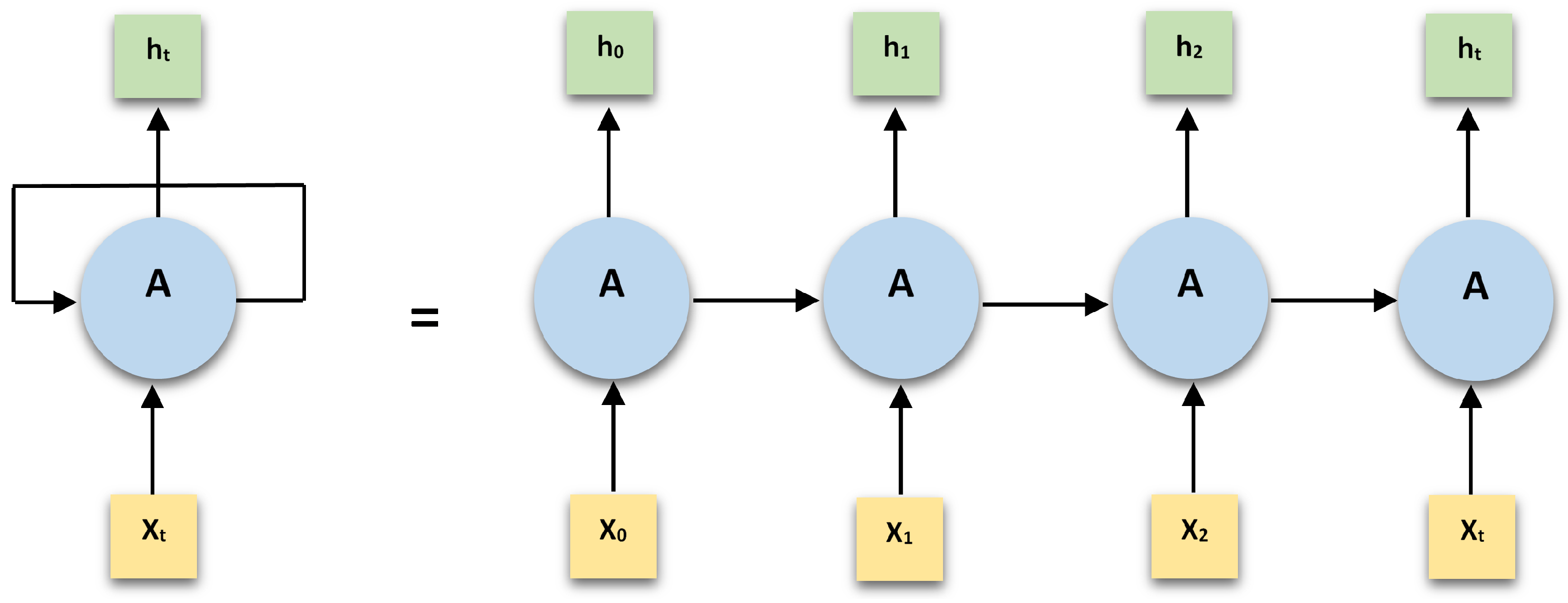
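The results tables report performance at 20%, 30%, and 40% missing values under the MCAR (missing completely at random) assumption. As a rough sketch of how such a condition could be simulated, the snippet below drops each sensor reading independently with a fixed probability. The per-value masking granularity, the `None` placeholder, and the function name `mask_mcar` are assumptions made for illustration; the section does not state how the authors injected missing values.

```python
import random


def mask_mcar(window, missing_rate, seed=0):
    """Return a copy of `window` with values dropped completely at random.

    `window` is a list of rows (one row per time step, one value per channel).
    Each value is replaced by None with probability `missing_rate`,
    independently of its value, position, and channel (the MCAR assumption).
    """
    if not 0.0 <= missing_rate <= 1.0:
        raise ValueError("missing_rate must be between 0 and 1")

    rng = random.Random(seed)  # local generator keeps runs reproducible
    return [
        [None if rng.random() < missing_rate else value for value in row]
        for row in window
    ]


# Example: a 3-step, 3-channel window with roughly 20% of values removed.
window = [[0.12, -0.98, 0.05], [0.10, -1.02, 0.07], [0.95, -0.20, 0.61]]
masked = mask_mcar(window, missing_rate=0.2, seed=1)
```

Because every value is dropped with the same probability, the realized missing fraction in a short window can differ from the nominal rate; it converges to the nominal rate only over many samples.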

3.4. Deep Learning Model

Algorithm 1: Algorithm for Deep Learning based Missing Data Imputation and Fall Detection.

(1) Data Preprocessing:

3.5. Experimental Setup

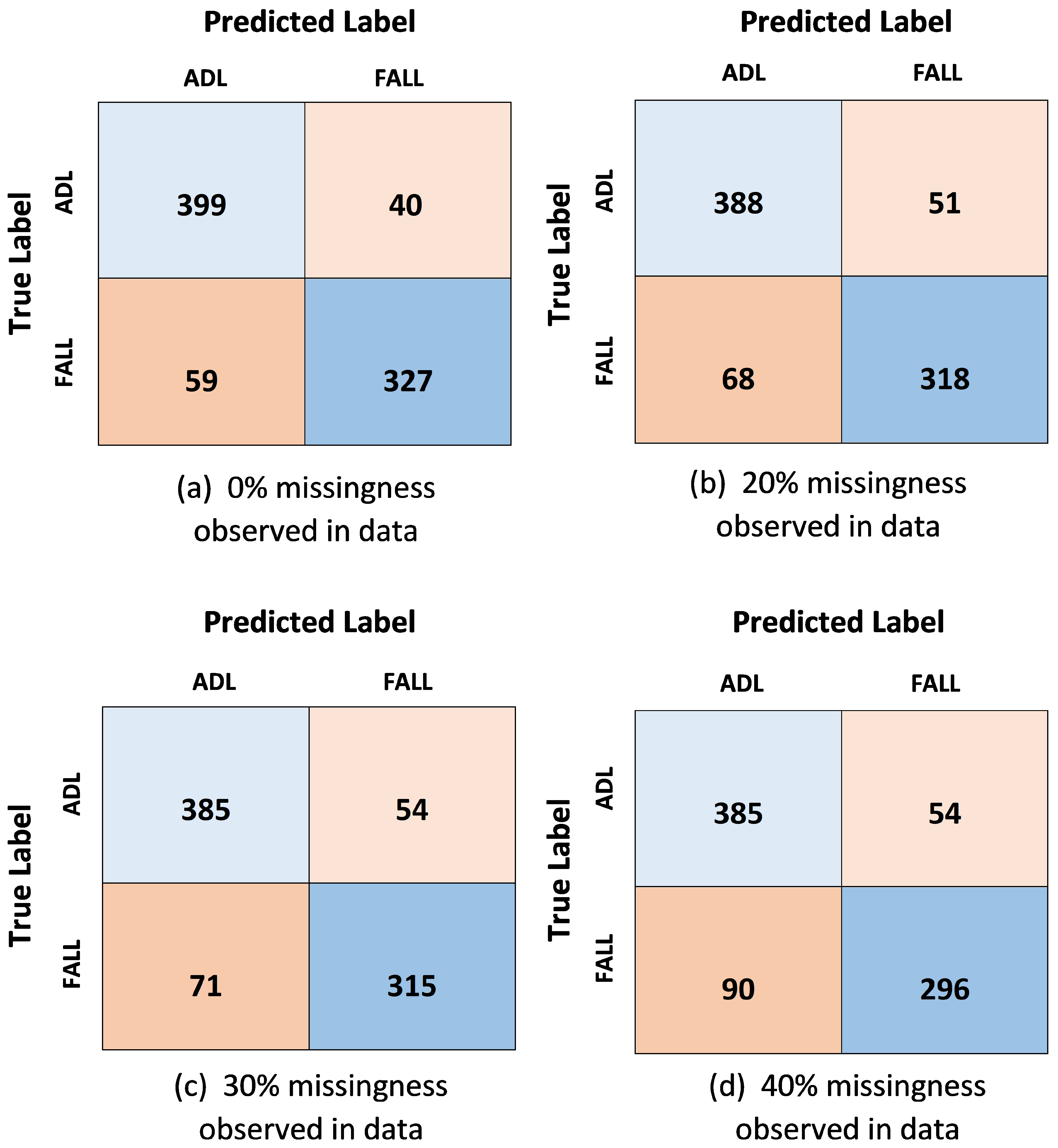

4. Performance Evaluation

- Positive (P): the observation is positive.

- Negative (N): the observation is negative.

- True Positive (TP): the observation is positive and the prediction is positive.

- False Negative (FN): the observation is positive but the prediction is negative.

- True Negative (TN): the observation is negative and the prediction is negative.

- False Positive (FP): the observation is negative but the prediction is positive.
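The measures reported in the results tables (accuracy, error rate, sensitivity, specificity, and precision) follow from these four counts using their standard definitions. The sketch below shows the arithmetic; the function name `classification_metrics` and the example counts are hypothetical and are not values reported by the authors.

```python
def _ratio(numerator, denominator):
    """Divide safely, returning NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def classification_metrics(tp, fn, tn, fp):
    """Compute standard binary-classification measures from confusion counts."""
    total = tp + fn + tn + fp
    accuracy = _ratio(tp + tn, total)
    return {
        "accuracy": accuracy,                 # (TP + TN) / (P + N)
        "error_rate": 1.0 - accuracy,         # (FP + FN) / (P + N)
        "sensitivity": _ratio(tp, tp + fn),   # TP / P, also called recall
        "specificity": _ratio(tn, tn + fp),   # TN / N
        "precision": _ratio(tp, tp + fp),     # TP / (TP + FP)
    }


# Example with illustrative (hypothetical) counts.
metrics = classification_metrics(tp=33, fn=0, tn=42, fp=2)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Note that the tables list the error rate as a fraction while the other measures are percentages, so the fractions returned here would be multiplied by 100 before comparing them with the percentage columns.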

4.1. Multisensor Fusion Approach

4.2. The Single Sensor Approach

4.3. Comparison with Existing State of the Art

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| IoT | Internet of Things |

| IoMT | Internet of Medical Things |

| HAR | Human Activity Recognition |

| FDS | Fall Detection Systems |

| ADL | Activities of Daily Life |

| GRU | Gated Recurrent Units |

| RNN | Recurrent Neural Networks |

| BPTT | Backpropagation through time |

| LSTM | Long Short-Term Memory networks |

| BiLSTM | Bidirectional Long Short-Term Memory |

References

- Atzori, L.; Iera, A.; Morabito, G. Understanding the Internet of Things: Definition, potentials, and societal role of a fast-evolving paradigm. Ad Hoc Netw. 2017, 56, 122–140.

- Available online: https://www.mckinsey.com/business-functions/mckinsey-digital/our-insights/the-internet-of-things-the-value-of-digitizing-the-physical-world (accessed on 10 January 2020).

- Falls. Available online: http://www.who.int/en/news-room/fact-sheets/detail/falls (accessed on 8 September 2019).

- Igual, R.; Medrano, C.; Plaza, I. Challenges, issues and trends in fall detection systems. Biomed. Eng. Online 2013, 12, 66.

- Mastorakis, G.; Makris, D. Fall detection system using Kinect’s infrared sensor. J. Real Time Image Process. 2014, 9, 635–646.

- Gasparrini, S.; Cippitelli, E.; Spinsante, S.; Gambi, E. A Depth-Based Fall Detection System Using a Kinect® Sensor. Sensors 2014, 14, 2756–2775.

- Mirmahboub, B.; Samavi, S.; Karimi, N.; Shirani, S. Automatic monocular system for human fall detection based on variations in silhouette area. IEEE Trans. Biomed. Eng. 2013, 60, 427–436.

- Chua, J.-L.; Chang, Y.C.; Lim, W.K. A simple vision-based fall detection technique for indoor video surveillance. Signal Image Video Process. 2015, 9, 623–633.

- Auvinet, E.; Multon, F.; Saint-Arnaud, A.; Rousseau, J.; Meunier, J. Fall detection with multiple cameras: An occlusion-resistant method based on 3-d silhouette vertical distribution. IEEE Trans. Inf. Technol. Biomed. 2011, 15, 290–300.

- Alwan, M.; Rajendran, P.J.; Kell, S.; Mack, D.; Dalal, S.; Wolfe, M.; Felder, R. A smart and passive floor-vibration based fall detector for elderly. In Proceedings of the International Conference on Information and Communication Technologies, Berkeley, CA, USA, 25–26 May 2006; pp. 1003–1007.

- Popescu, M.; Li, Y.; Skubic, M.; Rantz, M. An Acoustic Fall Detector System that Uses Sound Height Information to Reduce the False Alarm Rate. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2008, 2008, 4628–4631.

- Hirata, Y.; Komatsuda, S.; Kosuge, K. Fall prevention control of passive intelligent walker based on human model. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 1222–1228.

- Sixsmith, A.; Johnson, N.; Whatmore, R. Pyroelectric IR sensor arrays for fall detection in the older population. J. Phys. 2005, 128, 153–160.

- Napolitano, M.; Neppach, C.; Casdorph, V.; Naylor, S.; Innocenti, M.; Silvestri, G. Neural-network-based scheme for sensor failure detection, identification, and accommodation. J. Guid. Control Dyn. 1995, 18, 1280–1286.

- Hussain, S.; Mokhtar, M.; Howe, J.M. Sensor Failure Detection, Identification, and Accommodation Using Fully Connected Cascade Neural Network. IEEE Trans. Ind. Electron. 2015, 62, 1683–1692.

- Jaques, N.; Taylor, S.; Sano, A.; Picard, R. Multimodal autoencoder: A deep learning approach to filling in missing sensor data and enabling better mood prediction. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, USA, 23–26 October 2017; pp. 202–208.

- Zhang, Y.; Thorburn, P.J.; Xiang, W.; Fitch, P. SSIM—A Deep Learning Approach for Recovering Missing Time Series Sensor Data. IEEE Internet Things J. 2019, 6, 6618–6628.

- Gruenwald, L.; Chok, H.; Aboukhamis, M. Using Data Mining to Estimate Missing Sensor Data. In Proceedings of the Seventh IEEE International Conference on Data Mining Workshops (ICDMW 2007), Omaha, NE, USA, 28–31 October 2007; pp. 207–212.

- Hossain, T.; Goto, H.; Rahman, A.M.A.; Inoue, S. A Study on Sensor-based Activity Recognition Having Missing Data. In Proceedings of the 2018 Joint 7th International Conference on Informatics, Electronics & Vision (ICIEV) and 2018 2nd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Kitakyushu, Japan, 25–29 June 2018; pp. 556–561.

- Lai, C.-F.; Chang, S.Y.; Chao, H.C. Detection of cognitive injured body region using multiple triaxial accelerometers for elderly falling. IEEE Sens. J. 2011, 11, 763–770.

- Wang, J.; Zhang, Z.; Li, B.; Lee, S.; Sherratt, R.S. An enhanced fall detection system for elderly person monitoring using consumer home networks. IEEE Trans. Consum. Electron. 2014, 60, 23–29.

- Casilari, E.; Lora-Rivera, R.; García-Lagos, F. A Study on the Application of Convolutional Neural Networks to Fall Detection Evaluated with Multiple Public Datasets. Sensors 2020, 20, 1466.

- Bourke, A.K.; Lyons, G.M. A threshold-based fall-detection algorithm using a bi-axial gyroscope sensor. Med. Eng. Phys. 2008, 30, 84–90.

- Li, Q.; Stankovic, J.A.; Hanson, M.A.; Barth, A.T.; Lach, J.; Zhou, G. Accurate, Fast Fall Detection Using Gyroscopes and Accelerometer-Derived Posture Information. In Proceedings of the 2009 Sixth International Workshop on Wearable and Implantable Body Sensor Networks, Berkeley, CA, USA, 3–5 June 2009; pp. 138–143.

- Nyan, M.N.; Tay, F.; Murugasu, E. A wearable system for pre-impact fall detection. J. Biomech. 2008, 41, 3475–3481.

- Martinez-Villaseñor, L.; Ponce, H. Design and Analysis for Fall Detection System Simplification. J. Vis. Exp. 2020, 158, e60361.

- Haobo, L.; Aman, S.; Francesco, F.; Julien Le, K.; Hadi, H.; Matteo, P.; Enea, C.; Ennio, G.; Susanna, S. Multisensor data fusion for human activities classification and fall detection. In Proceedings of the 2017 IEEE Sensors, Glasgow, UK, 29 October–1 November 2017.

- Pierleoni, P.; Belli, A.; Maurizi, L.; Palma, L.; Pernini, L.; Paniccia, M.; Valenti, S. A wearable fall detector for elderly people based on AHRS and barometric sensor. IEEE Sens. J. 2016, 16, 6733–6744.

- He, Y.; Li, Y. Physical Activity Recognition Utilizing the Built-In Kinematic Sensors of a Smartphone. Int. J. Distrib. Sens. Netw. 2013.

- Taylor, R.M.; Marc, E.C.; Vangelis, M.; Anne, H.H.N.; Coralys, C.R. SmartFall: A Smartwatch-Based Fall Detection System Using Deep Learning. Sensors 2018, 18, 3363.

- Musci, M.; De Martini, D.; Blago, N.; Facchinetti, T.; Piastra, M. Online Fall Detection using Recurrent Neural Networks. arXiv 2018, arXiv:1804.04976.

- Perejón, D.-M.; Civit, B. Wearable Fall Detector Using Recurrent Neural Networks. Sensors 2019, 19, 4885.

- Torti, E.; Fontanella, A.; Musci, M.; Blago, N.; Pau, D.; Leporati, F.; Piastra, M. Embedded Real-Time Fall Detection with Deep Learning on Wearable Devices. In Proceedings of the 2018 21st Euromicro Conference on Digital System Design (DSD), Prague, Czech Republic, 29–31 August 2018; pp. 405–412.

- Wang, G.; Li, Q.; Wang, L.; Zhang, Y.; Liu, Z. Elderly Fall Detection with an Accelerometer Using Lightweight Neural Networks. Electronics 2019, 8, 1354.

- Microsoft Band 2 Smartwatch. Available online: https://www.microsoft.com/en-us/band (accessed on 3 March 2020).

- Notch: Smart Motion Capture for Mobile Devices. Available online: https://wearnotch.com/ (accessed on 10 March 2020).

- Klenk, J.; Schwickert, L.; Palmerini, L.; Mellone, S.; Bourke, A.; Ihlen, E.A.; Kerse, N.; Hauer, K.; Pijnappels, M.; Synofzik, M.; et al. The FARSEEING real-world fall repository: A large-scale collaborative database to collect and share sensor signals from real-world falls. Eur. Rev. Aging Phys. Act. 2016, 13, 8.

- Angela, S.; José, D.L.; Jesús, F.V.-B. SisFall: A Fall and Movement Dataset. Sensors 2017, 17, 198.

- Kwolek, B.; Kepski, M. Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput. Methods Programs Biomed. 2014, 117, 489–501.

- Martínez-Villaseñor, L.; Ponce, H.; Brieva, J.; Moya-Albor, E.; Núñez-Martínez, J.; Peñafort-Asturiano, C. UP-Fall Detection Dataset: A Multimodal Approach. Sensors 2019, 19, 1988.

- Vavoulas, G.; Pediaditis, M.; Spanakis, E.; Tsiknakis, M. The MobiFall dataset: An initial evaluation of fall detection algorithms using smartphones. In Proceedings of the 13th IEEE International Conference on BioInformatics and BioEngineering, Chania, Greece, 10–13 November 2013.

- Medrano, C.; Igual, R.; Plaza, I.; Castro, M. Detecting falls as novelties in acceleration patterns acquired with smartphones. PLoS ONE 2014, 9, 1–9.

- Vilarinho, T.; Farshchian, B.; Bajer, D.G.; Dahl, O.H.; Egge, I.; Hegdal, S.S.; Lones, A.; Slettevold, J.N.; Weggersen, S.M. A combined smartphone and smartwatch fall detection system. In Proceedings of the 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing, Liverpool, UK, 26–28 October 2015; pp. 26–28.

- Frank, K.; Vera, M.J.; Robertson, P.; Pfeifer, T. Bayesian Recognition of Motion Related Activities with Inertial Sensors. In Proceedings of the 2014 IEEE/ION Position, Location and Navigation Symposium (PLANS 2014), Monterey, CA, USA, 5–8 May 2014; pp. 445–446.

- Casilari-Pérez, E.; Santoyo Ramón, J.; Cano-Garcia, J.M. UMAFall: A Multisensor Dataset for the Research on Automatic Fall Detection. Procedia Comput. Sci. 2017, 110, 32–39.

- Schafer, J.L. The Analysis of Incomplete Multivariate Data; Chapman & Hall: London, UK, 1997.

- Goodfellow, I. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 20 March 2020).

- Hochreiter, S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 1998, 6, 107–116.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Schuster, M.; Paliwal, K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- Espinosa, R.; Ponce, H.; Gutiérrez, S.; Martinez-Villaseñor, L.; Brieva, J.; Moya-Albor, E. A vision-based approach for fall detection using multiple cameras and convolutional neural networks: A case study using the UP-Fall detection dataset. Comput. Biol. Med. 2019, 115, 103520.

- Available online: http://sistemic.udea.edu.co/en/research/projects/english-falls/ (accessed on 15 April 2020).

- Available online: https://drive.google.com/file/d/1Y2MSUijPcB7–PcGoAKhGeqI8GxKK0Pm/view (accessed on 15 August 2020).

| Approach Used | Strengths | Weaknesses |

|---|---|---|

| Vision based fall detection | 3D posture and scene analysis, inactivity monitoring, shape modeling, spatio-temporal motion analysis, occlusion sensitivity | Invasion of privacy, interference and noise in data, burdensome syncing of devices, difficult set up of devices |

| Ambience based fall detection | Safeguards privacy, robust occlusion sensitivity | Expensive equipment, detection dependent on short proximity range |

| Wearable sensors based fall detection | Low cost, small size, light weight, low power consumption, portability, ease of use, protection of privacy, robust occlusion | Intrusive approach, sensors to be worn at all times |

| Sensor Fusion based fall detection | Robust measurements, accurate detection, high performance | Difficult set-up of equipment, complex syncing between devices |

| IoT based fall detection | High success rates for precision, accuracy and gain, accessibility with real-time patient monitoring | Threat of data security, compromise of privacy, strict global healthcare regulations |

| Ref. | Dataset | DL Algorithm Used | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|---|

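| [30] | Smartwatch [35] | RNN (GRU) | 85 | 100 | 70 | 77 |

| [30] | Notch [36] | RNN (GRU) | 99 | 80 | 99 | 79 |

| [30] | Farseeing [37] | RNN (GRU) | 99 | 55 | 99 | 37 |

| [31] | SisFall [38] | CNN | 99.94 | 98.71 | 99.96 | NS |

| [31] | SisFall [38] | CAE | 99.91 | 99.2 | 99.93 | NS |

| [31] | SisFall [38] | CAE | 99.81 | 99.07 | 99.83 | NS |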

| [30] | URFD [39] | CNN | 99.86 | 99.72 | 100 | 100 |

| [22] | UP-Fall [40] | CNN | 75.89 | 96.08 | 59.02 | NS |

| Dataset | No. of Subjects | Type of ADLs | Type of Falls | Sensing Device |

|---|---|---|---|---|

| MobiFall [41] | 24 (22 to 42 years old) | 9 | 4 | Smartphone |

| tFall [42] | 10 (20 to 42 years old) | 7 | 8 | Smartphone |

| Project gravity [43] | 3 (ages 22, 26, and 32) | 7 | 12 | Smartphone |

| DLR [44] | 16 (23 to 50 years old) | 6 | 1 | Wearable sensors |

| UMAfall [45] | 17 (18 to 55 years old) | 8 | 3 | Wearable sensors |

| SisFall | 23 (19 to 75 years old) | 19 | 15 | Wearable sensors |

| UP-Fall | 17 (18 to 24 years old) | 6 | 5 | Multi-modal sensors (wearable, ambient and vision) |

| Group | Age | Gender | No. of Subjects | Weight (kg) | Height (m) |

|---|---|---|---|---|---|

| Young Subjects | 19–30 | M | 11 | 59–82 | 1.65–1.84 |

| Young Subjects | 19–30 | F | 12 | 41–64 | 1.50–1.69 |

| Senior Subjects | 60–71 | M | 8 | 56–103 | 1.63–1.71 |

| Senior Subjects | 62–75 | F | 7 | 50–71 | 1.49–1.69 |

| Activity Description | Act Code | Trial Period | Trials |

|---|---|---|---|

| Walking slowly | D01 | 100 s | 1 |

| Walking quickly | D02 | 100 s | 1 |

| Jogging slowly | D03 | 100 s | 1 |

| Jogging quickly | D04 | 100 s | 1 |

| Walking upstairs and downstairs slowly | D05 | 25 s | 5 |

| Walking upstairs and downstairs quickly | D06 | 25 s | 5 |

| Slowly sit in a half height chair, wait a moment, and get up slowly | D07 | 12 s | 5 |

| Quickly sit in a half height chair, wait a moment, and get up quickly | D08 | 12 s | 5 |

| Slowly sit in a low height chair, wait a moment, and get up slowly | D09 | 12 s | 5 |

| Quickly sit in a low height chair, wait a moment, and get up quickly | D10 | 12 s | 5 |

| Sitting a moment, trying to get up, and collapse into a chair | D11 | 12 s | 5 |

| Sitting a moment, lying slowly, wait a moment, and sit again | D12 | 12 s | 5 |

| Sitting a moment, lying quickly, wait a moment, and sit again | D13 | 12 s | 5 |

| Lying on one’s back, changing to a lateral position, waiting a moment, and returning to one’s back | D14 | 12 s | 5 |

| Standing, slowly bending at knees, and getting up | D15 | 12 s | 5 |

| Standing, slowly bending without bending knees, and getting up | D16 | 12 s | 5 |

| Standing, get into a car, remain seated and get out of the car | D17 | 12 s | 5 |

| Stumble while walking | D18 | 12 s | 5 |

| Gently jump without falling (trying to reach a high object) | D19 | 12 s | 5 |

| Falling forward when walking triggered by a slip | F01 | 15 s | 5 |

| Falling backwards when walking triggered by a slip | F02 | 15 s | 5 |

| Falling laterally when walking triggered by a slip | F03 | 15 s | 5 |

| Falling forward when walking triggered by a trip | F04 | 15 s | 5 |

| Falling forward when jogging triggered by a trip | F05 | 15 s | 5 |

| Falling vertically when walking caused by fainting | F06 | 15 s | 5 |

| Falling when walking, using hands on a table to dampen the fall, caused by fainting | F07 | 15 s | 5 |

| Falling forward while trying to get up | F08 | 15 s | 5 |

| Falling laterally while trying to get up | F09 | 15 s | 5 |

| Falling forward while sitting down | F10 | 15 s | 5 |

| Falling backwards while sitting down | F11 | 15 s | 5 |

| Falling laterally while sitting down | F12 | 15 s | 5 |

| Falling forward when sitting, triggered by fainting or falling asleep | F13 | 15 s | 5 |

| Falling backwards when sitting, triggered by fainting or falling asleep | F14 | 15 s | 5 |

| Falling laterally when sitting, triggered by fainting or falling asleep | F15 | 15 s | 5 |

| Age | Gender | No. of Subjects | Weight (kg) | Height (m) |

|---|---|---|---|---|

| 18–24 | M | 9 | 54–99 | 1.62–1.75 |

| 18–24 | F | 8 | 53–71 | 1.57–1.70 |

| Activity Description | Act Code | Trial Period | Trials |

|---|---|---|---|

| Falling forward using hands | 01 | 10 s | 3 |

| Falling forward using knees | 02 | 10 s | 3 |

| Falling backwards | 03 | 10 s | 3 |

| Falling sideward | 04 | 10 s | 3 |

| Falling sitting in empty chair | 05 | 10 s | 3 |

| Walking | 06 | 60 s | 3 |

| Standing | 07 | 60 s | 3 |

| Sitting | 08 | 60 s | 3 |

| Picking up an object | 09 | 10 s | 3 |

| Jumping | 10 | 30 s | 3 |

| Laying | 11 | 60 s | 3 |

| Class | Train | Test |

|---|---|---|

| ADLs | 362 | 33 |

| Falls | 331 | 44 |

| Total | 693 | 77 |

| Class | Train | Test |

|---|---|---|

| ADLs | 4091 | 439 |

| Falls | 3334 | 386 |

| Total | 7425 | 825 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Training Accuracy (%) | Testing Accuracy (%) | Training Loss | Testing Loss |

|---|---|---|---|---|---|

| 100 | 0 | 98.01 | 97.4 | 0.0749 | 0.1198 |

| 80 | 20 | 96.39 | 94.81 | 0.1002 | 0.107 |

| 70 | 30 | 95.85 | 93.5 | 0.1205 | 0.2259 |

| 60 | 40 | 88.81 | 88.31 | 0.2694 | 0.282 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Training Accuracy (%) | Testing Accuracy (%) | Training Loss | Testing Loss |

|---|---|---|---|---|---|

| 100 | 0 | 89.51 | 88 | 0.2488 | 0.2899 |

| 80 | 20 | 86.70 | 85.58 | 0.2917 | 0.3179 |

| 70 | 30 | 85.10 | 84.85 | 0.2935 | 0.3321 |

| 60 | 40 | 83.6 | 82.55 | 0.3001 | 0.358 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Error Rate | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|

| 100 | 0 | 0.0259 | 100 | 95.45 | 94.28 |

| 80 | 20 | 0.0519 | 96.97 | 93.18 | 91.43 |

| 70 | 30 | 0.0649 | 93.93 | 93.18 | 91.17 |

| 60 | 40 | 0.1168 | 81.81 | 93.18 | 90 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Error Rate | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|

| 100 | 0 | 0.12 | 90.88 | 84.71 | 87.11 |

| 80 | 20 | 0.1442 | 88.38 | 82.38 | 85.08 |

| 70 | 30 | 0.1515 | 87.70 | 81.60 | 84.42 |

| 60 | 40 | 0.1745 | 87.70 | 76.68 | 81.05 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Training Accuracy (%) | Testing Accuracy (%) | Training Loss | Testing Loss |

|---|---|---|---|---|---|

| 100 | 0 | 97.65 | 96.1 | 0.084 | 0.1224 |

| 80 | 20 | 94.4 | 93.51 | 0.1571 | 0.196 |

| 70 | 30 | 87.73 | 87.01 | 0.3569 | 0.3765 |

| 60 | 40 | 82.67 | 81.82 | 0.4084 | 0.3827 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Training Accuracy (%) | Testing Accuracy (%) | Training Loss | Testing Loss |

|---|---|---|---|---|---|

| 100 | 0 | 95.3 | 97.21 | 0.1272 | 0.0841 |

| 80 | 20 | 94.11 | 95.88 | 0.1445 | 0.1026 |

| 70 | 30 | 90.79 | 93.82 | 0.2317 | 0.1521 |

| 60 | 40 | 88.32 | 91.39 | 0.282 | 0.2004 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Error Rate | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|

| 100 | 0 | 0.039 | 100 | 93.18 | 91.67 |

| 80 | 20 | 0.065 | 96.97 | 90.90 | 88.89 |

| 70 | 30 | 0.13 | 87.87 | 86.36 | 82.85 |

| 60 | 40 | 0.181 | 84.84 | 79.54 | 75.67 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Error Rate | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|

| 100 | 0 | 0.0278 | 99.54 | 94.56 | 95.41 |

| 80 | 20 | 0.0412 | 99.77 | 91.45 | 92.99 |

| 70 | 30 | 0.0618 | 98.86 | 88.08 | 90.41 |

| 60 | 40 | 0.0860 | 99.77 | 81.86 | 86.22 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Training Accuracy (%) | Testing Accuracy (%) | Training Loss | Testing Loss |

|---|---|---|---|---|---|

| 100 | 0 | 77.62 | 74.03 | 0.4548 | 0.4754 |

| 80 | 20 | 70.04 | 66.23 | 0.5252 | 0.6135 |

| 70 | 30 | 64.80 | 62.34 | 0.6276 | 0.6207 |

| 60 | 40 | 57.76 | 46.75 | 0.6985 | 0.7331 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Training Accuracy (%) | Testing Accuracy (%) | Training Loss | Testing Loss |

|---|---|---|---|---|---|

| 100 | 0 | 79.93 | 78.55 | 0.4374 | 0.489 |

| 80 | 20 | 78.74 | 77.58 | 0.4508 | 0.49 |

| 70 | 30 | 75.88 | 74.79 | 0.4938 | 0.5226 |

| 60 | 40 | 72.73 | 72 | 0.54 | 0.552 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Error Rate | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|

| 100 | 0 | 0.26 | 75.75 | 72.72 | 67.55 |

| 80 | 20 | 0.338 | 81.81 | 54.54 | 57.44 |

| 70 | 30 | 0.377 | 54.54 | 68.18 | 56.25 |

| 60 | 40 | 0.532 | 72.72 | 27.27 | 42.85 |

| Original Data Observed (%) | MCAR Missing Values Observed (%) | Error Rate | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|

| 100 | 0 | 0.2193 | 84.28 | 70.98 | 76.76 |

| 80 | 20 | 0.2242 | 82.68 | 71.76 | 76.90 |

| 70 | 30 | 0.2521 | 82.68 | 65.80 | 73.33 |

| 60 | 40 | 0.28 | 81.32 | 61.4 | 70.55 |

| Ref. | Dataset Used | DL Algorithm Used | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|---|

| [31] | SisFall | RNN (LSTM) | 97.16 (Falls), 94.14 (ADLs) | NS | NS | NS |

| [32] | SisFall | RNN (LSTM) | 95.51 | 92.7 | 94.1 | NS |

| [33] | SisFall | One Layer GRU | 96.4 | 88.2 | 96.3 | 68.2 |

| [33] | SisFall | Two Layer GRU | 96.7 | 87.5 | 96.8 | 68.1 |

| [33] | SisFall | One Layer LSTM | 96.3 | 88.2 | 96.4 | 69.5 |

| [33] | SisFall | Two Layer LSTM | 96.1 | 90.2 | 97.1 | 68.3 |

| Proposed NT-FDS | SisFall | BiLSTM | 97.41 | 100 | 95.45 | 94.28 |

| Ref. | Dataset Used | DL Algorithm Used | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) |

|---|---|---|---|---|---|---|

| [51] | UP-Fall | 2D CNN (vision based approach) | 95.64 | NS | NS | NS |

| [26] | UP-Fall | RF | 95.76 | 66.91 | 99.59 | 70.78 |

| [26] | UP-Fall | SVM | 93.32 | 58.82 | 99.32 | 66.16 |

| [26] | UP-Fall | MLP | 95.48 | 69.39 | 99.56 | 73.04 |

| [26] | UP-Fall | KNN | 94.90 | 64.28 | 99.5 | 69.05 |

| [22] | UP-Fall | CNN | 75.89 | 96.08 | 59.02 | NS |

| Proposed NT-FDS | UP-Fall | BiLSTM | 97.21 | 99.54 | 94.56 | 95.41 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Waheed, M.; Afzal, H.; Mehmood, K. NT-FDS—A Noise Tolerant Fall Detection System Using Deep Learning on Wearable Devices. Sensors 2021, 21, 2006. https://doi.org/10.3390/s21062006
