Assessing the Capability and Potential of LiDAR for Weed Detection

Abstract

1. Introduction

2. Materials and Methods

2.1. Setup of Trials

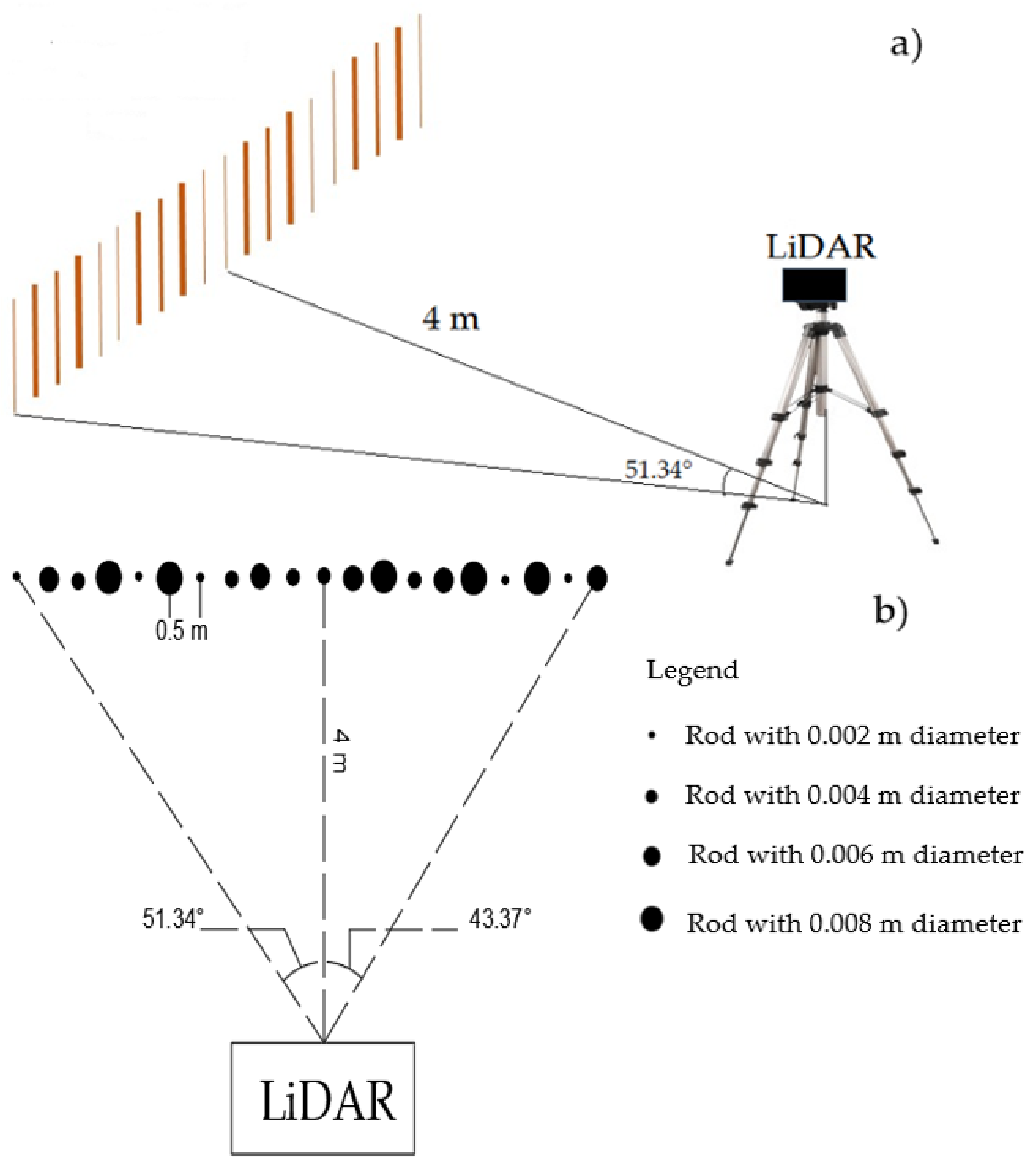

2.1.1. Scanning Lines of Rods

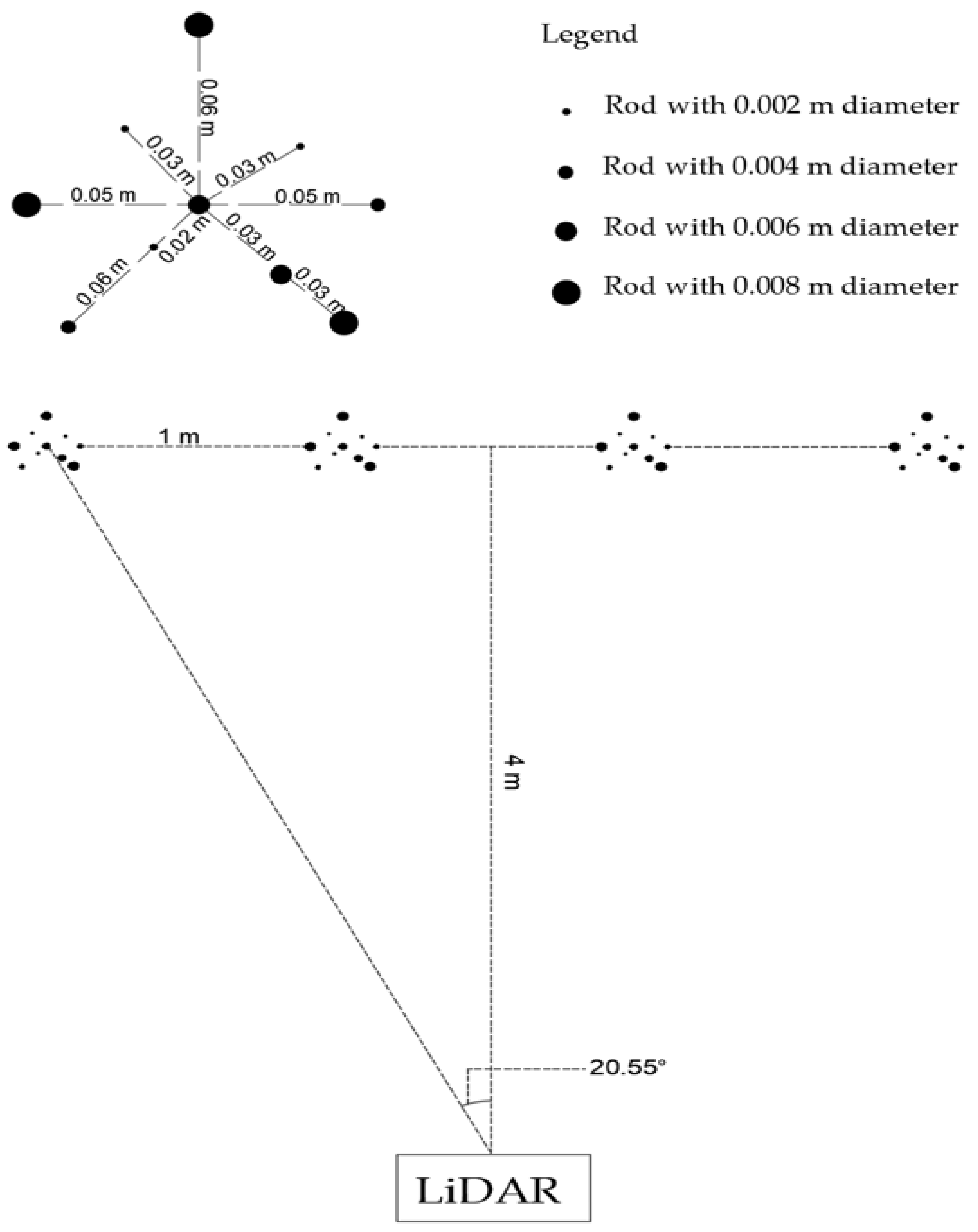

2.1.2. Scanning Groups of Rods

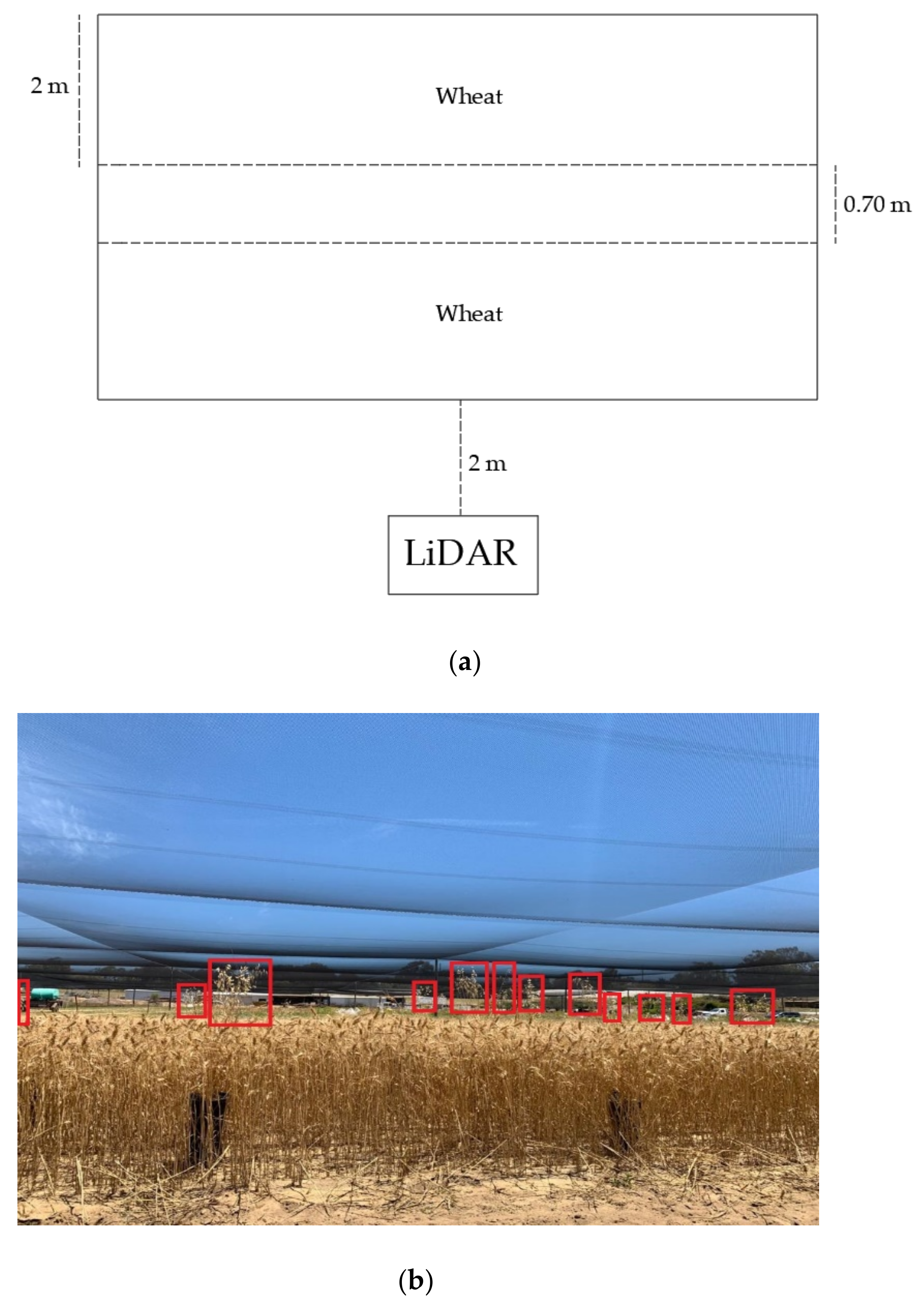

2.1.3. Scanning Weeds in Wheat Plot

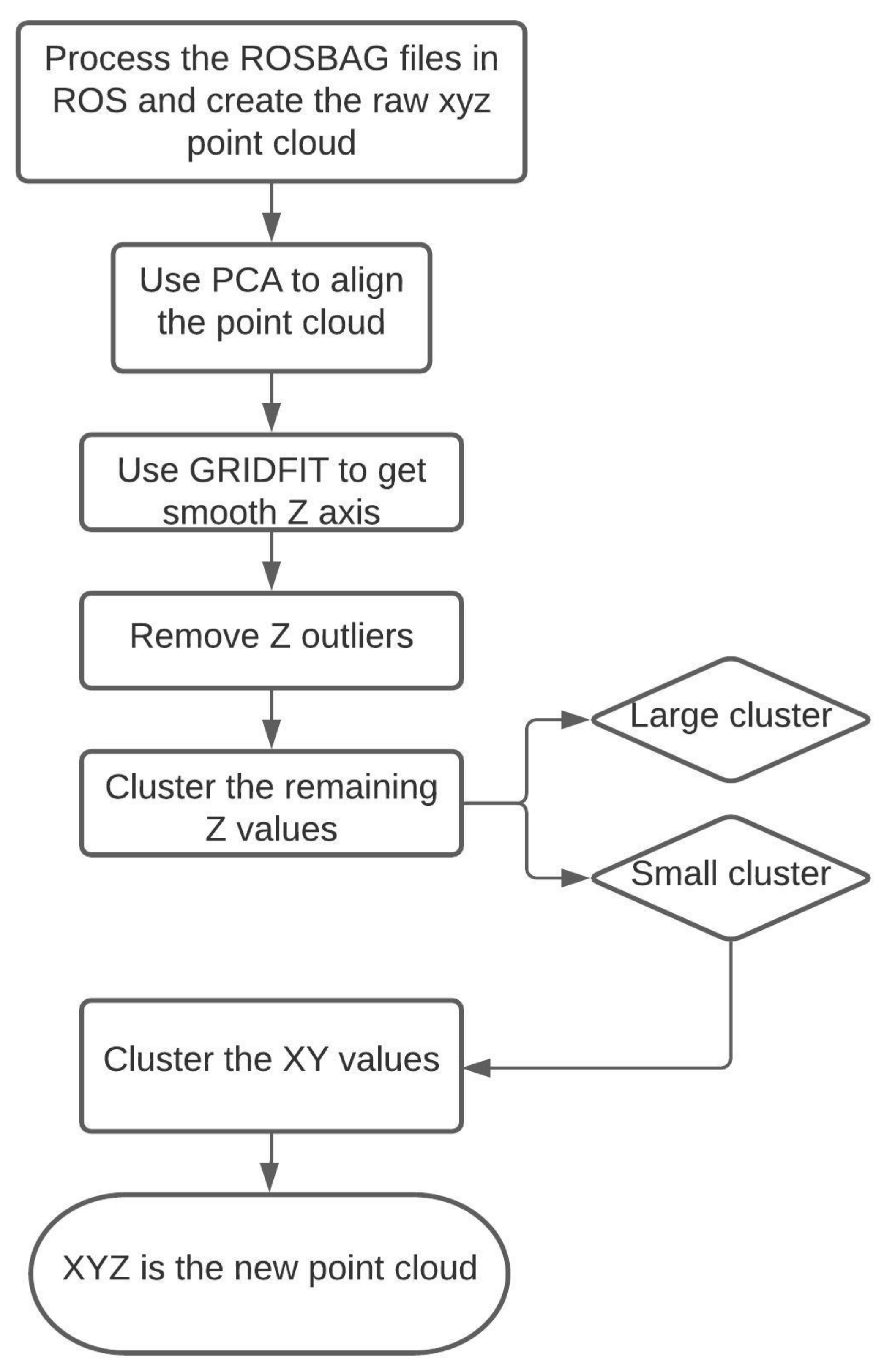

2.2. Data Acquisition and Processing

Estimating Positions of the Targets

2.3. Statistical Analysis

3. Results

3.1. Effect of Distance on LiDAR-Estimated Height of Rods

3.1.1. Scanning Lines of Rods

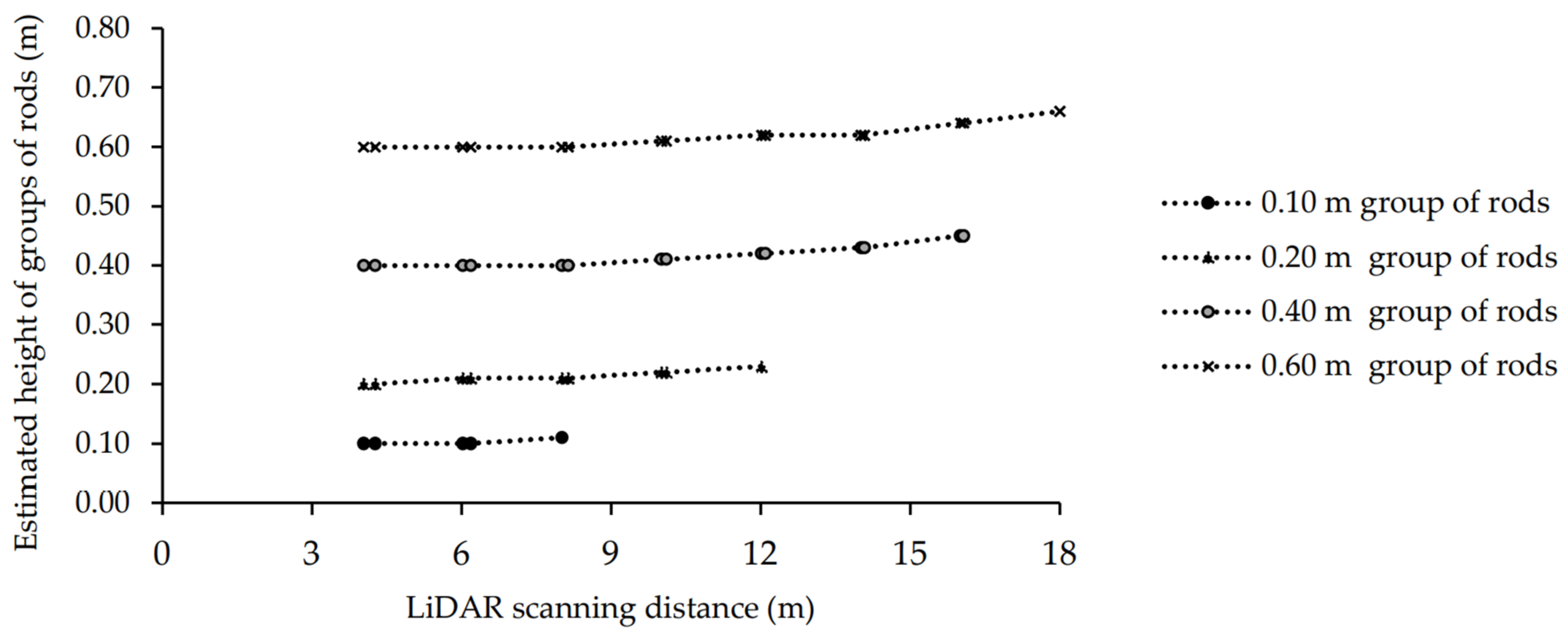

3.1.2. Scanning Groups of Rods

3.2. Estimating the Location of the Targets with the LiDAR

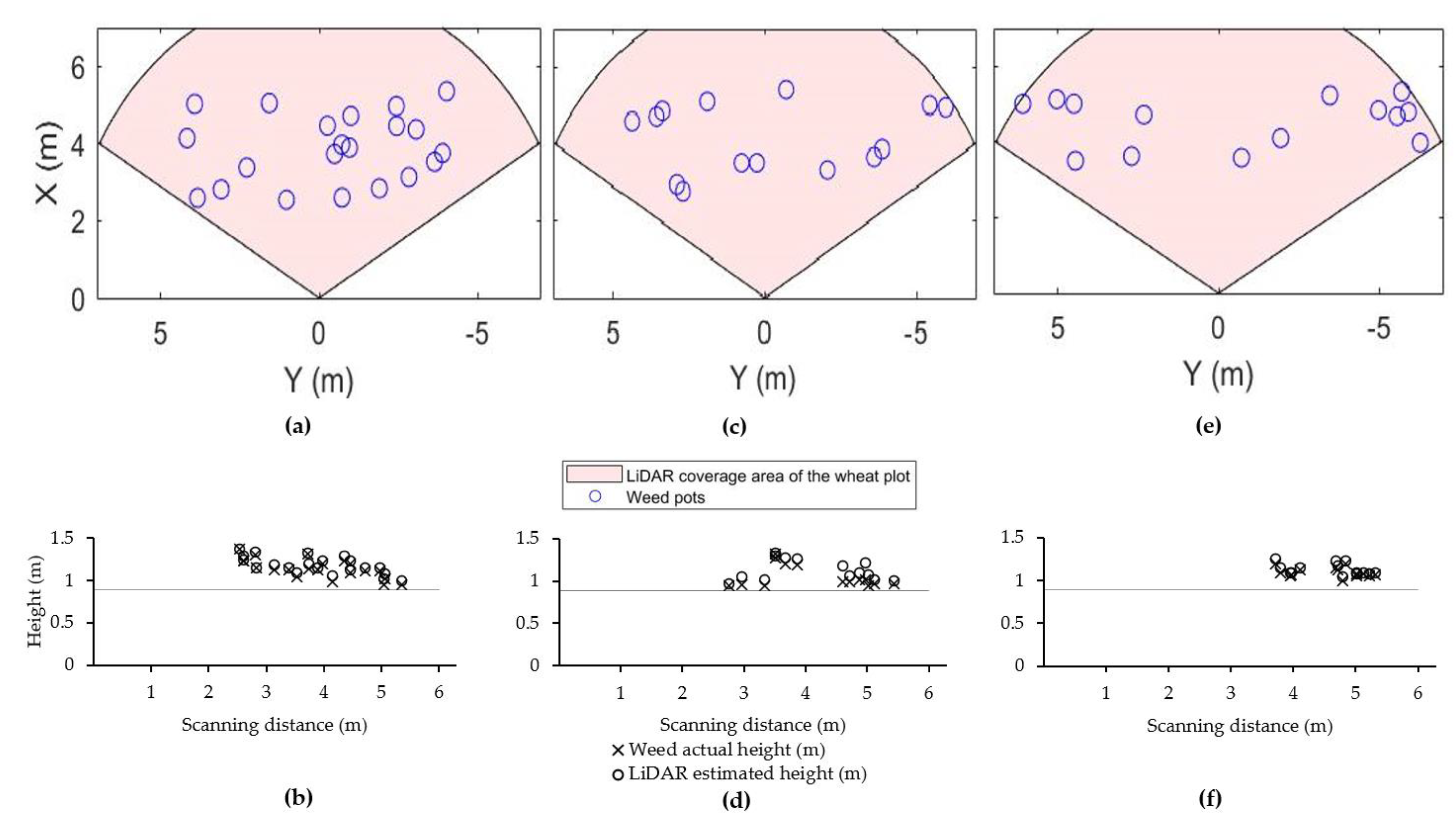

3.3. Scanning Weeds in Wheat Plot

4. Discussion

4.1. Distance and Target Size vs. Target Estimated Height

4.2. Estimating the Target Location

5. Conclusions

Author Contributions

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Scanning Distance (Angle) | Actual Rod Height (m) | Number of Detected Rods | Mean Estimated Height (m) | SD of Estimated Heights (m) | LiDAR Angle to the Outermost Detected Rods (Right to Left) | LiDAR Distance from the Outermost Detected Rod (m) |
|---|---|---|---|---|---|---|
| 4 m (10°) | 0.10 | 20 | 0.12 | ±0.014 | 51.34° to −43.37° | 6.40 |
|  | 0.20 | 20 | 0.20 | ±0.015 | 51.34° to −43.37° | 6.40 |
|  | 0.40 | 20 | 0.40 | ±0.014 | 51.34° to −43.37° | 6.40 |
|  | 0.60 | 20 | 0.60 | ±0.024 | 51.34° to −43.37° | 6.40 |
| 6 m (9°) | 0.10 | 5 | 0.11 | ±0.006 | 9.45° to −9.45° | 6.08 |
|  | 0.20 | 20 | 0.20 | ±0.006 | 39.80° to −36.87° | 7.80 |
|  | 0.40 | 20 | 0.40 | ±0.008 | 39.80° to −36.87° | 7.80 |
|  | 0.60 | 20 | 0.60 | ±0.012 | 39.80° to −36.87° | 7.80 |
| 8 m (7°) | 0.10 | 3 | 0.13 | ±0.009 | 3.58° to −3.58° | 8.01 |
|  | 0.20 | 5 | 0.22 | ±0.012 | 7.12° to −7.12° | 8.06 |
|  | 0.40 | 20 | 0.41 | ±0.008 | 32.00° to −29.36° | 9.43 |
|  | 0.60 | 20 | 0.61 | ±0.010 | 32.00° to −29.36° | 9.43 |
| 10 m (7°) | 0.20 | 1 | 0.23 | N/A | 0° | 10.00 |
|  | 0.40 | 20 | 0.42 | ±0.019 | 26.56° to −24.23° | 11.18 |
|  | 0.60 | 20 | 0.62 | ±0.020 | 26.56° to −24.23° | 11.18 |
| 12 m (7°) | 0.40 | 20 | 0.44 | ±0.019 | 22.64° to −20.55° | 13.00 |
|  | 0.60 | 20 | 0.63 | ±0.015 | 22.64° to −20.55° | 13.00 |
| 14 m (5°) | 0.40 | 7 | 0.47 | ±0.019 | 6.11° to −6.11° | 14.08 |
|  | 0.60 | 20 | 0.65 | ±0.021 | 19.80° to −17.74° | 14.86 |
| 16 m (4°) | 0.60 | 11 | 0.67 | ±0.015 | 8.88° to −8.88° | 16.19 |
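
The two right-hand columns of the table above appear to be linked by plane geometry. If a rod sits on a straight line a perpendicular distance d in front of the scanner and is seen at a horizontal angle θ off the scanner's forward axis, its range is d / cos θ. The minimal sketch below checks this reading against several rows. It is an illustrative interpretation of the table, not a method described by the authors, and it assumes a flat layout with the rod line square to the scanner and the tabulated angle measured horizontally from the forward axis. The function name `slant_range` and the `ROWS` list (transcribed from the table) are hypothetical.

```python
import math


def slant_range(perp_dist_m: float, angle_deg: float) -> float:
    """Range (m) to a target on a straight line perp_dist_m in front of the
    scanner, seen angle_deg off the scanner's forward axis.

    Assumption: flat, planar layout with the target line square to the scanner.
    """
    return perp_dist_m / math.cos(math.radians(angle_deg))


# (distance to rod line in m, right-hand outermost angle in degrees,
#  tabulated distance to the outermost rod in m), transcribed from the table.
ROWS = [
    (4, 51.34, 6.40),
    (6, 39.80, 7.80),
    (8, 32.00, 9.43),
    (10, 26.56, 11.18),
    (12, 22.64, 13.00),
    (14, 19.80, 14.86),
]

for d, angle, tabulated in ROWS:
    computed = slant_range(d, angle)
    print(f"{d:>2} m line: computed {computed:5.2f} m, tabulated {tabulated:5.2f} m")
```

Under this assumption the computed ranges agree with the tabulated ones to within about 0.02 m. This suggests the distance column was derived from the right-hand (larger) angle in each row.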

| Rod Height (m) / Scanning Distance of the LiDAR (m) | 4 | 6 | 8 | 10 | 12 | 14 | 16 | 18 |
|---|---|---|---|---|---|---|---|---|
| 0.10 | 0.99 | 0.74 | 0.10 | 0.07 | 0.00 | 0.00 | 0.00 | 0.00 |
| 0.20 | 0.99 | 0.98 | 0.57 | 0.20 | 0.00 | 0.00 | 0.00 | 0.00 |
| 0.40 | 1.00 | 1.00 | 0.99 | 0.99 | 0.99 | 0.51 | 0.01 | 0.00 |
| 0.60 | 1.00 | 1.00 | 1.00 | 1.00 | 0.99 | 0.99 | 0.68 | 0.2 |

| Scanning Distance (Angle) | Actual Height of Rod Group (m) | Number of Detected Groups of Rods | Mean Estimated Height (m) | SD of Estimated Heights (m) | LiDAR Angle to the Outermost Detected Groups of Rods (Right to Left) | LiDAR Distance from the Outermost Detected Groups of Rods (m) |
|---|---|---|---|---|---|---|
| 4 m (10°) | 0.10 | 4 | 0.10 | ±0.002 | 20.55° to −20.55° | 4.27 |
|  | 0.20 | 4 | 0.20 | ±0.006 | 20.55° to −20.55° | 4.27 |
|  | 0.40 | 4 | 0.40 | ±0.006 | 20.55° to −20.55° | 4.27 |
|  | 0.60 | 4 | 0.60 | ±0.003 | 20.55° to −20.55° | 4.27 |
| 6 m (9°) | 0.10 | 4 | 0.10 | ±0.003 | 14.04° to −14.04° | 6.18 |
|  | 0.20 | 4 | 0.21 | ±0.005 | 14.04° to −14.04° | 6.18 |
|  | 0.40 | 4 | 0.40 | ±0.002 | 14.04° to −14.04° | 6.18 |
|  | 0.60 | 4 | 0.60 | ±0.0008 | 14.04° to −14.04° | 6.18 |
| 8 m (7°) | 0.10 | 2 | 0.11 | ±0.001 | 3.57° to −3.57° | 8.02 |
|  | 0.20 | 4 | 0.21 | ±0.009 | 10.62° to −10.62° | 8.14 |
|  | 0.40 | 4 | 0.40 | ±0.006 | 10.62° to −10.62° | 8.14 |
|  | 0.60 | 4 | 0.60 | ±0.005 | 10.62° to −10.62° | 8.14 |
| 10 m (7°) | 0.20 | 4 | 0.22 | ±0.011 | 8.53° to −8.53° | 10.11 |
|  | 0.40 | 4 | 0.41 | ±0.006 | 8.53° to −8.53° | 10.11 |
|  | 0.60 | 4 | 0.61 | ±0.007 | 8.53° to −8.53° | 10.11 |
| 12 m (7°) | 0.20 | 4 | 0.23 | ±0.009 | 7.12° to −7.12° | 12.09 |
|  | 0.40 | 4 | 0.42 | ±0.010 | 7.12° to −7.12° | 12.09 |
|  | 0.60 | 4 | 0.62 | ±0.010 | 7.12° to −7.12° | 12.09 |
| 14 m (5°) | 0.40 | 4 | 0.43 | ±0.013 | 6.11° to −6.11° | 14.08 |
|  | 0.60 | 4 | 0.62 | ±0.008 | 6.11° to −6.11° | 14.08 |
| 16 m (4°) | 0.40 | 4 | 0.46 | ±0.025 | 5.35° to −5.35° | 16.07 |
|  | 0.60 | 4 | 0.64 | ±0.005 | 5.35° to −5.35° | 16.07 |
| 18 m (4°) | 0.60 | 2 | 0.66 | N/A | 1.59° to −1.59° | 18.06 |
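
Section 3.1 concerns how scanning distance affects the LiDAR-estimated height. The sketch below gives a purely illustrative summary of the tables, not a reproduction of the analysis in Section 2.3. For the 0.60 m rods it computes the overestimate (mean estimated height minus actual height) at each distance. It then fits an ordinary least-squares slope of that overestimate against distance. The values are transcribed from the line and group tables above. The names `LINES`, `GROUPS`, `ols_slope` and `height_bias_slope` are hypothetical.

```python
# Mean LiDAR-estimated heights (m) of the 0.60 m rods, keyed by scanning
# distance (m); transcribed from the line and group tables above.
ACTUAL_HEIGHT_M = 0.60
LINES = {4: 0.60, 6: 0.60, 8: 0.61, 10: 0.62, 12: 0.63, 14: 0.65, 16: 0.67}
GROUPS = {4: 0.60, 6: 0.60, 8: 0.60, 10: 0.61, 12: 0.62, 14: 0.62, 16: 0.64, 18: 0.66}


def ols_slope(xs: list[float], ys: list[float]) -> float:
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def height_bias_slope(table: dict[int, float], actual_m: float) -> float:
    """Slope of (estimated - actual) height against distance, in m per m."""
    distances = sorted(table)
    bias = [table[d] - actual_m for d in distances]
    return ols_slope(distances, bias)


for name, table in (("lines", LINES), ("groups", GROUPS)):
    # Overestimate in cm at each distance, rounded to hide float noise.
    overestimate_cm = {d: round((h - ACTUAL_HEIGHT_M) * 100, 1) for d, h in sorted(table.items())}
    slope_cm_per_m = height_bias_slope(table, ACTUAL_HEIGHT_M) * 100
    print(f"{name}: overestimate (cm) {overestimate_cm}; slope {slope_cm_per_m:.2f} cm/m")
```

With these transcribed values the slope is roughly 0.6 cm of overestimate per metre of distance for rods in lines and 0.4 cm per metre for groups of rods. The overestimate grows faster at the longest distances, so a straight line is only a crude summary.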

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shahbazi, N.; Ashworth, M.B.; Callow, J.N.; Mian, A.; Beckie, H.J.; Speidel, S.; Nicholls, E.; Flower, K.C. Assessing the Capability and Potential of LiDAR for Weed Detection. Sensors 2021, 21, 2328. https://doi.org/10.3390/s21072328
