An Improved Method for Stable Feature Points Selection in Structure-from-Motion Considering Image Semantic and Structural Characteristics

Abstract

1. Introduction

- To address the insufficient consideration of feature point stability and spatial distribution in existing methods, a new feature point selection method that simultaneously considers the semantic and structural characteristics of the image is proposed, improving the stability and reliability of the selected feature points.

- A two-tuple classification model is constructed and a progressive multi-scale selection algorithm is developed. The new model and algorithm preserve feature matching efficiency while extracting more reasonable feature points that better reflect the scene structure, which reduces the average reprojection error and improves the feature point matching rate.

2. Related Work

2.1. Existing Method for Feature Point Selection

- (1) Each image is convolved with Gaussian filters to construct a difference-of-Gaussians (DoG) pyramid. The features of each octave are then extracted as local extrema in the image and across scales, as shown in Figure 1.

- (2) The DoG pyramid is traversed level by level, from top to bottom, to select a small number of feature points from each image for image-pair selection. For example, if the first 100 feature points are selected from each image and two images share 4 or more matches among these points, the two images are deemed to form an image pair.

- (3) A number threshold (Tn) is then set for the feature point matching of an image pair.

- (4) Suppose the levels of the pyramid are l = {l1, l2, …, ln}, where ln is the top level and number(li) is the number of feature points in level li. For each pair of images, feature points are collected from top to bottom until the accumulated number of feature points reaches the threshold Tn. Based on a large number of experiments, Wu [20] suggested that a threshold of 8192 is sufficient for most scenarios.

- (5) The selected feature points are used for feature matching and serve as the input data for the subsequent aerial triangulation process.
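Steps (2) to (4) can be illustrated with a minimal Python sketch. It is not the implementation of Wu [20]. It assumes that whole pyramid levels are added from the top level downward and that collection stops once the accumulated count reaches Tn. The names `select_top_down`, `is_image_pair`, and the `Feature` record are hypothetical and are introduced only for illustration.

```python
from typing import List, Sequence, Tuple

Feature = Tuple[float, float]  # hypothetical keypoint record: (x, y)


def select_top_down(levels: Sequence[Sequence[Feature]],
                    t_n: int = 8192) -> List[Feature]:
    """Collect feature points level by level, from the top level downward.

    levels[0] is the bottom level l1 and levels[-1] is the top level ln.
    Assumption: whole levels are added, and collection stops as soon as
    the accumulated number of points reaches the threshold t_n.
    """
    selected: List[Feature] = []
    for level in reversed(levels):      # top level first
        selected.extend(level)
        if len(selected) >= t_n:        # threshold reached, stop descending
            break
    return selected


def is_image_pair(num_matches: int, min_matches: int = 4) -> bool:
    """Two images form a pair if enough of their top features match."""
    return num_matches >= min_matches
```

With three levels holding 5, 3, and 2 points (bottom to top) and `t_n=4`, the sketch takes the top level (2 points), then the middle level (3 points), and stops with 5 points without touching the bottom level.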

2.2. Limitations of the Existing Method

3. Methodology

3.1. Recognition of Vegetation Areas and Extraction of Line Features

- (1) Recognition of vegetation areas

- (2) Extraction of line features

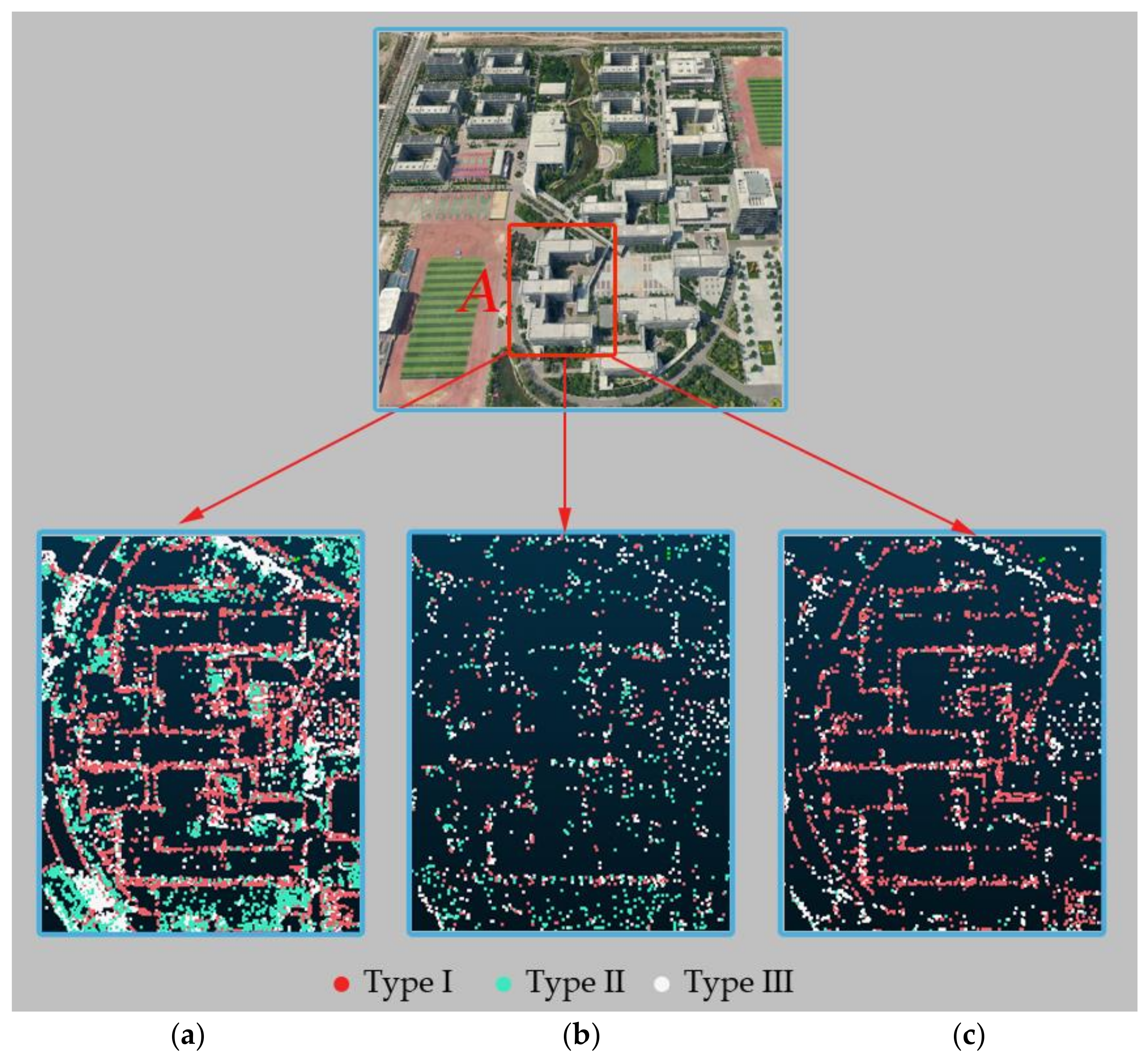

3.2. Construction of the Two-Tuple Classification Model

3.3. Feature Point Progressive Selection Algorithm

4. Results

4.1. Experimental Data and Computational Environment

4.2. Qualitative Evaluation and Analysis

4.3. Quantitative Evaluation and Analysis

5. Discussion and Conclusions

- (1) In terms of sparse point cloud reconstruction, compared with the advanced Wu method, the proposed method increases the number of feature points in areas with obvious structural features, such as boundary objects and building structure lines, and can therefore better characterize the scene structure with a small number of feature points.

- (2) In terms of feature matching time, feature point selection by either the Wu method or the proposed method significantly reduces the matching time, to 3% and 3.5% of the initial feature matching time, respectively.

- (3) In terms of the feature point matching rate, the proposed method reaches 83%, higher than that of the advanced Wu method, which indicates that the feature points selected by the proposed method are more stable and robust.

- (4) In terms of the average reprojection error, for the small experimental area (0.75 km²), the error of the proposed method is 21% lower than that of the Wu method, and for the large experimental area (40 km²), it is 20% lower, indicating that the proposed method achieves a higher aerial triangulation accuracy.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chesley, J.T.; Leier, A.L.; White, S.; Torres, R. Using unmanned aerial vehicles and structure-from-motion photogrammetry to characterize sedimentary outcrops: An example from the Morrison Formation, Utah, USA. Sediment. Geol. 2017, 354, 1–8.

2. Jiang, S.; Jiang, W. Efficient SFM for oblique UAV images: From match pair selection to geometrical verification. Remote Sens. 2018, 10, 1246.

3. Nesbit, P.R.; Hugenholtz, C.H. Enhancing UAV–SFM 3D model accuracy in high-relief landscapes by incorporating oblique images. Remote Sens. 2019, 11, 239.

4. Taddia, Y.; Corbau, C.; Zambello, E.; Pellegrinelli, A. UAVs for Structure-From-Motion Coastal Monitoring: A Case Study to Assess the Evolution of Embryo Dunes over a Two-Year Time Frame in the Po River Delta, Italy. Sensors 2019, 19, 1717.

5. Havlena, M.; Schindler, K. Vocmatch: Efficient multiview correspondence for structure from motion. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014.

6. Cohen, A.; Sattler, T.; Pollefeys, M. Merging the unmatchable: Stitching visually disconnected sfm models. In Proceedings of the IEEE International Conference on Computer Vision, Washington, DC, USA, 7–13 December 2015.

7. Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

8. Eltner, A.; Sofia, G. Structure from motion photogrammetric technique. In Developments in Earth Surface Processes; Elsevier: Cambridge, MA, USA, 2020; Volume 23, pp. 1–24.

9. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

10. Zhang, Y.; Xiong, J.; Hao, L. Photogrammetric processing of low-altitude images acquired by unpiloted aerial vehicles. Photogramm. Record 2011, 26, 190–211.

11. Zhang, Y.; Zhang, Z.; Gong, J. Generalized photogrammetry of spaceborne, airborne and terrestrial multi-source remote sensing datasets. Acta Geod. Cartogr. Sin. 2021, 50, 1–11. (In Chinese with English abstract)

12. Cao, S.; Snavely, N. Learning to match images in large-scale collections. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 259–270.

13. Hartmann, W.; Havlena, M.; Schindler, K. Predicting matchability. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 9–16.

14. Kendall, A.; Grimes, M.; Cipolla, R. Posenet: A convolutional network for real-time 6-dof camera relocalization. In Proceedings of the IEEE International Conference on Computer Vision, Washington, DC, USA, 13–16 December 2015; pp. 2938–2946.

15. Yi, K.M.; Trulls, E.; Ono, Y.; Lepetit, V.; Salzmann, M.; Fua, P. Learning to find good correspondences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2666–2674.

16. Pinto, M.F.; Melo, A.G.; Honório, L.M.; Marcato, A.L.M.; Conceição, A.G.S.; Timotheo, A.O. Deep Learning Applied to Vegetation Identification and Removal Using Multidimensional Aerial Data. Sensors 2020, 20, 6187.

17. Moulon, P.; Monasse, P.; Marlet, R. Global fusion of relative motions for robust, accurate and scalable structure from motion. In Proceedings of the IEEE International Conference on Computer Vision, Washington, DC, USA, 1–8 December 2013; pp. 3248–3255.

18. Shah, R.; Srivastava, V.; Narayanan, P.J. Geometry-aware feature matching for structure from motion applications. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Big Island, Hawaii, 6–9 January 2015; pp. 278–285.

19. Shah, R.; Deshpande, A.; Narayanan, P.J. Multistage SfM: A coarse-to-fine approach for 3d reconstruction. arXiv 2015, arXiv:1512.06235.

20. Wu, C. Towards linear-time incremental structure from motion. In Proceedings of the International Conference on 3D Vision, Seattle, WA, USA, 29 June–1 July 2013.

21. Braun, A.; Borrmann, A. Combining inverse photogrammetry and BIM for automated labeling of construction site images for machine learning. Autom. Constr. 2019, 106, 102879.

22. Nex, F.; Duarte, D.; Steenbeek, A.; Kerle, N. Towards real-time building damage mapping with low-cost UAV solutions. Remote Sens. 2019, 11, 287.

23. Liu, L.; Sun, M.; Ren, X.; Liu, X.; Liu, L.; Zheng, H.; Li, X. Review on methods of 3D reconstruction from UAV image sequences. Acta Sci. Nat. Univ. Pekin. 2017, 53, 1165–1178. (In Chinese with English abstract)

24. Wu, C.; Agarwal, S.; Curless, B.; Seitz, S.M. Multicore bundle adjustment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011.

25. Kwong, I.H.Y.; Fung, T. Tree height mapping and crown delineation using LiDAR, large format aerial photographs, and unmanned aerial vehicle photogrammetry in subtropical urban forest. Int. J. Remote Sens. 2020, 41, 5228–5256.

26. Smith, M.W.; Carrivick, J.L.; Quincey, D.J. Structure from motion photogrammetry in physical geography. Prog. Phys. Geogr. 2016, 40, 247–275.

27. Aktar, R.; Kharismawati, D.E.; Palaniappan, K.; Aliakbarpour, H.; Bunyak, F.; Stapleton, A.E.; Kazic, T. Robust mosaicking of maize fields from aerial imagery. Appl. Plant Sci. 2020, 8, e11387.

28. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. In Readings in Computer Vision; Fischler, M.A., Firschein, O., Eds.; Morgan Kaufmann: Burlington, MA, USA, 1987; pp. 726–740.

29. Luo, Y.; Xu, J.; Yue, W.; Chen, W. Comparison vegetation index in urban green space information extraction. Remote Sens. Technol. Appl. 2006, 21, 212–219. (In Chinese with English abstract)

30. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

31. Torres-Sánchez, J.; Pena, J.M.; de Castro, A.I.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

32. Wang, X.; Wang, M.; Wang, S.; Wu, Y. Extraction of vegetation information from visible unmanned aerial vehicle images. Trans. Chin. Soc. Agric. Eng. 2015, 31, 152–158. (In Chinese with English abstract)

33. Von Gioi, R.G.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A line segment detector. Image Process. On Line 2012, 2, 35–55.

34. Laurini, R.; Thompson, D. Fundamentals of Spatial Information Systems; Academic Press: Cambridge, MA, USA, 1992.

35. Yamaguchi, F. Computer-Aided Geometric Design: A Totally Four-Dimensional Approach; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2002.

36. Li, C.; Liu, X.; Wu, W.; Wu, P. An automated method for the selection of complex railway lines that accounts for multiple feature constraints. Trans. GIS 2019, 23, 1296–1316.

37. Li, C.; Zhang, H.; Wu, P.; Yin, Y.; Liu, S. A complex junction recognition method based on GoogLeNet model. Trans. GIS 2020, 24, 1756–1778.

38. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

39. Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

40. Frahm, J.M.; Fite-Georgel, P.; Gallup, D.; Johnson, T.; Raguram, R.; Wu, C.; Jen, Y.H.; Dunn, E.; Clipp, B.; Lazebnik, S.; et al. Building Rome on a Cloudless Day. In Proceedings of the 11th European Conference on Computer Vision, Crete, Greece, 5 September 2010; Springer: Berlin/Heidelberg, Germany, 2010.

41. Wang, B.; Zhu, Z.; Meng, L. CUDA-based acceleration algorithm of SIFT feature extraction. J. Northeast. Univ. (Nat. Sci.) 2013, 34, 200–204. (In Chinese with English abstract)

| Type | Type I | Type II | Type III |
|---|---|---|---|
| Classification value | 2 | 1 | 0 |
| Meaning | Line-feature and non-vegetation area | Line-feature and vegetation area; non-line-feature and non-vegetation area | Non-line-feature and vegetation area |
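The mapping in the table above can be written as a small Python function. This is a minimal sketch: the function name `classification_value` and its two boolean inputs are hypothetical, and how a feature point is judged to lie on a line feature or inside a vegetation area is not specified here.

```python
def classification_value(on_line_feature: bool, in_vegetation: bool) -> int:
    """Map the two-tuple (line feature, vegetation) to the table's value.

    Type I   (value 2): line feature and non-vegetation area.
    Type II  (value 1): line feature and vegetation area, or
                        non-line feature and non-vegetation area.
    Type III (value 0): non-line feature and vegetation area.
    """
    if on_line_feature and not in_vegetation:
        return 2  # Type I
    if not on_line_feature and in_vegetation:
        return 0  # Type III
    return 1      # Type II: the two mixed cases


# Enumerate all four combinations of the two-tuple.
for line in (True, False):
    for veg in (True, False):
        print(line, veg, classification_value(line, veg))
```

Equivalently, the value is the number of favourable properties a point has (lying on a line feature, lying outside vegetation), which is why the two mixed cases share the value 1.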

| Parameter | VV | SV |
|---|---|---|
| Image size/pixels | 11,674 × 7514 | 8900 × 6650 |
| Pixel size/μm | 6 | 6 |
| Focal length/mm | 120 | 82 |
| Number of images | 2359 | 9436 |
| Altitude/m | 680 | 680 |
| Image overlap/% | 80 | 80 |

| Index | SIFT Method | SURF Method | Wu Method | Proposed Method |
|---|---|---|---|---|
| Matching time | 19 d 21 h 44 min | 6 d 23 h 5 min | 14 h 34 min | 16 h 38 min |
| Matching rate | 68.41% | 71.12% | 80.19% | 83.42% |
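The 3% and 3.5% matching-time ratios quoted in the conclusions can be checked from the matching times in the table above. The sketch below assumes that the "initial feature matching time" is the SIFT matching time; the helper `to_minutes` is hypothetical.

```python
def to_minutes(days: int = 0, hours: int = 0, minutes: int = 0) -> int:
    """Convert a duration given in days, hours, and minutes to minutes."""
    return (days * 24 + hours) * 60 + minutes


# Matching times from the table (assumed baseline: the SIFT method).
sift = to_minutes(days=19, hours=21, minutes=44)
wu = to_minutes(hours=14, minutes=34)
proposed = to_minutes(hours=16, minutes=38)

# Share of the baseline matching time, in percent.
wu_share = round(100 * wu / sift, 1)
proposed_share = round(100 * proposed / sift, 1)
print(wu_share, proposed_share)  # 3.0 3.5
```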

| Experimental Area | Method | Average Reprojection Error (Pixels) |
|---|---|---|
| 0.75 km² (248 images) | Wu method | 0.33 |
| 0.75 km² (248 images) | Proposed method | 0.26 |
| 40 km² (11,795 images) | Wu method | 0.34 |
| 40 km² (11,795 images) | Proposed method | 0.28 |
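The relative error reduction can be recomputed from the rounded values in the table above. This is a minimal sketch with a hypothetical helper, `relative_reduction`. Because the table reports errors to two decimals, the result for the large area (about 17.6%) differs from the 20% quoted in the conclusions, which may have been derived from unrounded errors.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Relative reduction of `improved` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


# Average reprojection errors (pixels) as printed in the table.
small_area = round(relative_reduction(0.33, 0.26), 1)  # 0.75 km2 area
large_area = round(relative_reduction(0.34, 0.28), 1)  # 40 km2 area
print(small_area, large_area)  # 21.2 17.6
```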

| Experimental Area | Method | Average Reprojection Error (Pixels) |
|---|---|---|
| Image pair A (vegetation area accounts for 82%) | Wu method | 0.38 |
| Image pair A (vegetation area accounts for 82%) | Proposed method | 0.36 |
| Image pair B (vegetation area accounts for 51%) | Wu method | 0.34 |
| Image pair B (vegetation area accounts for 51%) | Proposed method | 0.29 |
| Image pair C (vegetation area accounts for 25%) | Wu method | 0.33 |
| Image pair C (vegetation area accounts for 25%) | Proposed method | 0.26 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, F.; Liu, Z.; Zhu, H.; Wu, P.; Li, C. An Improved Method for Stable Feature Points Selection in Structure-from-Motion Considering Image Semantic and Structural Characteristics. Sensors 2021, 21, 2416. https://doi.org/10.3390/s21072416
