Controlling an Industrial Robot Using a Graphic Tablet in Offline and Online Mode

Abstract

1. Introduction

- to allow easy and intuitive control of an industrial robot by operators without specialized training,

- to program the movement of the industrial robot in an easy and intuitive (task-based) way by workers without specialized education,

- to make corrections in the control programs without stopping the robot,

- to reduce the number of cables by using wireless touchscreen tablets,

- to develop and modify the robot’s control programs using a digital twin in offline mode, which increases the programmer’s safety, reduces costs, and speeds up the software development process.

2. Related Work

- online (non-text-based),

- offline (text-based, including graphical),

- hybrid (a combination of both methods).

3. Materials and Methods

- connection to a selected robot (connected to a local Ethernet network),

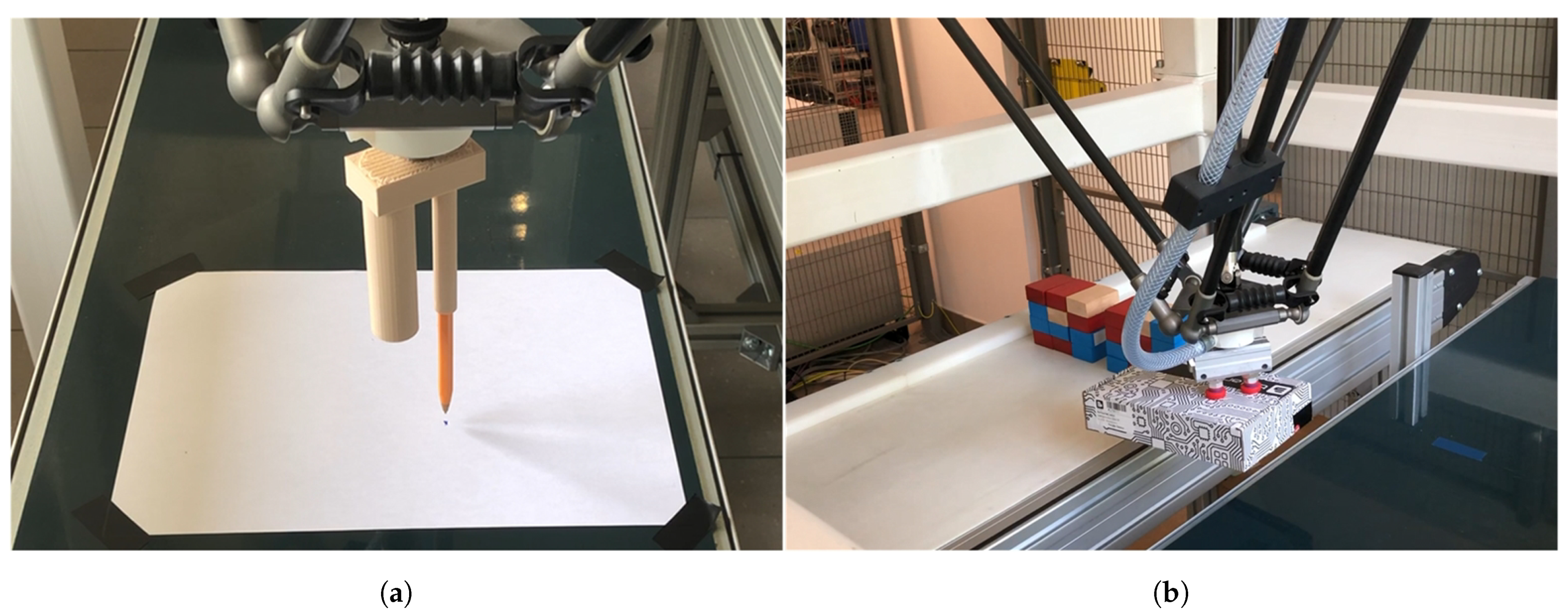

- switching the robot between two modes: transferring workpieces and drawing the corresponding pattern,

- controlling the working parameters of the robot and its start-up.

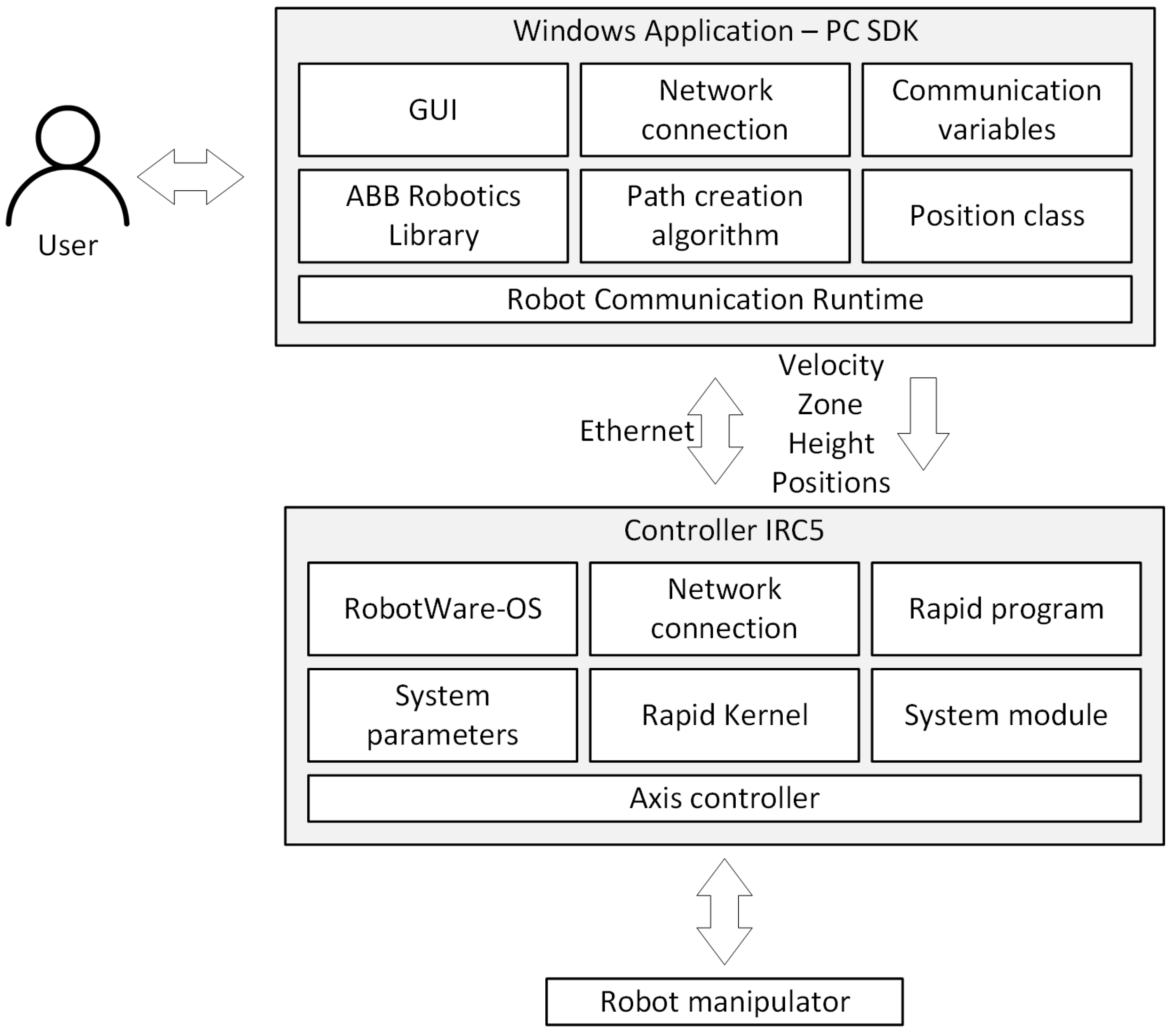

3.1. System Design

- the StylusDown event is used when the pen is placed on the page,

- the StylusMove event is used while the pen is moving and drawing the pattern,

- the StylusUp event is used when the pen is lifted from the page.
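The three pen events above can be read as a small state machine: a stroke starts on pen down, grows on pen move, and is closed on pen up. The following is a minimal sketch of that idea only. The class `StrokeRecorder` and its method names are hypothetical and are not taken from the application described here.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class StrokeRecorder:
    """Hypothetical helper that groups pen events into strokes."""
    strokes: List[List[Point]] = field(default_factory=list)
    _current: List[Point] = field(default_factory=list)
    _pen_down: bool = False

    def on_stylus_down(self, x: float, y: float) -> None:
        # Pen touches the page: start a new stroke at this point.
        self._pen_down = True
        self._current = [(x, y)]

    def on_stylus_move(self, x: float, y: float) -> None:
        # Moves are recorded only while the pen is on the page.
        if self._pen_down:
            self._current.append((x, y))

    def on_stylus_up(self, x: float, y: float) -> None:
        # Pen lifted: close the stroke and store it.
        if not self._pen_down:
            return
        self._current.append((x, y))
        self.strokes.append(self._current)
        self._current = []
        self._pen_down = False


recorder = StrokeRecorder()
recorder.on_stylus_move(0.0, 0.0)   # ignored, pen is not down yet
recorder.on_stylus_down(1.0, 1.0)
recorder.on_stylus_move(2.0, 2.0)
recorder.on_stylus_up(3.0, 3.0)
print(recorder.strokes)
```

Keeping the pen state in one place means that stray move events, which arrive while the pen hovers, never end up in the drawn pattern.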

- Connect to a selected controller (real or virtual) and take control of it.

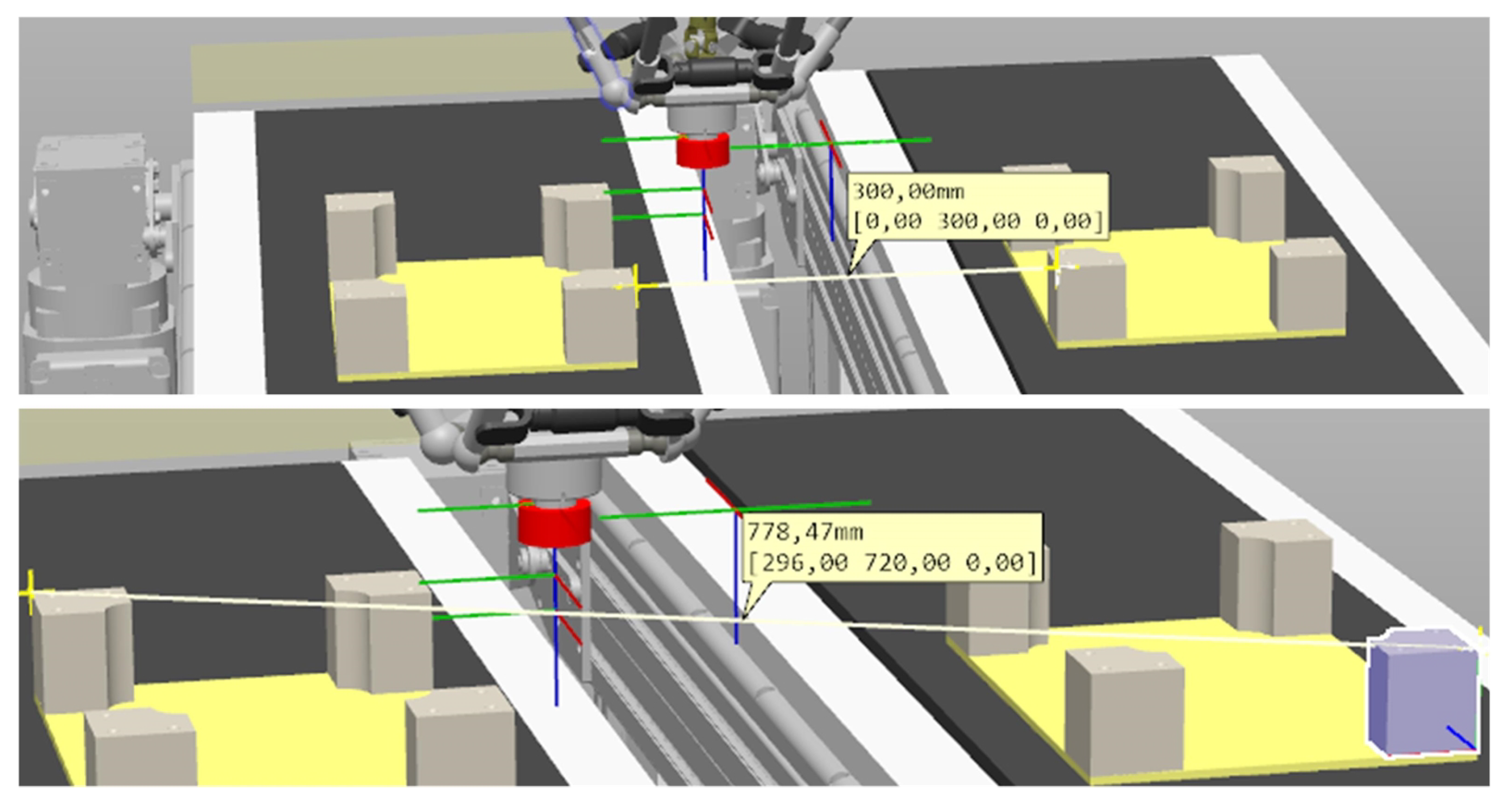

- Cooperate with a program developed in the RAPID language that controls an industrial robot. The main task of the application is to change the robot’s trajectory: both the positions of the individual points forming the trajectory and the robot’s motion parameters (TCP speeds, zones of passage through points, heights of objects).

- Select the operation mode. Two operation modes have been prepared (Figure 4 (3)):

  - Path: used to generate complex motion trajectories.

  - Pick and Place: used to modify trajectories carried out during the transfer of products.

- Define selected parameters of the robot’s movement (TCP speeds, zones of passage through points, heights of objects; Figure 4 (2)).

- Define the sampling frequency (Figure 4 (5)). The application allows continuous paths to be created, so the user can draw complex trajectories of different shapes.

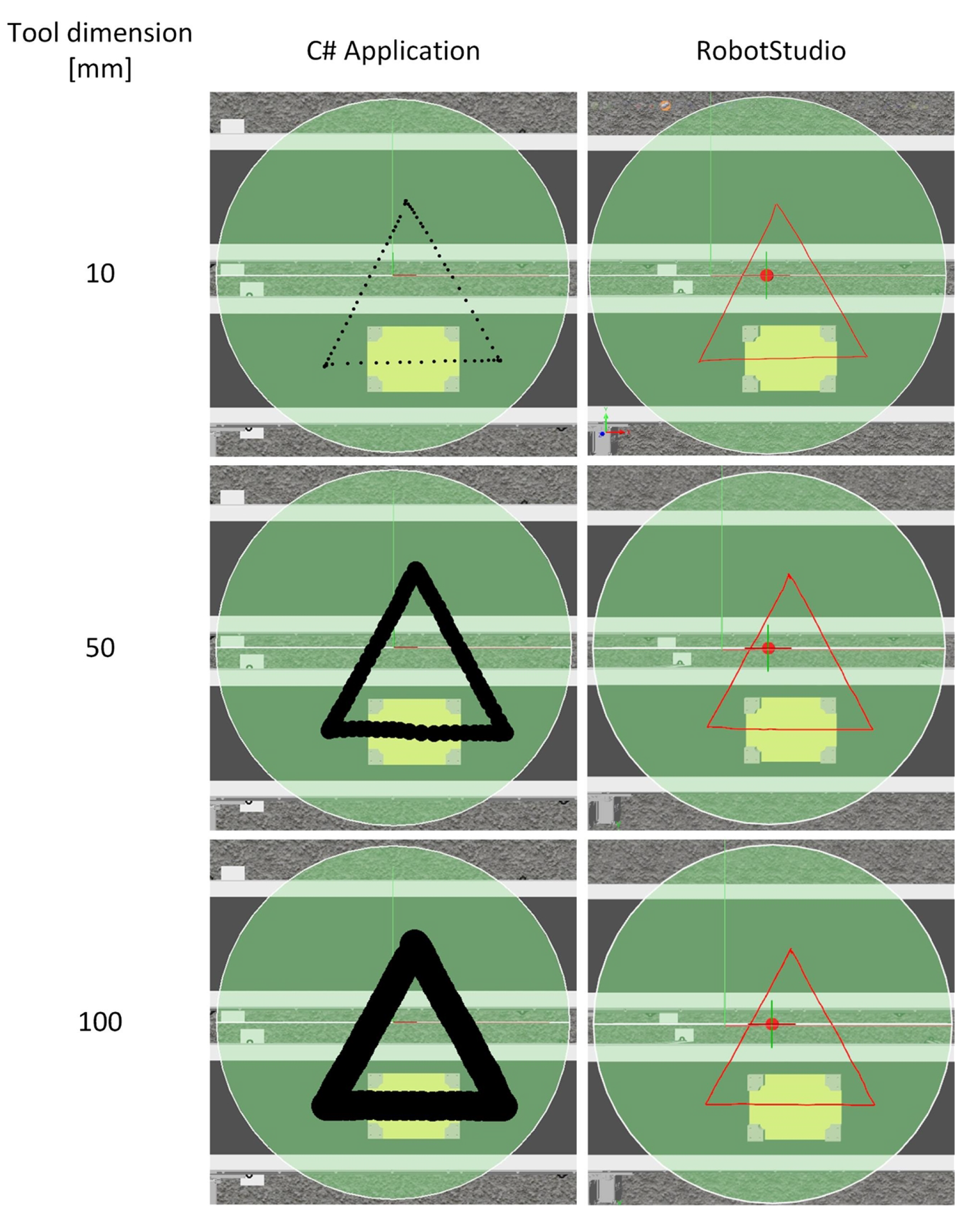
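The effect of the sampling frequency on a drawn path can be illustrated with a short sketch. It assumes that the frequency is the rate at which pen positions are stored as path points, which the text does not state explicitly. The function `sample_stroke` and the `(t, x, y)` input format are hypothetical.

```python
from typing import List, Sequence, Tuple

TimedPoint = Tuple[float, float, float]  # (time in seconds, x, y)


def sample_stroke(points: Sequence[TimedPoint],
                  frequency_hz: float) -> List[Tuple[float, float]]:
    """Keep at most one point per sampling period, plus the final point."""
    if frequency_hz <= 0:
        raise ValueError("frequency_hz must be positive")
    period = 1.0 / frequency_hz
    kept: List[Tuple[float, float]] = []
    next_time = None
    for t, x, y in points:
        # Small tolerance so rounding in the timestamps does not drop a sample.
        if next_time is None or t >= next_time - 1e-9:
            kept.append((x, y))
            next_time = t + period
    if points:
        last = (points[-1][1], points[-1][2])
        if kept[-1] != last:
            kept.append(last)  # always keep the end of the stroke
    return kept


# A pen moving along x for one second, reported 100 times per second.
raw = [(i / 100, float(i), 0.0) for i in range(101)]
print(len(sample_stroke(raw, 10)))   # fewer points at 10 Hz
print(len(sample_stroke(raw, 33)))   # more points at 33 Hz
```

A lower frequency yields fewer, more widely spaced path points, and a higher frequency follows the drawn shape more closely.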

- Define the tool dimensions (Figure 4 (6)). When generating a path, the operator can define the dimensions of the robot tool (e.g., a gripper) as the diameter of the inserted points. This makes it possible to generate trajectories between obstacles located at the workstation and helps prevent collisions during the process. The operator controls how obstacles are avoided while creating the trajectory.

- Select the station layout (Figure 4 (4,9)) and import it into the application. The user can select a station view with the robot’s working range marked (Figure 4 (4c)), which allows trajectories to be generated (Figure 4 (4f)) within the area available to the robot. If the robot is started with an uploaded trajectory that lies completely or partially outside its working area, the robot stops and reports an error that the set position cannot be reached.

- Save the generated path so that it can be reused (Figure 4 (7)).

- Delete the path if it needs to be modified (Figure 4 (8)).

- Start and stop the robot’s control program (selected in the Available controllers window) (Figure 4 (10,11)).

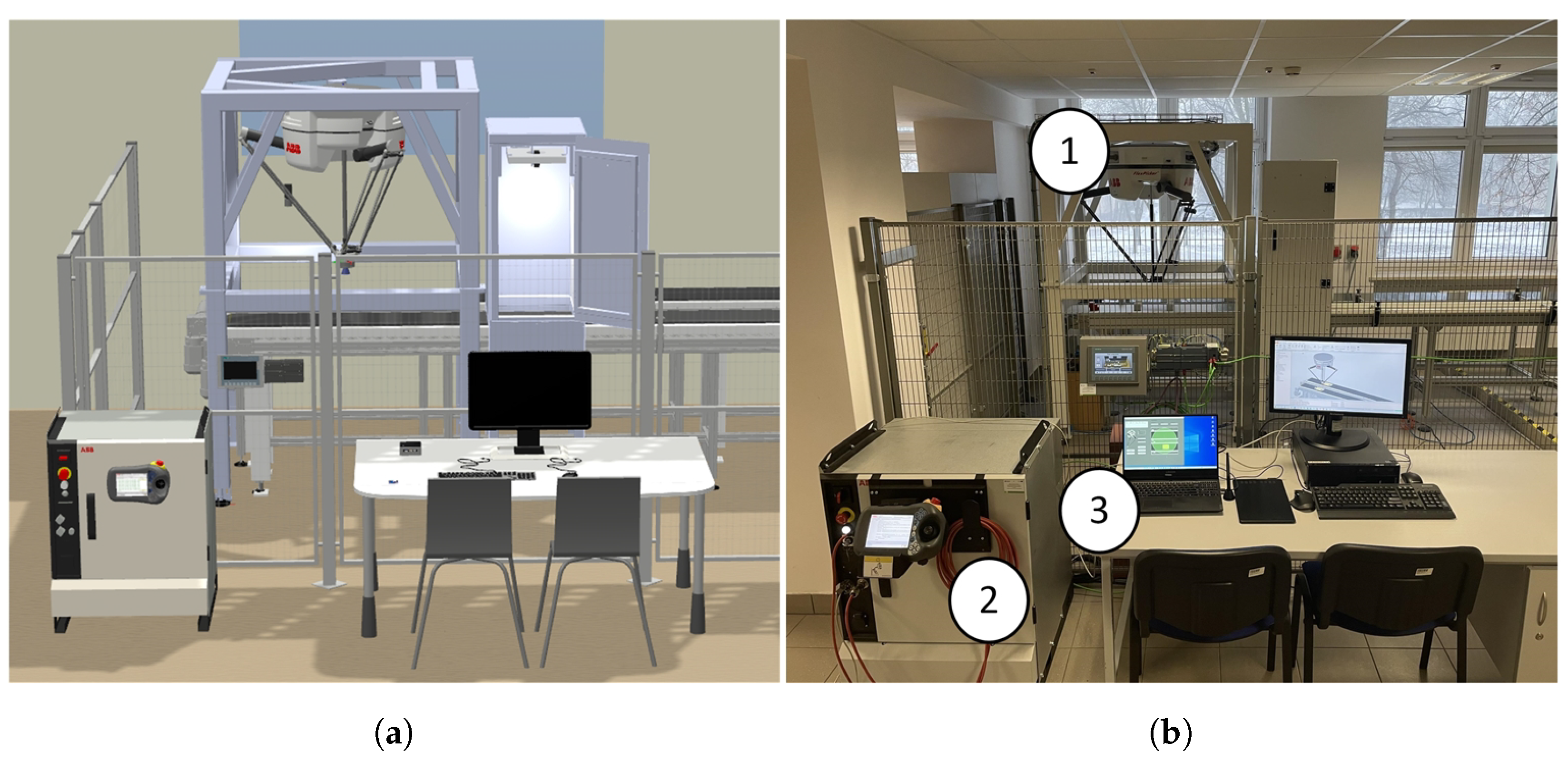
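In the application, the operator judges by eye whether a path drawn with a given tool diameter passes between obstacles. The same judgement could be expressed numerically, as in the following minimal sketch. It assumes circular obstacles given as `(cx, cy, radius)` and a path made of straight segments. Both functions are hypothetical and are not part of the described application.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # (centre x, centre y, radius), in mm


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Projection of p onto the segment, clamped to its end points.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def path_is_clear(path: Sequence[Point], tool_diameter: float,
                  obstacles: Sequence[Circle]) -> bool:
    """True if a tool of the given diameter fits past every obstacle."""
    tool_radius = tool_diameter / 2.0
    for a, b in zip(path, path[1:]):
        for cx, cy, radius in obstacles:
            if point_segment_distance((cx, cy), a, b) < radius + tool_radius:
                return False
    return True


path = [(0.0, 0.0), (100.0, 0.0)]
obstacles = [(50.0, 30.0, 10.0)]
print(path_is_clear(path, 10.0, obstacles))    # small tool
print(path_is_clear(path, 100.0, obstacles))   # large tool
```

Treating the tool as a disc of the chosen diameter swept along the path mirrors how the diameter of the inserted points is drawn on the station layout.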

3.2. Test Stand

- ABB IRB360 FlexPicker robot with IRC5 controller,

- graphic tablet,

- personal computer.

- —planar distance from {0} to near base side,

- L—upper legs length,

- —planar distance from {P} to a platform vertex,

- —platform equilateral triangle side,

- —planar distance from {P} to near platform side.
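Two kinds of distance appear in the list above: from a frame origin to the near side of a triangle, and from a frame origin to a vertex. For an equilateral triangle, both follow from the side length alone, provided the origin lies at the triangle’s centre. The sketch below assumes exactly that. The function name is hypothetical, and the side length in the example is a made-up value, not a dimension of the IRB360.

```python
import math
from typing import Tuple


def centre_distances(side: float) -> Tuple[float, float]:
    """Return (distance to near side, distance to vertex) measured from
    the centre of an equilateral triangle with the given side length."""
    to_side = math.sqrt(3.0) / 6.0 * side     # inradius
    to_vertex = math.sqrt(3.0) / 3.0 * side   # circumradius
    return to_side, to_vertex


# Illustrative side length only (not a real robot dimension).
to_side, to_vertex = centre_distances(60.0)
print(round(to_side, 3), round(to_vertex, 3))
```

The distance to a vertex is always twice the distance to the near side, which gives a quick consistency check on any set of measured dimensions.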

4. Evaluation

4.1. Test 1

- Velocity 200 mm/s,

- Zone 0 mm,

- Height of object 0 mm,

- Mode Path,

- Sampling frequency 16 Hz,

- Tool dimension 10–100 mm.

4.2. Test 2

- Velocity 200 mm/s,

- Zone 0 mm,

- Height of object 0 mm,

- Mode Path,

- Sampling frequency 1–33 Hz,

- Tool dimension 10 mm.

4.3. Test 3

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Sample | Frequency [Hz] | Start x [mm] | Start y [mm] | Start z [mm] | End x [mm] | End y [mm] | End z [mm] | Average Time [s] |
|---|---|---|---|---|---|---|---|---|
| 1 | 33 | 199 | −343 | 0 | −89 | 357 | 0 | 4.036 |
| 2 | 20 | 209 | −339 | 0 | −85 | 353 | 0 | 4.132 |
| 3 | 10 | 205 | −343 | 0 | −87 | 357 | 0 | 4.02 |
| 4 | 7 | 203 | −343 | 0 | −87 | 361 | 0 | 3.912 |
| 5 | 5 | 207 | −343 | 0 | −85 | 361 | 0 | 3.908 |
| 6 | 4 | 201 | −337 | 0 | −89 | 367 | 0 | 3.904 |
| 7 | 3 | 203 | −341 | 0 | −95 | 367 | 0 | 3.924 |
| 8 | 2 | 197 | −339 | 0 | −87 | 351 | 0 | 3.842 |
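The start and end points in the table, combined with the 200 mm/s velocity listed for the tests, give a rough lower bound on how long one traversal can take. The sketch below assumes that the table belongs to the 200 mm/s tests, that the robot moves in a straight line between the two points, and that acceleration and deceleration are ignored. The drawn path may well be longer than the straight line. The function name is hypothetical.

```python
import math
from typing import Sequence


def nominal_time(start: Sequence[float], end: Sequence[float],
                 speed_mm_s: float) -> float:
    """Straight-line distance divided by the programmed TCP speed."""
    if speed_mm_s <= 0:
        raise ValueError("speed_mm_s must be positive")
    return math.dist(start, end) / speed_mm_s


# Sample 1 from the table: start (199, -343, 0), end (-89, 357, 0), in mm.
start = (199.0, -343.0, 0.0)
end = (-89.0, 357.0, 0.0)
print(round(math.dist(start, end), 1), "mm")
print(round(nominal_time(start, end, 200.0), 2), "s")
```

For sample 1 this gives a distance of about 757 mm and a nominal time of about 3.78 s, which can be set against the Average Time column.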

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kaczmarek, W.; Lotys, B.; Borys, S.; Laskowski, D.; Lubkowski, P. Controlling an Industrial Robot Using a Graphic Tablet in Offline and Online Mode. Sensors 2021, 21, 2439. https://doi.org/10.3390/s21072439
