A BCI Based Alerting System for Attention Recovery of UAV Operators

Abstract

1. Introduction

2. Related Work

2.1. Brain Computer Interaction

2.2. Inattention Detection and Alerting System

2.3. EEG-Signal in Inattention State

3. Proposed Attention Recovery System

3.1. System Overview

3.2. Signal Processing Module

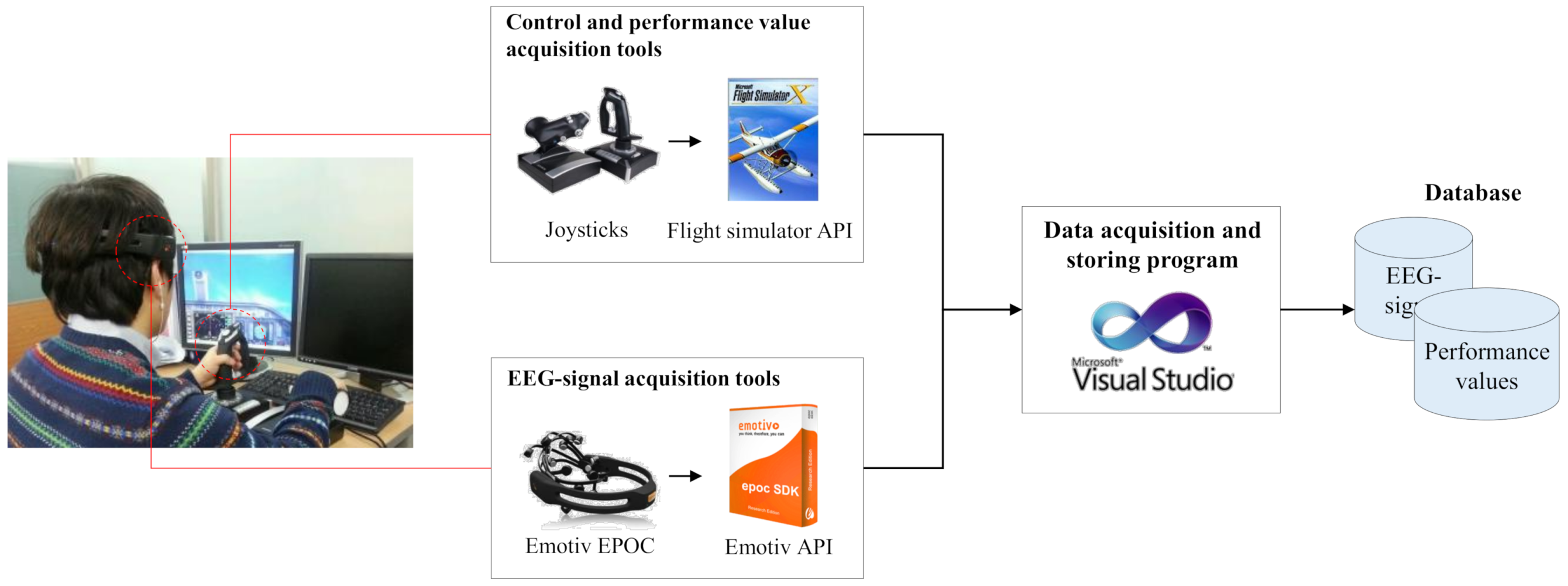

3.2.1. Data Acquisition Step

3.2.2. Data Preprocessing Step

3.2.3. Inattention Labeling Step

3.3. Inattention Detection Module

3.4. Alert Providing Module

3.5. Implementations

4. Experiment

4.1. Experiment Settings

4.1.1. Data Acquisition Procedure

4.1.2. Evaluation Measures

4.1.3. Experimental Details

4.2. Experiment Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Subject | Gender | Age |
|---|---|---|
| S1 | male | 26 |
| S2 | male | 22 |
| S3 | male | 24 |
| S4 | female | 28 |

| Actual \ Detected | Inattention | Attention |
|---|---|---|
| Inattention | TP | FN |
| Attention | FP | TN |
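
The four cells of the confusion matrix map onto accuracy, precision and recall in the usual way. The following is a minimal Python sketch, assuming the standard textbook definitions of these measures with "inattention" as the positive class. The names `ConfusionCounts`, `accuracy`, `precision` and `recall` are hypothetical, and the counts in the example are made up for illustration; they are not data from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Cell counts of a binary confusion matrix (positive class: inattention)."""
    tp: int  # actual inattention, detected as inattention
    fn: int  # actual inattention, detected as attention
    fp: int  # actual attention, detected as inattention
    tn: int  # actual attention, detected as attention


def _ratio(numerator: int, denominator: int) -> float:
    # Return NaN instead of raising when a measure is undefined (empty denominator).
    return numerator / denominator if denominator else float("nan")


def accuracy(c: ConfusionCounts) -> float:
    # Share of all samples that were classified correctly.
    return _ratio(c.tp + c.tn, c.tp + c.fn + c.fp + c.tn)


def precision(c: ConfusionCounts) -> float:
    # Share of detected inattention samples that were truly inattention.
    return _ratio(c.tp, c.tp + c.fp)


def recall(c: ConfusionCounts) -> float:
    # Share of truly inattentive samples that were detected.
    return _ratio(c.tp, c.tp + c.fn)


if __name__ == "__main__":
    # Illustrative counts only (assumption), not taken from the experiment.
    counts = ConfusionCounts(tp=30, fn=10, fp=5, tn=55)
    print(f"accuracy={accuracy(counts):.3f}")
    print(f"precision={precision(counts):.3f}")
    print(f"recall={recall(counts):.3f}")
```

Under these definitions, a classifier with high precision but lower recall raises few false alerts while missing some inattentive periods.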

| ML Classifier | Accuracy | Precision | Recall |
|---|---|---|---|
| HMM | 0.766 | 0.879 | 0.674 |
| SVM | 0.734 | 0.709 | 0.683 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, J.; Park, J.; Shin, D.; Choi, Y. A BCI Based Alerting System for Attention Recovery of UAV Operators. Sensors 2021, 21, 2447. https://doi.org/10.3390/s21072447